Using BiLSTM Networks for Context-Aware Deep Sensitivity Labelling on Conversational Data

Abstract

1. Introduction

- The introduction of four context classes (Semantic, Agent, Temporal, Self) as a taxonomy that represents the relationship between candidate sensitive tokens and their textual surroundings (the sentence or a wider textual sequence). Besides allowing for context differentiation (for instance, depending on the nature of the privacy settings), the taxonomy may serve as a framework for applying sensitivity tiers, for instance by overlaying different types of context-awareness depending on the strictness of the privacy settings.

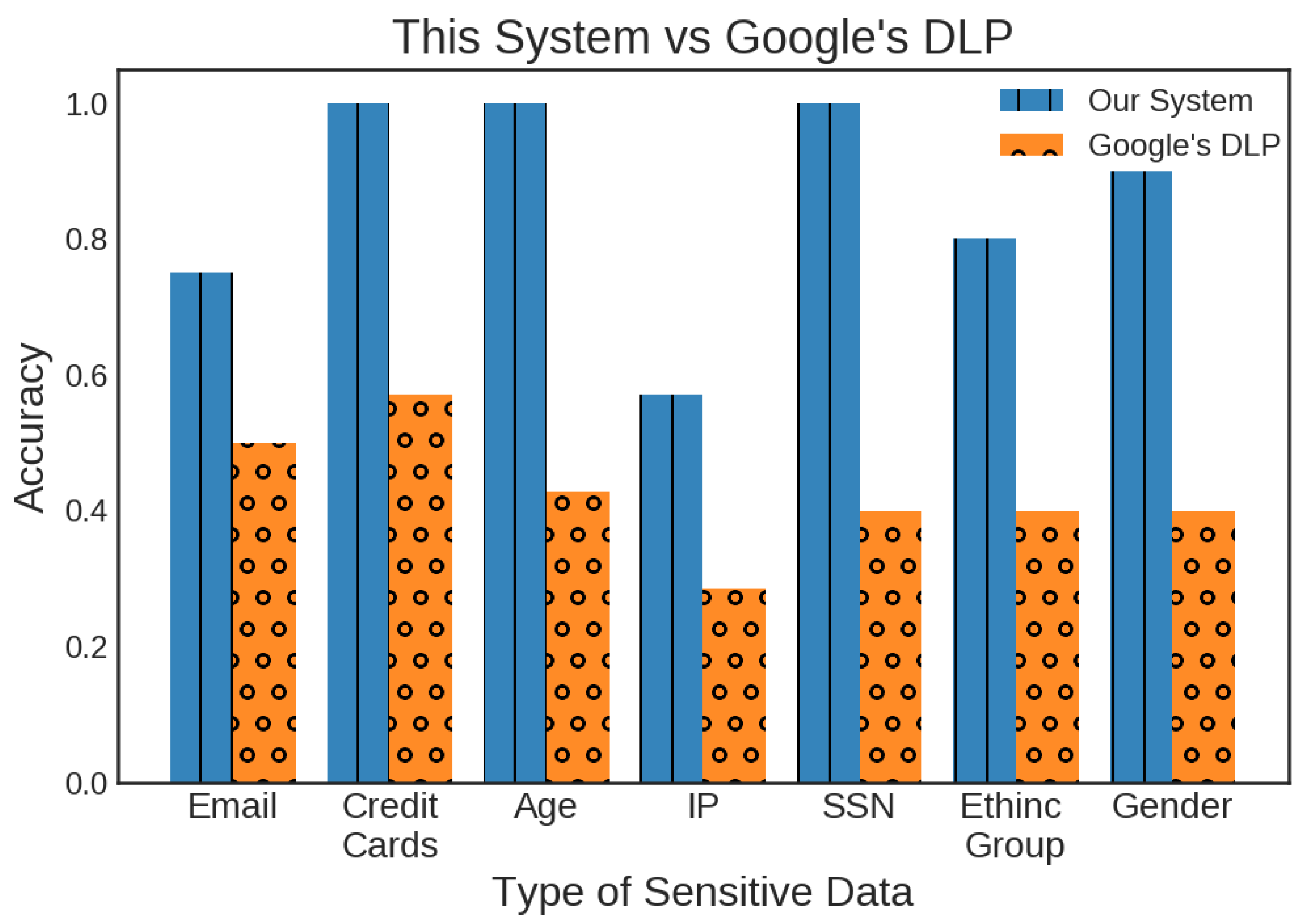

- The use of BiLSTM-CRF multi-class annotation of sensitive tokens in a context-aware manner, an approach that, to the best of our knowledge, has scarce representation in the literature. Its implementation outperforms an industry-strength system in sensitivity labelling along at least one context class. More specifically, our approach differs from conventional NER (Named-Entity Recognition) tagging in two ways: (a) although it is technically still a sequence labelling task, it is fundamentally different in the same way that CWI (Complex Word Identification) tagging differs from NER: our sensitivity labelling is context-aware, whereas NER is not; (b) our work includes a data generation strategy, something that would not be needed in the case of NER.

- A dataset enrichment methodology that addresses the scarcity of public data annotated with sensitivity labels. It uses real-world conversational data as seeds to generate a sufficiently large training set while mitigating sensitivity class imbalances.
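To make the seed-based enrichment idea in the last contribution more concrete, the following is a minimal sketch and not the authors' actual generation pipeline. It assumes that seed sentences can be reduced to templates with a single slot, that each slot is filled from a small pool of values, and that balance is enforced by generating the same number of sentences per class. The templates, the filler values and all function names are hypothetical; only the label names are taken from the paper's sensitivity classes.

```python
import random
from collections import Counter

# Hypothetical seed templates, keyed by sensitivity label (assumption:
# each seed sentence carries exactly one sensitive slot).
SEEDS = {
    "ONLINE_CNCT_INFO": ["you can reach me at {value}", "my email is {value}"],
    "HEALTH_INFO": ["I was diagnosed with {value} last year"],
    "LOC_INFO": ["I am currently at {value}"],
}

# Hypothetical filler values for each slot.
FILLERS = {
    "ONLINE_CNCT_INFO": ["alice@example.com", "bob@example.org"],
    "HEALTH_INFO": ["asthma", "type 2 diabetes"],
    "LOC_INFO": ["51.5N 0.1W", "40.7N 74.0W"],
}


def generate(label, rng):
    """Fill one seed template and return parallel token and tag lists."""
    template = rng.choice(SEEDS[label])
    value = rng.choice(FILLERS[label])
    tokens, tags = [], []
    for word in template.split():
        if word == "{value}":
            # Every token of the inserted value receives the class label.
            for part in value.split():
                tokens.append(part)
                tags.append(label)
        else:
            tokens.append(word)
            tags.append("O")  # non-sensitive token
    return tokens, tags


def build_balanced(per_class, seed=0):
    """Generate the same number of sentences for every class."""
    rng = random.Random(seed)
    data = [generate(label, rng) for label in SEEDS for _ in range(per_class)]
    rng.shuffle(data)
    return data


def sentences_per_class(data):
    """Count sentences by the label of their first sensitive token."""
    return Counter(next(t for t in tags if t != "O") for _, tags in data)
```

Calling `build_balanced(1000)` would yield 3000 labelled sentences with equal sentence counts per class. Note that this balances sentences rather than tokens: classes whose values span several tokens still contribute more labelled tokens.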

2. Related Work

3. Background and Approach

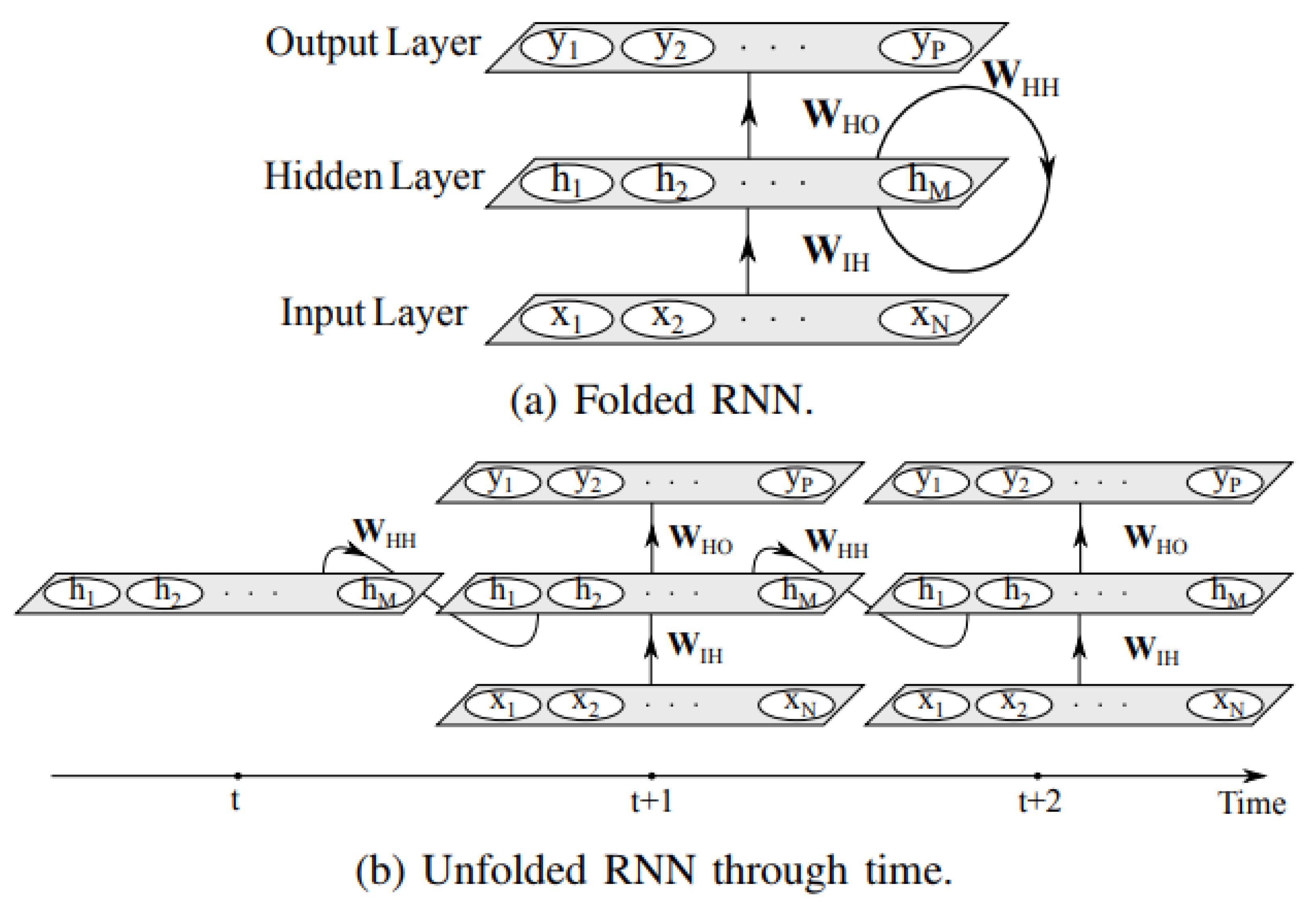

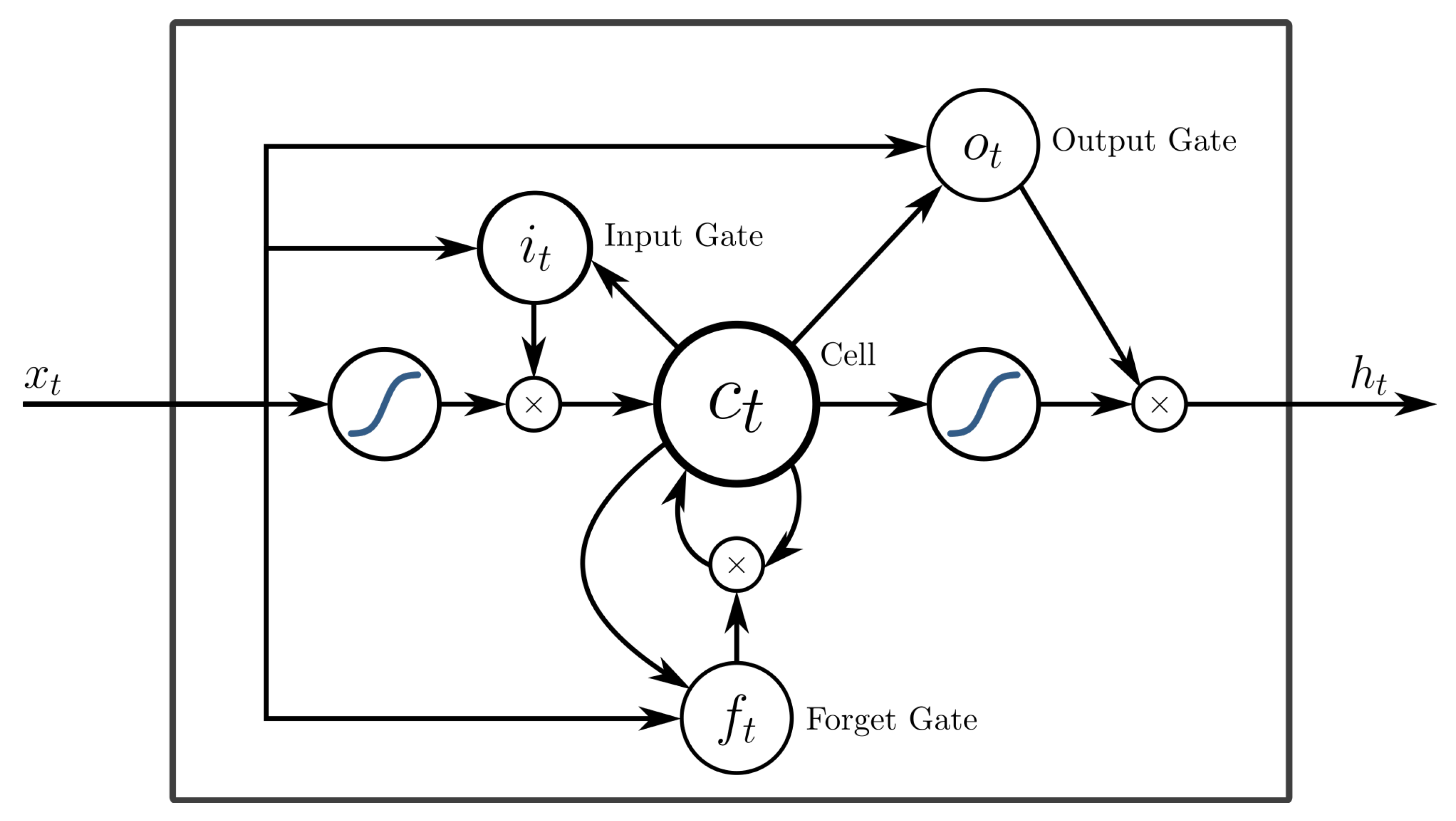

3.1. LSTM Networks

3.2. Embeddings

3.3. Conditional Random Fields

3.4. Auxiliary Features (POS, IC, WS)

4. Dataset Creation

4.1. Sensitivity Classes

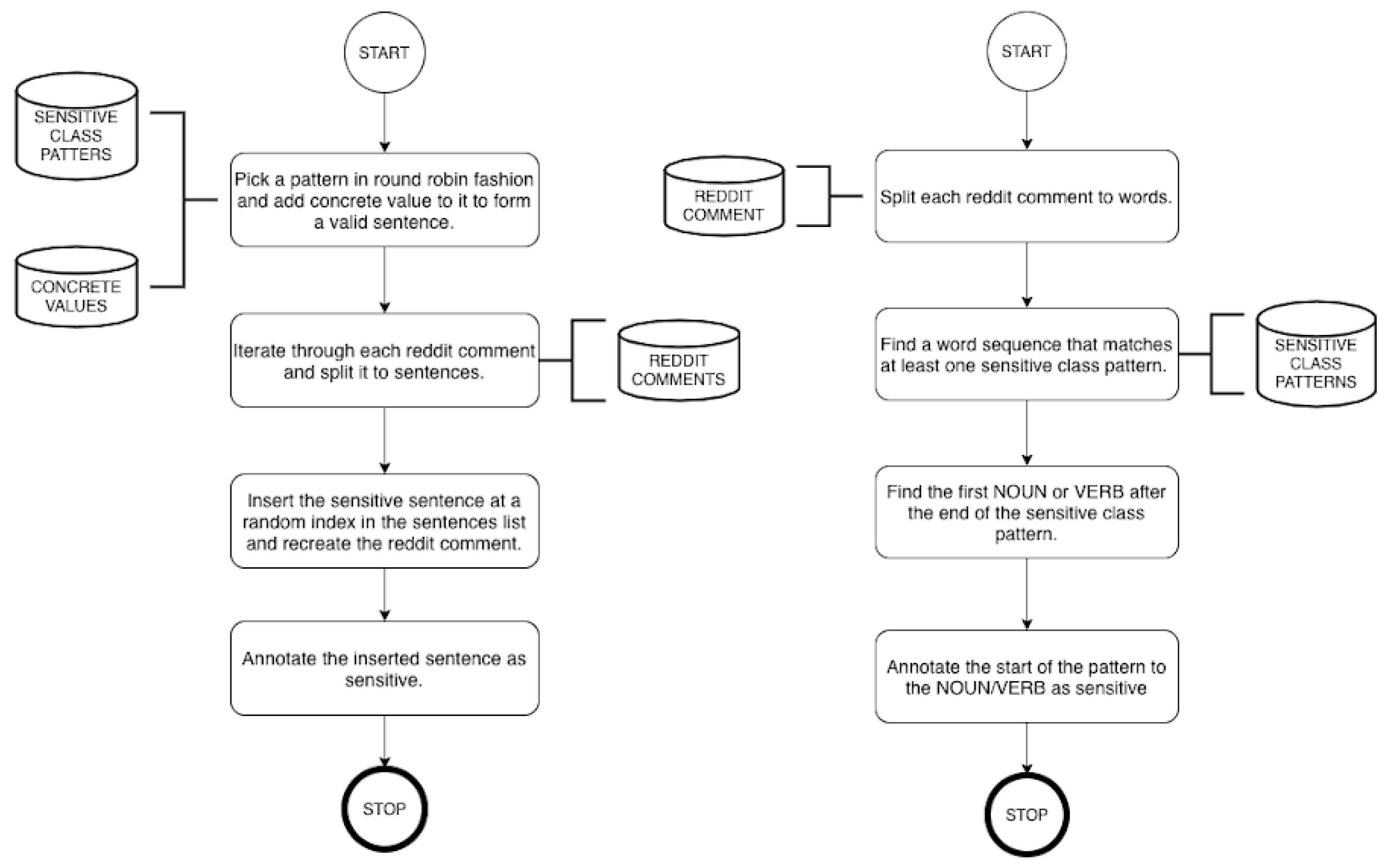

4.2. Data Generation Process

5. Experimental Design

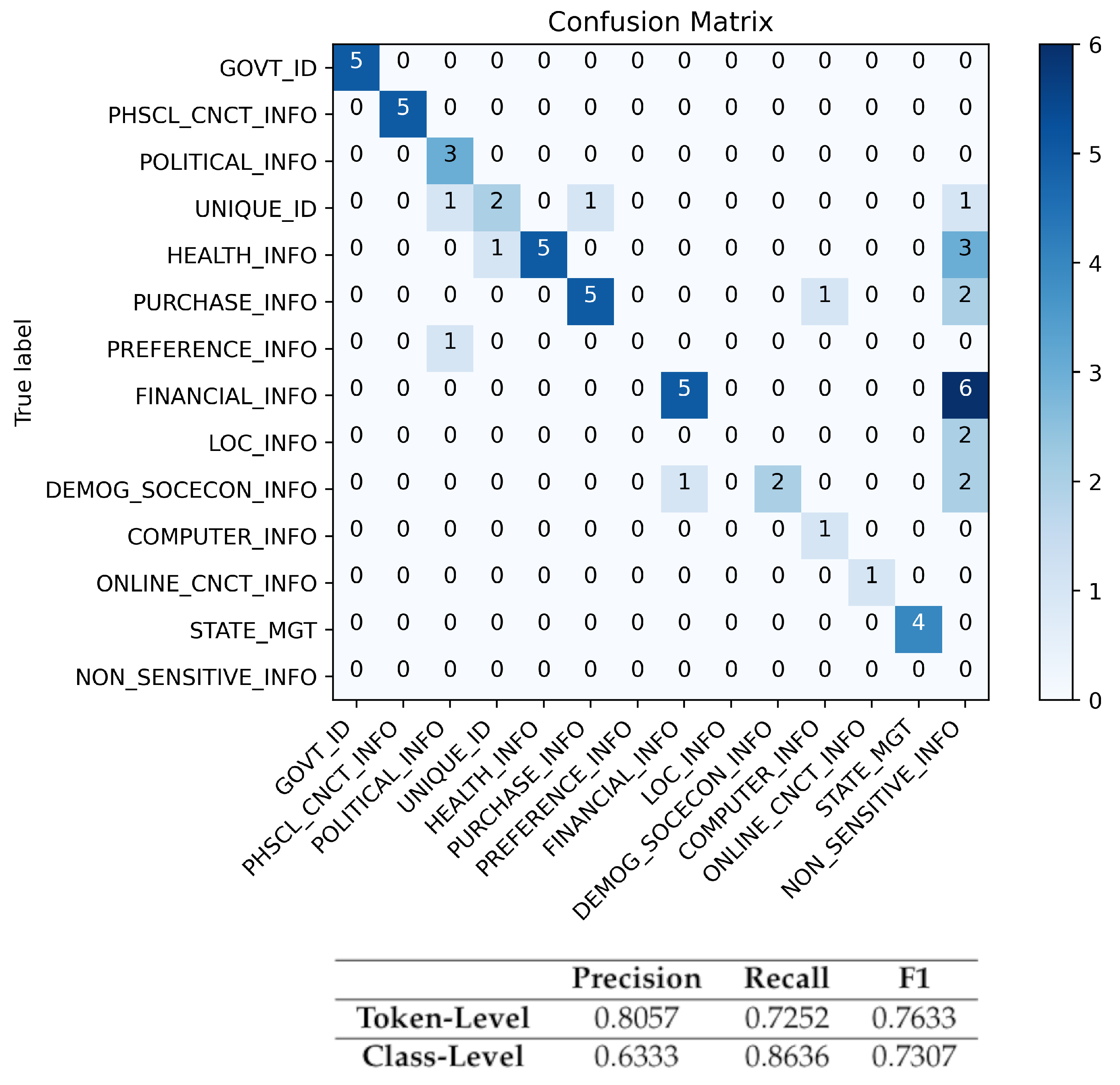

6. Results

7. Evaluation

8. Discussion

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| BERT | Bidirectional Encoder Representations from Transformers |
| BiLSTM | Bidirectional Long Short-Term Memory |
| CNN | Convolutional Neural Network |
| CRF | Conditional Random Field |
| CWI | Complex Word Identification |
| DLP | Data Loss Prevention |
| IC | Information Context |
| LSTM | Long Short-Term Memory |
| NER | Named Entity Recognition |
| OOV | Out-of-Vocabulary |
| POS | Part of Speech |
| RNN | Recurrent Neural Network |
| SVM | Support Vector Machine |
| WS | WordShape |

| Sensitivity Class | Description | Label | Count |
|---|---|---|---|
| Physical Contact Information | Information that allows an individual to be contacted or located in the physical world, such as telephone number or address. | PHSCL_CNCT_INFO | 14,108 |
| Online Contact Information | Information that allows an individual to be contacted or located on the Internet, such as email. Often, this information is independent of the specific computer used to access the network. (See the category “Computer Information”) | ONLINE_CNCT_INFO | 30,248 |
| Unique Identifiers | Non-financial identifiers, excluding government-issued identifiers, issued for purposes of consistently identifying or recognising the individual. These include identifiers issued by a Web site or service. | UNIQUE_ID | 10,594 |
| Purchase Information | Information actively generated by the purchase of a product or service, including information about the method of payment. | PURCHASE_INFO | 13,712 |
| Financial Information | Information about an individual’s finances including account status and activity information such as account balance, payment or overdraft history, and information about an individual’s purchase or use of financial instruments including credit or debit card information. | FINANCIAL_INFO | 19,258 |
| Computer Information | Information about the computer system that the individual is using to access the network, such as the IP number, domain name, browser type or operating system. | COMPUTER_INFO | 8,143 |
| Demographic and Socioeconomic Data | Data about an individual’s characteristics, such as gender, age, income, postal code, or geographic region. | DEMOG_SOCECON_INFO | 26,013 |
| State Management Mechanisms | Mechanisms for maintaining a stateful session with a user or automatically recognising users who have visited a particular site or accessed particular content previously, such as HTTP cookies. | STATE_MGT | 5,666 |
| Political Information | Membership in or affiliation with groups such as religious organisations, trade unions, professional associations, political parties, etc. | POLITICAL_INFO | 21,341 |
| Health Information | Information about an individual’s physical or mental health, sexual orientation, use or inquiry into health care services or products, and purchase of health care services or products. | HEALTH_INFO | 23,516 |
| Preference Data | Data about an individual’s likes and dislikes, such as favorite colour or musical tastes. | PREFERENCE_INFO | 43,048 |
| Location Data | Information that can be used to identify an individual’s current physical location and track them as their location changes, such as GPS position data. | LOC_INFO | 15,795 |
| Government-issued Identifiers | Identifiers issued by a government for purposes of consistently identifying the individual. | GOVT_ID | 14,108 |
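The Count column shows that the classes are unevenly represented. As a rough illustration, the sketch below computes each class's share of the total, the ratio between the largest and smallest class, and a set of inverse-frequency class weights. The counts are copied from the table; the weighting scheme is one common option for handling imbalance and is an assumption here, not something the paper is known to use. All function names are hypothetical.

```python
# Counts copied from the Count column of the sensitivity-class table.
LABEL_COUNTS = {
    "PHSCL_CNCT_INFO": 14108,
    "ONLINE_CNCT_INFO": 30248,
    "UNIQUE_ID": 10594,
    "PURCHASE_INFO": 13712,
    "FINANCIAL_INFO": 19258,
    "COMPUTER_INFO": 8143,
    "DEMOG_SOCECON_INFO": 26013,
    "STATE_MGT": 5666,
    "POLITICAL_INFO": 21341,
    "HEALTH_INFO": 23516,
    "PREFERENCE_INFO": 43048,
    "LOC_INFO": 15795,
    "GOVT_ID": 14108,
}


def class_shares(counts):
    """Fraction of all counted items that belongs to each class."""
    total = sum(counts.values())
    return {label: n / total for label, n in counts.items()}


def imbalance_ratio(counts):
    """Size of the largest class divided by the size of the smallest."""
    return max(counts.values()) / min(counts.values())


def inverse_frequency_weights(counts):
    """Weights proportional to 1/count (an assumed scheme), scaled so
    that the count-weighted mean weight equals 1."""
    total = sum(counts.values())
    k = len(counts)
    return {label: total / (k * n) for label, n in counts.items()}


if __name__ == "__main__":
    shares = class_shares(LABEL_COUNTS)
    for label in sorted(shares, key=shares.get, reverse=True):
        print(f"{label:20s} {shares[label]:.3f}")
    print(f"imbalance ratio: {imbalance_ratio(LABEL_COUNTS):.2f}")
```

With these counts the largest class (PREFERENCE_INFO) is between seven and eight times the size of the smallest (STATE_MGT), which is the kind of gap the class-balancing step of the data generation process is meant to reduce.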

| MODEL | EMBEDDINGS | AUX. FEATURES | RESULTS | ||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| BiLSTM | CRF | CNN | RWE | GloVe | BERT | POS | IC | WS | Precision | Recall | F1 | ||

| EXP. A | 1 | X | 0.8068 | 0.5779 | 0.6581 | ||||||||

| 2 | X | X | 0.8986 | 0.9315 | 0.9147 | ||||||||

| 3 | X | X | 0.9347 | 0.9599 | 0.9471 | ||||||||

| 4 | X | X | 0.9452 | 0.9689 | 0.9569 | ||||||||

| 5 | X | X | X | 0.9113 | 0.9337 | 0.9224 | |||||||

| 6 | X | X | X | 0.9451 | 0.9558 | 0.9504 | |||||||

| 7 | X | X | X | 0.9568 | 0.9693 | 0.9630 | |||||||

| EXP. B | 8 | X | X | X | 0.9568 | 0.9576 | 0.9572 | ||||||

| 9 | X | X | X | X | 0.9634 | 0.9558 | 0.9596 | ||||||

| EXP. C | 10 | X | X | X | X | 0.9548 | 0.9685 | 0.9616 | |||||

| 11 | X | X | X | X | 0.9611 | 0.9618 | 0.9614 | ||||||

| 12 | X | X | X | X | 0.9640 | 0.9706 | 0.9673 | ||||||

| 13 | X | X | X | X | X | 0.9639 | 0.9625 | 0.9631 | |||||

| 14 | X | X | X | X | X | 0.9635 | 0.9629 | 0.9632 | |||||

| 15 | X | X | X | X | X | 0.9628 | 0.9615 | 0.9622 | |||||

| 16 | X | X | X | X | X | X | 0.9611 | 0.9704 | 0.9657 | ||||

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Pogiatzis, A.; Samakovitis, G. Using BiLSTM Networks for Context-Aware Deep Sensitivity Labelling on Conversational Data. Appl. Sci. 2020, 10, 8924. https://doi.org/10.3390/app10248924
