Abstract

Salient-object detection is a fundamental and challenging problem in computer vision. This paper focuses on the detection of salient objects, especially in low-contrast images. To this end, a hybrid deep-learning architecture is proposed in which features are extracted at both the local and global levels. These features are then integrated to extract the exact boundary of the object of interest in an image. Experiments were performed on five standard datasets, and results were compared with state-of-the-art approaches. Both qualitative and quantitative analyses showed the robustness of the proposed architecture.

1. Introduction

Human beings make vision-based decisions quickly and easily by extracting the important information from a real scene. All important objects in a scene are extracted, together with relevant characteristic information such as an object’s color, size, and relative distance. That is why salient-object detection (SOD) is extremely important and fundamental in many vision-related applications such as computer vision, graphics, and robotics [1]. Owing to its importance, much work has been conducted in research areas such as image captioning, target detection, scene classification, semantic segmentation using image-level annotations, and image-quality evaluation [2,3,4,5,6,7].

Recently, deep learning has had remarkable success in salient-object detection because it provides a rich and discriminative representation of images. Convolutional neural networks (CNNs) are a very effective tool in machine learning; they work efficiently in salient-object detection because of their ability to extract both high- and low-level features. Early deep saliency models utilised a multilayer perceptron (MLP) for detection. In these methods, the input image is split into small regions, and a CNN is then used to extract features that are passed to the MLP to compute the saliency of each region. However, these models fail to extract high-level semantic information, so this information is unavailable to the fully connected layers. This results in a loss of the information needed to obtain the complete characteristics of a salient object [1].

As an alternative, fully convolutional networks (FCNs) are often used [8]. Although FCNs produce good results, they underperform on low-contrast images, especially near object boundaries, because (1) saliency is heavily affected by the intense noise of low-contrast images; (2) the frequent pooling operations in deep-learning methods unavoidably lose object structure and semantic information, which causes poor detection of objects; and (3) since saliency is determined by the global contrast of the image rather than by local features, it becomes hard for the model to capture detailed boundary knowledge of the object.

To overcome these problems, we propose a boundary-aware fully convolutional network for the detection of salient objects that captures both the local and global context, with a built-in refinement module to achieve segmentation with fine boundaries. Integrating local and global features helps our model to accurately locate the salient region. Features produced from multiple convolutional layers are merged into an integrated feature map to obtain the global context. To capture the local context and enhance local contrast features, multiresolution feature maps resulting from convolutional layers, contrast features, and upsampled feature maps produced from deconvolution layers are merged to form the local feature map. The predicted saliency map is produced by the fusion of global and local features. The predicted map is then passed to the refinement module, which is built as a residual block that refines the input saliency map (see Figure 1).

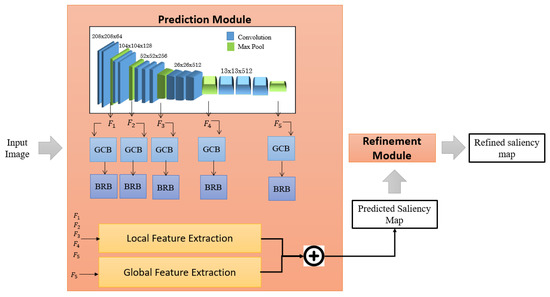

Figure 1.

Overview of proposed model (SODL), which consists of prediction module and refinement module. Image is passed to prediction module that learns deep features and produces a predicted saliency map, which is refined by the refinement module. This refined saliency map is taken as the final map.

The contributions of this work are summarised as follows:

- Generation of local and global deep feature maps to extract detailed structure of objects.

- Enhanced detection of objects in low-contrast images by integrating contrast features and global convolutional modules that introduce dense connections between features and classifiers.

- Boundary-refinement module to preserve boundary information present in initial layers.

- A residual refinement module is embedded that processes the predicted saliency map to refine boundaries by learning residuals.

2. Related Work

In general, methods for the detection of salient objects can broadly be classified into two groups: traditional and deep-learning-based approaches.

2.1. Traditional Approaches

These approaches use low-level features (e.g., color, contrast) to differentiate salient objects [9,10]. These features are useful in simple scenarios, but they have limited capability to capture objects in complex scenarios. A comprehensive survey is provided by Borji et al. in [1].

2.2. Deep-Learning-Based Techniques

In deep learning, a model learns directly from images to perform classification. Wang et al. [11] developed a technique that makes use of prior saliency-detection techniques. Its recurrent architecture allows the method to refine the saliency map by correcting previous errors, which results in fine predictions. They introduced a pretraining strategy using segmentation data, which helps the network to train successfully and to grasp objects for saliency detection. They trained an FCN to estimate the nonlinear mapping from raw pixels to saliency values while neglecting saliency priors; these saliency priors were then combined with deeply learned features, which are passed on for iterative refinement. In this module, the whole network is propagated forward, which increases computational cost and memory usage.

Singh and Kumar [12] used several saliency-detection methods to improve the quality of the saliency map. First, they generated initial saliency maps by selecting saliency models. Then, they integrated these maps to form a binary map, after which the final saliency map was generated using integration logic. The combined binary map labels pixels more finely than a single binary map does. Using these labels, the final saliency map is produced by logic that assigns maximal and minimal saliency values to pixels. The efficiency of this method relies on the saliency-detection models that are selected, so the method of selecting existing techniques plays a key role: the approach performs well when the selected methods can detect the salient objects, and fails when they cannot.

Feng et al. [13] introduced a model to enhance segmentation results near boundaries. An attentive feedback module was proposed to produce fine boundaries. Features from encoder blocks are passed to decoders, where the feedback module is applied for segmentation. This module learns the structure and performs segmentation. Further, they proposed a boundary-enhancement loss to assist the feedback module. VGG16 was used as the base model by modifying it into an encoder network, followed by a perception module that uses a fully connected layer and leads to the decoder module. Every decoder layer has a 3 × 3 convolutional layer. Multiscale features are collected via the encoder blocks; then, a global saliency prediction is calculated, which is passed to the decoder for finer saliency predictions. For boundary correction, attentive feedback is applied.

Wang et al. [14] proposed a deep model that uses boundary knowledge to accurately locate salient objects. In the boundary-guided network, two subnetworks are defined, one for the mask and the other for boundaries, and features are shared between them. They also proposed a focal loss to emphasise hard pixels near boundaries. Both subnetworks follow an encoder–decoder design: features are extracted in the encoder, and the decoder gives the output. The features extracted by the encoder have different resolutions, and bidirectional flow of information is enabled in the encoders. Feature maps from the two subnetworks are combined and passed to a convolutional layer. The decoders of the mask and boundary subnetworks are connected; the mask decoder refines the mask prediction and uses the focal loss, which focuses on boundary pixels.

Girshick et al. [15] developed a robust and versatile detection technique that improves the mean average precision by over 50% compared to prior techniques. This approach incorporates two concepts: (i) applying high-capacity CNNs to bottom-up region proposals to localise objects; and (ii) supervised pretraining on a task for which abundant data are available, followed by fine-tuning to increase performance. The whole technique comprises three modules. The first module generates the region proposals, for which the authors used selective search. The second module consists of convolutional layers that collect features from the regions. The final module is a set of linear SVMs. To extract features, regions are first converted into a form that is compatible with the CNN; after features are extracted, optimisation is applied.

Eitel et al. [16] proposed an RGB-D strategy for object detection. The network consists of two separate CNNs, one for each modality, paired with a late-fusion network. They set up a multistage training approach for effective training, and a data-augmentation framework for learning with images corrupted by noise patterns. The two convolutional models process the color (RGB) and depth data, respectively, and their outputs are integrated by the fusion network. The CNNs are pretrained on ImageNet; then, the multimodal CNN is trained. Parameters are tuned for classification, followed by the joint training of the parameters and the fusion network. Images are resized before being passed to the CNNs. The authors proposed an effective method for encoding depth images that normalises depth values to the range 0 to 255. Then, a color map is applied to the images, which transforms them into three-channel images. Table 1 highlights some of the other related state-of-the-art approaches available in the literature.

Table 1.

Summary of related-work review.

Both traditional and deep-learning-based models perform well for images having good contrast; however, their performance substantially degrades when contrast is low, and objects of interest have blurred boundaries. This work detects salient objects in low-contrast images using hybrid deep-learning models.

3. Proposed Approach

3.1. Architecture Overview

The proposed method consists of two main modules: (a) prediction of saliency maps and (b) refinement of the predicted saliency map. The prediction module consists of a fully convolutional network that captures both local and global features. It also contains global-convolution and boundary-refinement blocks that focus on the refinement of features and the better extraction of boundary features. To further emphasise low-contrast images and enhance contours, a contrast module is also embedded in the model. Details are provided in subsequent sections.

3.2. Prediction Module

In this work, the prediction module was based on fully convolutional layers. To this end, the entire model network was based on a convolution operation and a deconvolution layer with varying output dimensions. This enabled our model to capture global and local features from different resolutions, as shown in Figure 1.

An input image is passed through five convolutional blocks to produce five feature maps. Every convolutional block has a kernel size of 3 × 3 and is followed by a max-pooling operation with stride 2 in order to reduce the spatial resolution from 208 × 208 to 13 × 13. Pooling decreases the number of parameters and computations. Max pooling applies a max filter that keeps the maximum of each selected region to produce a new, smaller map. After that, five global convolutional blocks are added to the network; these allow for dense connections between the features and convolutional layers, which enables features to acquire diverse neural information and be immune to local disruptions. We also added boundary-refinement blocks (BRBs) for the feature maps to keep boundary information. These BRBs are based on a residual structure in which the input to the block is directly added to the output of the deeper layer. This helps to maintain the information present in the initial layers, which is needed by the upsampling layers, and helps to avoid the information loss caused by a multilayer network architecture.
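The resolution arithmetic above can be checked with a minimal NumPy sketch of 2 × 2 max pooling with stride 2. The 2 × 2 window and the count of four pooling steps are assumptions made for illustration: halving 208 four times gives 13, so the sketch assumes the fifth feature map is taken before any further pooling.

```python
import numpy as np

def max_pool_2x2(x):
    """2 x 2 max pooling with stride 2 on an (H, W) map; H and W must be even."""
    h, w = x.shape
    # Group pixels into non-overlapping 2 x 2 windows and keep each window's maximum.
    return x.reshape(h // 2, 2, w // 2, 2).max(axis=(1, 3))

feature = np.random.default_rng(0).random((208, 208))
sizes = [feature.shape]
for _ in range(4):  # assumption: four pooling steps separate the first and fifth maps
    feature = max_pool_2x2(feature)
    sizes.append(feature.shape)
print(sizes)  # [(208, 208), (104, 104), (52, 52), (26, 26), (13, 13)]
```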

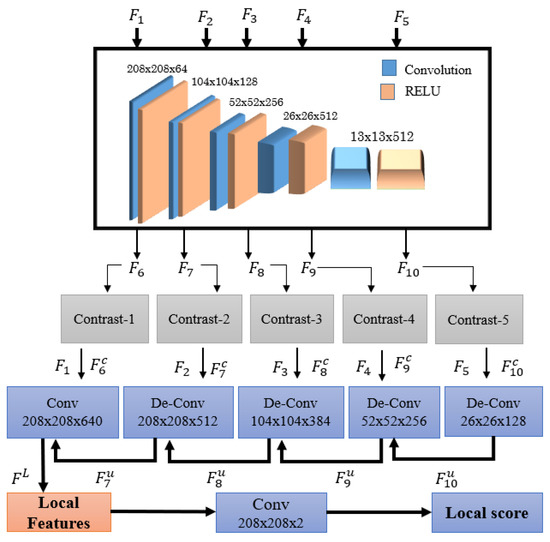

To generate the local feature maps, five more convolutional blocks were added to the network, which process the outputs of the first five convolutional blocks, as shown in Figure 2. These convolutional layers also have a 3 × 3 kernel, with 128 channels. The resulting multiscale local feature maps are denoted $F_1$, $F_2$, $F_3$, $F_4$, and $F_5$. To further capture a contrast feature ($F_i^c$) for each feature map, which is essential especially for low-contrast images, we calculated the difference between feature map $F_i$ and its local average $F_i^a$. To calculate $F_i^a$, we employed average pooling with a kernel size of 3 × 3. The contrast feature was thus calculated as

$$F_i^c = F_i - F_i^a, \qquad F_i^a = \mathrm{AvgPool}(F_i).$$

Since pooling operations were performed after the convolutional layers, the spatial size of the image was decreased (to reduce computational cost). However, in the deconvolutional layers, we employed upsampling in order to recover the original image size. At each scale, the feature map was generated by the concatenation of contrast feature $F_i^c$, local feature $F_i$, and the upsampled feature map $U_{i+1}$ from the next coarser scale, using the equation below:

$$U_i = \mathrm{Up}\big(\mathrm{Cat}(F_i, F_i^c, U_{i+1})\big),$$

where $\mathrm{Cat}$ is the concatenation function and $\mathrm{Up}$ is the upsampling function. To calculate the final local map $F_L$, local contrast feature $F_1^c$, local feature $F_1$, and upsampled feature map $U_2$ are concatenated and passed to a convolution layer with kernel size set to 1 × 1.
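As an illustration of the contrast feature and the fusion step described above, the following NumPy sketch subtracts a 3 × 3 local average from a feature map and then concatenates the pieces before a 1 × 1 convolution. The function names, the edge-replicated padding, and the requirement that the upsampled map already has the same spatial size are assumptions, not details taken from the model.

```python
import numpy as np

def avg_pool_3x3(x):
    """3 x 3 average pooling with stride 1 on an (H, W, C) map.
    Edge-replicated padding is an assumption made for this sketch."""
    h, w, _ = x.shape
    padded = np.pad(x, ((1, 1), (1, 1), (0, 0)), mode="edge")
    # Sum the nine shifted copies of the map, then divide by the window size.
    total = sum(padded[i:i + h, j:j + w] for i in range(3) for j in range(3))
    return total / 9.0

def contrast_feature(x):
    """Difference between a feature map and its local average."""
    return x - avg_pool_3x3(x)

def fuse_local(local, upsampled, weights):
    """Concatenate contrast, local, and upsampled maps along the channel axis,
    then apply a 1 x 1 convolution (a per-pixel matrix product)."""
    stacked = np.concatenate([contrast_feature(local), local, upsampled], axis=-1)
    return stacked @ weights

flat = np.full((5, 5, 1), 0.4)          # uniform region: no local contrast
edge = np.zeros((5, 5, 1))
edge[:, 3:, 0] = 1.0                    # vertical step edge
print(np.abs(contrast_feature(flat)).max())
print(np.round(contrast_feature(edge)[2, :, 0], 2))
```

In this toy case the contrast response vanishes in the uniform region and is nonzero only in the two columns adjacent to the step edge, which is the behaviour that makes the feature useful when global contrast is low.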

Figure 2.

Extraction of local features. Local feature map produced by integration of contrast features, feature maps produced from convolutional layers, and upsampled feature maps.

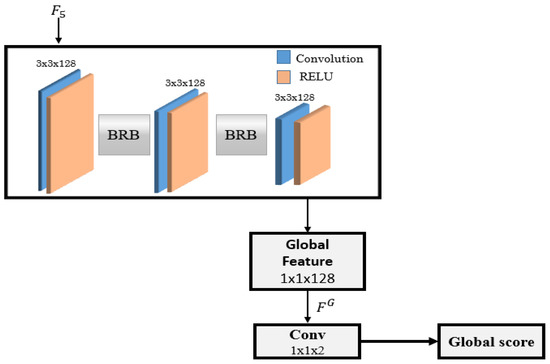

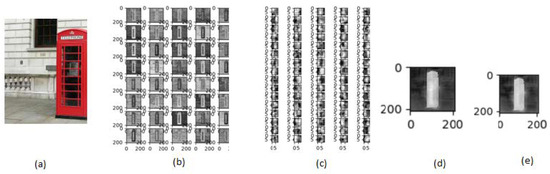

For the computation of the global feature map, global features were extracted before allocating the saliency information of small regions. For this purpose, three convolutional layers with a kernel size of 3 × 3 and a dilation factor of 2 were applied. Dilated convolutions reduce the loss of resolution. Figure 3 shows the structure of the global convolutional block. Each convolutional layer was accompanied by a ReLU activation function and a boundary-refinement block. Figure 4b,c shows the local and global feature maps of an input image. To produce the predicted saliency map, global and local feature maps were combined, and the resulting saliency map was passed as input to the refinement module. Figure 4d shows the predicted saliency map produced by the combination of local and global feature maps.
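To make the effect of dilation concrete, the short sketch below computes the span of a dilated kernel and the receptive field of a stack of stride-1 convolutions. The helper names are hypothetical, and stride 1 is an assumption.

```python
def effective_kernel(kernel, dilation):
    """Span covered along one axis by a kernel with the given dilation."""
    return kernel + (kernel - 1) * (dilation - 1)

def stacked_receptive_field(layers):
    """Receptive field of stacked stride-1 convolutions, given (kernel, dilation) pairs."""
    field = 1
    for kernel, dilation in layers:
        field += (kernel - 1) * dilation
    return field

print(effective_kernel(3, 2))                  # 5
print(stacked_receptive_field([(3, 2)] * 3))   # 13
print(stacked_receptive_field([(3, 1)] * 3))   # 7
```

Under these assumptions, three 3 × 3 layers with dilation 2 see a 13 × 13 neighbourhood, compared with 7 × 7 without dilation, with the same number of weights and no extra pooling.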

Figure 3.

Extraction of global features. Feature map from fifth convolutional layer was used, passed through dilated convolutions. For maintaining spatial information, boundary-refinement blocks (BRBs) were also used.

Figure 4.

Output of various components of proposed approach: (a) input image, (b) local feature map, (c) global feature map, (d) predicted saliency map, and (e) refined saliency map.

3.3. Global Convolutional Block

Enhancement in classification was achieved by using a global convolutional block (GCB). This helps to efficiently exploit the visual information accessible in low-contrast images. The GCB seeks to extend the receptive field of the feature maps. It also introduces dense connections between features and classifiers, which helps the model to identify the salient region with very little additional computational cost.

As mentioned before, the GCB enhances the classification efficiency of the proposed model by considering the dense connections between classifiers and feature maps, which enables the network to handle different types of transitions. The GCB’s large kernel is also useful in encoding more spatial information from feature maps, which increases precision in salient-object detection.

The GCB has two branches, each consisting of two convolutional layers. The left branch has a 7 × 1 convolutional layer followed by a 1 × 7 convolutional layer. The right branch has a 1 × 7 convolution followed by a 7 × 1 convolution. These two branches are combined to allow for dense connections, which enlarges the valid receptive field. The GCB’s computational cost is fairly low, which makes it more practicable.
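The two-branch structure can be sketched for a single channel in NumPy as follows. Merging the branches by summation is an assumption (the text only says they are combined), and `conv2d_valid` is a helper written for this example. The sketch checks that each branch behaves like one dense 7 × 7 kernel while using fewer weights.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Plain 2-D cross-correlation without padding."""
    kh, kw = kernel.shape
    out_h, out_w = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(kh):
        for j in range(kw):
            out += kernel[i, j] * image[i:i + out_h, j:j + out_w]
    return out

rng = np.random.default_rng(0)
image = rng.random((16, 16))
col_a, row_a = rng.random((7, 1)), rng.random((1, 7))  # left branch: 7 x 1 then 1 x 7
row_b, col_b = rng.random((1, 7)), rng.random((7, 1))  # right branch: 1 x 7 then 7 x 1

left = conv2d_valid(conv2d_valid(image, col_a), row_a)
right = conv2d_valid(conv2d_valid(image, row_b), col_b)
gcb_out = left + right  # assumption: the two branches are merged by summation

# Each branch equals a single dense 7 x 7 kernel formed by an outer product.
dense = conv2d_valid(image, col_a @ row_a) + conv2d_valid(image, col_b @ row_b)
print(np.allclose(gcb_out, dense))  # True
print(2 * (7 + 7), 7 * 7)           # 28 49
```

Per input-output channel pair, the two branches use 28 weights where a dense 7 × 7 kernel would use 49, which is consistent with the low computational cost noted above.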

3.4. Boundary-Refinement Block

The second block of the proposed network is the boundary-refinement block (BRB). It is a residual structure inserted after the first five convolutional layers to maintain the boundary information of objects. This block is added to refine the feature maps and to enhance the accuracy of an object’s spatial location. The BRB was included to improve localisation near boundaries, which can significantly improve object detection in low-contrast images.
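A residual block of this kind can be sketched for a single channel as below. The convolution, ReLU, convolution layout of the residual branch and the zero padding are assumptions, since the internal layers of the BRB are not listed here.

```python
import numpy as np

def conv3x3_same(x, kernel):
    """3 x 3 cross-correlation with zero padding, keeping the spatial size."""
    h, w = x.shape
    padded = np.pad(x, 1)
    return sum(kernel[i, j] * padded[i:i + h, j:j + w]
               for i in range(3) for j in range(3))

def boundary_refinement_block(x, k1, k2):
    """Residual block: the input is added to the output of the convolutional branch."""
    # Assumed branch layout: convolution -> ReLU -> convolution.
    branch = conv3x3_same(np.maximum(conv3x3_same(x, k1), 0.0), k2)
    return x + branch

x = np.arange(16, dtype=float).reshape(4, 4)
zeros = np.zeros((3, 3))
print(np.array_equal(boundary_refinement_block(x, zeros, zeros), x))  # True
```

With all-zero branch weights the block reduces to the identity, which shows how the shortcut carries the input, including its boundary detail, through to later layers unchanged.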

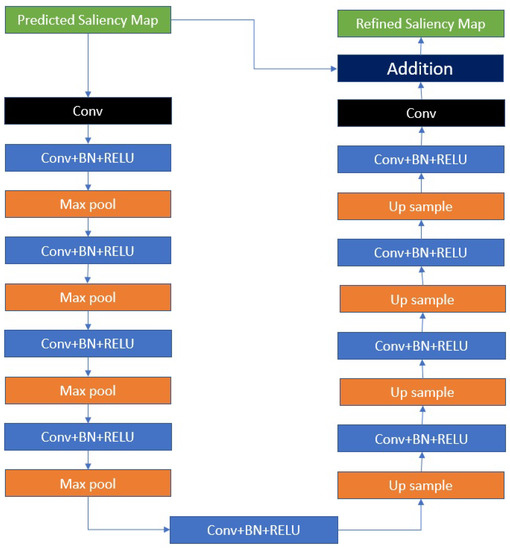

3.5. Refinement Module

The refinement module refines the overall saliency map. It differs from the BRB in that the latter refines the feature maps and preserves the rich spatial information present in the initial layers, while the refinement module refines the predicted saliency map to enhance model accuracy. The predicted saliency map is the input to the refinement module. Typically, a refinement module is built as a residual block that refines the input saliency map by learning the residuals between the saliency map and its ground truth.

The refinement module is embedded to correct both regional and boundary defects in coarse saliency maps, that is, maps with blurred boundaries and uneven prediction probabilities within regions. The refinement module consists of an input layer followed by an encoder, a decoder, a link stage between the encoder and decoder networks, and an output layer. Both the encoder and decoder networks comprise four stages. Every stage consists of one convolutional layer with 64 filters of size 3 × 3, followed by batch normalisation and a ReLU function. The link stage also contains a convolutional layer with the same number and size of filters, after which normalisation and ReLU are performed.

To downsample in the encoder, max pooling is used; for upsampling in the decoder, bilinear interpolation is used. The resulting map is the final saliency map, which is produced by the refinement of the predicted saliency map. The soft-max function computes the probability that a pixel $p$ in a feature map belongs to a salient object:

$$\hat{y}(p) = P\big(y(p) = c\big) = \frac{\exp\big(W_L^{c} F_L(p) + b_L^{c} + W_G^{c} F_G + b_G^{c}\big)}{\sum_{c' \in \{0,1\}} \exp\big(W_L^{c'} F_L(p) + b_L^{c'} + W_G^{c'} F_G + b_G^{c'}\big)},$$

where $(W_L, b_L)$ and $(W_G, b_G)$ are linear operators applied to the local feature map $F_L$ and the global feature map $F_G$, respectively, and $y$ is the ground truth. The loss function is the combination of cross-entropy loss and boundary loss. Figure 4e shows a refined saliency map. The results of the proposed approach on low-contrast images are compiled in Figure 5. The steps involved in encoding and decoding are shown in Figure 6.
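The per-pixel soft-max can be sketched as follows, assuming that the two pairs of linear operators act on the local and global features respectively and that the two classes are background and salient. The array shapes and argument names are hypothetical.

```python
import numpy as np

def saliency_probability(local_map, global_feat, w_local, b_local, w_global, b_global):
    """Per-pixel soft-max over {background, salient} from fused local and global scores.

    local_map: (H, W, C) local features; global_feat: (D,) global descriptor.
    w_local: (C, 2), b_local: (2,), w_global: (D, 2), b_global: (2,).
    """
    scores = local_map @ w_local + b_local + (global_feat @ w_global + b_global)
    scores = scores - scores.max(axis=-1, keepdims=True)  # stabilise the exponentials
    exp = np.exp(scores)
    prob = exp / exp.sum(axis=-1, keepdims=True)
    return prob[..., 1]  # probability of the salient class

rng = np.random.default_rng(0)
prob = saliency_probability(rng.random((4, 4, 3)), rng.random(5),
                            rng.normal(size=(3, 2)), np.zeros(2),
                            rng.normal(size=(5, 2)), np.zeros(2))
print(prob.shape, float(prob.min()) >= 0.0, float(prob.max()) <= 1.0)
```

Because the global term is the same at every pixel, it shifts the scores uniformly, while the local term varies with position.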

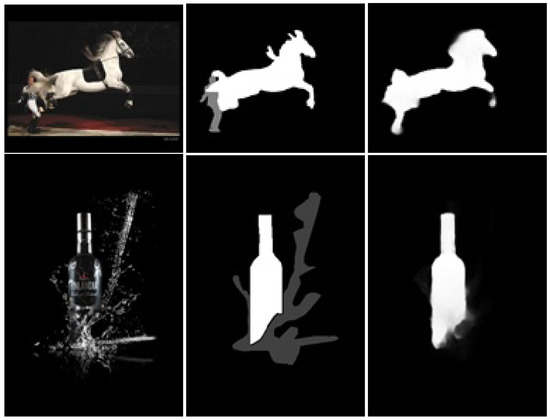

Figure 5.

Salient-object detection results of proposed approach on low-contrast images: (Column 1) input; (Column 2) ground truth; (Column 3) proposed-approach results.

Figure 6.

Encoder/decoder flow to extract refined saliency map.

4. Experimental Results

4.1. Datasets for Evaluation

Testing of the proposed approach was performed on five benchmark datasets:

- MSRA-B [31] comprises 5000 images, most of which contain a single object around the center position, along with bounding-box labels.

- DUT-OMRON [32] includes 5168 images with complex backgrounds and a variety of content. Pixelwise ground-truth annotations are also available.

- The PASCAL-S [33] dataset contains 850 complex images. Eye-fixation records, and nonbinary and pixelwise annotations are also available.

- HKU-IS [34] comprises 4447 images with multiple distant objects. In these, a minimum of one object is present at the image boundary. The small difference between background and foreground makes these images more complex.

- DUTS [35] is a very large SOD dataset containing 5019 test images and 10,553 training images. Many models use this dataset for training.

4.2. Evaluation Metrics

The following evaluation measures were used to compare the performance of the proposed model with that of other models:

- Precision–recall (PR) curve: Calculated by binarising the predicted saliency map and comparing the binary map with the ground truth. A threshold in the range 0–255 is applied to produce the binary map. For each threshold, precision and recall are averaged over all saliency maps in a dataset. Sweeping the threshold over its full range (0 to 255, or equivalently 0 to 1 on normalised maps) yields a sequence of precision–recall pairs, which is used to plot the PR curve.

- F-measure curve: To provide a comprehensive analysis, $F_\beta$ is calculated from both precision and recall as

  $$F_\beta = \frac{(1 + \beta^2) \times \mathrm{Precision} \times \mathrm{Recall}}{\beta^2 \times \mathrm{Precision} + \mathrm{Recall}}.$$

  The value of $\beta^2$ is set to 0.3 to weight precision more, because recall is not as significant as precision. The value of the average F-measure is also presented in this research. The F-measure curve is generated by comparing the ground truth with the binary maps obtained by varying the threshold that decides whether a pixel belongs to a salient object.

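- Mean absolute error (MAE) is used to correctly measure false-negative pixels. It calculates the pixelwise error between saliency map $S$ and ground truth $G$:

  $$\mathrm{MAE} = \frac{1}{W \times H} \sum_{x=1}^{W} \sum_{y=1}^{H} \left| S(x, y) - G(x, y) \right|,$$

  where $W$ and $H$ are the width and height of the map. A lower MAE value indicates that the ground truth and the predicted saliency map are more similar.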
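The three measures above can be sketched in NumPy as follows. The saliency map is assumed to be normalised to [0, 1], and the toy arrays are illustrative only, not data from the experiments.

```python
import numpy as np

def precision_recall(saliency, truth, threshold):
    """Precision and recall of a saliency map in [0, 1] binarised at `threshold`."""
    pred = saliency >= threshold
    truth = truth.astype(bool)
    tp = np.logical_and(pred, truth).sum()
    precision = tp / max(pred.sum(), 1)   # guard against an empty prediction
    recall = tp / max(truth.sum(), 1)     # guard against an empty ground truth
    return precision, recall

def f_measure(precision, recall, beta_sq=0.3):
    """Weighted harmonic mean of precision and recall with beta^2 = 0.3."""
    denom = beta_sq * precision + recall
    return (1 + beta_sq) * precision * recall / denom if denom > 0 else 0.0

def mean_absolute_error(saliency, truth):
    """Average pixelwise absolute difference between map and ground truth."""
    return np.abs(saliency - truth.astype(float)).mean()

truth = np.zeros((4, 4))
truth[1:3, 1:3] = 1
saliency = np.zeros((4, 4))
saliency[1:3, 1:3] = 0.9   # object detected with high confidence
saliency[0, 0] = 0.6       # one false-positive pixel

p, r = precision_recall(saliency, truth, 0.5)
print(p, r, round(f_measure(p, r), 4), mean_absolute_error(saliency, truth))

# One (precision, recall) pair per threshold traces out the PR curve.
pr_curve = [precision_recall(saliency, truth, t) for t in np.linspace(0.0, 1.0, 11)]
```

For a whole dataset, precision and recall would be averaged over all images at each threshold before plotting.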

4.3. Implementation and Experiment Setup

The MSRA-B [31] dataset was used to train the model: 2500 images were used for training and 500 images for validation. To reduce overfitting, the training images were augmented by horizontal flipping.
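Horizontal-flip augmentation must mirror the image and its ground-truth mask together so that they stay aligned. A minimal sketch is given below; the function names and the flip probability of 0.5 are assumptions.

```python
import numpy as np

def flip_pair(image, mask):
    """Mirror an (H, W, 3) image and its (H, W) mask left to right together."""
    return image[:, ::-1], mask[:, ::-1]

def augment(image, mask, rng, flip_probability=0.5):
    """Randomly flip a training pair; the probability is an assumed setting."""
    if rng.random() < flip_probability:
        return flip_pair(image, mask)
    return image, mask

image = np.arange(2 * 3 * 3).reshape(2, 3, 3)
mask = np.array([[1, 0, 0], [0, 0, 1]])
flipped_image, flipped_mask = flip_pair(image, mask)
print(flipped_mask.tolist())  # [[0, 0, 1], [1, 0, 0]]
```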

Pretrained VGG16 [36] was used for feature extraction. When passed to the proposed approach, the image was resized to 208 × 208. An Adam optimiser was utilised for training, and parameters were set to the default values.
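For reference, one Adam update can be sketched as below. The default values shown (learning rate 0.001, beta1 = 0.9, beta2 = 0.999, epsilon = 1e-8) are those suggested in the original Adam paper; they are assumed here because the exact settings are not listed, and the function name is hypothetical.

```python
import numpy as np

def adam_step(param, grad, m, v, t, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update at step t (t starts at 1). Returns the new parameter and moments."""
    m = beta1 * m + (1 - beta1) * grad        # first-moment (mean) estimate
    v = beta2 * v + (1 - beta2) * grad ** 2   # second-moment estimate
    m_hat = m / (1 - beta1 ** t)              # bias correction
    v_hat = v / (1 - beta2 ** t)
    return param - lr * m_hat / (np.sqrt(v_hat) + eps), m, v

param, m, v = adam_step(np.array([1.0]), np.array([2.0]), 0.0, 0.0, t=1)
print(param)
```

On the first step the bias-corrected update has magnitude close to the learning rate regardless of the gradient scale, so the parameter moves from 1.0 to approximately 0.999.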

The publicly available TensorFlow framework was used to implement this model. An Nvidia GeForce GTX 1080 (8119 MiB) GPU with 62 GB RAM and a Linux operating system was used to train the model, while model testing on the different datasets was performed on a Core i5 CPU with 8 GB RAM and a Windows operating system.

4.4. Comparison with the State of the Art

The proposed model was compared with four state-of-the-art saliency-detection models on five datasets. The deep saliency models included nonlocal features (NLDF) [29], contour to saliency (C2S) [11], visual saliency detection based on multiscale deep CNN features (MDF) [37], deep saliency with encoded low-level distance map and high-level features (ELD) [30], salient-object detection in low-contrast images via global convolution and boundary refinement (GCBR) [38], and aggregating multilevel convolutional features for salient-object detection (Amulet) [39].

4.4.1. Quantitative Comparison

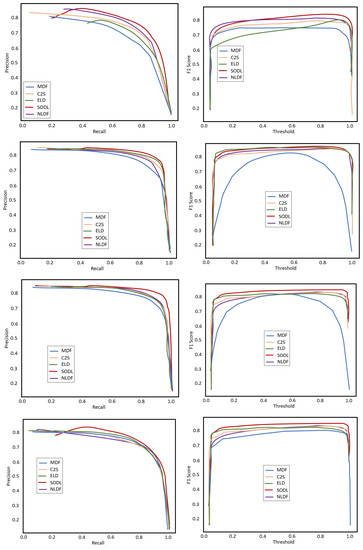

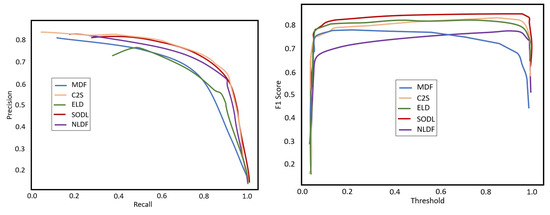

To assess how accurately the proposed model segments objects, the proposed model and four others were tested on five widely used datasets. To show the results, we plotted precision–recall and F-measure curves on each dataset (see Figure 7). Additionally, results of the proposed model and the four others in terms of mean absolute error (MAE) and weighted F-measure (WF) are presented (see Table 2).

Figure 7.

(left) Precision–recall (PR) and (right) F-measure curves of proposed model (SODL) and state-of-the-art (SOA) models.

Table 2.

Comparison of proposed method with SOA methods on five datasets in terms of F-measure (larger is better) and MAE (smaller is better). Note: NLDF, nonlocal features; C2S, contour to saliency; MDF, multiscale deep CNN features; ELD, deep saliency with encoded low-level distance map and high-level features; GCBR, salient-object detection in low-contrast images via global convolution and boundary refinement; Amulet, aggregating multilevel convolutional features for salient-object detection.

Figure 7 shows the PR and F-measure curves of the proposed model and four other state-of-the-art models; the proposed model outperformed all other methods. The quantitative comparison in terms of weighted F-measure and mean absolute error in Table 2 showed that the proposed model ranked first among all models, and the F-measure was improved by 10%, 8%, 7%, 6.1%, and 7.9% for the MSRA-B, DUTS, DUT-OMRON, PASCAL-S, and HKU-IS datasets, respectively.

4.4.2. Qualitative Comparison

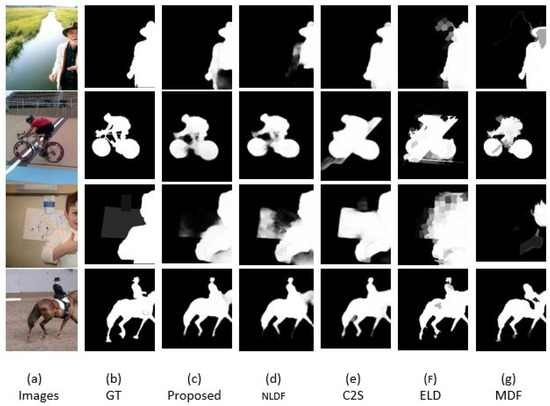

To further highlight the performance of the proposed model and the quality of the saliency map, qualitative analysis of the proposed model on five datasets was also performed. Figure 8 shows the quality of the maps and segmented salient objects from five widely used datasets.

Figure 8.

Visual saliency maps generated by our method and four other methods. Ours achieved the best results, especially in recovering spatial details of salient objects.

Qualitative comparison on the five datasets showed that the proposed model could accurately segment objects in different challenging scenarios, including objects that differ little in color from their background and large objects that touch the image boundaries. Most strategies can produce good results for images with simple scenarios, while the proposed model could achieve better results even in complex scenarios. Many deep-learning models cannot segment and locate objects in complex backgrounds, but the proposed technique was successful in capturing most salient regions.

5. Conclusions

This paper presented a deep convolutional network with the integration of local and global features with boundary refinement. The combination of these features helps in the accurate detection and segmentation of salient objects. The embedded global convolutional and boundary-refinement blocks help in better feature extraction and preserve spatial location present in initial layers, which are later refined by the refinement module, resulting in more distinct features and accurate detection. Moreover, we examined the capabilities of the proposed model on five large and widely used datasets. Experiment results indicated that the proposed method outperformed state-of-the-art methods and has high capability for many computer-vision tasks.

Author Contributions

“Conceptualisation”: W.S., N.A. and N.R.; “Methodology”: W.S. and N.A.; “Software”: W.S.; “Validation”: W.S., N.A. and M.S.; “Formal analysis”: W.S. and N.A.; “Resources”: N.A., M.S. and N.R.; “Initial draft”: W.S. and N.A.; “Review and editing”: N.A., M.S. and N.R.; “Supervision”: N.A.; “Project administration”: N.A. and N.R.; “Funding acquisition”: N.R. All authors have read and agreed to the published version of the manuscript.

Funding

This work was partially supported under Erasmus CBHE Project Number 619483.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Borji, A.; Cheng, M.-M.; Hou, Q.; Jiang, H.; Li, J. Salient Object Detection: A Survey. Comput. Vis. Media 2019, 5, 117–150. [Google Scholar]

- Xu, K.; Ba, J.; Kiros, R. Show, Attend and Tell: Neural Image Caption Generation with Visual Attention. In Proceedings of the Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015. [Google Scholar]

- Fang, H.; Gupta, S.; Iandola, F.; Srivastava, R. From Captions to Visual Concepts and Back. In Proceedings of the Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014. [Google Scholar]

- Borji, A.; Ahmadabadi, M.N.; Araabi, B.N. Cost-sensitive learning of top-down modulation for attentional control. Mach. Vis. Appl. 2011, 22, 61–76. [Google Scholar] [CrossRef]

- Borji, A.; Itti, L. Scene classification with a sparse set of salient regions. In Proceedings of the IEEE International Conference on Robotics and Automation, Shanghai, China, 9–13 May 2011. [Google Scholar]

- Wei, Y.; Liang, X.; Chen, Y. A simple to complex framework for weakly-supervised semantic segmentation. In Proceedings of the Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015. [Google Scholar]

- Li, A.; She, X.; Sun, Q. Color image quality assessment combining saliency and FSIM. In Proceedings of the International Conference on Digital Image Processing, Beijing, China, 21–22 April 2013. [Google Scholar]

- Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantics egmentation. In Proceedings of the CVPR, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440. [Google Scholar]

- Lin, Q.J.; Tao, Y.; Li, W.; Shi, Y. Salient object detection via color and texture cues. Neurocomputing 2017, 243, 35–48. [Google Scholar]

- Achanta, R.; Hemami, S.; Estrada, F.; Susstrunk, S. Frequency-tuned salient region detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009. [Google Scholar]

- Wang, L.; Wang, L.; Lu, H.; Zhang, P.; Ruan, X. Salient Object Detection with Recurrent Fully Convolutional Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 41, 1734–1746. [Google Scholar] [CrossRef] [PubMed]

- Singh, V.K.; Kumar, N. Saliency bagging: A novel framework for robust salient object detection. Vis. Comput. 2019, 36, 1423–1441. [Google Scholar] [CrossRef]

- Feng, M.; Lu, H.; Ding, E. Attentive Feedback Network for Boundary-Aware Salient Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019. [Google Scholar]

- Wang, Y.; Zhao, X.; Hu, X.; Li, Y.; Huang, K. Focal Boundary Guided Salient Object Detection. IEEE Trans. Image Process. 2019, 28, 2813–2824. [Google Scholar] [CrossRef] [PubMed]

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Region-Based Convolutional Networks for Accurate Object Detection and Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 38, 142–158. [Google Scholar] [CrossRef] [PubMed]

- Eitel, A.; Springenberg, J.T.; Spinello, L.; Riedmiller, M.; Burgard, W. Multimodal deep learning for robust RGB-D object recognition. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015; pp. 681–687. [Google Scholar]

- Sokalski, J.; Breckon, T.P.; Cowling, I. Automatic salient object detection in UAV imagery. In Proceedings of the Automatic Salient Object Detection In UAV Imagery 25th International UAV Systems Conference, Bristol, UK, 12–14 April 2010; pp. 1–12. [Google Scholar]

- Cheng, M.-M.; Mitra, N.J.; Huang, X.; Torr, P.H.S.; Hu, S.-M. Global Contrast Based Salient Region Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 569–582. [Google Scholar] [CrossRef] [PubMed]

- Hou, X.; Harel, J.; Koch, C. Image Signature: Highlighting Sparse Salient Regions. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 194–201. [Google Scholar]

- Qin, X.; Zhang, Z.; Huang, C.; Gao, C.; Dehghan, M.; Jagersand, M. BASNet: Boundary-Aware Salient Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019. [Google Scholar]

- Li, J.; Levine, M.D.; An, X.; Xu, X.; He, H. Visual saliency based on scale-space analysis in the Frequency Domain. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 996–1010. [Google Scholar] [CrossRef] [PubMed]

- Imamoglu, N.; Lin, W.; Fang, Y. A Saliency Detection Model Using Low-Level Features Based on Wavelet Transform. IEEE Trans. Multimed. 2013, 15, 96–105. [Google Scholar] [CrossRef]

- Zou, W.; Liu, Z.; Kpalma, K.; Ronsin, J.; Zhao, Y.; Komodakis, N. Unsupervised Joint Salient Region Detection and Object Segmentation. IEEE Trans. Image Process. 2015, 24, 3858–3873.

- Tong, N.; Lu, H.; Ruan, X.; Yang, M.-H. Salient object detection via bootstrap learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015.

- Liu, Y.; Han, J.; Zhang, Q.; Shan, C. Deep Salient Object Detection with Contextual Information Guidance. IEEE Trans. Image Process. 2019, 29, 360–374.

- Zhu, L.; Chen, Y.; Yuille, A.; Freeman, W. Latent hierarchical structural learning for object detection. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010.

- Ding, X.; Luo, Y.; Li, Q.; Cheng, Y.; Cai, G.; Munnoch, R.; Xue, D.; Yu, Q.; Zheng, X.; Wang, B. Prior knowledge-based deep learning method for indoor object recognition and application. Syst. Sci. Control Eng. 2018, 6, 249–257.

- Zhang, Y.; Li, X.; Zhang, Z.; Wu, F.; Zhao, L. Deep learning driven blockwise moving object detection with binary scene modeling. Neurocomputing 2015, 168, 454–463.

- Luo, Z.; Mishra, A.; Achkar, A.; Eichel, J.; Li, S.; Jodoin, P.-M. Non-local deep features for salient object detection. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6593–6601.

- Lee, G.; Tai, Y.-W.; Kim, J. Deep saliency with encoded low level distance map and high level features. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 660–668.

- Liu, T.; Sun, J.; Zheng, N.-N.; Tang, X.; Shum, H.-Y. Learning to Detect A Salient Object. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Minneapolis, MN, USA, 17–22 June 2007.

- Yang, C.; Zhang, L.; Lu, H.; Ruan, X.; Yang, M. Saliency detection via graph-based manifold ranking. In Proceedings of the 2013 IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 3166–3173.

- Li, Y.; Hou, X.; Koch, C.; Rehg, J.M.; Yuille, A.L. The secrets of salient object segmentation. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014.

- Li, G.; Yu, Y. Visual saliency based on multiscale deep features. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 5455–5463.

- Wang, L.; Lu, H.; Wang, Y.; Feng, M.; Wang, D.; Yin, B.; Ruan, X. Learning to detect salient objects with image-level supervision. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 3796–3805.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- Li, G.; Yu, Y. Visual saliency detection based on multiscale deep CNN features. IEEE Trans. Image Process. 2016, 25, 5012–5024.

- Mu, N.; Xu, X.; Zhang, X. Salient Object Detection in Low Contrast Images via Global Convolution and Boundary Refinement. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 16–20 June 2019.

- Zhang, P.; Wang, D.; Lu, H.; Wang, H.; Ruan, X. Amulet: Aggregating multi-level convolutional features for salient object detection. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 202–211.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).