Active Sonar Target Classification with Power-Normalized Cepstral Coefficients and Convolutional Neural Network

Abstract

Featured Application


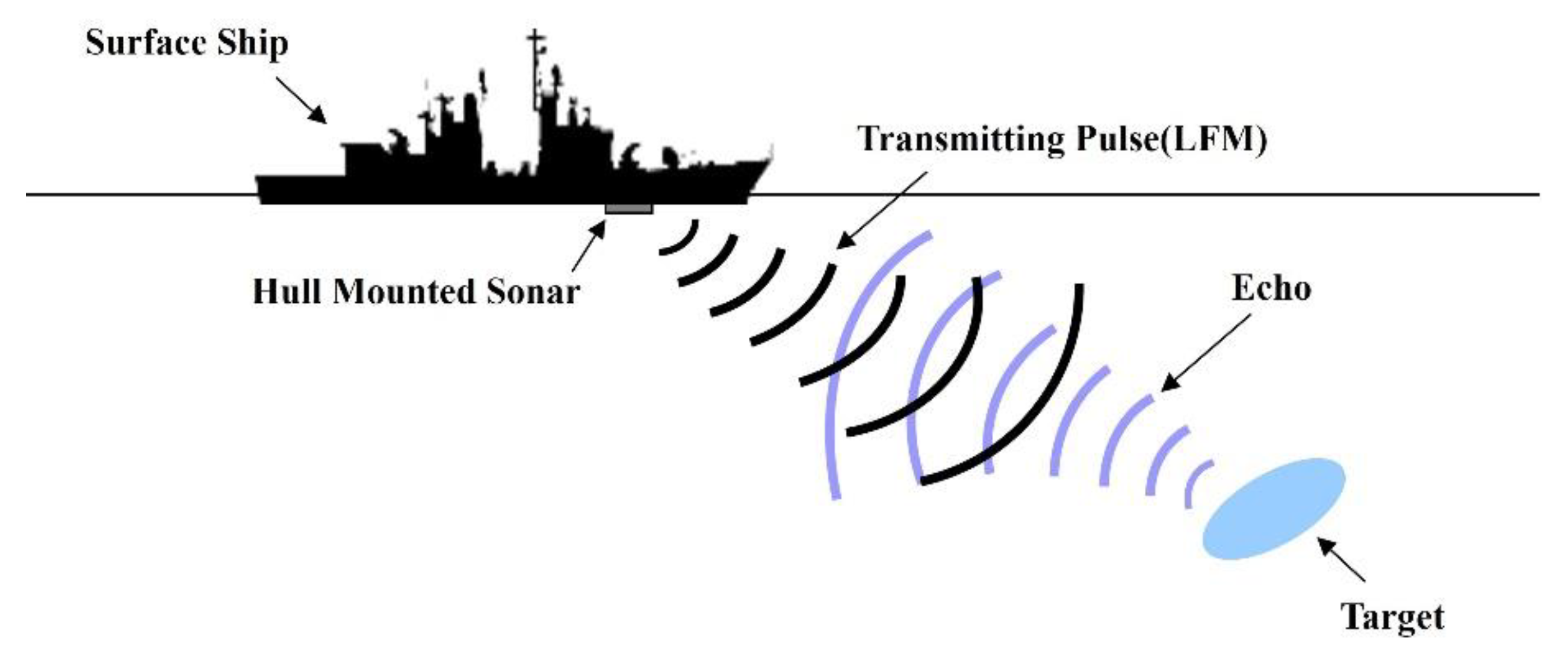

1. Introduction

- Target detection is a complex pattern-classification problem because underwater environments vary widely and change over time. The complexity of the acoustic propagation environment causes loss of signal information, distortion of the acoustic waveform, and incomplete reception of acoustic signals.

- A target that realizes it has been detected will take evasive action; therefore, weak target echoes must be classified and tracked continuously.

- Because long-range detection with low-frequency active sonar yields low-resolution data, feature extraction algorithms are required.

- Long pulse repetition intervals (PRIs) are needed to detect long-range targets, so relatively little data accumulates over time.

- Obtaining underwater-target data from a variety of sea experiments is very difficult.
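Why long PRIs limit data accumulation follows from the two-way travel time: the next ping should not be transmitted until echoes from the maximum range of interest have returned, so PRI ≥ 2R/c. A minimal sketch, assuming a nominal sound speed of 1500 m/s, an illustrative 30 km maximum range, and a 10-minute observation window (none of these values come from the study):

```python
SOUND_SPEED_M_S = 1500.0  # nominal sound speed in seawater (assumed value)


def min_pri_seconds(max_range_m, c=SOUND_SPEED_M_S):
    """Shortest PRI that lets an echo from max_range_m return before the next ping."""
    return 2.0 * max_range_m / c


def pings_in_window(window_s, pri_s):
    """Number of complete ping cycles that fit in an observation window."""
    return int(window_s // pri_s)


if __name__ == "__main__":
    pri = min_pri_seconds(30_000.0)   # illustrative 30 km maximum range
    n = pings_in_window(600.0, pri)   # illustrative 10-minute window
    print(f"PRI >= {pri:.0f} s, {n} pings in 10 min")  # PRI >= 40 s, 15 pings in 10 min
```

Under these assumptions, only about 15 echoes per target are collected in ten minutes, which illustrates why long-range active sonar data accumulate slowly.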

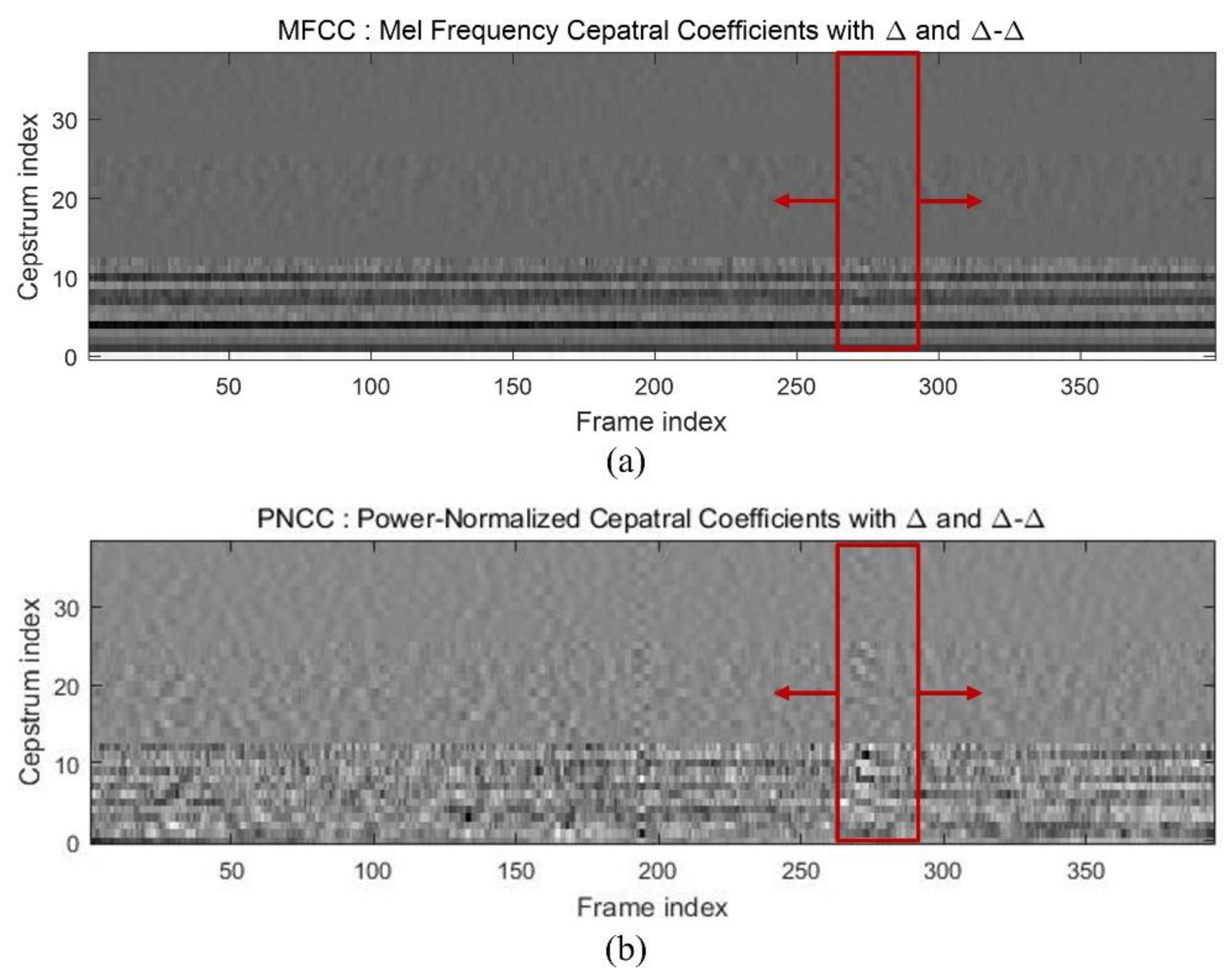

2. Introduction to Acoustic Feature Extraction Methods

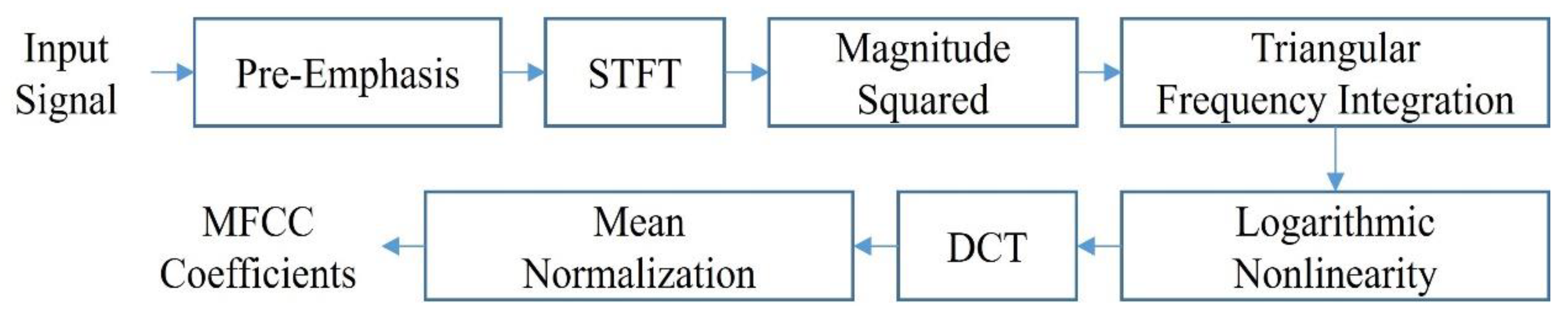

2.1. Mel-Frequency Cepstral Coefficients

- Pre-Emphasis: Acts as a first-order high-pass filter that emphasizes high-frequency components.

- STFT: The signal is divided into short frames of about 20 to 40 ms, and the Fourier transform of each frame gives its frequency components.

- Magnitude Squared: Computes the power (squared-magnitude) spectrum of each frame.

- Triangular Frequency Integration: A bank of triangular filters spaced on the mel scale is applied to each frame's power spectrum.

- Logarithmic Nonlinearity: Takes the logarithm of each filter-bank output.

- DCT: Applies the discrete cosine transform to the log filter-bank outputs to obtain the cepstral coefficients.

- Mean Normalization: Subtracts the mean of each coefficient over all frames, reducing the influence of slowly varying (e.g., channel) components.
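The MFCC steps listed above can be sketched in NumPy as follows. This is an illustration, not the authors' code: the 25 ms frame, 10 ms hop, 512-point FFT, 26 filters, 13 coefficients, pre-emphasis coefficient 0.97, and Hamming window are common speech-processing defaults assumed here, and sonar echoes may need different values. The sketch also assumes the signal is at least one frame long and that a frame fits in `n_fft` samples.

```python
import numpy as np


def hz_to_mel(f):
    """Convert frequency in Hz to the mel scale."""
    return 2595.0 * np.log10(1.0 + np.asarray(f, dtype=float) / 700.0)


def mel_to_hz(m):
    """Convert mel-scale values back to Hz."""
    return 700.0 * (10.0 ** (np.asarray(m, dtype=float) / 2595.0) - 1.0)


def mel_filterbank(n_filters, n_fft, fs):
    """Triangular filters whose centers are equally spaced on the mel scale."""
    mel_pts = np.linspace(hz_to_mel(0.0), hz_to_mel(fs / 2.0), n_filters + 2)
    bins = np.floor((n_fft + 1) * mel_to_hz(mel_pts) / fs).astype(int)
    fb = np.zeros((n_filters, n_fft // 2 + 1))
    for i in range(1, n_filters + 1):
        left, center, right = bins[i - 1], bins[i], bins[i + 1]
        for k in range(left, center):   # rising edge
            fb[i - 1, k] = (k - left) / max(center - left, 1)
        for k in range(center, right):  # falling edge
            fb[i - 1, k] = (right - k) / max(right - center, 1)
    return fb


def dct_ii(x, n_coeffs):
    """Orthonormal DCT-II along the last axis, keeping the first n_coeffs terms."""
    n = x.shape[-1]
    k = np.arange(n_coeffs)[:, None]
    j = np.arange(n)[None, :]
    basis = np.sqrt(2.0 / n) * np.cos(np.pi * k * (2 * j + 1) / (2 * n))
    basis[0] /= np.sqrt(2.0)
    return x @ basis.T


def mfcc(x, fs, frame_ms=25.0, hop_ms=10.0, n_fft=512,
         n_filters=26, n_ceps=13, alpha=0.97):
    """Return an MFCC matrix of shape (n_frames, n_ceps)."""
    x = np.asarray(x, dtype=float)
    x = np.append(x[0], x[1:] - alpha * x[:-1])          # pre-emphasis
    frame_len = int(round(fs * frame_ms / 1000.0))
    hop = int(round(fs * hop_ms / 1000.0))
    n_frames = 1 + (len(x) - frame_len) // hop
    idx = np.arange(frame_len)[None, :] + hop * np.arange(n_frames)[:, None]
    frames = x[idx] * np.hamming(frame_len)              # short-time frames
    power = np.abs(np.fft.rfft(frames, n=n_fft)) ** 2    # magnitude squared
    energies = power @ mel_filterbank(n_filters, n_fft, fs).T  # triangular integration
    log_e = np.log(np.maximum(energies, 1e-10))          # log nonlinearity
    ceps = dct_ii(log_e, n_ceps)                         # DCT
    return ceps - ceps.mean(axis=0, keepdims=True)       # mean normalization


if __name__ == "__main__":
    fs = 8000
    t = np.arange(fs) / fs
    rng = np.random.default_rng(0)
    echo = np.sin(2 * np.pi * 1000 * t) + 0.1 * rng.standard_normal(fs)
    print(mfcc(echo, fs).shape)  # (98, 13)
```

For a one-second signal at 8 kHz, the 200-sample frames and 80-sample hop give 98 frames of 13 coefficients each.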

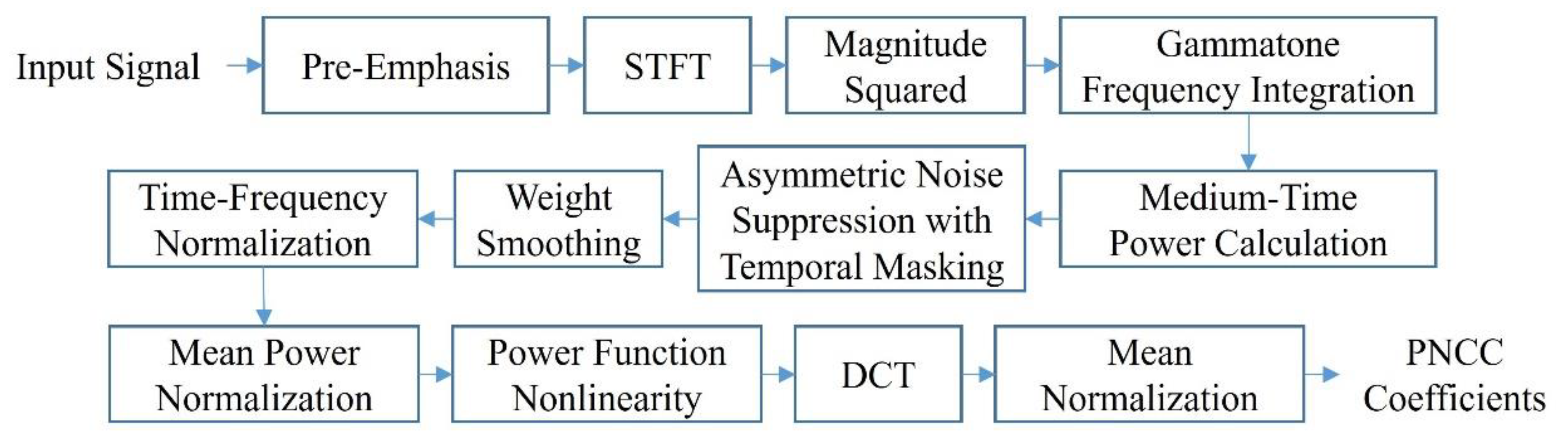

2.2. Power-Normalized Cepstral Coefficients

- Pre-Emphasis: Acts as a first-order high-pass filter that emphasizes high-frequency components.

- STFT: The signal is divided into short frames of about 20 to 40 ms, and the Fourier transform of each frame gives its frequency components.

- Magnitude Squared: Computes the power (squared-magnitude) spectrum of each frame.

- Gammatone Frequency Integration: A gammatone filter bank is applied to each frame's power spectrum.

- Medium-Time Power Calculation: The power in each channel is averaged over a longer, medium-time window of about 50 to 120 ms.

- Asymmetric Noise Suppression with Temporal Masking: The background-noise level in each channel is estimated frame by frame with an asymmetric nonlinear filter and subtracted from the input; temporal masking is also applied, emphasizing onsets relative to the power that follows them.

- Weight Smoothing: The resulting gain (transfer function) is smoothed across neighboring frequency channels.

- Time-Frequency Normalization: The smoothed gains are applied to the original short-time power in each time-frequency bin.

- Mean Power Normalization: The power is divided by a running estimate of its mean, reducing sensitivity to the overall signal level.

- Power Function Nonlinearity: A power-law nonlinearity (an exponent of 1/15 in the original PNCC formulation) is applied in place of the logarithm used for MFCC.

- DCT: Applies the discrete cosine transform to obtain the cepstral coefficients.

- Mean Normalization: Subtracts the mean of each coefficient over all frames, reducing the influence of slowly varying (e.g., channel) components.
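The steps that distinguish PNCC from MFCC can be sketched in NumPy as below. This is a simplified illustration, not the authors' implementation: it assumes the gammatone-integrated power `P` (frames × channels) has already been computed, it omits the noise-floor and excitation/non-excitation switching of Kim and Stern's full algorithm, and its parameter values (M = 2 frames of context, λa = 0.999, λb = 0.5, λt = 0.85, μt = 0.2, N = 4 channels of smoothing, λμ = 0.999, exponent 1/15) follow our reading of Kim and Stern's PNCC description and should be treated as assumptions. All function names are hypothetical.

```python
import numpy as np


def dct_ii(x, n_coeffs):
    """Orthonormal DCT-II along the last axis, keeping the first n_coeffs terms."""
    n = x.shape[-1]
    k = np.arange(n_coeffs)[:, None]
    j = np.arange(n)[None, :]
    basis = np.sqrt(2.0 / n) * np.cos(np.pi * k * (2 * j + 1) / (2 * n))
    basis[0] /= np.sqrt(2.0)
    return x @ basis.T


def asymmetric_filter(x, lam_a=0.999, lam_b=0.5):
    """First-order filter over time (axis 0) that rises slowly and falls quickly,
    so its output follows the lower envelope (a background-noise estimate)."""
    y = np.empty_like(x)
    y[0] = x[0]
    for m in range(1, x.shape[0]):
        y[m] = np.where(x[m] >= y[m - 1],
                        lam_a * y[m - 1] + (1 - lam_a) * x[m],
                        lam_b * y[m - 1] + (1 - lam_b) * x[m])
    return y


def temporal_masking(q0, lam_t=0.85, mu_t=0.2):
    """Pass power that exceeds a decaying running peak; otherwise replace it
    with a fixed fraction of that peak, de-emphasizing power after an onset."""
    out = np.empty_like(q0)
    peak = np.array(q0[0], dtype=float)
    out[0] = q0[0]
    for m in range(1, q0.shape[0]):
        decayed = lam_t * peak
        out[m] = np.where(q0[m] >= decayed, q0[m], mu_t * peak)
        peak = np.maximum(decayed, q0[m])
    return out


def pncc_from_gammatone_power(P, M=2, N=4, lam_mu=0.999, exponent=1.0 / 15.0,
                              n_ceps=13, eps=1e-12):
    """Simplified PNCC back end. P: gammatone-integrated power, (frames, channels)."""
    P = np.asarray(P, dtype=float)
    T, L = P.shape
    # Medium-time power: mean over 2M+1 neighboring frames (edges padded)
    padded = np.pad(P, ((M, M), (0, 0)), mode="edge")
    Q = np.stack([padded[m:m + 2 * M + 1].mean(axis=0) for m in range(T)])
    # Asymmetric noise suppression: subtract the lower envelope, half-wave rectify
    Q0 = np.maximum(Q - asymmetric_filter(Q), 0.0)
    # Temporal masking
    R = temporal_masking(Q0)
    # Weight smoothing: per-bin gain R/Q averaged over channels l-N..l+N
    w = R / np.maximum(Q, eps)
    w_s = np.stack([w[:, max(0, l - N):min(L, l + N + 1)].mean(axis=1)
                    for l in range(L)], axis=1)
    # Time-frequency normalization: apply smoothed gains to short-time power
    Tp = P * w_s
    # Mean power normalization with a running average of mean channel power
    mu = np.empty(T)
    prev = Tp.mean()  # initializing with the global mean is an assumption
    for m in range(T):
        prev = lam_mu * prev + (1 - lam_mu) * Tp[m].mean()
        mu[m] = prev
    U = Tp / np.maximum(mu[:, None], eps)
    # Power-function nonlinearity in place of the logarithm
    V = U ** exponent
    # DCT and mean normalization
    ceps = dct_ii(V, n_ceps)
    return ceps - ceps.mean(axis=0, keepdims=True)
```

The power-law exponent compresses the dynamic range like a logarithm but without diverging toward negative infinity for near-zero power, which is one motivation usually given for PNCC's robustness in noise.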

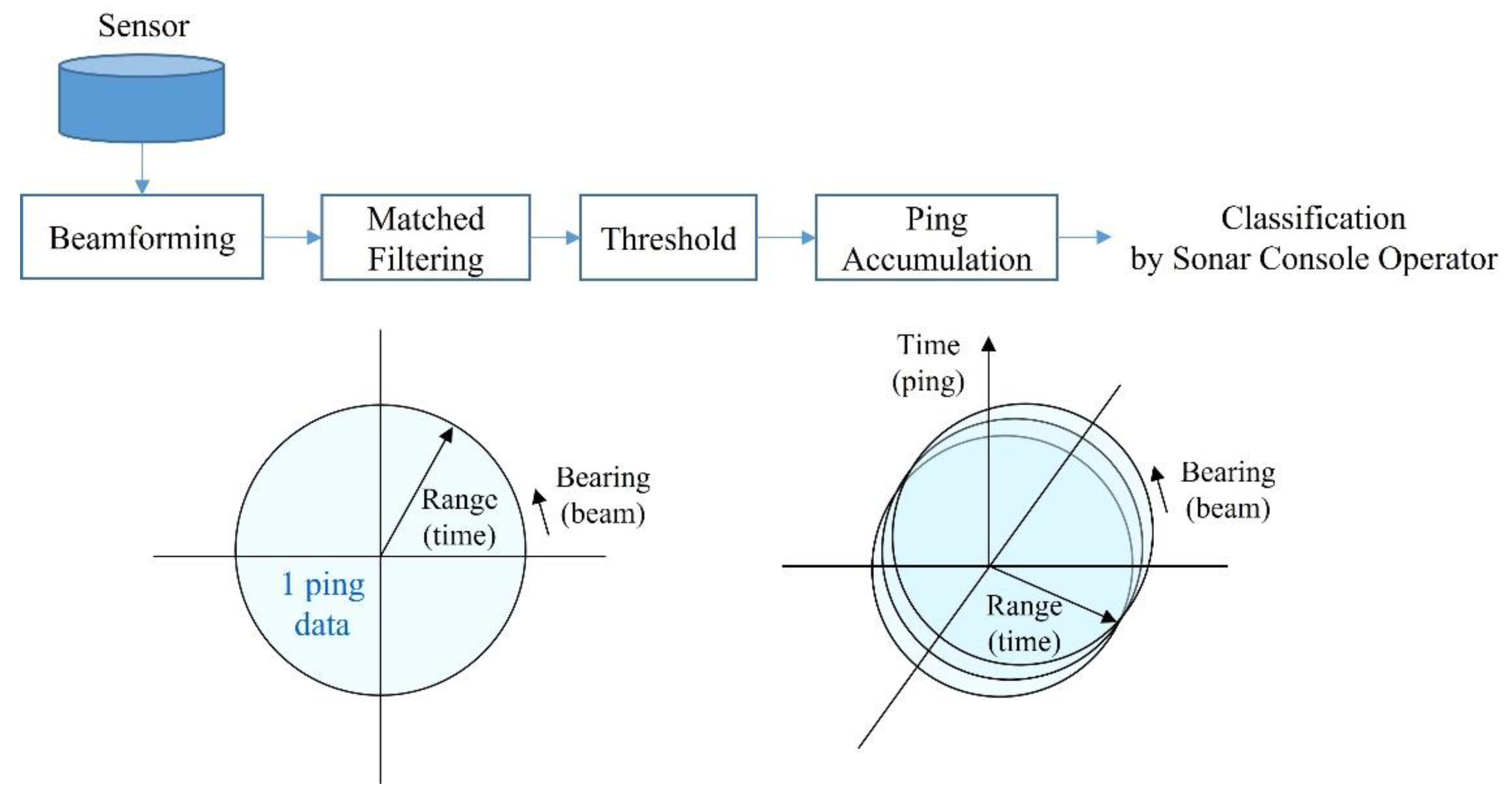

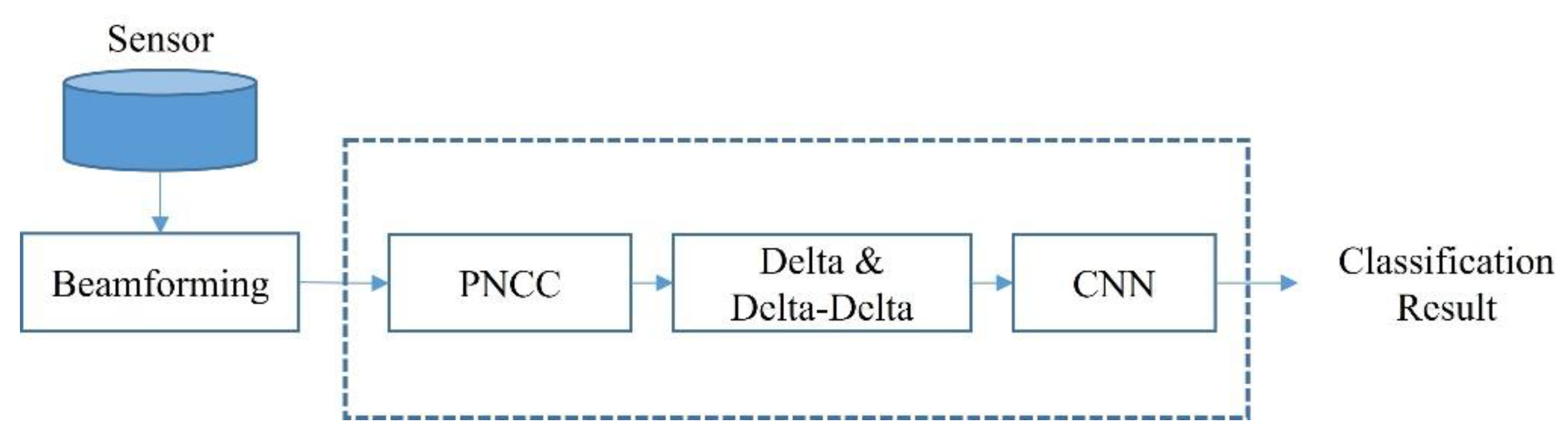

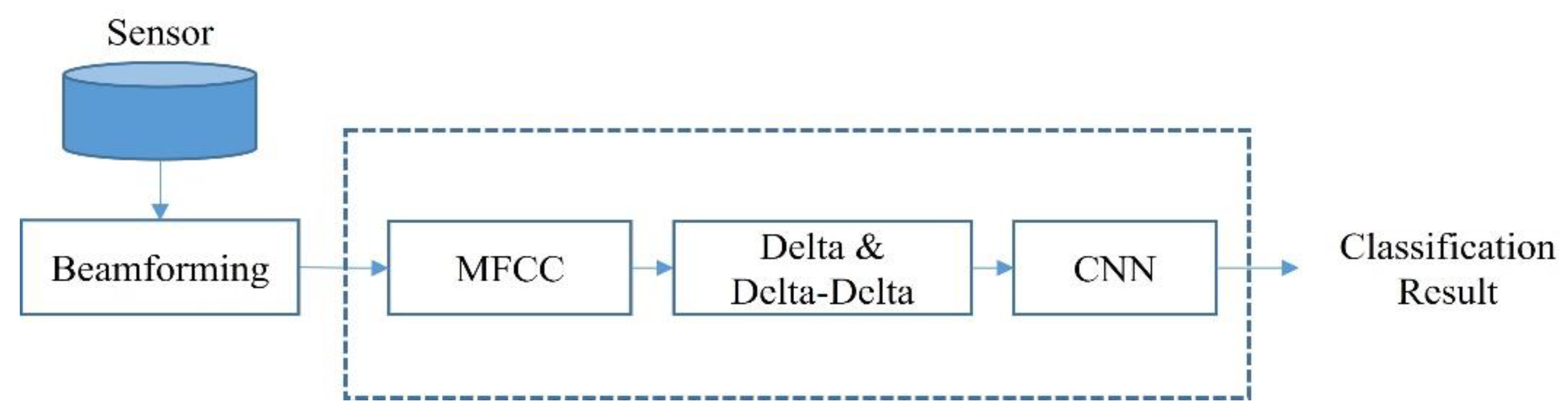

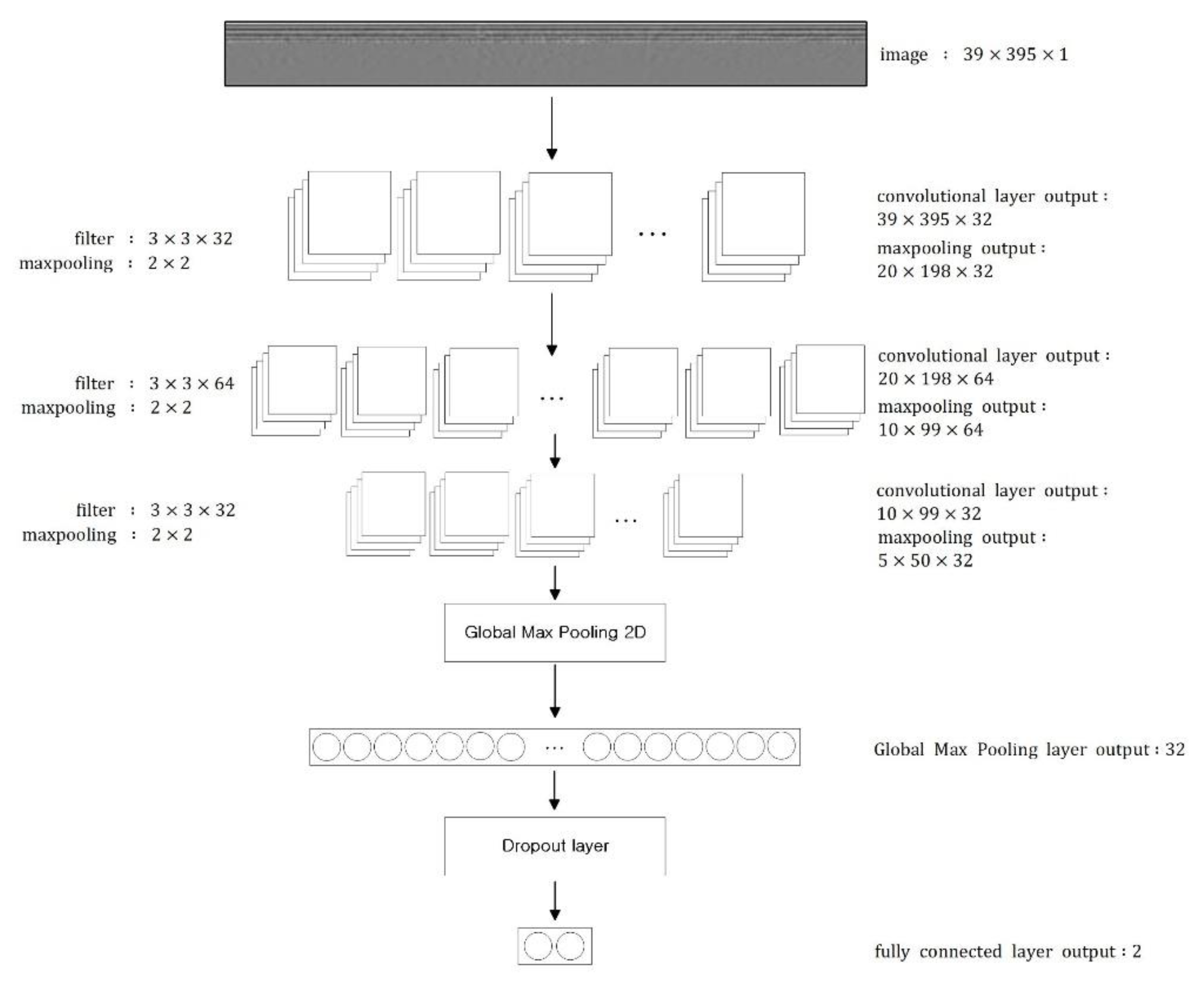

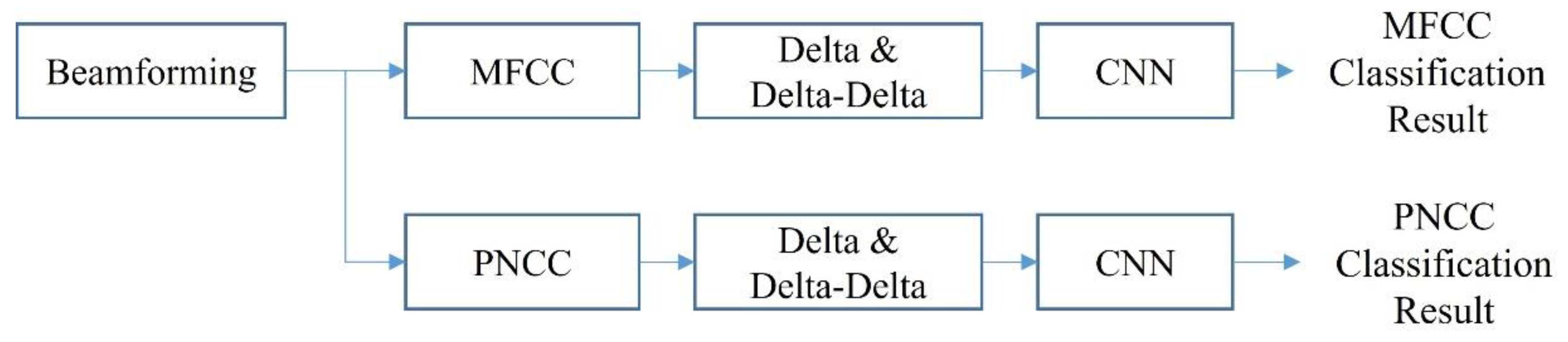

3. The Proposed Algorithm

4. Experimental Results and Discussion

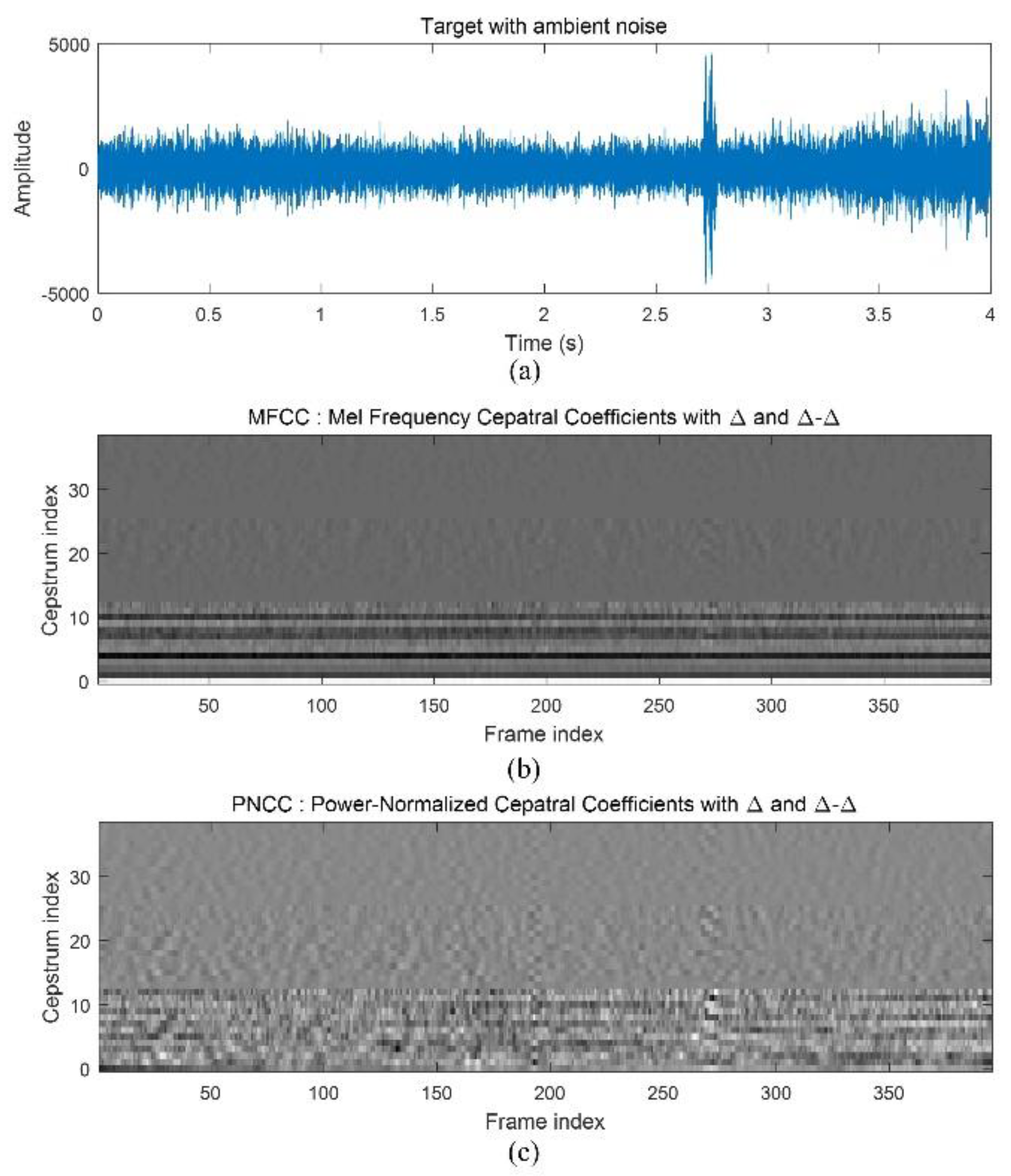

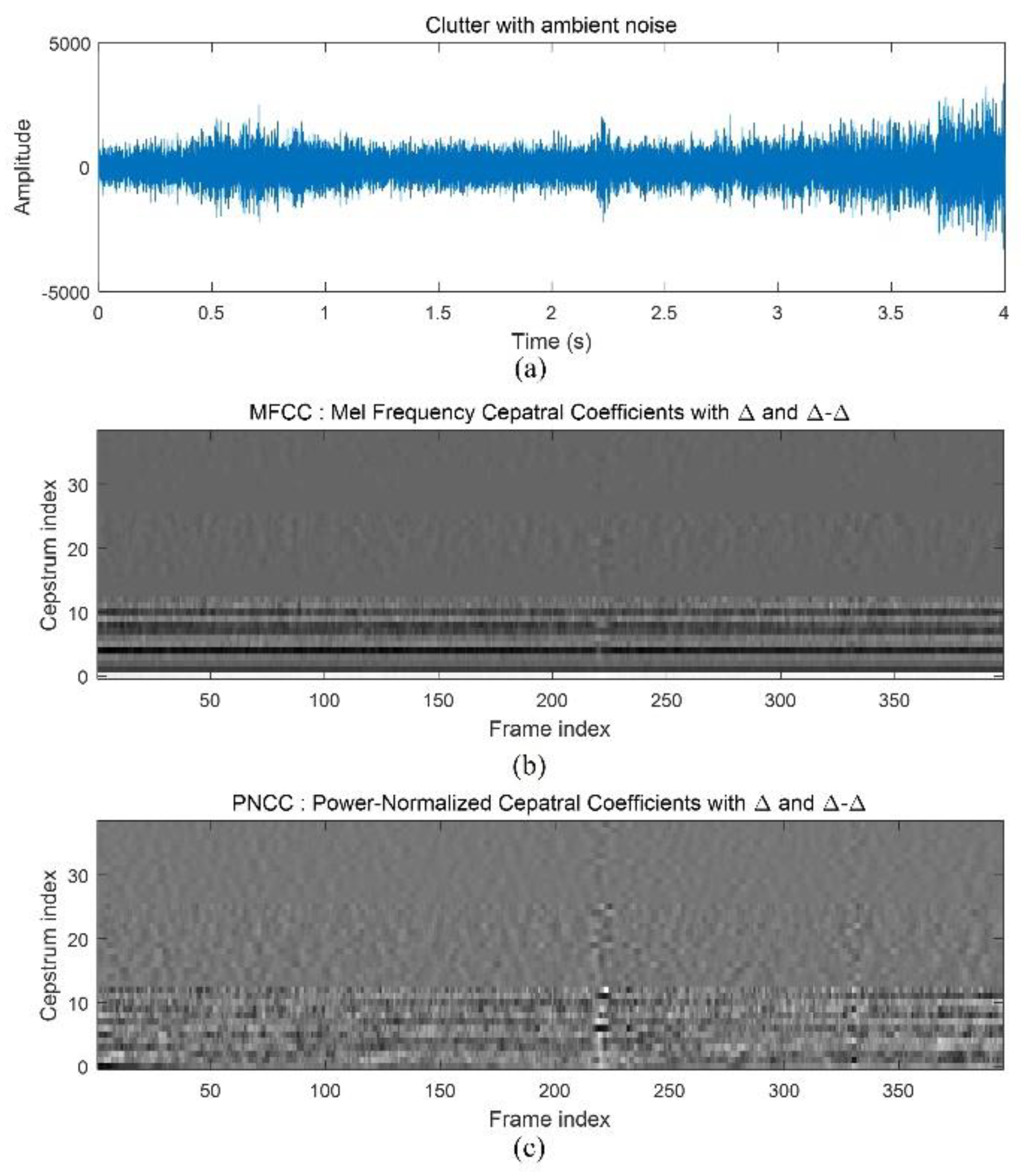

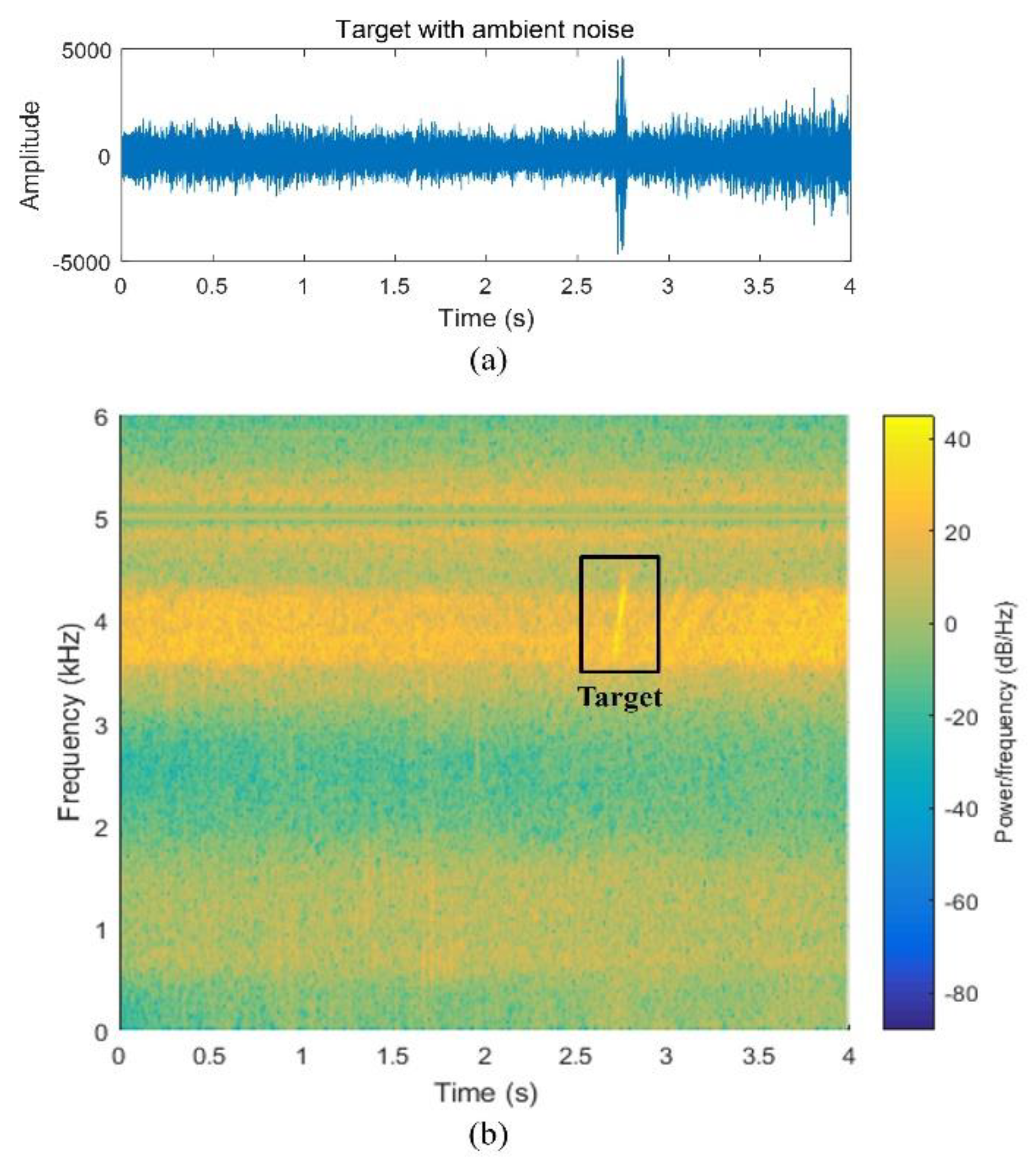

4.1. Experiments Data

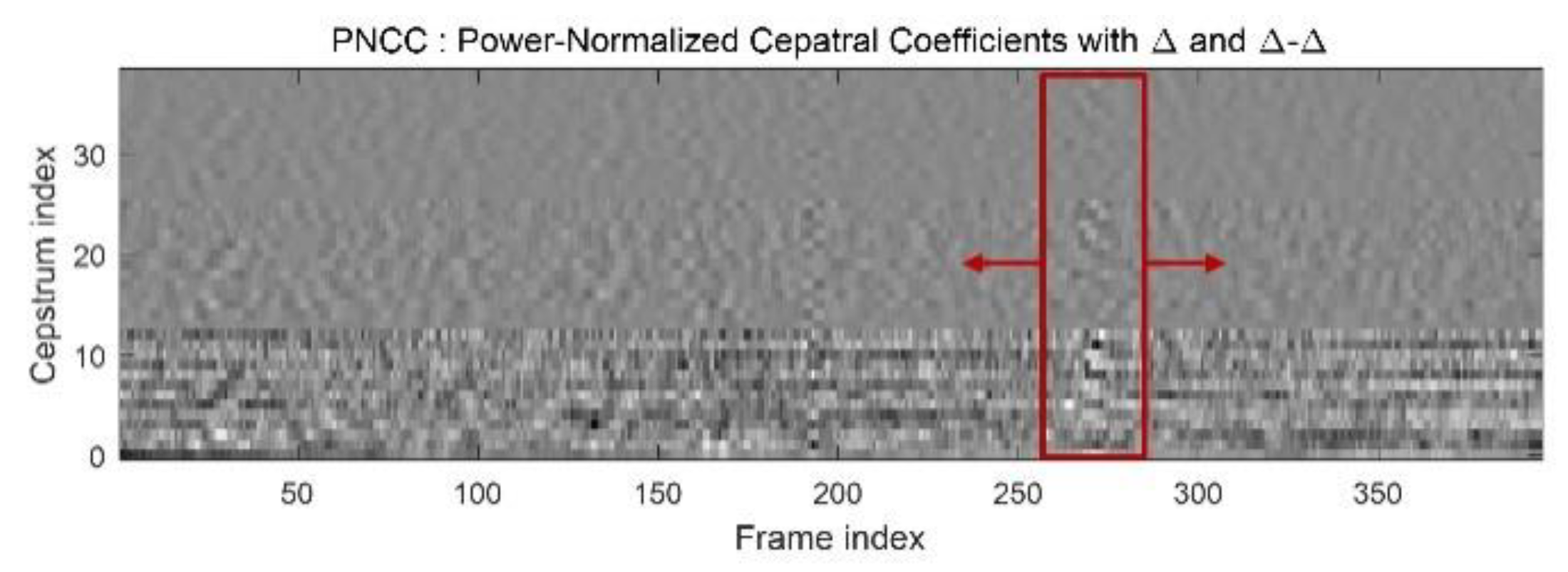

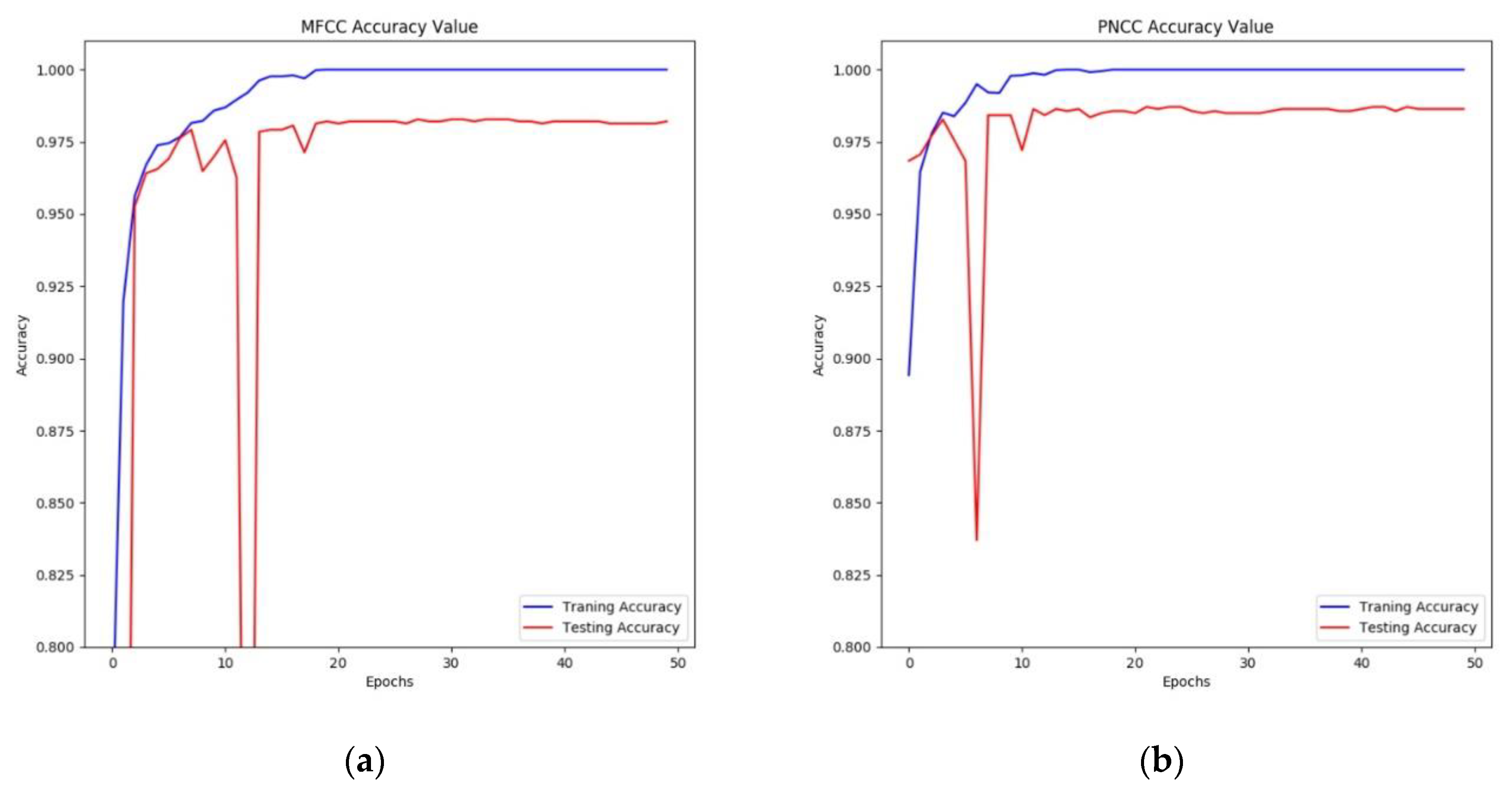

4.2. Results and Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Item | Number of Samples |
|---|---|
| Target (after data augmentation) | 3610 |
| Clutter | 3351 |
| Training | 5568 |
| Testing | 1393 |
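The class counts and the training/testing counts both sum to 6961 samples, and the training share is almost exactly 80%. A quick consistency check; reading this as an 80/20 split rounded down is our inference from the numbers, not a protocol stated in this section:

```python
counts = {"target": 3610, "clutter": 3351}
n_train, n_test = 5568, 1393

total = sum(counts.values())
train_fraction = n_train / total

print(total, n_train + n_test, round(train_fraction, 3))  # 6961 6961 0.8
print(int(0.8 * total) == n_train)                        # True
```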

| Parameter | Value |
|---|---|
| Epochs | 50 |
| Learning rate | 0.001 |
| Batch size | 32 |
| Weight initialization | He |
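With 5568 training samples and a batch size of 32, one epoch is exactly 174 mini-batches, so 50 epochs give 8700 parameter updates, assuming the whole training set is used every epoch with no held-out validation split (an assumption). He initialization is commonly formulated as drawing weights from a zero-mean normal distribution with variance 2/fan_in. A minimal NumPy sketch; the 3×3 convolution layer with one input channel and 32 output channels is hypothetical, not the network described in this article:

```python
import math

import numpy as np

N_TRAIN, BATCH_SIZE, EPOCHS = 5568, 32, 50

steps_per_epoch = math.ceil(N_TRAIN / BATCH_SIZE)
total_updates = steps_per_epoch * EPOCHS


def he_normal(shape, fan_in, rng):
    """He (Kaiming) normal initialization: zero mean, variance 2 / fan_in."""
    return rng.normal(0.0, math.sqrt(2.0 / fan_in), size=shape)


rng = np.random.default_rng(0)
# Hypothetical conv layer: 3x3 kernel, 1 input channel, 32 output channels.
kernel = he_normal((3, 3, 1, 32), fan_in=3 * 3 * 1, rng=rng)

print(steps_per_epoch, total_updates)   # 174 8700
print(float(kernel.std()))              # close to sqrt(2/9), about 0.47
```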

| Feature Extraction | Class | Classified as Target | Classified as Clutter |
|---|---|---|---|
| MFCC | Target | 97.234% | 2.766% |
| MFCC | Clutter | 1.493% | 98.507% |
| PNCC | Target | 98.617% | 1.383% |
| PNCC | Clutter | 0.896% | 99.104% |

| Feature Extraction | Precision | Recall | F-Measure |
|---|---|---|---|
| MFCC | 98.49% | 97.23% | 97.86% |
| PNCC | 99.10% | 98.62% | 98.86% |
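The precision and F-measure values above appear consistent with computing them directly from the row-normalized confusion-matrix rates, treating "target" as the positive class (which amounts to weighting the target and clutter classes equally). A small check; the function name is hypothetical:

```python
def metrics_from_rates(tpr, fpr):
    """Precision, recall, and F-measure for the target class from row-normalized rates.

    tpr: fraction of true targets classified as target (this is the recall).
    fpr: fraction of true clutter classified as target.
    Using rates rather than counts implicitly weights both classes equally.
    """
    precision = tpr / (tpr + fpr)
    recall = tpr
    f_measure = 2 * precision * recall / (precision + recall)
    return precision, recall, f_measure


for name, tpr, fpr in [("MFCC", 0.97234, 0.01493), ("PNCC", 0.98617, 0.00896)]:
    p, r, f = metrics_from_rates(tpr, fpr)
    print(f"{name}: P={p:.2%} R={r:.2%} F={f:.2%}")
# MFCC: P=98.49% R=97.23% F=97.86%
# PNCC: P=99.10% R=98.62% F=98.86%
```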

| Item | MFCC | PNCC |
|---|---|---|
| Total number of multiplications and divisions | 13,010 | 17,516 |
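From these operation counts, PNCC needs 4506 more multiplications and divisions than MFCC, roughly 35% more. The arithmetic:

```python
ops = {"MFCC": 13_010, "PNCC": 17_516}

extra = ops["PNCC"] - ops["MFCC"]
ratio = ops["PNCC"] / ops["MFCC"]

print(extra, f"{ratio - 1:.1%}")  # 4506 34.6%
```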

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Lee, S.; Seo, I.; Seok, J.; Kim, Y.; Han, D.S. Active Sonar Target Classification with Power-Normalized Cepstral Coefficients and Convolutional Neural Network. Appl. Sci. 2020, 10, 8450. https://doi.org/10.3390/app10238450
