GPON PLOAMd Message Analysis Using Supervised Neural Networks

Abstract

1. Introduction

2. Related Work

3. Data Characteristics

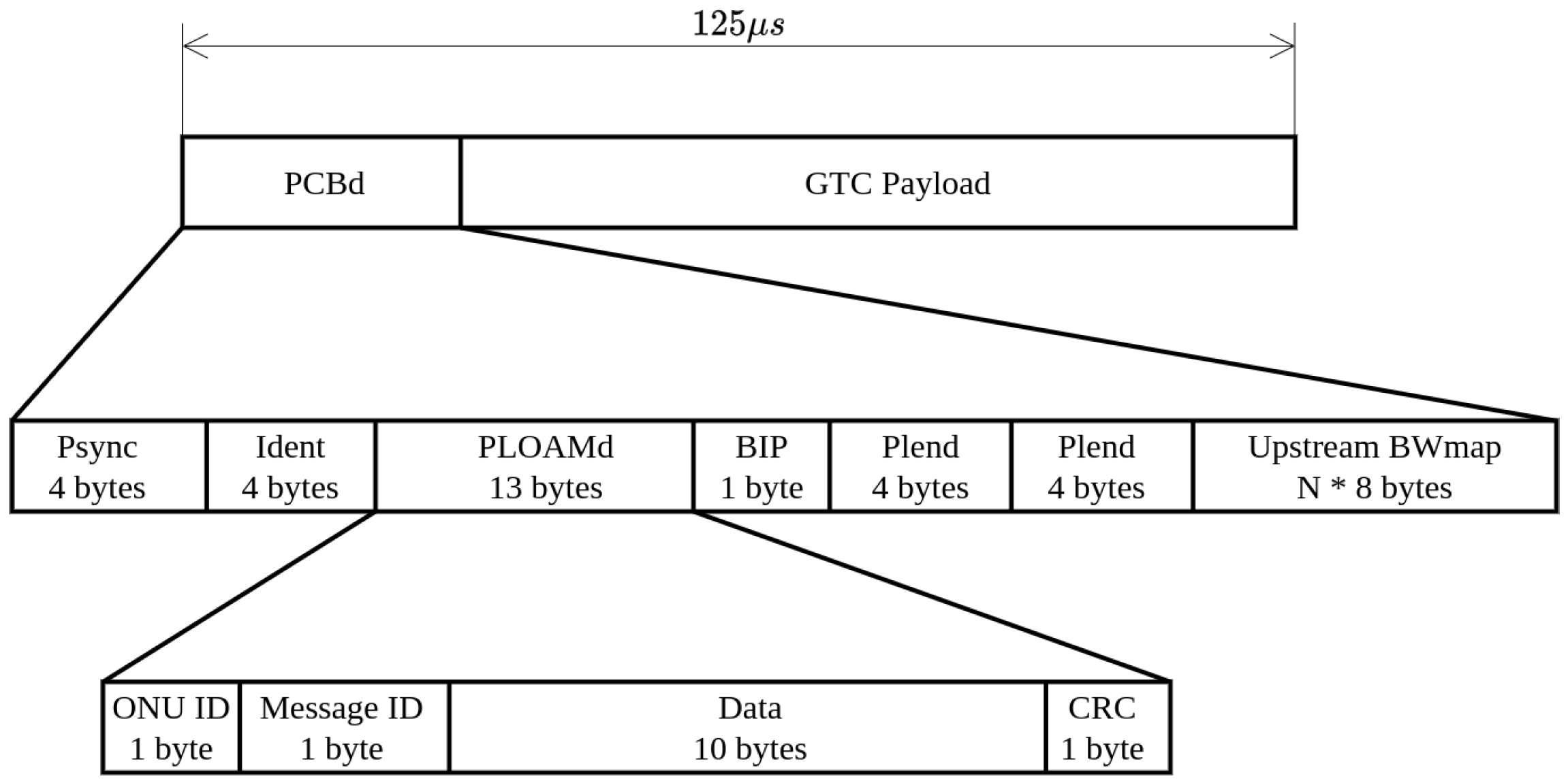

3.1. GPON Frame Header

- ONU ID specifies the receiving ONU.

- Message ID defines the type of message.

- DATA carries the payload specific to the given message type.

- Cyclic redundancy check (CRC) is used to verify the correctness of field data.
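The four fields listed above can be illustrated with a small parser. This is a minimal sketch, not the authors' tooling: the field widths (1 + 1 + 10 + 1 bytes, 13 bytes in total) and the CRC-8 generator polynomial 0x07 are assumptions taken from the usual reading of ITU-T G.984.3 and should be checked against the specification. The names `PloamMessage`, `parse_ploam`, and `crc8` are hypothetical.

```python
from dataclasses import dataclass

# Assumed layout: ONU ID (1 B), Message ID (1 B), DATA (10 B), CRC (1 B).
PLOAM_LENGTH = 13


def crc8(payload: bytes, poly: int = 0x07) -> int:
    """Bitwise CRC-8, zero initial value, no reflection (polynomial is an assumption)."""
    crc = 0
    for byte in payload:
        crc ^= byte
        for _ in range(8):
            if crc & 0x80:
                crc = ((crc << 1) ^ poly) & 0xFF
            else:
                crc = (crc << 1) & 0xFF
    return crc


@dataclass(frozen=True)
class PloamMessage:
    onu_id: int      # receiving ONU
    message_id: int  # message type
    data: bytes      # message-specific payload
    crc: int         # checksum over the preceding 12 bytes

    @property
    def crc_ok(self) -> bool:
        """True when the stored CRC matches the one computed over the other fields."""
        covered = bytes([self.onu_id, self.message_id]) + self.data
        return crc8(covered) == self.crc


def parse_ploam(raw: bytes) -> PloamMessage:
    """Split one raw PLOAMd message into its four fields."""
    if len(raw) != PLOAM_LENGTH:
        raise ValueError(f"expected {PLOAM_LENGTH} bytes, got {len(raw)}")
    return PloamMessage(raw[0], raw[1], raw[2:12], raw[12])


# Usage: build a synthetic message (values are illustrative only) and parse it.
body = bytes([0xFF, 11]) + bytes(10)
message = parse_ploam(body + bytes([crc8(body)]))
print(message.message_id, message.crc_ok)
```

Keeping the CRC check as a property rather than raising on a mismatch lets corrupted messages stay in the dataset, which matters when invalid samples are needed for training.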

3.2. Raw Data Preparation

3.3. Data Shape

3.4. Invalid Data Generation

4. PLOAMd Analysis Using Various Recurrent Neural Networks and Results Discussion

4.1. Model Design

    from tensorflow.keras import Sequential
    from tensorflow.keras.layers import SimpleRNN, GRU, LSTM, Dense

    # Build one model per recurrent layer type; only the recurrent cell differs.
    rnn_types = [SimpleRNN, GRU, LSTM]
    rnn_models = []
    for rnn in rnn_types:
        rnn_models.append(Sequential([
            # `data` holds the prepared samples; data[0].shape is the shape of one sample.
            rnn(32, input_shape=data[0].shape,
                return_sequences=True, activation='tanh'),
            rnn(32, activation='tanh'),
            Dense(64, activation='relu'),
            Dense(1, activation='sigmoid'),
        ]))

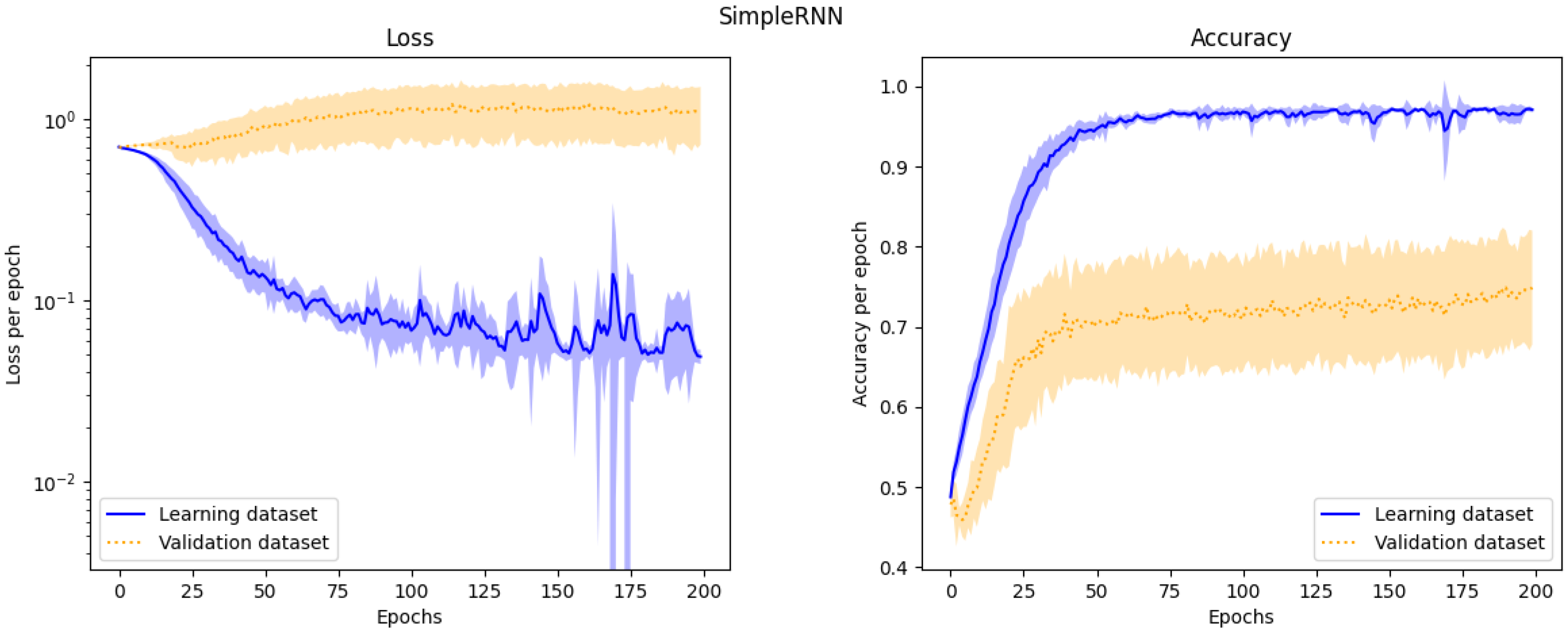
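The trainable parameter counts reported for the three models (5729 for SimpleRNN, 16,385 for LSTM, and 13,025 for GRU) can be reproduced by hand from the layer sizes in the listing above. The sketch below is an illustration under two assumptions that the text does not state explicitly: each time step has 13 input features (a value inferred from the reported counts, and matching a 13-byte message), and the GRU uses the TensorFlow 2 Keras default `reset_after=True`, which keeps two bias vectors per gate. The helper names are hypothetical.

```python
def dense_params(inputs: int, units: int) -> int:
    """Weights plus biases of a fully connected layer."""
    return inputs * units + units


def recurrent_params(kind: str, inputs: int, units: int) -> int:
    """Trainable parameters of one recurrent layer."""
    # Number of weight sets: 1 for SimpleRNN, 4 LSTM gates, 3 GRU gates.
    gates = {"SimpleRNN": 1, "LSTM": 4, "GRU": 3}[kind]
    # Assumption: GRU with reset_after=True has separate input and recurrent biases.
    biases = 2 * units if kind == "GRU" else units
    return gates * (inputs * units + units * units + biases)


def model_params(kind: str, features: int, units: int = 32) -> int:
    """Two stacked recurrent layers followed by Dense(64) and Dense(1)."""
    return (
        recurrent_params(kind, features, units)
        + recurrent_params(kind, units, units)
        + dense_params(units, 64)
        + dense_params(64, 1)
    )


for kind in ("SimpleRNN", "LSTM", "GRU"):
    print(kind, model_params(kind, features=13))
```

Under these assumptions the LSTM is roughly three times larger than the SimpleRNN, and the GRU sits between the two, because the gate count multiplies the recurrent weight matrices while the dense head is shared.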

4.2. Learning Process

4.3. Evaluation of Models

5. Conclusions

Future Work Opportunities

- Convolutional network: One possible enhancement is to replace the recurrent layers with a sequence of convolutional and max-pooling layers, which could reduce the complexity of the final neural network and speed up training and evaluation.

- Unsupervised learning techniques: Another possible continuation of this work is to train a machine learning model using unsupervised learning techniques. The limitation of the supervised algorithms used in this paper is that the model needs samples of non-standard or corrupted communication, and such samples may not be available when the model is trained. The outcome would be an anomaly detector capable of marking suspicious or non-standard communication sequences.

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| ACO | Ant colony optimization |
| AI | Artificial intelligence |
| API | Application programming interface |
| ATM | Asynchronous transfer mode |
| BiRNN | Bidirectional recurrent neural network |
| CATV | Cable television |
| CRC | Cyclic redundancy check |
| DWDM | Dense wavelength division multiplexing |
| EONs | Elastic optical networks |
| EPON | Ethernet passive optical network |
| FPGA | Field-programmable gate array |
| GPON | Gigabit-capable passive optical network |
| GRU | Gated recurrent unit |
| GTC | Gigabit-capable passive optical network transmission container |
| IEEE | Institute of Electrical and Electronics Engineers |
| IM-DD PON | Intensity modulation and direct detection passive optical network |
| ITU | International Telecommunication Union |
| ITU-T | ITU Telecommunication Standardization Sector |
| LSTM | Long short-term memory |
| ML | Machine learning |
| NG-PON2 | Next-generation passive optical network stage 2 |
| NN | Neural network |
| PCBd | Physical control block downstream |
| PLOAMd | Physical layer operations, administration and maintenance downstream |
| PON | Passive optical network |
| RNN | Recurrent neural network |
| SSMF | Standard single-mode fiber |
| OLT | Optical line termination |
| ONT | Optical network termination |
| ONU | Optical network unit |
| TWDM | Time and wavelength division multiplexing |


| Message ID | 1 | 3 | 4 | 5 | 6 | 8 | 10 | 11 | 14 | 18 | 20 | 21 | 24 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Count | 82 | 88 | 114 | 94 | 54 | 286 | 86 | 545,934 | 93 | 103 | 63 | 54 | 1 |
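The message counts in the table above are heavily skewed toward a single Message ID. The short calculation below makes the imbalance explicit. It only re-enters the counts from the table; the variable names are illustrative, and nothing here is part of the authors' pipeline.

```python
# Message ID -> number of captured messages, copied from the table above.
counts = {
    1: 82, 3: 88, 4: 114, 5: 94, 6: 54, 8: 286, 10: 86,
    11: 545_934, 14: 93, 18: 103, 20: 63, 21: 54, 24: 1,
}

total = sum(counts.values())
dominant_id = max(counts, key=counts.get)
dominant_share = counts[dominant_id] / total
rarest_id = min(counts, key=counts.get)

print(f"total messages: {total}")
print(f"Message ID {dominant_id} share: {dominant_share:.4%}")
print(f"all other IDs combined: {total - counts[dominant_id]}")
print(f"rarest Message ID: {rarest_id} ({counts[rarest_id]} sample)")
```

With one type accounting for almost all traffic, plain accuracy says little about how a classifier handles the rare types, which is one reason to report recall, precision, and AUC alongside it.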

| Phase | Metric | SimpleRNN | LSTM | GRU |
|---|---|---|---|---|
| Learning | loss | | | |
| | accuracy | | | |
| | recall | | | |
| | precision | | | |
| | auc | | | |
| Validation | loss | | | |
| | accuracy | | | |
| | recall | | | |
| | precision | | | |
| | auc | | | |
| Trainable parameters | | 5729 | 16,385 | 13,025 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Tomasov, A.; Holik, M.; Oujezsky, V.; Horvath, T.; Munster, P. GPON PLOAMd Message Analysis Using Supervised Neural Networks. Appl. Sci. 2020, 10, 8139. https://doi.org/10.3390/app10228139
