Factors Influencing Cloud Computing Adoption in Higher Education Institutions of Least Developed Countries: Evidence from Republic of Yemen

Abstract

1. Introduction

2. Related Work

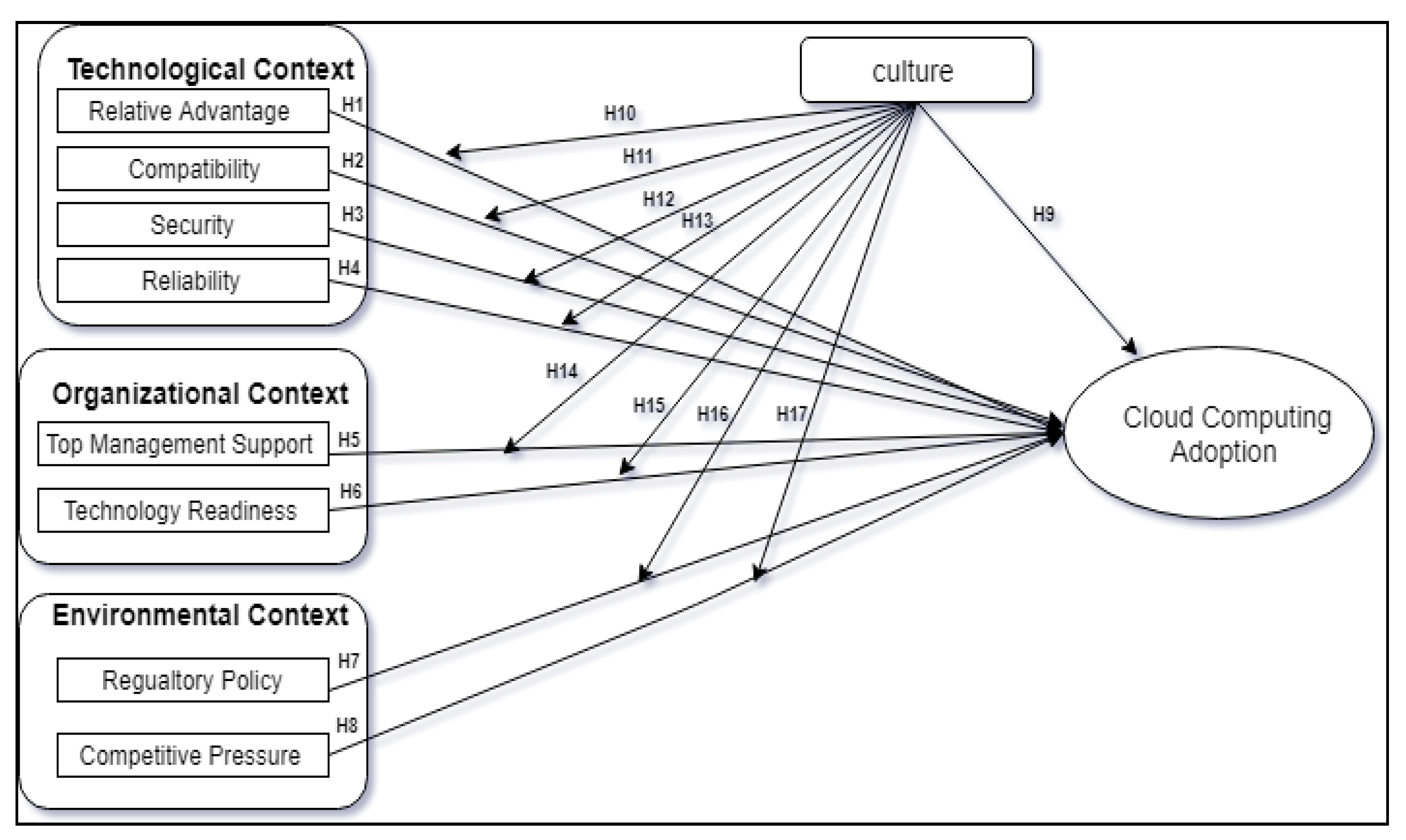

3. The Research Model

4. Methodology

4.1. Instrument Design

4.1.1. Content Validity

4.1.2. Pre-Testing the Questionnaire

4.1.3. Face Validity

4.2. Data Collection Procedure

5. Empirical Analysis and Results

5.1. The Measurement Model

5.2. Assessment of Measurement Model

5.2.1. Individual Item Reliability

5.2.2. Internal Consistency Reliability

5.2.3. Convergent Validity

5.2.4. Discriminant Validity

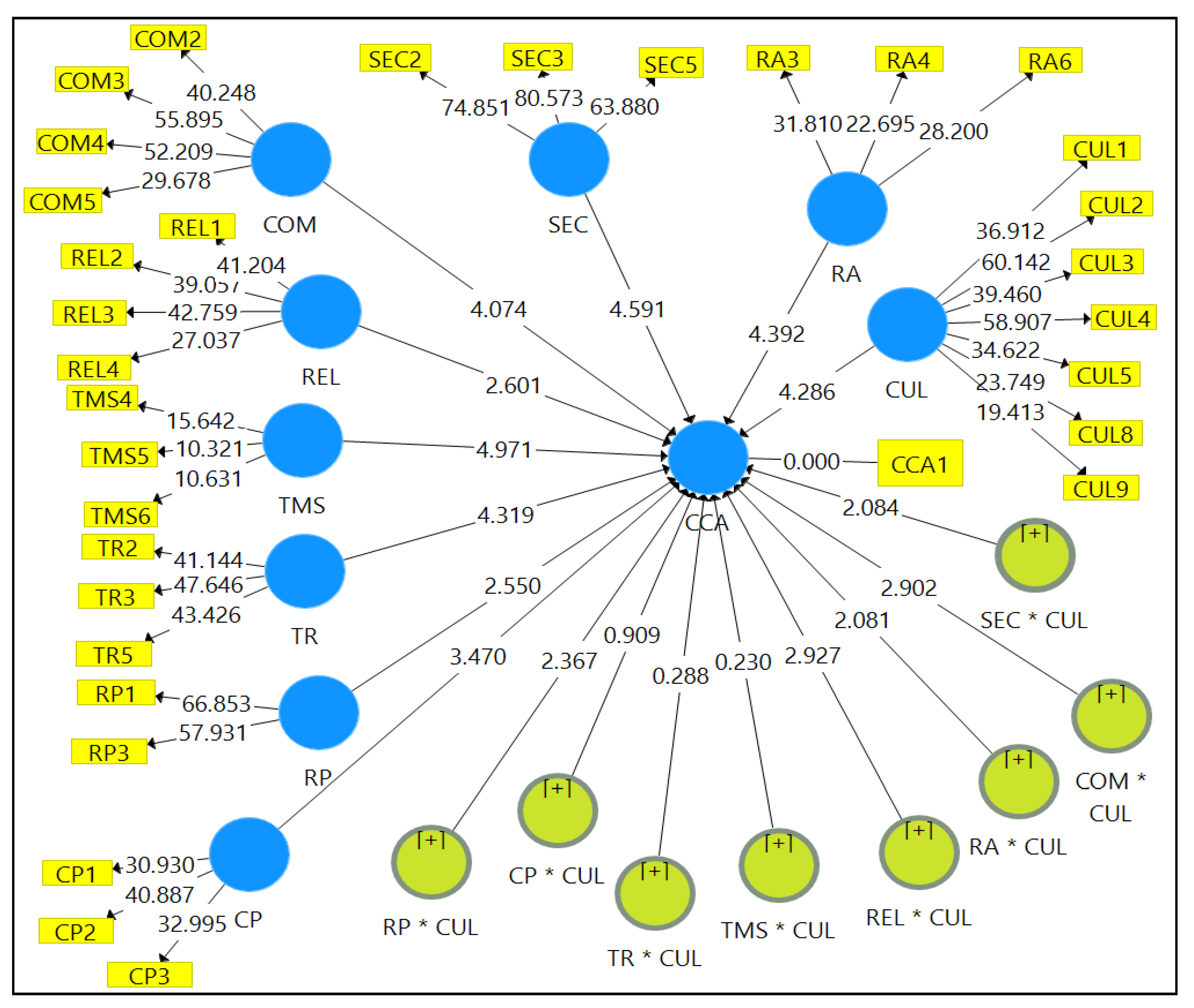

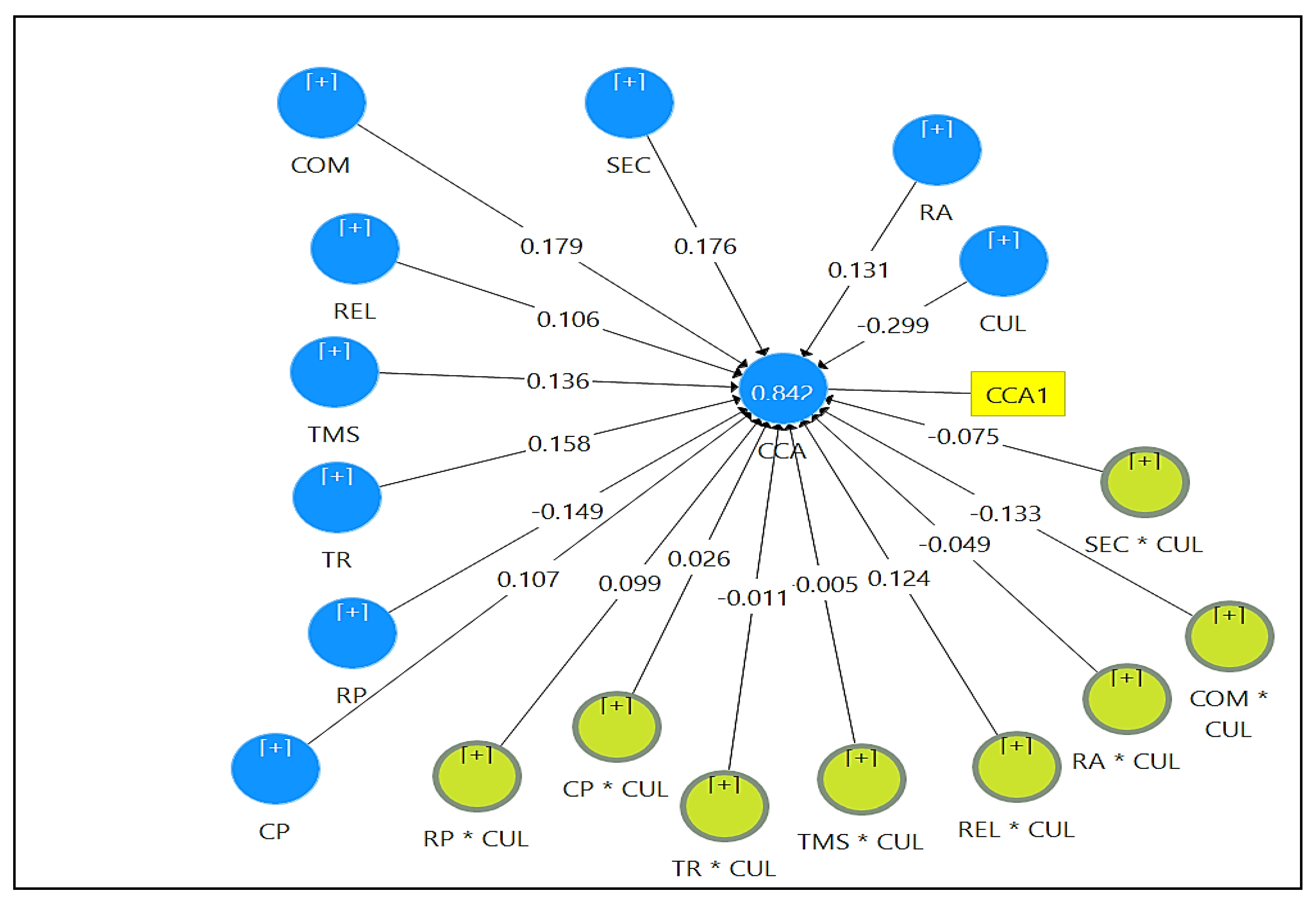

5.3. The Structural Model

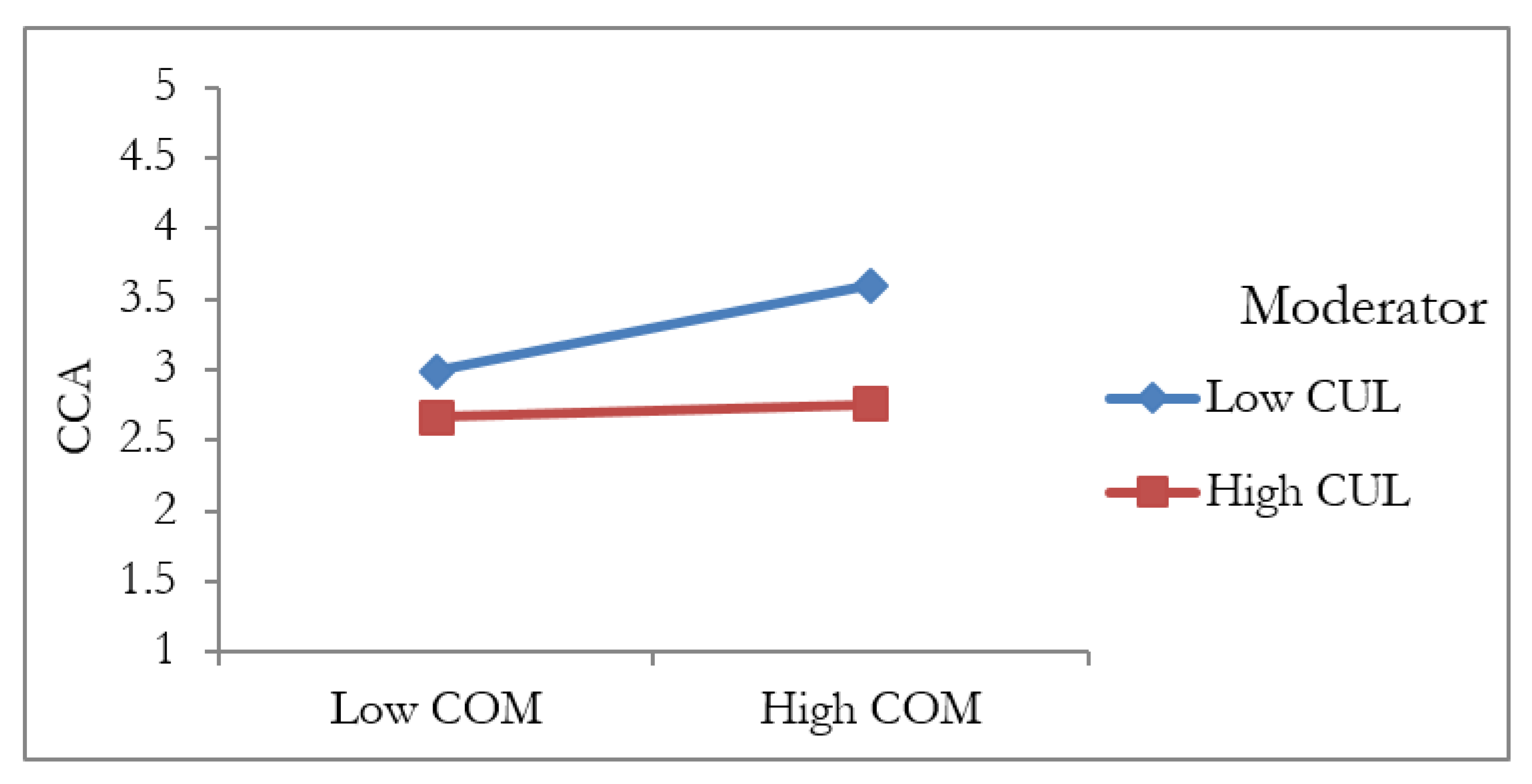

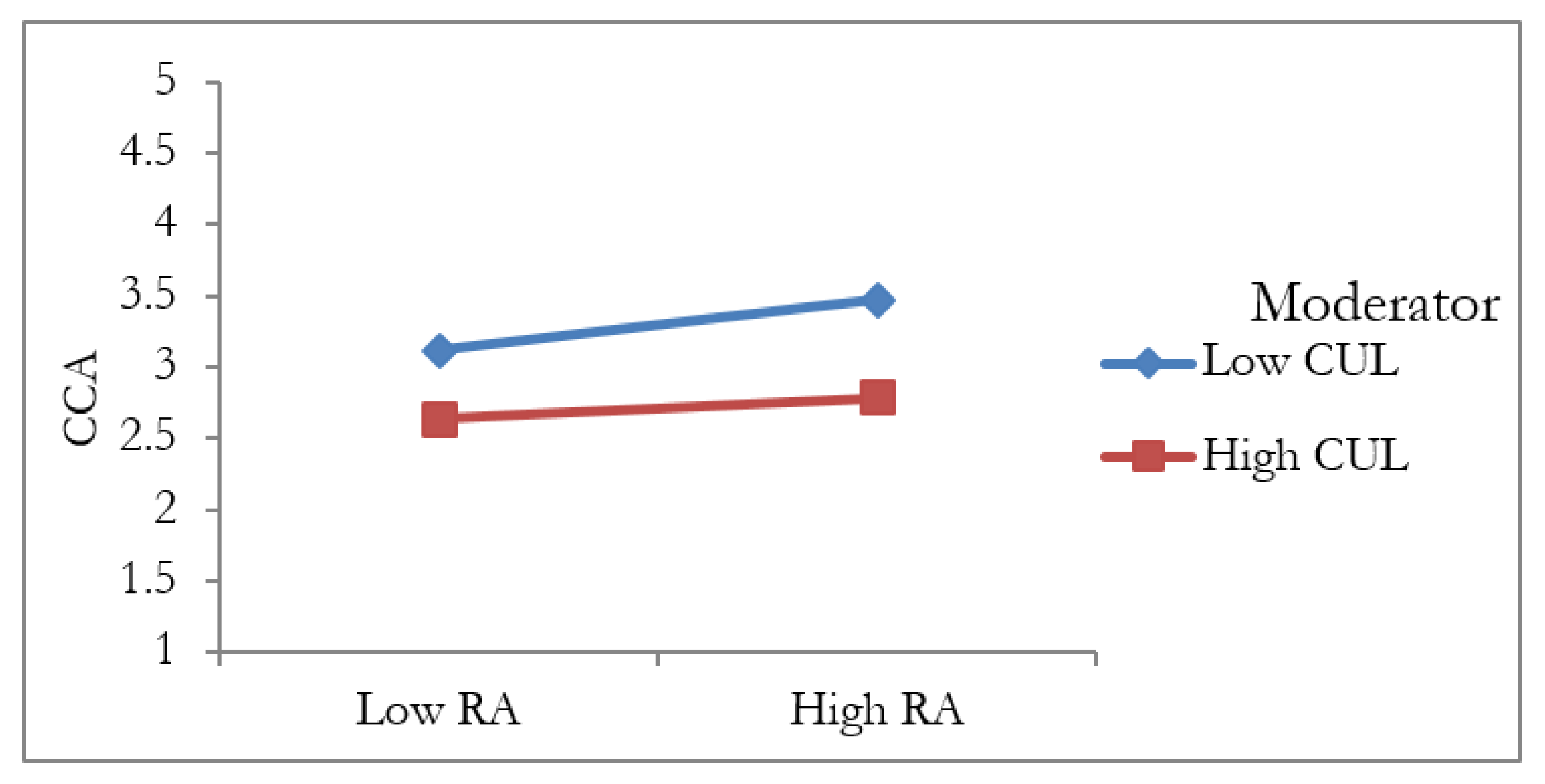

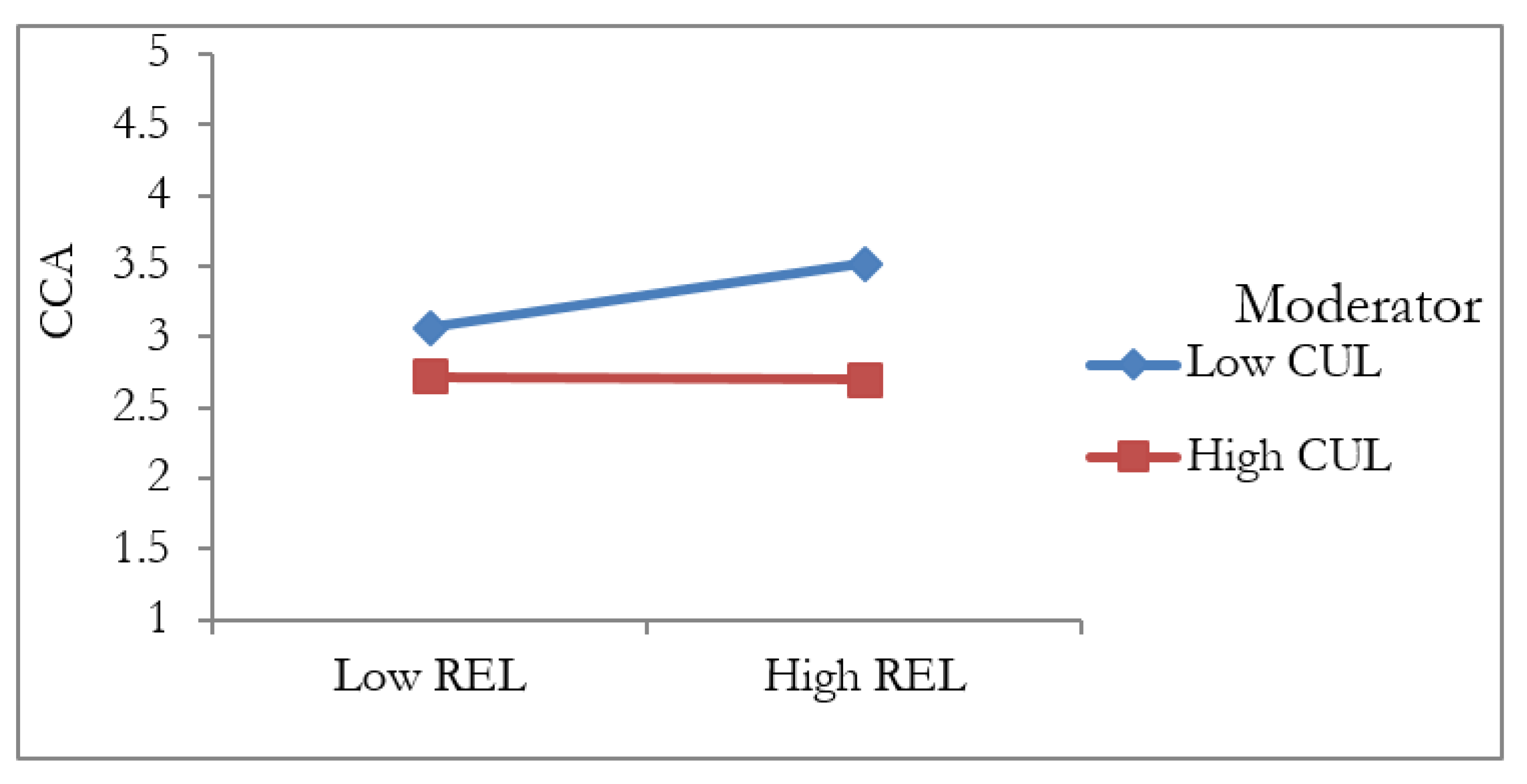

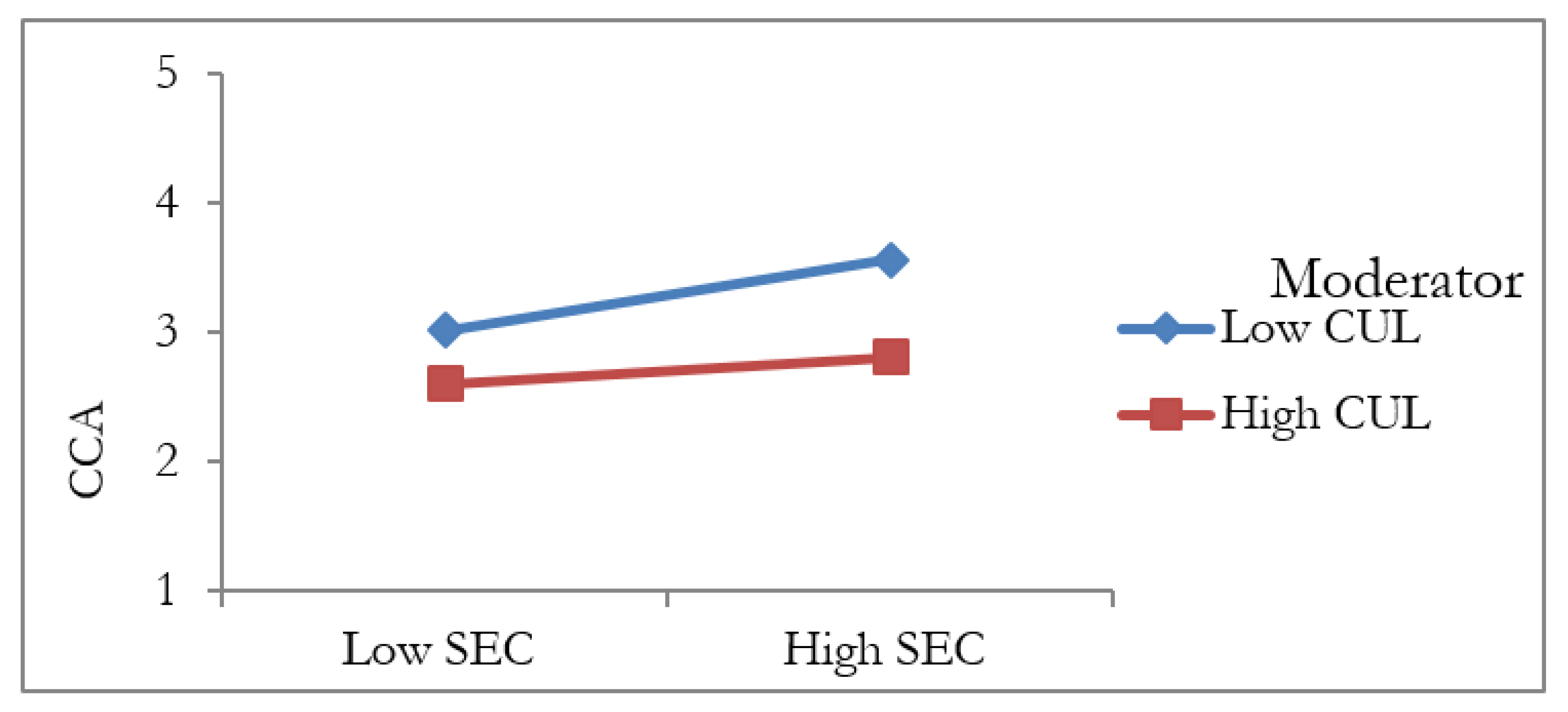

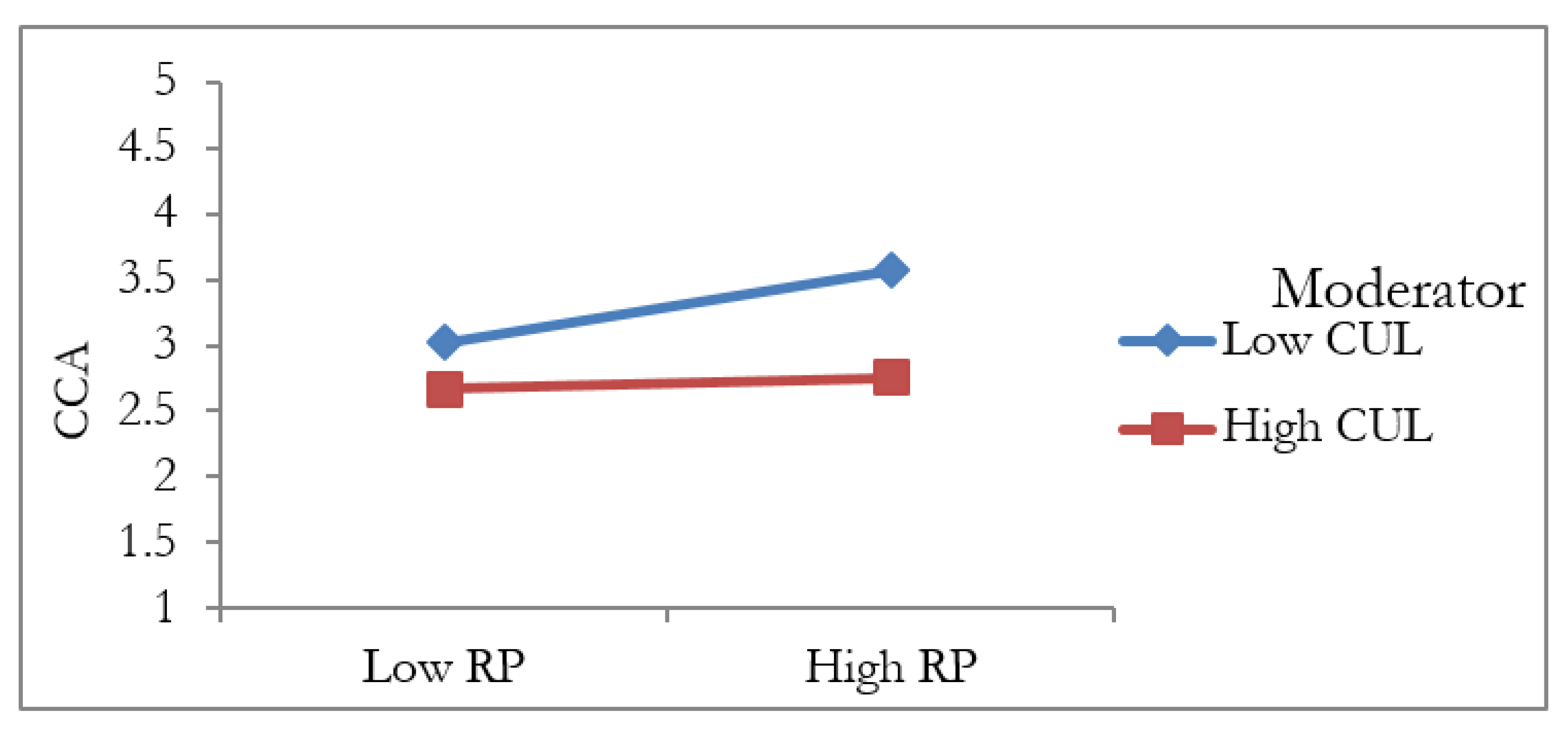

5.4. The Moderation Analysis

6. Discussion

7. Conclusions and Future Work

Author Contributions

Funding

Conflicts of Interest

Appendix A

| Constructs | No. of Items | Measurement Items | Source |

|---|---|---|---|

| Compatibility | 5 | 1. Cloud computing is suitable for our institution’s IT infrastructure. (COM1) 2. Using cloud computing is compatible with our institution’s culture. (COM2) 3. CCA is compatible with our preferred work practice. (COM3) 4. The use of cloud computing technologies fits well with the way we operate. (COM4) 5. Cloud computing is compatible with our institution’s current hardware and software infrastructure. (COM5) | [30] |

| Relative Advantage | 6 | 1. Cloud computing can curtail the time for Information Systems. (RA1) 2. Using cloud computing permits us to perform specific tasks more quickly. (RA2) 3. Cloud computing can lessen IT expenses. (RA3) 4. The use of cloud computing enables people to seize new educational and research opportunities. (RA4) 5. Cloud computing allows us to manage business operations in an efficient way. (RA5) 6. The use of cloud computing services improves the quality of operations. (RA6) | [30,74] |

| Security | 5 | 1. Our institution is concerned about data security in cloud computing. (SEC1) 2. Our institution is concerned about privacy in cloud computing. (SEC2) 3. Our institution is concerned that data cannot be manipulated by online criminals or hackers. (SEC3) 4. Our institution is concerned with no official data is used for commercial benefit by cloud providers. (SEC4) 5. Our institution is concerned that cloud computing data is not kept private. (SEC5) | [14] |

| Reliability | 5 | 1. Cloud computing is an excellent ‘backup’ for my institution’s data against a hard-disk crash. (REL1) 2. Cloud computing acts as excellent disaster recovery (in case of an unforeseen event) with uninterrupted access. (REL2) 3. Cloud computing offers a reliable storage solution for my institution’s data instead of a thumb drive (USB) or portable hard disk. (REL3) 4. Cloud computing offers high uptime and availability of the cloud services round the clock. (REL4) 5. The cloud computing service provider can recover our institution’s data safely even if it gets corrupted by spam or a malware attack. (REL5) | [12] |

| Top Management Support | 6 | 1. The institution’s top management advocates the implementation of cloud computing. (TMS1) 2. The institution’s top management demonstrates strong leadership and gets involved in the process with respect to cloud computing. (TMS2) 3. The institution’s management is willing to take risks (financial and organizational) involved in CCA. (TMS3) 4. The institution’s top management is aware of the benefits of cloud computing. (TMS4) 5. The institution’s top management is likely to consider the adoption of cloud computing as strategically important. (TMS5) 6. The institution’s top management provides resources for adopting cloud computing. (TMS6) | [30,75] |

| Technology Readiness | 5 | 1. Our institution knows how cloud computing can be used to support our operations. (TR1) 2. Our institution has the necessary technical, managerial and other skills to implement cloud computing. (TR2) 3. Our institutional values and norms support the adoption of cloud computing in our operations. (TR3) 4. Our institution has sufficient technological resources to implement cloud computing, including high bandwidth connectivity to the Internet. (TR4) 5. Our institution hires highly specialized or knowledgeable personnel for cloud computing. (TR5) | [30] |

| Regulatory Policy | 6 | 1. Our country’s laws and regulations facilitate the use of cloud computing. (RP1) 2. Our country’s laws and regulations today are sufficient to protect the use of cloud computing. (RP2) 3. Our government is providing us with incentives to adopt cloud computing technologies. (RP3) 4. Our government is active in setting up the facilities to enable cloud computing. (RP4) 5. Our institution is under pressure from some government agencies to adopt cloud computing technology. (RP5) 6. Current laws and regulations do not allow us to utilize cloud computing resources and services hosted outside our country. (RP6) | [12,74,75,76,77,78] |

| Competitive Pressure | 3 | 1. Our institution thinks that cloud computing has an influence on competition. (CP1) 2. Our institution is under pressure from competitors to adopt cloud computing. (CP2) 3. Some of our competitors have already started using cloud computing. (CP3) | [12] |

| Culture | 10 | 1. The identity of my tribe is an obstacle to an atmosphere of trust toward cloud adoption in my institution. (CUL1) 2. The identity of my tribe could be an obstacle to thoughts and ideas in my institution. (CUL2) 3. My tribe identity prevents me from making suggestions or decisions in my institution. (CUL3) 4. The identity of my tribe could be an obstacle to sharing data and to personal privacy in my institution. (CUL4) 5. The tribe culture influences the leaders’ decision in my institution to adopt cloud computing. (CUL5) 6. Cloud computing adoption is an important need to group rather than individual needs. (CUL6) 7. Developing and adopting new technologies requires more freedom and fewer tribal restrictions. (CUL7) 8. Because of tribal identity, it is not easy to adopt cloud computing in HEI. (CUL8) 9. Tribalism supports teamwork and will encourage working within a cloud computing environment. (CUL9) 10. Influential people in the tribe should establish campaigns in the society to spread awareness of CCA and encourage its use. (CUL10) | Abdullah Hussein Alghushami (paper author) |

| Cloud Computing Adoption | 1 | CCA1: What stage of cloud computing adoption is your institution currently involved in? | [12,30] |

References

- Monroy, C.R.; Arias, C.A.; Guerrero, Y.N. The New Cloud Computing Paradigm: The Way to IT Seen as a Utility. Lat. Am. Caribb. J. Eng. Educ. 2013, 6, 24–31. [Google Scholar]

- Sabi, H.M.; Uzoka, F.-M.E.; Langmia, K.; Njeh, F.N. Conceptualizing a model for adoption of cloud computing in education. Int. J. Inf. Manag. 2016, 36, 183–191. [Google Scholar] [CrossRef]

- Anderson, J.; Rainie, L. The Future of Cloud Computing. 2010. Available online: http://pewinternet.org/Reports/2010/The-future-of-Cloud-computing.aspx (accessed on 11 August 2018).

- Cearley, D.W. The Top 10 Strategic Technology Trends for 2014. 2014. Available online: http://www.gartner.com/doc/2667526 (accessed on 29 January 2017).

- Ercan, T. Effective use of cloud computing in educational institutions. Procedia Soc. Behav. Sci. 2010, 2, 938–942. [Google Scholar] [CrossRef]

- Alabbadi, M.M. Cloud computing for education and learning: Education and learning as a service (ELaaS). In Proceedings of the 2011 14th International Conference on Interactive, Collaborative Learning, Piest’any, Slovakia, 21–23 September 2011; pp. 589–594. [Google Scholar]

- Zissis, D.; Lekkas, D. Addressing cloud computing security issues. Futur. Gener. Comput. Syst. 2012, 28, 583–592. [Google Scholar] [CrossRef]

- Chun, S.-H.; Choi, B.-S. Service models and pricing schemes for cloud computing. Clust. Comput. 2014, 17, 529–535. [Google Scholar] [CrossRef]

- Sakr, S. Cloud-hosted databases: Technologies, challenges and opportunities. Clust. Comput. 2013, 17, 487–502. [Google Scholar] [CrossRef]

- Greengard, S. Cloud computing and developing nations. Commun. ACM 2010, 53, 18–20. [Google Scholar] [CrossRef]

- Hailu, A. Factors Influencing Cloud-Computing Technology Adoption in Developing Countries. Ph.D. Thesis, Capella University, Minneapolis, MN, USA, 2012. [Google Scholar]

- Tashkandi, A.; Al-Jabri, I. Cloud Computing Adoption by Higher Education Institutions in Saudi Arabia: Analysis Based on TOE. In Proceedings of the 2015 International Conference on Cloud Computing (ICCC), Riyadh, Saudi Arabia, 26–29 April 2015; pp. 1–8. [Google Scholar]

- Abdulrab, S. The Impact of Culture on Information Technology Adoption in Universities; Robert Morris University: Pittsburgh, PA, USA, 2011. [Google Scholar]

- Law, M. Cloud Computing Basics; Hillcrest Media Group, Inc.: Minneapolis, MN, USA, 2011. [Google Scholar]

- United Nations. World Economic Situation and Prospects 2015. 2015. Available online: http://www.un.org/en/development/desa/policy/wesp/wesp_current/2015wesp_country_classification.pdf (accessed on 20 March 2016).

- UNCTAD. The Least Developed Countries Report, Transforming Rural Economies; IEEE: Piscataway, NJ, USA, 2015. [Google Scholar]

- Benlian, A.; Hess, T. Opportunities and risks of software-as-a-service: Findings from a survey of IT executives. Decis. Support Syst. 2011, 52, 232–246. [Google Scholar] [CrossRef]

- Lin, A.; Chen, N.-C. Cloud computing as an innovation: Perception, attitude, and adoption. Int. J. Inf. Manag. 2012, 32, 533–540. [Google Scholar] [CrossRef]

- Alshamaila, Y.; Papagiannidis, S.; Li, F. Cloud Computing Adoption by SMEs in the North East of England: A Multi-perspective Framework. J. Enterp. Inf. Manag. 2013, 26, 250–275. [Google Scholar] [CrossRef]

- Herbig, P.; Dunphy, S. Culture and Innovation. Cross Cult. Manag. 1998, 5, 13–25. [Google Scholar] [CrossRef]

- Brender, N.; Markov, I. Risk perception and risk management in cloud computing: Results from a case study of Swiss companies. Int. J. Inf. Manag. 2013, 33, 726–733. [Google Scholar] [CrossRef]

- Srite, M.; Karahanna, E. The Role of Espoused National Cultural Values in Technology Acceptance. MIS Q. 2006, 30, 679–704. [Google Scholar] [CrossRef]

- Motaher, M. National Information Center of the Republic of Yemen. Int. J. Soc. Econ. 2015, 31, 94–110. [Google Scholar]

- Baabbad, M. The Influence of Tribalism on Perceived Auditor Independence: The Yemeni Evidence. Int. Bus. Manag. 2015, 12, 17–25. [Google Scholar]

- Manea, E.M. Yemen, the Tribe and the State. In International Colloquium on Islam and Social Change; Springer: Berlin/Heidelberg, Germany, 2006. [Google Scholar]

- Peterson, J.E. Tribes and Politics in Yemen. In Arabian Peninsula Background Note; IEEE: Piscataway, NJ, USA, 2008. [Google Scholar]

- Corstange, D. Tribes and the Rule of Law in Yemen. In Annual Conference of the Middle East Studies Association; IEEE: Piscataway, NJ, USA, 2008. [Google Scholar]

- Mdabesh, A. The Equation of the Tribe in Yemen. Middle East Newsp. 2010, 34, 11671. [Google Scholar]

- Phillips, S. Yemen: On the Brink, What Comes Next in Yemen? Al-Qaeda, the Tribes, and State-Building; Pearson Press: Washington, DC, USA, 2010. [Google Scholar]

- Oliveira, T.; Thomas, M.; Espadanal, M. Assessing the determinants of cloud computing adoption: An analysis of the manufacturing and services sectors. Inf. Manag. 2014, 51, 497–510. [Google Scholar] [CrossRef]

- Kendall, J.D.; Tung, L.L.; Chua, K.H.; Ng, C.H.D.; Tan, S.M. Receptivity of Singapore’s SMEs to electronic commerce adoption. J. Strat. Inf. Syst. 2001, 10, 223–242. [Google Scholar] [CrossRef]

- Kuan, K.K.Y.; Chau, P.Y.K. A perception-based model for EDI adoption in small businesses using a technology–organization–environment framework. Inf. Manag. 2001, 38, 507–521. [Google Scholar] [CrossRef]

- OECD. The E-Government Imperative: Main Findings; OECD: London, UK, 2003. [Google Scholar]

- Abdalla, S. An E-Government Adoption Framework for Developing Countries: A Case Study from Sudan. Ph.D. Thesis, Cranfield University, Cranfield, UK, 2012. [Google Scholar]

- Alsanea, M. Factors Affecting the Adoption of Cloud Computing in Saudi Arabia’s Government Sector. Master’s Thesis, University of London, London, UK, 2015. [Google Scholar]

- Borgman, H.P.; Bahli, B.; Heier, H.; Schewski, F. Cloudrise: Exploring Cloud Computing Adoption and Governance with the TOE Framework. In Proceedings of the 2013 46th Hawaii International Conference on System Sciences, Wailea, HI, USA, 7–10 January 2013; pp. 4425–4435. [Google Scholar]

- Chang, V.; Walters, R.J.; Wills, G. The development that leads to the Cloud Computing Business Framework. Int. J. Inf. Manag. 2013, 33, 524–538. [Google Scholar] [CrossRef]

- DePietro, R.; Wiarda, E.; Fleischer, M. The Context for Change: Organization, Technology and Environment. In The Process of Technological Innovation; Tornatzky, L.G., Fleischer, M., Eds.; Lexington Books: Lexington, KY, USA, 1990; pp. 151–175. [Google Scholar]

- Sabherwal, R.; Jeyaraj, A.; Chowa, C. Information System Success: Individual and Organizational Determinants. Manag. Sci. 2006, 52, 1849–1864. [Google Scholar] [CrossRef]

- Yoon, T. An Empirical Investigation of Factors Affecting Organizational Adoption of Virtual Worlds. Ph.D. Thesis, Florida State University, Tallahassee, FL, USA, 2009. [Google Scholar]

- Low, C.; Chen, Y.; Wu, M. Understanding the determinants of cloud computing adoption. Ind. Manag. Data Syst. 2011, 111, 1006–1023. [Google Scholar] [CrossRef]

- Klug, W. The Determinants of Cloud Computing Adoption by Colleges and Universities; ProQuest: Ann Arbor, MI, USA; Dissertations Publishing: London UK, 2014. [Google Scholar]

- Hsu, P.-F.; Ray, S.; Li-Hsieh, Y.-Y. Examining cloud computing adoption intention, pricing mechanism, and deployment model. Int. J. Inf. Manag. 2014, 34, 474–488. [Google Scholar] [CrossRef]

- Davison, R.M.; Martinsons, M.G. Guest editorial cultural issues and it management: Past and present. IEEE Trans. Eng. Manag. 2003, 50, 3–7. [Google Scholar] [CrossRef]

- Zhao, F.; Scheruhn, H.-J.; Von Rosing, M. The Impact of Culture Differences on Cloud Computing Adoption. Lect. Notes Comput. Sci. 2014, 22, 776–785. [Google Scholar] [CrossRef]

- Venkatesh, V.; Thong, J.Y.L.; Xu, X. Consumer Acceptance and Use of Information Technology: Extending the Unified Theory of Acceptance and Use of Technology. MIS Q. 2012, 36, 157–178. [Google Scholar] [CrossRef]

- Straub, D.; Loch, K.; Hill, C. Transfer of Information Technology to the Arab World: A Test of Cultural Influence Modeling. Adv. Top. Glob. Inf. Manag. 2003, 2, 141–172. [Google Scholar]

- Premkumar, G.; Ramamurthy, K. The Role of Interorganizational and Organizational Factors on the Decision Mode for Adoption of Interorganizational Systems. Decis. Sci. 1995, 26, 303–336. [Google Scholar] [CrossRef]

- Premkumar, G.; Roberts, M. Adoption of new information technologies in rural small businesses. Omega 1999, 27, 467–484. [Google Scholar] [CrossRef]

- Zhu, K.; Kraemer, K.L.; Xu, S.X. The Process of Innovation Assimilation by Firms in Different Countries: A Technology Diffusion Perspective on E-Business. Manag. Sci. 2006, 52, 1557–1576. [Google Scholar] [CrossRef]

- Luo, X.; Gurung, A.; Shim, J.P. Understanding the Determinants of User Acceptance of Enterprise Instant Messaging: An Empirical Study. J. Organ. Comput. Electron. Commer. 2010, 20, 155–181. [Google Scholar] [CrossRef]

- Alam, S.S.; Ali, Y.; Jani, M.F.M. An Empirical Study of Factors Affecting Electronic Commerce Adoption Among SMEs in Malaysia. J. Bus. Econ. Manag. 2011, 12, 375–399. [Google Scholar] [CrossRef]

- Ifinedo, P. An Empirical Analysis of Factors Influencing Internet/E-Business Technologies Adoption by SMEs in Canada. Int. J. Inf. Technol. Decis. Mak. 2011, 10, 731–766. [Google Scholar] [CrossRef]

- Sekaran, U. Research Methods for Business: A Skill Building Approach, 4th ed.; Wiley: New York, NY, USA, 2003. [Google Scholar]

- Peterson, R.A.; Kim, Y. On the relationship between coefficient alpha and composite reliability. J. Appl. Psychol. 2013, 98, 194–198. [Google Scholar] [CrossRef] [PubMed]

- Hair, J.F.; Ringle, C.M.; Sarstedt, M. PLS-SEM: Indeed a Silver Bullet. J. Mark. Theory Pract. 2011, 19, 139–152. [Google Scholar] [CrossRef]

- Hair, J.F.; Hult, G.T.M.; Ringle, C.M.; Sarstedt, M. A Primer on Partial Least Squares Structural Equation Modelling (PLS-SEM); SAGE Publications, Inc.: Washington, DC, USA, 2014. [Google Scholar]

- Lee, Y.-C.; Lee, S.-K. Capabilities, processes, and performance of knowledge management: A structural approach. Hum. Factors Ergon. Manuf. 2006, 17, 21–41. [Google Scholar] [CrossRef]

- Bagozzi, R.P.; Yi, Y. On the evaluation of structural equation models. J. Acad. Mark. Sci. 1988, 16, 74–94. [Google Scholar] [CrossRef]

- Coltman, T.; DeVinney, T.M.; Midgley, D.F.; Venaik, S. Formative versus reflective measurement models: Two applications of formative measurement. J. Bus. Res. 2008, 61, 1250–1262. [Google Scholar] [CrossRef]

- Chin, W.W. The Partial Least Squares Approach to Structural Equation Modeling. Mod. Methods Bus. Res. 1998, 295, 295–336. [Google Scholar] [CrossRef]

- Hair, J.F.; Black, W.C.; Babin, B.J.; Anderson, R.E. Multivariate Data Analysis, 7th ed.; Pearson Education: London, UK; Pearson Prentice Hall: Upper Saddle River, NJ, USA, 2010. [Google Scholar]

- Chin, W.W. The Partial Least Squares Approach for Structural Equation Modeling; Marcoulides, G.A., Ed.; Lawrence Erlbaum Associates: London, UK, 1998; pp. 295–336. [Google Scholar]

- Duarte, P.; Raposo, M.L. A PLS Model to Study Brand Preference: An Application to the Mobile Phone Market; Springer: Berlin/Heidelberg, Germany, 2010. [Google Scholar]

- Fornell, C.; Larcker, D.F. Evaluating Structural Equation Models with Unobservable Variables and Measurement Error. J. Mark. Res. 1981, 18, 39. [Google Scholar] [CrossRef]

- Henseler, J.; Sarstedt, M. Goodness-of-fit indices for partial least squares path modeling. Comput. Stat. 2013, 28, 565–580. [Google Scholar] [CrossRef]

- Hair, J.F.; Sarstedt, M.; Hopkins, L.; Kuppelwieser, V.G. Partial least squares structural equation modeling (PLS-SEM). Eur. Bus. Rev. 2014, 26, 106–121. [Google Scholar] [CrossRef]

- Falk, R.; Miller, N. AXL Press, Primer for Soft Modeling; Brunel University: London, UK, 1992. [Google Scholar]

- Cohen, J. Statistical Power Analysis for the Behavioral Sciences, 2nd ed.; Lawrence Erlbaum Associates Publishers: New York, NY, USA, 1988. [Google Scholar]

- Hair, J.F.; Hult, G.T.M.; Ringle, C.M.; Sarstedt, M. A Primer on Partial Least Squares Structural Equation Modeling (PLS-SEM), 2nd ed.; SAGE Publications, Inc.: Washington, DC, USA, 2017. [Google Scholar]

- Altameem, T.A. The Critical Factors of E-Government Adoption: An Empirical Study in the Saudi Arabia Public Sectors; Brunel University: London, UK, 2007. [Google Scholar]

- Alhujran, O. Determinants of E-Government Services Adoption in Developing Countries: A Field Survey and a Case Study. Ph.D. Thesis, University of Wollongong, Dubai, UAE, 2009. [Google Scholar]

- Seng, W.M.; Jackson, S.; Philip, G. Cultural issues in developing E-Government in Malaysia. Behav. Inf. Technol. 2010, 29, 423–432. [Google Scholar] [CrossRef]

- Alghushami, A.; Zakaria, N.H.; Aji, Z.M. The determinants impacting the adoption of cloud computing in Yemen institutions. AIP Conf. Proc. 2018, 2016, 020022. [Google Scholar]

- Alkawsi, G.A.; Ali, N.; Mustafa, A.S.; Baashar, Y.; Alhussian, H.; Alkahtani, A.; Tiong, S.K.; Ekanayake, J. A hybrid SEM-neural network method for identifying acceptance factors of the smart meters in Malaysia: Challenges perspective. Alexandria Eng. J. 2020. [Google Scholar] [CrossRef]

- Alkawsi, G.A.; Mahmood, A.K.; Baashar, Y.M. Factors influencing the adoption of cloud computing in SME: A systematic review. In Proceedings of the 2015 International Symposium on Mathematical Sciences and Computing Research (iSMSC 2015), Ipoh, Malaysia, 19–20 May 2015. [Google Scholar]

- Alkawsi, G.; Baashar, Y. An empirical study of the acceptance of IoT-based smart meter in Malaysia: The effect of electricity-saving knowledge and environmental awareness. IEEE Access 2020. [Google Scholar] [CrossRef]

- Alkawsi, G.A.; Ali, N.B.; Alghushami, A. Toward understanding individuals’ acceptance of internet of things-based services: Developing an instrument to measure the acceptance of smart meters. J. Theor. Appl. Inf. Technol. 2018, 96. [Google Scholar]

| Presentation | Name | Field of Specialization | Results/Outcomes |
|---|---|---|---|
| First Presentation | A1 | PhD in IT | |
| | B1 | PhD in IT | |
| | C1 | MSc in IS | |
| | D1 | PhD in Project Management | |
| | E1 | MSc in Project Management | |
| Second Presentation | A2 | PhD in English Literature | |
| | B2 | MSc in Information Management | |
| | C2 | PhD in IT | |
| | D2 | BSc in Computer Networking | |
| | E2 | PhD in Business IT | |

| Name | Country | Field of Specialization | Results/Outcomes |
|---|---|---|---|
| Expert A | Saudi Arabia (Aldammam) | PhD in IT | |
| Expert B | Yemen (Amran) | Professor in IT (Cloud computing) | |
| Expert C | Malaysia (Kuala Lumpur) | PhD in Computer Science | |
| Expert D | Qatar (Doha) | Professor in IT | |
| Expert E | Malaysia (Pahang) | PhD student in Information Systems | |
| Expert F | Yemen (Sana’a) | MSc in English Literature | |
| Expert G | Turkey (Istanbul) | PhD student in Urban Planning | |

| Constructs | Items | Loadings | Composite Reliability (CR) | Average Variance Extracted (AVE) |

|---|---|---|---|---|

| Culture | CUL1 CUL2 CUL3 CUL4 CUL5 CUL8 CUL9 | 0.831 0.865 0.834 0.876 0.818 0.727 0.730 | 0.932 | 0.662 |

| Competitive Pressure | CP1 CP2 CP3 | 0.819 0.859 0.815 | 0.870 | 0.691 |

| Top Management Support | TMS4 TMS5 TMS6 | 0.720 0.705 0.727 | 0.761 | 0.515 |

| Technology Readiness | TR2 TR3 TR5 | 0.848 0.852 0.846 | 0.885 | 0.720 |

| Compatibility | COM2 COM3 COM4 COM5 | 0.838 0.879 0.870 0.815 | 0.913 | 0.724 |

| Relative Advantage | RA3 RA4 RA6 | 0.808 0.789 0.793 | 0.839 | 0.635 |

| Reliability | REL1 REL2 REL3 REL4 | 0.855 0.841 0.852 0.803 | 0.904 | 0.703 |

| Security | SEC2 SEC3 SEC5 | 0.903 0.918 0.881 | 0.928 | 0.811 |

| Regulatory Policy | RP1 RP3 | 0.903 0.889 | 0.890 | 0.802 |

| Cloud Computing Adoption | CCA1 | 0.989 | 0.989 | 0.989 |
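The CR and AVE columns can be cross-checked from the item loadings. The sketch below assumes the conventional formulas for reflective constructs with standardized loadings: AVE as the mean squared loading, and CR as the squared sum of loadings divided by itself plus the summed error variances. The source does not state which formulas or software produced the table, and the function names are illustrative.

```python
from math import fsum

def ave(loadings):
    """Average variance extracted: mean of the squared standardized loadings."""
    return fsum(l * l for l in loadings) / len(loadings)

def composite_reliability(loadings):
    """Composite reliability: (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances)."""
    total = fsum(loadings)
    # Error variance of a standardized indicator is assumed to be 1 - loading^2.
    error = fsum(1.0 - l * l for l in loadings)
    return total * total / (total * total + error)

# Loadings copied from the table above.
culture = [0.831, 0.865, 0.834, 0.876, 0.818, 0.727, 0.730]
competitive_pressure = [0.819, 0.859, 0.815]

for name, lam in [("Culture", culture), ("Competitive Pressure", competitive_pressure)]:
    print(f"{name}: CR={composite_reliability(lam):.3f}, AVE={ave(lam):.3f}")
```

Under these assumptions, the two constructs shown reproduce the tabulated values to three decimals (Culture: CR 0.932, AVE 0.662; Competitive Pressure: CR 0.870, AVE 0.691).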

| Latent Variables | CCA | COM | CP | CUL | RA | REL | RP | SEC | TMS | TR |
|---|---|---|---|---|---|---|---|---|---|---|
| CCA | 1.000 | | | | | | | | | |
| COM | 0.785 | 0.851 | | | | | | | | |
| CP | 0.639 | 0.576 | 0.831 | | | | | | | |
| CUL | −0.805 | −0.684 | −0.538 | 0.813 | | | | | | |
| RA | 0.564 | 0.520 | 0.402 | −0.458 | 0.797 | | | | | |
| REL | 0.763 | 0.820 | 0.536 | −0.661 | 0.450 | 0.838 | | | | |
| RP | 0.734 | 0.629 | 0.510 | −0.929 | 0.432 | 0.612 | 0.896 | | | |
| SEC | 0.754 | 0.627 | 0.522 | −0.787 | 0.381 | 0.610 | 0.747 | 0.901 | | |
| TMS | 0.621 | 0.478 | 0.414 | −0.610 | 0.239 | 0.474 | 0.570 | 0.556 | 0.717 | |
| TR | 0.771 | 0.680 | 0.491 | −0.722 | 0.467 | 0.722 | 0.663 | 0.685 | 0.510 | 0.849 |
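The diagonal entries of this matrix appear to be the square roots of the AVE values (for example, the square root of 0.662 is about 0.813 for Culture), which is the layout used by the Fornell and Larcker criterion. The following is a minimal sketch of that check for a subset of three constructs, assuming the criterion requires each absolute inter-construct correlation to stay below the square root of the AVE of both constructs involved. The function name and the choice of subset are illustrative, not taken from the source.

```python
from math import sqrt

# AVE values and correlations copied from the tables above (three constructs only).
ave = {"CUL": 0.662, "RP": 0.802, "SEC": 0.811}
corr = {
    ("CUL", "RP"): -0.929,
    ("CUL", "SEC"): -0.787,
    ("RP", "SEC"): 0.747,
}

def fornell_larcker_violations(ave, corr):
    """Return pairs whose |correlation| is not below the smaller sqrt(AVE) of the pair."""
    flagged = []
    for (a, b), r in corr.items():
        limit = min(sqrt(ave[a]), sqrt(ave[b]))
        if abs(r) >= limit:
            flagged.append((a, b, r, round(limit, 3)))
    return flagged

violations = fornell_larcker_violations(ave, corr)
for a, b, r, limit in violations:
    print(f"{a}-{b}: |r| = {abs(r):.3f} is not below sqrt(AVE) = {limit}")
```

With the values as printed, only the CUL and RP pair is flagged (an absolute correlation of 0.929 against a square-root AVE of about 0.814 for Culture). Whether that reflects the underlying data or the extracted table cannot be determined from this section.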

| Constructs | CUL | CP | TMS | TR | COM | RA | REL | SEC | RP | CCA |

|---|---|---|---|---|---|---|---|---|---|---|

| CUL1 | 0.831 | −0.479 | −0.518 | −0.595 | −0.605 | −0.444 | −0.576 | −0.703 | −0.903 | −0.678 |

| CUL2 | 0.865 | −0.523 | −0.554 | −0.671 | −0.661 | −0.431 | −0.624 | −0.742 | −0.798 | −0.745 |

| CUL3 | 0.834 | −0.434 | −0.503 | −0.593 | −0.520 | −0.326 | −0.518 | −0.633 | −0.889 | −0.637 |

| CUL4 | 0.876 | −0.455 | −0.520 | −0.630 | −0.620 | −0.399 | −0.601 | −0.690 | −0.780 | −0.710 |

| CUL5 | 0.818 | −0.364 | −0.431 | −0.559 | −0.568 | −0.350 | −0.540 | −0.609 | −0.694 | −0.645 |

| CUL8 | 0.727 | −0.392 | −0.422 | −0.510 | −0.475 | −0.332 | −0.451 | −0.525 | −0.602 | −0.559 |

| CUL9 | 0.730 | −0.402 | −0.513 | −0.532 | −0.413 | −0.309 | −0.424 | −0.550 | −0.596 | −0.589 |

| CP1 | −0.431 | 0.819 | 0.325 | 0.360 | 0.464 | 0.327 | 0.419 | 0.431 | 0.418 | 0.515 |

| CP2 | −0.454 | 0.859 | 0.343 | 0.475 | 0.465 | 0.319 | 0.512 | 0.453 | 0.439 | 0.534 |

| CP3 | −0.456 | 0.815 | 0.363 | 0.386 | 0.504 | 0.355 | 0.405 | 0.418 | 0.415 | 0.543 |

| TMS4 | −0.661 | 0.427 | 0.720 | 0.526 | 0.445 | 0.292 | 0.459 | 0.590 | 0.604 | 0.573 |

| TMS5 | −0.228 | 0.195 | 0.705 | 0.227 | 0.242 | 0.055 | 0.217 | 0.214 | 0.219 | 0.336 |

| TMS6 | −0.267 | 0.178 | 0.727 | 0.233 | 0.271 | 0.084 | 0.260 | 0.260 | 0.269 | 0.340 |

| TR2 | −0.557 | 0.376 | 0.401 | 0.848 | 0.545 | 0.340 | 0.593 | 0.534 | 0.506 | 0.605 |

| TR3 | −0.625 | 0.442 | 0.442 | 0.852 | 0.614 | 0.453 | 0.652 | 0.571 | 0.580 | 0.692 |

| TR5 | −0.650 | 0.426 | 0.452 | 0.846 | 0.570 | 0.387 | 0.590 | 0.636 | 0.597 | 0.662 |

| COM2 | −0.563 | 0.479 | 0.399 | 0.560 | 0.838 | 0.491 | 0.625 | 0.512 | 0.518 | 0.666 |

| COM3 | −0.619 | 0.532 | 0.422 | 0.577 | 0.879 | 0.448 | 0.679 | 0.551 | 0.574 | 0.676 |

| COM4 | −0.591 | 0.472 | 0.387 | 0.591 | 0.870 | 0.429 | 0.719 | 0.548 | 0.529 | 0.673 |

| COM5 | −0.591 | 0.475 | 0.130 | 0.587 | 0.815 | 0.402 | 0.769 | 0.520 | 0.520 | 0.657 |

| RA3 | −0.392 | 0.349 | 0.213 | 0.374 | 0.444 | 0.808 | 0.397 | 0.291 | 0.363 | 0.466 |

| RA4 | −0.328 | 0.276 | 0.112 | 0.337 | 0.375 | 0.789 | 0.309 | 0.265 | 0.317 | 0.401 |

| RA6 | −0.369 | 0.330 | 0.235 | 0.399 | 0.420 | 0.793 | 0.362 | 0.349 | 0.348 | 0.474 |

| REL1 | −0.572 | 0.478 | 0.434 | 0.621 | 0.767 | 0.418 | 0.855 | 0.538 | 0.514 | 0.662 |

| REL2 | −0.525 | 0.397 | 0.335 | 0.613 | 0.669 | 0.349 | 0.841 | 0.453 | 0.483 | 0.619 |

| REL3 | −0.545 | 0.445 | 0.394 | 0.603 | 0.664 | 0.360 | 0.852 | 0.512 | 0.523 | 0.643 |

| REL4 | −0.573 | 0.475 | 0.422 | 0.582 | 0.647 | 0.378 | 0.803 | 0.541 | 0.530 | 0.633 |

| SEC2 | −0.720 | 0.467 | 0.476 | 0.605 | 0.596 | 0.390 | 0.558 | 0.903 | 0.680 | 0.684 |

| SEC3 | −0.706 | 0.442 | 0.494 | 0.631 | 0.525 | 0.317 | 0.544 | 0.918 | 0.676 | 0.671 |

| SEC5 | −0.701 | 0.502 | 0.532 | 0.614 | 0.570 | 0.322 | 0.547 | 0.881 | 0.661 | 0.680 |

| RP1 | −0.831 | 0.479 | 0.518 | 0.595 | 0.605 | 0.444 | 0.576 | 0.703 | 0.903 | 0.678 |

| RP3 | −0.834 | 0.434 | 0.503 | 0.593 | 0.520 | 0.326 | 0.518 | 0.633 | 0.889 | 0.637 |

| CCA1 | −0.805 | 0.639 | 0.621 | 0.771 | 0.785 | 0.564 | 0.763 | 0.754 | 0.734 | 1.000 |

| H | Relation | Original Sample | Standard Deviation | T Value | p Value | Finding |

|---|---|---|---|---|---|---|

| H1 | RA -> CCA | 0.131 | 0.030 | 4.392 | 0.000 | Supported |

| H2 | COM -> CCA | 0.179 | 0.044 | 3.562 | 0.000 | Supported |

| H3 | SEC -> CCA | 0.176 | 0.038 | 4.591 | 0.000 | Supported |

| H4 | REL -> CCA | 0.106 | 0.041 | 2.601 | 0.009 | Supported |

| H5 | TMS -> CCA | 0.136 | 0.027 | 4.971 | 0.000 | Supported |

| H6 | TR -> CCA | 0.158 | 0.037 | 4.319 | 0.000 | Supported |

| H7 | RP -> CCA | 0.149 | 0.059 | 2.550 | 0.011 | Supported |

| H8 | CP -> CCA | 0.107 | 0.031 | 3.470 | 0.001 | Supported |

| H9 | CUL -> CCA | −0.299 | 0.070 | 4.286 | 0.000 | Supported |

| H10 | RA * CUL -> CCA | −0.049 | 0.024 | 2.081 | 0.038 | Supported |

| H11 | COM * CUL -> CCA | −0.133 | 0.046 | 2.902 | 0.004 | Supported |

| H12 | SEC * CUL -> CCA | −0.075 | 0.036 | 2.084 | 0.037 | Supported |

| H13 | REL * CUL -> CCA | −0.124 | 0.043 | 2.927 | 0.003 | Supported |

| H14 | TMS * CUL -> CCA | −0.005 | 0.024 | 0.230 | 0.818 | Not Supported |

| H15 | TR * CUL -> CCA | −0.011 | 0.036 | 0.288 | 0.773 | Not Supported |

| H16 | CP * CUL -> CCA | −0.026 | 0.029 | 0.909 | 0.364 | Not Supported |

| H17 | RP * CUL -> CCA | −0.099 | 0.042 | 2.367 | 0.018 | Supported |
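The T Value and p Value columns are related to the first two numeric columns in the usual bootstrap way. The sketch below assumes that t is the absolute path coefficient divided by its bootstrap standard deviation and that p is two-tailed; it uses a standard normal approximation rather than whatever distribution the authors' software applied, so small differences from the table are expected. Function names are illustrative.

```python
from math import erfc, sqrt

def t_value(coefficient, std_dev):
    """Bootstrap t statistic: |path coefficient| / bootstrap standard deviation."""
    return abs(coefficient) / std_dev

def two_tailed_p(t):
    """Two-tailed p value under a standard normal approximation."""
    return erfc(abs(t) / sqrt(2.0))

# (hypothesis, coefficient, standard deviation, reported t) copied from the table above.
rows = [
    ("H4", 0.106, 0.041, 2.601),
    ("H14", -0.005, 0.024, 0.230),
]
for label, coef, sd, t_reported in rows:
    t_from_rounded = t_value(coef, sd)
    p = two_tailed_p(t_reported)
    print(f"{label}: t from rounded inputs = {t_from_rounded:.3f}, p from reported t = {p:.3f}")
```

Recomputing t from the rounded coefficient and standard deviation gives values close to, but not identical with, the reported ones (about 2.585 against 2.601 for H4), which is consistent with the table having been computed from unrounded figures. The p values derived from the reported t statistics come out at roughly 0.009 and 0.818, in line with the table.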

| Predecessor Latent Variables | Dependent Variable | R² (Included) | R² (Excluded) | Effect Size (f²) | Remarks |

|---|---|---|---|---|---|

| Culture | CCA | 0.84 | 0.79 | 0.23 | Medium |

| Competitive Pressure | CCA | 0.84 | 0.79 | 0.12 | Small |

| Top Management Support | CCA | 0.84 | 0.76 | 0.39 | Large |

| Technology Readiness | CCA | 0.84 | 0.80 | 0.15 | Small |

| Compatibility | CCA | 0.84 | 0.79 | 0.22 | Medium |

| Relative Advantage | CCA | 0.84 | 0.76 | 0.38 | Large |

| Reliability | CCA | 0.84 | 0.82 | 0.06 | Small |

| Security | CCA | 0.84 | 0.82 | 0.04 | Small |

| Regulatory Policy | CCA | 0.84 | 0.80 | 0.20 | Medium |
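For orientation, the effect size in this table is normally obtained from the two R² values with Cohen's formula, and then labeled with Cohen's conventional cut-offs of 0.02, 0.15, and 0.35. The sketch below assumes that formula and those cut-offs; the R² inputs in the example are hypothetical, because the two-decimal R² values printed above do not reproduce the printed f² values exactly (they were presumably computed from unrounded figures).

```python
def f_squared(r2_included, r2_excluded):
    """Cohen's f^2: change in R^2 when a predictor is dropped, scaled by unexplained variance."""
    return (r2_included - r2_excluded) / (1.0 - r2_included)

def effect_label(f2):
    """Label an effect size using Cohen's conventional thresholds (assumed here)."""
    if f2 >= 0.35:
        return "Large"
    if f2 >= 0.15:
        return "Medium"
    if f2 >= 0.02:
        return "Small"
    return "Negligible"

# Hypothetical R^2 values, chosen only to illustrate the arithmetic.
example = f_squared(0.50, 0.40)
print(f"f2 = {example:.2f} ({effect_label(example)})")

# Labels for three f^2 values as printed in the table above.
for f2 in (0.39, 0.23, 0.06):
    print(f2, effect_label(f2))
```

Values that sit on a cut-off after rounding, such as the 0.15 reported for Technology Readiness, can fall on either side depending on the unrounded figure, so the label in the table takes precedence over this sketch.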

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Hussein Alghushami, A.; Zakaria, N.H.; Mat Aji, Z. Factors Influencing Cloud Computing Adoption in Higher Education Institutions of Least Developed Countries: Evidence from Republic of Yemen. Appl. Sci. 2020, 10, 8098. https://doi.org/10.3390/app10228098
