1. Introduction

The big data technology market grows at a 27% compound annual growth rate (CAGR), and big data market opportunities will reach over 203 billion dollars in 2020 [

1]. Big data application systems [

2,

3], abbreviated as big data applications, refer to the software systems that can collect, process, analyze or predict a large amount of data by means of different platforms, tools and mechanisms. Big data applications are now increasing, being used in many areas, such as recommendation systems, monitoring systems and statistical applications [

4,

5]. Big data applications are associated with the so-called 4V attributes, e.g., volume, velocity, variety and veracity [

6]. Due to the large amount of data generated, the fast velocity of arriving data and the various types of heterogeneous data, the quality of data is far from ideal, which makes the software quality of big data applications far from perfect [

7]. For example, due to the volume and velocity attributes [

8,

9], the data generated from big data applications are extremely numerous and more so with high speed Internet, which may affect data accuracy and data timeliness [

10], and consequently lead to software quality problems, such as performance and availability issues [

10,

11]. Due to the huge variety of heterogeneous data [

12,

13], data types and formats are increasingly rich, including structured, semi-structured and unstructured, which may affect data accessibility and data scalability, and hence lead to usability and scalability problems.

In general, quality assurance (QA) is a way to detect or prevent mistakes or defects in manufactured software/products and avoid problems when solutions or services are delivered to customers [

14]. However, compared with traditional software systems, big data applications raise new challenges for QA technologies due to the four big data attributes (for example, the velocity of arriving data, and the volume of data) [

15]. Many scholars have illustrated current QA problems for big data applications [

16,

17]. For example, it is a hard task to validate the performance, availability and accuracy of a big data prediction system due to the large-scale data size and the feature of timeliness. Due to the volume and variety attributes, keeping big data recommendation systems scalable is very difficult. Therefore, QA technologies of big data applications are becoming a hot research topic.

Compared with traditional applications, big data applications have the following special characteristics: (a) statistical computation based on large-scale, diverse formats, with structured and non-structured data; (b) machine learning and knowledge-based system evolution; (c) intelligent decision-making with uncertainty; and (d) more complex visualization requirements. These new features of big data applications need novel QA technologies to ensure quality. For example, compared with data in traditional applications (graphics, images, sounds, documents, etc.), there is a substantial amount of unstructured data in big data applications. These data are usually heterogeneous and lack integration. Since the handling of large-scale data is not required in the traditional applications, traditional testing processes lack testing methods for large-scale data, especially in the performance testing. Some novel QA technologies are urgently needed to solve these problems.

In the literature, many scholars have investigated the use of different QA technologies to assure the quality of big data applications [

15,

18,

19,

20]. Some papers have presented overviews on quality problems of big data applications. Zhou et al. [

18] presented the first comprehensive study on the quality of the big data platform. For example, they investigated the common symptoms, causes and mitigation strategies of quality issues, including hardware faults, code defects and so on. Juddoo [

19] et al. have systematically studied the challenges of data quality in the context of big data. Gao et al. [

15] did a profound study on the validation of big data and QA, including the basic concepts, issues and validation process. They also discussed the big data QA focuses, challenges and requirements. Zhang et al. [

20] introduced big data attributes and quality attributes; some quality assurance technologies such as testing and monitoring were also discussed. Although these authors have proposed a few QA technologies for big data applications, publications on QA technologies for big data applications remain scattered in the literature, and this hampers the analysis of the advanced technologies and the identification of novel research directions. Therefore, a systematic study of QA technologies for big data applications is still necessary and critical.

In this paper, we provide an exhaustive survey of QA technologies that have significant roles in big data applications, covering 83 papers published from Jan. 2012 to Dec. 2019. The major purpose of this study was to look into literature that is related to QA technologies for big data applications. Then, a comprehensive reference list concerning challenges of QA technologies for big data applications was prepared. In summary, the major contributions of the paper are described in the following:

The elicitation of big data attributes, and the quality problems they introduce to big data applications;

The identification of the most frequently used big data QA technologies, together with an analysis of their strengths and limitations;

A discussion of existing strengths and limitations of each kind of QA technology;

The proposed QA technologies are generally validated through real cases, which provides a reference for big data practitioners.

Our research resulted in four main findings which are summarized in

Table 1.

The findings of this paper contribute general information for future research, as the quality of big data applications will become increasingly more important. They also can help developers of big data applications apply suitable QA technologies. Existing QA technologies have a certain effect on the quality of big data applications; however, some challenges still exist, such as the lack of quantitative models and algorithms.

The rest of the paper is structured as follows. The next section reviews related background and previous studies.

Section 3 describes our systematic approach for conducting the review.

Section 4 reports the results of themes based on four research questions raised in

Section 3.

Section 5 provides the main findings of the survey and provides existing research challenges.

Section 6 describes some threats in this study. Conclusions and future research directions are given in the final section.

2. Related Work

There is no systematic literature review (including a systematic mapping study, a systematic study and a literature review) that focuses on QA technologies for big data applications. However, quality issues are prevalent in big data [

21], and the quality of big data applications has attracted attention and been the focus of research in previous studies. In the following, we first try to describe all the relevant reviews that are truly related to the quality of big data applications.

Zhou et al. [

18] presented a comprehensive study on the quality of the distributed big data platform. Massive workloads and scenarios make the data scale keep growing rapidly. Distributed storage systems (GFS, HDFS) and distributed data-parallel execution engines (MapReduce, Hadoop and Dryad) need to improve the processing ability of real-time data. They investigated common symptoms, causes and mitigation measures for quality problems. In addition, there will be different types of problems in big data computing, including hardware failure, code defects, etc. Their discovery is of great significance to the design and maintenance of future distributed big data platform.

Juddoo et al. [

19] systematically studied the challenges of data quality in the context of big data. They mainly analyzed and proposed the data quality technologies that would be more suitable for big data in a general context. Their goal was to probe diverse components and activities forming parts of data quality management, metrics, dimensions, data quality rules, data profiling and data cleansing. In addition, the volume, velocity and variety of data may make it impossible to determine the data quality rules. They believed that the measurement of big data attributes is very important to the users’ decision-making. Finally, they also listed existing data quality challenges.

Gao and Tao [

4,

15] first provided detailed discussions for QA problems and big data validation, including the basic concepts and key points. Then they discussed big data applications influenced by big data features. Furthermore, they also discussed big data validation processes, including data collection, data cleaning, data cleansing, data analysis, etc. In addition, they summarized the big data QA issues, challenges and needs.

Zhang et al. [

20] further considered QA problems of big data applications, and explored existing methods and tools to improve the quality of distributed platforms and systems; they summarized six QA technologies combined with big data attributes, and explored the big data attributes of existing QA technologies.

Liu et al. [

22] pointed out and summarized the issues faced by big data research in data collection, processing and analysis in the current big data area, including uncertain data collection, incomplete information, big data noise, representability, consistency, reliability and so on.

For the distributed systems, Ledmi et al. [

23] introduced the basic concept of distributed systems and their main fault types. In order to ensure the stable operation of cluster and grid computing and the cloud, the system needs to have a certain fault tolerance ability. Therefore, various fault-tolerant technologies were discussed in detail, including active fault-tolerance and reactive fault-tolerance. Niedermaier et al. [

24] found that the dynamics and complexity of distributed systems bring more challenges to system monitoring. Although there are many new technologies, it is difficult for companies to carry out practical operations. Therefore, they conducted an industry study from different stakeholders involved in monitoring; summarized the existing challenges and requirements; and proposed solutions from various angles.

To sum up, for the big data applications, Zhou et al. [

18] and Liu et al. [

22] mainly studied the problems and common failures. Gao and Tao [

4,

15] mainly discussed the QA problems from the perspective of data processing. Juddoo et al. [

19] surveyed the topic of data quality technologies. Zhang et al. [

20] considered data attributes, QA technologies and existing quality challenges. In addition to the six QA technologies identified in [

20], two QA technologies, specification and analysis, are newly added in this paper. Additionally, the QA technologies are introduced and discussed in more detail. Moreover, the strengths and limitations of QA technologies are compared and concluded. We also investigated empirical evidence of QA technologies which can provide references for practitioner.

3. Research Method

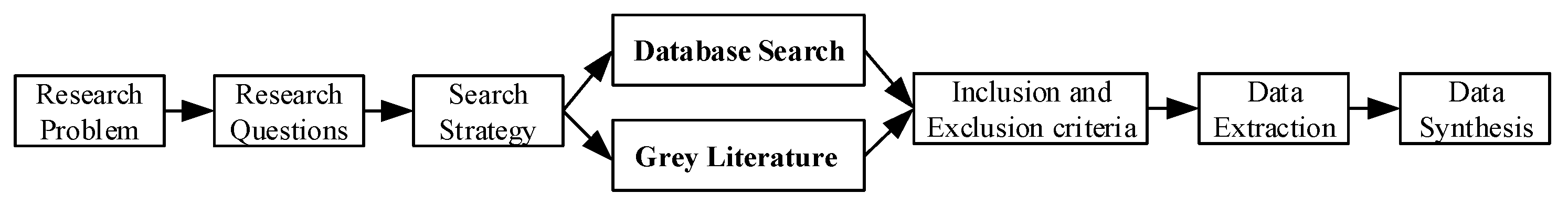

In this work, the systematic literature review (SLR) approach proposed by Kitchenham et al. [

25] was used to extract QA technologies for big data applications and related questions. Based on the SLR and our research problem, research steps can be raised, as shown in

Figure 1. Through these research steps, we can obtain the desired results.

3.1. Research Questions

We used the goal–question–metric (GQM) perspectives (i.e., purpose, issue, object and viewpoint) [

26] to draw up the aim of this study. The result of the application of the goal–question–metric approach is the specification of a measurement system targeting the given set of problems and a set of rules for understanding the measurement data [

27].

Table 2 provides the purpose, issue, object and viewpoint of the research topic.

Research questions can usually help us to perform an in-depth study and achieve purposeful research. Based on this research, there are four research questions.

Table 3 shows four research questions that we translated from

Table 2. RQ1 concentrates on identifying the quality attributes that are focused on in QA of the big data applications and analyzing the factors influencing them. RQ2 concentrates on identifying and classifying existing QA technologies and understanding the effects of them. RQ3 concentrates on analyzing the strengths and limitations of those QA technologies. RQ4 concentrates on validating the proposed QA technologies through real cases and providing a reference for big data practitioners.

3.2. Search Strategy

The goal of this systematic review is thoroughly examining the literature on QA technologies for big data applications. Three main phases of SLR are presented by EBSE (evidence-based software engineering) [

28] guidelines that include planning, execution and reporting results. Moreover, the search strategy is an indispensable part and consists of two different stages.

Stage 1: Database search.

Before we carried out automatic searches, the first step was the definition and validation of the search string to be used for automated search. This process started with pilot searches on seven databases, as shown in

Table 4. We combined different keywords that are related to research questions.

Table 5 shows the search terms we used in the seven databases, and the search string is defined in the following:

(a AND (b OR c) AND (d OR e OR f OR g)) IN (Title or Abstract or Keyword).

We used a “quasi-gold standard” [

29] to validate and guarantee the search string. We use IEEE and ACM libraries as representative search engines to perform automatic searches and refine the search string until all the search items met the requirements and the number of remaining papers was minimal. Then, we used the defined search string to carry out automatic searches. We chose ACM Digital Library, IEEE Xplore Digital Library, Engineering Village, Springer Link, Scopus, ISI Web of Science and Science Direct because those seven databases are the largest and most complete scientific databases that include computer science. We manually downloaded and searched the proceedings if venues were not included in the digital libraries. After the automatic search, a total of 3328 papers were collected.

Stage 2: Gray literature.

To cover gray literature, some alternative sources were investigated as follows:

In order to adapt the search terms to Google Scholar and improve the efficiency of the search process, search terms were slightly modified. We searched and collected 1220 papers according to the following search terms:

- -

(big data AND (application OR system) AND ((quality OR performance OR QA) OR testing OR analysis OR verification OR validation))

- -

(big data AND (application OR system) AND (quality OR performance OR QA) AND (technique OR method))

- -

(big data AND (application OR system) AND (quality OR performance OR QA) AND (problem OR issue OR question))

Through two stages, we found 4548 related papers. Only 102 articles met the selection strategy (discussed below) and are listed in

Section 3.3. Then, we scanned all the related results according to the snowball method [

30], and we referred to the references cited by the selected paper and included them if they were appropriate. We expanded the number of papers to 121; for example, we used this technique to find [

31], which corresponds to our research questions from the references in [

32].

To better manage the paper data, we used NoteExpress (

https://noteexpress.apponic.com/), which is a professional-level document retrieval and management system. Its core functions cover all aspects of “knowledge acquisition, management, application and mining.” It is a perfect tool for academic research and knowledge management. However, the number of these results is too large. Consequently, we filtered the results by using the selection strategy described in the next section.

3.3. Selection Strategy

In this subsection, we focus on the selection of research literature. According to the search strategy, much of the returned literature is unnecessary. It is essential to define the selection criteria (inclusion and exclusion criteria) for selecting the related literature. We describe each step of our selection process in the following:

Combination and duplicate removal. In this step, we sort out the results that we obtain from stage 1 and stage 2 and remove the duplicate content.

Selection of studies. In this step, the main objective is to filter all the selected literature in light of a set of rigorous inclusion and exclusion criteria. There are five inclusion and four exclusion selection criteria we have defined as described below.

Exclusion of literature during data extraction. When we read a study carefully, it can be selected or rejected according to the inclusion and exclusion criteria in the end.

When all inclusion criteria are met, the study is selected; otherwise, it is discarded if any exclusion criteria are met. According to the research questions and research purposes, we identified the following inclusion and exclusion criteria.

A study should be chosen if it satisfies the following inclusion criteria:

- (1)

The study of the literature focuses on the quality of big data applications or big data systems, in order to be aligned with the theme of our study.

- (2)

One or more of our research questions must be directly answered.

- (3)

The selected literature must be in English.

- (4)

The literature must consist of journal papers or papers published as part of conference or workshop proceedings.

- (5)

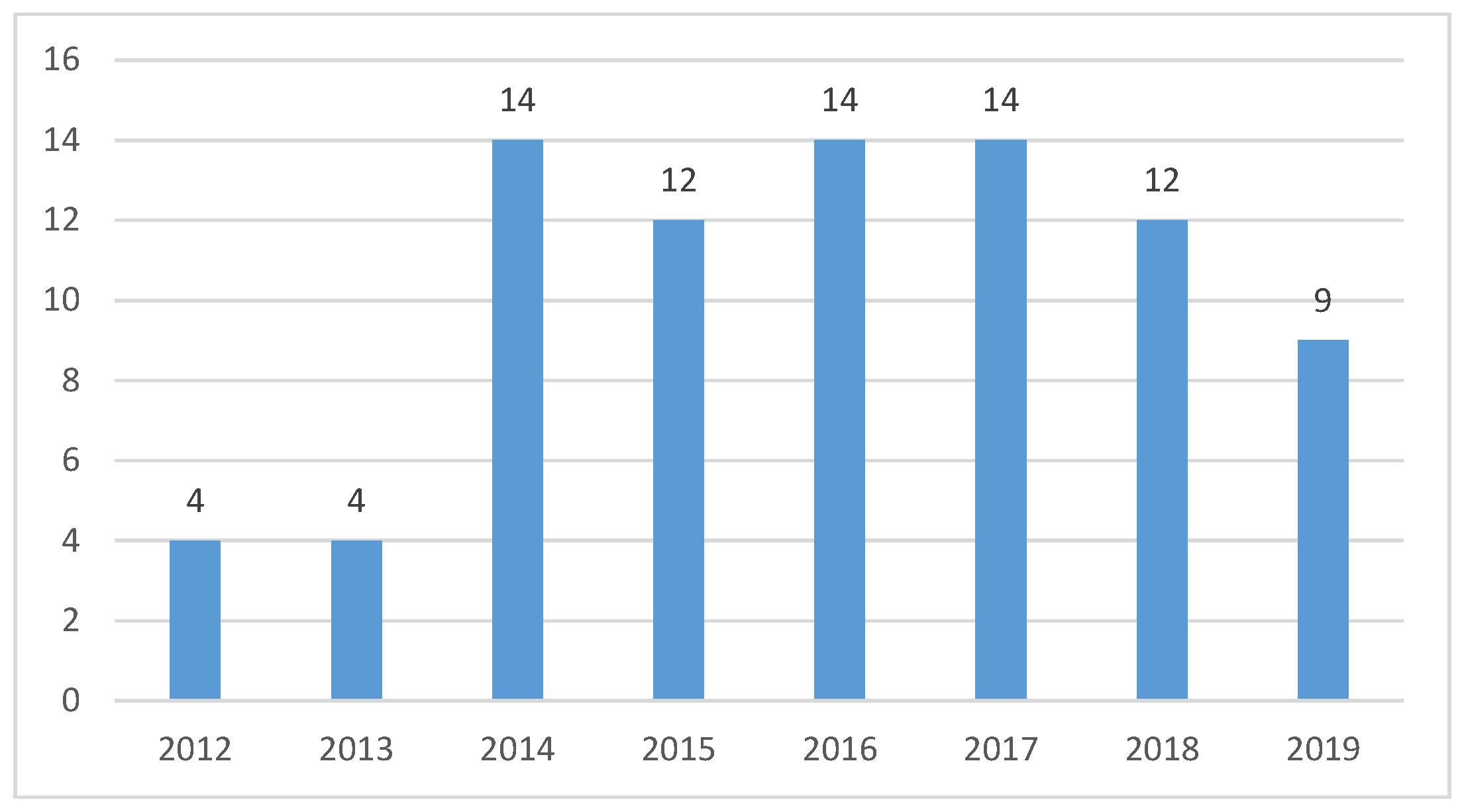

Studies must have been published in or after 2012. From a simple search (for which we use the search item “big data application”) in the EI (engineering index) search library, we can see that most of the papers on big data applications or big data systems were published after 2011, as shown in

Figure 2. The literature published before 2012 rarely took into account the quality of big data applications or systems. By reading the relevant literature abstracts, we found that these earlier papers were not relevant to our subject, so we excluded them.

The main objective of our study was to determine the current technologies of ensuring the quality of big data applications and the challenges associated with the quality of big data applications. This means that the content of the article should be related to the research questions of this paper.

A study should be discarded if it satisfies any one of the following exclusion criteria:

- (1)

It is related to big data but not related to the quality of big data applications. Our goal is to study the quality of big data applications or services, rather than the data quality of big data, although data quality can affect application quality.

- (2)

It does not explicitly discuss the quality of big data applications and the impact of big data applications or quality factors of big data systems.

- (3)

Duplicated literature. Many articles have been included in different databases, and the search results contain repeated articles. For conference papers that meet our selection criteria but are also extended to journal publications, we choose journal publications because they are more comprehensive in content.

- (4)

Studies that are not related to the research questions.

Inclusion criteria and exclusion criteria are complementary. Consequently, both the inclusion and exclusion criteria were considered. In this way, we could achieve the desired results. A detailed process of identifying relevant literature is presented in

Figure 3. Obviously, the analysis of all the literature presents a certain degree of difficulty. First, by applying these inclusion and exclusion criteria, two researchers separately read the abstracts of all studies selected in the previous step to avoid prejudice as much as possible. Of the initial studies, 488 were selected in this process. Second, for the final selection, we read the entire initial papers and then selected 102 studies. Third, we expanded the number of final studies to 121 according to our snowball method. Conflicts were resolved by extensive discussion. We excluded a number of papers because they were not related and had 83 primary studies at the end of this step.

In the process, the first author and the second author worked together to develop research questions and search strategies, and the second author and four students of the first author executed the search plan together. During the process of finalizing the primary articles, all members of the group had a detailed discussion on whether the articles excluded by only few researchers were in line with our research topic.

3.4. Quality Assessment

After screening the final primary studies by inclusion and exclusion criteria, the criteria for the quality of the study were determined according to the guidelines proposed by Kitchenham and Charters [

33]. The corresponding quality checklist is shown in

Table 6. The table includes 12 questions that consider four research quality dimensions, including research design, behavior, analysis and conclusions. For each quality item we set a value of 1 if the authors put forward an explicit description, 0.5 if there was a vague description and 0 if there was no description at all. The author and his research assistant applied the quality assessment method to each major article, compared the results and discussed any differences until a consensus was reached. We scored each possible answer for each question in the main article and converted it into a percentage after coming to an agreement. The presentation quality assessment results of the preliminary study indicate that most studies have deep descriptions of the problems and their background, and most studies have fully and clearly described the contributions and insights. Nevertheless, some studies do not describe the specific division of labor in the method introduction, and there is a lack of discussion of the limitations of the proposed method. However, the total average score of 8.8 out of 12 indicates that the quality of the research is good, supporting the validity of the extracted data and the conclusions drawn therefrom.

3.5. Data Extraction

The goal of this step was to design forms to identify and collect useful and relevant information from the selected primary studies so that it could answer our research questions proposed in

Section 3.1. To carry out an in-depth analysis, we could apply the data extraction form to all selected primary studies.

Table 7 shows the data extraction form. According to the data extraction form, we collected specific information in an excel file (

https://github.com/QXL4515/QA-techniques-for-big-data-application). In this process, the first author and the second author jointly developed an information extraction strategy to lay the foundation for subsequent analysis. In addition, the third author validated and confirmed this research strategy.

3.6. Data Synthesis

Data synthesis is used to collect and summarize the data extracted from primary studies. Moreover, the main goal is to understand, analyze and extract current QA technologies for big data applications. Our data synthesis was specifically divided into two main phases.

Phase 1: We analyzed the extracted data (most of which are included in

Table 7 and some were indispensable in the research process) to determine the trends and collect information about our research questions and record them. In addition, we classified and analyzed articles according to the research questions proposed in

Section 3.1.

Phase 2: We classified the literature according to different research questions. The most important task was to classify the articles according to the QA technologies through the relevant analysis.

4. Results

This section, deeply analyzing the primary studies provides an answer to the four research questions presented in

Section 3.

In addition,

Figure 4,

Figure 5 and

Figure 6 provide some simple statistics.

Figure 4 presents how our primary studies are distributed over the years.

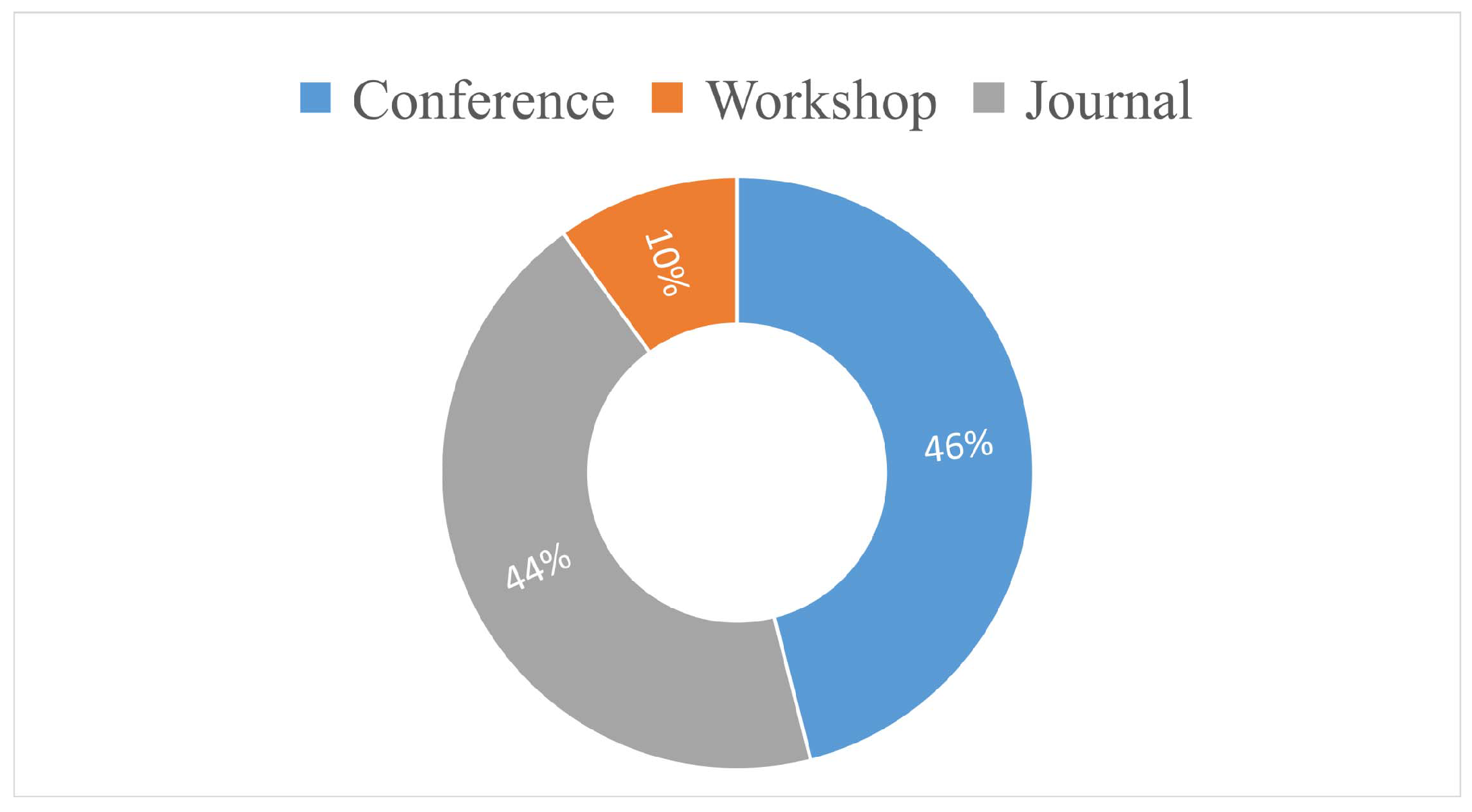

Figure 5 groups the primary studies according to the type of publication.

Figure 6 counts the number or studies retrieved from different databases.

4.1. Overview of the Main Concepts

While answering the four research questions identified in previous sections, we will utilize and correlate three different dimensions: big data attributes, data quality parameters and software quality attributes. (The output of this analysis is reported in

Section 5.2.)

Big data attributes: Big data applications are associated with the so-called 4V attributes, e.g., volume, velocity, variety and veracity [

6]. In this study, we take into account only three of the 4V big data attributes (excluding the veracity one) for the following reasons: First, through the initial reading of the literature, many papers are not concerned about the veracity. Second, big data currently have multi-V attributes; only three attributes (volume, variety and velocity) are recognized extensively [

34,

35].

Data quality parameters: Data quality parameters describe the measure of the quality of data. Since data are an increasingly vital part of applications, data quality becomes an important concern. Poor data quality could affect enterprise revenue, waste company resources, introduce lost productivity and even lead to wrong business decisions [

15]. According to the Experian Data Quality global benchmark report (Erin Haselkorn, “New Experian Data Quality research shows inaccurate data preventing desired customer insight”, Posted on Jan 29 2015 at URL

http://www.experian.com/blogs/news/2015/01/29/data-quality-research-study/), U.S. organizations claim that 32 percent of their data are wrong on average. Since data quality parameters are not universally agreed upon, we extracted them by analyzing papers [

15,

36,

37].

Software quality attributes: Software quality attributes describe the attributes that software systems shall expose. We started from the list provided in the ISO/IEC 25010:2011 standard and selected those quality attributes that are mostly recurrent in the primary studies. A quality model consists of five characteristics (correctness, performance, availability, scalability and reliability) that relate to the outcome of the interaction when a product is used in a particular context. This system model is applicable to the complete human–computer system, including both computer systems in use and software products in use.

QA technologies: Eight technologies (specification, analysis, architectural choice, fault tolerance, verification, testing, monitoring, fault and failure prediction) have been developed for the static attributes of software and dynamic attributes of the computer system. They can be classified into two types: implementation-specific technologies and process-specific technologies.

The implementation-specific QA technologies include:

Specification: A specification refers to a type of technical standard. The specification includes requirement specification, functional specification and non-functional specification of big data applications.

Architectural choice: Literature indicates that QA is achieved by selecting model-driven architecture (MDA) for helping developers creating software that meets non-functional properties, such as, reliability, safety and efficiency. As practitioners chose MDA and nothing else, it can be concluded that this solution is superior to other architectural choices.

Fault tolerance: Fault tolerance technique is used to improve the capability of maintaining performance of the big data application in cases of faults, such as correctness, reliability and availability.

The process-specific QA technologies include:

Analysis: The analysis technique is used to analyze the main factors which can affect the big data applications’ quality, such as performance, correctness and others, which plays an important role in the design phase of big data applications.

Verification: Verification relies on existing technologies to validate whether the big data applications satisfy desired quality attributes. Many challenges for big data applications appear due to the 3V properties. For example, the volume and the velocity of data may make it a difficult task to validate the correctness attribute of big data applications.

Testing: Different kinds of testing techniques are used to validate whether big data applications conform to requirement specifications. Using this technique, inconsistent or contradictory with the requirement specifications can be identified.

Monitoring: Runtime monitoring is an effective way to ensure the overall quality of big data applications. However, runtime monitoring may occur additional loading problems for big data applications. Hence, it is necessary to improve the performance of big data monitoring.

Fault and failure prediction: Big data applications faces many failures. If the upcoming failure can be predicted, the overall quality of big data applications may be greatly improved.

4.2. Identify the Focused Quality Attributes in Big Data Applications (RQ1)

The goal of this section is to answer RQ1 (which of the quality attributes are focused in QA of big data applications?). Besides identifying the quality attributes focused in the primary studies, we also analyze the influencing factors brought by the big data attributes, so that the factors can be taken into consideration in QA of big data applications.

We use the ISO/IEC 25010:2011 to extract the quality attributes of big data applications.

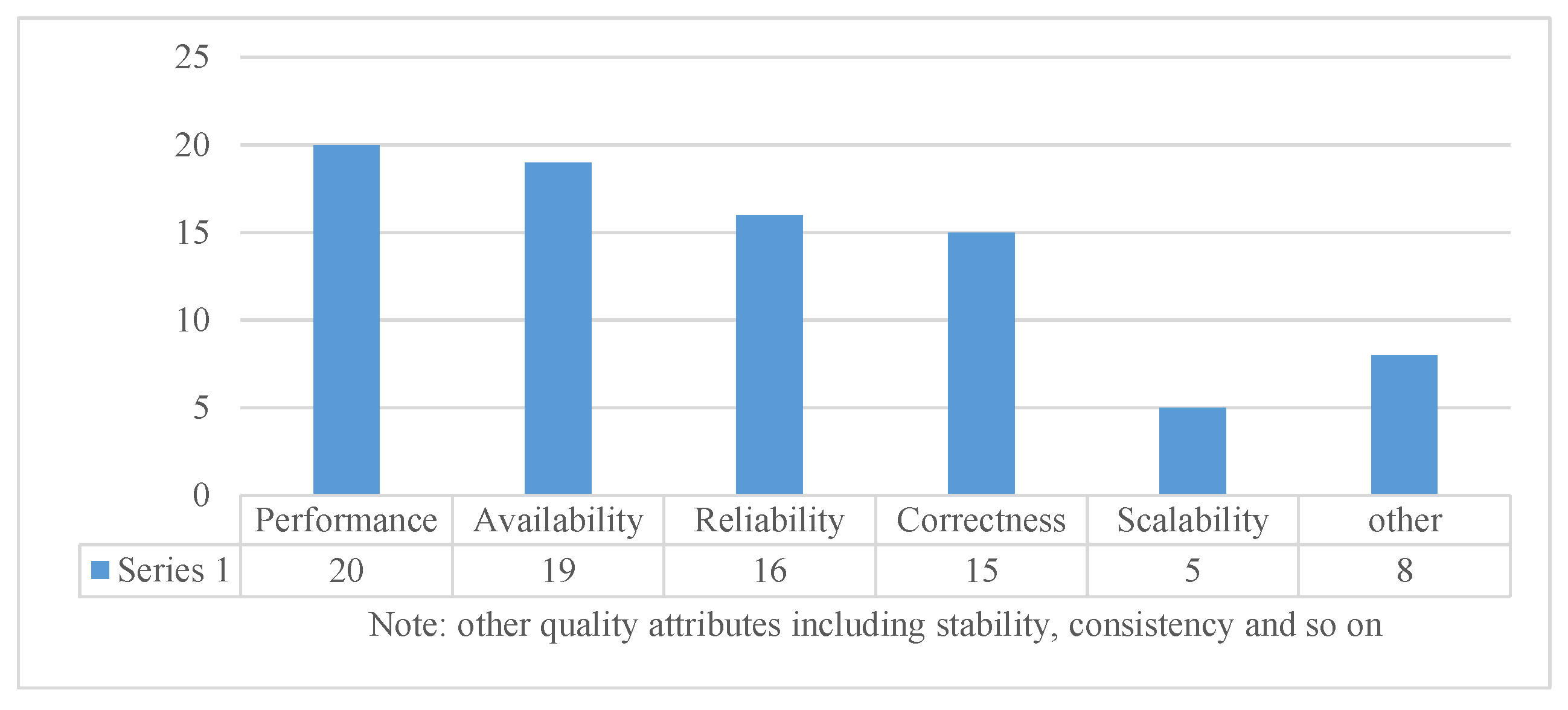

Table 8 provides the statistical distribution of different quality attributes. According to statistics, we identify related quality attributes, as shown in

Figure 7. For some articles that may involve more than one quality attribute, such as [

38,

39], we choose the main quality attribute that they convey.

From the 83 primary studies, some quality attributes are discussed, including correctness, performance, availability, scalability, reliability, efficiency, flexibility, robustness, stability, interoperability and consistency.

From

Figure 7, we can see that the five main quality attributes have been discussed in the 83 primary studies. However, there are fewer articles focused on other attributes, such as stability, consistency, efficiency and so on.

Correctness: Correctness measures the probability that big data applications can “get things right.” If the big data application cannot guarantee the correctness, then it will have no value at all. For example, a weather forecast system that always provides the wrong weather is obviously not of any use. Therefore, correctness is the first attribute to be considered in big data applications. The papers [

41,

44] provide the fault tolerance mechanism to guarantee the normal operation of applications. If the big data application runs incorrectly, it will cause inconvenience or even loss to the user. The papers [

40,

45] provide the testing method to check the fault of big data applications to assure the correctness.

Performance: Performance refers to the ability of big data applications to provide timely services, specifically in three areas, including the average response time, the number of transactions per unit time and the ability to maintain high-speed processing. Due to the large amounts of data, performance is a key topic in big data applications. In

Table 8, we show the many papers that refer to the performances of big data applications. The major purpose of focusing on the performance problem is to handle big data with limited resources in big data applications. To be precise, the processing performance of big data applications under massive data scenarios is its major selling point and breakthrough. According to the relevant literature, we can see that common performance optimization technologies for big data applications are generally divided into two parts [

44,

55,

56,

71,

72,

94]. The first one consists of hardware and system-level observations to find specific bottlenecks and make hardware or system-level adjustments. The second one is to achieve optimization mainly through adjustments to specific software usage methods.

Availability: Availability refers to the ability of big data applications to perform a required function under stated conditions. The rapid growth of data has made it necessary for big data applications to manage data streams and handle an impressive volume, and since these data types are complex (variety), the operation process may create different kinds of problems. Consequently, it is important to ensure the availability of big data applications.

Scalability: Scalability refers to the ability of large data applications to maintain service quality when users and data volumes increase. For a continuous stream of big data, processing systems, storage systems, etc., should be able to handle these data in a scalable manner. Moreover, the system would be very complex for big data applications. For better improvement, the system must be scalable. Paper [

89] proposes a flexible data analytic framework for big data applications, and the framework can flexibly handle big data with scalability.

Reliability: Reliability refers to the ability of big data applications to apply the specified functions within the specified conditions and within the specified time. Reliability issues are usually caused by unexpected exceptions in the design and undetected code defects. For example, paper [

39] uses a monitoring technique to monitor the operational status of big data applications in real time so that failures can occur in real time and developers can effectively resolve these problems.

Although big data applications have many other related quality attributes, the focal ones are the five mentioned above. Therefore, these five quality attributes are critical to ensuring the quality of big data applications and are the main focus of this survey.

To better perform QA of the focal quality attributes, the factors that may affect the quality attributes should be identified. We extracted the relationships existing among the big data attributes, the data quality parameters and the software quality attributes to analyze the influencing factors brought by big data attributes. We have finalized five data quality parameters, including data correctness, data completeness, data timeliness, data accuracy and data consistency. Data correctness refers to the correctness of data in transmission, processing and storage. Data completeness is a quantitative measurement that is used to evaluate how much valid analytical data is obtained compared to the planned number [

15] and is usually expressed as a percentage of usable analytical data. Data timeliness refers to real-time and effective data processing [

106]. Data accuracy refers to the degree to which data is equivalent to the corresponding real value [

107]. Data consistency is useful to evaluate the consistency of given datasets from different perspectives [

43]. The relationships that we found on the primary studies are reported in

Table 9 and discussed below.

Volume: The datasets used in industrial environments are huge, usually measured in terabytes, or even exabytes. According to [

17,

114], the larger the volume of the data, the greater the probability that the data will be modified, deleted and so on. In general, increased data reduces data completeness [

41,

107]. How to deal with a massive amount of data in a very short time is a vast challenge. If these data cannot be processed in a timely manner, the value of these data will decrease and the original goal of building big data systems will be lost [

38]. With incorrect data, correctness and availability can not be assured. Application performance will decline as data volume grows. When the amount of data reaches a certain size, the application crashes and cannot provide mission services [

38], which also affects reliability. Additionally, the volume of big data attributes will inevitably bring about the scalability issue of big data applications.

Velocity: With the flood of data generated quickly from smart phones and sensors, rapid analysis and processing of data need to be considered [

71]. These data must be analyzed in time because the velocity of data generation is very quick [

41,

115]. Low-speed data processing may result in the fact that the big data systems are unable to respond effectively to any negative changes (speed) [

94]. Therefore, the velocity engenders challenges to data timeliness and performance. Data in the high-speed transmission process will greatly increase the data failure rate. Abnormal or missing data will affect the correctness, availability and reliability of big data applications [

107].

Variety: The increasing number of sensors that are deployed on the Internet makes the data generated complex. It is impossible for human beings to write every rule for each type of data to identify relevant information. As a result, most of the events in these data are unknown, abnormal and indescribable. For big data applications, the sources of data are varied, including structured, semi-structured and unstructured data. Some of these data have no statistical significance, which greatly influences data accuracy [

54]. Unstructured data will produce the consistency problem [

17]. In addition, the contents of the database became corrupted by erroneous programs storing incorrect values and deleting essential records. It is hard to recognize such quality erosion in large databases, but over time, it spreads similarly to a cancerous infection, causing ever-increasing big data system failures. Thus, not only data quality but also the quality of applications suffer under erosion [

114].

4.3. Technologies for Assuring the QA of Big Data Applications (RQ2)

This section answers RQ2 (which kinds of technologies are used to guarantee the quality of big data applications?). We extracted the quality assurance technologies used in the primary articles. In

Figure 8, we show the distribution of papers for these different types of QA technologies. These technologies cover the entire development process for big data applications. According to the papers we collected, we identified the existing three implementation-specific technologies, including specification; architectural choice and fault tolerance; and five process-specific technologies, including analysis, verification, testing, monitoring and fault and failure prediction.

In fact, quality assurance is an activity that applies to the entire big data application process. The development of big data applications is a systems engineering task that includes requirements, analysis, design, implementation and testing. Accordingly, we mainly divide the QA technologies into design time and runtime. Based on the papers we surveyed and the development process of big data applications, we divide the quality assurance technologies for big data applications into the above eight types. In

Table 10, we have listed the simple descriptions of different types of QA technologies for big data applications.

We compare the quality assurance technologies from five different aspects, including suitable stage, application domain, quality attribute, effectiveness and efficiency.

Suitable stage refers to the possible stage using these quality assurance technologies, including design time, runtime or both. Application domain means the specific area that big data applications belong to, including DA (database application), DSS (distributed storage system), AIS (artificial intelligence system), BDCS (big data classification system), GSCS (geographic security control system), BS (bioinformatics software), AITS (automated IT system) and so on. Quality attribute identifies the most used quality attributes being addressed by the corresponding technologies. Effectiveness means that big data application quality assurance technologies can guarantee the quality of big data applications to a certain extent. Efficiency refers to the improvement of the quality of big data application through the QA technologies. For better comparisons, we present the results in

Table 11 (the QA are those presented in

Table 8).

4.4. Existing Strengths and Limitations (RQ3)

The main purpose of RQ3 (what are the advantages and limitations of the proposed technologies?) is to comprehend the strengths and limitations of QA approaches. To answer RQ3, we discuss the strengths and limitations of each technique.

From

Table 11, we can see that specification, analysis and architectural choice are used at design time; monitoring is used at runtime; fault tolerance, verification, testing and fault and failure prediction cover both design time and runtime. Design-time technologies are commonly used in MapReduce, which is a great help when designing big data application frameworks. The runtime technologies are usually used after the generation of big data applications, and their applications are very extensive, including intelligent systems, storage systems, cloud computing, etc.

For quality attributes, while most technologies can contend with performance and reliability, some technologies focus on correctness, scalability, etc. To a certain extent, these eight quality assurance technologies assure the quality of big data applications during their application phase, although their effectiveness and efficiencies are different. To better illustrate these three quality parameters, we carry out a specific analysis through two quality assurance technologies. Specification establishes complete descriptions of information, detailed functional and behavioral descriptions and performance requirements for big data applications to ensure the quality. Therefore, it can guarantee the integrity of the function of big data applications to achieve the designated goal of big data applications and guarantee satisfaction. Although it does not guarantee the quality of the application in runtime, it guarantees the quality of the application in the initial stage. As a vital technology in the QA of big data applications, the main function of testing is to test big data applications at runtime. As a well-known concept, the purpose of testing is to detect errors and measure quality, thereby ensuring effectiveness. In addition, the efficiency of testing is largely concerned with testing methods and testing tools. The detailed description of other technologies is shown in

Table 11.

The strengths and limitations of QA technologies are as follows:

Specification: Due to the large amounts of data generated for big data applications, suitable specifications can be used to select the most useful data at hand. This technology can effectively improve the efficiency, performance and scalability of big data applications by using UML [

96], ADL [

63] and so on. The quality of the system can be guaranteed at the design stage. In addition, the specification is also used to ensure that system functions in big data applications can be implemented correctly. However, all articles are aimed at specific applications or scenarios, and do not generalize to different types of big data applications [

63,

90].

Architectural choice: MDA is used to generate and export most codes for big data applications and can greatly reduce human error. To date, MDA metamodels and model mappings are only targeted for very special kinds of systems, e.g., MapReduce [

72,

74]. Metamodels and model mapping approaches for other kinds of big data applications are also urgently needed.

Fault tolerance: Fault tolerance is one of the staple metrics of quality of service in big data applications. Fault-tolerant mechanisms permit big data applications to allow or tolerate the occurrence of mistakes within a certain range. If a minor error occurs, the big data application can still offer stable operation [

99,

106]. Nevertheless, fault tolerance cannot always be optimal. Furthermore, fault tolerance can introduce performance issues, and most current approaches neglect this problem.

Analysis: The main factors that affect the big data applications’ quality analysis are the size of the data, the speed of data processing and data diversity. Analysis technologies can analyze major factors that may affect the quality of software operations during the design phase of big data applications. Current approaches only focus on analyzing performance attributes [

60,

89]. There is a need to develop approaches for analyzing other quality attributes. In addition, it is impossible to analyze all quality impact factors for big data applications. The specific conditions should be specified before analysis.

Verification: Due to the complexity of big data applications, there is no uniform verification technology in general. Verification technologies verify or validate quality attributes by using logical analysis, a theorem proving and model checking. There is a lack of formal models and corresponding algorithms to verify attributes of big data applications [

58,

59]. Due to the existence of big data attributes, traditional software verification standards no longer meet the quality requirements [

117].

Testing: In contrast to verification, the testing technique is always performed during the execution of big data applications. Due to the large amounts of data, automatic testing is an efficient approach for big data applications. Current research always applies traditional testing approaches in big data applications [

47]. However, novel approaches for testing big data attributes are urgently needed because testing focuses are different between big data application testing and conventional testing. Conventional testing focuses on diverse software errors regarding structures, functions, UI and connections to the external systems. In contrast, big data application testing focuses on involute algorithms, large-scale data input, complicated models and so on. Furthermore, conventional testing and big data application testing are different in the test input, the testing execution and the results. As an example, learning-based testing approaches [

52] can test the velocity attributes of big data applications.

Monitoring: Monitoring can obtain accurate status and behavioral information for big data applications in a real operating environment. For big data applications running in complex and variable network environments, their operating environments will affect the operations of the software system and produce some unexpected problems. Therefore, monitoring technologies will be more conducive to the timely response to the emergence of anomalies to prevent failures [

71,

91]. A stable and reliable big data application relies on monitoring technologies that not only monitor whether the service is alive or not but also monitor the operation of the system and data quality. The high velocity of big data engendered the challenge of monitoring accuracy issues and may produce overhead problems for big data applications.

Fault and failure prediction: Prediction technologies can predict errors that may occur in the operation of big data applications so that errors can be prevented in advance. Due to the complexity of big data applications, the accuracy of prediction is still a substantial problem that we need to consider in the future. Deep learning-based approaches [

44,

46,

55] can be combined with other technologies to improve prediction accuracy due to the large amounts of data.

4.5. Empirical Evidence (RQ4)

The goal of RQ4 (what are the real cases of using the proposed technologies?) is to elicit empirical evidence on the use of QA technologies. We organize the discussion of the QA technologies discussed in

Section 4.3, which is shown in

Table 12.

Specification. The approach in [

63] is explained by a case study of specifying and modeling a vehicular ad hoc network (VANET). The major merits of the posed method are its capacity to take into consideration big data attributes and cyber physical system attributes through customized concepts and models in a strict, simple and expressive approach.

Architectural choice. In [

74], the investigators demonstrate the effectiveness of the proposed approach by using a case study. This approach can overcome accidental complexities in analytics-intensive big data applications. Paper [

97] conducted a series of tests using Amazon’s AWS cloud platform to evaluate the performance and scalability of the observable architecture by considering the CPU, memory and network utilization levels. Paper [

65] uses a simple case study to evaluate the proposed architecture and a metamodel in the word count application.

Fault tolerance. The experiments in paper [

55] show that DAP architecture can improve the performance of joining two-way streams by analyzing the time consumption and recovery ratio. In addition, all data can be reinstated if the newly started VMs can be reinstated in a few seconds while nonadjacent nodes fail; meanwhile, if neighboring nodes fail, some data can be reinstated. Through analyzing the CPU utilization, memory footprint, disk throughput and network throughput, experiments in paper [

46] show that the performance of all cases (MapReduce data computing applications) can be significantly improved.

Analysis. The experiments in [

89] show that the two factors that are the most important concern the quality of scientific data compression and remote visualization, which are analyzed by latency and throughput. Experiments in [

60] were conducted to analyze the connection between the performance measures of several MapReduce applications and performance concepts, such as CPU processing time. The consequences of performance analysis illustrate that the major performance measures are processing time, job turnaround and so on. Therefore, in order to improve the performances of big data applications, we must take into consideration these measures.

Verification. In [

43], the author used CMA (cell morphology assay) as an example to describe the design of the framework. Verifying and validating datasets, software systems and algorithms in CMA demonstrates the effectiveness of the framework.

Testing. In [

38], the authors used a number of virtual users to simulate real users and observed the average response time and CPU performance in a network public opinion monitoring system. In [

49], the experiment verifies the effectiveness and correctness of the proposed technique in alleviating the Oracle problem in a region growth program. The testing method successfully detects all the embedded mutants.

Monitoring. The experiments in [

94] show that a large queue can increase the write speed and that the proposed framework supports a very high throughput in a reasonable amount of time in a cloud monitoring system. The authors also provide comparative tests to show the effectiveness of the framework. In [

54], the comparison experiment shows that the method is reliable and fast, especially with the increase of the data volume, and the speed advantage is obvious.

Fault and failure prediction. In [

108], the authors implemented the proactive failure management system and tested the performance in a production cloud computing environment. Experimental results show that the approach can reach a high true positive rate and a low false positive rate for failure prediction. In [

115], the authors provide emulation-based evaluations for different sets of data traces, and the results show that the new prediction system is accurate and efficient.

6. Threats to Validity

In the design of this study, several threats were encountered. Similarly to all SLR studies, a common threat to validity regards the coverage of all relevant studies. In the following, we discuss the main threats of our study and the ways we mitigated them.

External validity: In the data collection process, most of the data were collected by three researchers; this may have led to incomplete data collection, as some related articles may be missing. Although all authors have reduced the threat by determining unclear questions and discussing them together, this threat still exists. In addition, each researcher may have been biased and inflexible when he extracted the data, so at each stage of the study, we ensured that at least two other reviewers reviewed the work. Another potential threat is the consideration of studies which are only published in English. However, since the English language is the main language used for academic papers, this threat is considered to be minimal.

Internal validity: This SLR may have missed some related novel research papers. To alleviate this threat, we have searched for papers in big data-related journals/conferences/workshops. In total, 83 primary studies are selected by using the SLR. The possible threat is that QA technologies are not clearly shown for the selected primary studies. In addition, we endeavored as much as possible to extract information to analyze each article, which helps to avoid missing important information. This approach can minimize the threats as much as possible.

Construct validity: This concept relates to the validity of obtained data and the research questions. For the systematic literature review, it mainly addresses the selection of the main studies and how they represent the population of the questions. We have taken steps to reduce this threat in several ways. For example, the automatic search was performed on several electronic databases so as to avoid the potential biases.

Reliability: Reliability focuses on ensuring that the results are the same if our review would be conducted again. Different researchers who participated in the survey may be biased toward collecting and analyzing data. To solve this threat, the two researchers simultaneously extracted and analyzed data strictly according to the screening strategy, and further discussed the differences of opinion in order to enhance the objectivity of the research results. Nevertheless, the background and experience of the researchers may have produced some prejudices and introduced a certain degree of subjectivity in some cases. This threat is also related to replicating the same findings, which in turn affects the validity of the conclusion.

7. Conclusions and Future Work

A systematic literature review has been performed on QA technologies for big data applications. We did a large-scale literature search on seven electronic databases and a total of 83 papers were selected as primary studies. Regarding the research questions, we have identified that correctness, performance, availability, scalability and reliability are the focused quality attributes of big data applications; and three implementation-specific technologies, including specification, architectural choice and fault tolerance, and five process-specific technologies, including analysis, verification, testing, monitoring and fault and failure prediction have been studied for improving these quality attributes. For the focal quality attributes, the influencing factors brought by the big data attributes were identified. For the QA technologies, the strengths and limitations were compared and the empirical evidence was provided. These findings play an important role not only in the research but also in the practice of big data applications.

Although researchers have proposed these technologies to ensure quality, the research on big data quality is, however, still in its infancy, and problems regarding quality still exist in big data applications. Based on our discussions, the following topics may be part of future work:

Considering quality attributes with big data properties together to ensure the quality of big data applications.

Understanding and tapping into the limitations, advantages and applicable scenarios of QA technologies.

Researching quantitative models and algorithms to measure the relations among big data properties, data quality attributes and software quality attributes.

Developing mature tools to support QA technologies for big data applications.