Transfer Learning-Based Fault Diagnosis under Data Deficiency

Abstract

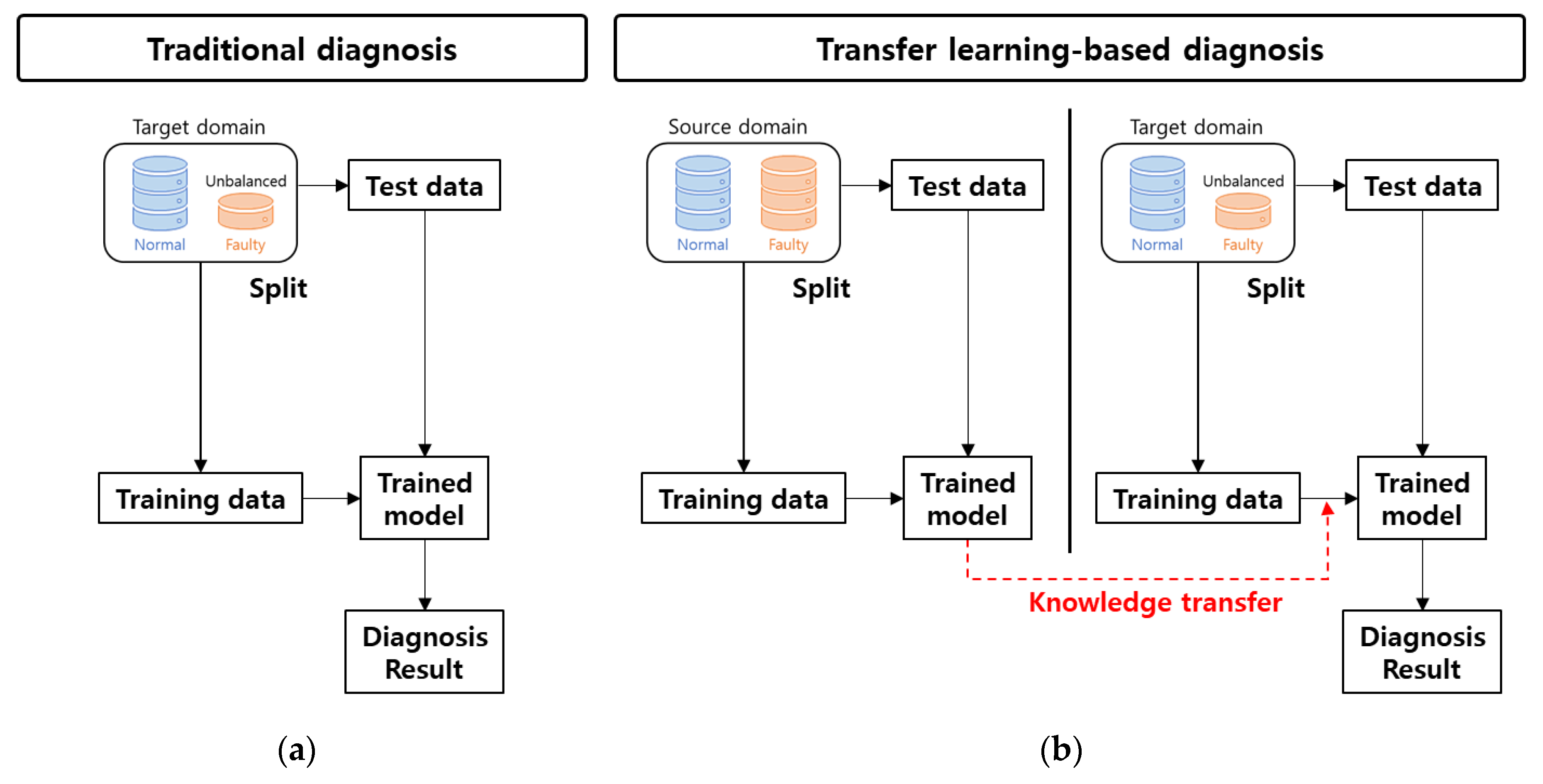

1. Introduction

2. Transfer Learning

3. Application

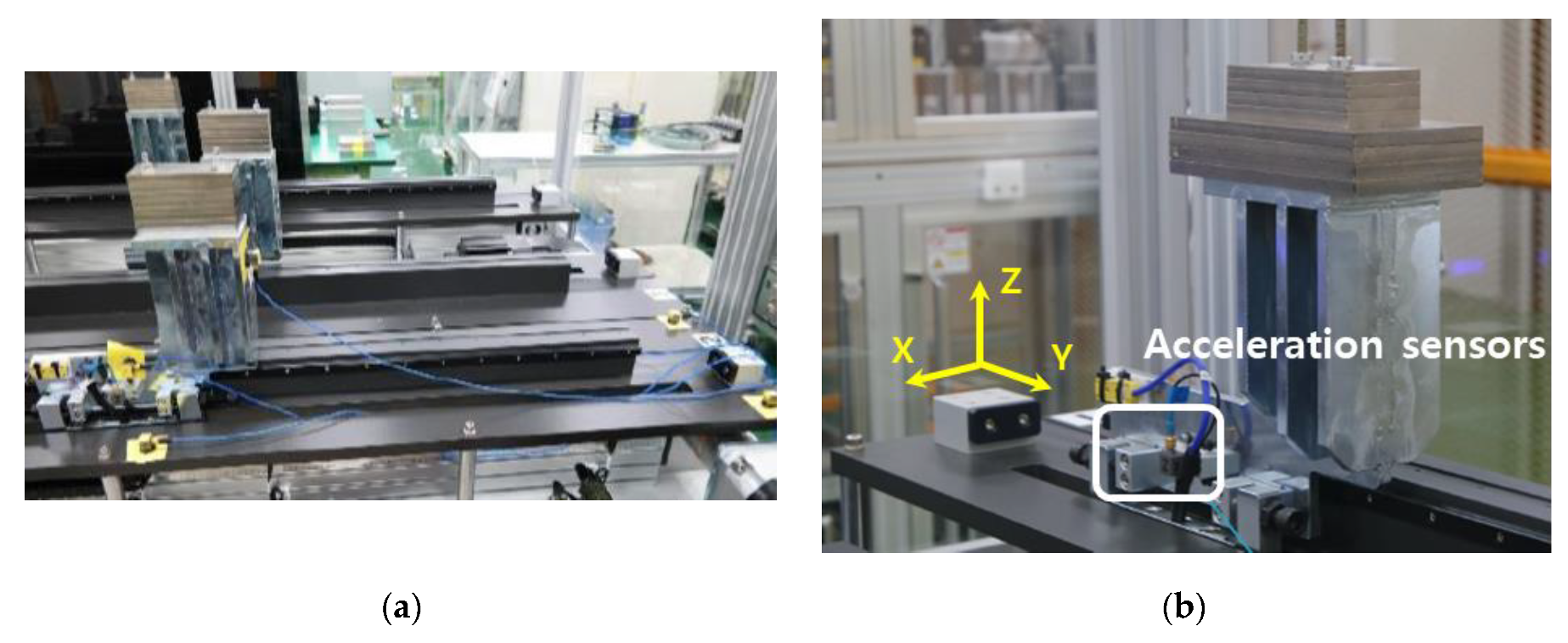

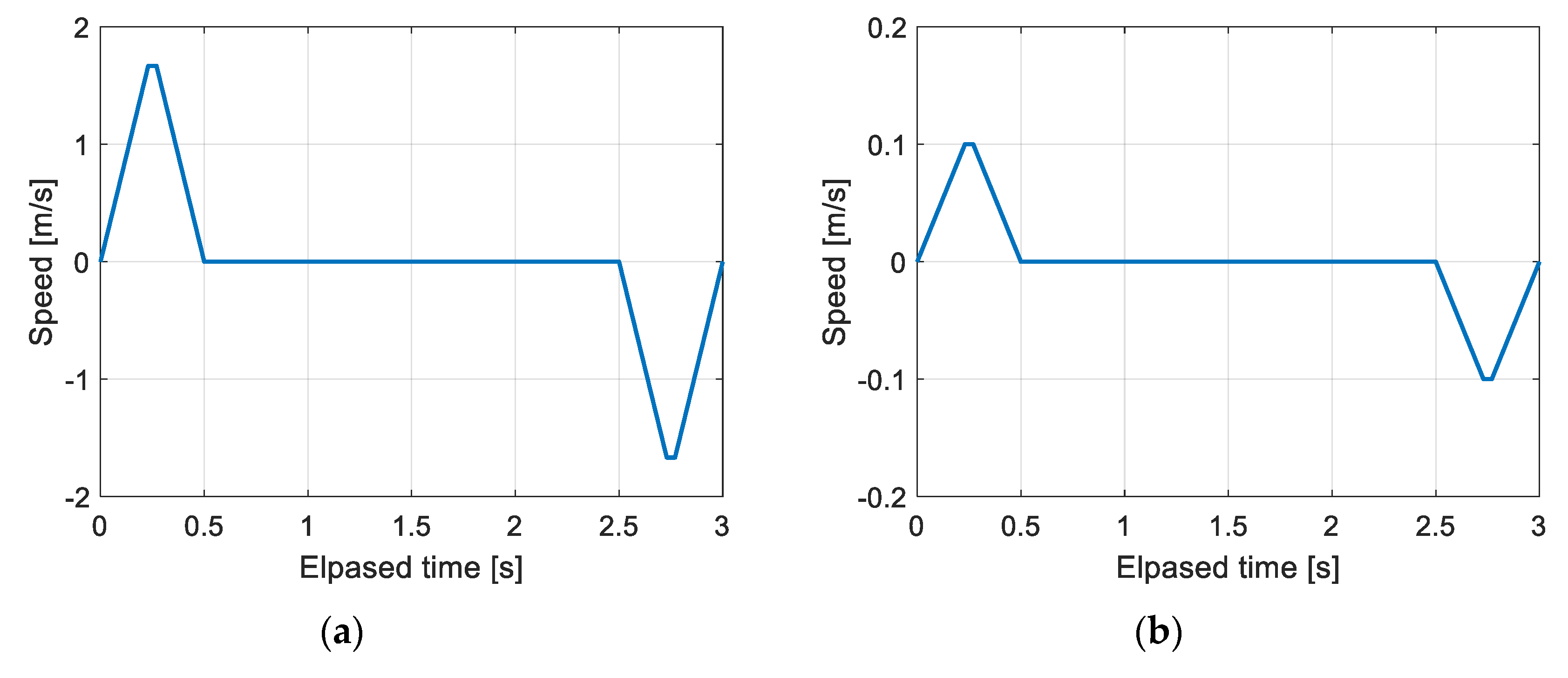

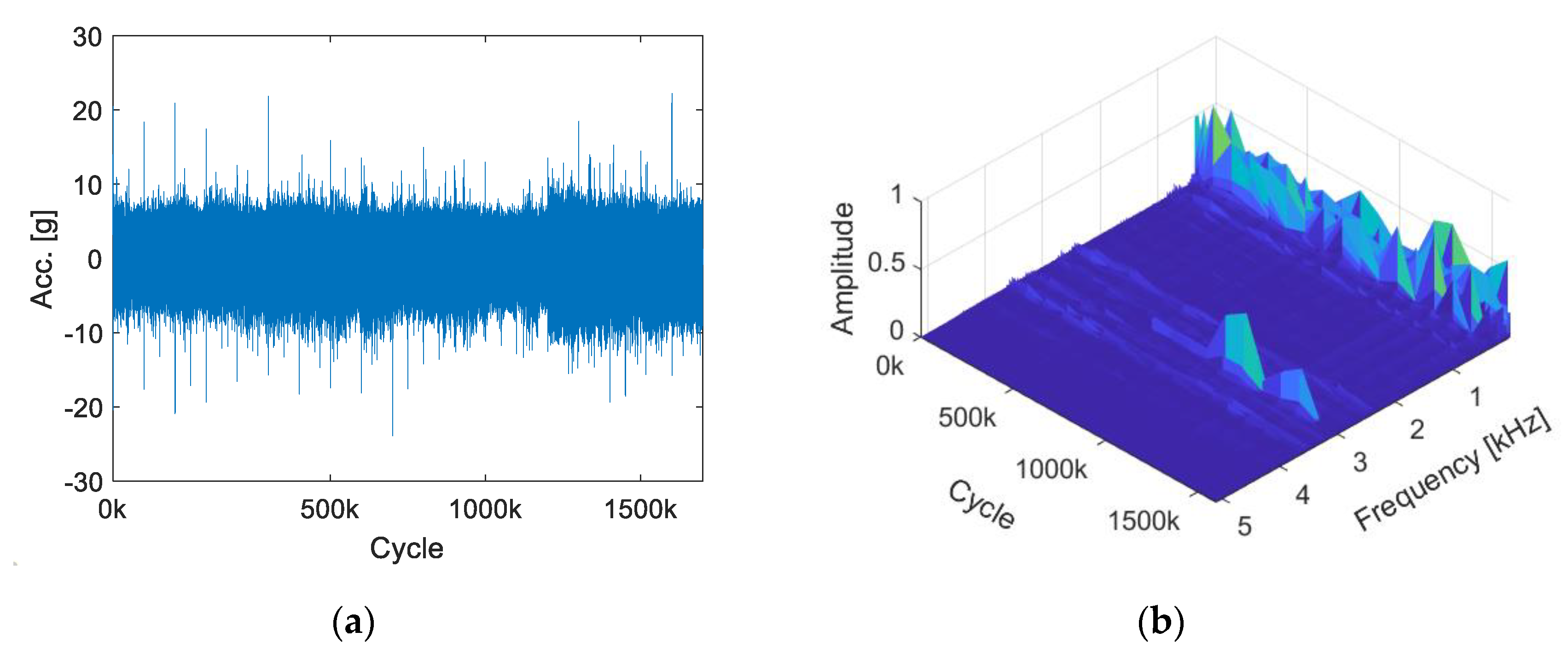

3.1. Linear Motion Guide Dataset

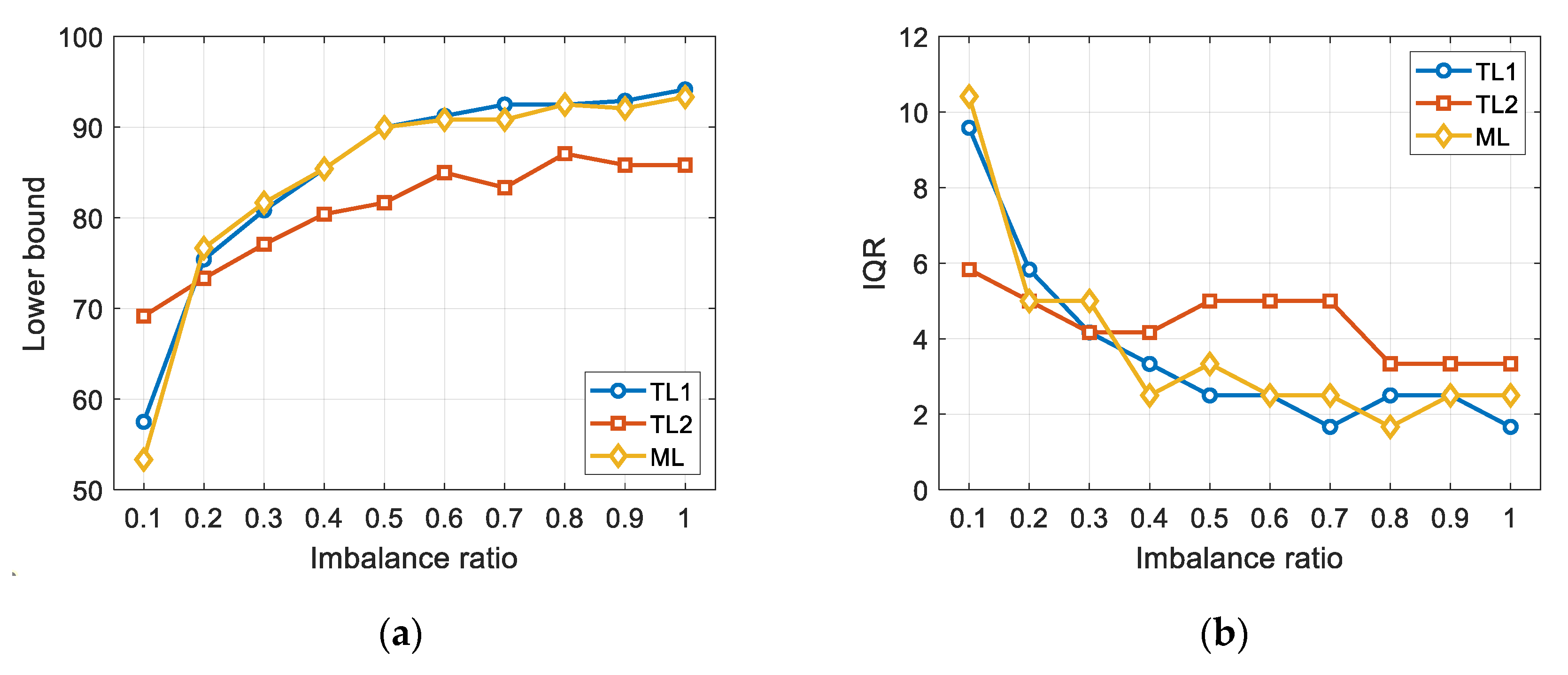

3.2. Training Neural Networks of Source Domain

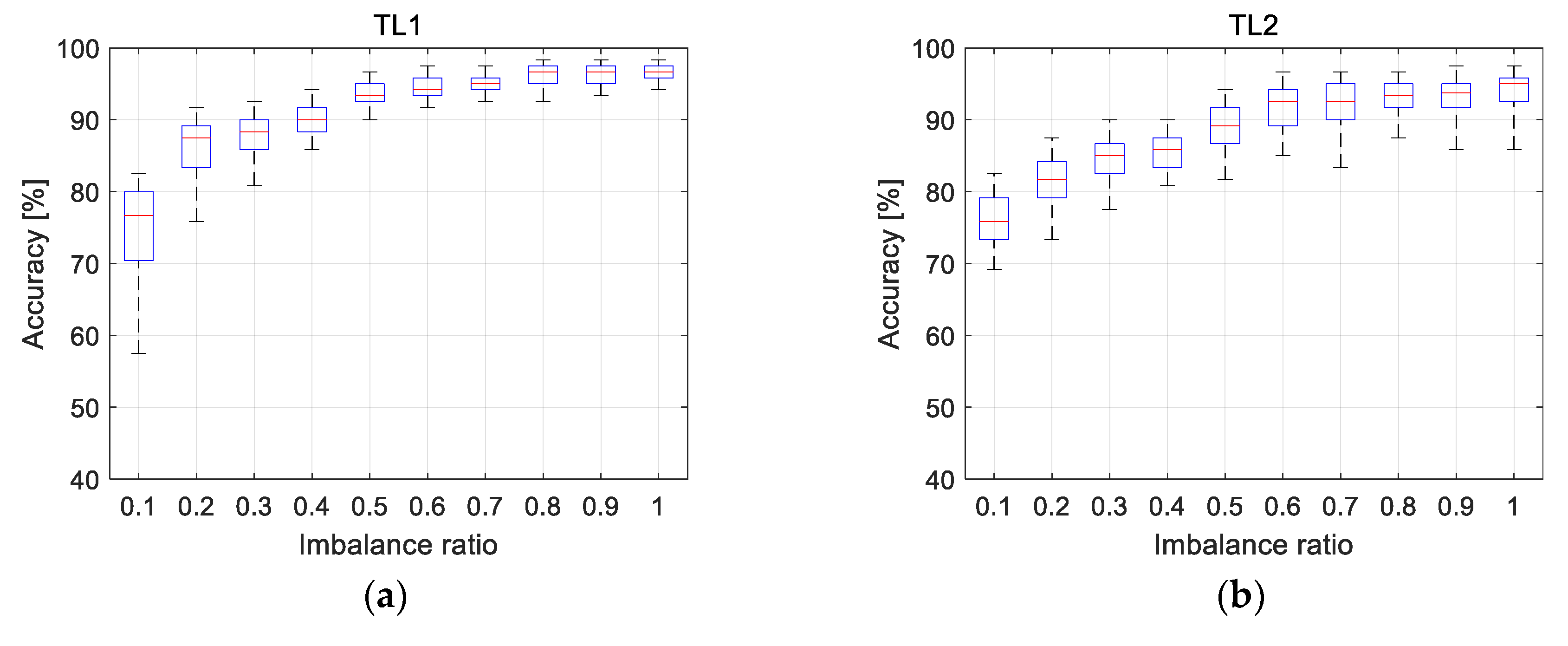

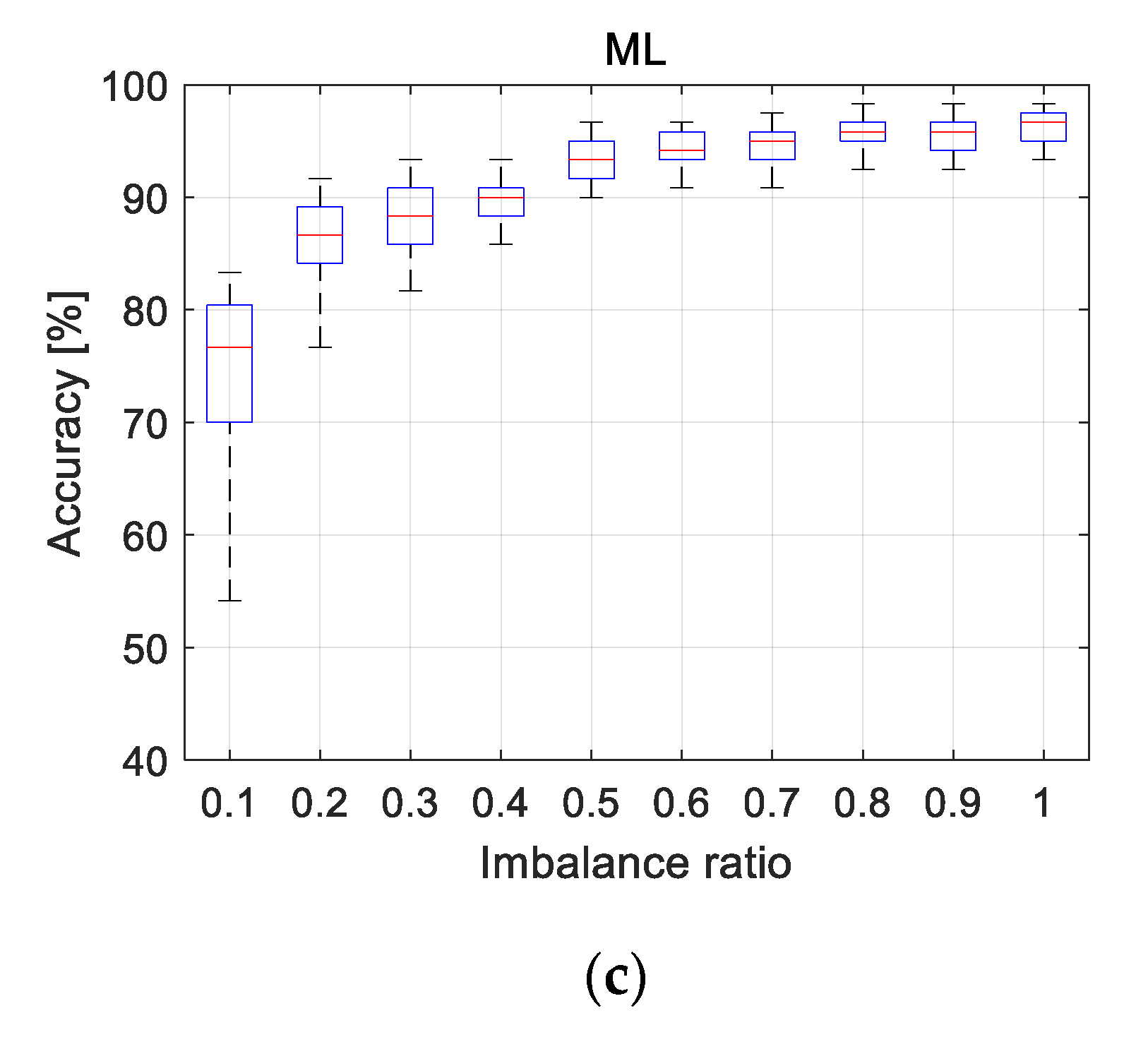

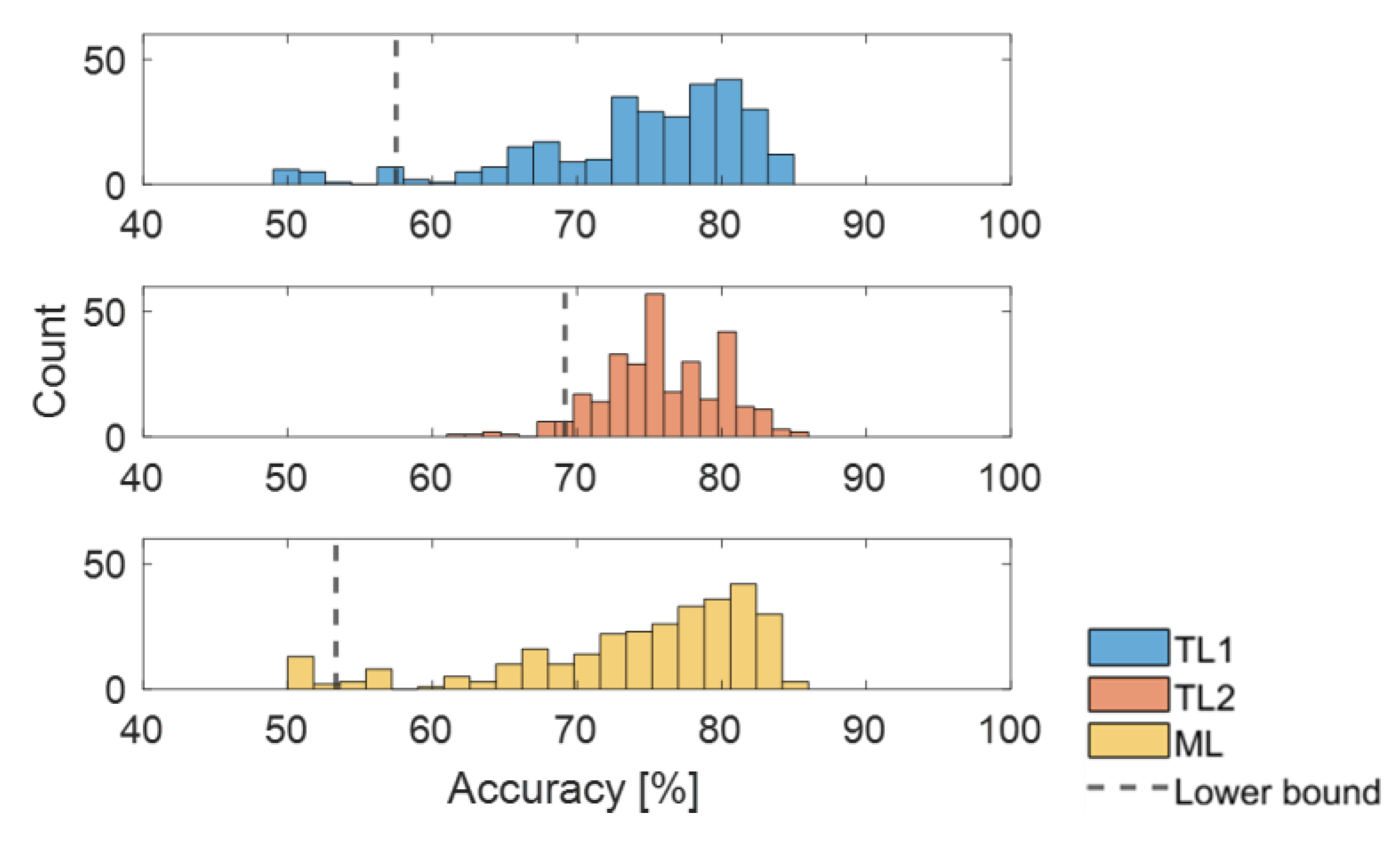

3.3. Training Neural Networks of Target Domain by Transfer Learning

4. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Domain | Purpose | Normal Dataset | Fault Dataset |
|---|---|---|---|
| Source Domain | Training | 152 | 152 |
| Target Domain | Training | 100 | 100 × IR ¹ |
| | Test | 60 | 60 |

| Feature | Formula | Feature | Formula |
|---|---|---|---|
| Mean | | Crest factor | |
| Root amplitude | | Clearance factor | |
| RMS | | Shape factor | |
| Standard deviation | | Impulse factor | |
| Peak | | Peak-to-peak | |
| Skewness | | Root sum of squares | |
| Kurtosis | | | |
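The formula column of the feature table did not survive extraction, so the following is only a minimal sketch of how the thirteen listed time-domain features are commonly computed. The definitions are the conventional textbook ones and are an assumption, not necessarily the authors' exact formulas; in particular, the standard deviation here divides by N rather than N - 1, and the function name `time_domain_features` is hypothetical.

```python
import math


def time_domain_features(x):
    """Compute 13 common time-domain features of a signal.

    Assumes x is a non-empty sequence of floats that is neither constant
    nor all zeros (otherwise some ratios divide by zero).
    Definitions are conventional ones, not taken from the source table.
    """
    n = len(x)
    abs_x = [abs(v) for v in x]

    mean = sum(x) / n
    abs_mean = sum(abs_x) / n
    # Root amplitude: squared mean of the square roots of |x|.
    root_amplitude = (sum(math.sqrt(v) for v in abs_x) / n) ** 2
    rms = math.sqrt(sum(v * v for v in x) / n)
    # Population standard deviation (divides by N); an assumption.
    std = math.sqrt(sum((v - mean) ** 2 for v in x) / n)
    peak = max(abs_x)

    return {
        "mean": mean,
        "root_amplitude": root_amplitude,
        "rms": rms,
        "std": std,
        "peak": peak,
        "skewness": sum((v - mean) ** 3 for v in x) / (n * std ** 3),
        "kurtosis": sum((v - mean) ** 4 for v in x) / (n * std ** 4),
        "crest_factor": peak / rms,
        "clearance_factor": peak / root_amplitude,
        "shape_factor": rms / abs_mean,
        "impulse_factor": peak / abs_mean,
        "peak_to_peak": max(x) - min(x),
        "root_sum_of_squares": math.sqrt(sum(v * v for v in x)),
    }
```

Calling `time_domain_features(segment)` on one signal segment returns a dictionary with one entry per feature, which can then be flattened into an input vector for a classifier.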

| IR | 0.1 | 0.2 | 0.3 | 0.4 | 0.5 | 0.6 | 0.7 | 0.8 | 0.9 | 1.0 |
|---|---|---|---|---|---|---|---|---|---|---|
| Number of nodes in hidden layer | 10 | 18 | 14 | 10 | 16 | 10 | 12 | 16 | 12 | 12 |
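To make the two tables easier to read together, the sketch below pairs each IR value with the target-domain training set size implied by the dataset table (100 normal samples and 100 × IR fault samples) and with the hidden-layer size listed for that IR. It assumes that IR simply scales the number of fault samples, as the "100 × IR" entry suggests, and it makes no claim about which network the hidden-layer sizes belong to. The names `N_NORMAL_TARGET`, `HIDDEN_NODES_BY_IR`, and `target_training_plan` are hypothetical.

```python
# Number of normal training samples in the target domain (dataset table).
N_NORMAL_TARGET = 100

# (IR, hidden-layer nodes) pairs copied from the IR table.
HIDDEN_NODES_BY_IR = [
    (0.1, 10), (0.2, 18), (0.3, 14), (0.4, 10), (0.5, 16),
    (0.6, 10), (0.7, 12), (0.8, 16), (0.9, 12), (1.0, 12),
]


def target_training_plan():
    """List the target-domain training configuration for each IR value.

    Assumes the fault sample count is 100 * IR, rounded to the nearest
    integer to absorb floating-point error.
    """
    plan = []
    for ir, hidden_nodes in HIDDEN_NODES_BY_IR:
        plan.append({
            "ir": ir,
            "n_normal": N_NORMAL_TARGET,
            "n_fault": round(N_NORMAL_TARGET * ir),
            "hidden_nodes": hidden_nodes,
        })
    return plan


if __name__ == "__main__":
    for row in target_training_plan():
        print(row)
```

Under this reading, IR = 1.0 corresponds to a balanced training set, and smaller values leave progressively fewer fault samples to train on.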


© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Cho, S.H.; Kim, S.; Choi, J.-H. Transfer Learning-Based Fault Diagnosis under Data Deficiency. Appl. Sci. 2020, 10, 7768. https://doi.org/10.3390/app10217768
