Intent Detection Problem Solving via Automatic DNN Hyperparameter Optimization

Abstract

1. Introduction

2. Related Work

3. Datasets

4. Methodology

4.1. Formal Description of the Task

4.2. DNN-Based Classifiers

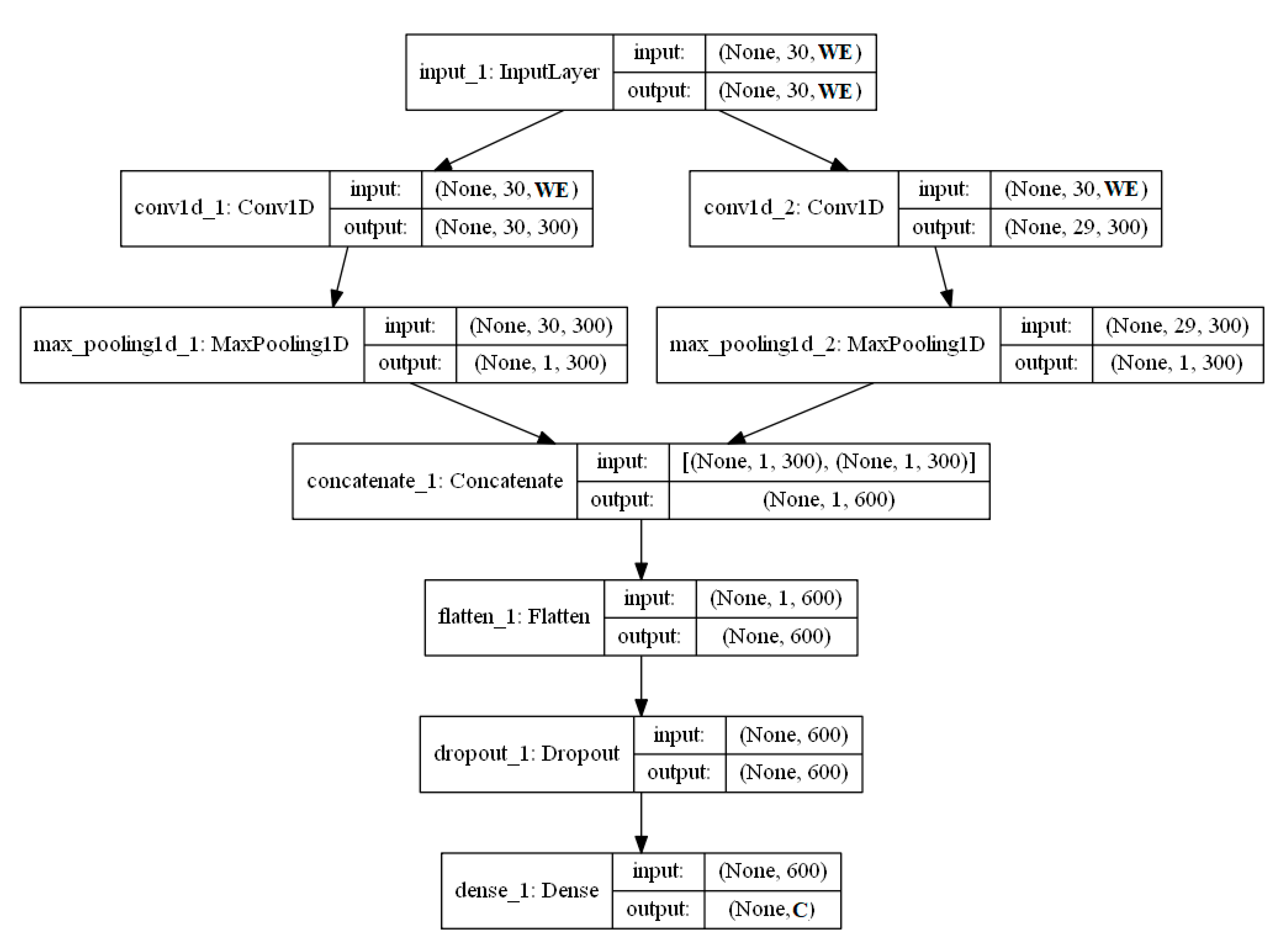

- CNN (convolutional neural network; introduced by LeCun [34]) is a DNN that learns to detect fixed-size local patterns by applying convolutional filters. Text has a one-dimensional structure in which the order of tokens matters, so convolutions are applied as a window sliding over the sequence of tokens. Filters of different widths respond to patterns of different sizes (2, 3, or more adjacent tokens, so-called n-grams), which allows such patterns to be detected and generalized. The main advantage of the CNN method is that, combined with pooling, it learns to detect important patterns regardless of their position in the text. In our experiments, we explored a CNN architecture similar to that of [35].

- The LSTM (long short-term memory) method (presented by Hochreiter and Schmidhuber [36]) is an improved version of the recurrent neural network (RNN). Unlike feed-forward neural networks, RNNs have memory and can therefore be applied effectively to sequential data such as text. In some tasks, what matters is not the presence or absence of particular patterns (as captured by a CNN) but the order of tokens in a sequence. However, RNNs suffer from the vanishing gradient problem and therefore struggle with tasks that require learning long-term dependencies. The LSTM addresses this with a “memory cell” that can retain information over longer periods: integrated gates control which incoming information is stored in the cell, what is passed on to the hidden state, and when information is forgotten. Hence, LSTMs typically outperform simple RNNs on longer sequences.

- The BiLSTM (bidirectional LSTM) method was introduced by Graves and Schmidhuber [37]. Like the LSTM classifier, the BiLSTM suits tasks in which the learning problem is sequential. Whereas an LSTM processes the input only forward and therefore preserves information only from the past, a BiLSTM analyzes the sequence in both directions (forward and backward); thus, each hidden state combines information flowing from the past to the future and from the future to the past.
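To make the position-independence argument for the CNN concrete, the following pure-Python sketch slides a single bigram filter over a sequence of token embeddings, applies ReLU to each window, and keeps the maximum response (global max pooling). The three-dimensional embeddings, the token names, and the hand-set filter weights are hypothetical: in the experiments, the embeddings come from fastText or BERT, the filter weights are learned, and the actual architecture follows [35].

```python
from typing import Dict, List

Vector = List[float]

# Hypothetical 3-dimensional embeddings: two content words and one shared "filler" vector.
EMBEDDINGS: Dict[str, Vector] = {"next": [1.0, 0.0, 0.0], "train": [0.0, 1.0, 0.0]}
FILLER: Vector = [0.0, 0.0, 1.0]


def embed(tokens: List[str]) -> List[Vector]:
    """Map tokens to embeddings; unknown tokens get the filler vector."""
    return [EMBEDDINGS.get(token, FILLER) for token in tokens]


def conv1d_max_pool(embeddings: List[Vector], kernel: List[Vector]) -> float:
    """Slide one filter over every window of len(kernel) adjacent tokens (an n-gram),
    apply ReLU to each response, and keep the maximum (global max pooling).
    Assumes the sequence is at least as long as the filter."""
    width = len(kernel)
    responses = []
    for start in range(len(embeddings) - width + 1):
        window = embeddings[start:start + width]
        # Dot product between the filter weights and the window of token vectors.
        score = sum(k * e for k_vec, e_vec in zip(kernel, window) for k, e in zip(k_vec, e_vec))
        responses.append(max(score, 0.0))  # ReLU
    return max(responses)


# A hypothetical bigram filter (width 2) whose weights match the pattern "next train".
BIGRAM_FILTER: List[Vector] = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]

print(conv1d_max_pool(embed("next train please".split()), BIGRAM_FILTER))      # 2.0
print(conv1d_max_pool(embed("when is the next train".split()), BIGRAM_FILTER))  # 2.0
print(conv1d_max_pool(embed("train next please".split()), BIGRAM_FILTER))      # 1.0
```

The filter responds equally strongly to “next train” at the start or at the end of the sentence, whereas swapping the two tokens weakens the response, so the order of tokens inside the n-gram still matters.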

4.3. Vectorization Types

- FastText embeddings [38], introduced by Facebook’s AI Research lab. In our experiments, we used separate English, Estonian, Latvian, Lithuanian, and Russian fastText embedding models: cc.en.300.vec, cc.et.300.vec, cc.lv.300.vec, cc.lt.300.vec, and cc.ru.300.vec, respectively (the fastText embeddings were downloaded from https://fasttext.cc/docs/en/crawl-vectors.html). These models were trained using the continuous bag-of-words (CBOW) architecture with position weights, 300 dimensions, character n-grams of length 5, a window of size 5, and 10 negatives [39]. Each fastText word embedding is the sum of the embeddings of the character n-grams composing that word (e.g., the 5-gram embeddings <chat, chatb, hatbo, atbot, tbot> compose the word <chatbot>, where < and > mark word boundaries). For this reason, fastText embeddings can be created even for misspelled words, and the resulting vectors tend to be close to those of their correctly spelled equivalents. This is especially beneficial for languages in which diacritics are often omitted in non-normative texts. Although non-normative Estonian, Latvian, Lithuanian, and Russian texts are affected by this missing-diacritics problem, the datasets in this research contain only normative texts.

- BERT (bidirectional encoder representations from transformers) [40], introduced by Google AI. This neural technique, based on bidirectional (masked) language modeling and attention mechanisms, demonstrates state-of-the-art performance on a wide range of NLP tasks, including those relevant to chatbot technology. BERT embeddings help with disambiguation: because a word's vector depends on its context, homonyms receive different vectors in different contexts. In our experiments, we used the BERT service (available online: https://github.com/hanxiao/bert-as-service) with the BERT-Base multilingual cased model (12 layers, 768 hidden units, 12 attention heads), which covers 104 languages, including English, Estonian, Latvian, Lithuanian, and Russian.
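The subword composition described in the fastText item above can be sketched as follows. The sketch assumes, as in [38], that a word is represented by the sum of the vectors of its boundary-marked character n-grams plus the whole-word token; n = 5 matches the models used here. The n-gram vectors are hypothetical pseudo-random stand-ins seeded with `zlib.crc32`, whereas real fastText learns them and hashes n-grams into a fixed number of buckets.

```python
import math
import random
import zlib
from typing import List

DIM = 300  # dimensionality of the cc.*.300 models


def char_ngrams(word: str, n: int = 5) -> List[str]:
    """Return the character n-grams of a word wrapped in the boundary symbols < and >."""
    padded = f"<{word}>"
    return [padded[i:i + n] for i in range(len(padded) - n + 1)]


def unit_vector(unit: str) -> List[float]:
    """Hypothetical stand-in for a learned n-gram embedding: a Gaussian vector
    generated deterministically from the n-gram string."""
    rng = random.Random(zlib.crc32(unit.encode("utf-8")))
    return [rng.gauss(0.0, 1.0) for _ in range(DIM)]


def word_vector(word: str, n: int = 5) -> List[float]:
    """Sum the vectors of the whole-word token and all its character n-grams."""
    total = [0.0] * DIM
    for unit in [f"<{word}>"] + char_ngrams(word, n):
        for i, value in enumerate(unit_vector(unit)):
            total[i] += value
    return total


def cosine(a: List[float], b: List[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


print(char_ngrams("chatbot"))  # ['<chat', 'chatb', 'hatbo', 'atbot', 'tbot>']
print(cosine(word_vector("chatbot"), word_vector("chatbt")))   # misspelling
print(cosine(word_vector("chatbot"), word_vector("printer")))  # unrelated word
```

Because the misspelling “chatbt” shares the n-grams <chat and chatb with “chatbot”, its summed vector is closer to that of “chatbot” than the vector of an unrelated word such as “printer”, which shares no n-grams with it.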

4.4. Hyperparameter Tuning

- Several DNN architectures (shallower and deeper) with different numbers of hidden layers (e.g., series of convolutional layers in the CNN; single-layer or stacked LSTM and BiLSTM versions).

- DNN hyperparameters: number of neurons (100, 200, 300, or 400), dropout rate (any value from the interval [0, 1]), recurrent dropout rate (from [0, 1]), activation function (relu, softmax, tanh, elu, or selu), optimizer (Adam, SGD, RMSprop, Adagrad, Adadelta, Adamax, or Nadam), batch size (32 or 64), and number of epochs (20, 30, 40, or 50).

- rand.suggest performs a random search over the hyperparameter value space for 100 iterations: at each iteration, it samples a combination of values at random, independently of the previously evaluated combinations, and the most accurate combination is kept. Our experimental investigation showed that 100 iterations were enough to find the hyperparameter value set giving the maximum accuracy on the validation dataset.

- tpe.suggest (Tree-structured Parzen Estimator, TPE) [42] performs an iterative Bayesian search (for a schematic representation of TPE, see Figure 1). The TPE search strategy has two phases. During an initial warm-up phase, it randomly explores the hyperparameter value space. Hyperparameter values can be conditional (e.g., whether an additional layer is added to the architecture), sampled from an interval (e.g., the dropout rate), or chosen from a predefined list (e.g., activation functions). Each sampled combination is used to train a model on the training split, and the model is evaluated on the validation split to measure the impact of that combination on accuracy. The warm-up phase lasts for n_init iterations (n_init = 20 in our experiments) and builds a function based on the Bayesian rule presented in Equation (1).
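The two search strategies can be contrasted in a simplified, self-contained sketch over part of the search space listed above (the number of layers and the recurrent dropout rate are omitted). Only the 100 iterations and n_init = 20 come from the text; everything else is an assumption: `toy_loss` is a hypothetical stand-in for (1 − validation accuracy) of a trained classifier, and the quantile gamma = 0.25, the 24 candidates per step, the fixed bandwidth 0.1, and the Laplace smoothing are illustrative choices. This is not hyperopt's implementation (the original TPE [42] uses adaptive Parzen estimators that also include the prior); it only shows the idea of modeling the best trials with l(x), the remaining trials with g(x), and picking the candidate that maximizes l(x)/g(x).

```python
import math
import random
from typing import Callable, Dict, List, Tuple

Params = Dict[str, object]
Trial = Tuple[Params, float]  # (hyperparameter values, loss); lower loss is better

# Discrete part of the search space listed above; the dropout rate is sampled from [0, 1].
SPACE: Dict[str, list] = {
    "neurons": [100, 200, 300, 400],
    "activation": ["relu", "softmax", "tanh", "elu", "selu"],
    "optimizer": ["Adam", "SGD", "RMSprop", "Adagrad", "Adadelta", "Adamax", "Nadam"],
    "batch_size": [32, 64],
    "epochs": [20, 30, 40, 50],
}


def sample_random(rng: random.Random) -> Params:
    """Random-search step: every value is drawn independently of earlier trials."""
    params: Params = {name: rng.choice(values) for name, values in SPACE.items()}
    params["dropout"] = rng.uniform(0.0, 1.0)
    return params


def parzen(x: float, points: List[float], bw: float) -> float:
    """Gaussian Parzen (kernel density) estimate at x; a tiny constant avoids division by zero."""
    norm = len(points) * bw * math.sqrt(2 * math.pi)
    return sum(math.exp(-0.5 * ((x - p) / bw) ** 2) for p in points) / norm + 1e-12


def smoothed_freq(value: object, observed: list, choices: list) -> float:
    """Laplace-smoothed relative frequency of a categorical value."""
    return (observed.count(value) + 1) / (len(observed) + len(choices))


def tpe_suggest(history: List[Trial], rng: random.Random, gamma: float = 0.25,
                n_candidates: int = 24, bw: float = 0.1) -> Params:
    """Simplified TPE step: split past trials into good (lowest-loss gamma fraction) and bad,
    model l(x) and g(x) per hyperparameter, draw candidates from l(x), and return the
    candidate with the largest l(x) / g(x)."""
    ranked = sorted(history, key=lambda trial: trial[1])
    n_good = max(1, math.ceil(gamma * len(ranked)))
    good = [p for p, _ in ranked[:n_good]]
    bad = [p for p, _ in ranked[n_good:]]
    if not bad:
        return sample_random(rng)
    best: Params = {}
    best_ratio = -math.inf
    for _ in range(n_candidates):
        candidate: Params = {}
        ratio = 1.0
        for name, values in SPACE.items():
            good_vals = [p[name] for p in good]
            bad_vals = [p[name] for p in bad]
            weights = [smoothed_freq(v, good_vals, values) for v in values]
            value = rng.choices(values, weights=weights)[0]  # draw from l(x)
            candidate[name] = value
            ratio *= smoothed_freq(value, good_vals, values) / smoothed_freq(value, bad_vals, values)
        good_drop = [float(p["dropout"]) for p in good]
        bad_drop = [float(p["dropout"]) for p in bad]
        # Draw the dropout rate near a randomly chosen good trial, clipped to [0, 1].
        dropout = min(1.0, max(0.0, rng.gauss(rng.choice(good_drop), bw)))
        candidate["dropout"] = dropout
        ratio *= parzen(dropout, good_drop, bw) / parzen(dropout, bad_drop, bw)
        if ratio > best_ratio:
            best, best_ratio = candidate, ratio
    return best


def optimize(objective: Callable[[Params], float], n_iter: int, seed: int,
             use_tpe: bool, n_init: int = 20) -> Trial:
    """Random search (use_tpe=False) or n_init random warm-up trials followed by TPE steps."""
    rng = random.Random(seed)
    history: List[Trial] = []
    for i in range(n_iter):
        params = tpe_suggest(history, rng) if use_tpe and i >= n_init else sample_random(rng)
        history.append((params, objective(params)))
    return min(history, key=lambda trial: trial[1])


def toy_loss(p: Params) -> float:
    """Hypothetical stand-in for (1 - validation accuracy) of a trained DNN."""
    loss = (float(p["dropout"]) - 0.4) ** 2
    loss += 0.0 if p["optimizer"] == "Adam" else 0.1
    loss += 0.0 if p["batch_size"] == 64 else 0.05
    return loss


best_rand = optimize(toy_loss, n_iter=100, seed=0, use_tpe=False)
best_tpe = optimize(toy_loss, n_iter=100, seed=0, use_tpe=True)
print("rand:", best_rand)
print("tpe:", best_tpe)
```

In a real run, each call to the objective trains and validates a full CNN, LSTM, or BiLSTM, which is why the number of iterations, not the cost of proposing candidates, dominates the search time.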

5. Experiments and Results

6. Discussion

7. Conclusions

Author Contributions

Funding

Conflicts of Interest

Appendix A

| Language + Dataset | Word Embedding Type + Classifier | Hyperparameter Values |
|---|---|---|
| EN + chatbot | fastText + BiLSTM (Figure 4) | Activation function (activation_1): elu; Activation function (activation_2): relu; Activation function (activation_3): selu; Activation function in Dense (dense_3): softmax; Batch size: 64; Epochs: 40; Optimizer: Adamax |
| | BERT + CNN (Figure 3) | Activation function in Conv1D: softmax; Activation function in Dense: softmax; Batch size: 64; Epochs: 40; Optimizer: Adamax; Dropout rate: 0.437 |
| EN + askUbuntu | BERT + CNN (Figure 3) | Activation function in Conv1D: softmax; Activation function in Dense: softmax; Batch size: 64; Epochs: 40; Optimizer: Adam; Dropout rate: 0.437 |
| EN + webapps | fastText + CNN (Figure 3) | Activation function in Conv1D: tanh; Activation function in Dense: softmax; Batch size: 64; Epochs: 40; Optimizer: Adagrad; Dropout rate: 0.089 |
| | fastText + BiLSTM (Figure 4) | Activation function (activation_1): tanh; Activation function (activation_2): relu; Activation function (activation_3): tanh; Activation function in Dense (dense_3): softmax; Batch size: 64; Epochs: 20; Optimizer: Adagrad |
| ET + chatbot, ET + askUbuntu, ET + webapps | BERT + CNN (Figure 3) | Activation function in Conv1D: softmax; Activation function in Dense: softmax; Batch size: 64; Epochs: 40; Optimizer: Adam; Dropout rate: 0.437 |
| LV + chatbot | BERT + CNN (Figure 3) | Activation function in Conv1D: softmax; Activation function in Dense: softmax; Batch size: 64; Epochs: 40; Optimizer: Adam; Dropout rate: 0.437 |
| | BERT + LSTM (Figure 5) | Activation function (activation_1): tanh; Activation function (activation_2): relu; Activation function in Dense (dense_2): softmax; Batch size: 64; Epochs: 20; Optimizer: Adagrad |
| LV + askUbuntu | BERT + CNN (Figure 3) | Activation function in Conv1D: softmax; Activation function in Dense: softmax; Batch size: 64; Epochs: 40; Optimizer: Adam; Dropout rate: 0.437 |
| LV + webapps | BERT + CNN (Figure 3) | Activation function in Conv1D: relu; Activation function in Dense: softmax; Batch size: 32; Epochs: 30; Optimizer: Adam; Dropout rate: 0.276 |
| LT + chatbot | fastText + BiLSTM (Figure 6) | Activation function (activation): elu; Activation function in Dense (dense): softmax; Batch size: 64; Epochs: 40; Optimizer: Adamax |
| | BERT + CNN (Figure 3) | Activation function in Conv1D: softmax; Activation function in Dense: softmax; Batch size: 64; Epochs: 40; Optimizer: Adam; Dropout rate: 0.437 |
| LT + askUbuntu | BERT + CNN (Figure 3) | Activation function in Conv1D: selu; Activation function in Dense: elu; Batch size: 64; Epochs: 40; Optimizer: Adagrad; Dropout rate: 0.734 |
| LT + webapps | BERT + CNN (Figure 3) | Activation function in Conv1D: softmax; Activation function in Dense: softmax; Batch size: 64; Epochs: 40; Optimizer: Adam; Dropout rate: 0.437 |
| RU + chatbot, RU + askUbuntu | BERT + CNN (Figure 3) | Activation function in Conv1D: softmax; Activation function in Dense: softmax; Batch size: 64; Epochs: 40; Optimizer: Adam; Dropout rate: 0.437 |
| RU + webapps | BERT + CNN (Figure 3) | Activation function in Conv1D: selu; Activation function in Dense: softmax; Batch size: 32; Epochs: 30; Optimizer: Nadam; Dropout rate: 0.148 |

References

- Adamopoulou, E.; Moussiades, L. An Overview of Chatbot Technology. In Artificial Intelligence Applications and Innovations, IFIP Advances in Information and Communication Technology, Proceedings of the 16th IFIP WG 12.5 International Conference, AIAI 2020, Neos Marmaras, Greece, 5–7 June 2020; Maglogiannis, I., Iliadis, L., Pimenidis, E., Eds.; Springer: Cham, Switzerland; Volume 584.

- Battineni, G.; Chintalapudi, N.; Amenta, F. AI Chatbot Design during an Epidemic like the Novel Coronavirus. Healthcare 2020, 8, 154.

- Maniou, T.A.; Veglis, A. Employing a Chatbot for News Dissemination during Crisis: Design, Implementation and Evaluation. Future Internet 2020, 12, 109.

- Villegas-Ch, W.; Arias-Navarrete, A.; Palacios-Pacheco, X. Proposal of an Architecture for the Integration of a Chatbot with Artificial Intelligence in a Smart Campus for the Improvement of Learning. Sustainability 2020, 12, 1500.

- Fonte, F.A.; Carlos, J.; Rial, B.; Nistal, M.L. TQ-Bot: An AIML-based tutor and evaluator bot. J. Univ. Comput. Sci. 2009, 15, 1486–1495.

- Van Der Goot, R.; Van Noord, G. MoNoise: Modeling Noise Using a Modular Normalization System. Comput. Linguist. Neth. J. 2017, 7, 129–144.

- Shawar, B.A.; Atwell, E. Machine Learning from dialogue corpora to generate chatbots. Expert Update J. 2003, 6, 25–29.

- Xu, P.; Sarikaya, R. Convolutional neural network based triangular CRF for joint intent detection and slot filling. In 2013 IEEE Workshop on Automatic Speech Recognition and Understanding; Institute of Electrical and Electronics Engineers (IEEE): New York, NY, USA, 2013; pp. 78–83.

- Yao, K.; Peng, B.; Zhang, Y.; Yu, D.; Zweig, G.; Shi, Y. Spoken language understanding using long short-term memory neural networks. In 2014 IEEE Spoken Language Technology Workshop (SLT); Institute of Electrical and Electronics Engineers (IEEE): New York, NY, USA, 2014; pp. 189–194.

- Serban, I.V.; Sordoni, A.; Bengio, Y.; Courville, A.C.; Pineau, J. Building End-To-End Dialogue Systems Using Generative Hierarchical Neural Network Models. In Proceedings of the Thirtieth AAAI Conference on Artificial Intelligence AAAI, Phoenix, AZ, USA, 12–17 February 2016; Volume 16, pp. 3776–3784.

- Shang, L.; Lu, Z.; Li, H. Neural responding machine for short-text conversation. In Proceedings of the 53rd Annual Meeting of the Association for Computational Linguistics and the 7th International Joint Conference on Natural Language Processing, Beijing, China, 26–31 July 2015; Volume 1, pp. 1577–1586.

- Wen, T.H.; Vandyke, D.; Mrkšić, N.; Gašić, M.; Rojas-Barahona, L.M.; Su, P.H.; Ultes, S.; Young, S. A network-based end-to-end trainable task-oriented dialogue system. In Proceedings of the 15th Conference of the European Chapter of the Association for Computational Linguistics, EACL 2017-Proceedings of Conference, Valencia, Spain, 3–7 April 2017; Volume 1, pp. 438–449.

- Yang, X.; Chen, Y.-N.; Hakkani-Tür, D.; Crook, P.; Li, X.; Gao, J.; Deng, L. End-to-End Joint Learning of Natural Language Understanding and Dialogue Manager. In Proceedings of the 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), New Orleans, LA, USA, 5–9 March 2017; pp. 5690–5694.

- Kalchbrenner, N.; Blunsom, P. Recurrent Convolutional Neural Networks for Discourse Compositionality. In Proceedings of the Workshop on Continuous Vector Space Models and their Compositionality, Sofia, Bulgaria, 9 August 2013; pp. 119–126.

- Liu, C.; Xu, P.; Sarikaya, R. Deep contextual language understanding in spoken dialogue systems. In Proceedings of the Sixteenth Annual Conference of the International Speech Communication Association (INTERSPEECH), Dresden, Germany, 6–10 September 2015.

- Lowe, R.; Pow, N.; Serban, I.V.; Pineau, J. The Ubuntu Dialogue Corpus: A Large Dataset for Research in Unstructured Multi-Turn Dialogue Systems. In Proceedings of the 16th Annual Meeting of the Special Interest Group on Discourse and Dialogue, Prague, Czech Republic, 2–4 September 2015; pp. 285–294.

- Wen, T.-H.; Gasic, M.; Mrkšić, N.; Su, P.-H.; Vandyke, D.; Young, S. Semantically Conditioned LSTM-based Natural Language Generation for Spoken Dialogue Systems. In Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing, Lisbon, Portugal, 17–21 September 2015; pp. 1711–1721.

- Weizenbaum, J. ELIZA–A computer program for the study of natural language communication between man and machine. Commun. ACM 1966, 9, 36–45.

- Vinyals, O.; Le, Q. A Neural Conversational Model. arXiv 2015, arXiv:1506.05869.

- Kim, J.; Lee, H.-G.; Kim, H.; Lee, Y.; Kim, Y.-G. Two-Step Training and Mixed Encoding-Decoding for Implementing a Generative Chatbot with a Small Dialogue Corpus. In Proceedings of the Workshop on Intelligent Interactive Systems and Language Generation (2IS&NLG), Tilburg, The Netherlands, 5 November 2018; pp. 31–35.

- Kapočiūtė-Dzikienė, J. A Domain-Specific Generative Chatbot Trained from Little Data. Appl. Sci. 2020, 10, 2221.

- Kim, J.; Oh, S.; Kwon, O.-W.; Kim, H. Multi-Turn Chatbot Based on Query-Context Attentions and Dual Wasserstein Generative Adversarial Networks. Appl. Sci. 2019, 9, 3908.

- Zhang, W.N.; Zhu, Q.; Wang, Y.; Zhao, Y.; Liu, T. Neural Personalized Response Generation as Domain Adaptation. World Wide Web 2019, 22, 1427–1446.

- Sebastiani, F. Machine Learning in Automated Text Categorization. ACM Comput. Surv. 2002, 34, 1–47.

- Liu, J.; Li, Y.; Lin, M. Review of Intent Detection Methods in the Human-Machine Dialogue System. J. Phys. Conf. Ser. 2019, 1267.

- Akulick, S.; Mahmoud, E.S. Intent Detection through Text Mining and Analysis. In Proceedings of the Future Technologies Conference (FTC), Vancouver, BC, Canada, 29 November 2017.

- Gridach, M.; Haddad, H.; Mulki, H. Churn identification in microblogs using convolutional neural networks with structured logical knowledge. In Proceedings of the 3rd Workshop on Noisy User-generated Text, Copenhagen, Denmark, 7 September 2017; pp. 21–30.

- Abbet, C.; M’hamdi, M.; Giannakopoulos, A.; West, R.; Hossmann, A.; Baeriswyl, M.; Musat, C. Churn Intent Detection in Multilingual Chatbot Conversations and Social Media. In Proceedings of the 22nd Conference on Computational Natural Language Learning, Brussels, Belgium, 31 October–1 November 2018; pp. 161–170.

- Balodis, K.; Deksne, D. FastText-Based Intent Detection for Inflected Languages. Information 2019, 10, 161.

- Xia, C.; Zhang, C.; Yan, X.; Chang, Y.; Yu, P. Zero-shot User Intent Detection via Capsule Neural Networks. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, Brussels, Belgium, 31 October–4 November 2018; pp. 3090–3099.

- Zhou, X.; Li, L.; Dong, D.; Liu, Y.; Chen, Y.; Zhao, W.X.; Yu, D.; Wu, H. Multi-Turn Response Selection for Chatbots with Deep Attention Matching Network. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics, Melbourne, Australia, 15–20 July 2018; pp. 1118–1127.

- Kapočiūtė-Dzikienė, J. Intent Detection-Based Lithuanian Chatbot Created via Automatic DNN Hyper-Parameter Optimization. In Frontiers in Artificial Intelligence and Applications, Volume 328: Human Language Technologies–The Baltic Perspective; IOS Press: Amsterdam, The Netherlands, 2020; pp. 95–102.

- Braun, D.; Hernandez, M.A.; Matthes, F.; Langen, M. Evaluating Natural Language Understanding Services for Conversational Question Answering Systems. In Proceedings of the 18th Annual SIGdial Meeting on Discourse and Dialogue, Saarbrücken, Germany, 15–17 August 2017; pp. 174–185.

- LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-Based Learning Applied to Document Recognition. Proc. IEEE 1998, 86, 2278–2324.

- Kim, Y. Convolutional Neural Networks for Sentence Classification. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), Doha, Qatar, 25–29 October 2014; pp. 1746–1751.

- Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780.

- Graves, A.; Schmidhuber, J. Framewise phoneme classification with bidirectional LSTM and other neural network architectures. Neural Netw. 2005, 18, 602–610.

- Bojanowski, P.; Grave, E.; Joulin, A.; Mikolov, T. Enriching Word Vectors with Subword Information. Trans. Assoc. Comput. Linguist. 2017, 5, 135–146.

- Grave, E.; Bojanowski, P.; Gupta, P.; Joulin, A.; Mikolov, T. Learning Word Vectors for 157 Languages. In Proceedings of the International Conference on Language Resources and Evaluation (LREC 2018), Miyazaki, Japan, 7–12 May 2018.

- Devlin, J.; Chang, M.-W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the NAACL-HLT 2019, Minneapolis, MN, USA, 2–7 June 2019; pp. 4171–4186.

- Baioletti, M.; Di Bari, G.; Milani, A.; Poggioni, V. Differential Evolution for Neural Networks Optimization. Mathematics 2020, 8, 69.

- Bergstra, J.; Bardenet, R.; Bengio, Y.; Kégl, B. Algorithms for Hyper-Parameter Optimization. In Proceedings of the 24th International Conference on Neural Information Processing Systems, Granada, Spain, December 2011; pp. 2546–2554.

- McNemar, Q.M. Note on the Sampling Error of the Difference Between Correlated Proportions or Percentages. Psychometrika 1947, 12, 153–157.

| Intent | No. of Instances | No. of Words (EN) | No. of Words (ET) | No. of Words (LT) | No. of Words (LV) | No. of Words (RU) |
|---|---|---|---|---|---|---|
| Training dataset | | | | | | |
| FindConnection | 57 | 510 (115) | 393 (186) | 460 (137) | 449 (145) | 482 (123) |
| DepartureTime | 43 | 328 (62) | 223 (74) | 255 (59) | 245 (64) | 250 (64) |
| Testing dataset | | | | | | |
| FindConnection | 71 | 508 (99) | 369 (154) | 484 (113) | 449 (120) | 483 (104) |
| DepartureTime | 35 | 241 (48) | 168 (53) | 201 (49) | 193 (55) | 192 (48) |

| Intent | No. of Instances | No. of Words (EN) | No. of Words (ET) | No. of Words (LT) | No. of Words (LV) | No. of Words (RU) |
|---|---|---|---|---|---|---|
| Training dataset | | | | | | |
| Make Update | 10 | 77 (42) | 59 (39) | 73 (40) | 69 (42) | 74 (40) |
| None | 3 | 17 (16) | 13 (12) | 13 (12) | 13 (12) | 15 (14) |
| Setup Printer | 10 | 109 (67) | 77 (57) | 85 (65) | 83 (60) | 93 (62) |
| Shutdown Computer | 13 | 96 (61) | 67 (48) | 78 (61) | 74 (53) | 87 (65) |
| Software Recommendation | 17 | 113 (77) | 90 (66) | 104 (85) | 98 (78) | 108 (80) |
| Testing dataset | | | | | | |
| Make Update | 37 | 305 (116) | 243 (139) | 288 (117) | 283 (120) | 293 (116) |
| None | 5 | 46 (39) | 34 (28) | 44 (37) | 38 (33) | 43 (39) |
| Setup Printer | 13 | 99 (54) | 77 (55) | 78 (52) | 81 (51) | 85 (50) |
| Shutdown Computer | 14 | 103 (64) | 75 (63) | 73 (61) | 81 (67) | 93 (77) |
| Software Recommendation | 40 | 322 (197) | 259 (174) | 296 (206) | 278 (201) | 303 (204) |

| Intent | No. of Instances | No. of Words (EN) | No. of Words (ET) | No. of Words (LT) | No. of Words (LV) | No. of Words (RU) |
|---|---|---|---|---|---|---|
| Training dataset | | | | | | |
| Change Password | 2 | 19 (15) | 16 (15) | 15 (13) | 15 (12) | 18 (15) |
| Delete Account | 7 | 50 (20) | 36 (19) | 40 (19) | 38 (17) | 42 (21) |
| Download Video | 1 | 7 (7) | 5 (5) | 5 (5) | 4 (4) | 5 (5) |
| Export Data | 2 | 16 (13) | 13 (12) | 17 (15) | 13 (11) | 19 (16) |
| Filter Spam | 6 | 43 (36) | 38 (37) | 43 (39) | 42 (38) | 42 (37) |
| Find Alternative | 7 | 40 (33) | 35 (30) | 34 (29) | 35 (30) | 38 (32) |
| None | 2 | 12 (12) | 11 (11) | 11 (11) | 17 (17) | 14 (14) |
| Sync Accounts | 3 | 29 (22) | 23 (21) | 26 (24) | 26 (21) | 26 (22) |
| Testing dataset | | | | | | |
| Change Password | 6 | 50 (37) | 42 (34) | 42 (31) | 42 (33) | 46 (37) |
| Delete Account | 10 | 75 (36) | 56 (36) | 65 (35) | 63 (36) | 70 (36) |
| Export Data | 3 | 35 (29) | 23 (23) | 32 (28) | 28 (26) | 30 (26) |
| Filter Spam | 14 | 141 (83) | 98 (76) | 123 (86) | 129 (92) | 134 (88) |
| Find Alternative | 16 | 104 (67) | 99 (69) | 94 (71) | 87 (63) | 89 (67) |
| None | 4 | 35 (33) | 26 (26) | 32 (32) | 31 (31) | 34 (32) |
| Sync Accounts | 6 | 61 (45) | 49 (40) | 53 (46) | 50 (39) | 58 (45) |

| Dataset | Random Baseline | Majority Baseline |
|---|---|---|
| chatbot | 0.558 | 0.670 |
| askUbuntu | 0.283 | 0.367 |
| webapps | 0.186 | 0.271 |
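The text does not state how the two baselines were computed. Assuming the random baseline is the expected accuracy of guessing each class with its testing-set probability, Σ_c p_c², and the majority baseline is the share of the most frequent testing-set class, max_c p_c, the following sketch reproduces all six values in the table above from the testing-dataset instance counts of the three dataset tables.

```python
from typing import Dict, Tuple


def baselines(class_counts: Dict[str, int]) -> Tuple[float, float]:
    """Return (random, majority) baseline accuracies from testing-set class counts.

    random:   expected accuracy when guessing each class with its testing-set
              probability p_c, i.e. sum over classes of p_c ** 2 (an assumption)
    majority: accuracy of always predicting the most frequent class, i.e. max p_c
    """
    total = sum(class_counts.values())
    probs = [count / total for count in class_counts.values()]
    return sum(p * p for p in probs), max(probs)


# Testing-dataset instance counts copied from the dataset tables.
TEST_COUNTS: Dict[str, Dict[str, int]] = {
    "chatbot": {"FindConnection": 71, "DepartureTime": 35},
    "askUbuntu": {"Make Update": 37, "None": 5, "Setup Printer": 13,
                  "Shutdown Computer": 14, "Software Recommendation": 40},
    "webapps": {"Change Password": 6, "Delete Account": 10, "Export Data": 3,
                "Filter Spam": 14, "Find Alternative": 16, "None": 4, "Sync Accounts": 6},
}

for name, counts in TEST_COUNTS.items():
    rnd, maj = baselines(counts)
    print(f"{name}: random={rnd:.3f} majority={maj:.3f}")
# chatbot: random=0.558 majority=0.670
# askUbuntu: random=0.283 majority=0.367
# webapps: random=0.186 majority=0.271
```

Under these assumptions, the majority baselines correspond to FindConnection (71 of 106 testing instances), Software Recommendation (40 of 109), and Find Alternative (16 of 59).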

| fastText embeddings | chatbot: CNN | chatbot: LSTM | chatbot: BiLSTM | askUbuntu: CNN | askUbuntu: LSTM | askUbuntu: BiLSTM | webapps: CNN | webapps: LSTM | webapps: BiLSTM |
|---|---|---|---|---|---|---|---|---|---|
| tpe.suggest | 0.981 | 0.981 | 0.991 | 0.725 | 0.642 | 0.826 | 0.373 | 0.424 | 0.661 |
| rand.suggest | 0.972 | 0.953 | 0.981 | 0.798 | 0.817 | 0.872 | 0.712 | 0.237 | 0.712 |

| BERT embeddings | chatbot: CNN | chatbot: LSTM | chatbot: BiLSTM | askUbuntu: CNN | askUbuntu: LSTM | askUbuntu: BiLSTM | webapps: CNN | webapps: LSTM | webapps: BiLSTM |
|---|---|---|---|---|---|---|---|---|---|
| tpe.suggest | 0.991 | 0.962 | 0.943 | 0.853 | 0.826 | 0.817 | 0.508 | 0.542 | 0.559 |
| rand.suggest | 0.981 | 0.953 | 0.915 | 0.890 | 0.853 | 0.844 | 0.678 | 0.458 | 0.661 |

| fastText embeddings | chatbot: CNN | chatbot: LSTM | chatbot: BiLSTM | askUbuntu: CNN | askUbuntu: LSTM | askUbuntu: BiLSTM | webapps: CNN | webapps: LSTM | webapps: BiLSTM |
|---|---|---|---|---|---|---|---|---|---|
| tpe.suggest | 0.943 | 0.943 | 0.953 | 0.780 | 0.706 | 0.633 | 0.508 | 0.475 | 0.542 |
| rand.suggest | 0.953 | 0.943 | 0.943 | 0.771 | 0.670 | 0.771 | 0.627 | 0.356 | 0.508 |

| BERT embeddings | chatbot: CNN | chatbot: LSTM | chatbot: BiLSTM | askUbuntu: CNN | askUbuntu: LSTM | askUbuntu: BiLSTM | webapps: CNN | webapps: LSTM | webapps: BiLSTM |
|---|---|---|---|---|---|---|---|---|---|
| tpe.suggest | 0.953 | 0.943 | 0.943 | 0.872 | 0.752 | 0.761 | 0.644 | 0.407 | 0.508 |
| rand.suggest | 0.972 | 0.934 | 0.943 | 0.890 | 0.706 | 0.780 | 0.254 | 0.525 | 0.492 |

| fastText embeddings | chatbot: CNN | chatbot: LSTM | chatbot: BiLSTM | askUbuntu: CNN | askUbuntu: LSTM | askUbuntu: BiLSTM | webapps: CNN | webapps: LSTM | webapps: BiLSTM |
|---|---|---|---|---|---|---|---|---|---|
| tpe.suggest | 0.962 | 0.934 | 0.943 | 0.761 | 0.706 | 0.798 | 0.559 | 0.475 | 0.593 |
| rand.suggest | 0.330 | 0.896 | 0.953 | 0.771 | 0.697 | 0.826 | 0.542 | 0.322 | 0.559 |

| BERT embeddings | chatbot: CNN | chatbot: LSTM | chatbot: BiLSTM | askUbuntu: CNN | askUbuntu: LSTM | askUbuntu: BiLSTM | webapps: CNN | webapps: LSTM | webapps: BiLSTM |
|---|---|---|---|---|---|---|---|---|---|
| tpe.suggest | 1.000 | 0.972 | 0.953 | 0.826 | 0.780 | 0.835 | 0.644 | 0.458 | 0.525 |
| rand.suggest | 0.981 | 1.000 | 0.962 | 0.890 | 0.798 | 0.881 | 0.610 | 0.492 | 0.508 |

| fastText embeddings | chatbot: CNN | chatbot: LSTM | chatbot: BiLSTM | askUbuntu: CNN | askUbuntu: LSTM | askUbuntu: BiLSTM | webapps: CNN | webapps: LSTM | webapps: BiLSTM |
|---|---|---|---|---|---|---|---|---|---|
| tpe.suggest | 0.972 | 0.962 | 0.972 | 0.780 | 0.752 | 0.853 | 0.356 | 0.508 | 0.508 |
| rand.suggest | 0.915 | 0.972 | 0.981 | 0.844 | 0.339 | 0.853 | 0.153 | 0.390 | 0.492 |

| BERT embeddings | chatbot: CNN | chatbot: LSTM | chatbot: BiLSTM | askUbuntu: CNN | askUbuntu: LSTM | askUbuntu: BiLSTM | webapps: CNN | webapps: LSTM | webapps: BiLSTM |
|---|---|---|---|---|---|---|---|---|---|
| tpe.suggest | 0.981 | 0.972 | 0.962 | 0.844 | 0.651 | 0.771 | 0.661 | 0.542 | 0.492 |
| rand.suggest | 0.972 | 0.972 | 0.962 | 0.872 | 0.706 | 0.761 | 0.712 | 0.508 | 0.525 |

| fastText embeddings | chatbot: CNN | chatbot: LSTM | chatbot: BiLSTM | askUbuntu: CNN | askUbuntu: LSTM | askUbuntu: BiLSTM | webapps: CNN | webapps: LSTM | webapps: BiLSTM |
|---|---|---|---|---|---|---|---|---|---|
| tpe.suggest | 0.934 | 0.943 | 0.943 | 0.771 | 0.743 | 0.798 | 0.610 | 0.542 | 0.610 |
| rand.suggest | 0.830 | 0.934 | 0.962 | 0.780 | 0.752 | 0.780 | 0.271 | 0.593 | 0.627 |

| BERT embeddings | chatbot: CNN | chatbot: LSTM | chatbot: BiLSTM | askUbuntu: CNN | askUbuntu: LSTM | askUbuntu: BiLSTM | webapps: CNN | webapps: LSTM | webapps: BiLSTM |
|---|---|---|---|---|---|---|---|---|---|
| tpe.suggest | 0.962 | 0.962 | 0.934 | 0.844 | 0.798 | 0.826 | 0.661 | 0.593 | 0.644 |
| rand.suggest | 0.972 | 0.943 | 0.953 | 0.881 | 0.761 | 0.844 | 0.576 | 0.610 | 0.576 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Kapočiūtė-Dzikienė, J.; Balodis, K.; Skadiņš, R. Intent Detection Problem Solving via Automatic DNN Hyperparameter Optimization. Appl. Sci. 2020, 10, 7426. https://doi.org/10.3390/app10217426
