1. Introduction

In contemporary robotics-research, the study of man-machine interactions is a very important area, especially in the context of robots that are released from their traditional, isolated protection cage to instead collaborate hand-in-hand with humans. For effective and efficient usage of such robots, three aspects are crucial: modeling, actuation, and sensing. Modeling plays a vital role in the development and commissioning process of such robots. Traditionally, although kinematic models for standard configurations of serial manipulators exist [

1], when it comes to dynamical models these are usually derived using multibody systems (MBS) [

2]. Efficient implementations for MBS already exist, providing the nonlinear equations of motion in a symbolical or numerical manner [

3].

For industrial robots and modular manipulators, independent joint controllers are the most common control approach, for example, using PD or PID control schemes [

4]. However, these decentralized linear control structures disregard the highly nonlinear behavior of such systems, usually do not exploit a possibly available model, fail to take information from other subsystems into account or require an elaborate tuning of the controller parameters. Another commonly used control technique, especially in trajectory tracking for rigid MBS, is the so-called computed torque method [

5], which is a particular case of feedback linearization control that uses the method of inverse dynamics. Nevertheless, this model-based feed-forward control technique’s performance depends strongly on the modeling quality of the robot dynamics. Model uncertainties, that is, unmodeled, unknown or varying friction properties or a change in payload, worsen the tracking accuracy immensely. A further advanced control technique for nonlinear systems is model predictive control (MPC), also known as predictive or receding horizon control, which is firmly established in research [

6] and industrial application [

7]. MPC uses the advantageous capability of a model to forecast the future dynamical behavior of the system. An optimal input sequence is obtained by solving online an open-loop optimal control problem (OCP) over a finite horizon subject to system dynamics and constraints at every sample time based on the latest measurements. In case of serial manipulators, MPC offers numerous advantages, for example, handling the multivariable control problem naturally, operating as a centralized control system, intuitive adjustment of controller parameters, trajectory tracking, or considering actuator and state limitations already in the control design [

8]. Thanks to the vast increase of computational power and more efficient algorithms, MPC is getting applicable to systems with fast nonlinear dynamics like a robotic system. Numerous nonlinear MPC approaches exist to reduce the computational burden of online optimization and guarantee real-time feasibility. In Reference [

9], for example, a laboratory crane is controlled by a gradient-based MPC scheme using suboptimal solutions to meet the real-time requirements. In Reference [

10], the control of a KUKA LWR IV robot is considered and the so-called real-time iteration scheme [

11,

12] solves the underlying OCP of the MPC problem, implemented in the ACADO toolkit [

13]. A 6-DOF robotic arm is addressed in Reference [

14], and herein the OCP is solved with the commercial interior-point-based nonlinear programming (NLP) solver FORCES PRO [

15]. An approximate explicit MPC law is computed off-line beforehand for a robot manipulator in Reference [

16] to bypass the online optimization, but this demands massive off-line computations and results in a static control law which loses the possibility of adapting the control law during operation to a greater extent. But all of the mentioned works use specialized and expensive hardware setups, which is in contrast to the presented and intended low-cost approach. Another popular approach to manage the contrary facts of nonlinear system behavior and expensive online calculations for solving nonlinear OCPs in real-time is first linearizing the system in some manner and then exploiting the advanced techniques developed for linear MPC. For example, for linear MPC, highly applicable real-time implementations are available as open source [

17]. For this, a common approach is to use a linear time-varying (LTV) model to approximate the nonlinear system, see References [

18,

19,

20,

21]. This approach is reasonable and sufficient for the considered low-cost hardware environment because of its easy implementation in the presence of the equations of motion in a symbolic manner. Moreover, none of the mentioned MPC approaches for robotic manipulators use feedback directly obtained by a vision-based measurement of the robot’s pose to control the robot as considered in this work.

Sensing is essential for controlling the robot on the one hand and monitoring the environment on the other hand. Encoders usually provide control feedback, but camera-based systems can realize both robot state sensing and monitoring of the environment. They can detect the positions of robot links, humans, and workpieces, leading to safer and faster workflows. In this area, vision-based sensing is becoming more important, which is the second aspect of this paper. For example, it allows automatic recognition of a workpiece’s position and orientation, which therefore does not have to be positioned exactly before a robot processes it [

22]. In References [

23,

24], stereo camera systems are used to track a flying ball and catch it with a 6-DOF robot arm. The humanoid robot

Justin is even capable to catch two balls at the same time with its two arms [

25,

26,

27]. Vision-based sensing is also an opportunity for robots with elastic links, for which the end-effector position cannot be computed from encoder signals, that is, joint angles, only.

In this paper, both MPC and a vision-based approach for gaining control feedback in real-time are combined for a serial manipulator in an experimental study. Deriving the predictive control law includes a structured step-by-step design procedure involving parameter identification and derivation of the forward and inverse dynamics. The modeling is simplified to a large extent by exploiting the advantage of having the equations of motion in a symbolical and numerical form derived by the research code Neweul-M

2 [

3]. To handle the heavy online computational burden originating from the derived nonlinear MBS-model, a linear time-varying MPC (LTV MPC) scheme is developed based on linearizing the nonlinear system with respect to the desired trajectory and the a priori known feed-forward control torque. Instead of only using the angles measured by the built-in incremental encoders of the robot as feedback for the MPC, the robot’s current pose is detected based on images from a low-cost industrial camera as well. For this purpose, the robot is equipped with markers, which can be detected utilizing color filtering during image processing. From the detected markers’ image coordinates, the joint angles are calculated and fed back to the controller. The conducted experiments reveal the feasibility, advantages, and robustness of the proposed LTV MPC scheme when dealing with encoder feedback and show the effect of vision-based feedback on the MPC-based control loop.

Several aspects are making up the novel contributions of this work: To begin with, an encoder- and vision-based MPC is realized on a low-cost industrial-standard system, where a standard laptop PC performs the computations and process control. The controller shows that implementing and especially tuning an MPC is easy if the model equations, that is, the equations of motion, are available in symbolic form. In contrast to common control techniques, like PD or PID, adequate results are obtained with MPC without massive parameter tuning. Another novel aspect is the feedback of visually measured states, that is, joint positions, of the robot to the controller. Vision-based sensing is used in other publications as well [

25,

26,

27], but with a different indication, that is identifying a target position for the robot’s end-effector. The actual robot movement to reach this position is then controlled based on encoder feedback, whereas in this contribution, the motion is controlled by visual feedback in real-time. In general, this contribution’s work can be seen as proof-of-concept and preparation for further studies on robots with flexible links. The position of flexible bodies cannot be described solely by encoders in the joints because of the links’ elastic deformation, whereas, for vision-based sensing, elasticity in the links can be managed. In this work, also the challenges coming along with vision-based sensing are pointed out. Moreover, the used semi-automatic trajectory planning algorithm is presented, which has been developed incidentally to avoid obstacles.

The paper is organized along with the proposed step-by-step procedure for the vision- and MPC-based control system design.

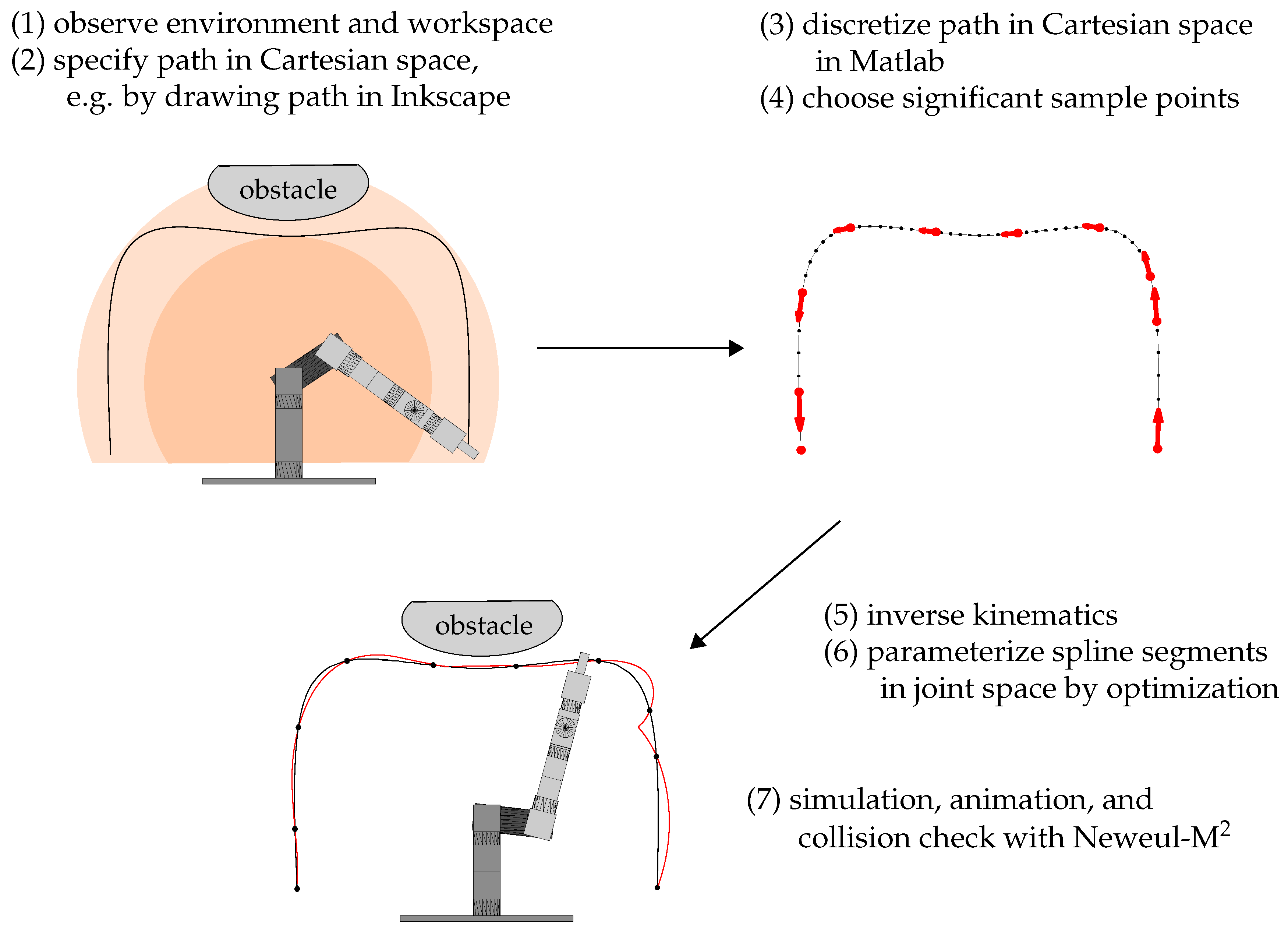

Section 2 presents the available hardware, that is, the modular manipulator and camera system, as well as the lean process control system. In

Section 3, the modeling is presented, including parameter identification and the derivation of the forward and inverse dynamics. Furthermore, the semi-automatic trajectory planning workflow is shown.

Section 4 is dedicated to control design. A description of the image processing to measure the system states visually and runtime analysis of the vision-based control loop is found in

Section 5.

Section 6 presents the results of two meaningful experimental studies to point out the advantages and restrictions of the proposed LTV MPC scheme for the rigid MBS and also show the effects of vision-based control feedback on the control performance. The contribution ends with the conclusion and outlook in the final section.

2. System Setup

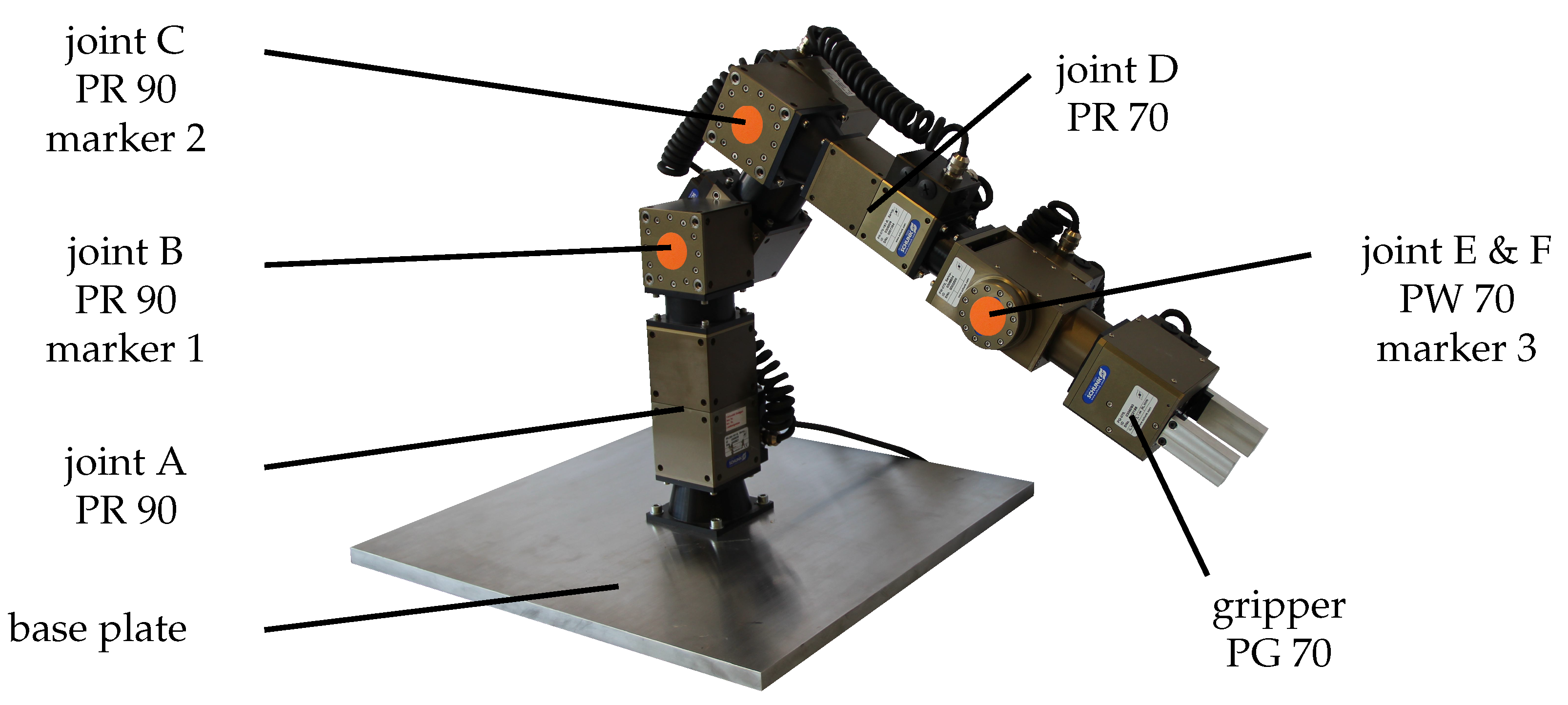

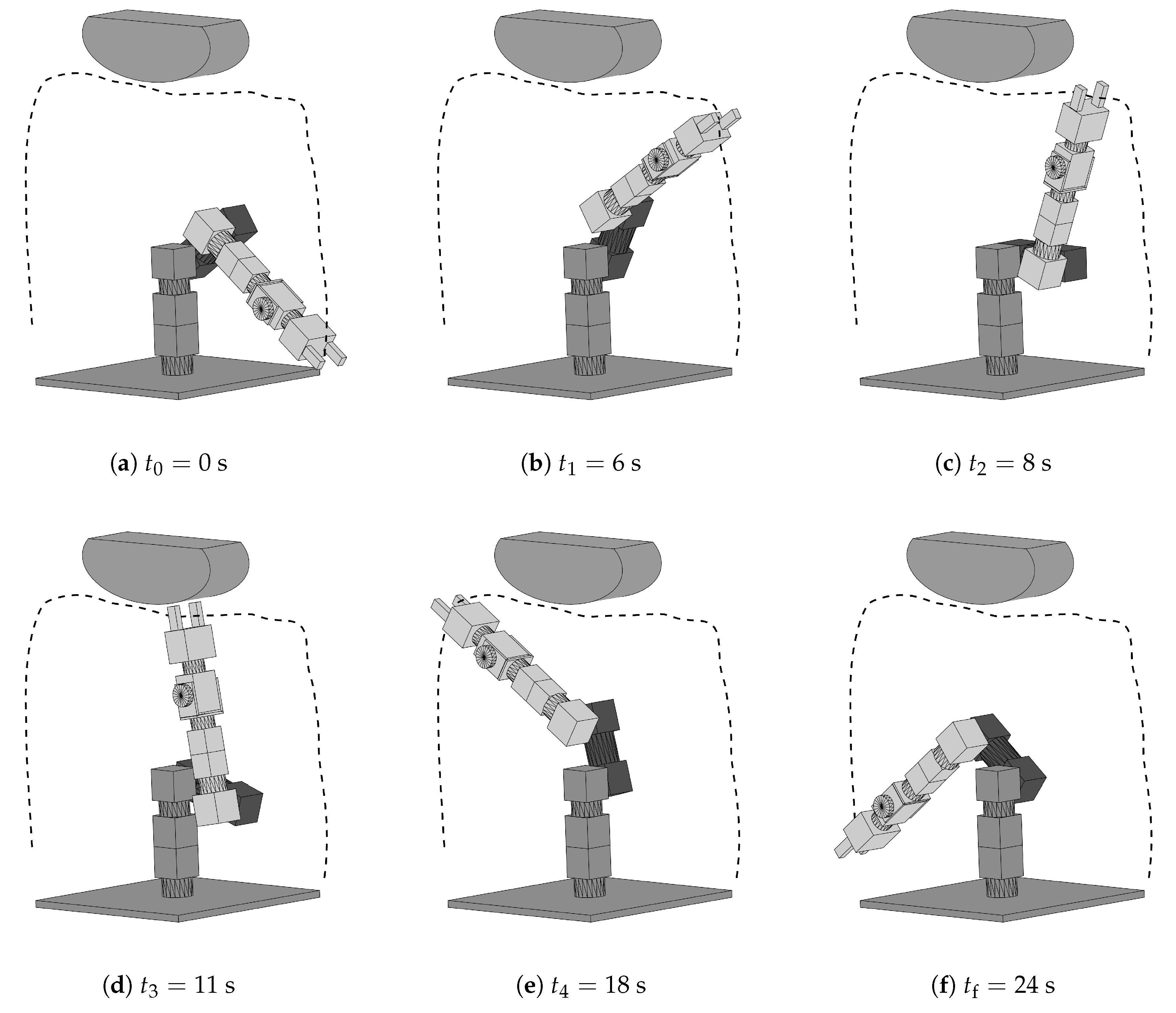

For evaluating the feasibility of the proposed MPC- and vision-based control scheme, a modular manipulator with components of the company Schunk is available, see

Figure 1. Such manipulators are used for various control tasks in industrial and service robotics. The particular advantage of the concept is their modularity and their hardware’s reconfigurability. The three subsequent subsections give a short overview of the available hardware, the implemented vision-based sensing, and the lean solution of the real-time process control system.

2.1. Modular Manipulator

The serial manipulator consists of five rotary modules with six degrees of freedom (DOF) and a gripper module for carrying a load. A massive aluminum plate acts as a foundation. The first three DOF are realized by rotary units of type PR 90 (joints A, B, C) followed by the fourth DOF for which a smaller rotary unit of type PR 70 (joint D) is used. The fifth rotary unit is of type PW 70 (joint E & F) and consists of a pan-tilt unit, representing the fifth and sixth DOF. The gripper module is of type PG 70 with two prismatic fingers, and the center of the two fingers is the tool center point and end-effector position. Five connection elements link the individual units and the plate. The resulting kinematic structure of the considered configuration can be described as an anthropomorphic arm with a spherical wrist.

Table 1 lists some technical properties of the modules.

Each rotary unit has integrated control and power electronics, an incremental encoder for position and speed evaluation, and a brake for holding functions on shutdown and power failure. A brushless DC servomotor in each rotary unit directly drives a Harmonic Drive gear, which provides torque at each DOF based on the defined motor current.

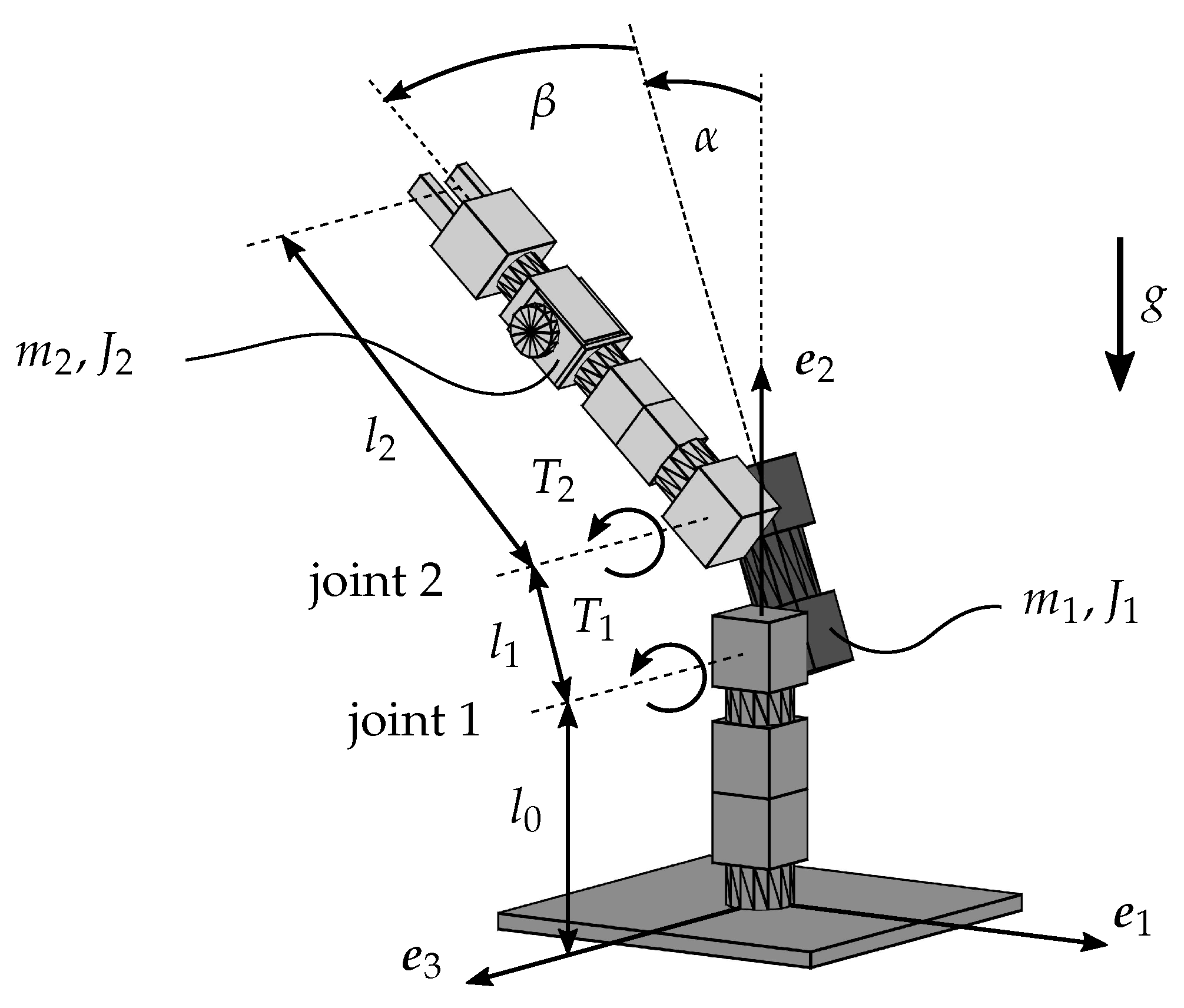

For simplification, only the second and third DOF of the 6-DOF manipulator are used to validate the control scheme and all other units are positioned as depicted in

Figure 1. Besides, the brakes of the unused units are applied. Consequently, this leads to a two-link manipulator with two DOF, which permits end-effector movements in a vertical plane. A two-link planar arm describes the resulting open-chain kinematic structure. For notational simplification, the rotary units B and C are denoted in the following as the first and second units, respectively.

2.2. Camera System Setup

For vision-based sensing, an IDS UI-3060CP-C-HQ Rev. 2 industrial camera is used. They can record 166 frames per second with a resolution of 2.35 megapixels (). Further technical data include a pixel size of 5.86 μm, 11.3 mm × 7.1 mm sensor dimensions, and an optical sensor class of 1/1.2. For communication with the laptop PC a USB 3.0 port is provided.

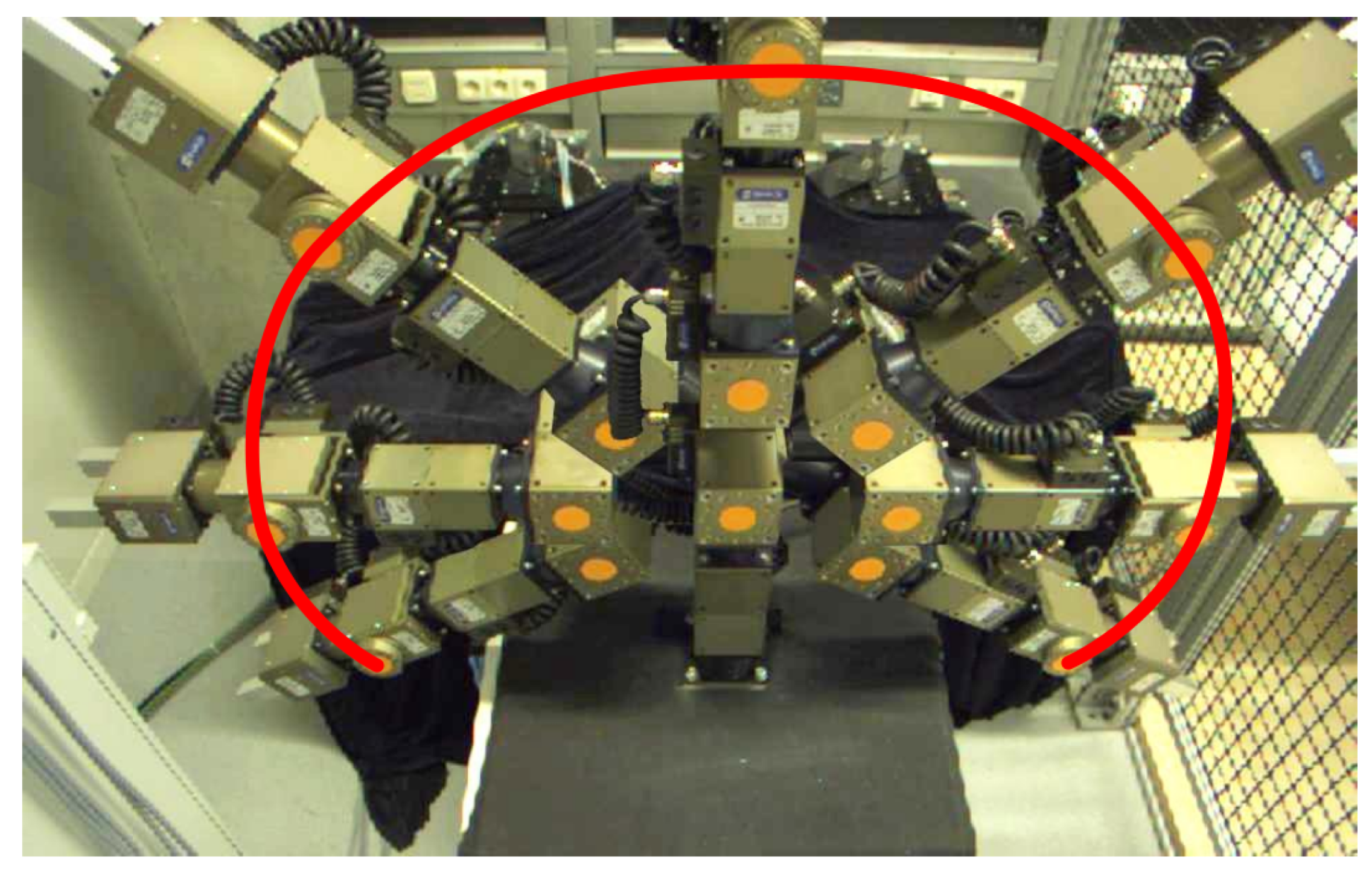

The camera’s position concerning the robot is chosen such that the image area covers all markers attached to the robot for the complete workspace of the robot. The spatial arrangement of robot and camera is shown in

Figure 2, highlighted by arrows.

The image area of the camera is shown in

Figure 3 for several configurations of the robot arm at the outer bounds of the workspace. As illustrated by the drawn line, the outermost marker is within the image area for the whole workspace.

The metallic surface of the foundation aluminum plate is covered by some black fabric in order to avoid light reflections that could cause problems in marker detection during image processing.

For a two-dimensional workspace of the robot, as it is the case for the experimental studies done in this paper, a single camera is sufficient for measuring the position. As soon as three-dimensional movements are allowed, a second camera for stereo vision is required.

Before the camera can be used for position estimation, the visual system has to be calibrated once utilizing a checkerboard pattern of known size. Thereby, both the internal and external camera parameters are identified, such as for example focal length and coordinate transformations.

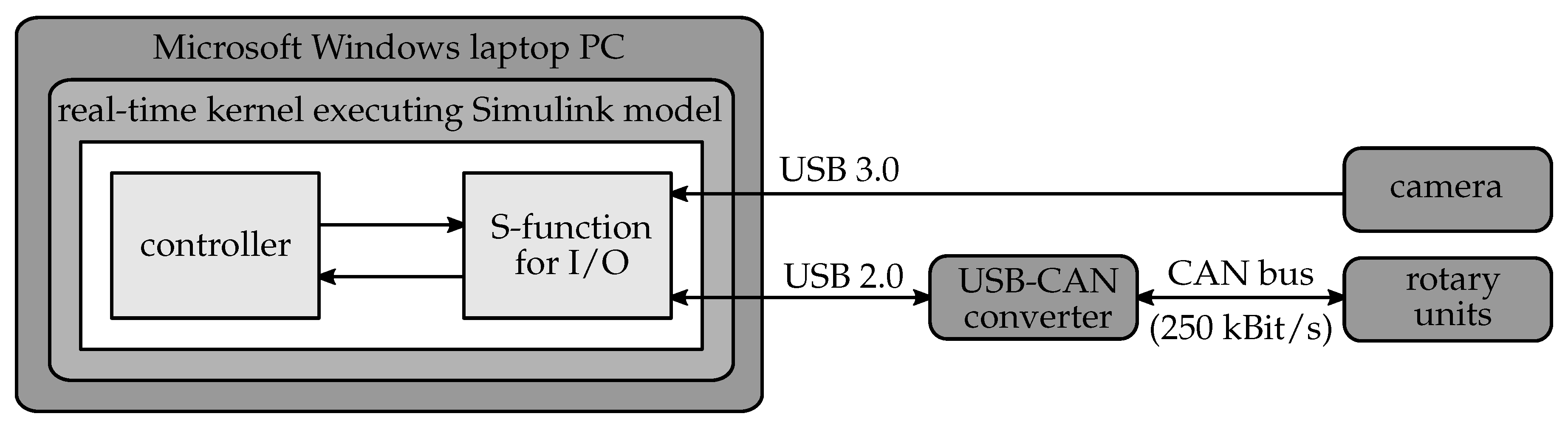

2.3. Real-Time Process Control System

For process control, a Microsoft Windows laptop PC with Matlab R2014b and Simulink, including the additional toolboxes Real-Time Windows Target and Simulink Coder, is used. In case of vision-based control, additionally, the Image Acquisition Toolbox and the Computer Vision Toolbox are required. The laptop PC is equipped with an Intel Core i5-3210M CPU (2x2.5 GHz, 8 GB DDR3). Real-Time Windows Target [

28] realizes a real-time engine for Simulink models on a Microsoft Windows PC and offers the capability to run hardware-in-the-loop simulations in real-time. It is a lean solution for rapid prototyping and provides an environment where a single computer can be used as a host and target computer. Consequently, the real-time simulations are executed in Simulink’s normal mode without an external target machine. The communication between the Simulink model running on the PC and the rotary units is implemented as follows. Schunk provides a function library written in C to communicate via a USB-CAN bus interface with the manipulator’s units. Instead of integrating the hardware via the supported input/output (I/O) boards into the loop, S-functions in the Simulink model are used for the I/O. S-functions offer the capability to integrate C code into Simulink models. For this purpose, C functions from the function library for determining the motor current, measuring the angle and angular velocity for each unit are implemented to realize I/O-communication with the rotary units.

The S-functions are compiled as MEX files using Microsoft Visual Studio C/C++ 2010 by linking the function libraries. An external power supply provides the rotary units with a constant voltage of 24 V. Because of integrated control and power electronics in each unit, no servo amplifiers are necessary. If no vision-based sensing is implemented, but encoder signals are taken as control feedback, the whole control loop and thus also the I/O-lines are processed with a sampling rate of 50 Hz, which corresponds to a sampling interval of 20 ms. The sampling rate results from the time for signal transmission via CAN bus for determining the motor current, measuring the angle and angular velocity of each unit and the calculations for the MPC. When vision-based sensing is implemented, the sampling rate is limited to 5 Hz due to the image processing algorithm’s high time requirements. For details see

Section 5 and

Section 6. The CAN bus works with a baud rate of 250 kBit/s.

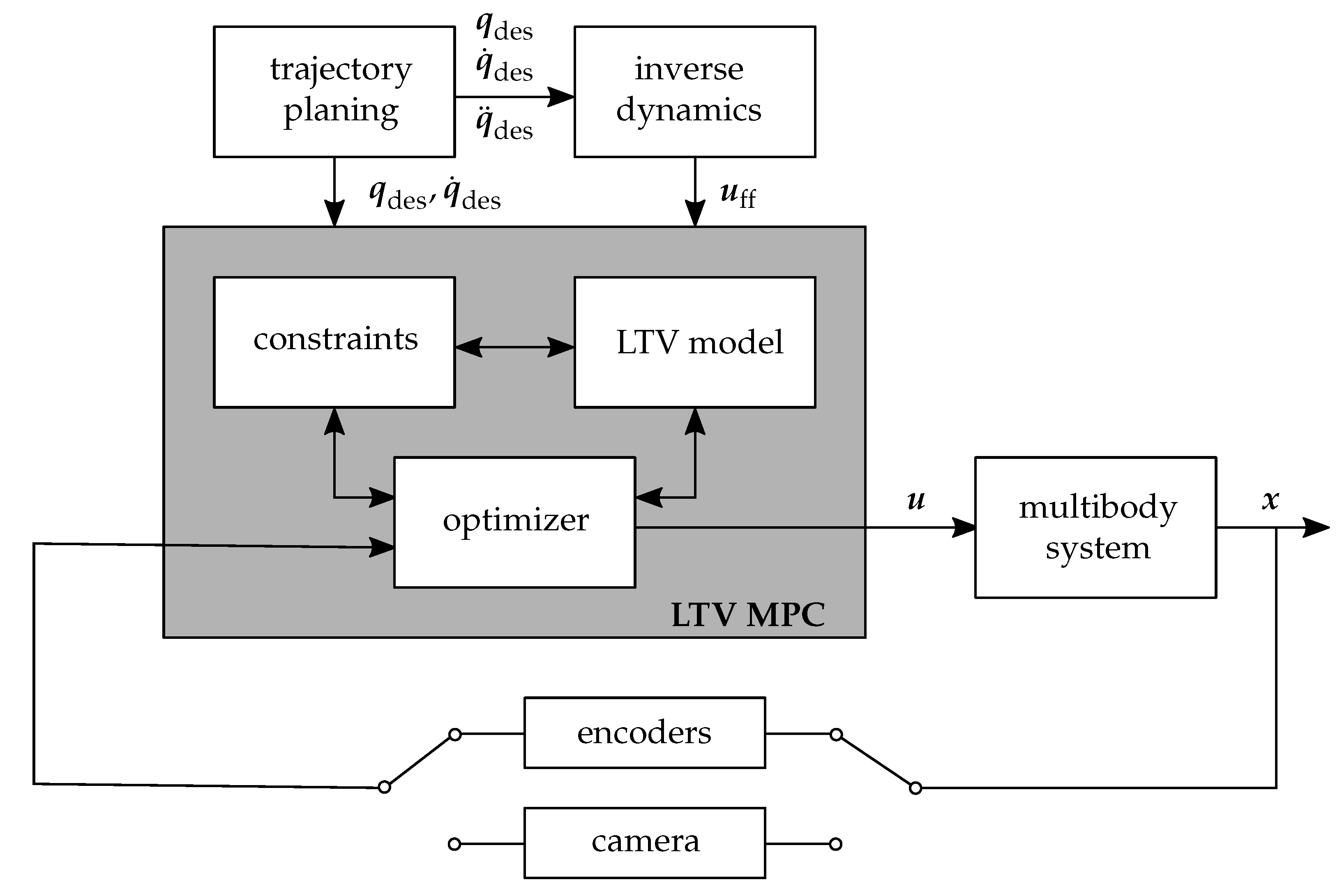

The camera is connected to the Laptop PC by a USB 3.0 connection. The Matlab Image Acquisition Toolbox offers a tool called Adaptor Kit, which is a C++ framework to create a custom adaptor, that is, a dynamic link library (DLL), for one’s own camera hardware. Having this DLL available, Matlab can communicate with the camera after loading it as a Video Input Object using the Image Acquisition Toolbox. The overall control system is visualized by

Figure 4.

It is worth mentioning that the used real-time process control system is a lean and cheap solution for controlling the considered systems in real-time since no other real-time target machine or I/O boards are necessary.

5. Vision-Based Sensing

Instead of using the signals from the robot’s incremental encoders as control feedback, the joint angles can also be determined by evaluating images from a camera system. The necessary experimental setup and the implemented image processing are in the scope of this section.

5.1. Marker Detection

In order to measure the current robot position visually, circular-shaped orange markers with a diameter of 4 cm are attached to three decisive points on the robot, see

Figure 1. The marker detection can be realized based on different criteria. The first option is a color-based detection, where the desired objects are identified with the help of color filters [

23,

24]. The second option is a shape-based detection, that is, to identify circles in the image. For this, a variant of the Hough transform or a Sobel filter can be used as in Reference [

27]. Another option would be the comparison of the current image with a reference image [

37]. By computing the difference of both images, moving objects can be detected.

The latter method based on image differences is not suitable here, since not only the three markers but all the moving parts of the robot would be detected. For a shape-based marker detection, first edges have to be detected, for example by means of the Canny algorithm [

38], before the circles can be identified using the Hough transform [

39]. Since this process takes about two seconds, the markers are tracked, for example, with the Kanadi-Lucas-Tomasi method (KLT tracking) [

40,

41] after they have been identified once. Unfortunately, often points get lost after a few seconds, as experiments show, and the markers have to be identified once again, which takes too long for real-time execution. Therefore, the shape-based detection method is unsuitable here as well.

The detection method chosen for the application presented in this paper is color-based. The images are analyzed in HSV color space, which is short for hue, saturation, and value. Hue stands for the color, for example, red, green, or blue, saturation indicates the colorfulness, and value corresponds to the brightness. The HSV color space is particularly suitable, since different shades are to be found coherently in one sector of the color space and can therefore be described independently from the brightness. The brightness of the ambient light thus has only little influence on the result.

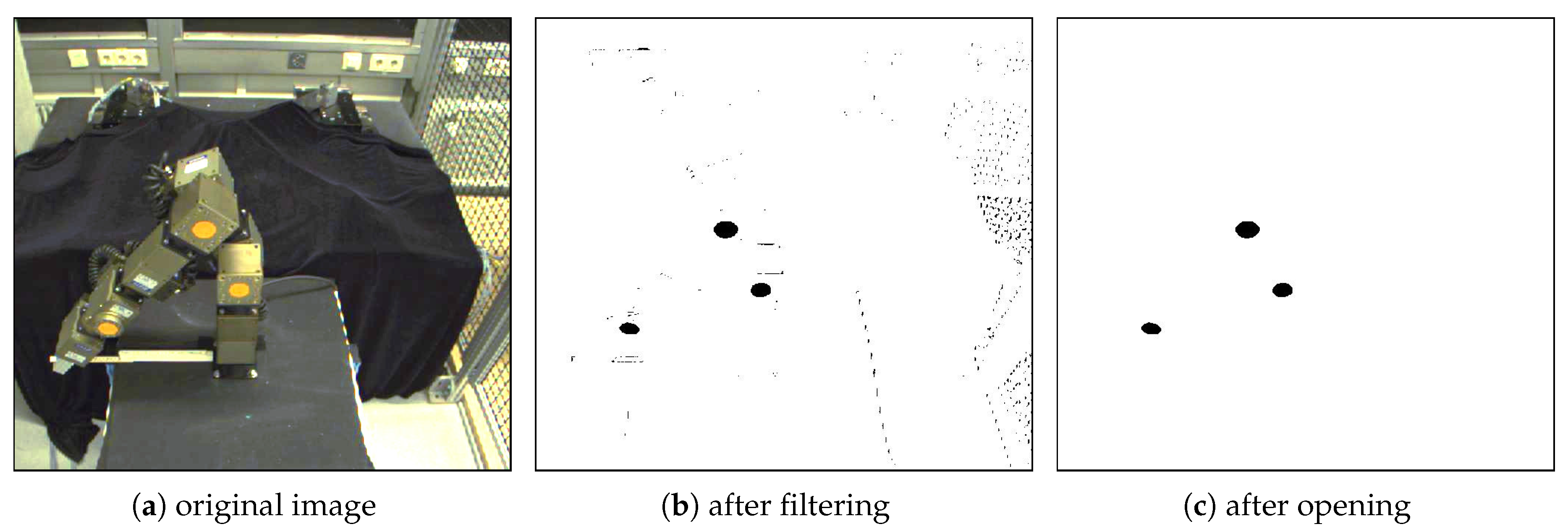

The individual steps of the color filtering process are visualized in

Figure 8.

The filter parameters have to be adjusted such that the areas of the original image, see

Figure 8a, which contain a marker, pass the filter completely, while the environment is filtered out as accurately as possible. Thereby, a binary image, see

Figure 8b, is created, which still contains some artifacts though, that is, pixels of the environment that passed the filter erroneously. To get rid of those, the morphological operation called opening is applied [

42], yielding the image in

Figure 8c, which contains exactly the desired markers. The center points of the remaining areas are the marker coordinates.

One-time at the beginning, the identified image coordinates have to be assigned to the corresponding markers. Afterwards, the matching can be done automatically using shortest distances to marker positions from the preceding image.

The main advantage of this method is on the one hand the significantly lower computation time compared to shape-based detection by Hough transform. On the other hand, it is still slower than KLT tracking. However, in total the advantages of color-based detection prevail. It allows a reliable detection of the markers during the complete period of robot movement with a time step size still suitable for real-time application.

5.2. Coordinate Transformation

From the identified image coordinates of the markers the joint angles, that is, the generalized coordinates, have to be computed in a second step. To do so, lens distortions, which make straight lines in real world look curved in the image, have to be eliminated first. For this purpose, the Matlab function

undistortPoints from the Computer Vision Toolbox is available. Then, with the Matlab function

pointsToWorld, the

x- and

y-coordinates of the markers given in the checkerboard coordinate system from calibration are obtained. Coordinate transformation to the desired world coordinate system is then realized by simple translation and rotation. Taking the offsets between the markers on the robot’s surface and the joint center points into account, the center point coordinates

,

,

of the joints equipped with markers are obtained in the world coordinate system. The joint angles

and

, see

Figure 5, are finally computed by the trigonometric relations

where

atan2 is the four-quadrant inverse tangent function. The indices represent the numbers of the markers according to

Figure 1.

Note that for tracking a spatial movement with more than two degrees of freedom, at least a second camera is necessary to set up a stereo vision system. Then, the transformation of marker coordinates from an image coordinate system to the checkerboard coordinate system or world coordinate system, respectively, is done by triangulation. In case of 3D motion, there is a risk for markers to be hidden behind the robot’s parts depending on its configuration and the camera positions. Therefore, these markers would be invisible for one or more cameras making a localization of the respective robot parts impossible. To address this problem, more cameras and/or markers are required to capture the mechanical components’ position for the complete workspace and all possible robot configurations. To do so, a motion capture system like OptiTrack might be used as it is done in Reference [

43] to capture human motion in a driver-in-the-loop simulator or in Reference [

44] to capture the motion of a flying robot. However, using a motion capture system contradicts the low-cost approach of slim hardware requirements pursued in this contribution.

5.3. Real-Time Capability

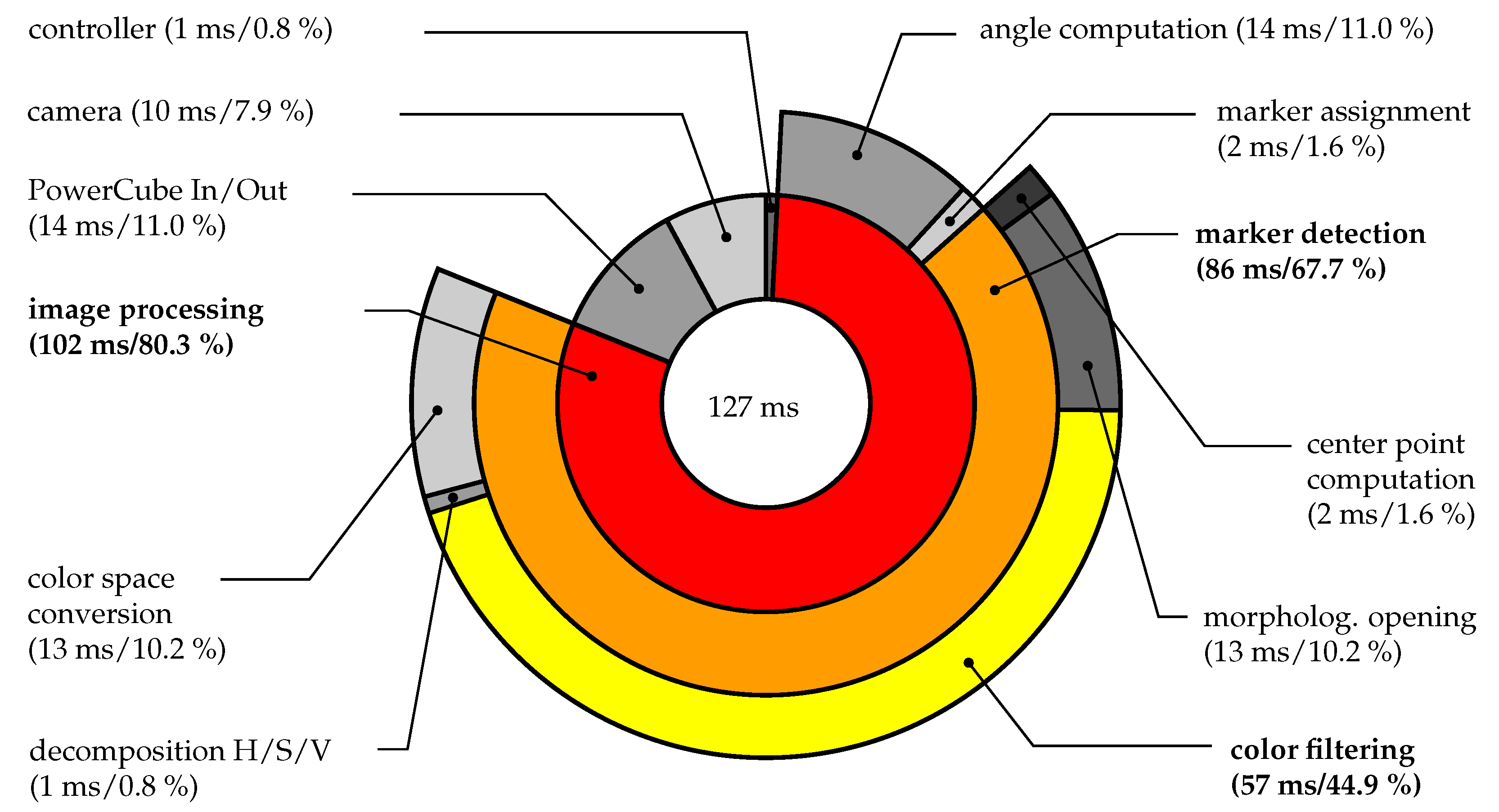

To use visual feedback from a single camera instead of incremental encoder signals, a time step size of 200 ms is required for real-time execution of the closed control loop. This is ten times as much as the time step size of 20 ms that is sufficient when encoder signals are used. Therefore, runtime is analyzed and time requirements of the individual control loop components are visualized in

Figure 9.

The inner circle ring represents the top level, while the middle and outer circle rings give a more detailed view of single subsystems.

On average, the entire computation takes 127 ms per sampling instance. Thereof, 10 ms are claimed by the camera for image acquisition and 14 ms for communication with the robot. The major portion of 80 %, which corresponds to 102 ms, is taken by the image processing part. A more detailed investigation of the image processing, shown in the middle ring, reveals that most of the time is required to detect the markers in the image. Breaking down things on an even lower level shows in the outer ring that color filtering is the most time-consuming part, taking 57 ms. This makes up for almost half of the time required for the overall control loop.

The reason for the high time requirements of color filtering and image processing in general is the big amount of data that needs to be processed. During the filtering process, about ten comparison operations, for example with color threshold values, have to be executed for each pixel. Even with a subsampled image resolution of 600,000 pixels the required computation time is accordingly high.

6. Experimental Studies

The proposed LTV MPC scheme described in

Section 4 is applied to the two-link manipulator in two ways. In the first study, the performance and robustness of the implemented LTV MPC scheme is demonstrated. For this purpose, the state measurement is obtained directly from the encoders and allows a sampling interval of 20 ms. In order to demonstrate the performance with vision-based state measurement, the control loop is closed with visual feedback instead of encoders in the second scenario. This requires a sampling interval of 200 ms, which results in limited performance of the overall closed-loop system.

In both studies, the MPC cost function (

22) is parameterized by diagonal weighting matrices to penalize the control error with respect to the desired trajectory, that is,

, whereas the changes in the input are weighted by

. A preliminary adjustment of the parameters has been obtained by simulations followed by re-adjusting the weights at the hardware. In order to not exceed the maximum currents of the rotatory modules, the constraints for the inputs are determined by

and

. Moreover, a prediction horizon of

is chosen and the controller is initialized by

and

. The repetitive linear-quadratic optimization problem from Equations (29) and (30) is solved by using the active set method applying the open-source implementation qpOASES [

17].

For interpreting the results, it is mentioned that the encoders can measure the angular velocity of the modules with a resolution of 0.04 rad/s = 2.29 deg/s.

6.1. Encoder-Based Tracking

Note that the trajectory is motivated by an obstacle avoidance and includes motion reversals in both joints to investigate the control performance on friction phenomena caused by the Harmonic Drive gears that underly highly nonlinear friction effects.

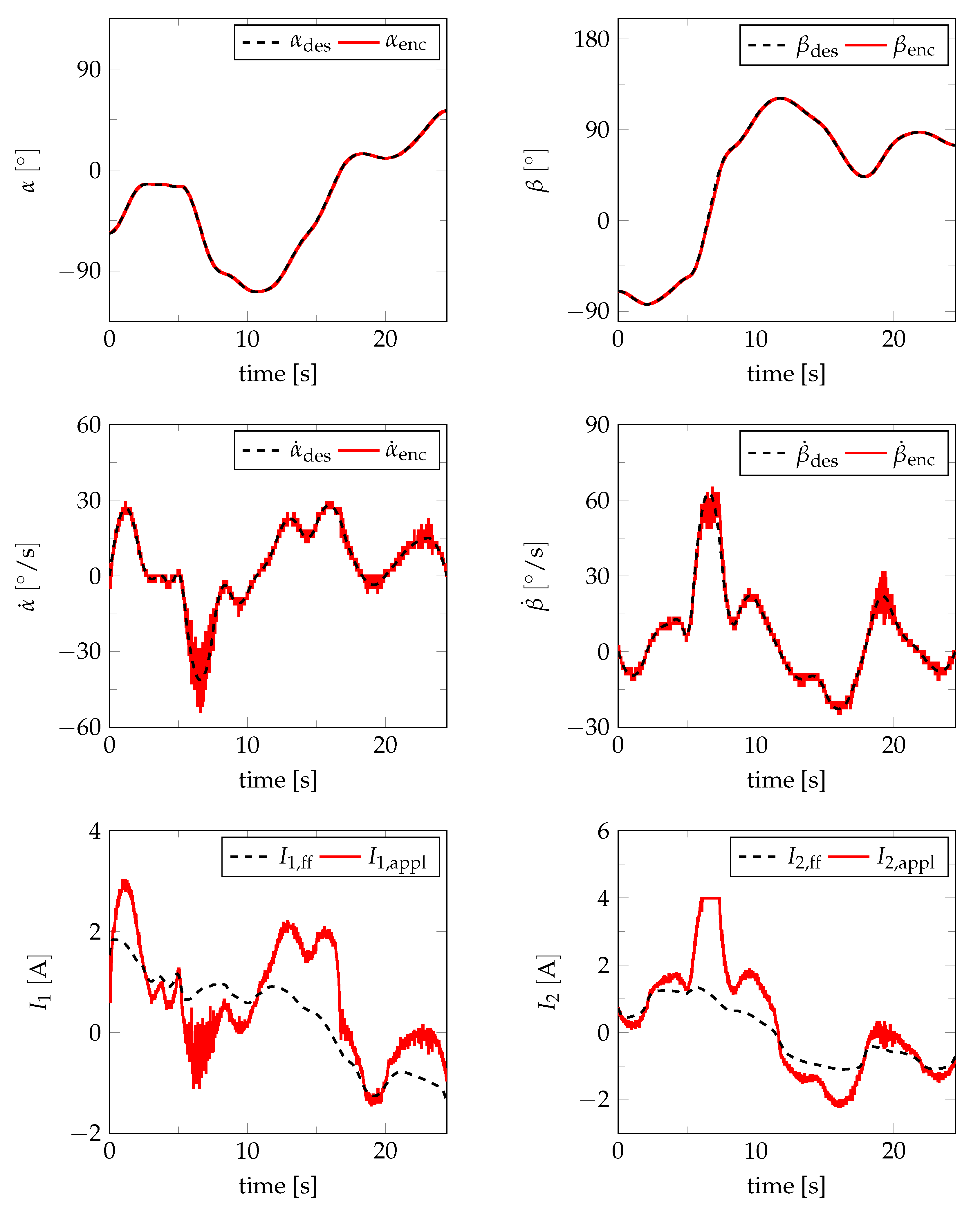

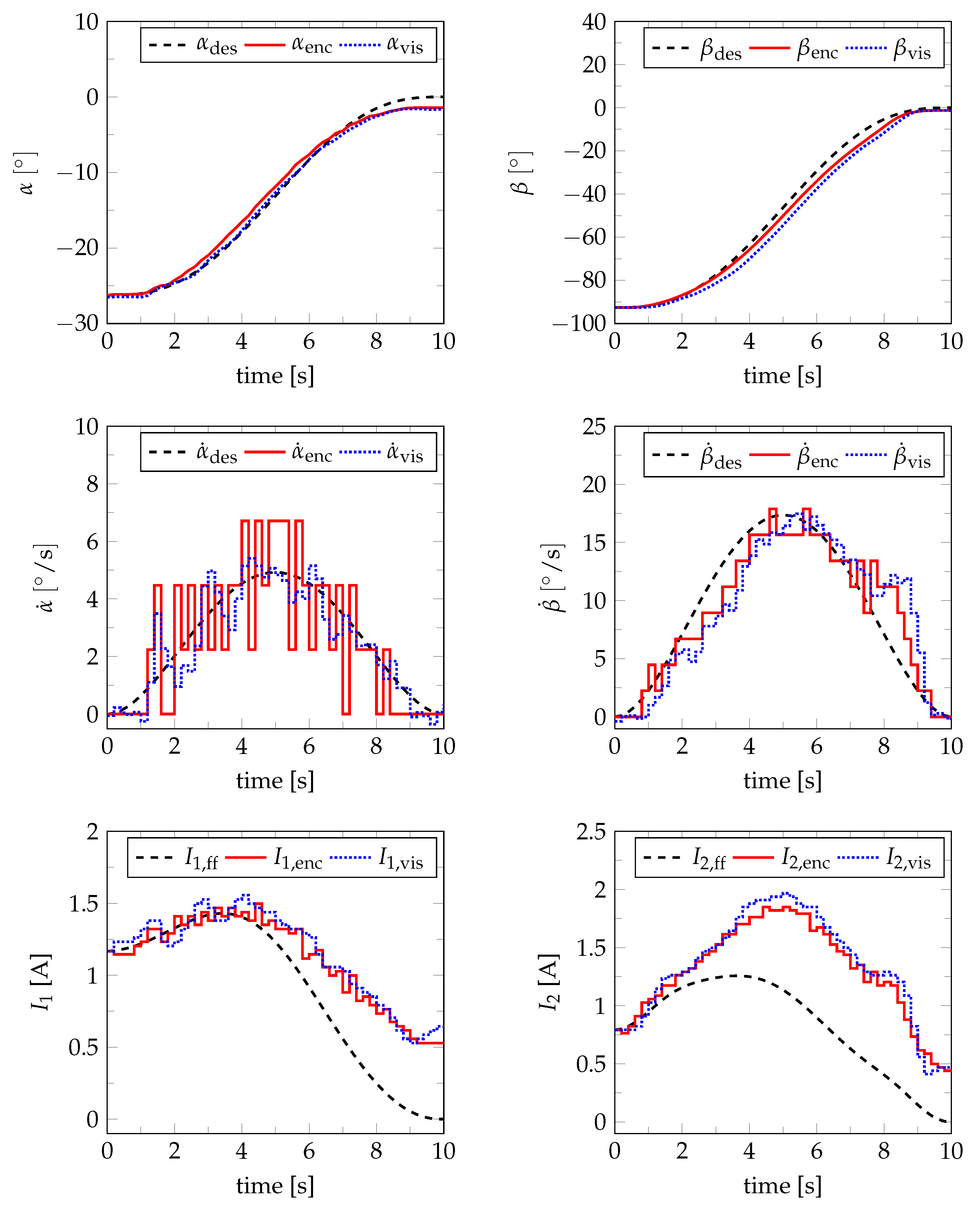

Figure 11 illustrates experimental results of the considered motion.

Therefore, the measured values of generalized coordinates and velocities are compared with the desired trajectories. In addition, the applied motor currents determined by the MPC, that is,

and

, are compared with the preliminarily calculated feed-forward controller based on Equation (

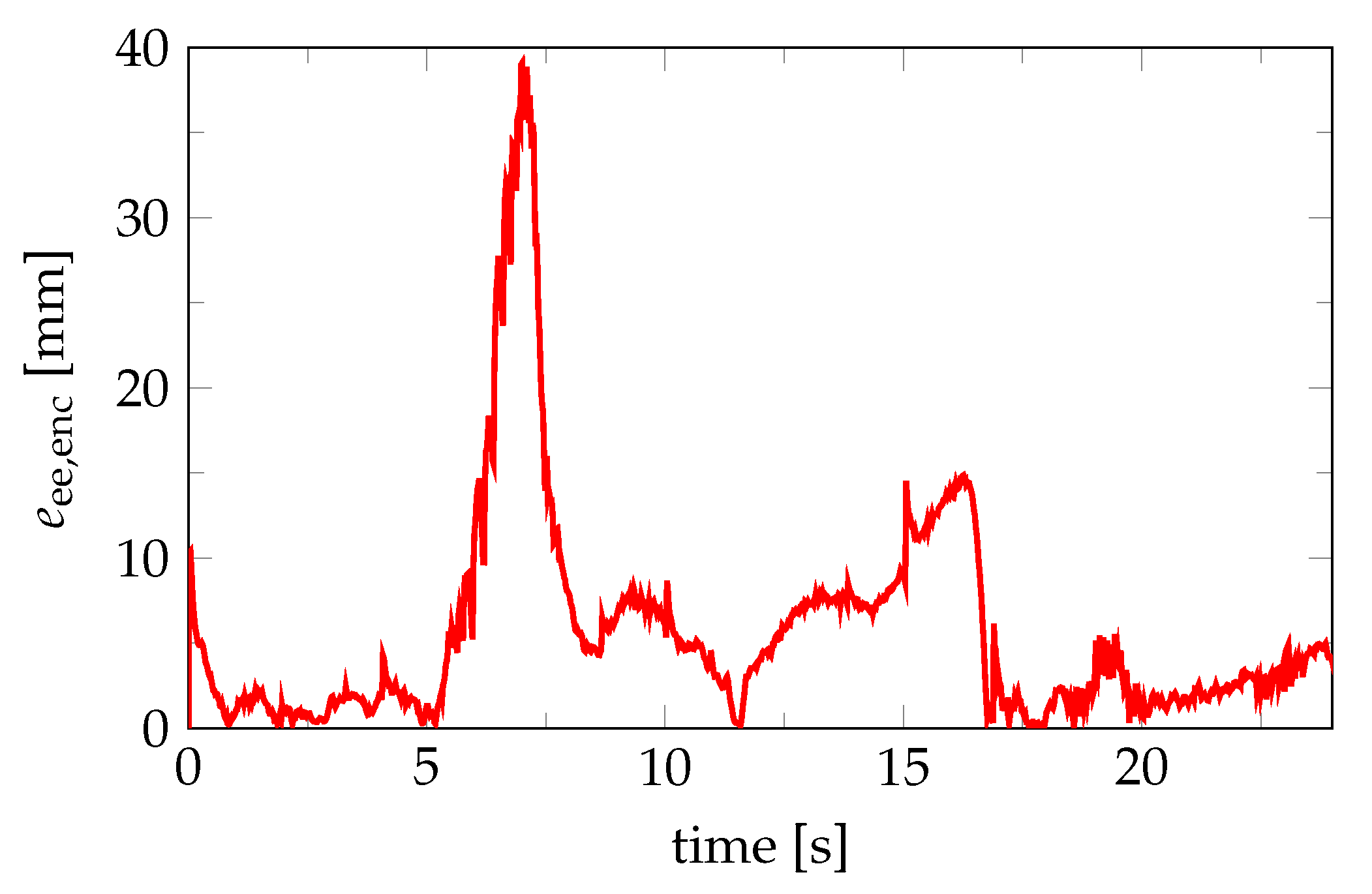

12). For validation purpose, the control error of the end-effector is defined as

and shown in

Figure 12. Thereby,

and

are the end-effector positions computed by the forward kinematics with the generalized coordinates measured by the encoders or with the desired generalized coordinates, respectively.

Obviously, the proposed LTV MPC scheme works well and can track the predefined trajectory with high accuracy in terms of the angle and angular velocities. Moreover, the proposed control structure can easily handle the predefined input constraints, which can be seen during the period

as the second joint reaches its input constraints whereby the motor in fact has to provide higher torques to track the desired trajectory. As a result, the tracking error of the end-effector increases, see

Figure 12. In contrast to a PID controller, no wind-up effects occur, no additional anti-wind-up control structure is necessary to handle input constraints, and due to the centralized control structure all states are taken into account to compute the inputs to reduce the control error in an optimal manner. The controller also deals with reversing motor directions and treats the occurring friction phenomena, which occur at sign changes in the velocities. Nevertheless, by comparing the implemented and the feed-forward motor currents, it becomes clear that there is a notable deviation. This deviation originates from uncertainties and disturbances since in the case of a perfect model and no disturbances the feed-forward controller (

12) would be sufficient. Despite the distinct model uncertainties, the proposed LTV MPC scheme compensates these uncertainties well and thus reveals its robustness. Consequently, the modeling and parameter identification efforts can be extremely reduced with the proposed LTV MPC scheme compared to a pure feed-forward controller. Furthermore, the command response, as well as changes in the input, can easily be adjusted and weighted by a modification of the weighting parameters for the states or inputs in the cost functional, which is much more intuitive and evident than tuning the parameters of a classical PD or PID controller.

The average computation times for the proposed MPC scheme with encoder-based tracking follow from

Figure 9. Since only the computation for the controller (1 ms) and the PowerCube I/O (14 ms), that is, the time to determine the motor currents and for measuring the angles and angular velocities by the encoders, are necessary, the overall computation time lies under the sampling interval of 20 ms assumed for the encoder-based tracking.

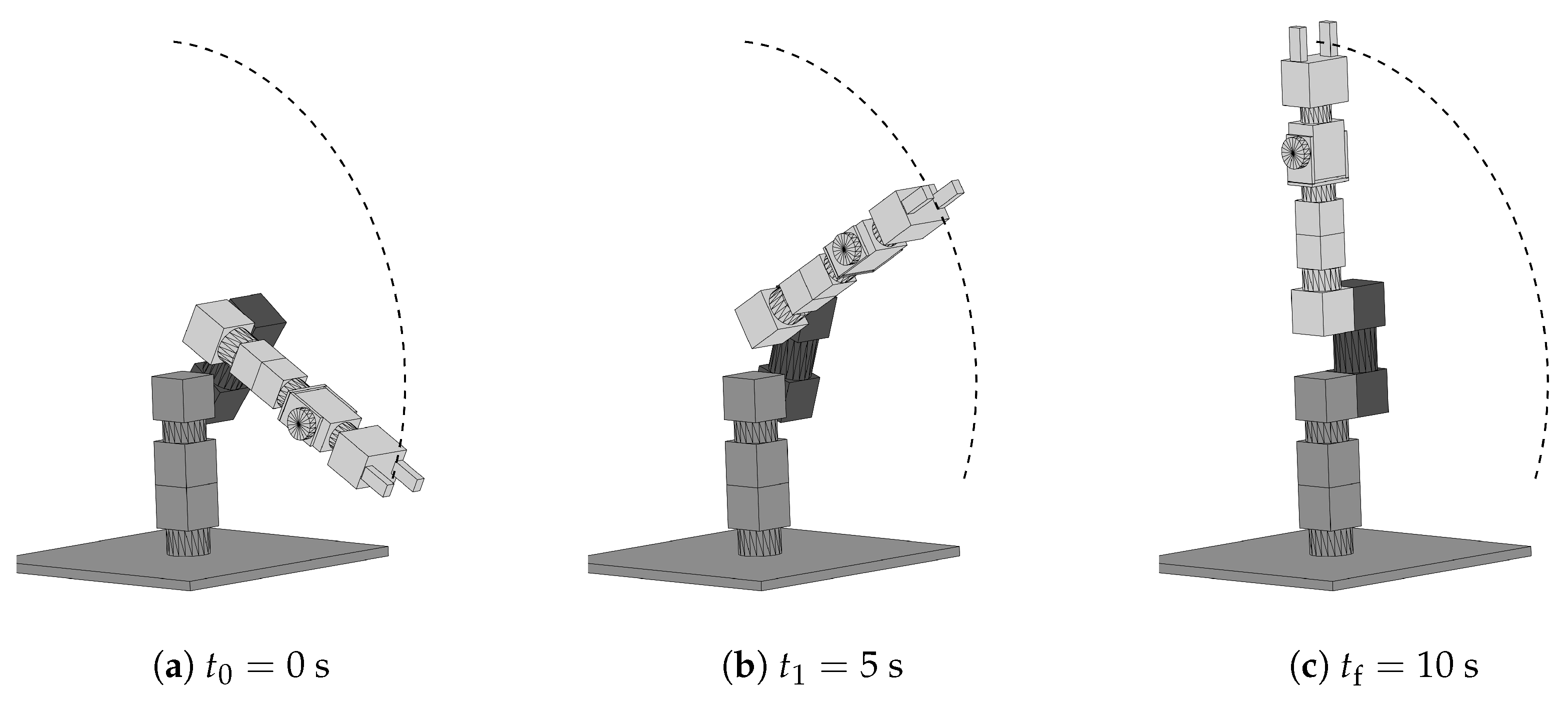

6.2. Vision-Based Tracking

In the second study, the vision-based sensing presented in

Section 5 is applied. The control loop is closed by state measurements from the camera system instead of the incremental encoders. This yields challenges, for example, concerning sampling times or accuracy.

Due to the necessarily increased sampling interval of

ms due to image processing, major drawbacks in the overall control loop performance are natural. Therefore, a much simpler trajectory from

to

is considered, which the control system with such a long sampling interval is still able to handle. The resulting path of the end-effector is shown in

Figure 13, whereas the complete motion of the manipulator can be seen in the deposited video (

https://www.itm.uni-stuttgart.de/en/research/vision-based-control-of-a-powercube-robot/).

Figure 14 shows the experimental results of the considered point-to-point motion with the visually determined joint angles being fed back to the controller.

The joint angles and their corresponding angular velocities as well as the motor currents are depicted. The angular velocities

at time step

k with sampling interval

are calculated from the visually measured angles

and

based on finite differences according to

As a reference, results for the same scenario with a sampling interval of 200 ms but with feedback from the encoders are plotted in the figure as well. Furthermore, the desired trajectories for the generalized coordinates and velocities as well as the previously computed feed-forward signals are part of the plot. The results are explained and interpreted in the following with respect to the quality of the visual feedback and control accuracy.

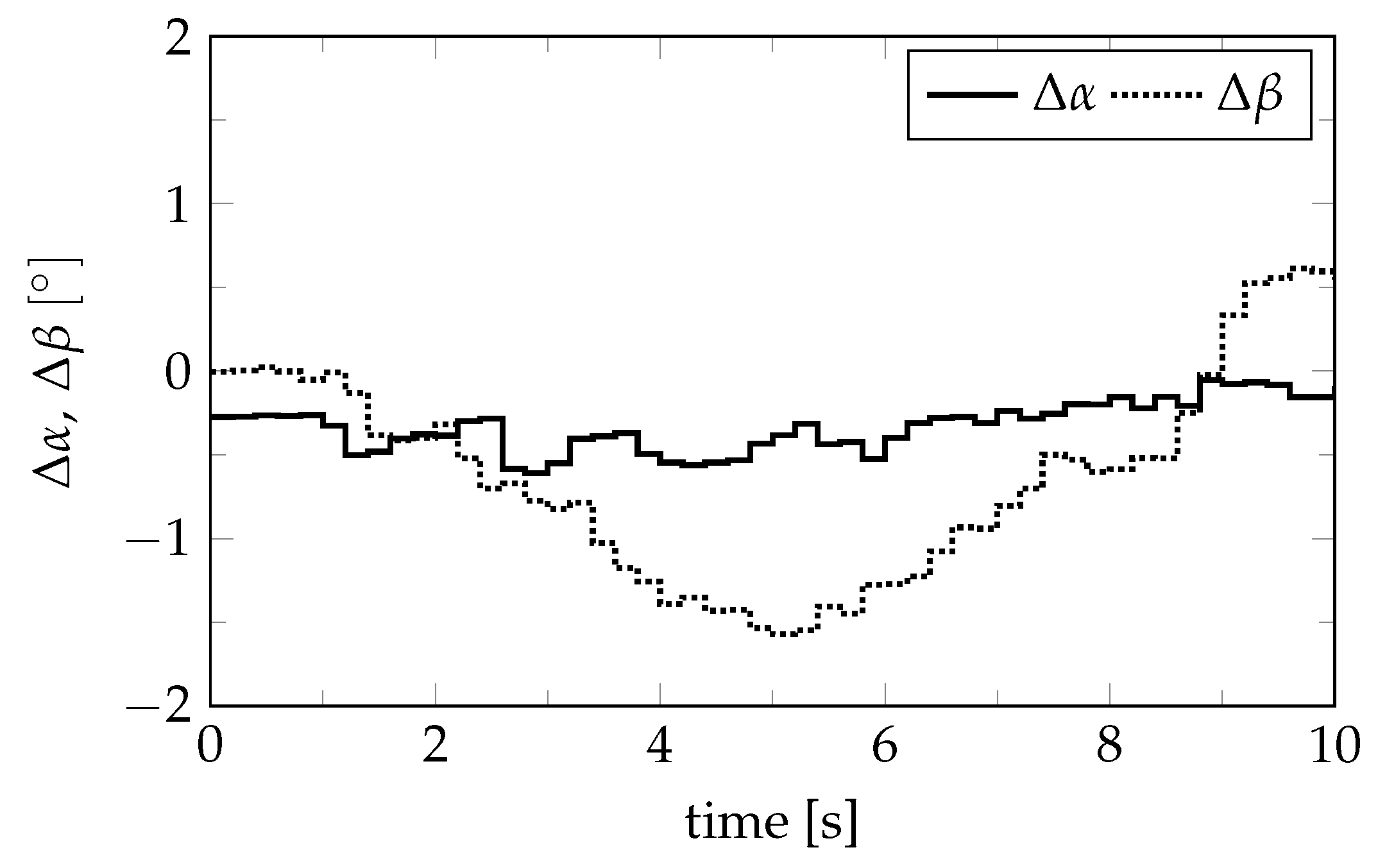

6.2.1. Validation of Visual Feedback

In order to validate the visually determined joint angles

,

, incremental encoder signals

,

are recorded in parallel, that is, measured by encoders while the control loop is closed with visual feedback, and taken as reference to compare with. The differences between visual and incremental measurements

are plotted in

Figure 15. The absolute deviation

is smaller than

and for

the maximum value is even smaller than

, which confirms the very good agreement of the visual measurement with the incremental reference measurement. Some reasons for the small remaining deviations are mentioned below:

Image resolution: For the chosen resolution, one pixel in the image corresponds up to 4 mm in the planar workspace. This discretization induces an inaccuracy in position estimation.

Marker detection: Because of the discretization of the image, the edge of a marker is not a sharp line, but spread across several pixels. Those pixels might pass the filter or not, depending on the color filter settings, which results in an inaccuracy for the marker’s computed center point.

Time offset between sensor signals: There is a time offset of up to 20 ms between the measurement signals that is not represented in

Figure 14. Taking such an offset into account, the signals match even better and the deviations shown in

Figure 15 are even smaller.

6.2.2. Control Accuracy

In contrast to very good agreement of the visual measurements with the incremental ones as stated above, control accuracy unfortunately is not yet satisfactory for this scenario. This becomes obvious considering the end-effector error

where

is the end-effector position computed by the forward kinematics with the generalized coordinates measured by encoders, while the control loop is closed with visual feedback. The maximum value of this end-effector tracking error is about 90 mm, which is way too big for most applications. This is also one reason why such a simple trajectory has to be chosen in this scenario. The large tracking error is caused by the long sampling interval of

ms required by image processing. It results in long prediction times, which negatively affects the validity of the linearization used for the prediction, since a change of operating points is not considered in the LTV MPC scheme for simplicity. Also, modeling errors like, for example, in the motor unit’s frictional torque have a bigger effect the longer the time step size is.

Thus, improvements in control accuracy can be achieved in different ways: One option is using shorter sampling times as shown in the first study, see

Section 6.1. This is achieved either in terms of software improvement, for example, by optimizing the presented image processing algorithm, or using more powerful hardware, like a faster processor, or by a combination of both aspects as in ready-to-use motion capture systems like, for example, OptiTrack, see References [

43,

44]. Another option is considering the change of operating points in the prediction, since neglecting it is only appropriate for small prediction horizons as stated in

Section 4.2. Being well aware of the necessity for improvement, further effort is spent on these topics in on-going research.

7. Conclusions

The paper discussed a structured step-by-step procedure necessary to implement a feasible nonlinear model predictive control concept that gains vision-based feedback from a camera on a low-cost robotic system. The proposed predictive control structure exploits the previously derived symbolical equations of motion and is thus easy to tune. No massive parameter tuning is required to obtain good results, as it would be the case for common approaches like PD or PID control schemes. The heavy computational burden is handled by using a linear time-varying model description with respect to the desired trajectory and an a priori computed feed-forward controller. The feasibility and performance of the proposed linear time-varying model predictive control scheme with a short sampling interval of 20 ms has been shown by means of a rigid two-link manipulator using encoder feedback and tracking a challenging trajectory in a first experimental study. The controller demonstrates advantages in case of constraint handling and compensating model uncertainties.

In contrast to other publications, where vision-based sensing is used to determine a target pose for the robot and the robot motion itself is then controlled by encoder feedback, in a second experimental study vision-based feedback has been realized instead of encoder feedback to measure the robot’s current position while moving along the desired trajectory. For flexible bodies, the position cannot be measured just by incremental encoders in the joints due to elastic deformations. Therefore, this is a preparatory study showing the viability for further studies with elastic bodies and pointing out challenges in visual sensing. It has been shown that position detection via camera provides accurate results, that is, there is good agreement between visually measured states and encoder signals, even with the low-cost solution used in this contribution consisting of a standard laptop PC, an industrial camera, and a non-hardware-near image processing in Matlab. Nevertheless, the tradeoff for vision-based feedback with the selected setting is a significantly increased sampling interval of 200 ms caused by the time required by image processing. Thus, the implementation of vision-based tracking presented in this paper is only suitable for controlling systems with slower dynamics as intended in safety-critical man-machine interaction, for example, but there seems to be no restriction to transfer the approach to similar systems. To handle rapidly moving systems as required for many industrial robots, improvements in the image processing algorithm are necessary and are topic of on-going research, for example, by using infrared-based tracking systems. In such systems, the image processing is handled directly on the camera system, for example, by FPGA techniques. With the advent of image recognition for autonomous cars, the costs for fast image detection should fall significantly in the next years.