Multi-Frame Labeled Faces Database: Towards Face Super-Resolution from Realistic Video Sequences

Abstract

Featured Application

1. Introduction

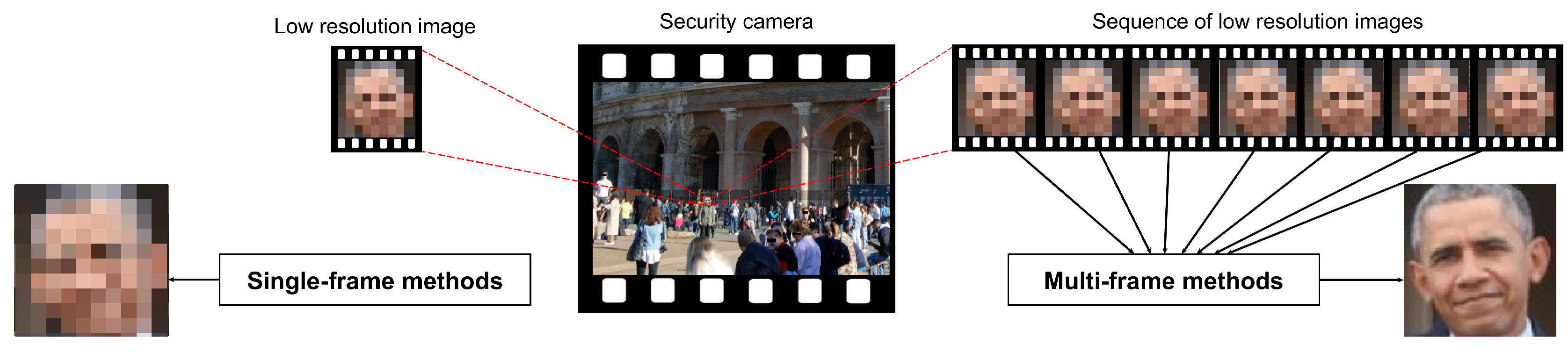

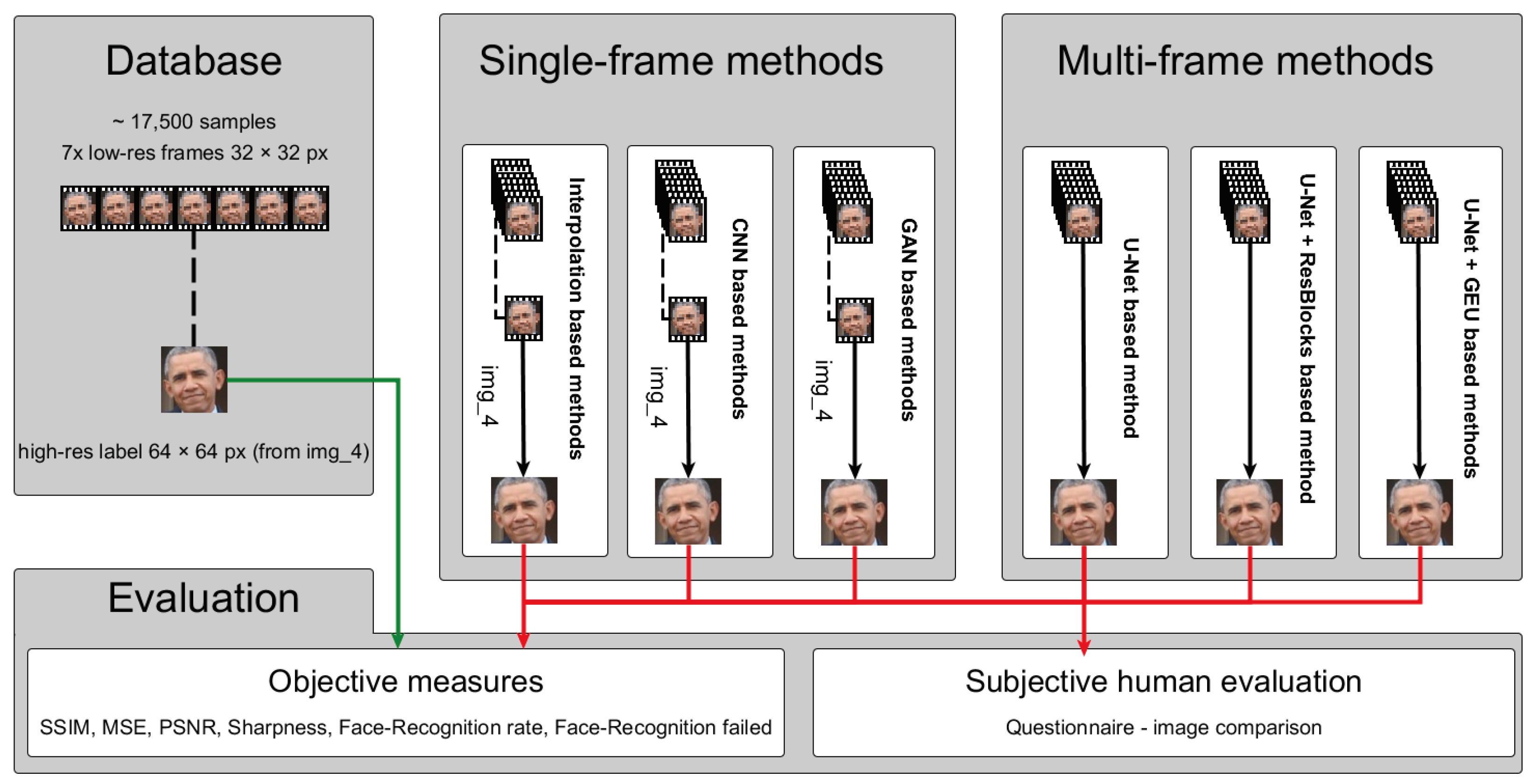

- This paper introduces a new super-resolution (SR) methodology that, unlike general-purpose SR, focuses specifically on faces and biometric applications.

- A sequence of multiple images is processed (multi-frame methods) instead of just a single image, which is all that most existing datasets allow (single-frame methods).

- The proposed methodology is compared with other state-of-the-art methods. Currently, single-frame super-resolution methods can be considered among the best, because multi-frame super-resolution has seen little progress and has made little use of deep learning [2,3]. Moreover, general super-resolution methods are often not applicable for biometric purposes, even if they provide good resolution.

- A new, unique large-scale dataset is provided, containing 17,426 samples of image sequences taken from a wide range of recording devices. The sequences contain faces of various ages and races under various illumination conditions, all taken from real-world environments.

- The paper also defines reproducible metrics for facial quality measurement that are based on open-source solutions. These objective metrics are widely accessible and can boost further research in this area.

- The whole experiment described in this paper is fully reproducible: the source code and the dataset were released online (http://splab.cz/mlfdb/#download).

- Together, the key points above provide a basis that has the potential to become a standard for future research in this field.

2. Related Work

2.1. General Single-Frame Super-Resolution Methods

2.2. Single-Frame Super-Resolution Focused on Faces

2.3. Multi-Frame Super-Resolution

2.4. Motivation

3. Materials and Methods

3.1. Training and Testing Data

3.1.1. Dataset General Information

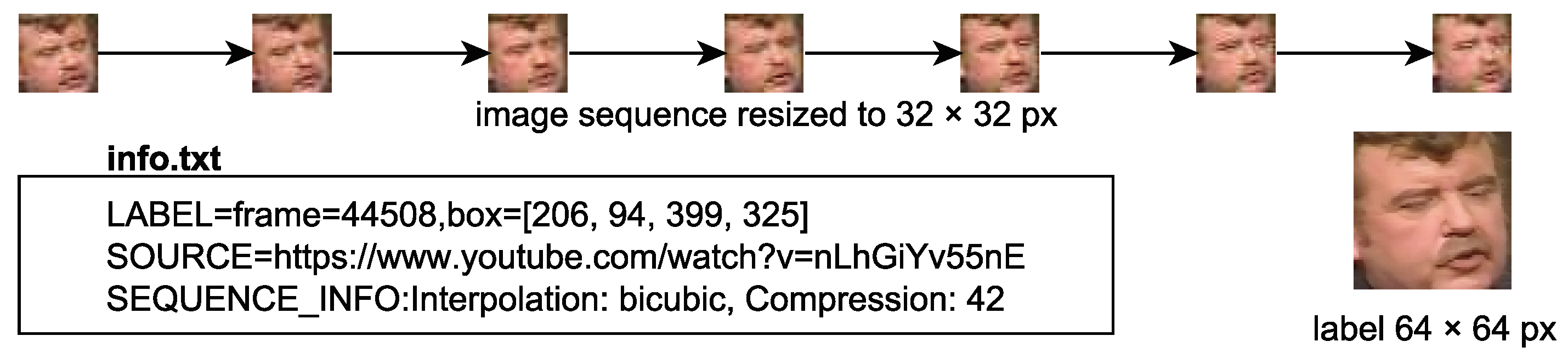

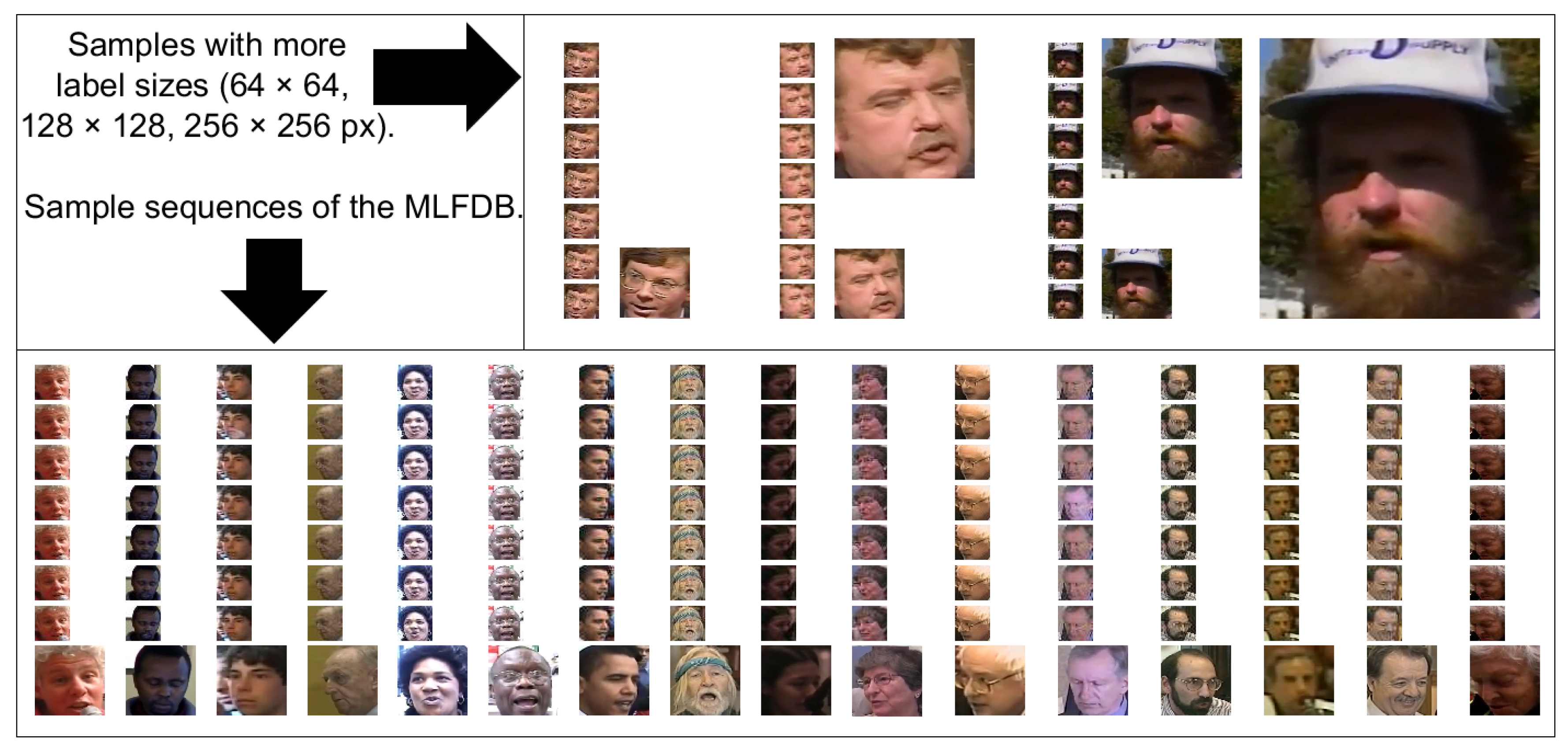

- 7 images (i.e., a face sequence) in low resolution (32 × 32 px) named ‘img_1.JPG’, …, ‘img_7.JPG’.

- The label, taken from the middle of the sequence (from ‘img_4.JPG’) at double resolution (64 × 64 px) and stored as ‘label.JPG’.

- An ‘info.txt’ file with information about the label (bounding box and frame number), the video source, and the interpolation method and JPEG compression that were used when resizing the input images.
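
The file layout listed above can be checked with a few lines of standard-library Python. This is only a sketch: it assumes each sample lives in its own directory, and the helper names (`expected_files`, `missing_files`, `middle_frame_name`) are hypothetical, not part of the released code.

```python
from pathlib import Path

SEQUENCE_LENGTH = 7          # img_1.JPG ... img_7.JPG, as listed above
LABEL_NAME = "label.JPG"     # 64 x 64 px ground truth
INFO_NAME = "info.txt"       # metadata about label, source, and resizing


def expected_files(sequence_length: int = SEQUENCE_LENGTH) -> list[str]:
    """Return the file names one sample directory is expected to contain."""
    frames = [f"img_{i}.JPG" for i in range(1, sequence_length + 1)]
    return frames + [LABEL_NAME, INFO_NAME]


def missing_files(sample_dir: Path) -> list[str]:
    """List expected files absent from sample_dir (empty list means complete)."""
    return [name for name in expected_files() if not (sample_dir / name).is_file()]


def middle_frame_name(sequence_length: int = SEQUENCE_LENGTH) -> str:
    """Name of the middle frame, i.e., the frame the label corresponds to."""
    return f"img_{sequence_length // 2 + 1}.JPG"
```

For a 7-frame sequence, `middle_frame_name()` returns `img_4.JPG`, which matches the frame the label is taken from.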

3.1.2. Dataset Creation

- For each sequence, the label is checked to confirm that it contains a face of sufficient quality for face recognition. This was done using an open-source project (Face-recognition framework [31] v1.3.0, CNN model), which is inspired by Facenet [32]. This step validates that the detected face is good enough to be used for the face recognition similarity rate index metric (see Section 3.4.1). YOLO is a face detector, not a face recognition system; therefore, it also detects faces for which person identification is impossible (e.g., a head seen from behind).

- In some cases, a sequence can contain multiple overlapping faces. It is not a problem if several faces or their parts occur in one image; the problem arises when, mainly due to a mistake of the processing algorithm, there is one person at the beginning of the sequence and a different person at the end. Occasionally this also happened because of a cut in the movie. Therefore, the label is compared with all other images in the sequence using the Face-recognition framework (with a threshold of 0.7). In this way, the possible presence of several faces in a single image was taken into account.

- Because the videos were sampled randomly, one person could occasionally appear more than once in the resulting dataset. The main difficulty was distinguishing very similar images from images of the same person with different lighting, face angle, etc. The Face-recognition framework proved unsuitable for this task because of its face alignment capability, i.e., it recognizes the same person under different conditions. We therefore used the structural similarity metric, SSIM, with a threshold of 0.7 (MSE, PSNR, etc., are not effective metrics for this case). The ground truth images are used for this comparison after first being resized to a resolution of 64 × 64 px.

- The next step validated that all images in the sequence contain a face. This was done using the Face-recognition framework and its face detection (not recognition) method. As a result, only ground truth images that can be used for recognition are included. The other images in the sequence must contain a face, but it does not have to be recognizable (e.g., a different angle, partially covered by another person, etc.).

- The sequences, which originally have various resolutions (higher than 64 × 64 px), are downsampled to a resolution of 32 × 32 px, and the ground truth (label) image to 64 × 64 px. When the original resolution allowed it, the labels were also stored at resolutions of 128 × 128 px and 256 × 256 px. For each sequence, the pixel interpolation method was chosen randomly from nearest neighbor, bilinear, bicubic, and Lanczos (over an 8 × 8 neighborhood). The label was saved with the best possible JPEG quality (100), and the sequence images were saved with a randomly chosen JPEG quality from the range 30–90.

- After the ground truth images (labels) were resized to 64 × 64 px, some of them could no longer be used for face recognition. The corresponding face sequences were removed from the dataset.
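
The random choice of interpolation method and JPEG quality described in the steps above can be sketched as follows. This is an illustration under stated assumptions, not the released implementation: it assumes one integer quality value is drawn per sequence with both endpoints included, and the names `DegradationParams` and `sample_degradation` are hypothetical. The interpolation strings are plain labels, not identifiers from any imaging library.

```python
import random
from dataclasses import dataclass

# Options named in the text; the string labels themselves are illustrative.
INTERPOLATIONS = ("nearest", "bilinear", "bicubic", "lanczos")
INPUT_SIZE = (32, 32)                 # low-resolution sequence frames
LABEL_SIZE = (64, 64)                 # ground truth (label)
LABEL_JPEG_QUALITY = 100              # best possible quality for the label
INPUT_JPEG_QUALITY_RANGE = (30, 90)   # assumed inclusive on both ends


@dataclass(frozen=True)
class DegradationParams:
    """Resizing settings for one sequence (hypothetical container)."""
    interpolation: str
    input_jpeg_quality: int
    label_jpeg_quality: int = LABEL_JPEG_QUALITY


def sample_degradation(rng: random.Random) -> DegradationParams:
    """Draw one interpolation method and one JPEG quality for a sequence."""
    low, high = INPUT_JPEG_QUALITY_RANGE
    return DegradationParams(
        interpolation=rng.choice(INTERPOLATIONS),
        input_jpeg_quality=rng.randint(low, high),  # randint includes both ends
    )
```

Passing an explicitly seeded `random.Random` keeps the sampling reproducible, which fits the stated goal of a fully reproducible experiment.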

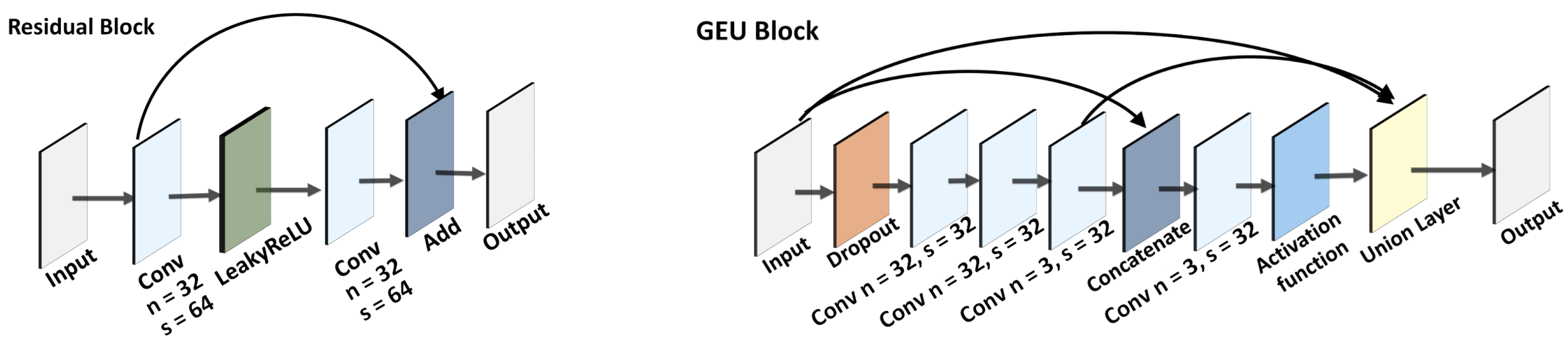
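
The near-duplicate removal step from Section 3.1.2 can be illustrated with a small sketch. It makes several assumptions that the text does not confirm: it uses a simplified single-window (global) SSIM rather than the usual sliding-window variant, it treats an SSIM at or above the 0.7 threshold as "too similar", and it filters greedily in input order. Images are represented as flat sequences of grayscale values, and the function names are hypothetical.

```python
def global_ssim(x, y, dynamic_range=255.0):
    """Single-window SSIM of two equally sized grayscale images (flat sequences).

    Simplification: the statistics are computed over the whole image instead
    of over local windows, so values differ from standard windowed SSIM.
    """
    n = len(x)
    if n == 0 or n != len(y):
        raise ValueError("images must be non-empty and of equal size")
    c1 = (0.01 * dynamic_range) ** 2  # stabilizing constants from the SSIM definition
    c2 = (0.03 * dynamic_range) ** 2
    mu_x = sum(x) / n
    mu_y = sum(y) / n
    var_x = sum((a - mu_x) ** 2 for a in x) / n
    var_y = sum((b - mu_y) ** 2 for b in y) / n
    cov = sum((a - mu_x) * (b - mu_y) for a, b in zip(x, y)) / n
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return numerator / denominator


def drop_near_duplicates(labels, threshold=0.7):
    """Return indices of labels to keep.

    A label is kept only if its SSIM to every already kept label is below
    the threshold (assumed direction of the comparison).
    """
    kept = []
    for i, candidate in enumerate(labels):
        if all(global_ssim(candidate, labels[j]) < threshold for j in kept):
            kept.append(i)
    return kept
```

The greedy pass compares each candidate only against labels that were already kept, so its cost grows with the number of retained samples rather than with all pairs.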

3.2. Neural Network Architectures

3.2.1. Single-Frame Methods

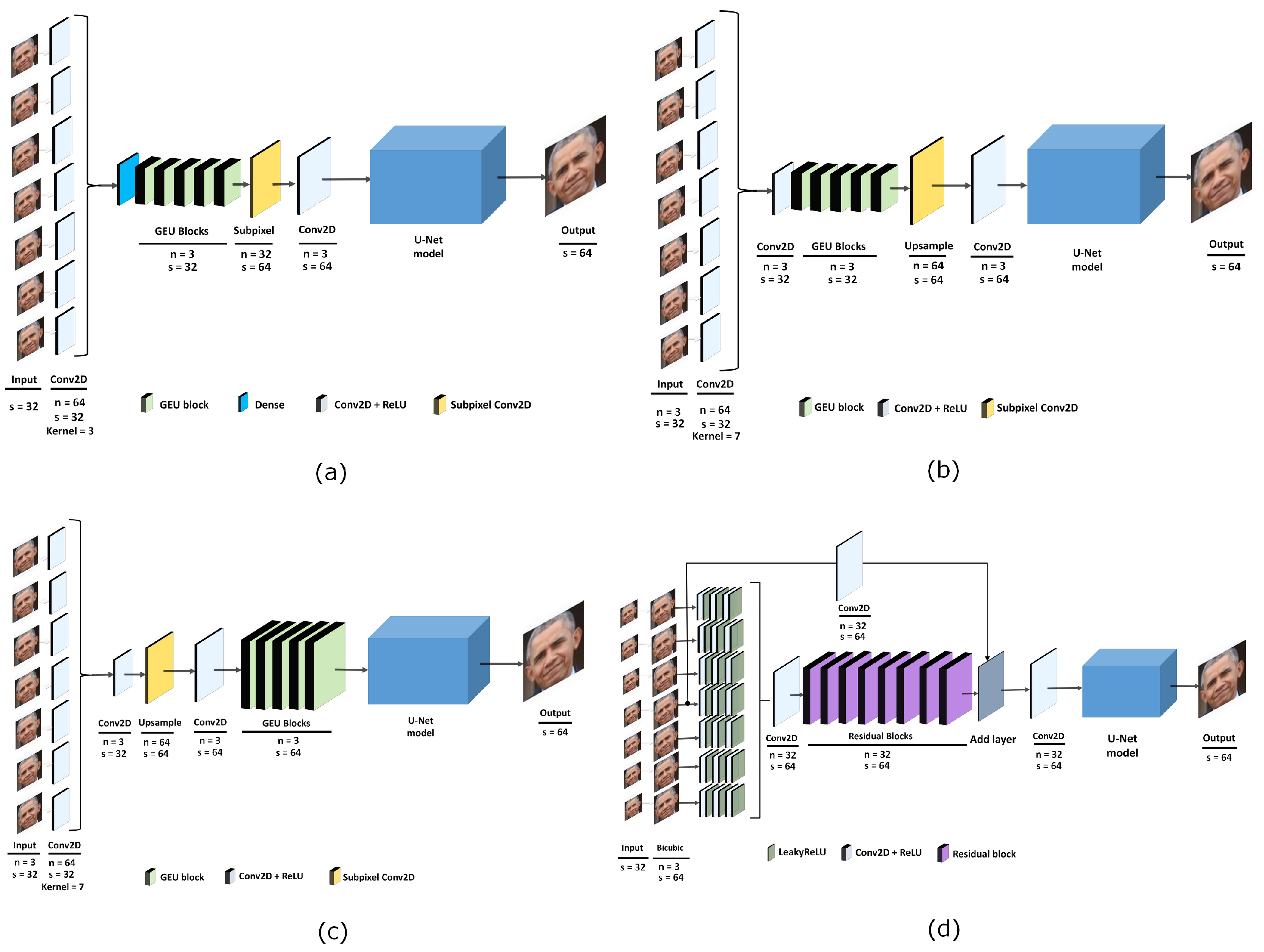

3.2.2. Multi-Frame Architectures

3.3. Training of Models

3.4. Evaluation Metrics

3.4.1. Objective Measures

3.4.2. Subjective Human Evaluation

4. Results

4.1. Resulting Dataset

4.2. Methods Comparison

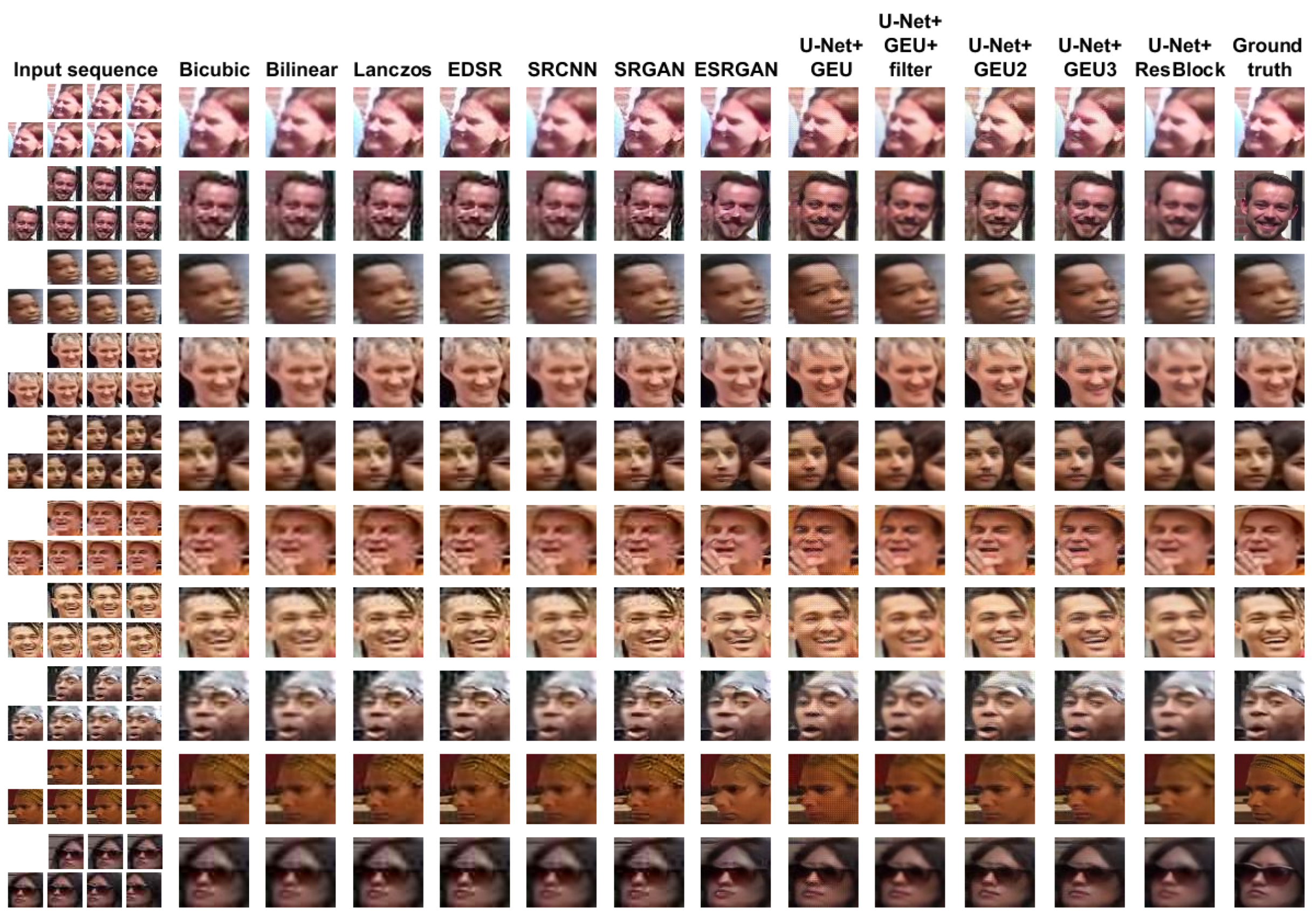

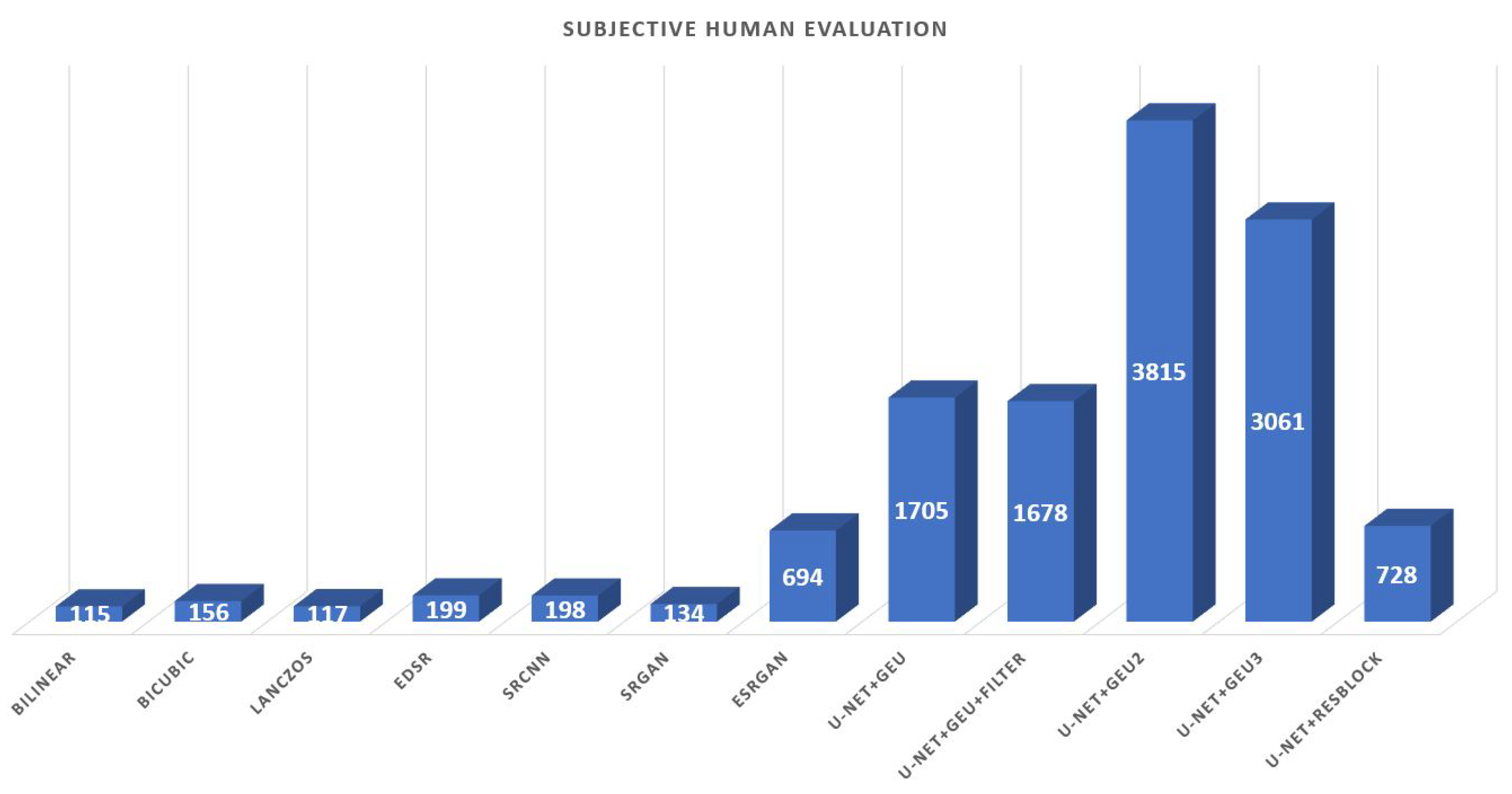

4.3. Questionnaire Results

5. Discussion

5.1. Dataset

5.2. Questionnaire

5.3. Results Comparison

5.4. Face Recognition Based Metrics

5.5. Benchmark and Leaderboard

5.6. Future Research

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| CelebA | Large-scale CelebFaces Attributes |
| CCTV | Closed circuit television |
| CNN | Convolutional Neural Network |
| CPBD | Cumulative Probability of Blur Detection |
| CVPR | Conference on Computer Vision and Pattern Recognition |
| DB | Database |
| DeepSUM | Deep neural network for Super-resolution of Unregistered Multitemporal images |
| EDSR | Enhanced Deep Super-Resolution Network |
| ESRGAN | Enhanced Super-Resolution Generative Adversarial Network |
| EDVR | Enhanced Deformable Convolutional Network |
| FERET | Face Recognition Technology |
| FR | Face Recognition |
| FRVSR | Frame-recurrent video super-resolution |
| FSRGAN | Face Super-Resolution Generative Adversarial Network |
| FSRNet | Face Super-Resolution Network |
| GAN | Generative Adversarial Network |
| GPU | Graphics Processing Unit |
| JNB | Just Noticeable Blur |
| LFW | Labeled Faces in the Wild |
| MLFDB | Multi-frame Labeled Faces Database |
| MSE | Mean Square Error |
| NN | Neural Network |
| PCD | Pyramid, Cascading and Deformable convolutions |
| PSNR | Peak signal-to-noise ratio |
| PubFig | Public Figures Face Database |
| PULSE | Self-Supervised Photo Upsampling via Latent Space Exploration of Generative Models |
| ReLU | Rectified Linear Unit |
| ResNet | Residual Network |
| ResBlock | Residual Block |
| SR | Super-Resolution |
| SRCNN | Super-Resolution Convolutional Neural Network |
| SRGAN | Super-Resolution Generative Adversarial Network |
| SSIM | Structural similarity |
| TSA | Temporal and Spatial Attention |
| UR-DGN | Ultra-resolution by discriminative generative network |
| UVT | Unpaired Video Translation |
| VSR | Video Super-Resolution |
| YOLO | You Only Look Once |

References

1. Hollis, M.E. Security or surveillance? Examination of CCTV camera usage in the 21st century. Criminol. Public Policy 2019, 18, 131–134.

2. Molini, A.B.; Valsesia, D.; Fracastoro, G.; Magli, E. DeepSUM: Deep neural network for Super-resolution of Unregistered Multitemporal images. IEEE Trans. Geosci. Remote Sens. 2019, 58, 3644–3656.

3. Salvetti, F.; Mazzia, V.; Khaliq, A.; Chiaberge, M. Multi-Image Super Resolution of Remotely Sensed Images Using Residual Attention Deep Neural Networks. Remote Sens. 2020, 12, 2207.

4. Zangeneh, E.; Rahmati, M.; Mohsenzadeh, Y. Low resolution face recognition using a two-branch deep convolutional neural network architecture. Expert Syst. Appl. 2020, 139, 112854.

5. Huang, G.B.; Mattar, M.; Berg, T.; Learned-Miller, E. Labeled Faces in the Wild: A Database for Studying Face Recognition in Unconstrained Environments; Technical Report 07-49; University of Massachusetts of Amherst: Amherst, MA, USA, October 2007.

6. Kumar, N.; Berg, A.C.; Belhumeur, P.N.; Nayar, S.K. Attribute and simile classifiers for face verification. In Proceedings of the 2009 IEEE 12th International Conference on Computer Vision, Kyoto, Japan, 27 September–4 October 2009; pp. 365–372.

7. Freeman, W.T.; Pasztor, E.C.; Carmichael, O.T. Learning low-level vision. Int. J. Comput. Vis. 2000, 40, 25–47.

8. Liu, Z.; Luo, P.; Wang, X.; Tang, X. Large-scale CelebFaces Attributes (CelebA) dataset. Retrieved August 2018, 15, 2018.

9. Wolf, L.; Hassner, T.; Maoz, I. Face recognition in unconstrained videos with matched background similarity. In Proceedings of the CVPR 2011, Colorado Springs, CO, USA, 20–25 June 2011; pp. 529–534.

10. O’Mahony, N.; Campbell, S.; Carvalho, A.; Harapanahalli, S.; Hernandez, G.V.; Krpalkova, L.; Riordan, D.; Walsh, J. Deep learning vs. traditional computer vision. In Proceedings of the Science and Information Conference, Leipzig, Germany, 1–4 September 2019; pp. 128–144.

11. Dong, C.; Loy, C.C.; He, K.; Tang, X. Image super-resolution using deep convolutional networks. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 38, 295–307.

12. Isola, P.; Zhu, J.Y.; Zhou, T.; Efros, A.A. Image-to-image translation with conditional adversarial networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1125–1134.

13. Ledig, C.; Theis, L.; Huszár, F.; Caballero, J.; Cunningham, A.; Acosta, A.; Aitken, A.; Tejani, A.; Totz, J.; Wang, Z.; et al. Photo-realistic single image super-resolution using a generative adversarial network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4681–4690.

14. Lim, B.; Son, S.; Kim, H.; Nah, S.; Mu Lee, K. Enhanced deep residual networks for single image super-resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 136–144.

15. Wang, X.; Yu, K.; Wu, S.; Gu, J.; Liu, Y.; Dong, C.; Qiao, Y.; Change Loy, C. ESRGAN: Enhanced super-resolution generative adversarial networks. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018.

16. Jolicoeur-Martineau, A. The relativistic discriminator: A key element missing from standard GAN. arXiv 2018, arXiv:1807.00734.

17. Li, P.; Prieto, L.; Mery, D.; Flynn, P.J. On low-resolution face recognition in the wild: Comparisons and new techniques. IEEE Trans. Inf. Forensics Secur. 2019, 14, 2000–2012.

18. Nguyen, K.; Fookes, C.; Sridharan, S.; Tistarelli, M.; Nixon, M. Super-resolution for biometrics: A comprehensive survey. Pattern Recognit. 2018, 78, 23–42.

19. Yu, X.; Porikli, F. Ultra-resolving face images by discriminative generative networks. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; pp. 318–333.

20. Chen, Y.; Tai, Y.; Liu, X.; Shen, C.; Yang, J. FSRNet: End-to-end learning face super-resolution with facial priors. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 2492–2501.

21. Kim, D.; Kim, M.; Kwon, G.; Kim, D.S. Progressive face super-resolution via attention to facial landmark. arXiv 2019, arXiv:1908.08239.

22. Menon, S.; Damian, A.; Hu, S.; Ravi, N.; Rudin, C. PULSE: Self-Supervised Photo Upsampling via Latent Space Exploration of Generative Models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TX, USA, 4 September 2020; pp. 2437–2445.

23. Kelvins–ESA’s Advanced Concepts, PROBA-V Super Resolution. 2019. Available online: https://kelvins.esa.int/proba-v-super-resolution (accessed on 20 August 2020).

24. Kawulok, M.; Benecki, P.; Piechaczek, S.; Hrynczenko, K.; Kostrzewa, D.; Nalepa, J. Deep learning for multiple-image super-resolution. IEEE Geosci. Remote. Sens. Lett. 2019, 17, 1062–1066.

25. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

26. Sajjadi, M.S.; Vemulapalli, R.; Brown, M. Frame-recurrent video super-resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 6626–6634.

27. Chu, M.; Xie, Y.; Mayer, J.; Leal-Taixé, L.; Thuerey, N. Learning temporal coherence via self-supervision for GAN-based video generation. arXiv 2018, arXiv:1811.09393.

28. Wang, X.; Chan, K.C.; Yu, K.; Dong, C.; Change Loy, C. EDVR: Video restoration with enhanced deformable convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 16–20 June 2019.

29. Ustinova, E.; Lempitsky, V. Deep multi-frame face super-resolution. arXiv 2017, arXiv:1709.03196.

30. Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

31. Geitgey, A. Face Recognition. Available online: https://github.com/ageitgey/face_recognition (accessed on 27 April 2020).

32. Schroff, F.; Kalenichenko, D.; Philbin, J. FaceNet: A unified embedding for face recognition and clustering. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 815–823.

33. Deudon, M.; Kalaitzis, A.; Goytom, I.; Arefin, M.R.; Lin, Z.; Sankaran, K.; Michalski, V.; Kahou, S.E.; Cornebise, J.; Bengio, Y. HighRes-net: Recursive Fusion for Multi-Frame Super-Resolution of Satellite Imagery. arXiv 2020, arXiv:2002.06460.

34. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; pp. 234–241.

35. Shi, W.; Caballero, J.; Huszár, F.; Totz, J.; Aitken, A.P.; Bishop, R.; Rueckert, D.; Wang, Z. Real-time single image and video super-resolution using an efficient sub-pixel convolutional neural network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1874–1883.

36. Zhao, H.; Gallo, O.; Frosio, I.; Kautz, J. Loss functions for image restoration with neural networks. IEEE Trans. Comput. Imaging 2016, 3, 47–57.

37. Ayyoubzadeh, S.M.; Wu, X. Adaptive Loss Function for Super Resolution Neural Networks Using Convex Optimization Techniques. arXiv 2020, arXiv:2001.07766.

38. Bare, B.; Yan, B.; Ma, C.; Li, K. Real-time video super-resolution via motion convolution kernel estimation. Neurocomputing 2019, 367, 236–245.

39. Johnson, J.; Alahi, A.; Fei-Fei, L. Perceptual losses for real-time style transfer and super-resolution. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; pp. 694–711.

40. Odena, A.; Dumoulin, V.; Olah, C. Deconvolution and checkerboard artifacts. Distill 2016, 1, e3.

41. Mohammadi, P.; Ebrahimi-Moghadam, A.; Shirani, S. Subjective and objective quality assessment of image: A survey. arXiv 2014, arXiv:1406.7799.

42. Poobathy, D.; Chezian, R.M. Edge detection operators: Peak signal to noise ratio based comparison. IJ Image Graph. Signal Process. 2014, 10, 55–61.

43. Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

44. Narvekar, N.D.; Karam, L.J. A no-reference perceptual image sharpness metric based on a cumulative probability of blur detection. In Proceedings of the 2009 International Workshop on Quality of Multimedia Experience, San Diego, CA, USA, 29–31 July 2009; pp. 87–91.

45. Ferzli, R.; Karam, L.J. A no-reference objective image sharpness metric based on just-noticeable blur and probability summation. In Proceedings of the 2007 IEEE International Conference on Image Processing, San Antonio, TX, USA, 16–19 September 2007; Volume 3, pp. III-445–III-448.

46. Ferzli, R.; Karam, L.J. A no-reference objective image sharpness metric based on the notion of just noticeable blur (JNB). IEEE Trans. Image Process. 2009, 18, 717–728.

47. Miao, J.; Niu, L. A survey on feature selection. Procedia Comput. Sci. 2016, 91, 919–926.

| Improvement | LFW | FERET | PubFig | CelebA | Youtube DB |
|---|---|---|---|---|---|
| Number of samples | ✓ | ✓ | × | × | × |
| Number of identities | × | ✓ | ✓ | × | ✓ |
| Samples as sequences | ✓ | ✓ | ✓ | ✓ | × |
| Super-resolution purpose | ✓ | ✓ | ✓ | ✓ | ✓ |
| Variability (avoid bias) | ✓ | ✓ | × | ✓ | × |

| Method | SSIM | MSE | PSNR | Sharpness | FR Rate (All) | FR Rate (∩) | FR Failed |

|---|---|---|---|---|---|---|---|

| bicubic | 0.806 | 227.196 | 25.654 | 0.084 | 0.476 | 0.473 | 674/2500 |

| bilinear | 0.809 | 217.368 | 25.769 | 0.158 | 0.471 | 0.468 | 693/2500 |

| lanczos | 0.802 | 238.247 | 25.504 | 0.024 | 0.483 | 0.480 | 700/2500 |

| EDSR | 0.780 | 286.999 | 24.687 | −0.025 | 0.495 | 0.494 | 701/2500 |

| SRCNN | 0.815 | 216.940 | 25.771 | 0.209 | 0.461 | 0.456 | 643/2500 |

| SRGAN | 0.760 | 320.981 | 24.234 | −0.078 | 0.511 | 0.510 | 771/2500 |

| ESRGAN | 0.788 | 281.192 | 24.604 | −0.016 | 0.488 | 0.485 | 470/2500 |

| U-Net+GEU | 0.760 | 276.459 | 24.383 | −0.129 | 0.507 | 0.503 | 317/2500 |

| U-Net+GEU+filter | 0.815 | 234.552 | 25.196 | 0.239 | 0.464 | 0.458 | 394/2500 |

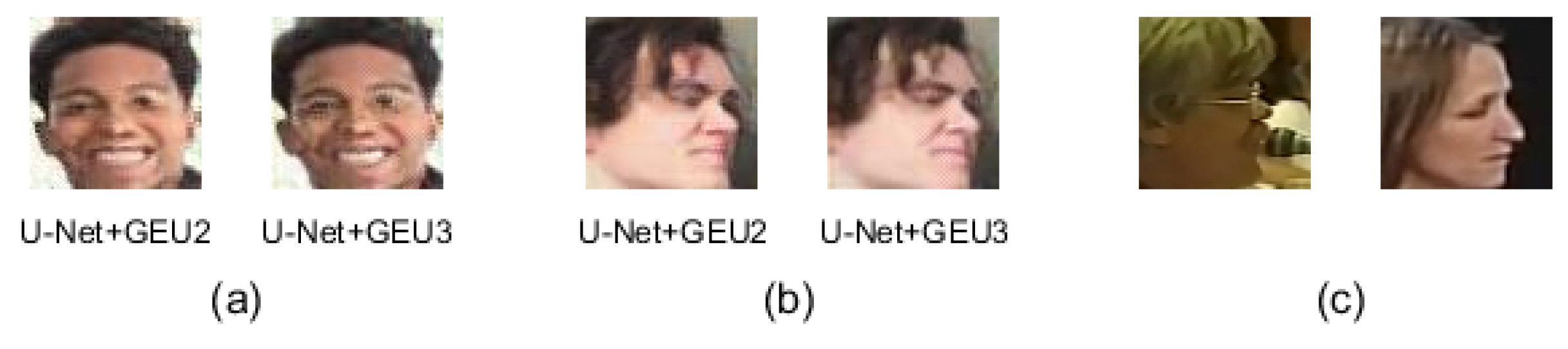

| U-Net+GEU2 | 0.799 | 256.511 | 24.878 | 0.031 | 0.472 | 0.467 | 259/2500 |

| U-Net+GEU3 | 0.799 | 243.433 | 25.135 | 0.004 | 0.471 | 0.466 | 270/2500 |

| U-Net+ResBlock | 0.812 | 239.114 | 25.141 | 0.130 | 0.468 | 0.463 | 581/2500 |

| Method | SSIM | MSE | PSNR | Sharp. | FR Rate (∩) | FR Failed | Sub. ev. | Sum1 | Sum2 |
|---|---|---|---|---|---|---|---|---|---|
| bicubic | 0.164 | 0.099 | 0.076 | 0.340 | 0.315 | 0.811 | 1 | 1.805 | 2.805 |
| bilinear | 0.109 | 0.004 | 0.001 | 0.655 | 0.222 | 0.848 | 0.989 | 1.839 | 2.828 |
| lanczos | 0.236 | 0.205 | 0.174 | 0.085 | 0.444 | 0.861 | 0.999 | 2.005 | 3.004 |
| EDSR | 0.636 | 0.673 | 0.705 | 0.703 | 0.089 | 0.863 | 0.977 | 3.669 | 4.646 |
| SRCNN | 0 | 0 | 0 | 0.872 | 0 | 0.750 | 0.978 | 1.622 | 2.600 |
| SRGAN | 1 | 1 | 1 | 1 | 0.315 | 1 | 0.995 | 5.315 | 6.310 |
| ESRGAN | 0.491 | 0.618 | 0.759 | 0.051 | 0.537 | 0.412 | 0.844 | 2.868 | 3.712 |
| U-Net+GEU | 0 | 0.572 | 0.903 | 0.532 | 0.870 | 0.113 | 0.570 | 2.990 | 3.560 |
| U-Net+GEU+filter | 0 | 0.169 | 0.374 | 1 | 0.037 | 0.246 | 0.578 | 1.844 | 2.422 |
| U-Net+GEU2 | 0.281 | 0.380 | 0.581 | 0.115 | 0.204 | 0 | 0 | 1.571 | 1.571 |
| U-Net+GEU3 | 0.281 | 0.255 | 0.414 | 0 | 0.185 | 0.021 | 0.204 | 1.166 | 1.370 |
| U-Net+ResBlock | 0.055 | 0.213 | 0.410 | 0.536 | 0.130 | 0.629 | 0.834 | 1.973 | 2.807 |
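
The normalized scores in the table above appear consistent with per-column min-max scaling in which 0 marks the best method and 1 the worst; this is inferred from the numbers rather than stated in the surviving text. The sketch below reproduces the MSE column from the raw MSE values reported in the objective results table. The function name `normalize` and its `higher_is_better` flag are hypothetical.

```python
def normalize(values, higher_is_better=False):
    """Min-max scale a metric column so that 0 is the best and 1 the worst value."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # all methods tie on this metric
    if higher_is_better:
        return [(hi - v) / (hi - lo) for v in values]
    return [(v - lo) / (hi - lo) for v in values]


# Raw MSE per method, copied from the objective results table (lower is better).
RAW_MSE = {
    "bicubic": 227.196, "bilinear": 217.368, "lanczos": 238.247,
    "EDSR": 286.999, "SRCNN": 216.940, "SRGAN": 320.981,
    "ESRGAN": 281.192, "U-Net+GEU": 276.459, "U-Net+GEU+filter": 234.552,
    "U-Net+GEU2": 256.511, "U-Net+GEU3": 243.433, "U-Net+ResBlock": 239.114,
}

# Map each method to its normalized MSE score.
NORMALIZED_MSE = dict(zip(RAW_MSE, normalize(list(RAW_MSE.values()))))
```

Rounded to three decimals, `NORMALIZED_MSE["bicubic"]` is 0.099 and `NORMALIZED_MSE["EDSR"]` is 0.673, matching the MSE column. For the rows checked, Sum1 equals the sum of the six objective columns and Sum2 adds the subjective evaluation (for bicubic, 1.805 + 1 = 2.805).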

| Threshold | 0.6 | 0.5 | 0.45 | 0.4 | 0.35 |
|---|---|---|---|---|---|
| different people | 76 | 2378 | 6651 | 10,822 | 13,369 |

| Method | Differences |
|---|---|
| bicubic | 12/415 |
| bilinear | 9/434 |
| lanczos | 16/441 |
| EDSR | 18/442 |
| SRCNN | 11/384 |
| SRGAN | 15/512 |
| ESRGAN | 35/211 |
| U-Net+GEU | 38/58 |
| U-Net+GEU+filter | 16/135 |
| U-Net+GEU3 | 55/11 |
| U-Net+ResBlock | 10/322 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Rajnoha, M.; Mezina, A.; Burget, R. Multi-Frame Labeled Faces Database: Towards Face Super-Resolution from Realistic Video Sequences. Appl. Sci. 2020, 10, 7213. https://doi.org/10.3390/app10207213
