1. Introduction

Skin-related diseases such as skin cancer are common [1], and non-invasive methods for diagnosing them are needed. According to Le et al. [2], melanoma incidence is increasing. Between 2009 and 2016, the incidence was 491.1 cases per hundred thousand people, nearly 64% higher than in the period 1999–2008. The difference is even starker at older ages: in people older than 70 years, the incidence nearly doubled, from 1278.1 to 2424.9 [2]. The societal cost of melanoma follows the same trend, and early detection and accurate treatment lower these costs and improve patients' life expectancy. Hyperspectral imaging is one way to enable early detection and to guide treatment. Retrieving the physical parameters of skin with a hyperspectral camera or spectrometer and machine learning (ML) provides a non-invasive method of measuring chromophore concentrations and other parameters in the skin [3].

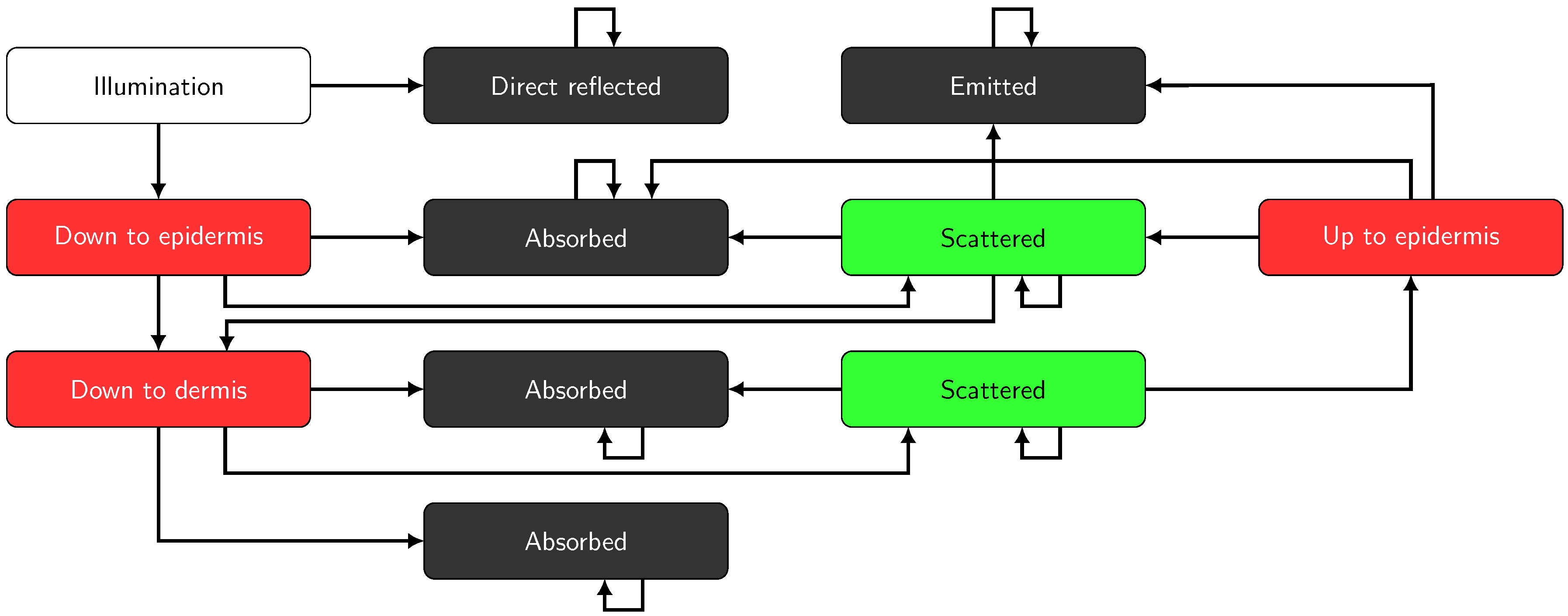

One hindrance to developing ML models for clinical use is that the required training datasets are large and difficult to produce. Ethical standards for research involving human subjects make such data hard to obtain, and the work requires a team of medical staff in addition to computer science specialists. One way to avoid some of these pitfalls is to use mathematical modeling to produce training data for ML algorithms. In this study, we use a stochastic model that is partially of our own design.
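The simulate-then-invert idea can be sketched as follows: draw parameter vectors from prescribed ranges, run a forward model to obtain spectra, and keep the (spectrum, parameters) pairs as training data for an inverse regressor. This is a minimal sketch under loud assumptions: `toy_forward_model`, the parameter names, and their ranges are hypothetical placeholders and are not the stochastic skin model used in this study.

```python
import math
import random
from typing import Callable, Dict, List, Tuple

# Hypothetical parameters and sampling ranges (assumptions, not the study's values).
PARAM_RANGES: Dict[str, Tuple[float, float]] = {
    "melanosome_vf": (0.01, 0.40),
    "hemoglobin_vf": (0.001, 0.10),
}


def toy_forward_model(params: Dict[str, float], wavelengths: List[float]) -> List[float]:
    """Hypothetical stand-in for a skin reflectance model (NOT the stochastic model).

    Reflectance is exp(-absorption) with absorption > 0, so it lies in (0, 1)."""
    spectrum = []
    for wl in wavelengths:
        absorption = (params["melanosome_vf"] * (700.0 / wl) ** 3
                      + params["hemoglobin_vf"] * math.exp(-((wl - 560.0) / 40.0) ** 2))
        spectrum.append(math.exp(-absorption))
    return spectrum


def simulate_dataset(
    n: int,
    forward: Callable[[Dict[str, float], List[float]], List[float]],
    ranges: Dict[str, Tuple[float, float]],
    wavelengths: List[float],
    seed: int = 0,
) -> Tuple[List[List[float]], List[List[float]]]:
    """Sample n parameter vectors uniformly, simulate their spectra, return (X, y)."""
    rng = random.Random(seed)
    X: List[List[float]] = []
    y: List[List[float]] = []
    for _ in range(n):
        params = {name: rng.uniform(lo, hi) for name, (lo, hi) in ranges.items()}
        X.append(forward(params, wavelengths))        # model input: a simulated spectrum
        y.append([params[name] for name in ranges])   # regression targets, fixed key order
    return X, y


wavelengths = [float(wl) for wl in range(450, 951, 10)]  # 51 hypothetical bands
X, y = simulate_dataset(100, toy_forward_model, PARAM_RANGES, wavelengths)
```

Any regressor (CNN, MLP, lasso, and so on) can then be trained to map `X` back to `y`; the quality of the inversion is bounded by how well the forward model represents real skin.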

In our previous research [4,5], we used convolutional neural networks (CNNs) for stochastic model inversion. In [4], we used a CNN to invert the stochastic model for leaf optical properties (SLOP) [6], which inspired the stochastic model used in this study, and found the inversion successful. In [5], we compared the stochastic model used in this study with a Kubelka–Munk model [7] in terms of invertibility and usefulness for physical parameter retrieval, inverting both with a CNN. It has since occurred to us that it would be useful to verify the applicability of CNNs to model inversion by comparing them with other ML algorithms.

ML algorithms have been compared many times in different hyperspectral imaging scenarios. For example, one study compared ML algorithms for classifying the stage of anthracnose disease in strawberry foliage [8]. It compared the spectral angle mapper, stepwise discriminant analysis, and the authors' own spectral index, called the simple slope measure. These algorithms did not perform well in the task, with classification accuracy barely exceeding 80%. Another study found a least mean squares classifier to perform best in classifying a small batch of lamb muscles, compared with six other machine learning algorithms, including support vector machine (SVM) approaches, simple neural networks, a nearest neighbor algorithm, and linear discriminant analysis [9]. The algorithms were also tested with principal component analysis (PCA) for dimensionality reduction of the training and testing data, and no statistically significant differences were found in the classification results with or without PCA.

Gewali et al. [10] have written a survey of machine learning methods for hyperspectral remote sensing tasks. One of these tasks was the retrieval of (bio)physical or (bio)chemical parameters from hyperspectral images, for which they found three articles using SVMs, five using latent linear models such as PCA, four using ensemble learning, and five using Gaussian processes. Surprisingly, they found no articles in which deep learning was used for retrieval.

What distinguishes the studies [8,9,10] from our work is that they do not apply mathematical modeling before applying machine learning. In contrast, the following studies by Liang et al. and Vyas et al. [11,12,13] are similar to our work, differing mainly in the mathematical and machine learning models employed.

Liang et al. [11] used simulated PROSAIL data to select optimal vegetation indices for predicting leaf and canopy chlorophyll content from an inverted PROSAIL model. For the inversion, they used an SVM algorithm and a random forest (RF) algorithm, and found RF to be better. The results were promising, as the coefficient of determination (R²) between measured and predicted data was 0.96. However, their use of vegetation indices makes their results difficult to compare with ours.
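For reference, the coefficient of determination is conventionally defined as below, where y_i are the n measured values, ŷ_i the corresponding predicted values, and ȳ the mean of the measured values. We assume Liang et al. used this standard form; the exact variant is not stated here.

```latex
R^2 = 1 - \frac{\sum_{i=1}^{n} \left(y_i - \hat{y}_i\right)^2}{\sum_{i=1}^{n} \left(y_i - \bar{y}\right)^2}
```

An R² of 0.96 therefore means that the residual sum of squares is 4% of the total variance of the measured values around their mean.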

Vyas et al. [12,13] have done the work most similar to ours. In [12], the Kubelka–Munk (KM) model was inverted using a k-nearest neighbors (k-NN) method with different distance metrics and a support vector regressor. The inversion parameters were melanosome volume fraction, collagen volume fraction, hemoglobin oxygenation percentage, and blood volume fraction. In the synthetic experiment, they found k-NN with the spectral angle distance to have the smallest mean absolute errors. In the in vivo experiment, they showed that the predicted parameters produce modeled spectra strikingly similar to the measured spectra, which they used as evidence of successful inversion.
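A minimal sketch of k-NN regression with the spectral angle distance is shown below. The spectral angle compares only the shape of two spectra, not their overall brightness. The choice of k, the unweighted averaging, and the toy spectra and targets are assumptions for illustration; they are not taken from Vyas et al.

```python
import math
from typing import List, Sequence


def spectral_angle(a: Sequence[float], b: Sequence[float]) -> float:
    """Angle in radians between two spectra viewed as vectors.

    Scaling a spectrum by a positive constant does not change the angle."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    cos = max(-1.0, min(1.0, dot / norm))  # clamp rounding drift before acos
    return math.acos(cos)


def knn_regress(train_X: List[Sequence[float]], train_y: List[Sequence[float]],
                query: Sequence[float], k: int = 3) -> List[float]:
    """Predict each target as the unweighted mean over the k training spectra
    with the smallest spectral angle to the query."""
    order = sorted(range(len(train_X)), key=lambda i: spectral_angle(train_X[i], query))
    nearest = order[:k]
    n_targets = len(train_y[0])
    return [sum(train_y[i][t] for i in nearest) / len(nearest) for t in range(n_targets)]


# Toy library of three spectra with one target each (hypothetical values).
train_X = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
train_y = [[0.1], [0.5], [0.9]]
print(knn_regress(train_X, train_y, [0.0, 5.0, 0.1], k=1))  # prints [0.5]
```

Because the query is matched on spectral shape, a uniformly brighter or darker measurement of the same tissue maps to the same neighbors, which is one reason this distance suits reflectance spectra.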

In their other study [13], the KM model was inverted using an SVM approach, with skin thickness as the inversion parameter. The linear correlation coefficient (r) between the inverted KM predictions and ultrasound measurements of skin thickness was 0.999. In further experiments, they found the measured and modeled spectra nearly indistinguishable, although they did not disclose how the other model parameters were chosen. These studies differ from ours in the model being inverted: we are looking for useful alternatives to the KM model.

The objective of our study is to find a good way to invert the stochastic model of skin optical properties. Our hypothesis is that the CNN will outperform the other algorithms, as CNNs have been shown to perform well in similar tasks [14,15,16,17].

In Section 2, we describe our data, the methods we use (including the stochastic model and the different machine learning algorithms), and the metrics we use to evaluate the results. In Section 3, we present the experimental results, and in Section 4, we compare our results with previous research, discuss the strengths and weaknesses of our work, and outline directions for future work. Section 5 concludes the work.

3. Results

The six inversions had variable success with the simulated data (Figure 3, Figure 4, and Table 12). The CNN and CNNV2 experiments (Table 12) performed best, with the highest correlation coefficients, between 0.93 and 0.96 (mean 0.95), and the lowest errors: RMSE 0.09–0.12 (mean 0.11), MSE 0.01–0.02 (mean 0.01), and MAE 0.08–0.09 (mean 0.09). The MLP also performed well. It outscored the CNN on some regression targets by some metrics and was slightly poorer on others. Its correlation coefficients were between 0.79 and 0.95 (mean 0.91), RMSEs 0.09–0.18 (mean 0.12), MSEs 0.01–0.03 (mean 0.02), and MAEs 0.07–0.14 (mean 0.09). Based on visual interpretation of Figure 4, the second CNN experiment appears to be the best of the neural network regressors, as the correlation seems strongest there.
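The four evaluation metrics reported above can be computed per regression target as in the following sketch. It uses the standard definitions; the library and any averaging the authors used are not stated in this section, and the toy values are hypothetical. Since the targets are normalized to between zero and one, the errors read directly as fractions of each parameter's range.

```python
import math
from typing import Dict, Sequence


def regression_metrics(y_true: Sequence[float], y_pred: Sequence[float]) -> Dict[str, float]:
    """Pearson r, MSE, RMSE, and MAE between true and predicted values of one target."""
    n = len(y_true)
    if n == 0 or n != len(y_pred):
        raise ValueError("inputs must be non-empty and of equal length")
    errors = [p - t for t, p in zip(y_true, y_pred)]
    mse = sum(e * e for e in errors) / n
    mae = sum(abs(e) for e in errors) / n
    mean_t = sum(y_true) / n
    mean_p = sum(y_pred) / n
    cov = sum((t - mean_t) * (p - mean_p) for t, p in zip(y_true, y_pred))
    # Root sums of squared deviations; the 1/n factors cancel in r.
    spread_t = math.sqrt(sum((t - mean_t) ** 2 for t in y_true))
    spread_p = math.sqrt(sum((p - mean_p) ** 2 for p in y_pred))
    # r is undefined when either series is constant.
    r = cov / (spread_t * spread_p) if spread_t > 0 and spread_p > 0 else float("nan")
    return {"r": r, "mse": mse, "rmse": math.sqrt(mse), "mae": mae}


print(regression_metrics([0.0, 0.5, 1.0], [0.1, 0.4, 1.0]))
```

Note that MSE is simply RMSE squared, so an RMSE range of 0.09–0.12 corresponds to MSE values of roughly 0.01, consistent with the figures above.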

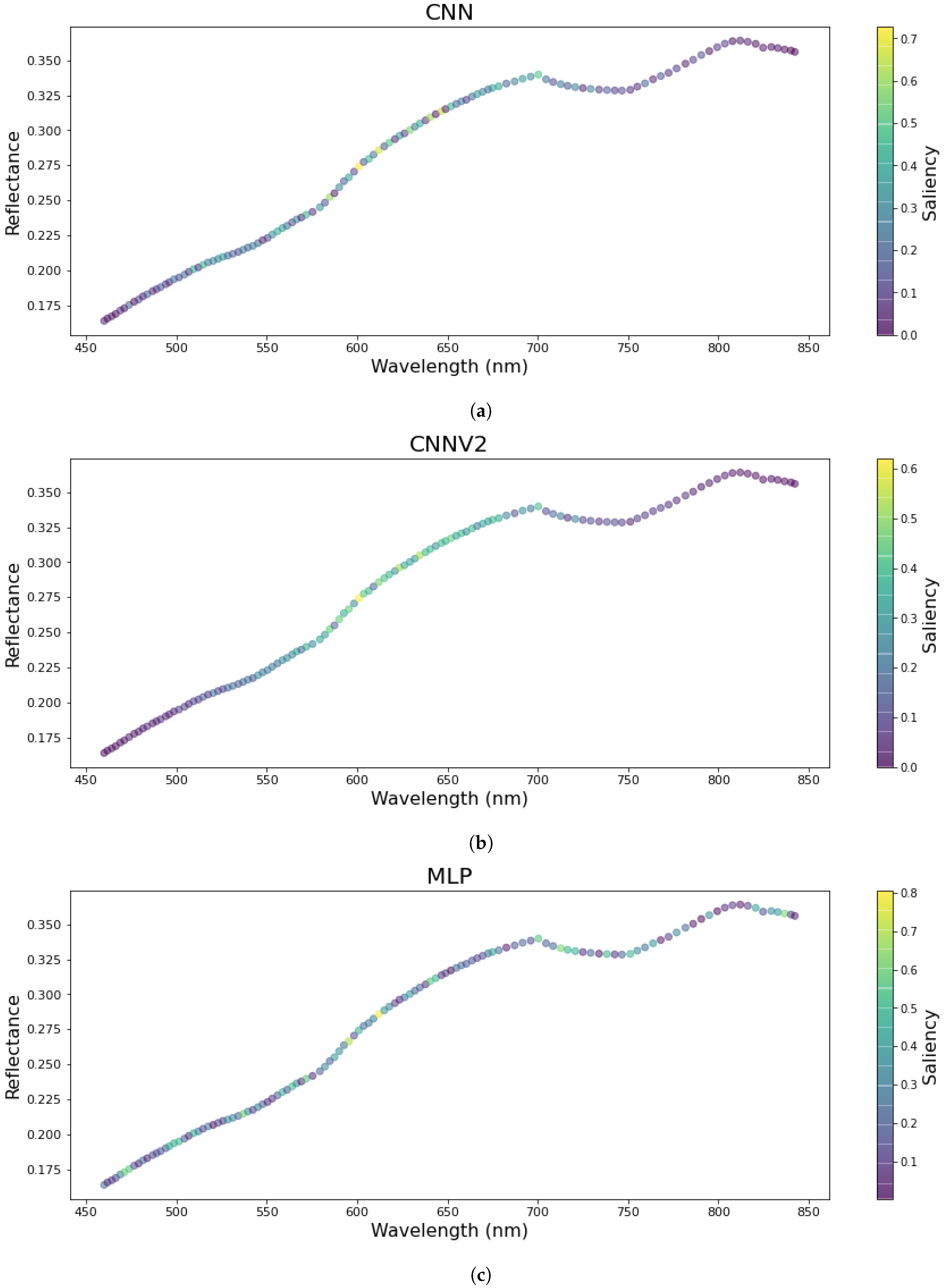

In the saliency maps of the CNN and MLP regressors (Figure 3), the CNN experiments show similar areas of interest. The interval between 550 nm and 700 nm seems to be the most important for predicting the target variables. In the MLP experiment, the same interval seems useful, but the map additionally highlights some areas at the ends of the spectrum.
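Saliency maps for neural networks are commonly computed as the gradient of the model output with respect to each input band, obtained by backpropagation; the exact saliency method used here is not described in this section. The sketch below approximates the same per-band sensitivity with central finite differences, which works for any black-box scalar model. The `toy_model` is hypothetical and stands in for one regression output of a trained network.

```python
from typing import Callable, List, Sequence


def finite_difference_saliency(model: Callable[[Sequence[float]], float],
                               spectrum: Sequence[float],
                               eps: float = 1e-4) -> List[float]:
    """Approximate |d model(x) / d x_i| for every spectral band i
    using central differences around the given spectrum."""
    saliency = []
    for i in range(len(spectrum)):
        up = list(spectrum)
        down = list(spectrum)
        up[i] += eps
        down[i] -= eps
        saliency.append(abs(model(up) - model(down)) / (2.0 * eps))
    return saliency


# Hypothetical scalar "regressor" that uses bands 0 and 2 but ignores band 1.
def toy_model(x: Sequence[float]) -> float:
    return 3.0 * x[0] - 0.5 * x[2]


print(finite_difference_saliency(toy_model, [0.2, 0.3, 0.4]))  # approx [3.0, 0.0, 0.5]
```

Bands the model ignores get zero saliency, while bands with large influence on the prediction stand out, which is how an interval such as 550–700 nm becomes visible in a saliency map.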

The lasso and stochastic gradient descent regressor (SGDR) showed similar results. As Figure 4 shows, their correlations are virtually identical, and the points scatter to nearly the same places; differences between lasso and SGDR can only be observed by examining individual points. Lasso and SGDR had correlation coefficients between 0.55 and 0.88 (mean 0.71 for both), RMSEs between 0.14 and 0.24 (mean 0.20 for both), MSEs between 0.02 and 0.06 (mean 0.04 for both), and MAEs between 0.11 and 0.20 (mean 0.16 for both).
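One plausible reason the two regressors behave almost identically is that both fit the same linear form, ŷ = w·x + b, and differ only in the penalty and in how the fit is optimized. The sketch below is a from-scratch illustration, not scikit-learn's implementation, and does not reproduce the study's settings (which this section does not give): it fits a linear model by per-sample SGD with an optional L1 (lasso-style) penalty applied through subgradient steps. The toy data are hypothetical.

```python
import random
from typing import List, Sequence, Tuple


def sgd_linear_regression(X: List[Sequence[float]], y: Sequence[float],
                          lr: float = 0.05, alpha: float = 0.0,
                          epochs: int = 200, seed: int = 0) -> Tuple[List[float], float]:
    """Fit y ~ w.x + b by per-sample SGD on 0.5*error^2 plus alpha*|w|_1.

    With alpha > 0 this targets a lasso-style objective via subgradient steps."""
    rng = random.Random(seed)
    w = [0.0] * len(X[0])
    b = 0.0
    order = list(range(len(X)))
    for _ in range(epochs):
        rng.shuffle(order)
        for i in order:
            err = sum(wj * xj for wj, xj in zip(w, X[i])) + b - y[i]
            for j in range(len(w)):
                sign = (w[j] > 0) - (w[j] < 0)  # subgradient of |w_j| (0 at w_j = 0)
                w[j] -= lr * (err * X[i][j] + alpha * sign)
            b -= lr * err
    return w, b


# Noise-free toy data on [0, 1] with y = 2x + 1 (hypothetical).
X = [[k / 10] for k in range(11)]
y = [2 * x[0] + 1 for x in X]
w_plain, b_plain = sgd_linear_regression(X, y)
w_l1, b_l1 = sgd_linear_regression(X, y, alpha=0.05)
print(w_plain, b_plain)  # close to [2.0] and 1.0
print(w_l1, b_l1)        # slope shrunk below 2 by the L1 penalty
```

Whatever the optimizer, the result is a single linear map from spectrum to parameter, so two well-converged linear fits will tend to place their predictions in nearly the same spots, whereas CNNs and MLPs can represent nonlinear relationships.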

The worst predictor was the linear support vector regressor (LSVR). It was the worst on average by all metrics, but for hemoglobin volume fraction and epidermis thickness it appears marginally better than lasso and SGDR. Its correlation coefficients were between 0.59 and 0.68 (mean 0.64), RMSEs between 0.22 and 0.24 (mean 0.23), MSEs between 0.05 and 0.06 (mean 0.05), and MAEs between 0.16 and 0.19 (mean 0.18).
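For context, a linear SVR also fits a linear map but trains it with a different loss: the epsilon-insensitive loss sketched below, combined with an L2 penalty on the weights (not shown). Residuals smaller than epsilon cost nothing, and larger residuals cost linearly. The epsilon and regularization values used in the study are not stated in this section; epsilon = 0.1 here is an arbitrary illustrative value.

```python
from typing import Sequence


def epsilon_insensitive_loss(y_true: Sequence[float], y_pred: Sequence[float],
                             epsilon: float = 0.1) -> float:
    """Mean epsilon-insensitive loss: residuals inside +/- epsilon cost nothing,
    larger residuals cost |residual| - epsilon."""
    return sum(max(0.0, abs(t - p) - epsilon) for t, p in zip(y_true, y_pred)) / len(y_true)


print(epsilon_insensitive_loss([0.0, 0.0, 0.0], [0.05, 0.3, -0.2]))  # about 0.1
```

On targets normalized to [0, 1], an epsilon that is large relative to the target spread lets the fit ignore small errors altogether, so this hyperparameter directly affects how precise an LSVR's predictions can become.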

Overall, the predictions were least accurate for hemoglobin volume fraction and most accurate for dermis thickness. Melanosome volume fraction was learned relatively easily by all regressors, whereas for epidermis thickness there is a clear divide between the CNN and MLP regressors and the other regressors: the neural network models give accurate predictions, which the other types of models seem almost unable to achieve.

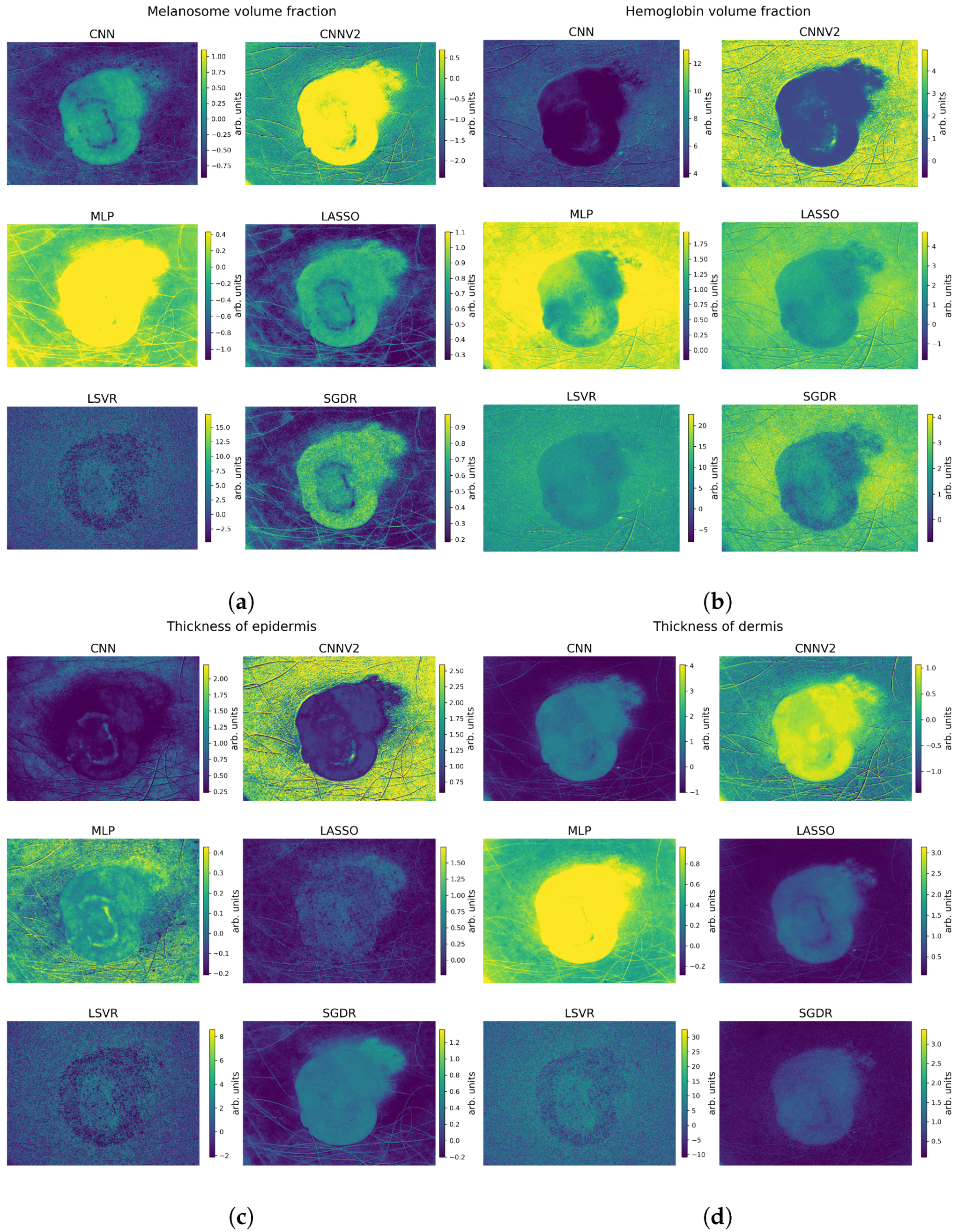

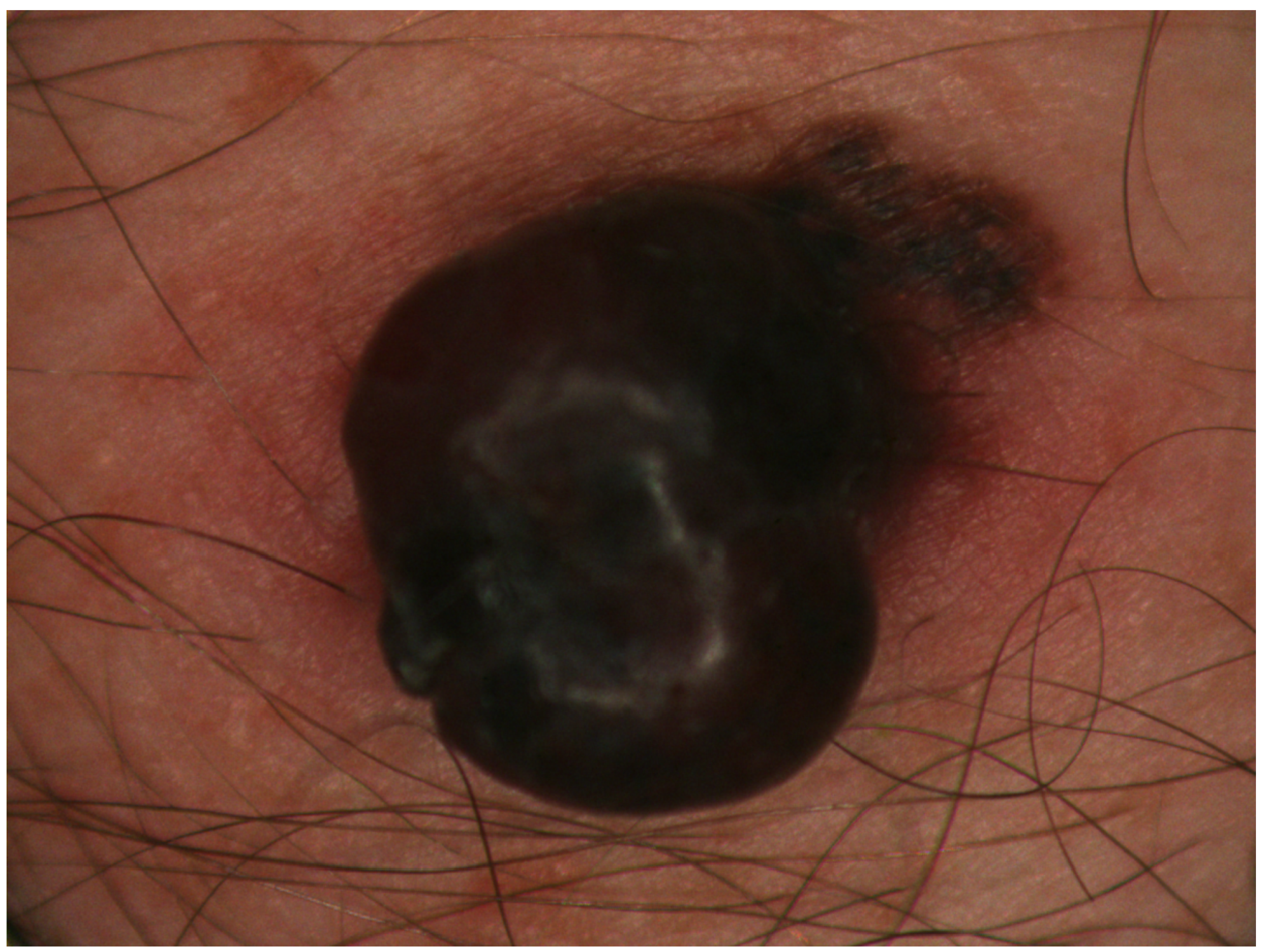

In the test with measured hyperspectral data (Figure 5), the details of the lesions are most easily visible in the CNN experiments. In the MLP, lasso, and LSVR experiments, the epidermis thickness is poorly visible due to noise. Judging from Figure 5, the fourth-best model seems to be the SGDR, as the details of the lesions are somewhat visible.

4. Discussion

The purpose of this study was to investigate how different machine learning approaches perform in the inversion task of predicting stochastic model parameters from spectral input data. The present results suggest that the most accurate models are obtained with the CNN method. The performance of all the trained models could likely be improved by further parameter tuning or by increasing the amount of data, but it is unlikely that any one model would improve at a rate vastly different from the others. These results were expected, as CNNs have been shown to excel in signal processing tasks, which are essentially analogous to the one-dimensional spectral data used in this study.
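The analogy with signal processing rests on weight sharing: a convolution layer slides the same small kernel along the wavelength axis, so a local feature such as a rising slope is detected wherever it occurs in the spectrum. The sketch below shows a single such filter followed by a ReLU on a toy spectrum. The kernel values are hypothetical; a trained CNN learns many kernels and stacks several layers.

```python
from typing import List, Sequence


def conv1d_relu(signal: Sequence[float], kernel: Sequence[float],
                bias: float = 0.0) -> List[float]:
    """'Valid' 1D cross-correlation (what a CNN convolution layer computes)
    followed by a ReLU activation. The same kernel is applied at every position."""
    k = len(kernel)
    out = []
    for i in range(len(signal) - k + 1):
        s = sum(signal[i + j] * kernel[j] for j in range(k)) + bias
        out.append(max(0.0, s))
    return out


# Hypothetical slope-detecting kernel: responds wherever the spectrum rises.
print(conv1d_relu([0.0, 1.0, 3.0, 3.0, 1.0], [-1.0, 0.0, 1.0]))  # [3.0, 2.0, 0.0]
```

An MLP, by contrast, has a separate weight for every band and must learn the same local pattern independently at each position, which is one intuition for why the CNN handles spectral data more efficiently.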

The measured correlation coefficients show that the CNN is as close to perfect as can reasonably be expected without overfitting. However, these results were achieved with simulated data, and testing with measured biophysical parameters is yet to be carried out. The error metrics are similarly encouraging: the CNN MAE was at most 0.09, less than a tenth of the range (all true target parameters were normalized to between zero and one), and the CNN RMSE was at most 0.12. If the errors are similar in further testing with measured data, the model would be ready for in vivo clinical testing.
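Because all targets were normalized to between zero and one, an MAE or RMSE on that scale is a fraction of each parameter's range and can be converted back to physical units by multiplying by the range. The sketch assumes simple min-max scaling over known bounds; the exact normalization and parameter ranges are not given in this section, and the range used below is hypothetical.

```python
from typing import List, Sequence


def min_max_normalize(values: Sequence[float], lo: float, hi: float) -> List[float]:
    """Map values from [lo, hi] to [0, 1]."""
    if hi <= lo:
        raise ValueError("hi must be greater than lo")
    return [(v - lo) / (hi - lo) for v in values]


def error_in_original_units(normalized_error: float, lo: float, hi: float) -> float:
    """An MAE or RMSE on the [0, 1] scale times the range gives the error in original units.

    The shift lo cancels in differences, so only the range (hi - lo) matters."""
    return normalized_error * (hi - lo)


# Hypothetical range for a volume fraction sampled between 0.01 and 0.41.
print(min_max_normalize([0.01, 0.21, 0.41], 0.01, 0.41))  # approx [0.0, 0.5, 1.0]
print(error_in_original_units(0.09, 0.01, 0.41))          # approx 0.036
```

Reporting errors in original units in this way would make it easier to judge whether a given accuracy is clinically meaningful for each parameter.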

Based on the results with the measured hyperspectral data, the stochastic skin model used here seems to represent the optical properties of the skin quite well. The CNN predictors in Figure 5 show the same general pattern as Figure 6, and at least the melanosome volume fraction is predicted to be higher in the area of the lesion, which is clearly correct based on Figure 6. However, these experiments provide no information on the absolute correctness of the model, as we did not have measurements of the skin biophysical parameters to compare with Figure 5.

Compared with the previous research by Vyas et al. [12,13], our correlation results are slightly inferior. They found a nearly perfect correlation coefficient (r = 0.999) between measured and estimated skin thickness, whereas our best correlation is 0.96. However, our goal was different: we set out to find the best inversion algorithm rather than the best possible inversion. In future studies, we hope to build a more robust parameter retrieval system with a perfected CNN model.

In future research, the stochastic model and CNN combination should be thoroughly tested with measured hyperspectral data and measured biophysical parameters. The goals of the testing could include finding the best CNN estimator by optimizing the absolute and relative accuracy of the predictions with respect to the model. Our ultimate goal is to obtain a comprehensive model for predicting skin optical properties and its inverse function for predicting skin biophysical parameters.