Machine-Vision-Based Algorithm for Blockage Recognition of Jittering Sieve in Corn Harvester

Abstract

1. Introduction

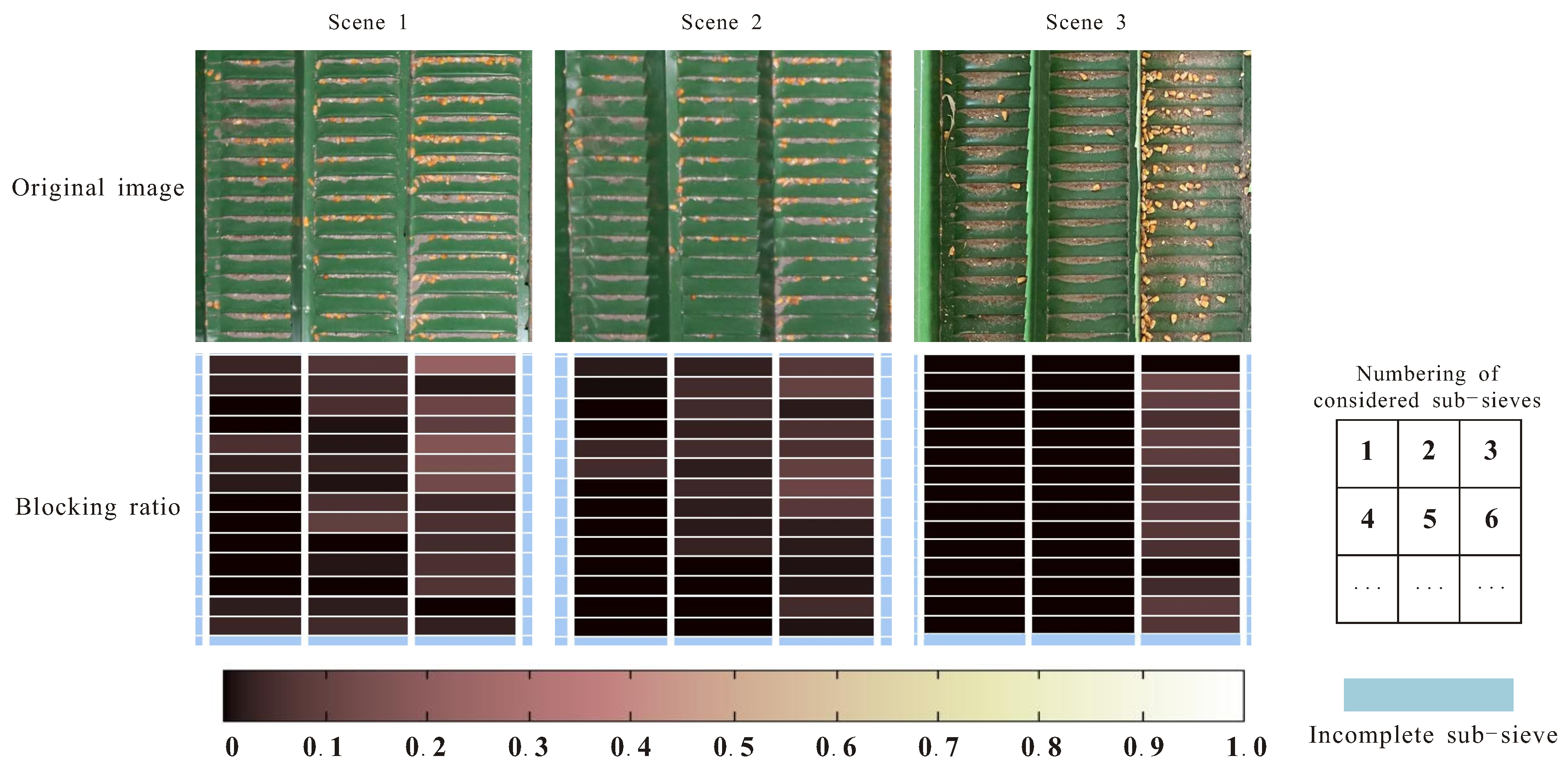

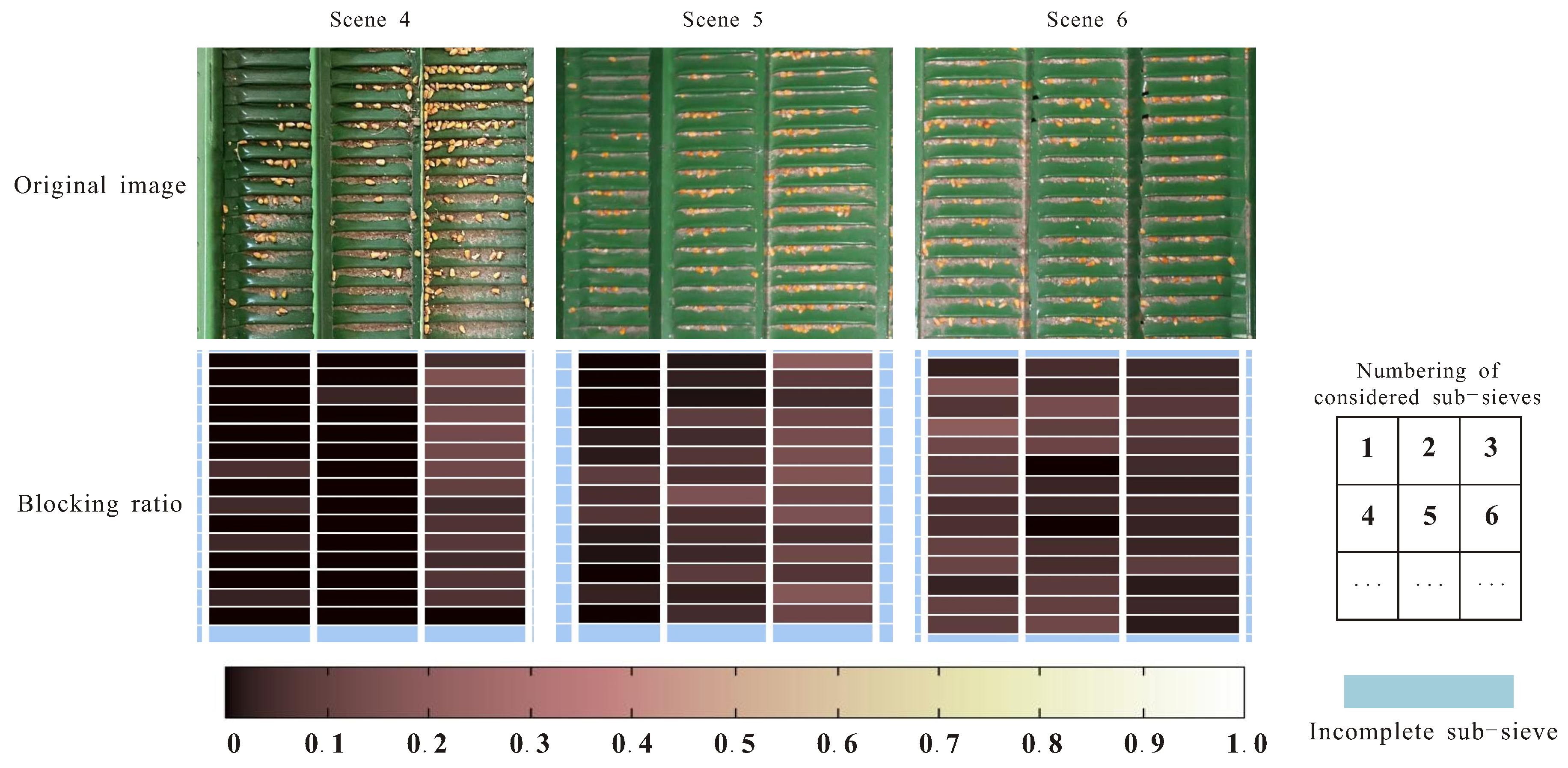

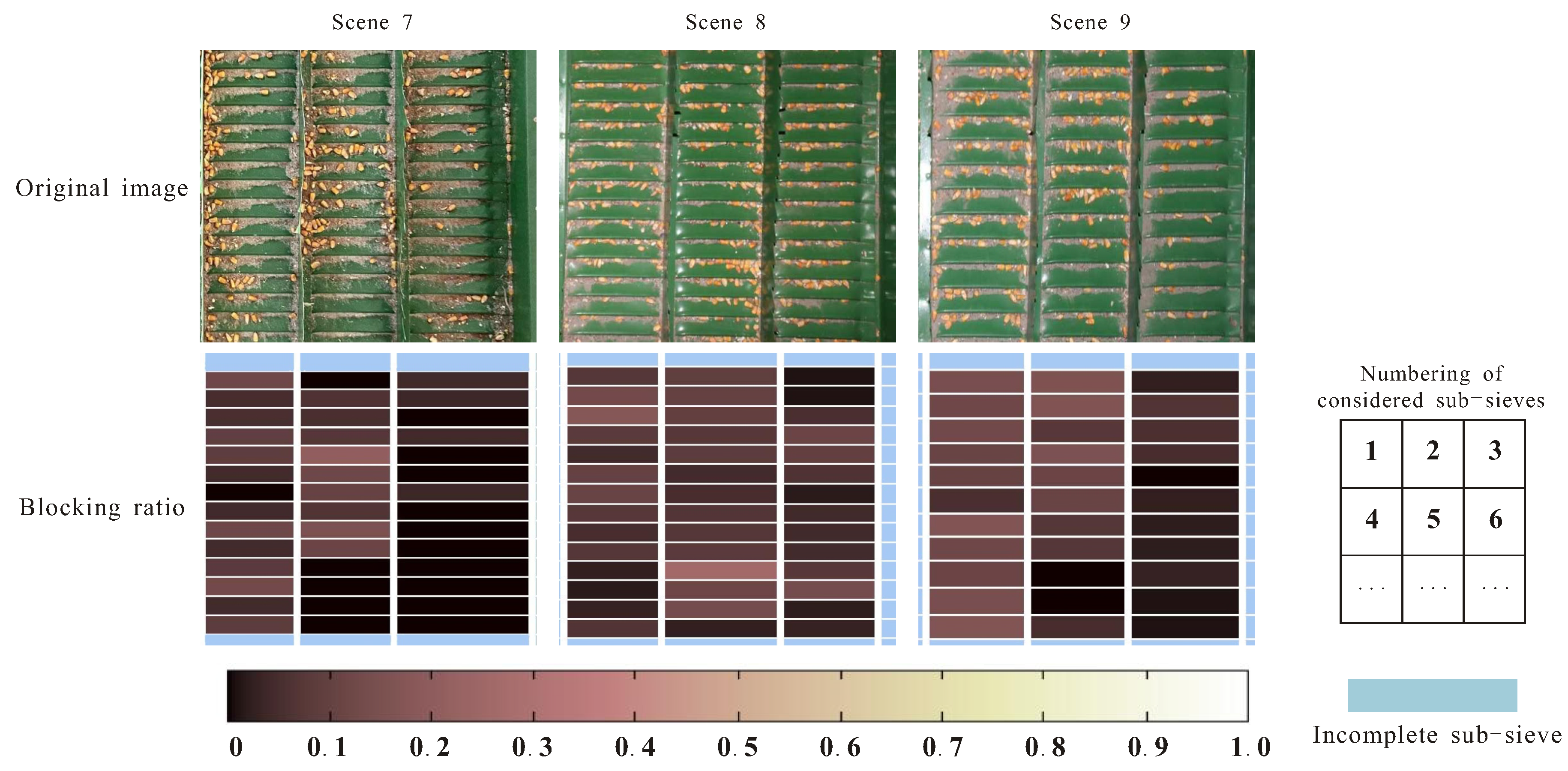

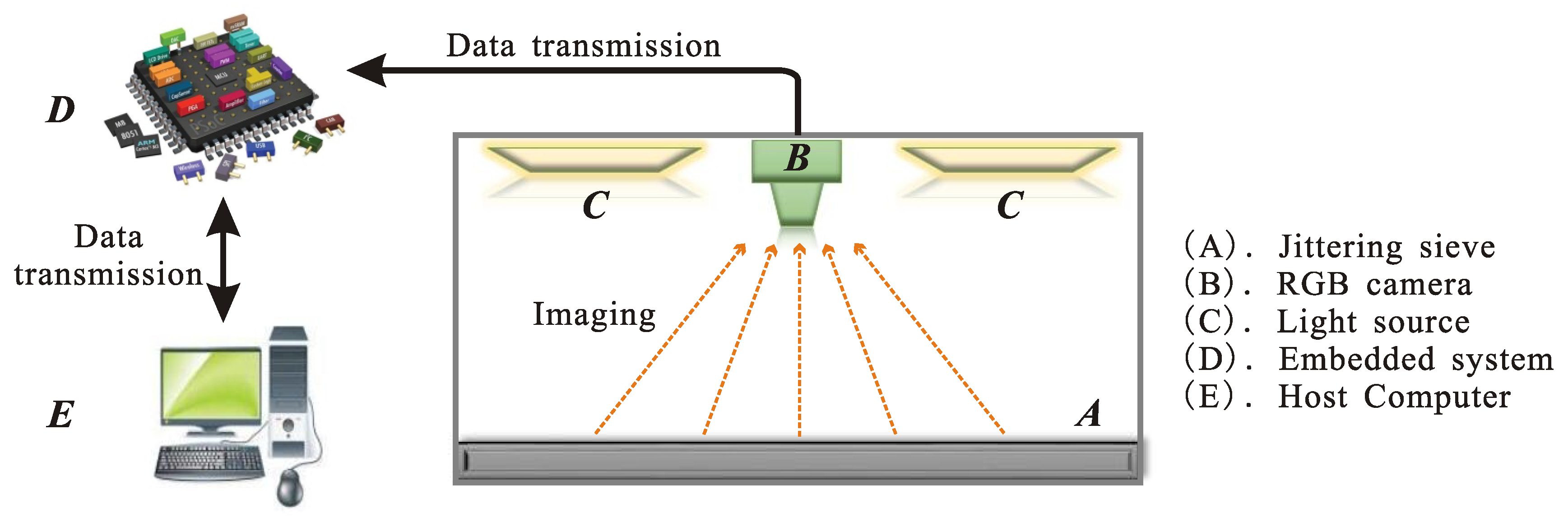

2. Material and Method

2.1. Material

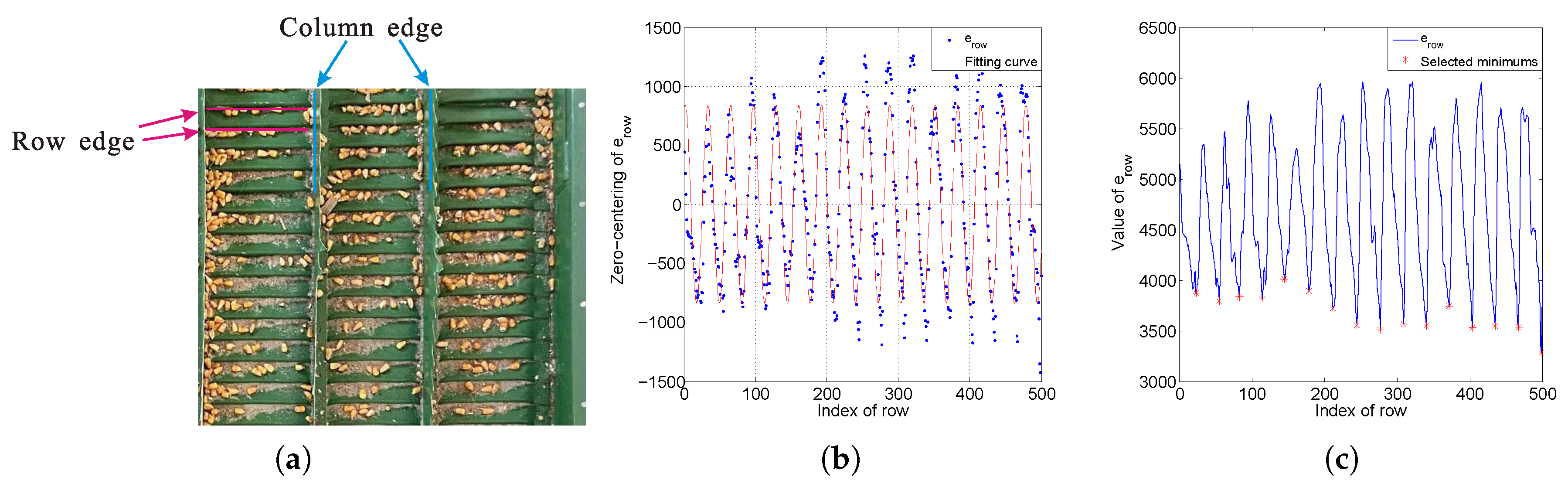

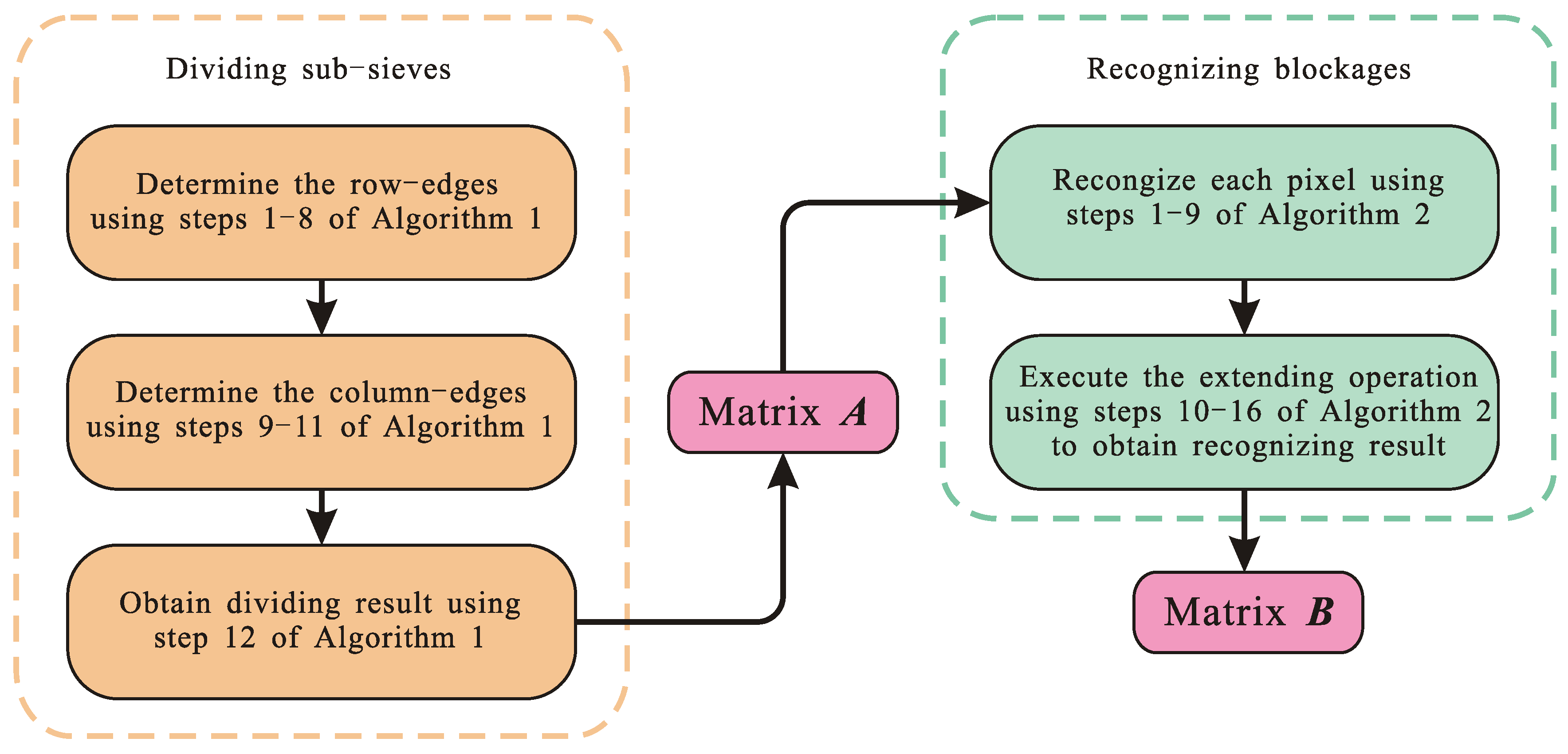

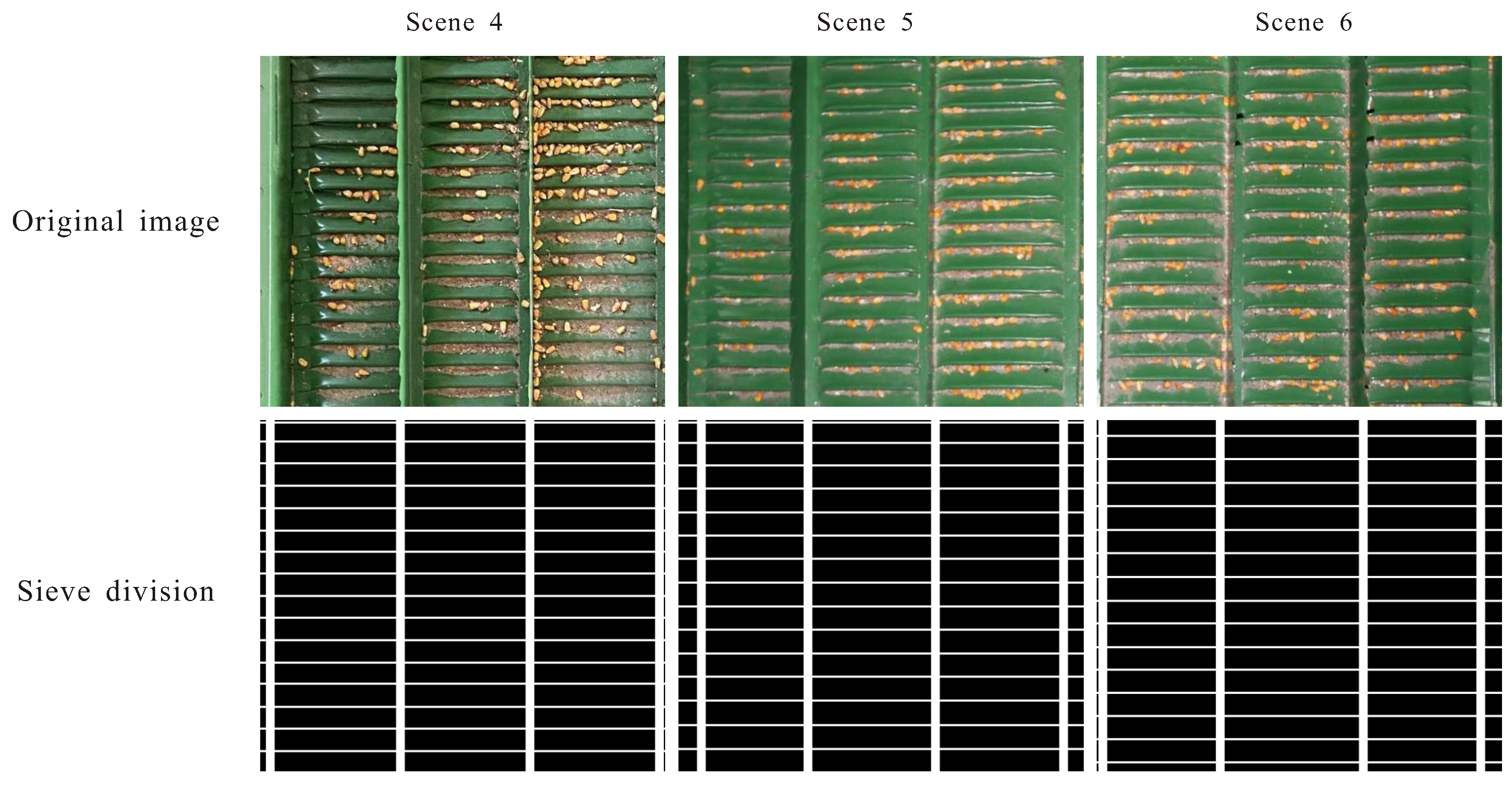

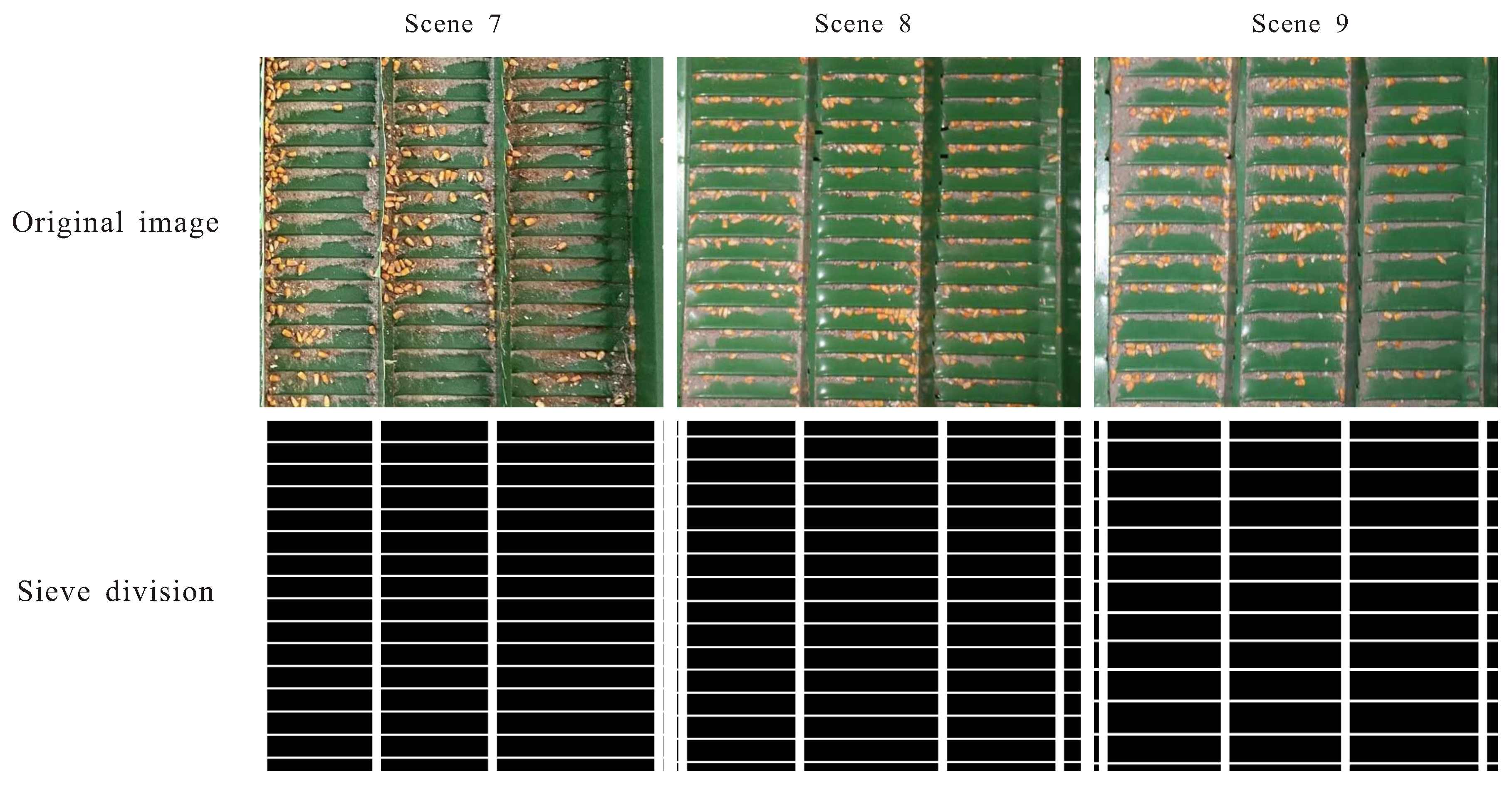

2.2. Algorithm of Sieve Dividing

Algorithm 1: Dividing jittering sieve into sub-sieves

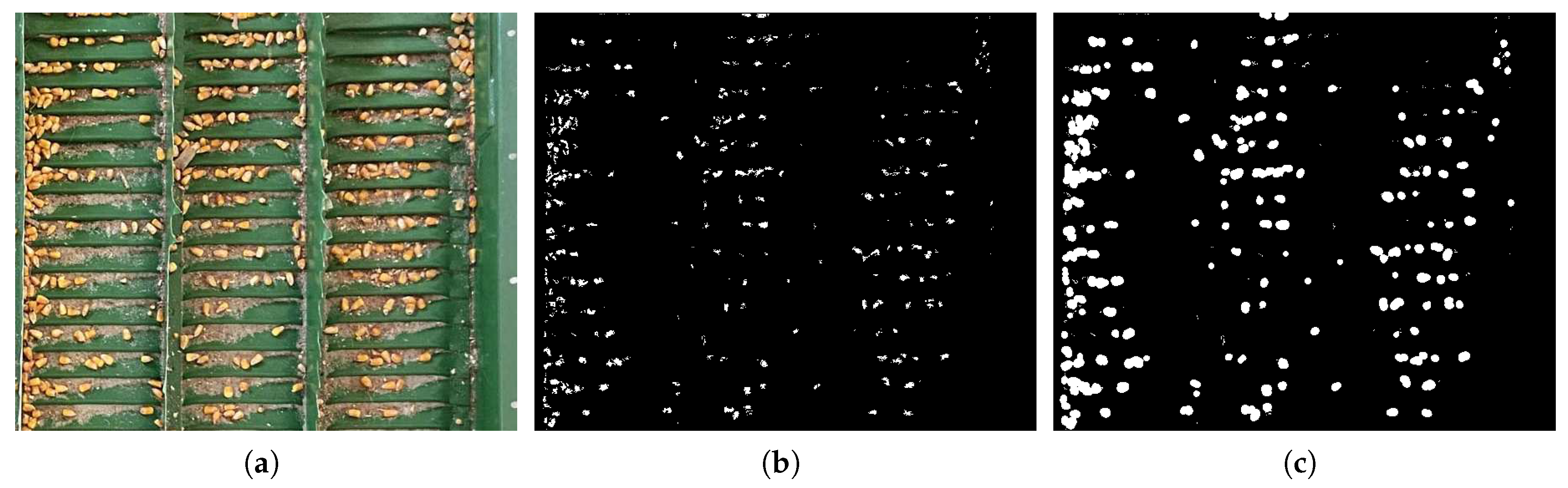

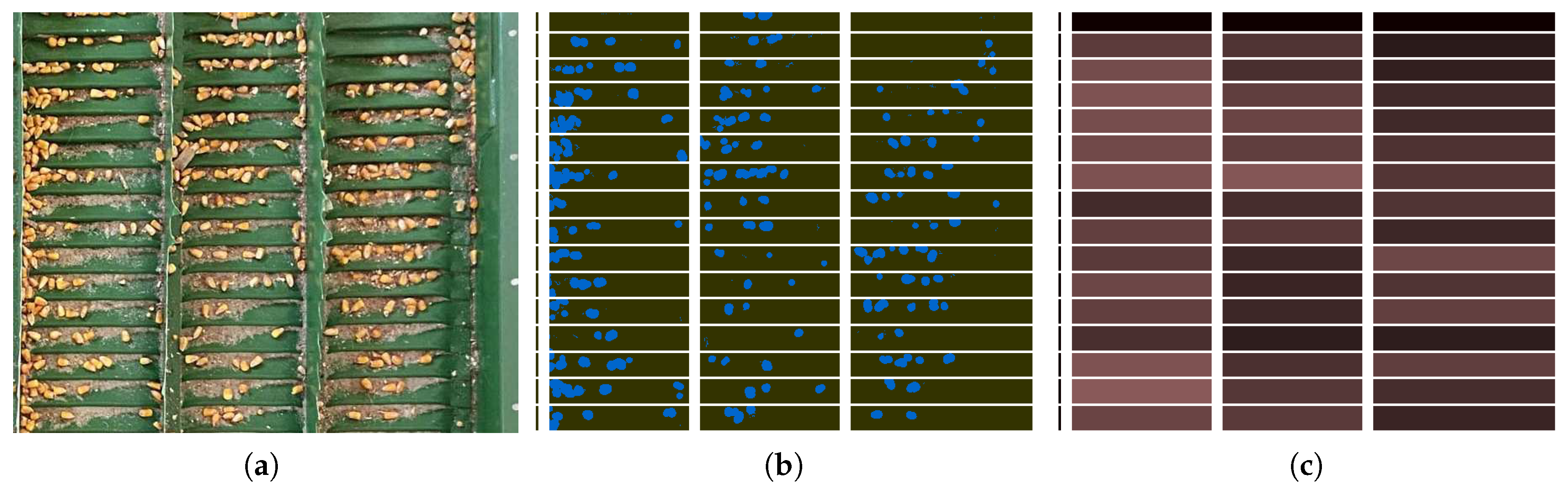

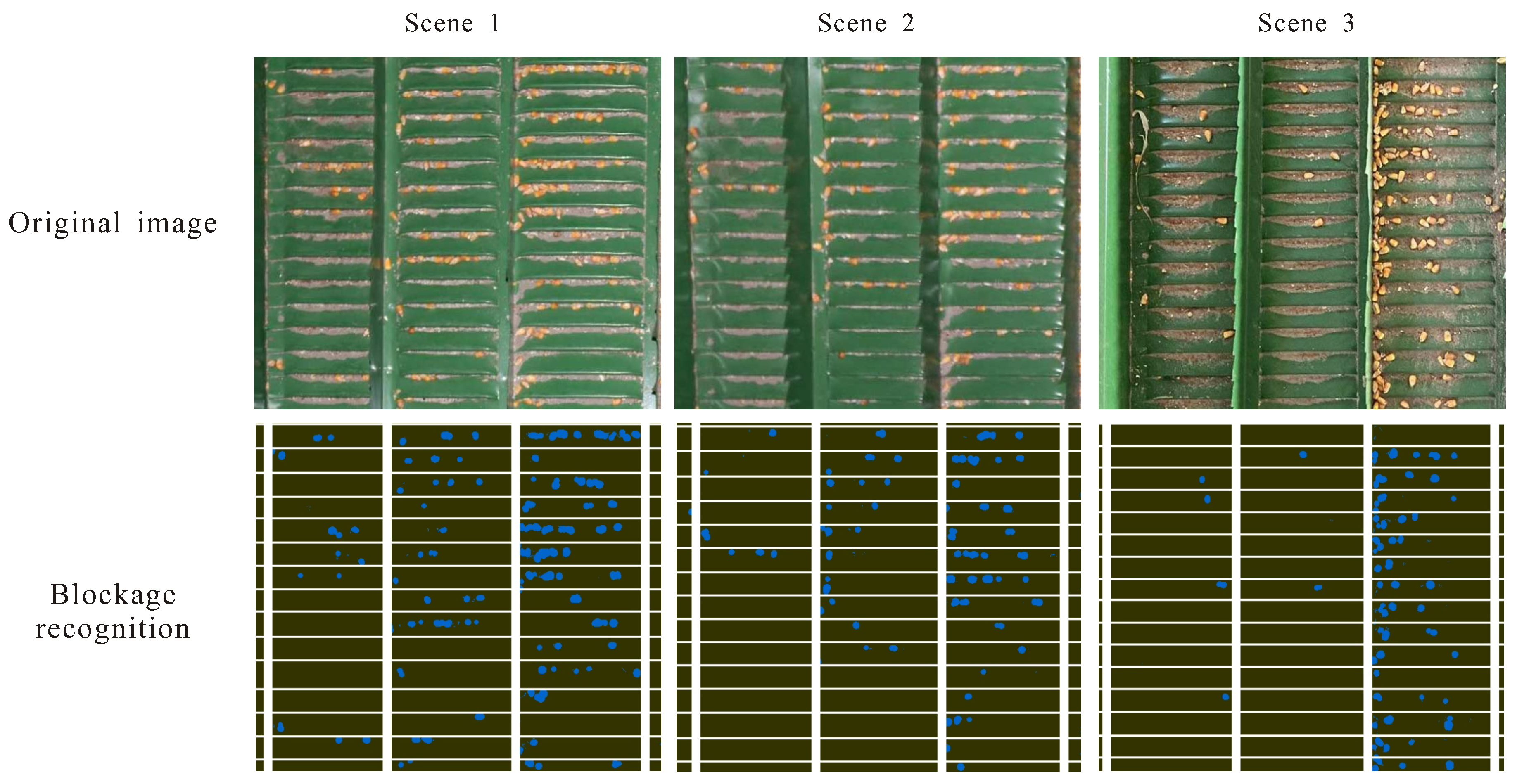

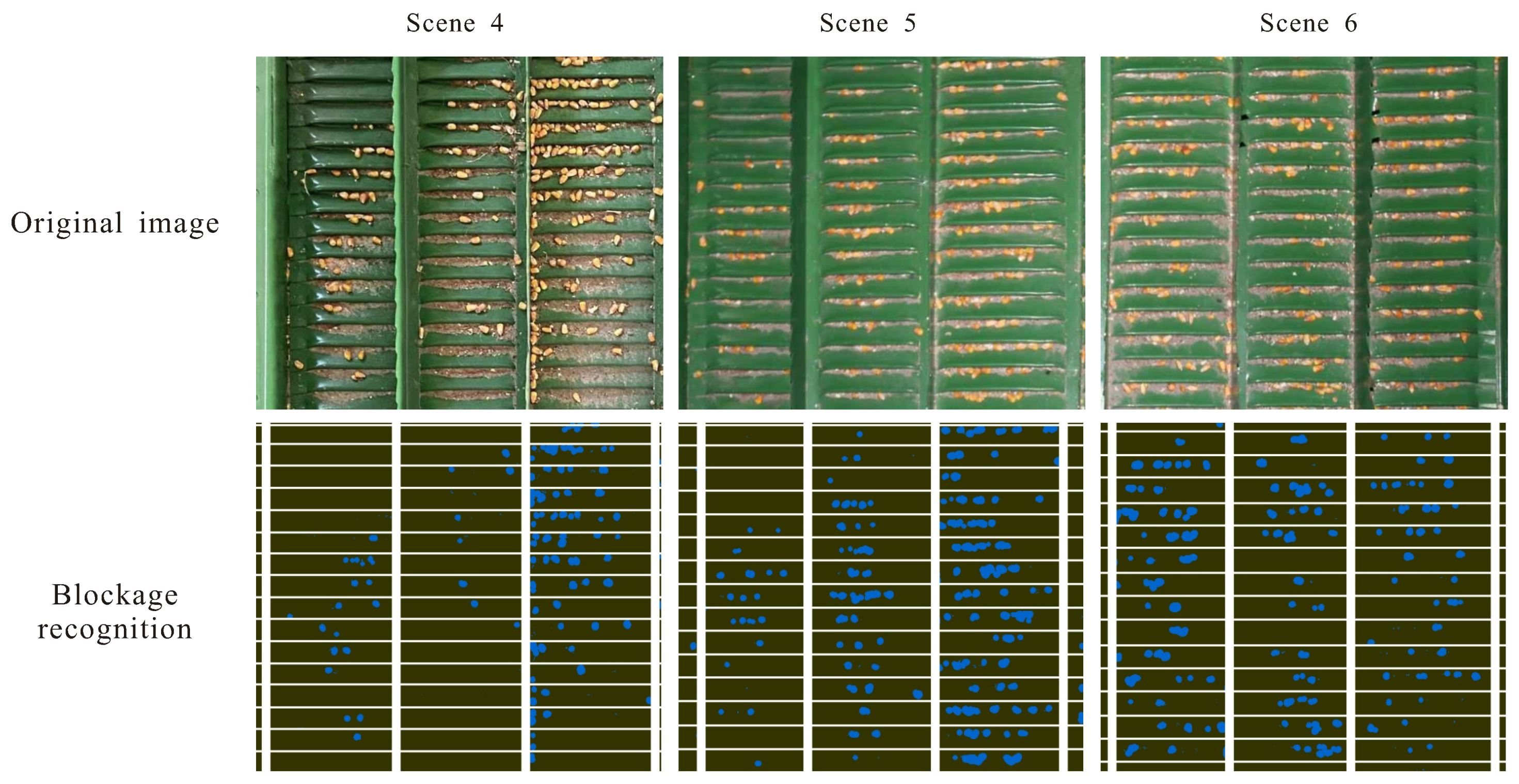

2.3. Algorithm of Blockage Recognition

Algorithm 2: Recognition of blockages

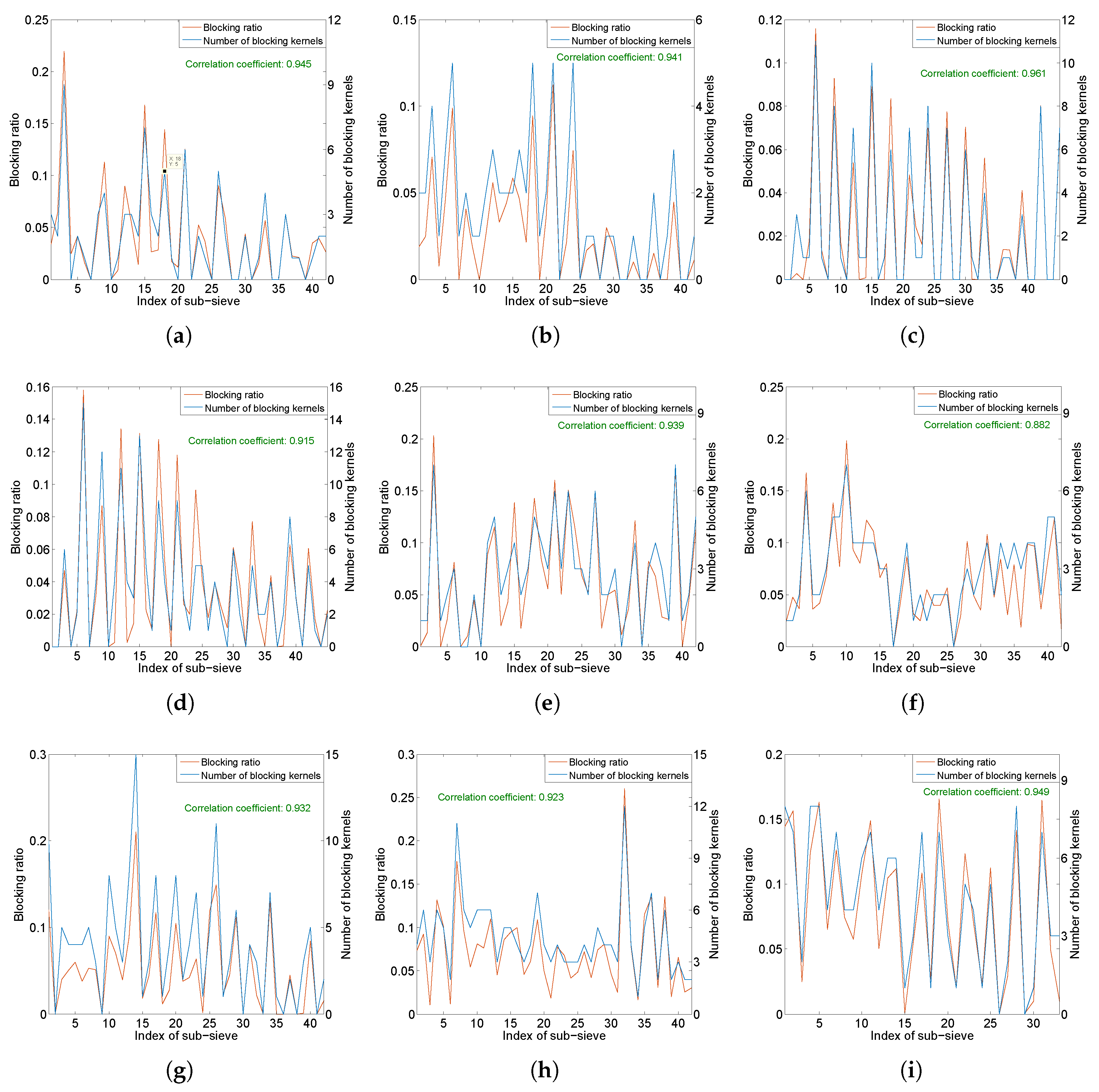

2.4. Evaluation of Blocking Level

3. Experimental Results and Analysis

4. Discussions

4.1. Limitations

4.2. Future Work

- Use an adjustable light source so that the thresholds can be tested and adjusted in advance.

- Normalize the RGB data of each pixel, and then compute the correlation between the normalized data and a dictionary to judge whether the pixel belongs to a kernel blockage. The dictionary is trained on training samples, and various dictionary learning methods can be employed for this purpose [24].
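The second proposal can be illustrated with a minimal sketch, under several assumptions that are not from the paper. The exact normalization is not specified here, so unit L2 scaling of each RGB vector is assumed. "Correlation" is taken to be the inner product between unit vectors. The dictionary is a hand-picked placeholder rather than one learned with a method from [24]. The names `normalize_rgb` and `kernel_mask`, the colour values, and the threshold of 0.99 are all hypothetical.

```python
import numpy as np


def normalize_rgb(pixels: np.ndarray, eps: float = 1e-12) -> np.ndarray:
    """Scale each RGB row vector to unit L2 norm (assumed normalization)."""
    pixels = pixels.astype(np.float64)
    norms = np.linalg.norm(pixels, axis=-1, keepdims=True)
    # eps guards against division by zero for pure-black pixels.
    return pixels / np.maximum(norms, eps)


def kernel_mask(image: np.ndarray, dictionary: np.ndarray,
                threshold: float = 0.99) -> np.ndarray:
    """Flag pixels whose best correlation with any dictionary atom
    reaches the (hypothetical) threshold."""
    h, w, _ = image.shape
    x = normalize_rgb(image.reshape(-1, 3))   # (h*w, 3) unit vectors
    d = normalize_rgb(dictionary)             # (n_atoms, 3) unit vectors
    corr = x @ d.T                            # inner products, shape (h*w, n_atoms)
    return (corr.max(axis=1) >= threshold).reshape(h, w)


# Placeholder dictionary: two yellowish "kernel" colours (not learned, not from the paper).
dictionary = np.array([[230, 180, 40],
                       [250, 210, 90]])

# 2x2 test image: bright yellow, grey, dim yellow (same hue at half brightness), black.
image = np.array([[[230, 180, 40], [128, 128, 128]],
                  [[115, 90, 20], [0, 0, 0]]], dtype=np.uint8)

mask = kernel_mask(image, dictionary)
print(mask)
```

Because each pixel is scaled to unit length, the dim yellow pixel correlates with the dictionary as strongly as the bright one, which is the kind of robustness to illumination that motivates normalizing before comparison.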

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Esteves, C.A.C.; Coleman, W.; Dube, M.; Rodrigues, A.E.; Pinto, P.C.R. Assessment of key features of lignin from lignocellulosic crops: Stalks and roots of corn, cotton, sugarcane, and tobacco. Ind. Crops Prod. 2016, 92, 136–148.

2. Chen, S.; Chen, X.; Xu, J. Impacts of climate change on agriculture: Evidence from China. Ind. Crops Prod. 2016, 76, 105–124.

3. Tabacco, E.; Ferrero, F.; Borreani, G. Feasibility of Utilizing Biodegradable Plastic Film to Cover Corn Silage under Farm Conditions. Appl. Sci. 2020, 10, 2803.

4. Cheng, X.; Zhang, Q.; Yan, X.; Shi, C. Compressibility and equivalent bulk modulus of shelled corn. Biosyst. Eng. 2015, 140, 91–97.

5. Isaak, M.; Yahya, A.; Razif, M.; Mat, N. Mechanization status based on machinery utilization and workers’ workload in sweet corn cultivation in Malaysia. Comput. Electron. Agric. 2020, 169, 105208.

6. Mantovani, E.C.; de Oliveira, P.E.B.; de Queiroz, D.M.; Fernandes, A.L.T.; Cruvinel, P.E. Current Status and Future Prospect of the Agricultural Mechanization in Brazil. AMA-Agric. Mech. Asia Afr. Lat. Am. 2020, 50, 20–28.

7. Qian, F.; Yang, J.; Torres, D. Comparison of corn production costs in China, the US and Brazil and its implications. Agric. Sci. Technol. 2016, 17, 731–736.

8. Puzauskas, E.; Steponavicius, D.; Jotautiene, E.; Petkevicius, S.; Kemzuraite, A. Substantiation of concave crossbars shape for corn ears threshing. Mechanika 2016, 22, 553–561.

9. Qu, Z.; Zhang, T.; Li, K.; Yang, L.; Cui, T.; Zhang, D. The design and experiment of longitudinal axial flow maize threshing and separating device. In Proceedings of the 2017 ASABE Annual International Meeting, Spokane, WA, USA, 16–19 July 2017.

10. Qu, Z.; Zhang, T.; Li, K.; Yang, L.; Cui, T.; Zhang, D. Experiment on distribution of mixture in longitudinal axial flow threshing separation device for maize. In Proceedings of the 2019 ASABE Annual International Meeting, Boston, MA, USA, 7–10 July 2019.

11. Chen, Y.; Chao, K.; Kim, M.S. Machine vision technology for agricultural applications. Comput. Electron. Agric. 2002, 26, 173–191.

12. El-Mesery, H.S.; Mao, H.; Abomohra, A.E.F. Applications of non-destructive technologies for agricultural and food products quality inspection. Sensors 2019, 19, 846.

13. Brosnan, T.; Sun, D. Inspection and grading of agricultural and food products by computer vision systems—A review. Comput. Electron. Agric. 2002, 36, 193–213.

14. Zhang, L.; Geer, T.; Sun, X.; Shou, C.; Du, H. Application of hyperspectral imaging technique in agricultural remote sensing. Bangladesh J. Bot. 2019, 48, 907–912.

15. Eyarkai, V.N.; Thangave, K.; Shahir, S.; Thirupathi, V. Comparison of various RGB image features for nondestructive prediction of ripening quality of “alphonso” mangoes for easy adoptability in machine vision applications: A multivariate approach. J. Food Qual. 2016, 39, 816–825.

16. Taghizadeh, M.; Gowen, A.A.; O’Donnell, C.P. Comparison of hyperspectral imaging with conventional RGB imaging for quality evaluation of Agaricus bisporus mushrooms. Biosyst. Eng. 2011, 108, 191–194.

17. Karayel, D.; Wiesehoff, M.; Özmerzi, A.; Müller, J. Laboratory measurement of seed drill seed spacing and velocity of fall of seeds using high-speed camera system. Comput. Electron. Agric. 2006, 50, 89–96.

18. Leemans, V.; Destain, M.F. A computer-vision based precision seed drill guidance assistance. Comput. Electron. Agric. 2007, 59, 1–12.

19. Liu, C.; Chen, B.; Song, J.; Zheng, Y.; Wang, J. Study on the image processing algorithm for detecting the seed-sowing performance. In Proceedings of the 2010 International Conference on Digital Manufacturing & Automation, Changsha, China, 18–20 December 2010; pp. 551–556.

20. Liu, Z.; Wang, S. Broken corn detection based on an adjusted YOLO with focal loss. IEEE Access 2019, 7, 68281–68289.

21. Fu, J.; Yuan, H.; Zhao, R.; Chen, Z.; Ren, L. Peeling Damage Recognition Method for Corn Ear Harvest Using RGB Image. Appl. Sci. 2020, 10, 3371.

22. Liao, K.; Paulsen, M.R.; Reid, J.F. Real-time detection of colour and surface defects of maize kernels using machine vision. J. Agric. Eng. Res. 1994, 59, 263–271.

23. Zhao, X.; Shang, P.; Lin, A. Distribution of eigenvalues of detrended cross-correlation matrix. EPL 2014, 107, 40008.

24. Tosic, I.; Frossard, P. Dictionary Learning. IEEE Signal Process. Mag. 2011, 28, 27–38.

| Notation | Definition | Notation | Definition | Notation | Definition |
|---|---|---|---|---|---|
| | scalars | | vectors | | matrices |
| | tensors | | mean value | | Frobenius-norm |
| | covariance | | variance | | cross-correlation |

| Abbreviation | Full Name | Abbreviation | Full Name | Abbreviation | Full Name |
|---|---|---|---|---|---|
| RGB | red, green, blue | MV | machine vision | F-norm | Frobenius-norm |

| Scene | Number of Row Edges | Number of Recognized Row Edges | Row Success Rate (%) | Number of Column Edges | Number of Recognized Column Edges | Column Success Rate (%) |
|---|---|---|---|---|---|---|
| Scene 1 | 15 | 15 | 100.00 | 4 | 4 | 100.00 |
| Scene 2 | 15 | 15 | 100.00 | 4 | 4 | 100.00 |
| Scene 3 | 16 | 16 | 100.00 | 4 | 4 | 100.00 |
| Scene 4 | 16 | 16 | 100.00 | 4 | 4 | 100.00 |
| Scene 5 | 15 | 15 | 100.00 | 4 | 4 | 100.00 |
| Scene 6 | 15 | 15 | 100.00 | 4 | 4 | 100.00 |
| Scene 7 | 15 | 15 | 100.00 | 4 | 4 | 100.00 |
| Scene 8 | 15 | 15 | 100.00 | 4 | 4 | 100.00 |
| Scene 9 | 12 | 12 | 100.00 | 4 | 4 | 100.00 |
| Total | 134 | 134 | 100.00 | 36 | 36 | 100.00 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Fu, J.; Yuan, H.; Zhao, R.; Tang, X.; Chen, Z.; Wang, J.; Ren, L. Machine-Vision-Based Algorithm for Blockage Recognition of Jittering Sieve in Corn Harvester. Appl. Sci. 2020, 10, 6319. https://doi.org/10.3390/app10186319
