Integrated Replay Spoofing-Aware Text-Independent Speaker Verification

Abstract

1. Introduction

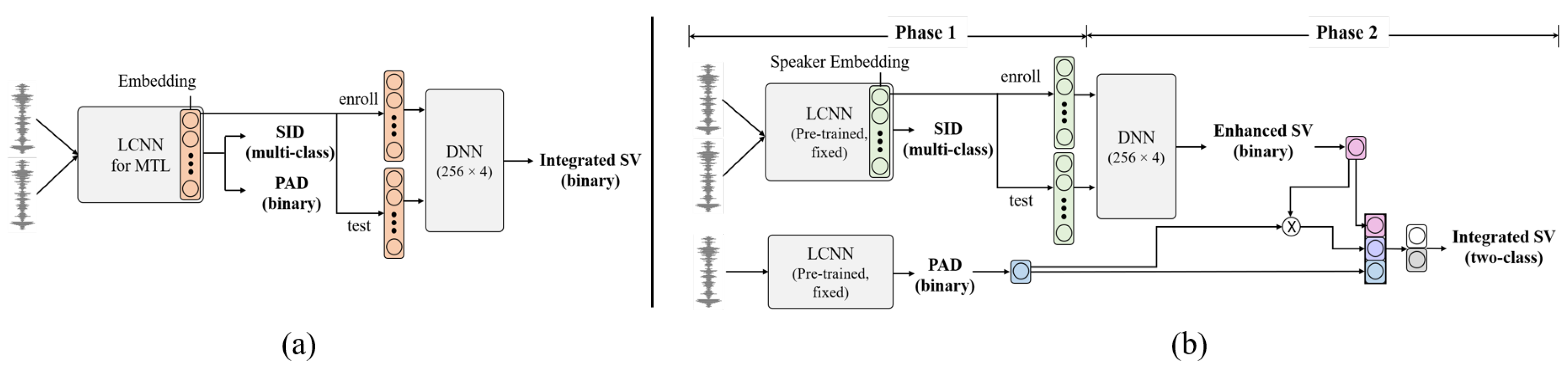

- Propose a novel E2E framework that jointly optimizes SID, PAD, and the ISV task.

- Experimentally validate the hypothesis that the discriminative information required for the SV and PAD tasks may be distinct, thus requiring separate front-end modeling.

- Propose a separate, modular back-end DNN that takes speaker embeddings and PAD predictions as input to make ISV decisions.
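To make the data flow of the modular back-end concrete, here is a minimal sketch. It assumes that the enrolment and test speaker embeddings and a scalar PAD score are simply concatenated and passed to a small feed-forward network with a sigmoid output. The names `build_backend_input` and `TinyBackEnd`, the input layout, the layer sizes and the 0.5 threshold are all hypothetical. The network is untrained and only illustrates how the inputs are combined; it is not the architecture used in the paper.

```python
import math
import random
from typing import List, Sequence


def build_backend_input(enrol_emb: Sequence[float],
                        test_emb: Sequence[float],
                        pad_score: float) -> List[float]:
    """Concatenate [enrolment embedding | test embedding | PAD score].

    This layout is a hypothetical choice made for illustration only.
    """
    return list(enrol_emb) + list(test_emb) + [pad_score]


class TinyBackEnd:
    """Hypothetical one-hidden-layer back-end DNN.

    Weights are drawn at random here; a real back-end would be trained on
    target, zero-effort non-target and replay non-target trials.
    """

    def __init__(self, in_dim: int, hidden_dim: int = 8, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.w1 = [[rng.gauss(0.0, 0.1) for _ in range(in_dim)]
                   for _ in range(hidden_dim)]
        self.b1 = [0.0] * hidden_dim
        self.w2 = [rng.gauss(0.0, 0.1) for _ in range(hidden_dim)]
        self.b2 = 0.0

    def score(self, x: Sequence[float]) -> float:
        """Return a probability-like ISV score in (0, 1)."""
        # Hidden layer with ReLU activation.
        hidden = [max(0.0, sum(w * xi for w, xi in zip(row, x)) + b)
                  for row, b in zip(self.w1, self.b1)]
        # Output logit squashed with a sigmoid.
        logit = sum(w * h for w, h in zip(self.w2, hidden)) + self.b2
        return 1.0 / (1.0 + math.exp(-logit))

    def accept(self, x: Sequence[float], threshold: float = 0.5) -> bool:
        """Accept the trial only if the score reaches the (hypothetical) threshold."""
        return self.score(x) >= threshold
```

Usage: `x = build_backend_input(enrol, test, pad_score)` followed by `TinyBackEnd(in_dim=len(x)).accept(x)`. A single accept/reject output lets one classifier reject both zero-effort impostors and replayed target speech, rather than cascading two separately thresholded systems.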

2. Related Work

3. Integrated Speaker Verification

3.1. End-to-End Monolithic Approach

3.2. Back-End Modular Approach

4. Experiments and Results

4.1. Dataset

4.2. Experimental Configurations

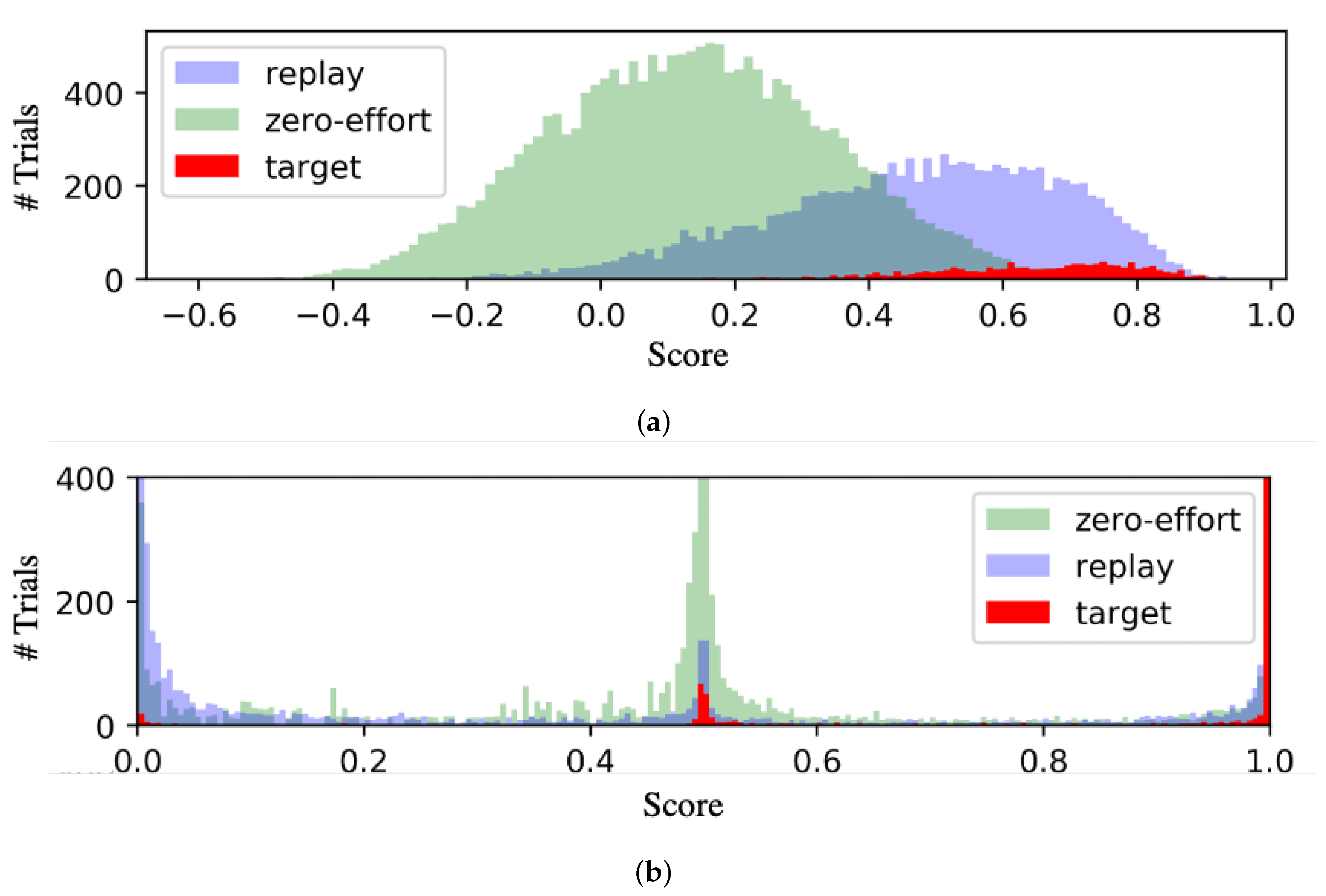

4.3. Results Analysis

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| System | ZE-EER | PAD-EER | ISV-EER |
|---|---|---|---|
| SV baseline | 9.58 | 33.72 | 19.98 |

| Metric | Target | ZE non-target | Replay non-target |
|---|---|---|---|
| ZE-EER | 1 | 0 | - |
| PAD-EER | 1 | - | 0 |
| ISV-EER | 1 | 0 | 0 |
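The trial configuration determines which non-target trials enter each EER. The sketch below computes all three metrics from a list of scored trials using a simple threshold sweep. It assumes that ZE-EER contrasts target trials with zero-effort trials, PAD-EER contrasts target trials with replay trials only, and ISV-EER pools both kinds of non-target. The trial-kind labels (`"target"`, `"ze"`, `"replay"`), the `METRIC_NONTARGETS` mapping and the helper names are hypothetical.

```python
from typing import Dict, List, Sequence, Set, Tuple

# Hypothetical mapping from each metric to the non-target trial kinds it uses.
METRIC_NONTARGETS: Dict[str, Set[str]] = {
    "ZE-EER": {"ze"},
    "PAD-EER": {"replay"},
    "ISV-EER": {"ze", "replay"},
}


def compute_eer(target_scores: Sequence[float],
                nontarget_scores: Sequence[float]) -> float:
    """Approximate the EER (in %) by sweeping thresholds over observed scores."""
    thresholds = sorted(set(target_scores) | set(nontarget_scores))
    best_gap, eer = float("inf"), 0.0
    for t in thresholds:
        # Miss rate: targets rejected; false-alarm rate: non-targets accepted.
        frr = sum(s < t for s in target_scores) / len(target_scores)
        far = sum(s >= t for s in nontarget_scores) / len(nontarget_scores)
        gap = abs(frr - far)
        if gap < best_gap:
            best_gap, eer = gap, (frr + far) / 2.0
    return 100.0 * eer


def metric_eer(trials: List[Tuple[float, str]], metric: str) -> float:
    """Select target and metric-specific non-target scores, then compute the EER."""
    kinds = METRIC_NONTARGETS[metric]
    tar = [s for s, kind in trials if kind == "target"]
    non = [s for s, kind in trials if kind in kinds]
    return compute_eer(tar, non)
```

A single score can therefore yield a low ZE-EER and a high PAD-EER simultaneously, which is exactly the pattern of the SV baseline (9.58 vs. 33.72).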

| System | ZE-EER (SV) | PAD-EER | ISV-EER |
|---|---|---|---|
| #1 | 18.52 | 15.73 | 18.44 |
| #2-SE | 18.99 | 15.90 | 17.90 |
| #3-split | 19.43 | 37.31 | 26.40 |

| Train Loss | DNN Arch | ZE-EER (SV) | PAD-EER |
|---|---|---|---|
| SID | SV | 9.58 | - |
| SID+PAD | SV | 17.53 | 13.69 |
| PAD | PAD | - | 10.60 |
| PAD+SID | PAD | 19.16 | 12.17 |
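The table above contrasts single-task training with joint SID+PAD training. Below is a minimal sketch of such a joint objective. It assumes that both heads are trained with softmax cross-entropy and that the two losses are combined by a weighted sum. The function names and the `pad_weight` parameter are hypothetical, and the losses and weights actually used in the experiments are not specified in this section.

```python
import math
from typing import Sequence


def cross_entropy(logits: Sequence[float], label: int) -> float:
    """Softmax cross-entropy for one example, computed stably via log-sum-exp."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[label]


def joint_loss(sid_logits: Sequence[float], sid_label: int,
               pad_logits: Sequence[float], pad_label: int,
               pad_weight: float = 1.0) -> float:
    """Weighted sum of a speaker-ID loss and a PAD (bona fide vs. replay) loss."""
    return (cross_entropy(sid_logits, sid_label)
            + pad_weight * cross_entropy(pad_logits, pad_label))
```

Setting `pad_weight` to 0 recovers SID-only training. Raising it pushes the shared representation toward replay cues, which is consistent with the rise in ZE-EER from 9.58 to 17.53 when PAD is added to SV training.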

| System | ZE-EER (SV) | PAD-EER | ISV-EER |
|---|---|---|---|
| #4-w/o mul | 20.52 | 19.77 | 20.48 |
| #5-w mul | 15.59 | 18.06 | 16.66 |
| #6-loss weight | 15.22 | 14.55 | 15.91 |
| #7-DNN arch | 14.32 | 15.46 | 15.63 |

| System | ZE-EER | PAD-EER | ISV-EER |
|---|---|---|---|
| SV baseline | 9.58 | 33.72 | 19.98 |
| #7-Ours | 14.32 | 15.46 | 15.63 |
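The trade-off in this final comparison can be expressed as relative EER changes of #7 with respect to the SV baseline, computed directly from the table values:

$$
\begin{aligned}
\text{ISV-EER:}\quad & \frac{19.98 - 15.63}{19.98} = \frac{4.35}{19.98} \approx 21.8\%\ \text{relative reduction}\\
\text{PAD-EER:}\quad & \frac{33.72 - 15.46}{33.72} = \frac{18.26}{33.72} \approx 54.2\%\ \text{relative reduction}\\
\text{ZE-EER:}\quad & \frac{14.32 - 9.58}{9.58} = \frac{4.74}{9.58} \approx 49.5\%\ \text{relative increase}
\end{aligned}
$$

The integrated system therefore gives up some zero-effort discrimination in exchange for much stronger replay robustness, and the net effect on the ISV-EER is a reduction.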

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Shim, H.-j.; Jung, J.-w.; Kim, J.-h.; Yu, H.-j. Integrated Replay Spoofing-Aware Text-Independent Speaker Verification. Appl. Sci. 2020, 10, 6292. https://doi.org/10.3390/app10186292
