A Web Shell Detection Method Based on Multiview Feature Fusion

Abstract

1. Introduction

- (1)

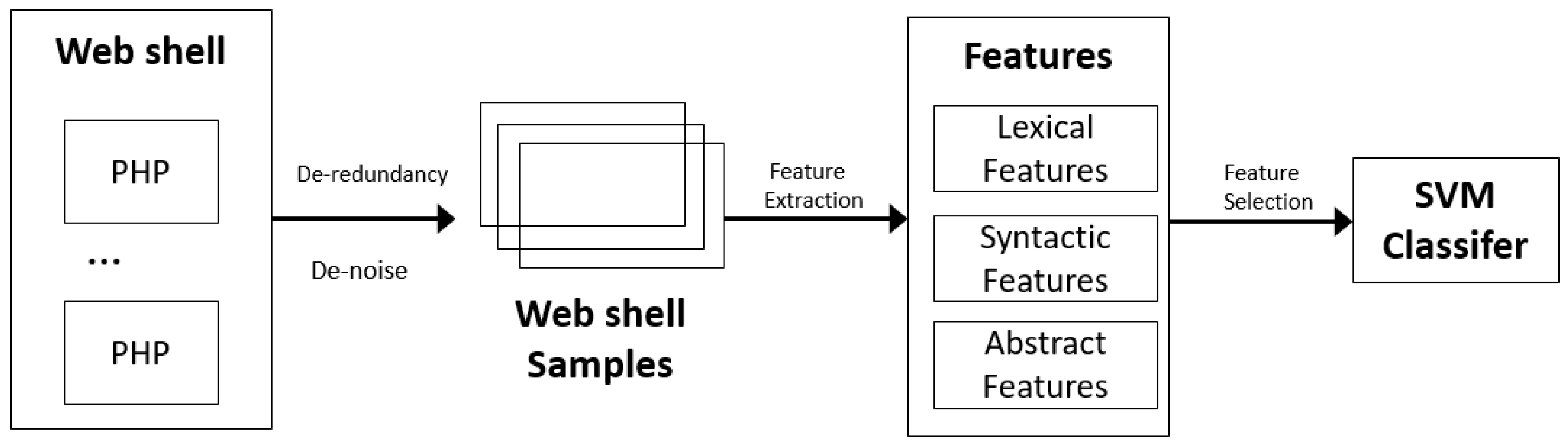

- A multiview feature fusion mechanism is presented, which can extract lexical features, syntactic features, and abstract features from PHP scripts. Features that can effectively represent web shells are integrated and extracted from multiple levels.

- (2)

- In order to determine the features that are reasonable for classification, a Fisher scoring mechanism is used to rank the importance of the features, and then the best features for subsequent classification are determined through experiments.

- (3)

- The use of optimized SVM for effective classification of malicious web shell has achieved satisfactory classification accuracy on large-scale data sets, and the overall performance is better than the state-of-the-art web shell detection tools and methods.

2. Related Work

3. Methodology

3.1. Overview

- (1)

- (2)

- Analyze and extract lexical features, syntactic features, and abstract features from web shell;

- (3)

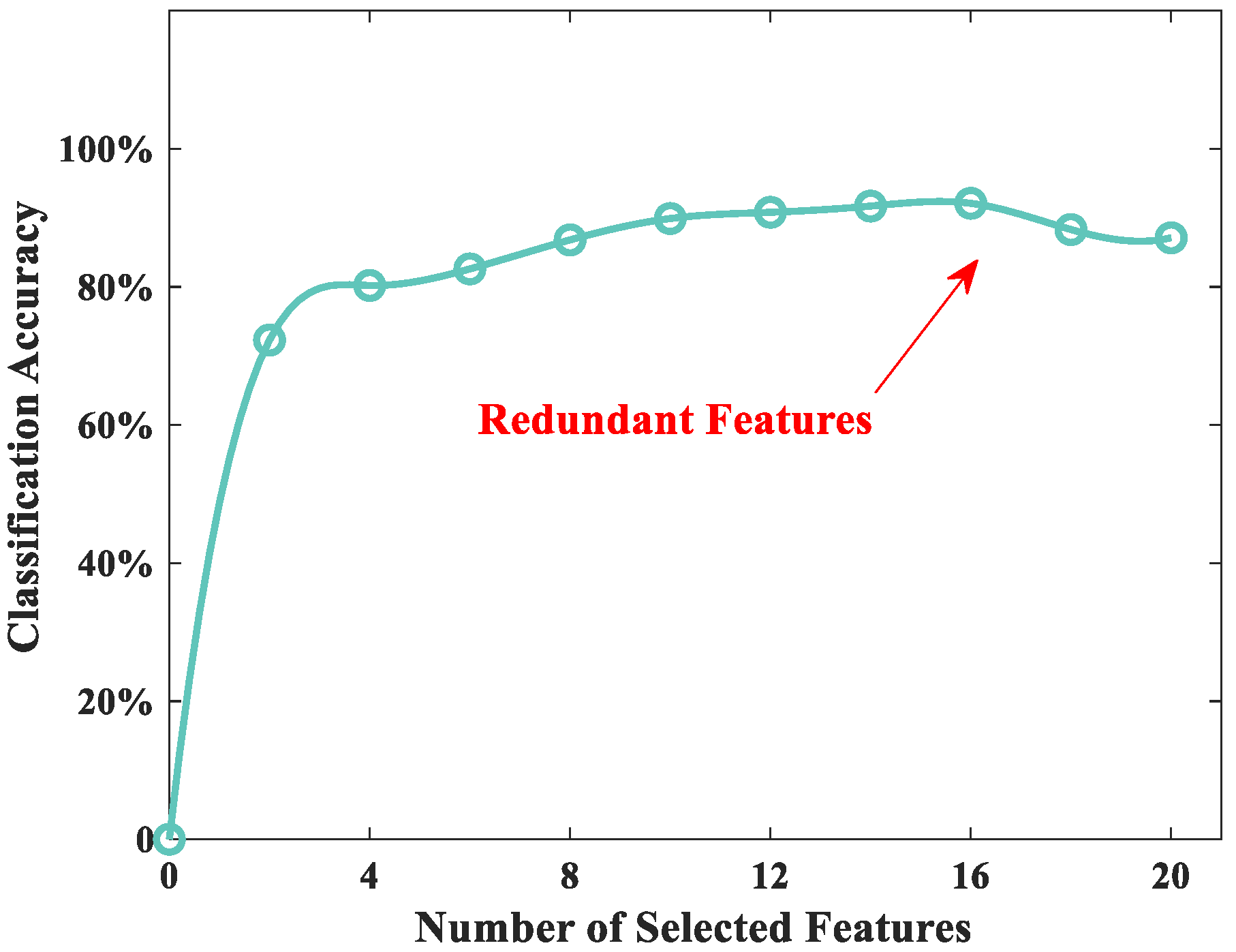

- Use Fisher score to rank the importance of the features, and then the best features for subsequent classification are determined through experiments;

- (4)

- Given the selected features, train a classifier that can distinguish web shells from benign web scripts by SVM.
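The feature-ranking step can be illustrated with a minimal sketch. It assumes the common two-class form of the Fisher score, in which the class-size-weighted squared distance between each class mean and the overall mean is divided by the class-size-weighted within-class variance; the exact variant used in the experiments is not stated here. The function names `fisher_scores` and `rank_features` and the toy data are hypothetical.

```python
from statistics import fmean, pvariance


def fisher_scores(samples, labels):
    """Return one Fisher score per feature column (assumed standard form).

    score_j = sum_k n_k * (mean_kj - mean_j)^2 / sum_k n_k * var_kj,
    where k ranges over the classes and n_k is the size of class k.
    """
    classes = sorted(set(labels))
    scores = []
    for j in range(len(samples[0])):
        overall_mean = fmean(row[j] for row in samples)
        between = 0.0  # separation of the class means
        within = 0.0   # spread inside each class
        for c in classes:
            values = [row[j] for row, y in zip(samples, labels) if y == c]
            between += len(values) * (fmean(values) - overall_mean) ** 2
            within += len(values) * pvariance(values)
        if within == 0.0:
            # Constant inside every class: perfectly separating or useless.
            scores.append(float("inf") if between > 0.0 else 0.0)
        else:
            scores.append(between / within)
    return scores


def rank_features(samples, labels):
    """Return feature indices ordered from most to least discriminative."""
    scores = fisher_scores(samples, labels)
    return sorted(range(len(scores)), key=lambda j: scores[j], reverse=True)


# Toy data (hypothetical): two features, label 1 = web shell, 0 = benign.
samples = [[0.9, 0.5], [1.1, 0.4], [0.1, 0.6], [-0.1, 0.5]]
labels = [1, 1, 0, 0]
scores = fisher_scores(samples, labels)
ranking = rank_features(samples, labels)
```

In this toy example the first feature separates the two classes far better than the second, so it is ranked first. Choosing how many of the top-ranked features to keep remains an experimental decision, as described in step (3).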

3.2. Data Preprocessing

- (1) A Small Trojan usually provides only an upload function. Attackers generally first obtain upload permission through the Small Trojan and then upload a Big Trojan to the website to perform the key functions. The Big Trojan is therefore the source of the malicious behavior.

- (2) A One Word Trojan must be used in conjunction with other tools to perform malicious behavior. It is typically used at the beginning of an attack and, unlike the Big Trojan, is rarely used to maintain persistence.

3.3. Feature Extraction

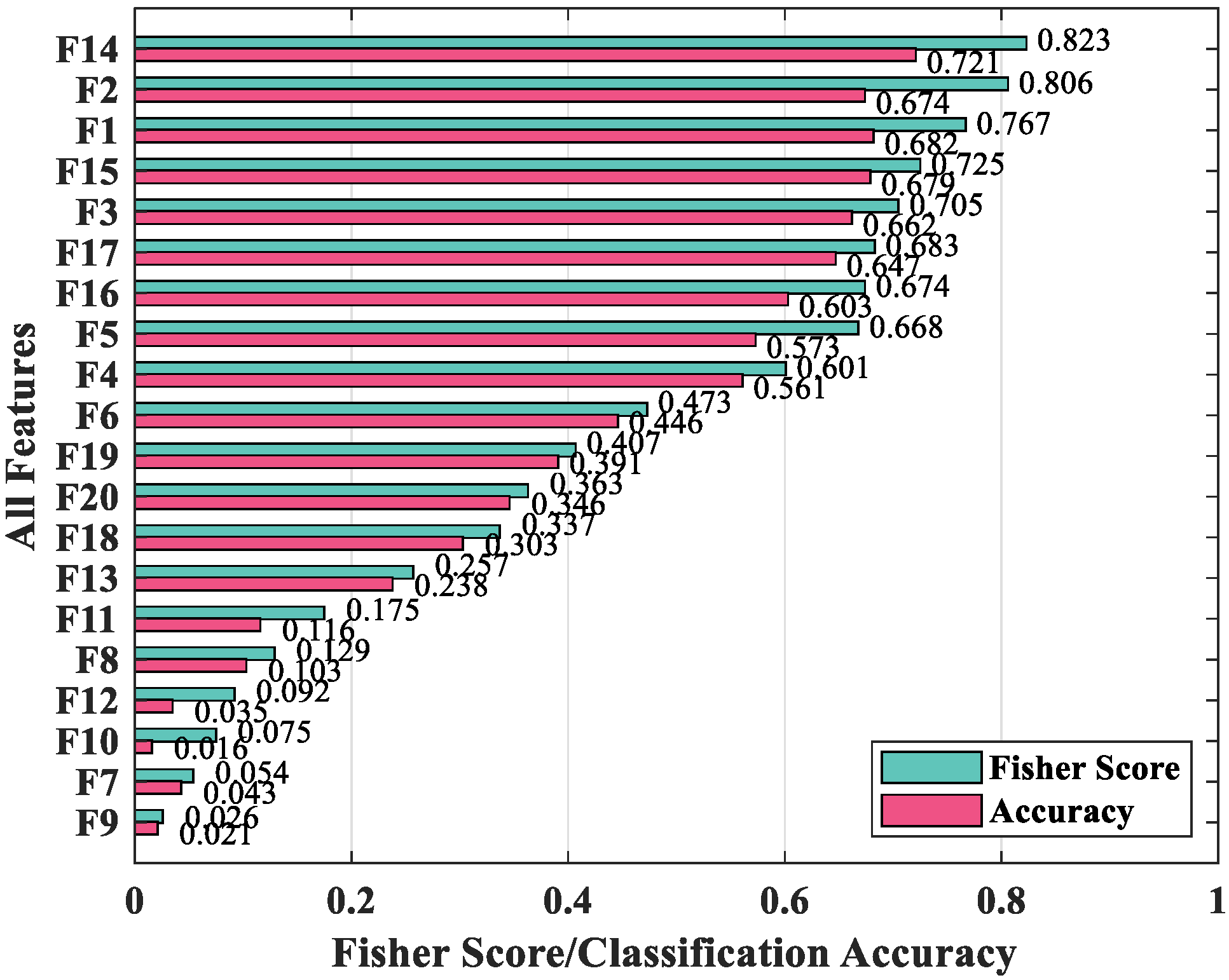

- The proportion of conditional statements, i.e., the share of all statements in the script that are conditional statements, measured separately for if (F7), elseif (F8), else (F9), and case (F10).

- The proportion of looping statements, i.e., the share of all statements in the script that are looping statements, measured separately for for (F11), while (F12), and foreach (F13).
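The two proportion features above can be approximated with a short sketch. It is not the extraction procedure used in the experiments: it counts keywords with regular expressions instead of parsing the PHP script, and it assumes that the total number of statements can be approximated by the number of semicolons plus the number of control keywords. Keywords inside strings or comments and the semicolons inside a `for` header are not handled. The function name `statement_proportions` is hypothetical.

```python
import re

# Feature identifiers as listed in the text.
KEYWORD_FEATURES = {
    "if": "F7", "elseif": "F8", "else": "F9", "case": "F10",
    "for": "F11", "while": "F12", "foreach": "F13",
}


def statement_proportions(php_source):
    """Approximate F7-F13 as keyword count divided by statement count."""
    # Word boundaries keep "if" from matching inside "elseif" and
    # "for" from matching inside "foreach". PHP keywords are
    # case-insensitive, hence re.IGNORECASE.
    counts = {
        kw: len(re.findall(rf"\b{kw}\b", php_source, flags=re.IGNORECASE))
        for kw in KEYWORD_FEATURES
    }
    # Assumption: statements ~ semicolons + control-structure keywords.
    total = php_source.count(";") + sum(counts.values())
    if total == 0:
        return {fid: 0.0 for fid in KEYWORD_FEATURES.values()}
    return {KEYWORD_FEATURES[kw]: counts[kw] / total for kw in counts}


example = """<?php
if ($a) { echo 1; } elseif ($b) { echo 2; } else { echo 3; }
foreach ($xs as $x) { echo $x; }
"""
props = statement_proportions(example)
```

For the example script, four semicolons and four control keywords give eight approximate statements, so F7, F8, F9, and F13 each evaluate to 0.125 and the remaining features to 0. A parser-based count would be more robust for obfuscated scripts.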

3.4. Feature Selection

3.5. Modeling and Classification

4. Evaluation

4.1. Experimental Setup

4.2. Evaluation Metric

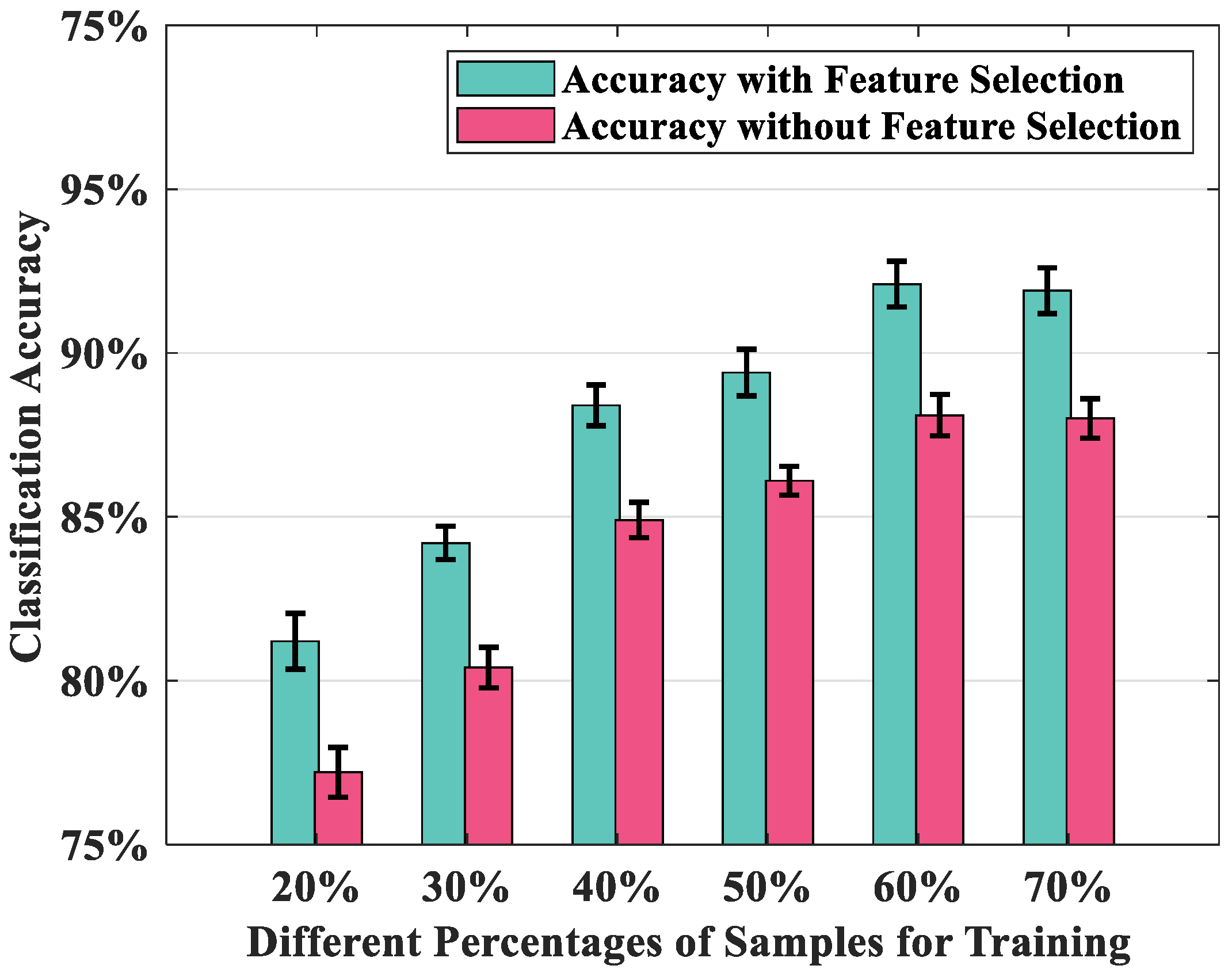

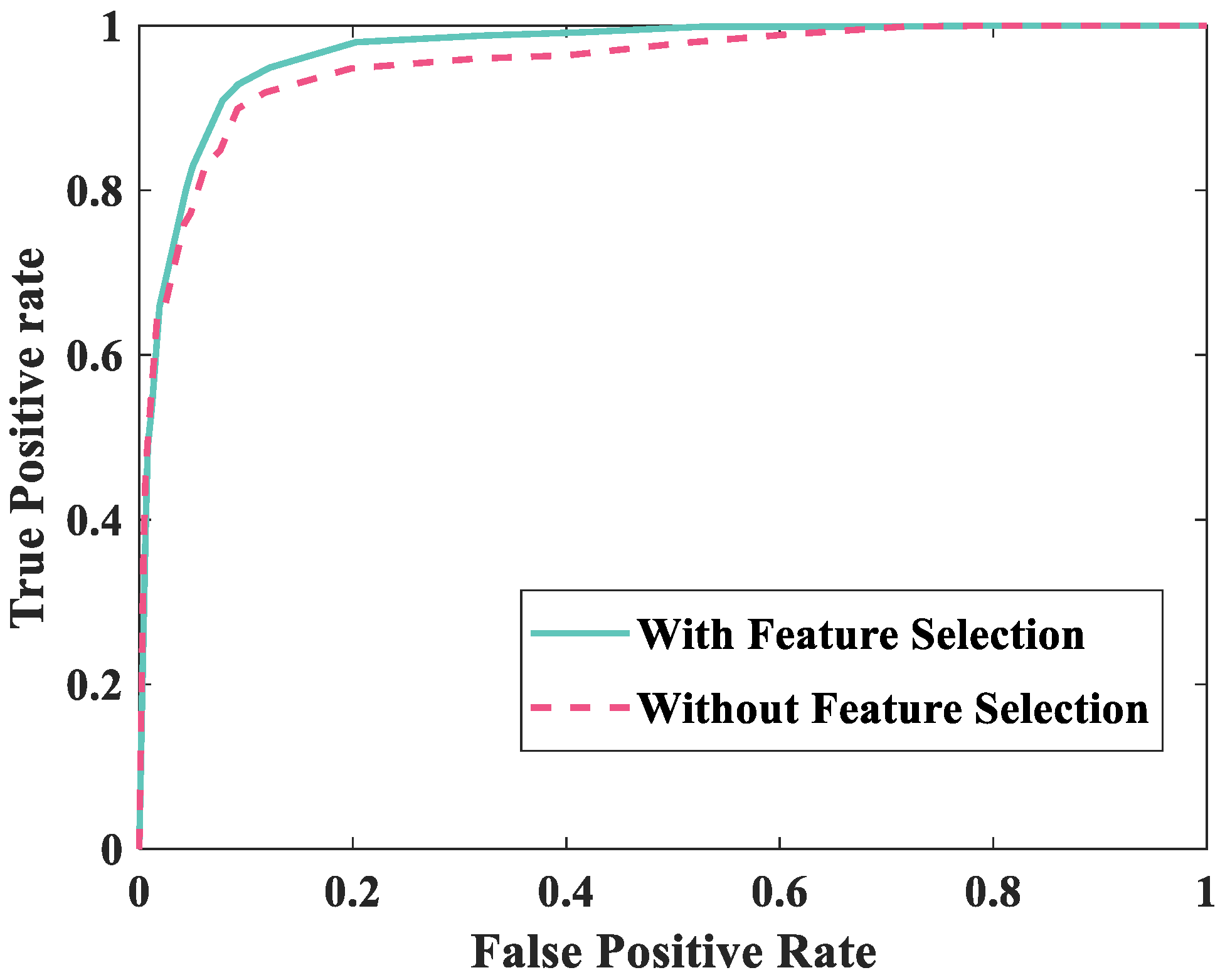

4.3. The Experiment of Feature Selection

4.4. Model Optimization

4.5. Overall Performance

4.6. Comparison with Existing Detectors

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Compromised Web Servers and Web Shells. Available online: https://www.us-cert.gov/ncas/alerts/TA15-314A (accessed on 2 July 2020).

- Yang, W.; Sun, B.; Cui, B. A Webshell Detection Technology Based on HTTP Traffic Analysis. In Proceedings of the International Conference on Innovative Mobile and Internet Services in Ubiquitous Computing, Kunibiki Messe, Matsue, Japan, 4–6 July 2018; Springer: New York, NY, USA, 2018; pp. 336–342.

- Wu, Y.; Sun, Y.; Huang, C.; Jia, P.; Liu, L. Session-Based Webshell Detection Using Machine Learning in Web Logs. Secur. Commun. Netw. 2019, 2019, 3093809.

- Zhang, H.; Guan, H.; Yan, H.; Li, W.; Yu, Y.; Zhou, H.; Zeng, X. Webshell traffic detection with character-level features based on deep learning. IEEE Access 2018, 6, 75268–75277.

- Tian, Y.; Wang, J.; Zhou, Z.; Zhou, S. CNN-Webshell: Malicious Web Shell Detection with Convolutional Neural Network. In Proceedings of the VI International Conference on Network, Communication and Computing, Kunming, China, 8–10 December 2017; ACM: New York, NY, USA, 2017; pp. 75–79.

- Kim, J.; Yoo, D.; Jang, H.; Jeong, K. WebSHArk 1.0: A Benchmark Collection for Malicious Web Shell Detection. JIPS 2015, 11, 229–238.

- Tu, T.D.; Guang, C.; Xiaojun, G.; Wubin, P. Webshell Detection Techniques in Web Applications. In Proceedings of the Fifth International Conference on Computing, Communications and Networking Technologies (ICCCNT), Hefei, China, 11–14 July 2014; pp. 1–7.

- Huang, Y.; Tsai, C.; Lin, T.; Huang, S.; Lee, D.T.; Kuo, S. A testing framework for Web application security assessment. Comput. Netw. 2005, 48, 739–761.

- Son, S.; Shmatikov, V. SAFERPHP: Finding Semantic Vulnerabilities in PHP Applications. In Proceedings of the ACM SIGPLAN 6th Workshop on Programming Languages and Analysis for Security, San Jose, CA, USA, 5 June 2011; pp. 1–13.

- Wassermann, G.; Su, Z. Sound and Precise Analysis of Web Applications for Injection Vulnerabilities. In Proceedings of the 28th ACM SIGPLAN Conference on Programming Language Design and Implementation, San Diego, CA, USA, 10–13 June 2007; pp. 32–41.

- Wassermann, G.; Su, Z. Static Detection of Cross-Site Scripting Vulnerabilities. In Proceedings of the 30th International Conference on Software Engineering (ACM/IEEE), Leipzig, Germany, 10–18 May 2008; pp. 171–180.

- Xie, Y.; Aiken, A. Static Detection of Security Vulnerabilities in Scripting Languages. In Proceedings of the USENIX Security Symposium, Vancouver, BC, Canada, 31 July–4 August 2006; pp. 179–192.

- Cross Site Scripting. Available online: https://en.wikipedia.org/wiki/Cross-site_scripting (accessed on 2 July 2020).

- Nikto 2. Available online: https://cirt.net/Nikto2 (accessed on 2 July 2020).

- WebInspect. Available online: https://webinspect.updatestar.com/ (accessed on 2 July 2020).

- Almgren, M.; Debar, H.; Dacier, M. A Lightweight Tool for Detecting Web Server Attacks; NDSS: San Diego, CA, USA, 2000.

- Kruegel, C.; Vigna, G. Anomaly Detection of Web-Based Attacks. In Proceedings of the 10th ACM Conference on Computer and Communications Security, Washington, DC, USA, 27–30 October 2003; pp. 251–261.

- Robertson, W.; Vigna, G.; Kruegel, C.; Kemmerer, R.A. Using Generalization and Characterization Techniques in the Anomaly-Based Detection of Web Attacks; NDSS: San Diego, CA, USA, 2006.

- Ko, C.; Ruschitzka, M.; Levitt, K. Execution Monitoring of Security-Critical Programs in Distributed Systems: A Specification-Based Approach. In Proceedings of the Symposium on Security and Privacy (Cat. No. 97CB36097), Oakland, CA, USA, 4–7 May 1997; pp. 175–187.

- Uppuluri, P.; Sekar, R. Experiences with Specification-Based Intrusion Detection. In Proceedings of the International Workshop on Recent Advances in Intrusion Detection, Davis, CA, USA, 10–12 October 2001; pp. 172–189.

- Hossain, M.N.; Milajerdi, S.M.; Wang, J.; Eshete, B.; Gjomemo, R.; Sekar, R.; Stoller, S.; Venkatakrishnan, V.N. SLEUTH: Real-Time Attack Scenario Reconstruction from COTS Audit Data. In Proceedings of the 26th USENIX Security Symposium, Vancouver, BC, Canada, 16–18 August 2017; pp. 487–504.

- Starov, O.; Dahse, J.; Ahmad, S.S.; Holz, T.; Nikiforakis, N. No Honor Among Thieves: A Large-Scale Analysis of Malicious Web Shells. In Proceedings of the 25th International Conference on World Wide Web, Montreal, Canada, 11–15 April 2016; pp. 1021–1032.

- Cui, H.; Huang, D.; Fang, Y.; Liu, L.; Huang, C. Webshell detection based on random forest–gradient boosting decision tree algorithm. In Proceedings of the Third International Conference on Data Science in Cyberspace (DSC), Guangzhou, China, 18–21 June 2018; pp. 153–160.

- JohnTroony’s php-Web Shells Repository. Available online: https://github.com/JohnTroony/php-webshells (accessed on 2 July 2020).

- Nikicat’s Web-Malware-Collection Repository. Available online: https://github.com/nikicat/web-malware-collection/tree/master/Backdoors/PHP (accessed on 2 July 2020).

- Tennc’s Webshell Repository. Available online: https://github.com/tennc/webshell/ (accessed on 2 July 2020).

- Webshell-Sample. Available online: https://github.com/ysrc/webshell-sample/ (accessed on 2 July 2020).

- PHP-Backdoors. Available online: https://github.com/bartblaze/PHP-backdoors (accessed on 2 July 2020).

- Base64. Available online: https://en.wikipedia.org/wiki/Base64 (accessed on 2 July 2020).

- UnPHP, the Online PHP Decoder. Available online: http://www.unphp.net/ (accessed on 2 July 2020).

- Zhang, Z.; Li, M.; Zhu, L.; Li, X. SmartDetect: A Smart Detection Scheme for Malicious Web Shell Codes via Ensemble Learning. In Proceedings of the International Conference on Smart Computing and Communication, Tokyo, Japan, 10–12 December 2018; Springer: New York, NY, USA, 2018; pp. 196–205.

- TF-IDF. Available online: http://www.tfidf.com/ (accessed on 2 July 2020).

- Huang, C.; Yin, J.; Hou, F. A text similarity measurement combining word semantic information with TF-IDF method. Jisuanji Xuebao 2011, 34, 856–864.

- Zhu, T.; Gao, H.; Yang, Y.; Bu, K.; Chen, Y.; Downey, D.; Lee, K.; Choudhary, A.N. Beating the artificial chaos: Fighting OSN spam using its own templates. IEEE ACM Trans. Netw. 2016, 24, 3856–3869.

- Webshell Detector. Available online: https://github.com/chaitin/cloudwalker (accessed on 2 July 2020).

- Zhu, T.; Qu, Z.; Xu, H.; Zhang, J.; Shao, Z.; Chen, Y.; Prabhakar, S.; Yang, J. RiskCog: Unobtrusive real-time user authentication on mobile devices in the wild. IEEE Trans. Mobile Comput. 2019, 19, 466–483.

- Zhu, T.; Weng, Z.; Song, Q.; Chen, Y.; Liu, Q.; Chen, Y.; Lv, M.; Chen, T. ESPIALCOG: General, Efficient and Robust Mobile User Implicit Authentication in Noisy Environment. IEEE Trans. Mobile Comput. 2020.

- Zhu, T.; Fu, L.; Liu, Q.; Lin, Z.; Chen, Y.; Chen, T. One Cycle Attack: Fool Sensor-based Personal Gait Authentication with Clustering. IEEE Trans. Inf. Foren. Sec. 2020.

- Zhu, T.; Weng, Z.; Chen, G.; Fu, L. A Hybrid Deep Learning System for Real-World Mobile User Authentication Using Motion Sensors. Sensors 2020, 20, 3876.

- Virustotal. Available online: https://www.virustotal.com/ (accessed on 2 July 2020).

- ClamAV. Available online: https://www.clamav.net/ (accessed on 2 July 2020).

- LOKI. Available online: https://www.nextron-systems.com/loki/ (accessed on 2 July 2020).

- CloudWalker. Available online: https://github.com/chaitin/cloudwalker (accessed on 2 July 2020).

- Fang, Y.; Qiu, Y.; Liu, L.; Huang, C. Detecting Webshell Based on Random Forest with Fasttext. In Proceedings of the International Conference on Computing and Artificial Intelligence, London, UK, 10–12 July 2018; pp. 52–56.

- Xiong, C.; Zhu, T.; Dong, W.; Ruan, L.; Yang, R.; Chen, Y.; Cheng, Y.; Cheng, S.; Chen, X. CONAN: A Practical Real-time APT Detection System with High Accuracy and Efficiency. IEEE Trans. Dependable Secur. Comput. 2020.

- Kingma, D.P.; Welling, M. Auto-Encoding Variational Bayes. Stat 2014, 1050, 10.

| Family Name | Number of Web Shells |
|---|---|
| c99 | 326 |
| r57 | 247 |
| WSO | 112 |
| B374k | 106 |
| NST | 98 |
| NCC | 84 |
| Crystal | 83 |

| Parameter | Value |
|---|---|
| | 2.604 |
| | 3.025 |
| | 17.531 |

| Actual / Predicted | Web Shells | Benign Scripts |
|---|---|---|
| Web shells | TP = 402 | FN = 20 |
| Benign scripts | FP = 46 | TN = 376 |
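As a worked check of the evaluation metrics, the following minimal sketch derives precision, recall, and F-measure from the confusion matrix above. It assumes the standard definitions P = TP / (TP + FP), R = TP / (TP + FN), and F = 2PR / (P + R); the helper name `precision_recall_f1` is hypothetical. For the benign class the roles are swapped, so TN acts as the true positives, FN as the false positives, and FP as the false negatives.

```python
def precision_recall_f1(tp, fp, fn):
    """Return (precision, recall, F-measure) for one class."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Counts taken from the confusion matrix.
TP, FN, FP, TN = 402, 20, 46, 376

# Web shells treated as the positive class.
p_mal, r_mal, f_mal = precision_recall_f1(tp=TP, fp=FP, fn=FN)

# Benign scripts treated as the positive class (roles swapped).
p_ben, r_ben, f_ben = precision_recall_f1(tp=TN, fp=FN, fn=FP)

print(f"malicious: P={p_mal:.2%} R={r_mal:.2%} F={f_mal:.2%}")
print(f"benign:    P={p_ben:.2%} R={r_ben:.2%} F={f_ben:.2%}")
```

For the malicious class this yields P = 89.73%, R = 95.26%, and F = 92.41%, which agrees with the "With feature selection" row of the results table.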

| Categories | P (malicious) | R (malicious) | F (malicious) | P (benign) | R (benign) | F (benign) |
|---|---|---|---|---|---|---|
| With feature selection | 89.73% | 95.26% | 92.41% | 94.05% | 89.10% | 91.51% |
| Without feature selection | 85.52% | 89.57% | 87.50% | 89.05% | 84.83% | 87.48% |

| | c99 | r57 | WSO | B374k | NST | NCC | Crystal | Overall |
|---|---|---|---|---|---|---|---|---|
| Our method | 96.92% | 98.99% | 88.89% | 100% | 97.44% | 85.29% | 87.88% | 95.26% |
| VirusTotal | 92.31% | 93.94% | 88.89% | 97.62% | 92.31% | 52.94% | 48.48% | 86.26% |
| ClamAV | 83.08% | 83.84% | 37.78% | 26.19% | 20.51% | 17.65% | 6.06% | 55.69% |
| LOKI | 86.15% | 87.88% | 51.11% | 52.38% | 17.95% | 26.47% | 12.12% | 62.56% |
| CloudWalker | 95.38% | 93.94% | 91.11% | 90.48% | 15.38% | 17.65% | 6.06% | 73.46% |
| Tu et al. | 94.17% | 96.76% | 62.50% | 54.72% | 72.45% | 54.76% | 63.86% | 79.92% |
| Fang et al. | 96.31% | 87.57% | 94.64% | 93.40% | 83.67% | 82.14% | 85.54% | 92.99% |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhu, T.; Weng, Z.; Fu, L.; Ruan, L. A Web Shell Detection Method Based on Multiview Feature Fusion. Appl. Sci. 2020, 10, 6274. https://doi.org/10.3390/app10186274
