Speech Recognition for Task Domains with Sparse Matched Training Data

Abstract

1. Introduction

2. Related Work

2.1. End-to-End Speech Recognition

2.2. Active Learning

2.3. Disentangled Representation Learning in Speech Processing

2.4. Teacher-Student Learning for Domain Adaptation

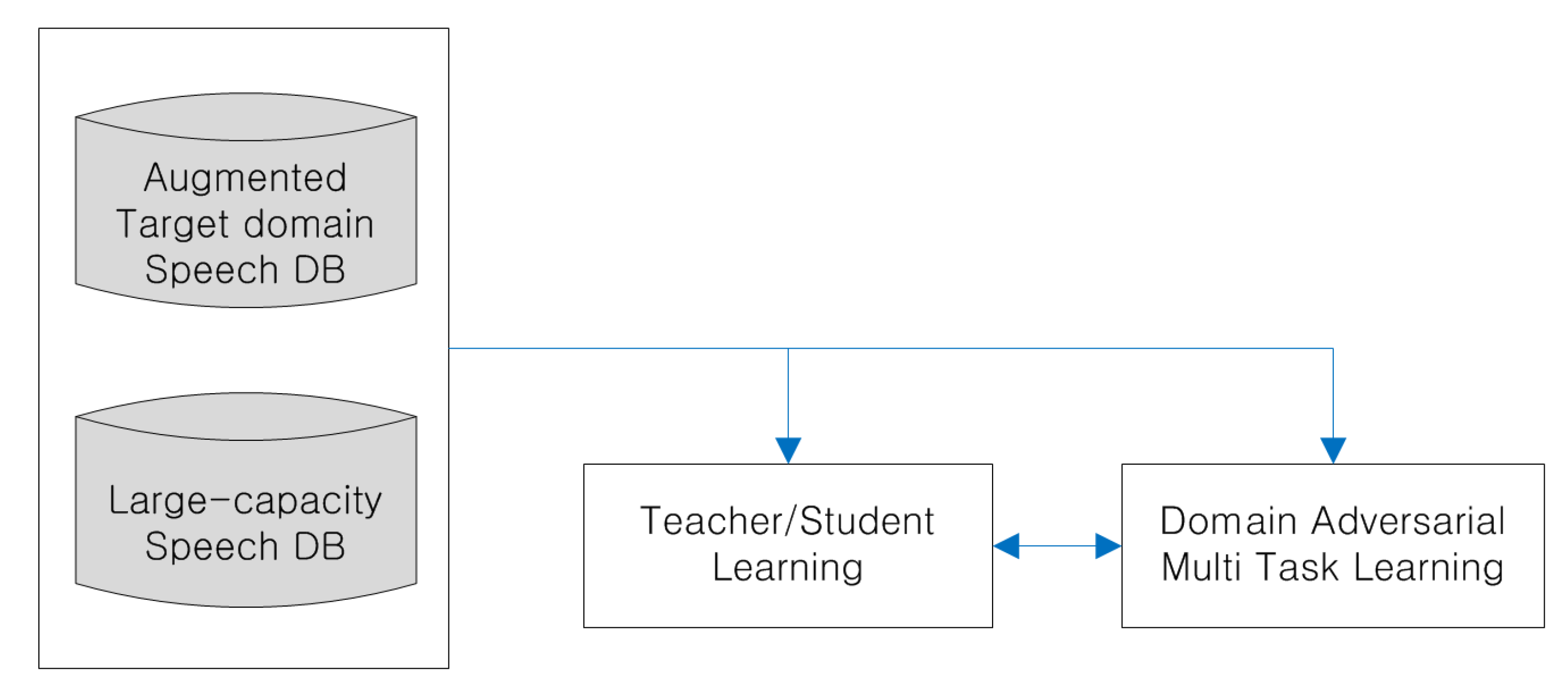

3. Proposed Methods

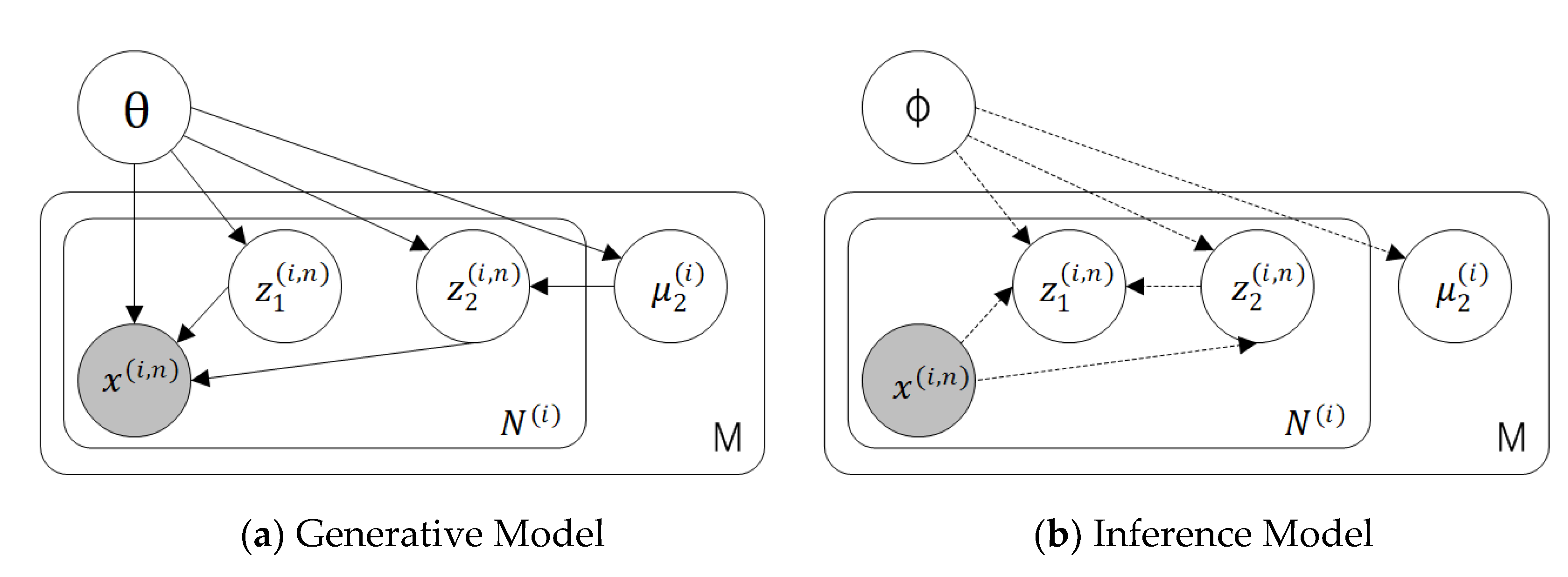

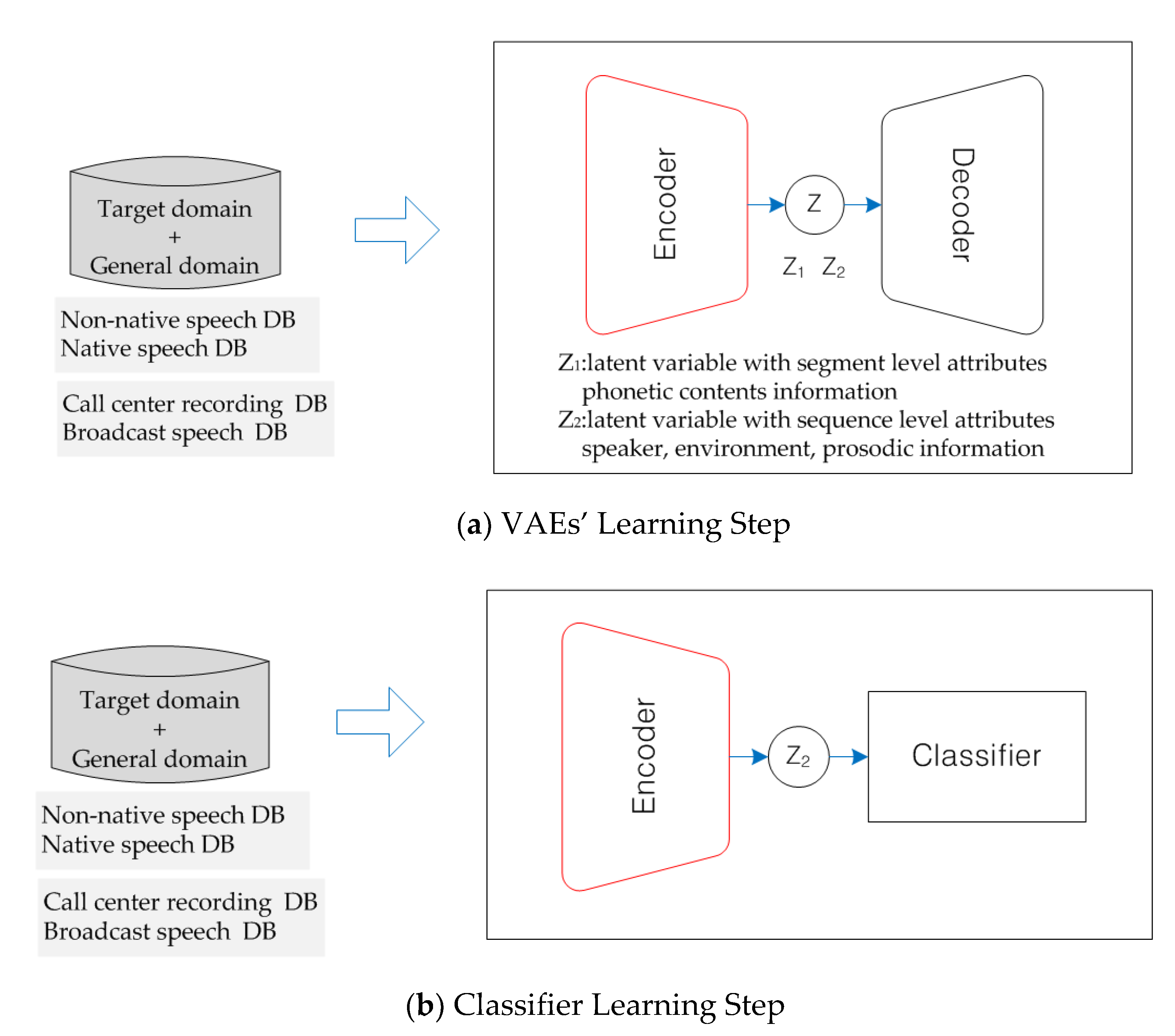

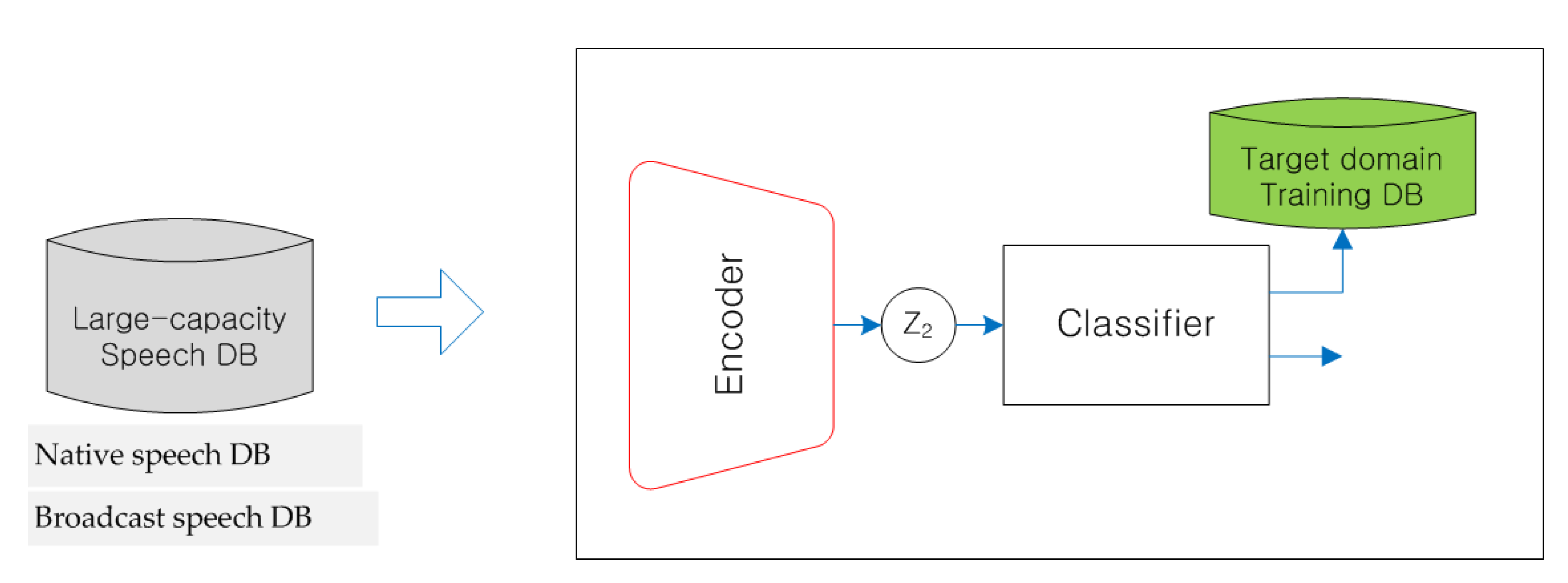

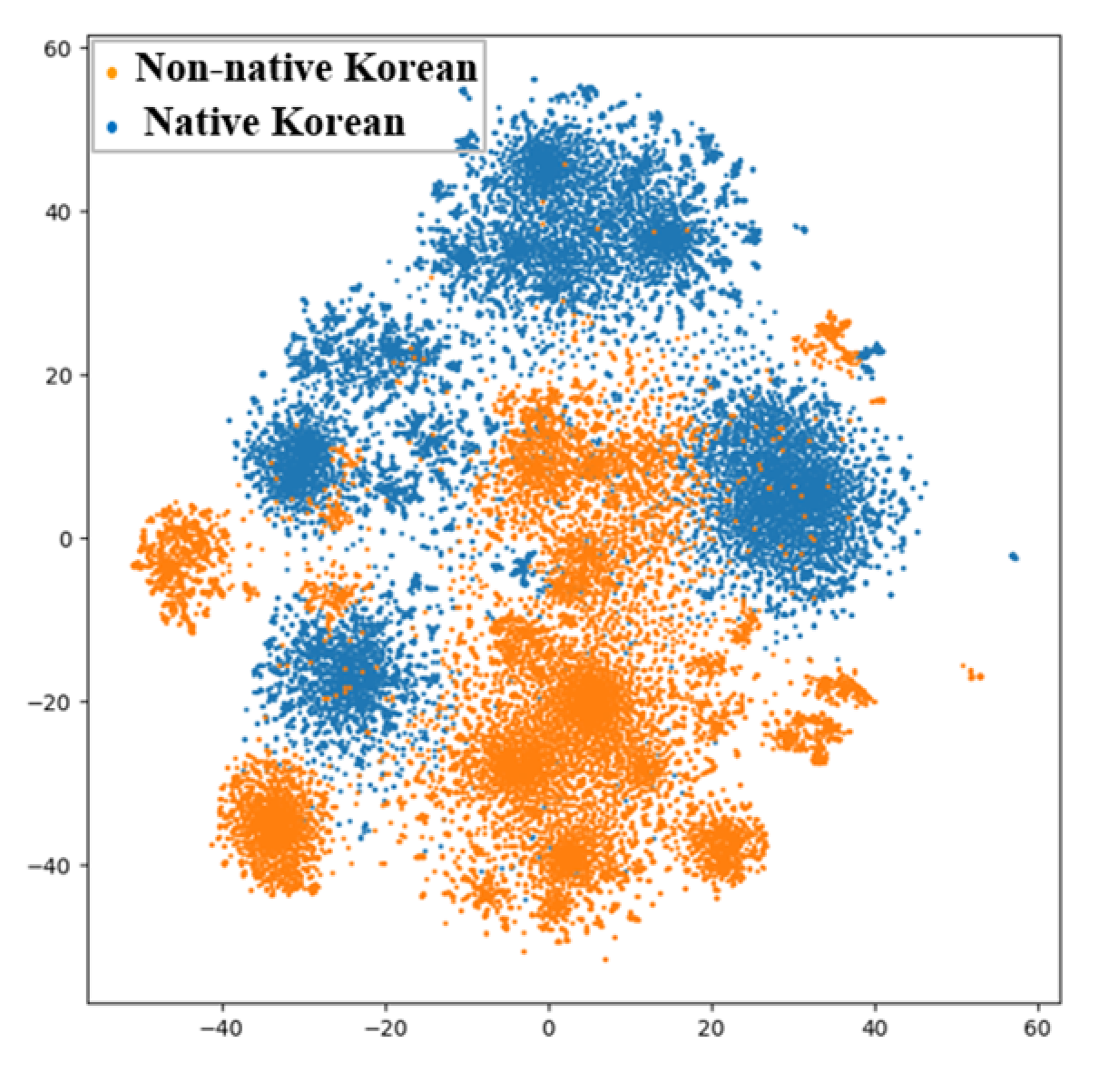

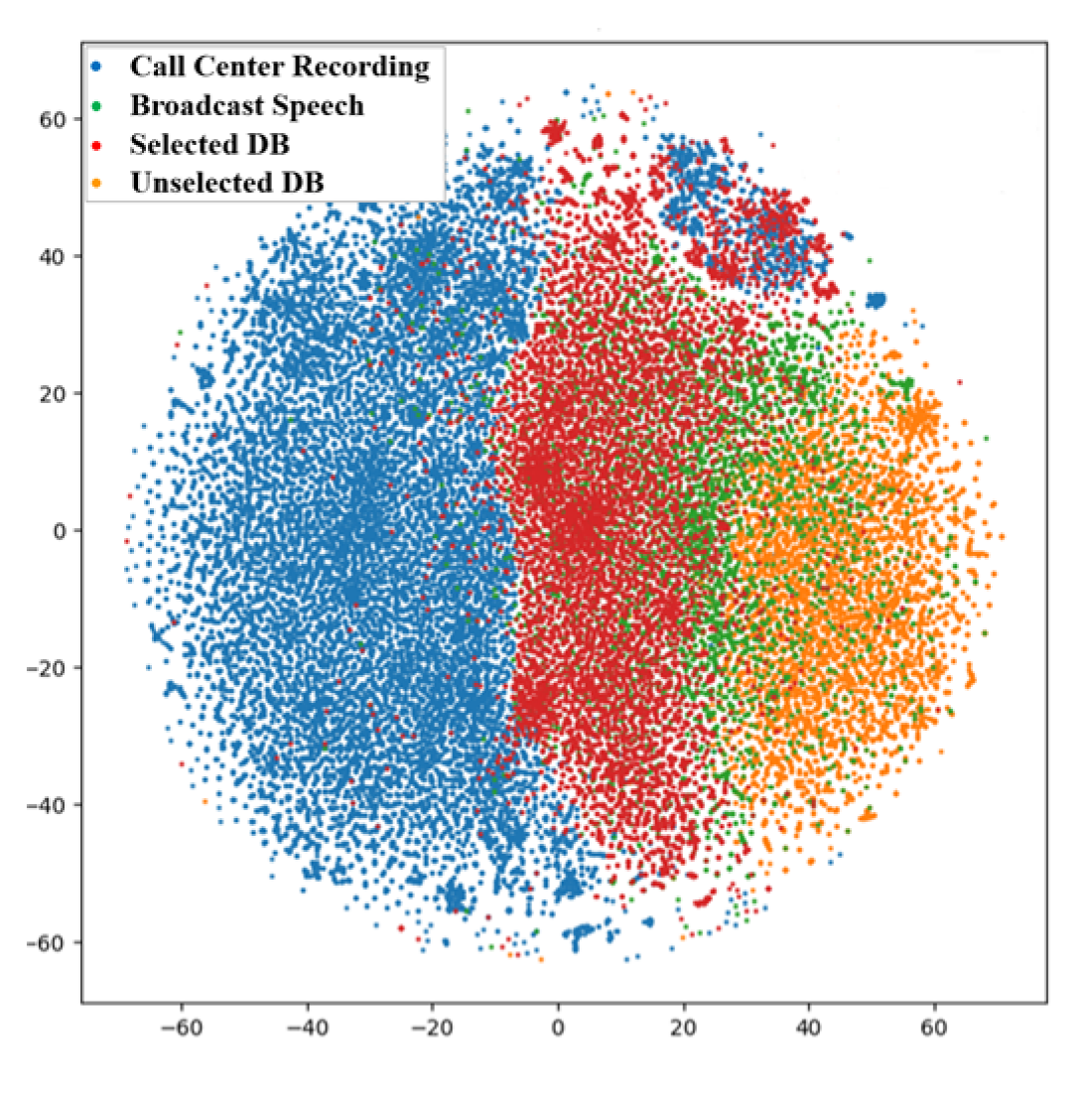

3.1. Active Learning Using Latent Variables with Disentangled Attributes

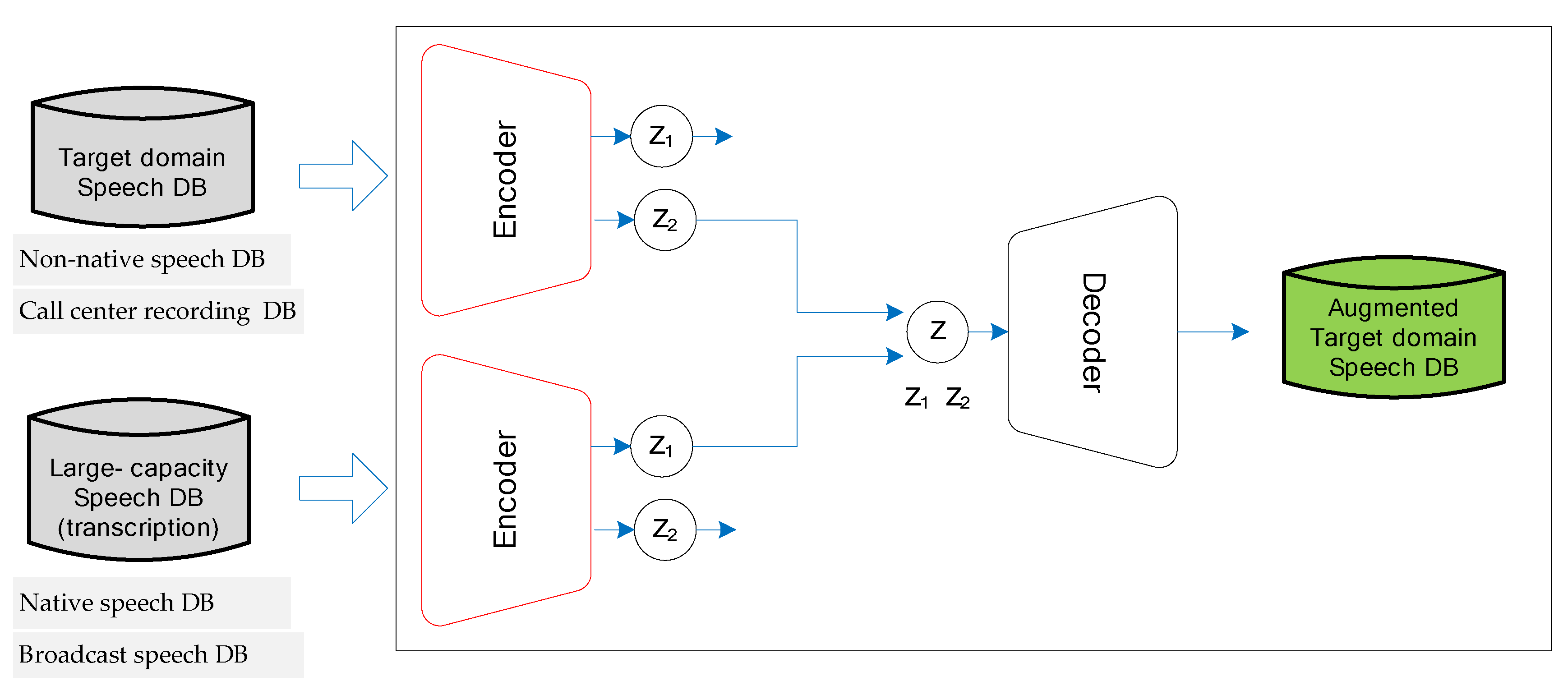

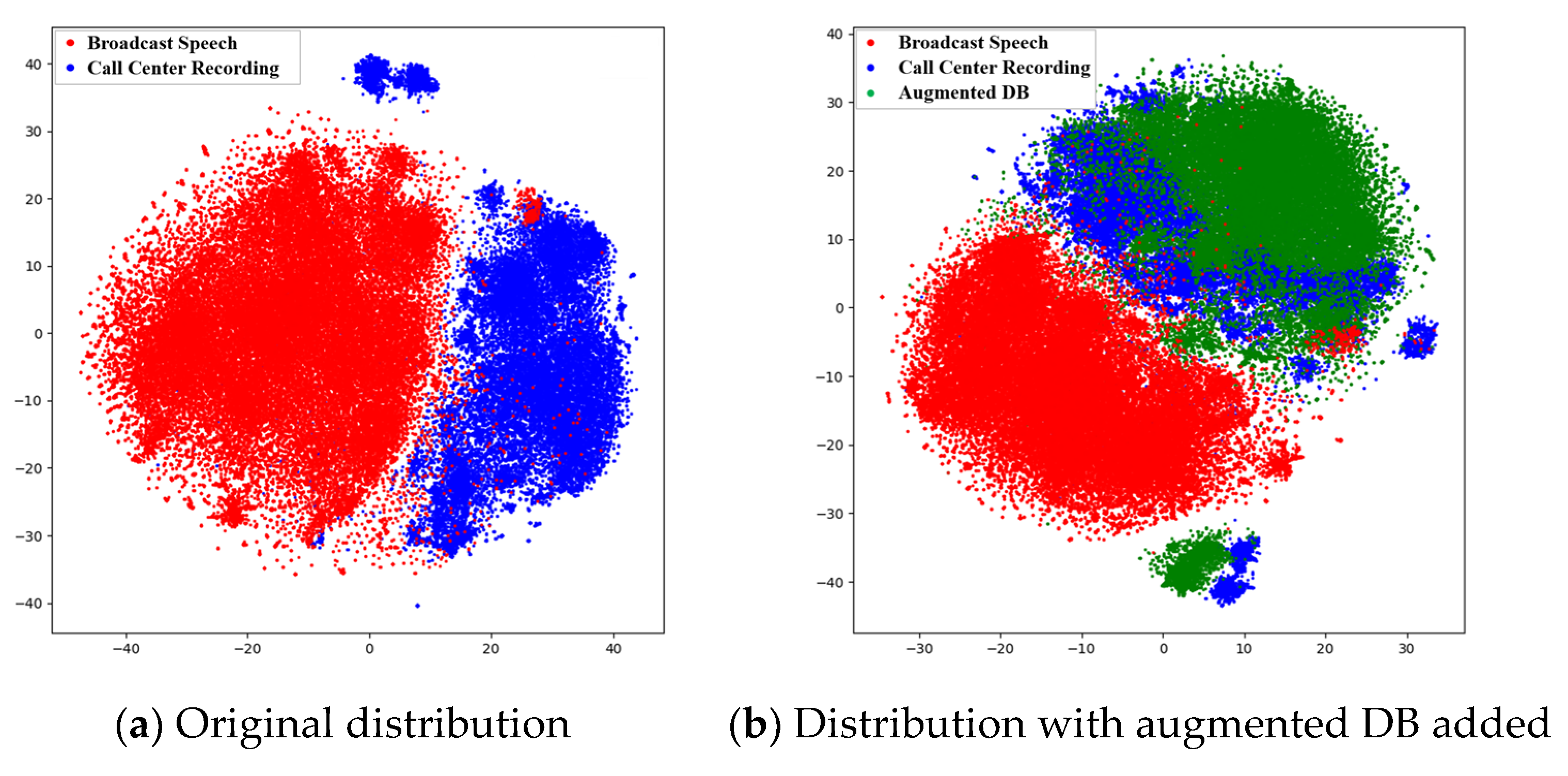
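The following is a minimal sketch of latent-variable-based data selection. It makes three assumptions that the text does not state. First, each utterance is summarized by its 32-dimensional sequence-level latent variable Z2. Second, utterances in the large labeled pool are ranked by Euclidean distance to the centroid of the target-domain Z2 vectors. Third, utterances are added greedily until a duration budget is filled, such as the 500 h or 1000 h of "actively selected" data in the result tables. The function names, the distance measure, and the centroid criterion are all hypothetical. This is not the authors' exact selection rule.

```python
import math
from typing import Dict, List, Sequence, Tuple

Vector = Sequence[float]


def mean_vector(vectors: List[Vector]) -> List[float]:
    """Element-wise mean of equally sized latent vectors (target-domain centroid)."""
    dim = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


def euclidean(a: Vector, b: Vector) -> float:
    """Euclidean distance between two latent vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def select_by_latent_similarity(
    target_z2: List[Vector],
    pool: Dict[str, Tuple[Vector, float]],
    budget_hours: float,
) -> List[str]:
    """Hypothetical criterion: keep the pool utterances whose Z2 lies closest to
    the target-domain centroid, skipping any that would exceed the hour budget.

    pool maps an utterance ID to (Z2 vector, duration in hours).
    """
    centroid = mean_vector(target_z2)
    ranked = sorted(pool.items(), key=lambda item: euclidean(item[1][0], centroid))
    selected: List[str] = []
    used = 0.0
    for utt_id, (_z2, hours) in ranked:
        if used + hours > budget_hours:
            continue  # too long for the remaining budget; try the next candidate
        selected.append(utt_id)
        used += hours
    return selected


# Toy usage with 2-dimensional vectors instead of 32 for readability.
toy_pool = {"a": ([1.0, 1.0], 1.0), "b": ([5.0, 5.0], 1.0), "c": ([1.2, 0.8], 1.0)}
print(select_by_latent_similarity([[0.9, 1.1], [1.1, 0.9]], toy_pool, 2.0))  # ['a', 'c']
```

Ranking by distance to a single centroid is the simplest possible choice. A per-utterance nearest-neighbour score or a density estimate over the target Z2 vectors would be equally plausible readings of the heading.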

3.2. Teacher/Student-Based Transfer Learning Using Augmented Training Data
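Teacher/student transfer learning usually trains the student to reproduce the teacher's output distribution instead of hard labels. The sketch below shows that generic objective under an assumed setup. The teacher receives the original input, and the student receives the corresponding augmented version (for example, SDM-like or call-center-like features), so each output step has a pair of logit vectors. The per-step cross-entropy formulation and all names are illustrative assumptions. This is not the exact loss used in this paper.

```python
import math
from typing import List, Sequence


def log_softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable log-softmax over one vector of logits."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_sum for x in logits]


def teacher_student_loss(
    teacher_logits: Sequence[Sequence[float]],
    student_logits: Sequence[Sequence[float]],
) -> float:
    """Average over steps of -sum_k p_teacher(k) * log p_student(k).

    Assumed setup: teacher_logits[t] comes from the teacher on the original input,
    student_logits[t] from the student on the paired augmented input.
    """
    if len(teacher_logits) != len(student_logits):
        raise ValueError("teacher and student must cover the same steps")
    total = 0.0
    for t_step, s_step in zip(teacher_logits, student_logits):
        p_teacher = [math.exp(v) for v in log_softmax(t_step)]  # soft targets
        log_p_student = log_softmax(s_step)
        total -= sum(p * lp for p, lp in zip(p_teacher, log_p_student))
    return total / len(teacher_logits)
```

When the student's distribution equals the teacher's, the loss reduces to the teacher's entropy, which is its minimum over student outputs. For example, `teacher_student_loss([[0.0, 0.0]], [[0.0, 0.0]])` equals ln 2. Any student distribution that differs from the teacher's gives a larger value.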

4. Experimental Settings

4.1. Corpus Descriptions

- AMI Meeting corpus (http://groups.inf.ed.ac.uk/ami/corpus)

- Non-native Korean speech corpus

- Korean call center recording corpus

- Korean broadcast speech corpus

4.2. Detailed Architecture of the End-to-End ASR

5. Experimental Results

5.1. Experiments for Active Learning Using Latent Variables with Disentangled Attributes

5.2. Experiments on Teacher/Student-Based Transfer Learning Using Augmented Training Data

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References


| Corpus | Purpose | Duration (h) | Number of Speakers |
|---|---|---|---|
| AMI Meeting | Functional verification of the integrated systems | 100 | 200 |
| Non-native Korean speech | Task domain with sparse matched training data | 520 | 830 |
| Korean call center recording | Task domain with sparse matched training data | 1000 | > 100 |
| Korean broadcast speech | Large-scale labeled speech database | 14,000 | > 1000 |

| Feature | Dimension | Above 1 s | Above 2 s | Above 5 s | Above 10 s |
|---|---|---|---|---|---|
| MFCC | 40 | 4.7 | 4.0 | 2.0 | 1.7 |
| LogMel | 40 | 2.7 | 3.0 | 2.7 | 2.2 |
| Sequence-level latent variable (Z2) | 32 | 1.0 | 0.3 | 0.0 | 0.0 |

| Training DB | Error Rate |
|---|---|
| 200 h non-native Korean | 19.1 |
| +500 h randomly selected native Korean | 17.1 |
| +500 h actively selected native Korean | 16.3 |

| Training DB | Error Rate |
|---|---|
| 500 h Korean call center recording | 21.1 |
| +1000 h randomly selected Korean broadcast speech | 19.4 |
| +1000 h actively selected Korean broadcast speech | 18.5 |

| Training DB | Eval | Dev |
|---|---|---|
| IHM (individual headset microphone) | 55.9 | 51.1 |
| SDM (single distant microphone) | 35.0 | 32.3 |
| SDM + AugSDM | 32.5 | 29.9 |

| Training DB | Error Rate |
|---|---|
| 500 h Korean call center recording | 21.1 |
| +500 h Korean broadcast speech | 19.6 |
| +500 h AugCall augmented by the proposed method | 18.5 |
| 1000 h Korean call center recording | 18.5 |
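The gains in the result tables above are easier to compare as relative error-rate reductions, 100 × (baseline − new) / baseline. The short sketch below computes them from the reported numbers. The function name and dictionary labels are only illustrative.

```python
def relative_reduction(baseline: float, new: float) -> float:
    """Relative error-rate reduction in percent: 100 * (baseline - new) / baseline."""
    return 100.0 * (baseline - new) / baseline


# (baseline, improved) error rates copied from the result tables
results = {
    "Non-native Korean, +500 h actively selected": (19.1, 16.3),
    "Call center, +1000 h actively selected": (21.1, 18.5),
    "AMI Eval, SDM + AugSDM vs. SDM": (35.0, 32.5),
    "Call center, +500 h AugCall": (21.1, 18.5),
}

for name, (base, new) in results.items():
    print(f"{name}: {relative_reduction(base, new):.1f}% relative")
```

This prints reductions of 14.7%, 12.3%, 7.1% and 12.3%. The last one is the same reduction obtained by doubling the real call-center data to 1000 h, because both systems reach an error rate of 18.5.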

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Kang, B.O.; Jeon, H.B.; Park, J.G. Speech Recognition for Task Domains with Sparse Matched Training Data. Appl. Sci. 2020, 10, 6155. https://doi.org/10.3390/app10186155


Chicago/Turabian style: Kang, Byung Ok, Hyeong Bae Jeon, and Jeon Gue Park. 2020. "Speech Recognition for Task Domains with Sparse Matched Training Data." Applied Sciences 10, no. 18: 6155. https://doi.org/10.3390/app10186155
