An Aircraft Object Detection Algorithm Based on Small Samples in Optical Remote Sensing Image

Abstract

1. Introduction

2. Theoretical Analysis and Methods

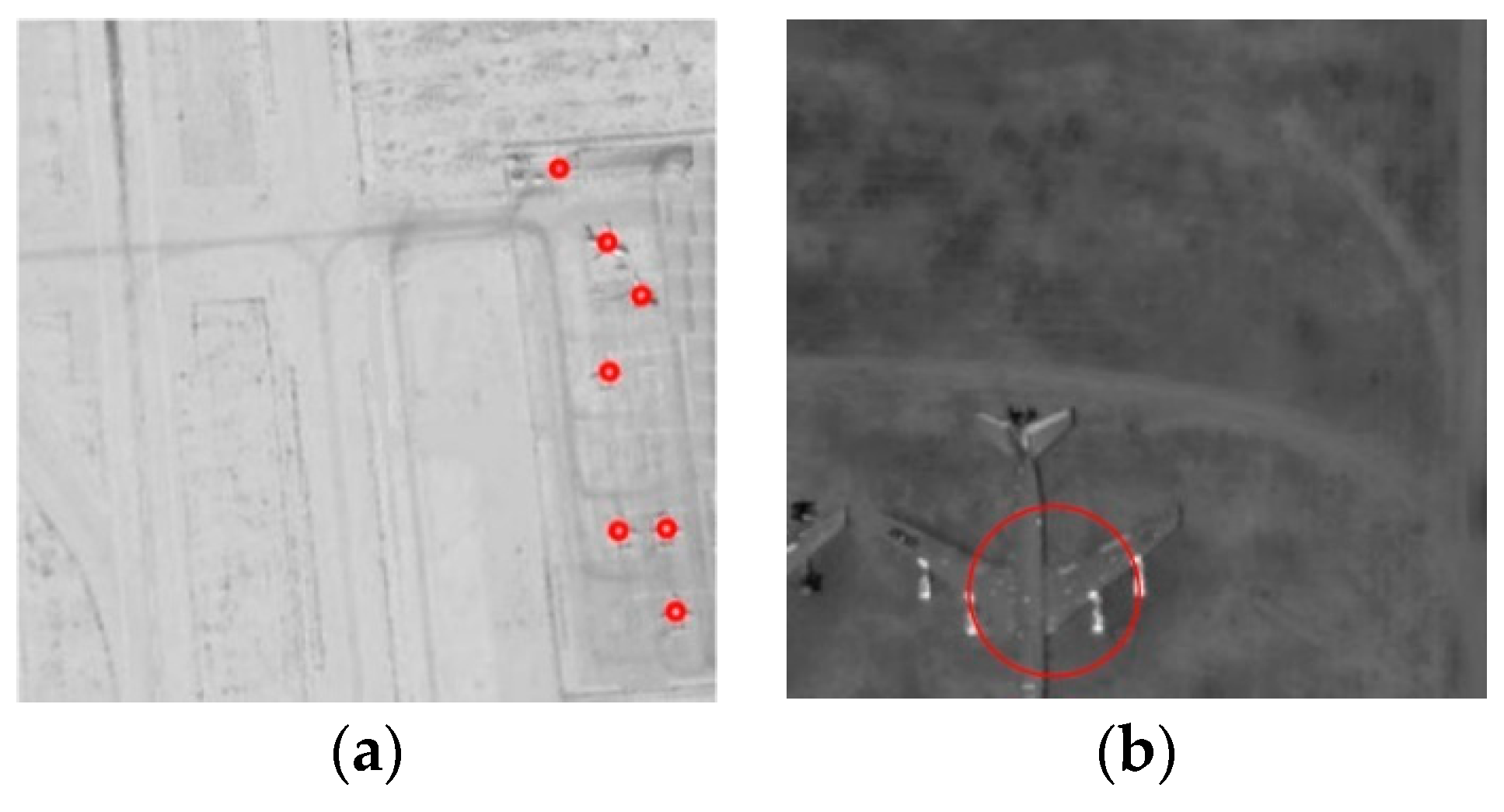

2.1. Weak and Small Object Detection Algorithm

2.2. Fusion Algorithm

2.2.1. Clustering Algorithm

2.2.2. False Alarms Elimination

3. Results

4. Conclusions

Author Contributions

Funding

Conflicts of Interest

| The Proposed Algorithm |
|---|
| Input: Test dataset images |
| Execute: |
| Output: Images containing the location of the aircraft |

| Evaluation Indicator | The Proposed Algorithm | Faster R-CNN |
|---|---|---|
| Precision | 89.25% | 72.22% |
| Accuracy | 82.56% | 64.09% |
| Recall | 91.68% | 85.07% |
| Missing Alarm | 8.32% | 14.93% |
| False Alarm | 10.75% | 27.78% |
| F-measure | 90.44% | 78.12% |
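The indicators in the table are not independent, and their relationships can be checked with a few lines of arithmetic. The sketch below assumes the usual detection definitions, which are not spelled out in this excerpt: missing alarm = 1 − recall, false alarm = 1 − precision, F-measure as the harmonic mean of precision and recall, and accuracy taken as TP / (TP + FP + FN). The last of these is an assumption that happens to reproduce the reported values. The function and variable names are illustrative only.

```python
def derived_metrics(precision, recall):
    """Derive the remaining indicators from precision and recall (fractions in [0, 1]).

    Assumed definitions (not stated in the excerpt):
      missing alarm = 1 - recall
      false alarm   = 1 - precision
      F-measure     = 2PR / (P + R)
      accuracy      = TP / (TP + FP + FN) = PR / (P + R - PR)
    """
    pr = precision * recall
    return {
        "Missing Alarm": 1.0 - recall,
        "False Alarm": 1.0 - precision,
        "F-measure": 2.0 * pr / (precision + recall),
        "Accuracy": pr / (precision + recall - pr),
    }


# Values copied from the table above, in percent.
TABLE = {
    "The Proposed Algorithm": {
        "Precision": 89.25, "Recall": 91.68, "Accuracy": 82.56,
        "Missing Alarm": 8.32, "False Alarm": 10.75, "F-measure": 90.44,
    },
    "Faster R-CNN": {
        "Precision": 72.22, "Recall": 85.07, "Accuracy": 64.09,
        "Missing Alarm": 14.93, "False Alarm": 27.78, "F-measure": 78.12,
    },
}


def max_deviation(row):
    """Largest gap (in percentage points) between reported and derived values."""
    derived = derived_metrics(row["Precision"] / 100.0, row["Recall"] / 100.0)
    return max(abs(100.0 * value - row[name]) for name, value in derived.items())


if __name__ == "__main__":
    for method, row in TABLE.items():
        print(f"{method}: max deviation {max_deviation(row):.3f} percentage points")
```

Under these assumed definitions, every derived value agrees with the reported one to within rounding of the second decimal place, so the table is internally consistent for both detectors.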

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Wang, T.; Cao, C.; Zeng, X.; Feng, Z.; Shen, J.; Li, W.; Wang, B.; Zhou, Y.; Yan, X. An Aircraft Object Detection Algorithm Based on Small Samples in Optical Remote Sensing Image. Appl. Sci. 2020, 10, 5778. https://doi.org/10.3390/app10175778
