A Review of the Artificial Neural Network Models for Water Quality Prediction

Abstract

1. Introduction

2. Methods

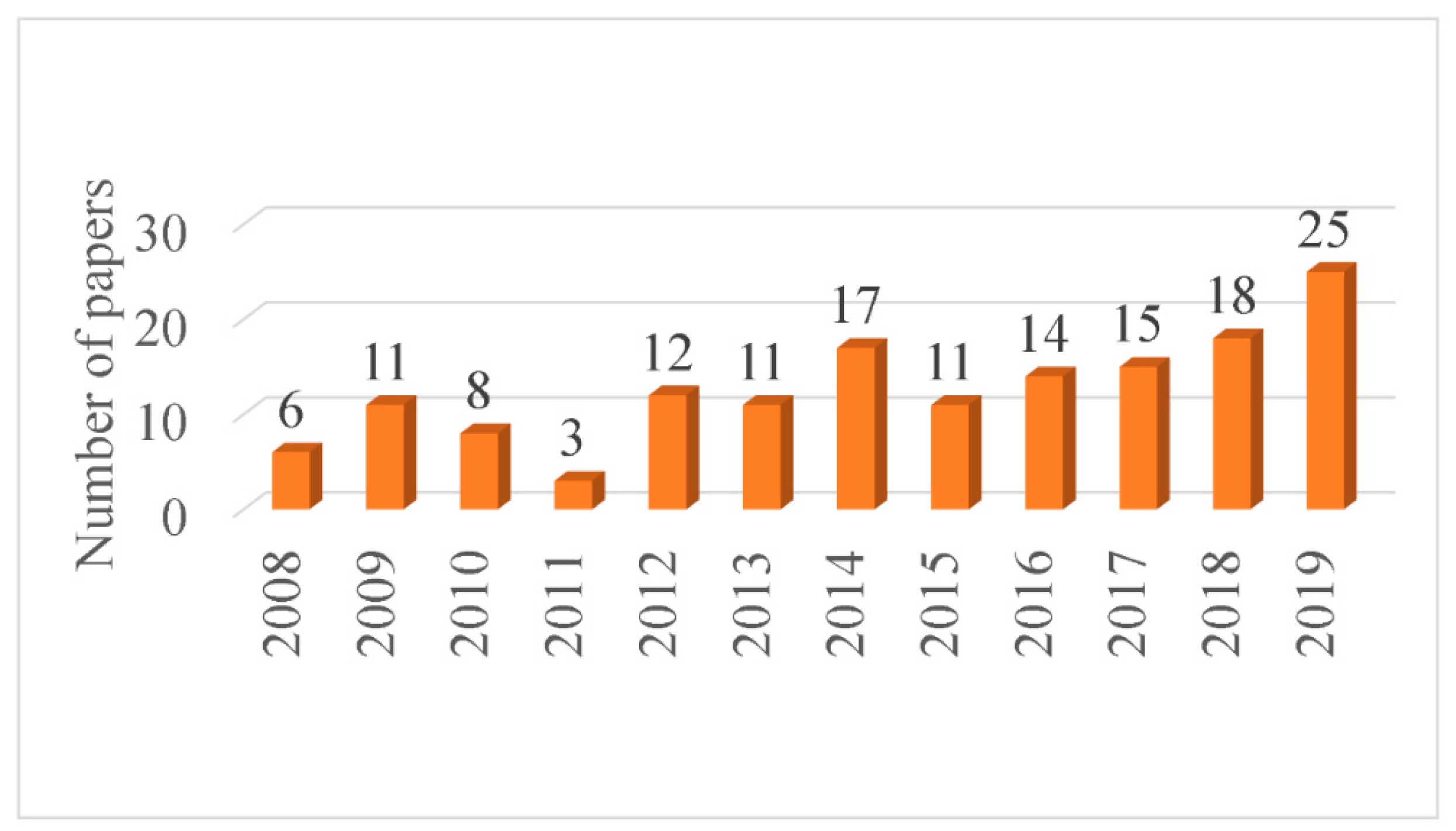

- First, we identified ANN-related papers in influential water- and environment-related journals to ensure that high-quality papers were included in the review. These papers come mainly from journals in the subject areas of environmental science and ecology, water resources, and engineering and application.

- Thereafter, a keyword search of the ISI Web of Science was conducted for the period 2008–2019 using the keywords water quality, river, lake, reservoir, WWTP, groundwater, pond, prediction, and forecasting, combined with the names of one or more ANN methods, such as neural network, MLP, RBFNN, GRNN, and RNN.

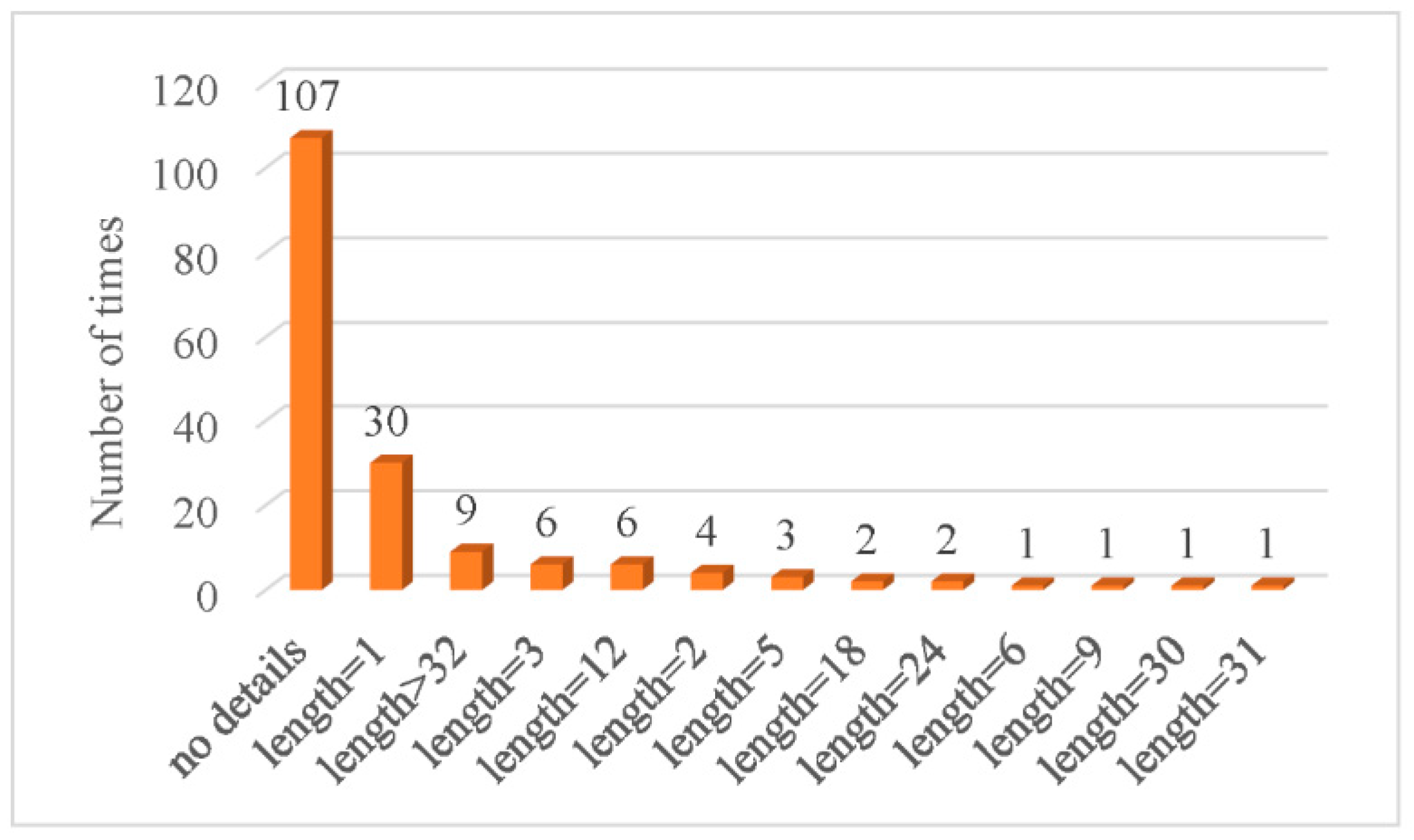

- Then, through the two search steps above, 151 English-language articles relevant to our focus were selected. The basic information for each paper, including authors (year), location, water quality variables, meteorological factors, other factors, output strategy, data size, time step, data dividing, methods, and prediction lengths, is provided in Appendix A.
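The keyword search in the steps above can be sketched as a Web of Science advanced-search string. The Python snippet below is a minimal illustration, not the query the authors actually ran: the text does not say how the keywords were combined, so it assumes an AND across three OR-groups (water bodies, prediction tasks, ANN method names) and uses the `TS=` (topic) and `PY=` (publication year) field tags. The helper names `or_group` and `build_wos_query` are hypothetical, and the ANN list contains only the examples named in the text.

```python
"""Sketch: assemble a Web of Science advanced-search string from the Methods keywords.

Assumptions (not stated in the review): the three keyword groups are combined
with AND, each group is an OR-list of quoted phrases, and the TS= (topic) and
PY= (publication year) field tags are used.
"""

# Water bodies and treatment plants named in the Methods text.
WATER_TERMS = ["water quality", "river", "lake", "reservoir", "WWTP", "groundwater", "pond"]
# Task keywords named in the Methods text.
TASK_TERMS = ["prediction", "forecasting"]
# ANN method names: only the examples given in the text; the full list is not reported.
ANN_TERMS = ["neural network", "MLP", "RBFNN", "GRNN", "RNN"]


def or_group(terms):
    """Quote each term and join them into one parenthesised OR-group."""
    return "(" + " OR ".join(f'"{term}"' for term in terms) + ")"


def build_wos_query(start_year, end_year):
    """Require one term from each group and restrict the publication years."""
    return (
        f"TS={or_group(WATER_TERMS)} AND TS={or_group(TASK_TERMS)} "
        f"AND TS={or_group(ANN_TERMS)} AND PY=({start_year}-{end_year})"
    )


if __name__ == "__main__":
    print(build_wos_query(2008, 2019))
```

Before reusing the printed string, check it against the current Web of Science search-syntax documentation, since field tags and year-range syntax are assumptions of this sketch.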

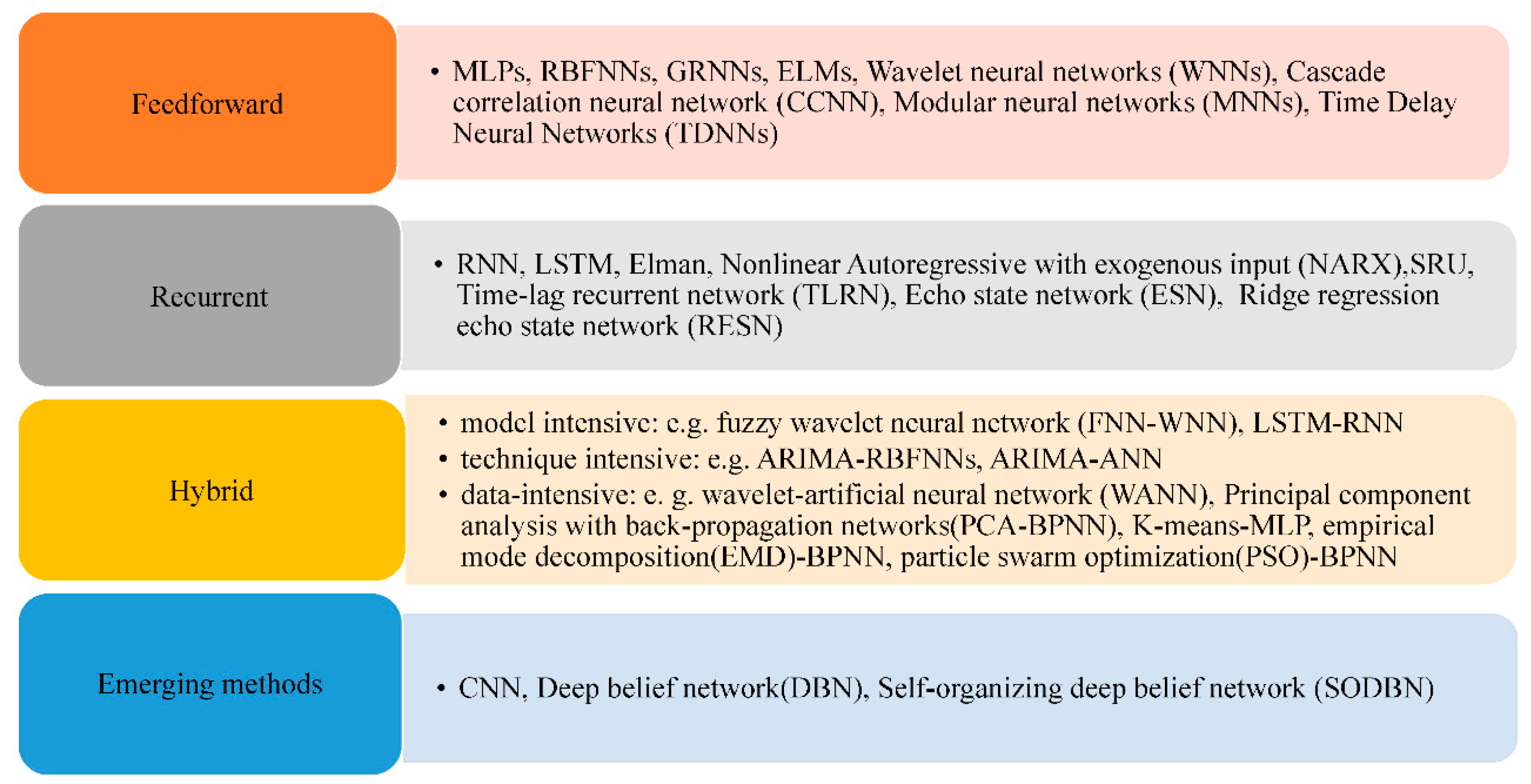

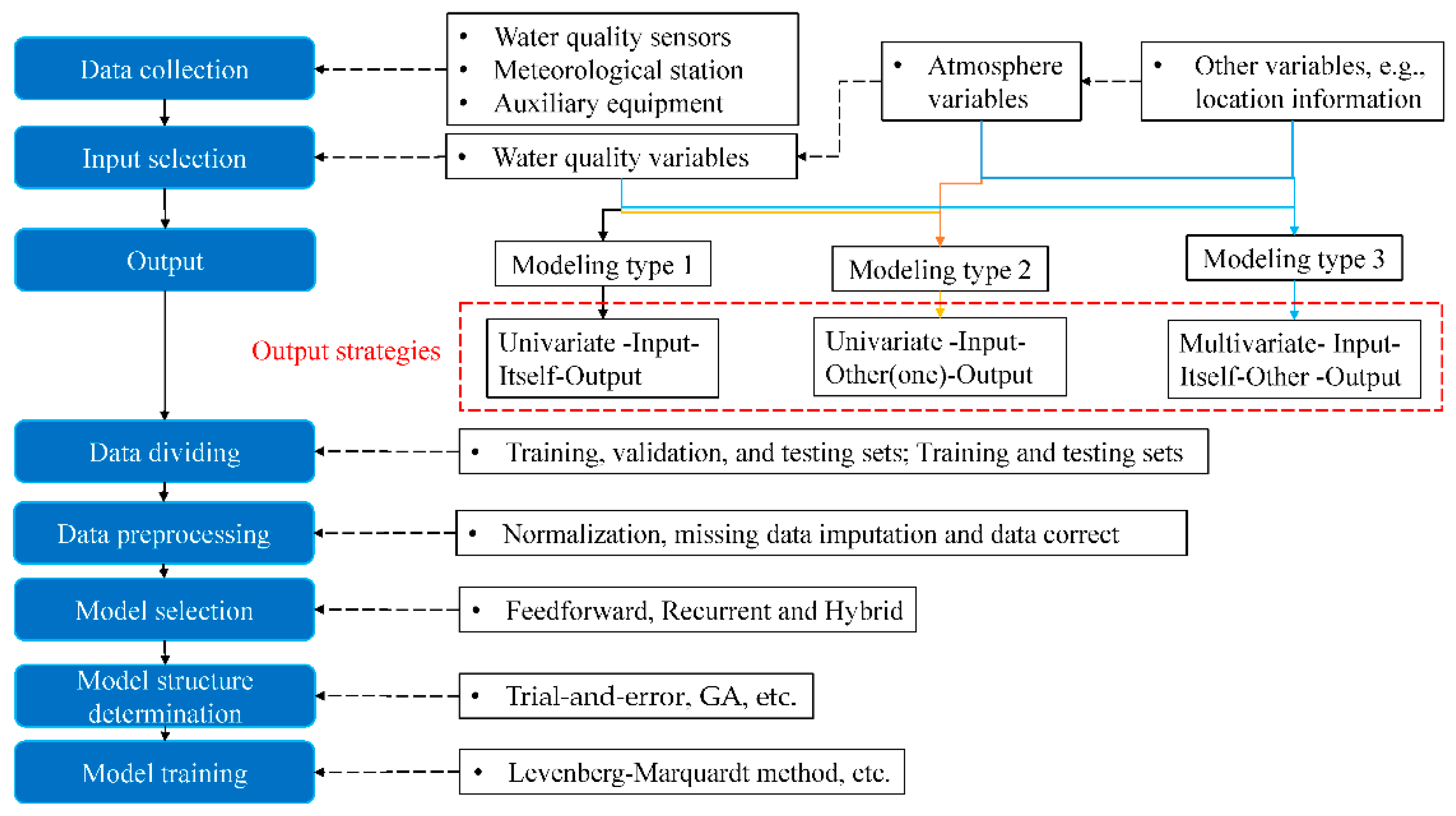

3. Three Basic Model Structures in Water Quality Prediction

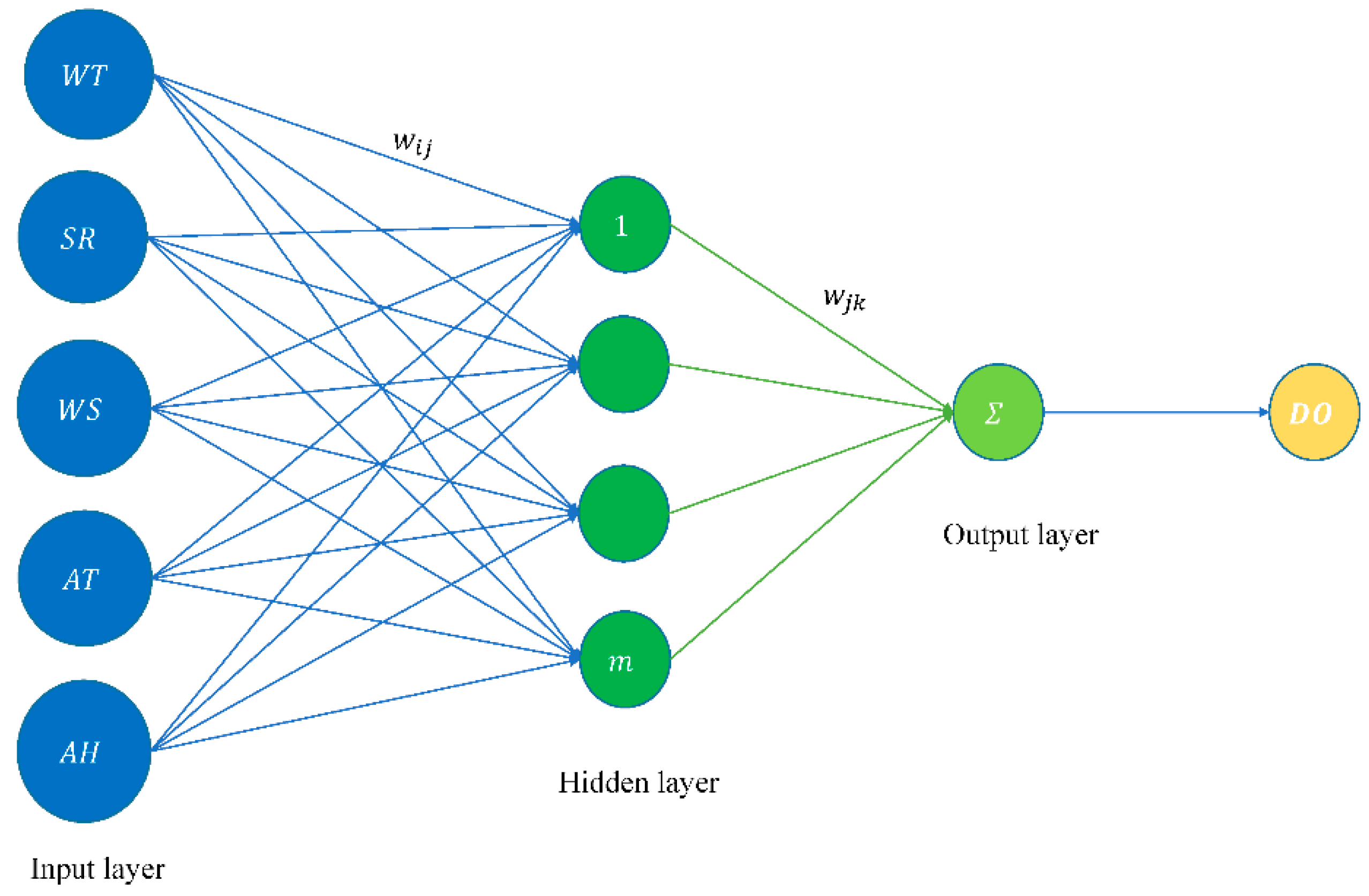

3.1. Feedforward Architectures

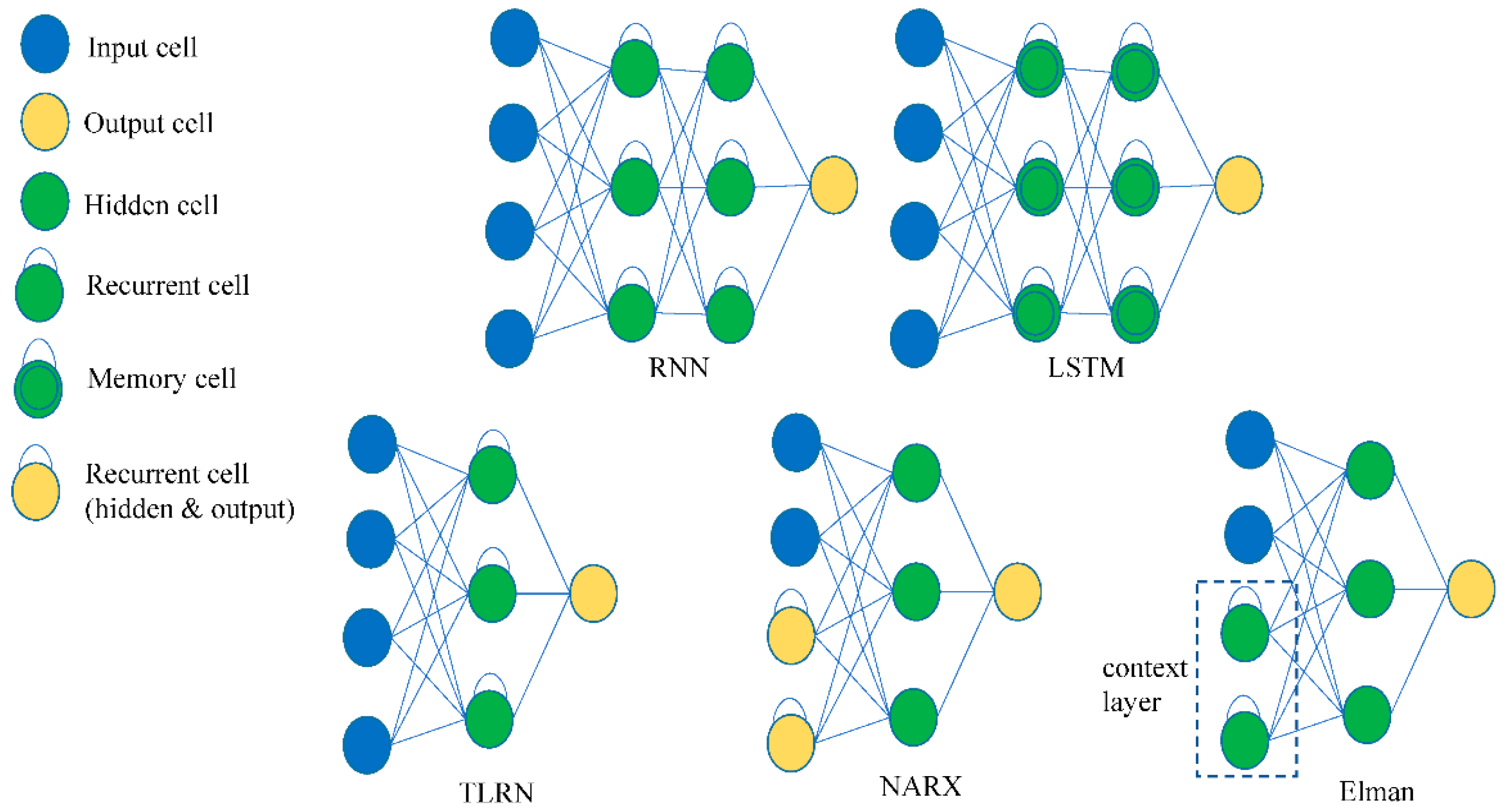

3.2. Recurrent Architectures

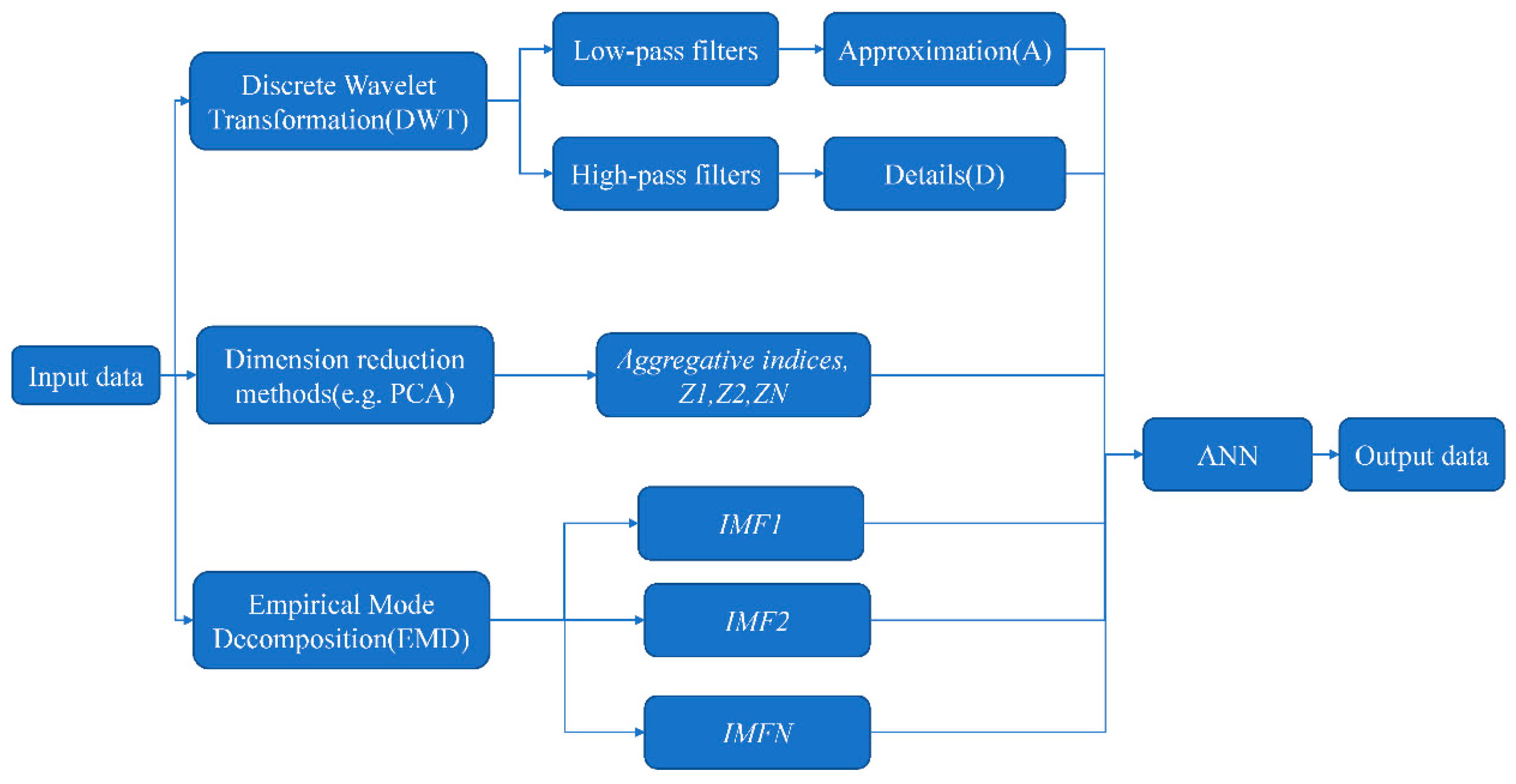

3.3. Hybrid Architectures

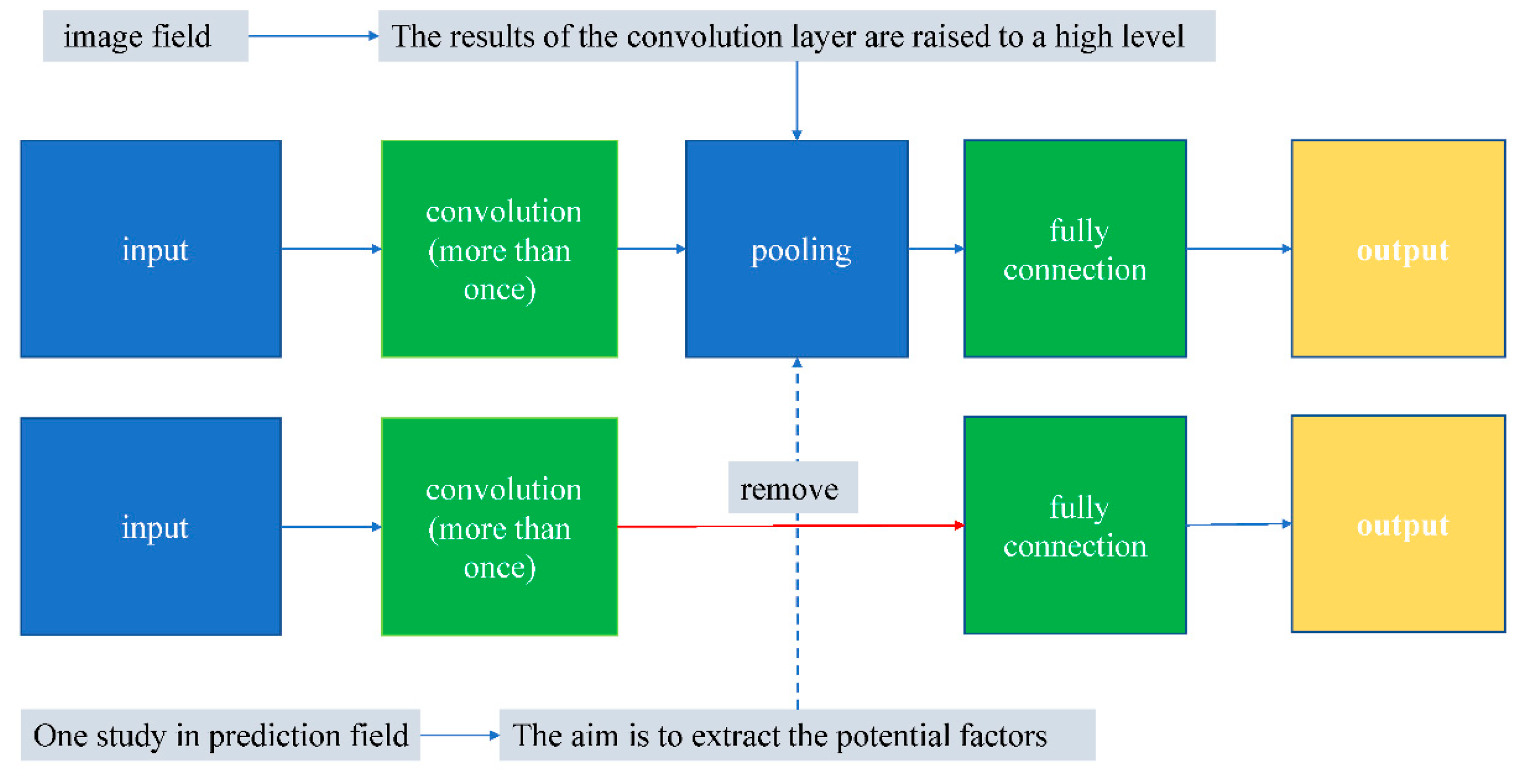

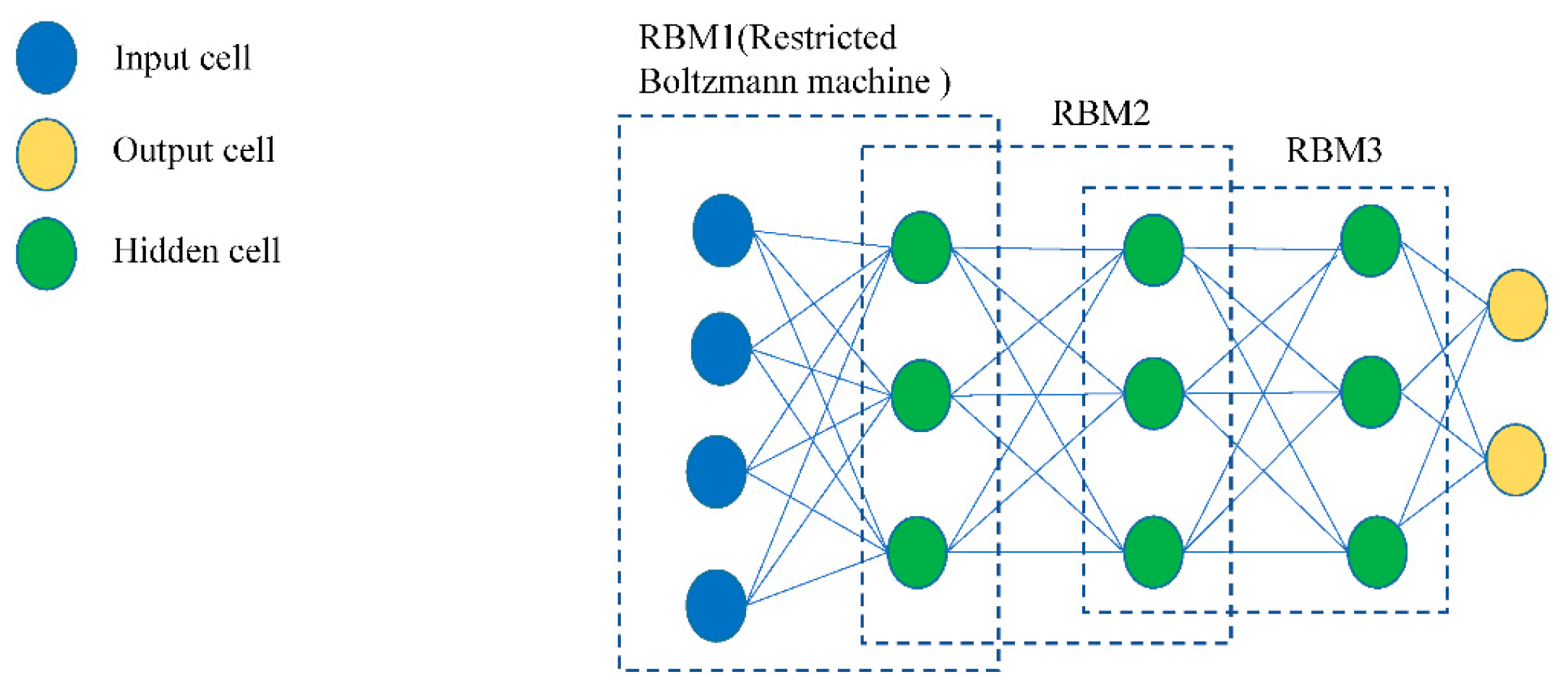

3.4. Emerging Methods

4. Artificial Neural Network Models for Water Quality Prediction

5. Results

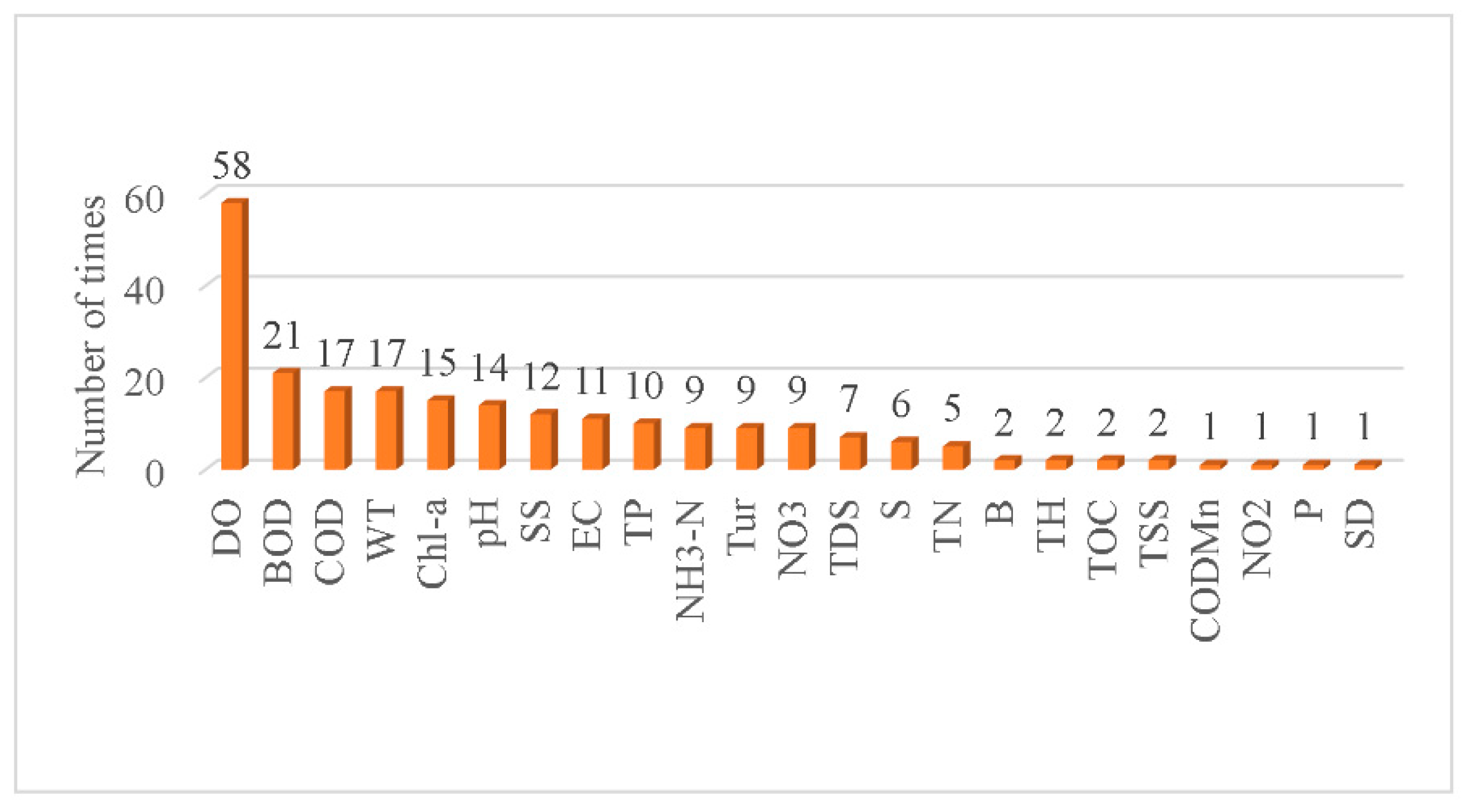

5.1. Data Collection

5.2. Output Strategy

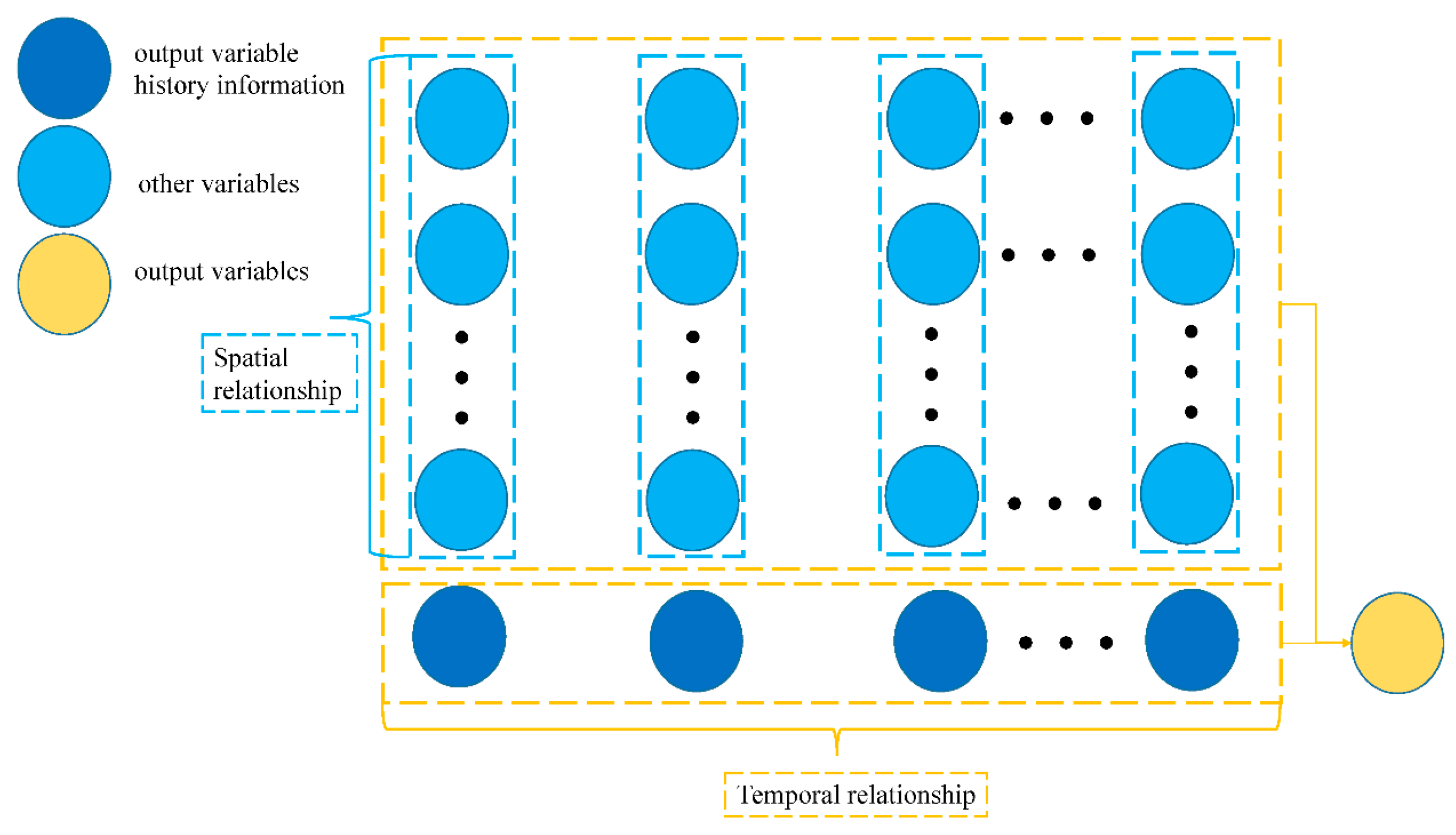

5.3. Input Selection

5.4. Data Dividing

5.5. Data Preprocessing

5.6. Model Structure Determination

5.7. Model Training

6. Discussion

6.1. Data Are the Foundation

6.2. Data Processing Is Key

6.3. Model Is the Core

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A

| Categories | Authors (Year) | Locations | Water Quality Variables | Meteorological Factors | Other Factors | Output Strategy | Dataset | Time Step | Data Dividing | Methods | Prediction Lengths |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Feedforward | [75] | WWTP (Turkey) | BOD; SS, TN, TP | NA | Q | Category 2 | 364 samples (1 year) | daily | Train: 67%, test: 33% | ANN, MLR | NA |
| Feedforward | [76] | Mamasin dam reservoir (Turkey) | DO, EC; SS, TN, WT | RF | AODD | Category 2 | No details | No details | No details | ANN(MLP) | NA |
| Feedforward | [40] | Singapore coastal waters (Singapore) | S, DO, Chl-a;; WT | NA | NA | Category 3 | 32 samples (5 months) | No details | No details | ANN(MLP), GRNN | 1 |
| Feedforward | [19] | Feitsui Reservoir (China) | Chl-a; | NA | Bands | Category 2 | No details | No details | Train: 75%, test: 25% | ANN(MLP) | NA |
| Feedforward | [90] | Pyeongchang River (Korea) | DO, TOC; WT | NA | Q | Category 3 | No details (3 months) | 5 minutes | No details | ANN, MNN, ANFIS | 12, 24 |
| Feedforward | [170] | Feitsui Reservoir (China) | Chl-a; | NA | Bands | Category 2 | No details (7 years) | No details | Train: 57%, validate: 29%, test: 14% | ANN(MLP) | NA |
| Feedforward | [91] | Melen River (Turkey) | BOD; COD, WT, DO, Chl-a, NH3-N, NO3, NO2 | NA | F, Ns | Category 2 | No details (over 6 years) | monthly | Train: 60%, validate: 20%, test: 20% | ANN(MLP) | NA |
| Feedforward | [92] | Moshui River (China) | COD, NH3-N;; | NA | mineral oil;; | Category 0 | No details (5 years) | No details | Train: 80%, test: 20% | BPNN | NA |
| Feedforward | [93] | Doce River (Brazil) | WT, pH, EC, TN | NA | other ions | Category 2 | 232 samples (3 years) | No details | Train: 50%, validate: 25%, test: 25% | ANN | NA |
| Feedforward | [94] | NA (China) | pH, DO;; WT, S, NH3-N, NO2 | NA | NA | Category 3 | 500 samples | No details | Train: 80%, test: 20% | BPNN | NA |
| Feedforward | [95] | Gomti River (India) | DO, BOD; pH, TA, TH, TS, COD, NH3-N, NO3, P | RF | NA | Category 2 | 500 samples (10 years) | monthly | Train: 60%, validate: 20%, test: 20% | ANN | NA |
| Feedforward | [96] | Pyeongchang River (Korea) | TOC;; | Precip | Q;; | Category 3 | No details (7 years) | No details | No details | ANN | NA |
| Feedforward | [97] | Groundwater (China) | NO2, COD;; | NA | other 7 variables | Category 3 | 97 samples | No details | Train: 56%, test: 44% | ANN | NA |
| Feedforward | [98] | Omerli Lake (Turkey) | DO; BOD, NH3-N, NO3, NO2, P | NA | NA | Category 2 | No details (17 years) | No details | No details | ANN, MLR, NLR | NA |
| Feedforward | [99] | Changle River (China) | DO, TN, TP;; WT | RF | F, FTT | Category 3 | No details (18 months) | monthly | No details | BPNN | NA |
| Feedforward | [105] | Sangamon River (USA) | NO3;; | AT, Precip | Q | Category 3 | No details (6 years) | weekly | Train: 50%, test: 50% | ANN | 1 |
| Feedforward | [106] | Surface water (Turkey) | Chl-a; | NA | other 12 variables | Category 2 | 110 samples | No details | Train: 67%, test: 33% | ANN(MLP) | NA |
| Feedforward | [107] | Gruža reservoir (Serbia) | DO; pH, WT, CL, TP, NO2, NH3-N, EC | NA | Fe, Mn | Category 2 | 180 samples (3 years) | No details | Train: 84%, test: 16% | ANN | NA |
| Feedforward | [108] | The tank (China) | DO;; pH, S, WT | AT | NA | Category 3 | No details (22 months) | 1 minute | Train: 57%, validate: 29%, test: 14% | ANN | 30 |
| Feedforward | [109] | Groundwater (India) | S; EC | NA | WL, T, Pumping, Rainp | Category 2 | No details (7 years) | No details | Train: 29%, test: 71% | ANN | NA |
| Feedforward | [110] | WWTP (China) | BOD; COD, SS, pH, NH3-N | NA | Oil | Category 2 | No details | No details | Train: 50%, test: 50% | RBFNN | 5 |
| Feedforward | [10] | Groundwater (Iran) | NO3; pH, EC, TDS, TH | NA | Mg, Cl, Na, K, HCO3, SO4, Ca, ICs | Category 2 | 818 samples (nearly 17 days) | 30 minutes | Train: 70%, test: 30% | ANN, Linear regression (LR) | NA |
| Feedforward | [77] | Wells (Palestine) | NO3; | NA | Q, other five variables | Category 2 | 975 samples (16 years) | No details | No details | MLP, RBF, GRNN | NA |
| Feedforward | [112] | Upstream and downstream (USA) | DO; pH, WT, EC | NA | Q | Category 2 | 2063, 4765 samples (18 years) | daily | Train: 50%, validate: 25%, test: 25% | RBFNN, ANN(MLP), MLR | NA |
| Feedforward | [50] | WWTP (Korea) | DO;; NH3-N | NA | NA | Category 3 | 1900 samples | No details | Train: 45%, validate: 5%, test: 50% | MNN | NA |
| Feedforward | [79] | Eastern Black Sea Basin (Turkey) | SS; Tur | NA | NA | Category 1 | 144 samples (1 year) | fortnightly | Train: 75%, validate: 8%, test: 17% | ANN(MLP) | NA |
| Feedforward | [113] | Kinta River (Malaysia) | DO, BOD, NH3-N, pH, COD, Tur;; | NA | NA | Category 2 | 255 samples (7 months) | No details | Train: 80%, validate: 10%, test: 10% | ANN(MLP) | NA |
| Feedforward | [78] | Power station (New Zealand) | WT; | AT, AP, WD, WS | other 8 variables | Category 2 | 45,594 samples (2 years) | 10 minutes | Train: 70%, test: 30% | ANN(MLP) | 12 |
| Feedforward | [114] | Yuan-Yang Lake (China) | WT; | SR, AP, RH, AT, WS, WD | ST | Category 2 | No details (2 months) | 10 minutes | Train: 70%, validate & test: 30% | ANN(MLP) | 1 |
| Feedforward | [22] | Experimental system (UK) | BOD, NH3-N, NO3, P; DO, WT, pH, EC, TSS, Tur | NA | RP | Category 2 | 195 samples (4 years) | No details | Train: 62%, test: 38% | ANN | NA |
| Feedforward | [11] | Lake Fuxian (China) | DO, TP, SD, Chl-a;; TN, WT, pH | NA | Month; | Category 2 and Category 3 | No details | No details | No details | ANN | NA |
| Feedforward | [115] | Doiraj River (Iran) | SS; | RF | Q | Category 1 and Category 2 | more than 3000 samples (11 years) | daily | No details | ANN, Support vector regression (SVR) | 1 |
| Feedforward | [116] | Lake Abant (Turkey) | DO, Chl-a; WT, EC | NA | MDHM | Category 2 | 6674 samples (86 days) | 15 minutes | Train: 60%, validate: 15%, test: 25% | ANN, Multiple nonlinear regression (MNLR) | NA |
| Feedforward | [37] | Johor River, Sayong River (Malaysia) | TDS, EC, Tur; | NA | NA | Category 1 | No details (5 years) | No details | Test set approximately 10–40% of the size of the training set | ANN(MLP), RBFNN, LR | NA |
| Feedforward | [158] | Mine water (India) | BOD, COD; WT, pH, DO, TSS | NA | other | Category 2 | 73 samples | No details | Train: 79%, test: 21% | ANN | NA |
| Feedforward | [160] | Heihe River (China) | DO; pH, NO3, NH3-N, EC, TA, TH | NA | Cl, Ca | Category 2 | 164 samples (over 6 years) | monthly | Train: 60%, validate: 20%, test: 20% | ANN(MLP) | NA |
| Feedforward | [117] | Danube River (Serbia) | DO; WT, pH, NO3, EC | NA | Na, CL, SO4, HCO3, other 11 variables | Category 2 | 1512 samples (9 years) | No details | Train: 70%, validate: 20%, test: 10% | GRNN | NA |
| Feedforward | [80] | Stream Harsit (Turkey) | SS; Tur | NA | TCC, TIC | Category 1 and Category 2 | 132 samples (11 months) | No details | No details | ANN(MLP) | NA |
| Feedforward | [118] | Feitsui Reservoir (China) | DO; WT, pH, EC, Tur, SS, TH, TA, NH3-N | NA | NA | Category 2 | 400 samples (20 years) | No details | No details | BPNN, ANFIS, MLR | NA |
| Feedforward | [163] | Stream (USA) | WT; | AT | Form attributes, forested land cover | Category 2 | 982 samples (6 months) | daily | Train: 90%, validate & test: 10% | ANN(MLP) | NA |
| Feedforward | [49] | The Bahr Hadus drain (Egypt) | DO, TDS;; | NA | NA | Category 0 | No details | monthly | Train: 80%, test: 20% | CCNN, BPNN | NA |
| Feedforward | [161] | Karoon River (Iran) | DO, COD, BOD; EC, pH, Tur, NO3, NO2, P | NA | Ca, Mg, Na | Category 2 | 200 samples (17 years) | monthly | Train: 80%, test: 20% | ANN(MLP), RBFNN, ANFIS | NA |
| Feedforward | [121] | Manawatu River (New Zealand) | NO3; | NA | EMS (Energy, Mean, Skewness) | Category 1 | 144 samples | weekly | Train: 70%, test: 30% | RBFNN | NA |
| Feedforward | [119] | WWTP (China) | BOD; DO, pH, SS | NA | F, TNs | Category 2 | 360 samples | daily | Train: 83%, test: 17% | HELM, Bayesian approach, ELM | NA |
| Feedforward | [35] | Nalón River (Spain) | Tur; NH3-N, EC, DO, pH, WT | NA | NA | Category 2 | No details (1 year) | 15 minutes | Train: 90%, test: 10% | ANN(MLP) | NA |
| Feedforward | [128] | Groundwater (Turkey) | pH, TDS, TH | NA | SAR, SO4; CL | Category 2 | 124 samples (1 year) | monthly | Train: 84.1%, test: 15.9% | ANN | NA |
| Feedforward | [89] | Johor River (Malaysia) | DO; WT, pH, NO3, NH3-N | NA | NA | Category 2 | No details (10 years) | monthly | Train: 60%, validate: 25%, test: 15% | ANN(MLP), ANFIS | NA |
| Feedforward | [129] | The Taipei Water Source Domain (China) | Tur; | RF | NA | Category 2 | No details (1 year) | No details | No details | BPNN | NA |
| Feedforward | [130] | Mashhad plain (Iran) | EC; | NA | CL; Lon, Lat | Category 2 and Category 3 | 122 samples | No details | Train: 65%, validate: 20%, test: 15% | ANN(MLP), ANFIS, geostatistical models | NA |
| Feedforward | [122] | Tai Po River (China) | DO; pH, EC, WT, NH3-N, TP, NO2, NO3 | NA | CL | Category 2 | 252 samples (21 years) | No details | Train: 85%, test: 15% | ANN, ANFIS, MLR | NA |
| Feedforward | [137] | Ireland Rivers (Ireland) | DO, BOD, Alk, TH;; WT, pH, EC | NA | DOP (dissolved oxygen percentage), CL;; | Category 2 | 3001 samples (No details) | No details | No details | ANN | NA |
| Feedforward | [42] | Two statistical databases (European countries) | BOD; DO | NA | other 20 variables | Category 2 | 159 samples (9 years) | No details | Train: 88%, test: 12% | GRNN, MLR | NA |
| Feedforward | [81] | Maroon River (Iran) | WT, Tur, pH, EC, TDS, TH; | NA | HCO3, SO4, CL, Na, K, Mg, Ca | Category 2 | No details (20 years) | monthly | Train: 60%, validate: 15%, test: 35% | ANN(MLP), RBFNN | NA |
| Feedforward | [36] | River Zayanderud (Iran) | TSS; pH, TH | NA | Na, Mg, CO3, HCO3, CL, Ca | Category 2 | 1320 samples (10 years) | monthly | No details | RBFNN, TDNN | NA |
| Feedforward | [9] | Ardabil plain (Iran) | EC, TDS; | RF | RO, WL | Category 2 | No details (17 years) | 6 months | Train: 71%, test: 29% | ANN, MLR | 1 |
| Feedforward | [25] | Danube River (Serbia) | BOD; WT, DO, pH, NH3-N, COD, EC, NO3, TH, TP | NA | other 8 variables | Category 2 | more than 32,000 samples (years) | No details | Train: 72%, validate: 18%, test: 10% | GRNN | NA |
| Feedforward | [82] | Hydrometric stations (USA) | SS;; | NA | Q | Category 0 and Category 3 | No details (8 years) | daily | Train and test: 80%, validate: 20% | ANN(MLP), SVR, MLR | 1 |
| Feedforward | [138] | Surma River (Bangladesh) | BOD, COD;; | NA | NA | Category 0 and Category 3 | No details (3 years) | No details | Train: 70%, validate: 15%, test: 15% | RBFNN, MLP | NA |
| Feedforward | [85] | Groundwater (Palestine) | S; EC, TDS, NO3 | NA | Mg, Ca, Na | Category 2 | No details (11 years) | No details | Train: more than 50%, test: less than 50% | ANN(MLP), SVM | NA |
| Feedforward | [24] | River Danube (Hungary) | DO; pH, WT, EC | NA | RO | Category 2 | More than 151 samples (6 years) | monthly | No details | GRNN, ANN(MLP), RBFNN, MLR | NA |
| Feedforward | [60] | Langat River and Klang River (Malaysia) | DO, BOD, COD, SS, pH, NH3-N; | NA | NA | Category 2 | No details (10 years) | monthly | Train: 80%, validate: 20% | RBFNN | NA |
| Feedforward | [47] | Eight United States Geological Survey stations (USA) | DO; WT, EC, Tur, pH | NA | YMDH | Category 2 | 35,064 samples (4 years) | hourly | Train: 70%, test: 30% | ELM, ANN(MLP) | 1, 12, 24, 48, 72, 168 |
| Feedforward | [162] | Rivers (China) | DO; WT, pH, BOD, NH3-N, TN, TP | NA | other variables | Category 2 | 969 samples | No details | Train and validate: 80%, test: 20% | BPNN, SVM, MLR | NA |
| Feedforward | [86] | Syrenie Stawy Ponds (Poland) | DO, BOD, COD, TN, TP, TA | NA | CL; other ions | Category 2 | No details (19 months) | monthly | Train: 60%, validate: 20%, test: 20% | ANN(MLP) | NA |
| Feedforward | [83] | Delaware River (USA) | DO; pH, EC, WT | NA | Q | Category 1 and Category 2 | 2063 samples (6 years) | daily | Train: 75%, test: 25% | ANN(MLP), RBFNN, SVM | NA |
| Feedforward | [84] | Zayandeh-rood River (Iran) | NO3; EC, pH, TH | NA | Na, K, Ca, Mg, SO4, CL, bicarbonate | Category 2 | No details | No details | Train: 50%, validate: 30%, test: 20% | ANN(MLP) | NA |
| Feedforward | [59] | Saint John River (Canada) | TSS, COD, BOD, DO, Tur; | NA | NA | Category 2 | 39 samples (3 days) | No details | Train: 60%, validate: 20%, test: 20% | BPNN, SVM | NA |
| Feedforward | [164] | Karkheh River (Iran) | BOD; TDS, EC | NA | CL, Na, SO4, Mg, SAR, Ca | Category 2 | 13,800 samples (5 years) | No details | No details | ANN | NA |
| Feedforward | [159] | Xuxi River (China) | COD; WT, DO, TN, TP, NH3-N, SD, SS | NA | NA | Category 2 | 110 samples (8 hours) | No details | No details | MLP | NA |
| Feedforward | [102] | Danube River (Serbia) | DO; pH, WT, EC, BOD, COD, SS, P, NO3, TA, TH | NA | five metal ions | Category 2 | No details (6 years; 7 years) | monthly or fortnightly | Train: 72%, validate: 18%, test: 10% | BPNN | NA |
| Feedforward | [131] | Sufi Chai River (Iran) | TDS; | NA | Q, other 4 variables | Category 2 | 144 samples (12 years) | monthly | Train: 66%, validate: 17%, test: 17% | ANN(MLP) | NA |
| Feedforward | [127] | River Tisza (Hungary) | DO; WT, EC, pH | NA | RO | Category 2 | More than 1300 samples (6 years) | No details | Train: 67%, test: 33% | RBFNN, GRNN, MLR | 12 |
| Feedforward | [171] | Karoon River (Iran) | TH; EC, TDS, pH | NA | SAR; HCO3, CL, SO4, Ca, Mg, Na, K, TAC | Category 2 | No details (49 years) | No details | No details | ANN(MLP), RBFNN | NA |
| Feedforward | [32] | Yamuna River (India) | DO;; BOD, COD, pH, WT, NH3-N | NA | Q | Category 3 | No details (4 years) | monthly | Train: 75%, test: 25% | BPNN, SVM, ANFIS, ARIMA | NA |
| Feedforward | [88] | Lakes (USA) | Chl-a; TP, TN, Tur | NA | SD | Category 2 | 1087 samples (6 years) | No details | Train: 75%, test: 25% | MLP, ANFIS | NA |
| Feedforward | [139] | Karoun River (Iran) | BOD, COD; EC, Tur, pH | NA | six metal ions | Category 2 | 200 samples (16 years) | No details | No details | ANN, ANFIS, Least Squares SVM (LSSVM) | NA |
| Feedforward | [133] | Lakes (USA) | TN, TP; pH, EC, Tur | NA | NA | Category 2 | 1217 samples | No details | Train: 55%, validate: 22%, test: 23% | ANN, LR | NA |
| Feedforward | [48] | Three rivers (USA) | WT; | AT | Q, DOY | Category 2 | No details (8 years) | No details | No details | ELM, ANN(MLP), MLR | NA |
| Feedforward | [63] | St. Johns River (USA) | DO; NH3-N, TDS, pH, WT | NA | CL | Category 2 | 232 samples (12 years) | half a month | Train: 75%, test: 25% | CCNN, DWT, VMD-MLP, MLP | NA |
| Recurrent | [111] | Talkheh Rud River (Iran) | TDS; | NA | Q | Category 1 | No details (13 years) | No details | Train: 69%, validate & test: 31% | Elman, ANN(MLP) | 1 |
| Recurrent | [3] | Hyriopsis cumingii ponds (China) | DO;; pH, WT | SR, WS, AT | NA | Category 3 | 816 samples (34 days) | No details | Train and validate: 80%, test: 20% | Elman | NA |
| Recurrent | [41] | Danube River (Serbia) | DO; WT, pH, EC | NA | Q | Category 2 | 61 samples | monthly or semi-monthly | Train: 85%, test: 15% | Elman, GRNN, BPNN, MLR | NA |
| Recurrent | [167] | Chou-Shui River (China) | pH, Alk | NA | As;; Ca | Category 3 | No details (8 years) | No details | No details | Systematical dynamic-neural modeling (SDM), BPNN, NARX | NA |
| Recurrent | [55] | Yenicaga Lake (Turkey) | DO; WT, EC, pH | NA | WL, DOY, hour | Category 2 | 13,744 samples (573 days) | 15 minutes | Train: 60%, validate: 15%, test: 25% | TLRN, RNN, TDNN | NA |
| Recurrent | [12] | Dahan River (China) | TP;; EC, SS, pH, DO, BOD, COD, WT, NH3-N | NA | Coli | Category 3 | 280 samples (11 years) | monthly | Train: 75%, test: 25% | NARX, BPNN, MLR | 1 |
| Recurrent | [6] | Taihu Lake (China) | DO, TP;; | NA | NA | Category 0 | 657 samples (7 years) | monthly | Train: 90%, test: 10% | LSTM, BPNN, OS-ELM | NA |
| Recurrent | [38] | WWTP (China) | BOD, TP;; COD, TSS, pH, DO, WT | NA | ORP | Category 2 and Category 3 | 5000 samples | No details | Train: 45%, validate: 15%, test: 40% | RESN | NA |
| Recurrent | [66] | Mariculture base (China) | WT, pH; EC, S, Chl-a, Tur, DO | NA | NA | Category 2 | 710 samples (21 days) | 5 minutes | Train: 86%, test: 14% | LSTM, RNN | >32 |
| Recurrent | [67] | Marine aquaculture base (China) | pH, WT;; | NA | NA | Category 0 | 710 samples | No details | Train: 86%, test: 14% | SRU | NA |
| Recurrent | [53] | Geum River basin (Korea) | BOD, COD, SS; | AT, WS | WL, Q | Category 2 | No details (10 years) | daily | Train: 70%, test: 30% | RNN, LSTM | 1 |
| Recurrent | [165] | Lakes (USA) | WT;; | NA | NA | Category 0 | 1520 samples | No details | Train: 65%, test: 35% | LSTM | NA |
| Recurrent | [153] | Reservoir (China) | Chl-a;; WT, pH, EC, DO, Tur | NA | ORP | Category 0 and Category 2 | 1440 samples (5 days) | 5 minutes | No details | TL-FNN, RNN, LSTM | NA |
| Recurrent | [134] | Two gauged stations (USA) | SS;; | NA | Q | Category 1 | 10,060 samples (30 years) | daily | Train: 70–90%, test: 30–10% | WANN | NA |
| Recurrent | [135] | Agricultural catchment (France) | NO3, SS; | RF | Q | Category 1 and Category 2 | 26,355 samples (1 year) | daily | Train: 66.67%, test: 33.33% | SOM-MLP, MLP | NA |
| Recurrent | [140] | Four streams (USA) | WT; | SR, AT | NA | Category 2 | No details (4 years) | 10 minutes | Train: 50%, validate: 25%, test: 25% | GA-ANN, BPNN, RBFNN | NA |
| Hybrid | [141] | Chaohu Lake (China) | TP, TN, Chl-a; | NA | Bands | Category 2 | 18,368 (TN), 1050 (TP) samples (more than 3 years) | No details | Train: 86%, test: 14% | GA-BP, BPNN, RBFNN | NA |
| Hybrid | [142] | Two stations (USA) | SS;; | NA | Q | Category 1 and Category 3 | 730 samples (2 years) | daily | Train: 50%, test: 50% | ANN-differential evolution | NA |
| Hybrid | [71] | Büyük Menderes River (Turkey) | WT, DO, B;; | NA | NA | Category 0 | 108 samples (9 years) | monthly | Train: 67%, test: 33% | ARIMA-ANN, ANN, ARIMA | NA |
| Hybrid | [143] | Karkheh reservoir (Iran) | water quality variables | NA | NA | Category 2 | No details (6 months) | No details | No details | PSO-ANN | NA |
| Hybrid | [1] | WWTP (China) | DO; COD, BOD, SS | NA | other two variables | Category 3 | No details | daily | No details | SOM-RBFNN, ANN(MLP) | NA |
| Hybrid | [144] | Bangkok canals (Thailand) | DO;; WT, pH, BOD, COD, SS, NH3-N, TP, NO2, NO3 | NA | total coliform, hydrogen sulfide | Category 3 | 13,846 samples (5 years) | monthly | Train: 70%, test: 30% | FCM-MLP, MLP | 1 |
| Hybrid | [56] | Lake Baiyangdian (China) | Chl-a; WT, pH, DO, SD, TP, TN, NH3-N, BOD, COD | Precip, Evap | WL, LV, Sth | Category 2 | No details (10 years) | monthly | No details | WANN, ANN, ARIMA | NA |
| Hybrid | [64] | Songhua River (China) | DO, NH3-N;; | NA | NA | Category 0 | No details (7 years) | monthly | Train: 71%, test: 29% | BWNN, ANN, WANN, ARIMA | 1 |
| Hybrid | [136] | Gaza coastal aquifer (Palestine) | NO3; EC, TDS, NO3 | NA | CL, SO4, Ca, Mg, Na | Category 2 | No details (10 years) | No details | No details | K-means-ANN | NA |
| Hybrid | [43] | WWTP (Turkey) | COD; SS, pH, WT | NA | Q | Category 2 | 265 samples (3 years) | daily | Train: 50%, validate: 25%, test: 25% | k-means-MLP, ARIMA-RBF, ANN(MLP), MLR, RBFNN, GRNN, ANFIS | NA |
| Hybrid | [70] | Yangtze River (China) | DO, NH3-N;; | NA | NA | Category 0 | 480 samples (9 years) | weekly | Train: 67%, validate & test: 33% | ARIMA-RBFNN | 1 |
| Hybrid | [120] | Taihu Lake (China) | DO, EC, pH, NH3-N, TN, COD, TP, BOD, COD; | NA | VP, petroleum, other 11 variables | Category 2 | 2680 samples | No details | Train: 75%, test: 25% | PCA-GA-BPNN | NA |
| Hybrid | [62] | Gauging station (Iran) | DO, WT, S;; Tur, Chl-a | NA | NA | Category 0 and Category 2 and Category 3 | 650, 540 samples | daily, hourly | Train: 70%, validate: 15%, test: 15% | WANN, ANN | 1, 2, 3 |
| Hybrid | [172] | Two gauging stations (USA) | SS;; | NA | Q | Category 0 and Category 3 | 1974 samples (8 years) | daily | Train: 75%, test: 25% | WANN | NA |
| Hybrid | [173] | River Yamuna (India) | COD;; | NA | NA | Category 0 | 120 samples (10 years) | monthly | Train: 92.5%, test: 7.5% | ANN, ANFIS, WANFIS | 9 |
| Hybrid | [100] | Two catchments (Poland) | WT; | AT | Q, declination of the Sun | Category 2 | No details (10 years) | daily | No details | MLP, ANFIS, WNN, Product-Unit ANNs (PUNN), ensemble aggregation approach | 1, 3, 5 |
| Hybrid | [7] | South San Francisco Bay (USA) | Chl-a;; | NA | NA | Category 0 | No details (20 years) | monthly | Train: 60%, validate: 20%, test: 20% | WANN, MLR, GA-SVR | 1 |
| Hybrid | [72] | Asi River (Turkey) | EC;; | NA | Q | Category 0 and Category 3 | 274 samples (23 years) | No details | Train: 75%, test: 25% | WANN, ANN | NA |
| Hybrid | [146] | Klamath River (USA) | DO;; pH, WT, EC, SD | NA | NA | Category 0 and Category 2 | No details | monthly | Train: 80%, validate: 10%, test: 10% | WANN, ANN, MLR | NA |
| Hybrid | [147] | Prawn culture ponds (China) | WT; | NA | NA | Category 0 | 1152 samples (8 days) | 10 minutes | Train: 87.5%, test: 12.5% | EMD-BPNN, BPNN | 1 |
| Hybrid | [44] | WWTP (China) | BOD; COD, SS, DO, pH | NA | NA | Category 2 | 598 samples (19 months) | daily | No details | Chaos Theory-PCA-ANN | NA |
| Hybrid | [174] | Charlotte Harbor marine waters | TN; | NA | NA | Category 0 | No details (13 years) | monthly | Train: 70%, validate: 15%, test: 15% | WANN, wavelet-gene expression programming (WGEP), TDNN, GEP, MLR | 1 |
| Hybrid | [73] | Groundwater (Iran) | EC, Tur, pH, NO2, NO3 | NA | Cu | Category 2 | No details (8 years) | No details | Train: 80%, test: 20% | PCA-ANN | NA |
| Hybrid | [17] | Downstream (China) | WT, DO, pH, EC, TN, TP, Tur, Chl-a; | NA | NA | Category 0 | No details (13 months) | daily | Train: 80%, validate: 10%, test: 10% | Ensemble-ANN | 1 |
| Hybrid | [104] | Karaj River (Iran) | NO3; | NA | CL; Q | Category 0 and Category 1 and Category 3 | No details | monthly | Train: 80%, validate: 10%, test: 10% | WANN, ANN, MLR | NA |
| Hybrid | [148] | Crab ponds (China) | DO;; WT | SR, WS, AT, AH | NA | Category 3 | 700 samples (22 days) | 20 minutes | Train: 71%, test: 29% | RBFNN-IPSO-LSSVM, BPNN | 3 |
| Hybrid | [149] | Guanting reservoirs (China) | DO, COD, NH3-N;; | NA | NA | Category 0 | No details (18 weeks) | weekly | No details | Kalman-BPNN | 2 |
| Hybrid | [101] | Toutle River (USA) | SS;; | NA | Q | Category 0 and Category 3 | 2000 samples (8 years) | daily | No details | Least-squares ensemble-WANN | NA |
| Hybrid | [69] | WWTP (China) | DO; pH | NA | NA | Category 2 | 50 samples | No details | Train: 70%, test: 30% | FNN-WNN | NA |
| Hybrid | [52] | Clackamas River (USA) | DO;; WT | NA | Q | Category 3 | 1623 samples (6 years) | daily | Train: 78%, test: 22% | WANN, WMLR, ANN(MLP), MLR | 1, 31 |
| Hybrid | [123] | Representative lakes (China) | Chl-a; WT, pH;; NH3-N, TN, TP, DO, BOD | NA | other 17 variables | Category 3 | No details (3 years) | No details | Train: 80%, test: 20% | GA-BP | NA |
| Hybrid | [16] | Miyun reservoir (China) | DO, COD, NH3-N; | NA | NA | Category 0 | 5000 samples (2 years) | weekly | Train: 98%, test: 2% | PSO-WNN, WNN, BPNN, SVM | NA |
| Hybrid | [126] | Aji-Chay River (Iran) | EC;; | NA | NA | Category 0 | 315 samples (26 years) | monthly | Train: 90%, test: 10% | WA-ELM, ANFIS | 1, 2, 3 |
| Hybrid | [4] | Yangtze River (China) | DO, CODMn, BOD;; | NA | NA | Category 3 | 65 samples (2 months) | daily | Train: 50%, validate: 16%, test: 34% | IABC-BPNN, BPNN | NA |
| Hybrid | [33] | WWTP (China) | COD; COD, SS, pH, NH3-N | NA | NA | Category 2 | 250 samples | No details | No details | WANN, ANN(MLP) | NA |
| Hybrid | [175] | The Stream Veszprémi-Séd (Hungary) | pH, EC, DO, Tur;; | NA | NA | Category 2 | No details (7 years) | yearly | No details | DE-ANN | NA |
| Hybrid | [54] | Shrimp pond (China) | DO; WT, NH3-N, pH | AT, AH, AP, WS | NA | Category 2 | 2880 samples (20 days) | 10 minutes | Train: 75%, test: 25% | SAE-LSTM, SAE-BPNN, LSTM, BPNN | 18, 36, 72 |
| Hybrid | [124] | Four basins (Iran) | TDS; EC | NA | Na, CL | Category 2 | No details (20 years) | No details | Train: 80%, test: 20% | WANN, GEP, WANFIS | NA |
| Hybrid | [125] | Blue River (USA) | pH, DO, Tur; WT | NA | Q | Category 0 and Category 3 | No details (4 years) | daily | Train: 80%, test: 20% | WANN, WGEP | 1 |
| Hybrid | [157] | Chattahoochee River (USA) | pH;; | NA | Q | Category 3 | 730 samples (2 years) | daily | Train: 75%, test: 20% | WANN, ANN, WMLR, MLR | 1, 2, 3 |
| Hybrid | [176] | Morava River Basin (Serbia) | WT, EC; SS, DO | NA | other ions | Category 2 | No details (10 years) | 15 days | No details | PCA-ANN | NA |
| Hybrid | [151] | Tai Lake, Victoria Bay (China) | DO;; WT, pH, NO2, TP | Precip | NA | Category 3 | No details (7 years) | No details | Train: 80%, test: 20% | IGRA-LSTM, BPNN, ARIMA | NA |
| Hybrid | [46] | WWTP (Saudi Arabia) | C, DO, SS, pH | NA | CL;; | Category 3 | 774 samples | No details | No details | PCA-ELM | NA |
| Hybrid | [5] | Prespa Lake (Greece) | DO, Chl-a;; | NA | NA | Category 0 | 363 samples (11 months) | daily | Train: 70%, validate: 15%, test: 15% | CEEMDAN-VMD-ELM | NA |
| Hybrid | [87] | The Warta River (Poland) | WT;; | AT | NA | Category 3 | No details (22 to 27 years) | daily | Train: 4/9, validate: 2/9, test: 1/3 | WANN(MLP), MLP | 1 |
| Hybrid | [152] | Ashi River (China) | DO, NH3-N, Tur;; | NA | NA | Category 0 | 846 samples (more than 4 months) | 4 hours | Train: 70%, test: 30% | IGA-BPNN | 1 |
| Hybrid | [15] | Qiantang River (China) | pH, TP, DO;; | NA | NA | Category 0 | 1448 samples | No details | Train: 70%, test: 30% | DS-RNN, RNN, BPNN, SVR | NA |
| Hybrid | [132] | The Johor River (Malaysia) | NH3-N, SS, pH; Tur, WT | NA | CODMn, Mg, Na | Category 2 | No details (1 year) | No details | No details | WANFIS, MLP, RBFNN, ANFIS | NA |
| Hybrid | [103] | Hilo Bay (the Pacific Ocean) | Chl-a, S;; | NA | NA | Category 0 | No details (5 years) | daily | No details | Bates–Granger (BG)-least square based ensemble (LSE)-WANN | 1, 3, 5 |
| Hybrid | [154] | WWTP (China) | COD, TP, pH, TN; DO, NH3-N, BOD, TH | NA | CL, oil-related quality indicators | Category 2 | 23,268 samples (4 years) | hourly | Train: 80%, test: 20% | PSO-LSTM | 1 |
| Hybrid | [68] | Beihai Lake (China) | pH, Chl-a, DO, BOD, EC; | NA | HA;; | Category 3 | No details (5 days) | 30 minutes | Train: 70%, test: 30% | PSO-GA-BPNN | 12 |
| Hybrid | [26] | River (China) | COD;; | NA | NA | Category 0 | 460 samples (14 months) | 12 hours | Train: 95%, test: 5% | LSTM-RNN | 1 |
| Hybrid | [45] | Zhejiang Institute of Freshwater Fisheries (China) | DO; WT | AT, AH, WS, WD, SR, AP | SM, ST | Category 4 | 5006 samples (1 year) | 10 minutes | Train: 80%, test: 20% | attention-RNN | 6, 12, 48, 144, 288 |
| Hybrid | [39] | Taihu Lake (China) | pH; DO, COD, NH3-N | NA | NA | Category 2 | 28 samples (6 months) | weekly | Train: 75%, test: 25% | grey theory-GRNN, BPNN, RBFNN | 1 |
| Emerging | [58] | Wastewater factory (China) | TP; WT, TSS, pH, NH3-N, NO3, DO | NA | other 3 variables | Category 2 | 1000 samples (4 months) | No details | Train: 80%, test: 20% | SODBN | NA |
| Emerging | [57] | Recirculating Aquaculture Systems (China) | DO;; EC, pH, WT | NA | NA | Category 3 | 4500 samples (13 months) | 10 minutes | Train: 67%, validate: 11%, test: 22% | CNN, BPNN | 18 |
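
The Time Step, Data Dividing and Prediction Lengths columns together describe how each study turned a monitoring record into model inputs, targets and evaluation sets. As a hedged illustration only, the Python sketch below shows one common way to do this for a single series; it is not the procedure of any reviewed paper. It assumes (i) inputs are the `n_lags` most recent observations, (ii) Prediction Lengths count time steps ahead, so under this reading a length of 168 at an hourly step is a one-week lead time, and (iii) the split is chronological with no shuffling. All function and parameter names are hypothetical.

```python
"""Sketch: lagged inputs, h-step-ahead targets and a chronological split.

Assumptions (hypothetical, not taken from any reviewed paper): inputs are the
n_lags most recent values, Prediction Lengths count time steps ahead, and the
Data Dividing shares are applied in time order without shuffling.
"""
import re
from datetime import timedelta
from typing import Dict, List, Sequence, Tuple

Pair = Tuple[List[List[float]], List[float]]  # (inputs, targets)


def parse_split(spec: str) -> Dict[str, float]:
    """Turn an entry such as 'Train: 60%, validate: 20%, test: 20%' into fractions.

    Only the 'name: NN%' form is handled; entries such as 'Train: 4/9' or
    'No details' are out of scope for this sketch.
    """
    parts = re.findall(r"(train|validate|test)\s*:\s*([\d.]+)\s*%", spec, flags=re.IGNORECASE)
    fractions = {name.lower(): float(pct) / 100.0 for name, pct in parts}
    total = sum(fractions.values())
    if "train" not in fractions or abs(total - 1.0) > 1e-6:
        raise ValueError(f"unusable split {spec!r} (shares sum to {total:.0%})")
    return fractions


def make_windows(series: Sequence[float], n_lags: int, horizon: int) -> Pair:
    """Build supervised pairs: each input holds the n_lags most recent values and
    the target is the value `horizon` time steps after the last input."""
    if n_lags < 1 or horizon < 1:
        raise ValueError("n_lags and horizon must be at least 1")
    X: List[List[float]] = []
    y: List[float] = []
    for t in range(n_lags - 1, len(series) - horizon):
        X.append(list(series[t - n_lags + 1 : t + 1]))
        y.append(series[t + horizon])
    return X, y


def chronological_split(
    X: List[List[float]], y: List[float], fractions: Dict[str, float]
) -> Tuple[Pair, Pair, Pair]:
    """Split in time order, so validation and test data follow the training data."""
    n = len(X)
    n_train = round(n * fractions["train"])
    n_val = round(n * fractions.get("validate", 0.0))
    cut = n_train + n_val
    return (X[:n_train], y[:n_train]), (X[n_train:cut], y[n_train:cut]), (X[cut:], y[cut:])


def lead_time(horizon_steps: int, time_step: timedelta) -> timedelta:
    """Convert a prediction length given in time steps into clock time."""
    return horizon_steps * time_step


if __name__ == "__main__":
    series = [float(v) for v in range(100)]  # placeholder values, not real measurements
    X, y = make_windows(series, n_lags=3, horizon=1)
    train, val, test = chronological_split(X, y, parse_split("Train: 60%, validate: 20%, test: 20%"))
    print(len(X), len(train[0]), len(val[0]), len(test[0]))  # 97 58 19 20
    print(lead_time(168, timedelta(hours=1)))  # 7 days, 0:00:00
```

Because `parse_split` raises an error when the shares do not add up to 100%, it also doubles as a quick consistency check before a split from the table is reused.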

References

- Han, H.G.; Qiao, J.F.; Chen, Q.L. Model predictive control of dissolved oxygen concentration based on a self-organizing RBF neural network. Control Eng. Pract. 2012, 20, 465–476. [Google Scholar] [CrossRef]

- Zheng, F.; Tao, R.; Maier, H.R.; See, L.; Savic, D.; Zhang, T.; Chen, Q.; Assumpção, T.H.; Yang, P.; Heidari, B.; et al. Crowdsourcing Methods for Data Collection in Geophysics: State of the Art, Issues, and Future Directions. Rev. Geophys. 2018, 56, 698–740. [Google Scholar] [CrossRef]

- Liu, S.; Yan, M.; Tai, H.; Xu, L.; Li, D. Prediction of dissolved oxygen content in aquaculture of hyriopsis cumingii using elman neural network. IFIP Adv. Inf. Commun. Technol. 2012, 370 AICT, 508–518. [Google Scholar] [CrossRef]

- Chen, S.; Fang, G.; Huang, X.; Zhang, Y. Water quality prediction model of a water diversion project based on the improved artificial bee colony-backpropagation neural network. Water 2018, 10, 806. [Google Scholar] [CrossRef]

- Fijani, E.; Barzegar, R.; Deo, R.; Tziritis, E.; Konstantinos, S. Design and implementation of a hybrid model based on two-layer decomposition method coupled with extreme learning machines to support real-time environmental monitoring of water quality parameters. Sci. Total Environ. 2019, 648, 839–853. [Google Scholar] [CrossRef] [PubMed]

- Wang, Y.; Zhou, J.; Chen, K.; Wang, Y.; Liu, L. Water quality prediction method based on LSTM neural network. In Proceedings of the 2017 12th International Conference on Intelligent Systems and Knowledge Engineering, ISKE 2017, Nanjing, China, 24–26 November 2017. [Google Scholar] [CrossRef]

- Rajaee, T.; Boroumand, A. Forecasting of chlorophyll-a concentrations in South San Francisco Bay using five different models. Appl. Ocean Res. 2015, 53, 208–217. [Google Scholar] [CrossRef]

- Araghinejad, S. Data-Driven Modeling: Using MATLAB® in Water Resources and Environmental Engineering; Springer: Berlin, Germany, 2014; ISBN 978-94-007-7505-3. [Google Scholar]

- Nourani, V.; Alami, M.T.; Vousoughi, F.D. Self-organizing map clustering technique for ANN-based spatiotemporal modeling of groundwater quality parameters. J. Hydroinformatics 2016, 18, 288–309. [Google Scholar] [CrossRef]

- Zare, A.H.; Bayat, V.M.; Daneshkare, A.P. Forecasting nitrate concentration in groundwater using artificial neural network and linear regression models. Int. Agrophysics 2011, 25, 187–192. [Google Scholar]

- Huo, S.; He, Z.; Su, J.; Xi, B.; Zhu, C. Using Artificial Neural Network Models for Eutrophication Prediction. Procedia Environ. Sci. 2013, 18, 310–316. [Google Scholar] [CrossRef]

- Chang, F.J.; Chen, P.A.; Chang, L.C.; Tsai, Y.H. Estimating spatio-temporal dynamics of stream total phosphate concentration by soft computing techniques. Sci. Total Environ. 2016, 562, 228–236.

- Zhang, G.; Patuwo, B.E.; Hu, M.Y. Forecasting with artificial neural networks: The state of the art. Int. J. Forecast. 1998, 14, 35–62.

- Anmala, J.; Meier, O.W.; Meier, A.J.; Grubbs, S. GIS and artificial neural network-based water quality model for a stream network in the Upper Green River Basin, Kentucky, USA. J. Environ. Eng. 2015, 141, 1–15.

- Li, L.; Jiang, P.; Xu, H.; Lin, G.; Guo, D.; Wu, H. Water quality prediction based on recurrent neural network and improved evidence theory: A case study of Qiantang River, China. Environ. Sci. Pollut. Res. 2019, 26, 19879–19896.

- Zhang, L.; Zou, Z.; Shan, W. Development of a method for comprehensive water quality forecasting and its application in Miyun reservoir of Beijing, China. J. Environ. Sci. 2017, 56, 240–246.

- Seo, I.W.; Yun, S.H.; Choi, S.Y. Forecasting Water Quality Parameters by ANN Model Using Pre-processing Technique at the Downstream of Cheongpyeong Dam. Procedia Eng. 2016, 154, 1110–1115.

- Heddam, S. Modelling hourly dissolved oxygen concentration (DO) using dynamic evolving neural-fuzzy inference system (DENFIS)-based approach: Case study of Klamath River at Miller Island Boat Ramp, OR, USA. Environ. Sci. Pollut. Res. 2014, 21, 9212–9227.

- Wang, T.S.; Tan, C.H.; Chen, L.; Tsai, Y.C. Applying artificial neural networks and remote sensing to estimate chlorophyll-a concentration in water body. In Proceedings of the 2008 2nd International Symposium on Intelligent Information Technology Application IITA, Shanghai, China, 20–22 December 2008; pp. 540–544.

- Maier, H.R.; Dandy, G.C. Neural networks for the prediction and forecasting of water resources variables: A review of modelling issues and applications. Environ. Model. Softw. 2000, 15, 101–124.

- Loke, E.; Warnaars, E.A.; Jacobsen, P.; Nelen, F.; Do Céu Almeida, M. Artificial neural networks as a tool in urban storm drainage. Water Sci. Technol. 1997, 36, 101–109.

- Tota-Maharaj, K.; Scholz, M. Artificial neural network simulation of combined permeable pavement and earth energy systems treating storm water. J. Environ. Eng. 2012, 138, 499–509.

- Nour, M.H.; Smith, D.W.; El-Din, M.G.; Prepas, E.E. The application of artificial neural networks to flow and phosphorus dynamics in small streams on the Boreal Plain, with emphasis on the role of wetlands. Ecol. Modell. 2006, 191, 19–32.

- Csábrági, A.; Molnár, S.; Tanos, P.; Kovács, J. Application of artificial neural networks to the forecasting of dissolved oxygen content in the Hungarian section of the river Danube. Ecol. Eng. 2017, 100, 63–72.

- Šiljić Tomić, A.N.; Antanasijević, D.Z.; Ristić, M.; Perić-Grujić, A.A.; Pocajt, V.V. Modeling the BOD of Danube River in Serbia using spatial, temporal, and input variables optimized artificial neural network models. Environ. Monit. Assess. 2016, 188.

- Ye, Q.; Yang, X.; Chen, C.; Wang, J. River Water Quality Parameters Prediction Method Based on LSTM-RNN Model. In Proceedings of the 2019 Chinese Control and Decision Conference CCDC, Nanchang, China, 3–5 June 2019; pp. 3024–3028.

- Maier, H.R.; Jain, A.; Dandy, G.C.; Sudheer, K.P. Methods used for the development of neural networks for the prediction of water resource variables in river systems: Current status and future directions. Environ. Model. Softw. 2010, 25, 891–909.

- Pu, F.; Ding, C.; Chao, Z.; Yu, Y.; Xu, X. Water-quality classification of inland lakes using Landsat8 images by convolutional neural networks. Remote Sens. 2019, 11, 1674.

- Nourani, V.; Hosseini Baghanam, A.; Adamowski, J.; Kisi, O. Applications of hybrid wavelet-Artificial Intelligence models in hydrology: A review. J. Hydrol. 2014, 514, 358–377.

- Wu, W.; Dandy, G.C.; Maier, H.R. Protocol for developing ANN models and its application to the assessment of the quality of the ANN model development process in drinking water quality modelling. Environ. Model. Softw. 2014, 54, 108–127.

- Cabaneros, S.M.; Calautit, J.K.; Hughes, B.R. A review of artificial neural network models for ambient air pollution prediction. Environ. Model. Softw. 2019, 119, 285–304.

- Elkiran, G.; Nourani, V.; Abba, S.I. Multi-step ahead modelling of river water quality parameters using ensemble artificial intelligence-based approach. J. Hydrol. 2019, 577, 123962.

- Cong, Q.; Yu, W. Integrated soft sensor with wavelet neural network and adaptive weighted fusion for water quality estimation in wastewater treatment process. Measurement 2018, 124, 436–446.

- Humphrey, G.B.; Maier, H.R.; Wu, W.; Mount, N.J.; Dandy, G.C.; Abrahart, R.J.; Dawson, C.W. Improved validation framework and R-package for artificial neural network models. Environ. Model. Softw. 2017, 92, 82–106.

- Iglesias, C.; Martínez Torres, J.; García Nieto, P.J.; Alonso Fernández, J.R.; Díaz Muñiz, C.; Piñeiro, J.I.; Taboada, J. Turbidity Prediction in a River Basin by Using Artificial Neural Networks: A Case Study in Northern Spain. Water Resour. Manag. 2014, 28, 319–331.

- Gholamreza, A.; Afshin, M.-D.; Shiva, H.A.; Nasrin, R. Application of artificial neural networks to predict total dissolved solids in the river Zayanderud, Iran. Environ. Eng. Res. 2016, 21, 333–340.

- Najah, A.; El-Shafie, A.; Karim, O.A.; El-Shafie, A.H. Application of artificial neural networks for water quality prediction. Neural Comput. Appl. 2012, 22, 187–201.

- Zhao, J.; Zhao, C.; Zhang, F.; Wu, G.; Wang, H. Water Quality Prediction in the Waste Water Treatment Process Based on Ridge Regression Echo State Network. IOP Conf. Ser. Mater. Sci. Eng. 2018, 435.

- Zhai, W.; Zhou, X.; Man, J.; Xu, Q.; Jiang, Q.; Yang, Z.; Jiang, L.; Gao, Z.; Yuan, Y.; Gao, W. Prediction of water quality based on artificial neural network with grey theory. IOP Conf. Ser. Earth Environ. Sci. 2019, 295.

- Palani, S.; Liong, S.Y.; Tkalich, P. An ANN application for water quality forecasting. Mar. Pollut. Bull. 2008, 56, 1586–1597.

- Antanasijević, D.; Pocajt, V.; Povrenović, D.; Perić-Grujić, A.; Ristić, M. Modelling of dissolved oxygen content using artificial neural networks: Danube River, North Serbia, case study. Environ. Sci. Pollut. Res. 2013, 20, 9006–9013.

- Šiljić, A.; Antanasijević, D.; Perić-Grujić, A.; Ristić, M.; Pocajt, V. Artificial neural network modelling of biological oxygen demand in rivers at the national level with input selection based on Monte Carlo simulations. Environ. Sci. Pollut. Res. 2015, 22, 4230–4241.

- Ay, M.; Kisi, O. Modelling of chemical oxygen demand by using ANNs, ANFIS and k-means clustering techniques. J. Hydrol. 2014, 511, 279–289.

- Qiao, J.; Hu, Z.; Li, W. Soft measurement modeling based on chaos theory for biochemical oxygen demand (BOD). Water 2016, 8, 581.

- Liu, Y.; Zhang, Q.; Song, L.; Chen, Y. Attention-based recurrent neural networks for accurate short-term and long-term dissolved oxygen prediction. Comput. Electron. Agric. 2019, 165, 104964.

- Djerioui, M.; Bouamar, M.; Ladjal, M.; Zerguine, A. Chlorine Soft Sensor Based on Extreme Learning Machine for Water Quality Monitoring. Arab. J. Sci. Eng. 2019, 44, 2033–2044.

- Heddam, S.; Kisi, O. Extreme learning machines: A new approach for modeling dissolved oxygen (DO) concentration with and without water quality variables as predictors. Environ. Sci. Pollut. Res. 2017, 24, 16702–16724.

- Zhu, S.; Heddam, S.; Wu, S.; Dai, J.; Jia, B. Extreme learning machine-based prediction of daily water temperature for rivers. Environ. Earth Sci. 2019, 78, 1–17.

- Elbisy, M.S.; Ali, H.M.; Abd-Elall, M.A.; Alaboud, T.M. The use of feed-forward back propagation and cascade correlation for the neural network prediction of surface water quality parameters. Water Resour. 2014, 41, 709–718.

- Baek, G.; Cheon, S.P.; Kim, S.; Kim, Y.; Kim, H.; Kim, C.; Kim, S. Modular neural networks prediction model based A2/O process control system. Int. J. Precis. Eng. Manuf. 2012, 13, 905–913.

- Ding, D.; Zhang, M.; Pan, X.; Yang, M.; He, X. Modeling extreme events in time series prediction. In Proceedings of the ACM SIGKDD International Conference Knowledge Discovery & Data Mining, Anchorage, AK, USA, 4–8 August 2019; Volume 1, pp. 1114–1122.

- Khani, S.; Rajaee, T. Modeling of Dissolved Oxygen Concentration and Its Hysteresis Behavior in Rivers Using Wavelet Transform-Based Hybrid Models. Clean-Soil Air Water 2017, 45.

- Lim, H.; An, H.; Kim, H.; Lee, J. Prediction of pollution loads in the Geum River upstream using the recurrent neural network algorithm. Korean J. Agricultural Sci. 2019, 46, 67–78.

- Li, Z.; Peng, F.; Niu, B.; Li, G.; Wu, J.; Miao, Z. Water Quality Prediction Model Combining Sparse Auto-encoder and LSTM Network. IFAC-PapersOnLine 2018, 51, 831–836.

- Evrendilek, F.; Karakaya, N. Monitoring diel dissolved oxygen dynamics through integrating wavelet denoising and temporal neural networks. Environ. Monit. Assess. 2014, 186, 1583–1591.

- Wang, F.; Wang, X.; Chen, B.; Zhao, Y.; Yang, Z. Chlorophyll a simulation in a lake ecosystem using a model with wavelet analysis and artificial neural network. Environ. Manag. 2013, 51, 1044–1054.

- Ta, X.; Wei, Y. Research on a dissolved oxygen prediction method for recirculating aquaculture systems based on a convolution neural network. Comput. Electron. Agric. 2018, 145, 302–310.

- Qiao, J.; Wang, G.; Li, X.; Li, W. A self-organizing deep belief network for nonlinear system modeling. Appl. Soft Comput. J. 2018, 65, 170–183.

- Sharaf El Din, E.; Zhang, Y.; Suliman, A. Mapping concentrations of surface water quality parameters using a novel remote sensing and artificial intelligence framework. Int. J. Remote Sens. 2017, 38, 1023–1042.

- Hameed, M.; Sharqi, S.S.; Yaseen, Z.M.; Afan, H.A.; Hussain, A.; Elshafie, A. Application of artificial intelligence (AI) techniques in water quality index prediction: A case study in tropical region, Malaysia. Neural Comput. Appl. 2017, 28, 893–905.

- Zhang, Y.F.; Fitch, P.; Thorburn, P.J. Predicting the trend of dissolved oxygen based on the kPCA-RNN model. Water 2020, 12, 585.

- Alizadeh, M.J.; Kavianpour, M.R. Development of wavelet-ANN models to predict water quality parameters in Hilo Bay, Pacific Ocean. Mar. Pollut. Bull. 2015, 98, 171–178.

- Zounemat-Kermani, M.; Seo, Y.; Kim, S.; Ghorbani, M.A.; Samadianfard, S.; Naghshara, S.; Kim, N.W.; Singh, V.P. Can decomposition approaches always enhance soft computing models? Predicting the dissolved oxygen concentration in the St. Johns River, Florida. Appl. Sci. 2019, 9, 2534.

- Wang, Y.; Zheng, T.; Zhao, Y.; Jiang, J.; Wang, Y.; Guo, L.; Wang, P. Monthly water quality forecasting and uncertainty assessment via bootstrapped wavelet neural networks under missing data for Harbin, China. Environ. Sci. Pollut. Res. 2013, 20, 8909–8923.

- Lee, K.J.; Yun, S.T.; Yu, S.; Kim, K.H.; Lee, J.H.; Lee, S.H. The combined use of self-organizing map technique and fuzzy c-means clustering to evaluate urban groundwater quality in Seoul metropolitan city, South Korea. J. Hydrol. 2019, 569, 685–697.

- Hu, Z.; Zhang, Y.; Zhao, Y.; Xie, M.; Zhong, J.; Tu, Z.; Liu, J. A water quality prediction method based on the deep LSTM network considering correlation in smart mariculture. Sensors 2019, 19, 1420.

- Liu, J.; Yu, C.; Hu, Z.; Zhao, Y.; Xia, X.; Tu, Z.; Li, R. Automatic and accurate prediction of key water quality parameters based on SRU deep learning in mariculture. In Proceedings of the 2018 IEEE International Conference on Advanced Manufacturing ICAM 2018, Yunlin, Taiwan, 16–18 November 2018; pp. 437–440.

- Yan, J.; Xu, Z.; Yu, Y.; Xu, H.; Gao, K. Application of a hybrid optimized BP network model to estimate water quality parameters of Beihai Lake in Beijing. Appl. Sci. 2019, 9, 1863.

- Huang, M.; Zhang, T.; Ruan, J.; Chen, X. A New Efficient Hybrid Intelligent Model for Biodegradation Process of DMP with Fuzzy Wavelet Neural Networks. Sci. Rep. 2017, 7, 1–9.

- Deng, W.; Wang, G.; Zhang, X.; Guo, Y.; Li, G. Water quality prediction based on a novel hybrid model of ARIMA and RBF neural network. In Proceedings of the 2014 IEEE 3rd International Conference Cloud Computing Intelligence System, Shenzhen, China, 27–29 November 2014; pp. 33–40.

- Ömer Faruk, D. A hybrid neural network and ARIMA model for water quality time series prediction. Eng. Appl. Artif. Intell. 2010, 23, 586–594.

- Ravansalar, M.; Rajaee, T. Evaluation of wavelet performance via an ANN-based electrical conductivity prediction model. Environ. Monit. Assess. 2015, 187.

- Sakizadeh, M.; Malian, A.; Ahmadpour, E. Groundwater Quality Modeling with a Small Data Set. Groundwater 2016, 54, 115–120.

- Lin, Q.; Yang, W.; Zheng, C.; Lu, K.; Zheng, Z.; Wang, J.; Zhu, J. Deep-learning based approach for forecast of water quality in intensive shrimp ponds. Indian J. Fish. 2018, 65, 75–80.

- Dogan, E.; Ates, A.; Yilmaz, C.; Eren, B. Application of Artificial Neural Networks to Estimate Wastewater Treatment Plant Inlet Biochemical Oxygen Demand. Environ. Prog. 2008, 27, 439–446.

- Elhatip, H.; Kömür, M.A. Evaluation of water quality parameters for the Mamasin dam in Aksaray City in the central Anatolian part of Turkey by means of artificial neural networks. Environ. Geol. 2008, 53, 1157–1164.

- Al-Mahallawi, K.; Mania, J.; Hani, A.; Shahrour, I. Using of neural networks for the prediction of nitrate groundwater contamination in rural and agricultural areas. Environ. Earth Sci. 2012, 65, 917–928.

- Hong, Y.S.T. Dynamic nonlinear state-space model with a neural network via improved sequential learning algorithm for an online real-time hydrological modeling. J. Hydrol. 2012, 468–469, 11–21.

- Bayram, A.; Kankal, M.; Önsoy, H. Estimation of suspended sediment concentration from turbidity measurements using artificial neural networks. Environ. Monit. Assess. 2012, 184, 4355–4365.

- Bayram, A.; Kankal, M.; Tayfur, G.; Önsoy, H. Prediction of suspended sediment concentration from water quality variables. Neural Comput. Appl. 2014, 24, 1079–1087.

- Tabari, H.; Talaee, P.H. Reconstruction of river water quality missing data using artificial neural networks. Water Qual. Res. J. Canada 2015, 50, 326–335.

- Zounemat-Kermani, M.; Kişi, Ö.; Adamowski, J.; Ramezani-Charmahineh, A. Evaluation of data driven models for river suspended sediment concentration modeling. J. Hydrol. 2016, 535, 457–472.

- Olyaie, E.; Zare Abyaneh, H.; Danandeh Mehr, A. A comparative analysis among computational intelligence techniques for dissolved oxygen prediction in Delaware River. Geosci. Front. 2017, 8, 517–527.

- Ostad-Ali-Askari, K.; Shayannejad, M.; Ghorbanizadeh-Kharazi, H. Artificial neural network for modeling nitrate pollution of groundwater in marginal area of Zayandeh-rood River, Isfahan, Iran. KSCE J. Civ. Eng. 2017, 21, 134–140.

- Alagha, J.S.; Seyam, M.; Md Said, M.A.; Mogheir, Y. Integrating an artificial intelligence approach with k-means clustering to model groundwater salinity: The case of Gaza coastal aquifer (Palestine). Hydrogeol. J. 2017, 25, 2347–2361.

- Miller, T.; Poleszczuk, G. Prediction of the Seasonal Changes of the Chloride Concentrations in Urban Water Reservoir. Ecol. Chem. Eng. S 2017, 24, 595–611.

- Graf, R.; Zhu, S.; Sivakumar, B. Forecasting river water temperature time series using a wavelet–neural network hybrid modelling approach. J. Hydrol. 2019, 578.

- Luo, W.; Zhu, S.; Wu, S.; Dai, J. Comparing artificial intelligence techniques for chlorophyll-a prediction in US lakes. Environ. Sci. Pollut. Res. 2019, 26, 30524–30532.

- Najah Ahmed, A.; Binti Othman, F.; Abdulmohsin Afan, H.; Khaleel Ibrahim, R.; Ming Fai, C.; Shabbir Hossain, M.; Ehteram, M.; Elshafie, A. Machine learning methods for better water quality prediction. J. Hydrol. 2019, 578.

- Yeon, I.S.; Kim, J.H.; Jun, K.W. Application of artificial intelligence models in water quality forecasting. Environ. Technol. 2008, 29, 625–631.

- Dogan, E.; Sengorur, B.; Koklu, R. Modeling biological oxygen demand of the Melen River in Turkey using an artificial neural network technique. J. Environ. Manag. 2009, 90, 1229–1235.

- Miao, Q.; Yuan, H.; Shao, C.; Liu, Z. Water quality prediction of Moshui River in China based on BP neural network. In Proceedings of the 2009 International Conference Computing Intelligent Natural Computing CINC, Wuhan, China, 6–7 June 2009; pp. 7–10.

- Oliveira Souza da Costa, A.; Ferreira Silva, P.; Godoy Sabará, M.; Ferreira da Costa, E. Use of neural networks for monitoring surface water quality changes in a neotropical urban stream. Environ. Monit. Assess. 2009, 155, 527–538.

- Shen, X.; Chen, M.; Yu, J. Water environment monitoring system based on neural networks for shrimp cultivation. In Proceedings of the 2009 International Conference Artificial Intelligence and Computational Intelligence AICI, Shanghai, China, 7–8 November 2009; pp. 427–431.

- Singh, K.P.; Basant, A.; Malik, A.; Jain, G. Artificial neural network modeling of the river water quality—A case study. Ecol. Modell. 2009, 220, 888–895.

- Yeon, I.S.; Jun, K.W.; Lee, H.J. The improvement of total organic carbon forecasting using neural networks discharge model. Environ. Technol. 2009, 30, 45–51.

- Zuo, J.; Yu, J.T. Application of neural network in groundwater denitrification process. In Proceedings of the 2009 Asia-Pacific Conference Information Processing APCIP, Shenzhen, China, 18–19 July 2009; pp. 79–82.

- Akkoyunlu, A.; Akiner, M.E. Feasibility Assessment of Data-Driven Models in Predicting Pollution Trends of Omerli Lake, Turkey. Water Resour. Manag. 2010, 24, 3419–3436.

- Chen, D.; Lu, J.; Shen, Y. Artificial neural network modelling of concentrations of nitrogen, phosphorus and dissolved oxygen in a non-point source polluted river in Zhejiang Province, southeast China. Hydrol. Process. 2010, 24, 290–299.

- Piotrowski, A.P.; Napiorkowski, M.J.; Napiorkowski, J.J.; Osuch, M. Comparing various artificial neural network types for water temperature prediction in rivers. J. Hydrol. 2015, 529, 302–315.

- Alizadeh, M.J.; Jafari Nodoushan, E.; Kalarestaghi, N.; Chau, K.W. Toward multi-day-ahead forecasting of suspended sediment concentration using ensemble models. Environ. Sci. Pollut. Res. 2017, 24, 28017–28025.

- Šiljić Tomić, A.; Antanasijević, D.; Ristić, M.; Perić-Grujić, A.; Pocajt, V. Application of experimental design for the optimization of artificial neural network-based water quality model: A case study of dissolved oxygen prediction. Environ. Sci. Pollut. Res. 2018, 25, 9360–9370.

- Shamshirband, S.; Jafari Nodoushan, E.; Adolf, J.E.; Abdul Manaf, A.; Mosavi, A.; Chau, K. Ensemble models with uncertainty analysis for multi-day ahead forecasting of chlorophyll a concentration in coastal waters. Eng. Appl. Comput. Fluid Mech. 2019, 13, 91–101.

- Rajaee, T.; Benmaran, R.R. Prediction of water quality parameters (NO3, CL) in Karaj river by using a combination of Wavelet Neural Network, ANN and MLR models. J. Water Soil 2016, 30, 15–29.

- Markus, M.; Hejazi, M.I.; Bajcsy, P.; Giustolisi, O.; Savic, D.A. Prediction of weekly nitrate-N fluctuations in a small agricultural watershed in Illinois. J. Hydroinformatics 2010, 12, 251–261.

- Merdun, H.; Çinar, Ö. Utilization of two artificial neural network methods in surface water quality modeling. Environ. Eng. Manag. J. 2010, 9, 413–421.

- Ranković, V.; Radulović, J.; Radojević, I.; Ostojić, A.; Čomić, L. Neural network modeling of dissolved oxygen in the Gruža reservoir, Serbia. Ecol. Modell. 2010, 221, 1239–1244.

- Zhu, X.; Li, D.; He, D.; Wang, J.; Ma, D.; Li, F. A remote wireless system for water quality online monitoring in intensive fish culture. Comput. Electron. Agric. 2010, 71, S3.

- Banerjee, P.; Singh, V.S.; Chattopadhyay, K.; Chandra, P.C.; Singh, B. Artificial neural network model as a potential alternative for groundwater salinity forecasting. J. Hydrol. 2011, 398, 212–220.

- Han, H.G.; Chen, Q.L.; Qiao, J.F. An efficient self-organizing RBF neural network for water quality prediction. Neural Netw. 2011, 24, 717–725.

- Asadollahfardi, G.; Taklify, A.; Ghanbari, A. Application of Artificial Neural Network to Predict TDS in Talkheh Rud River. J. Irrig. Drain. Eng. 2012, 138, 363–370.

- Ay, M.; Kisi, O. Modeling of dissolved oxygen concentration using different neural network techniques in Foundation Creek, El Paso County, Colorado. J. Environ. Eng. 2012, 138, 654–662.

- Gazzaz, N.M.; Yusoff, M.K.; Aris, A.Z.; Juahir, H.; Ramli, M.F. Artificial neural network modeling of the water quality index for Kinta River (Malaysia) using water quality variables as predictors. Mar. Pollut. Bull. 2012, 64, 2409–2420.

- Liu, W.C.; Chen, W.B. Prediction of water temperature in a subtropical subalpine lake using an artificial neural network and three-dimensional circulation models. Comput. Geosci. 2012, 45, 13–25.

- Kakaei Lafdani, E.; Moghaddam Nia, A.; Ahmadi, A. Daily suspended sediment load prediction using artificial neural networks and support vector machines. J. Hydrol. 2013, 478, 50–62.

- Karakaya, N.; Evrendilek, F.; Gungor, K.; Onal, D. Predicting diel, diurnal and nocturnal dynamics of dissolved oxygen and chlorophyll-a using regression models and neural networks. Clean-Soil Air Water 2013, 41, 872–877.

- Antanasijević, D.; Pocajt, V.; Perić-Grujić, A.; Ristić, M. Modelling of dissolved oxygen in the Danube River using artificial neural networks and Monte Carlo simulation uncertainty analysis. J. Hydrol. 2014, 519, 1895–1907.

- Chen, W.B.; Liu, W.C. Artificial neural network modeling of dissolved oxygen in reservoir. Environ. Monit. Assess. 2014, 186, 1203–1217.

- Han, H.G.; Wang, L.D.; Qiao, J.F. Hierarchical extreme learning machine for feedforward neural network. Neurocomputing 2014, 128, 128–135.

- Ding, Y.R.; Cai, Y.J.; Sun, P.D.; Chen, B. The use of combined neural networks and genetic algorithms for prediction of river water quality. J. Appl. Res. Technol. 2014, 12, 493–499.

- Faramarzi, M.; Yunus, M.A.M.; Nor, A.S.M.; Ibrahim, S. The application of the Radial Basis Function Neural Network in estimation of nitrate contamination in Manawatu river. In Proceedings of the 2014 International Conference Computational Science Technology ICCST, Kota Kinabalu, Malaysia, 27–28 August 2014; pp. 1–5.

- Nemati, S.; Fazelifard, M.H.; Terzi, Ö.; Ghorbani, M.A. Estimation of dissolved oxygen using data-driven techniques in the Tai Po River, Hong Kong. Environ. Earth Sci. 2015, 74, 4065–4073.

- Li, X.; Sha, J.; Wang, Z.L. Chlorophyll-A Prediction of lakes with different water quality patterns in China based on hybrid neural networks. Water 2017, 9, 524.

- Montaseri, M.; Zaman Zad Ghavidel, S.; Sanikhani, H. Water quality variations in different climates of Iran: Toward modeling total dissolved solid using soft computing techniques. Stoch. Environ. Res. Risk Assess. 2018, 32, 2253–2273.

- Rajaee, T.; Jafari, H. Utilization of WGEP and WDT models by wavelet denoising to predict water quality parameters in rivers. J. Hydrol. Eng. 2018, 23.

- Barzegar, R.; Asghari Moghaddam, A.; Adamowski, J.; Ozga-Zielinski, B. Multi-step water quality forecasting using a boosting ensemble multi-wavelet extreme learning machine model. Stoch. Environ. Res. Risk Assess. 2018, 32, 799–813.

- Csábrági, A.; Molnár, S.; Tanos, P.; Kovács, J.; Molnár, M.; Szabó, I.; Hatvani, I.G. Estimation of dissolved oxygen in riverine ecosystems: Comparison of differently optimized neural networks. Ecol. Eng. 2019, 138, 298–309.

- Kılıçaslan, Y.; Tuna, G.; Gezer, G.; Gulez, K.; Arkoc, O.; Potirakis, S.M. ANN-based estimation of groundwater quality using a wireless water quality network. Int. J. Distrib. Sens. Netw. 2014, 2014, 1–8.

- Yang, T.M.; Fan, S.K.; Fan, C.; Hsu, N.S. Establishment of turbidity forecasting model and early-warning system for source water turbidity management using back-propagation artificial neural network algorithm and probability analysis. Environ. Monit. Assess. 2014, 186, 4925–4934.

- Khashei-Siuki, A.; Sarbazi, M. Evaluation of ANFIS, ANN, and geostatistical models to spatial distribution of groundwater quality (case study: Mashhad plain in Iran). Arab. J. Geosci. 2015, 8, 903–912.

- Yousefi, P.; Naser, G.; Mohammadi, H. Surface water quality model: Impacts of influential variables. J. Water Resour. Plan. Manag. 2018, 144, 1–10.

- Najah, A.; El-Shafie, A.; Karim, O.A.; El-Shafie, A.H. Performance of ANFIS versus MLP-NN dissolved oxygen prediction models in water quality monitoring. Environ. Sci. Pollut. Res. 2014, 21, 1658–1670.

- Sinshaw, T.A.; Surbeck, C.Q.; Yasarer, H.; Najjar, Y. Artificial Neural Network for Prediction of Total Nitrogen and Phosphorus in US Lakes. J. Environ. Eng. 2019, 145, 1–11.

- Partal, T.; Cigizoglu, H.K. Estimation and forecasting of daily suspended sediment data using wavelet-neural networks. J. Hydrol. 2008, 358, 317–331.

- Anctil, F.; Filion, M.; Tournebize, J. A neural network experiment on the simulation of daily nitrate-nitrogen and suspended sediment fluxes from a small agricultural catchment. Ecol. Modell. 2009, 220, 879–887.

- Alagha, J.S.; Said, M.A.M.; Mogheir, Y. Modeling of nitrate concentration in groundwater using artificial intelligence approach-a case study of Gaza coastal aquifer. Environ. Monit. Assess. 2014, 186, 35–45.

- Salami, E.S.; Ehteshami, M. Simulation, evaluation and prediction modeling of river water quality properties (case study: Ireland Rivers). Int. J. Environ. Sci. Technol. 2015, 12, 3235–3242.

- Ahmed, A.A.M. Prediction of dissolved oxygen in Surma River by biochemical oxygen demand and chemical oxygen demand using the artificial neural networks (ANNs). J. King Saud Univ. Eng. Sci. 2017, 29, 151–158.

- Najafzadeh, M.; Ghaemi, A. Prediction of the five-day biochemical oxygen demand and chemical oxygen demand in natural streams using machine learning methods. Environ. Monit. Assess. 2019, 191.

- Sahoo, G.B.; Schladow, S.G.; Reuter, J.E. Forecasting stream water temperature using regression analysis, artificial neural network, and chaotic non-linear dynamic models. J. Hydrol. 2009, 378, 325–342.

- Wu, M.; Zhang, W.; Wang, X.; Luo, D. Application of MODIS satellite data in monitoring water quality parameters of Chaohu Lake in China. Environ. Monit. Assess. 2009, 148, 255–264.

- Kişi, Ö. River suspended sediment concentration modeling using a neural differential evolution approach. J. Hydrol. 2010, 389, 227–235.

- Afshar, A.; Kazemi, H. Multi objective calibration of large scaled water quality model using a hybrid particle swarm optimization and neural network algorithm. KSCE J. Civ. Eng. 2012, 16, 913–918.

- Areerachakul, S.; Sophatsathit, P.; Lursinsap, C. Integration of unsupervised and supervised neural networks to predict dissolved oxygen concentration in canals. Ecol. Modell. 2013, 261–262, 1–7.

- Gazzaz, N.M.; Yusoff, M.K.; Ramli, M.F.; Juahir, H.; Aris, A.Z. Artificial Neural Network Modeling of the Water Quality Index Using Land Use Areas as Predictors. Water Environ. Res. 2015, 87, 99–112.

- Heddam, S. Simultaneous modelling and forecasting of hourly dissolved oxygen concentration (DO) using radial basis function neural network (RBFNN) based approach: A case study from the Klamath River, Oregon, USA. Model. Earth Syst. Environ. 2016, 2, 1–18.

- Liu, S.; Xu, L.; Li, D. Multi-scale prediction of water temperature using empirical mode decomposition with back-propagation neural networks. Comput. Electr. Eng. 2016, 49, 1–8.

- Yu, H.; Chen, Y.; Hassan, S.; Li, D. Dissolved oxygen content prediction in crab culture using a hybrid intelligent method. Sci. Rep. 2016, 6, 1–10.

- Zhao, Y.; Zou, Z.; Wang, S. A Back Propagation Neural Network Model based on Kalman filter for water quality prediction. In Proceedings of the International Conference Natural Computation, Zhangjiajie, China, 15–17 August 2015; pp. 149–153.

- Karaboga, D.; Basturk, B. A powerful and efficient algorithm for numerical function optimization: Artificial bee colony (ABC) algorithm. J. Glob. Optim. 2007, 39, 459–471.

- Zhou, J.; Wang, Y.; Xiao, F.; Wang, Y.; Sun, L. Water quality prediction method based on IGRA and LSTM. Water 2018, 10, 1148. [Google Scholar] [CrossRef]

- Jin, T.; Cai, S.; Jiang, D.; Liu, J. A data-driven model for real-time water quality prediction and early warning by an integration method. Environ. Sci. Pollut. Res. 2019, 26, 30374–30385. [Google Scholar] [CrossRef]

- Tian, W.; Liao, Z.; Wang, X. Transfer learning for neural network model in chlorophyll-a dynamics prediction. Environ. Sci. Pollut. Res. 2019, 26, 29857–29871. [Google Scholar] [CrossRef] [PubMed]

- Yan, J.; Chen, X.; Yu, Y.; Zhang, X. Application of a parallel particle swarm optimization-long short term memory model to improve water quality data. Water 2019, 11, 1317. [Google Scholar] [CrossRef]

- Chu, H.B.; Lu, W.X.; Zhang, L. Application of artificial neural network in environmental water quality assessment. J. Agric. Sci. Technol. 2013, 15, 343–356. [Google Scholar]

- Rajaee, T.; Ebrahimi, H.; Nourani, V. A review of the artificial intelligence methods in groundwater level modeling. J. Hydrol. 2019, 572, 336–351. [Google Scholar] [CrossRef]

- Rajaee, T.; Ravansalar, M.; Adamowski, J.F.; Deo, R.C. A New Approach to Predict Daily pH in Rivers Based on the “à trous” Redundant Wavelet Transform Algorithm. Water Air Soil Pollut. 2018, 229. [Google Scholar] [CrossRef]

- Verma, A.K.; Singh, T.N. Prediction of water quality from simple field parameters. Environ. Earth Sci. 2013, 69, 821–829. [Google Scholar] [CrossRef]

- Ruben, G.B.; Zhang, K.; Bao, H.; Ma, X. Application and Sensitivity Analysis of Artificial Neural Network for Prediction of Chemical Oxygen Demand. Water Resour. Manag. 2018, 32, 273–283. [Google Scholar] [CrossRef]

- Wen, X.; Fang, J.; Diao, M.; Zhang, C. Artificial neural network modeling of dissolved oxygen in the Heihe River, Northwestern China. Environ. Monit. Assess. 2013, 185, 4361–4371. [Google Scholar] [CrossRef]

- Emamgholizadeh, S.; Kashi, H.; Marofpoor, I.; Zalaghi, E. Prediction of water quality parameters of Karoon River (Iran) by artificial intelligence-based models. Int. J. Environ. Sci. Technol. 2014, 11, 645–656. [Google Scholar] [CrossRef]

- Li, X.; Sha, J.; Wang, Z.L. A comparative study of multiple linear regression, artificial neural network and support vector machine for the prediction of dissolved oxygen. Hydrol. Res. 2017, 48, 1214–1225. [Google Scholar] [CrossRef]

- DeWeber, J.T.; Wagner, T. A regional neural network ensemble for predicting mean daily river water temperature. J. Hydrol. 2014, 517, 187–200. [Google Scholar] [CrossRef]

- Ahmadi, A.; Fatemi, Z.; Nazari, S. Assessment of input data selection methods for BOD simulation using data-driven models: A case study. Environ. Monit. Assess. 2018, 190. [Google Scholar] [CrossRef] [PubMed]

- Read, J.S.; Jia, X.; Willard, J.; Appling, A.P.; Zwart, J.A.; Oliver, S.K.; Karpatne, A.; Hansen, G.J.A.; Hanson, P.C.; Watkins, W.; et al. Process-Guided Deep Learning Predictions of Lake Water Temperature. Water Resour. Res. 2019, 55, 9173–9190. [Google Scholar] [CrossRef]

- Bowden, G.J.; Dandy, G.C.; Maier, H.R. Input determination for neural network models in water resources applications. Part 1—Background and methodology. J. Hydrol. 2005, 301, 75–92. [Google Scholar] [CrossRef]

- Chang, F.J.; Chen, P.A.; Liu, C.W.; Liao, V.H.C.; Liao, C.M. Regional estimation of groundwater arsenic concentrations through systematical dynamic-neural modeling. J. Hydrol. 2013, 499, 265–274. [Google Scholar] [CrossRef]

- Bontempi, G.; Ben Taieb, S.; Le Borgne, Y.A. Machine learning strategies for time series forecasting. In Business Intelligence; Springer: Berlin/Heidelberg, Germany, 2013; ISBN 9783642363177. [Google Scholar]

- Kohavi, R. A Study of Cross-Validation and Bootstrap for Accuracy Estimation and Model Selection. In Proceedings of the 14th International Joint Conference on Artificial Intelligence (IJCAI’95), Montreal, QC, Canada, 20–25 August 1995; Morgan Kaufmann: United States, 1995; pp. 1137–1143. [Google Scholar]

- Chen, L.; Hsu, H.H.; Kou, C.H.; Yeh, H.C.; Wang, T.S. Applying Multi-temporal Satellite Imageries to Estimate Chlorophyll-a Concentration in Feitsui Reservoir using ANNs. IJCAI Int. Jt. Conf. Artif. Intell. 2009, 345–348. [Google Scholar] [CrossRef]

- Ebadati, N.; Hooshmandzadeh, M. Water quality assessment of river using RBF and MLP methods of artificial network analysis (case study: Karoon River Southwest of Iran). Environ. Earth Sci. 2019, 78, 1–12. [Google Scholar] [CrossRef]

- Olyaie, E.; Banejad, H.; Chau, K.W.; Melesse, A.M. A comparison of various artificial intelligence approaches performance for estimating suspended sediment load of river systems: A case study in United States. Environ. Monit. Assess. 2015, 187. [Google Scholar] [CrossRef]

- Parmar, K.S.; Bhardwaj, R. River Water Prediction Modeling Using Neural Networks, Fuzzy and Wavelet Coupled Model. Water Resour. Manag. 2015, 29, 17–33. [Google Scholar] [CrossRef]

- Rajaee, T.; Shahabi, A. Evaluation of wavelet-GEP and wavelet-ANN hybrid models for prediction of total nitrogen concentration in coastal marine waters. Arab. J. Geosci. 2016, 9. [Google Scholar] [CrossRef]

- Dragoi, E.N.; Kovács, Z.; Juzsakova, T.; Curteanu, S.; Cretescu, I. Environmental assessment of surface waters based on monitoring data and neuro-evolutive modelling. Process Saf. Environ. Prot. 2018, 120, 136–145. [Google Scholar] [CrossRef]

- Voza, D.; Vuković, M. The assessment and prediction of temporal variations in surface water quality—A case study. Environ. Monit. Assess. 2018, 190. [Google Scholar] [CrossRef] [PubMed]

| Abbreviations | Full Name | Abbreviations | Full Name | Abbreviations | Full Name | Abbreviations | Full Name |
|---|---|---|---|---|---|---|---|
| AH | air humidity | EC | electrical conductivity | ORP | oxidation reduction potential | TCC | total chromium concentration |
| AODD | August, October, December, data | Evap | evaporation | Q | discharge | TIC | total iron concentration |
| AP | air pressure | FTT | flow travel time | pH | Pondus Hydrogenii | TAC | total anions and cations |
| AT | air temperature | Fe | iron | Precip | precipitation | TNs | total nutrients |
| As | arsenic | F | flow | P | phosphate | TA | total alkalinity |
| B | boron | HCO3 | bicarbonate | RH | relative humidity | TP | total phosphorus |
| BOD | biochemical oxygen demand | HA | hydrogenated amine | RP | redox potential | Tur | turbidity |
| C | carbon | ICs | ionic concentrations | RO | runoff | TDS | total dissolved solids |
| Cl | chloride | K | potassium | RF | rainfall | TN | total nitrogen |
| Cu | copper | Lon | longitude | RainP | rainy period | TH | total hardness |
| Ca | calcium | Lat | latitude | SR | solar radiation | TOC | total organic carbon |
| CO₃²⁻ | carbonate | LV | lake volume | Sth | sunshine time (hours) | TSS | total suspended solids |
| Coli | coliform | MDHM | month, day, hour, minute | SD | transparency (Secchi depth) | VP | volatile phenol |
| COD | chemical oxygen demand | Mn | manganese | SAR | sodium adsorption ratio | WL | water level |
| COD Mn | permanganate index | Mg | magnesium | SM | soil moisture | WT | water temperature |
| Chl-a | chlorophyll a | Na | sodium | ST | soil temperature | WS | wind speed |
| DO | dissolved oxygen | Ns | nutrients | SO4 | sulphate | WD | wind direction |
| DOY | day of year | NO2 | nitrite | S | salinity | YMDH | the year numbers |

| Categories | Structure(s) | Advantage(s) | Reference(s) |
|---|---|---|---|
| MLPs | Based on an understanding of the biological nervous system | Solving nonlinear problems | [19,23,30,32,33,34,35] |
| TDNNs | Based on the structure of MLPs | Using time-delay cells to deal with the dynamic nature of sample data | [36] |
| RBFNNs | Structure similar to MLPs; a radial basis activation function is used in the hidden layer | Overcoming the local minimum problem | [5,18,37,38] |
| GRNNs | A modified form of the RBFNN model; a pattern layer and a summation layer lie between the input and output layers | Solving small-sample problems | [24,39,40,41,42,43] |
| WNNs | Wavelet functions replace the sigmoid activation functions of MLPs | Solving non-stationary problems | [16,44] |
| ELMs | Structure similar to MLPs; only the output weights need to be learned | Reducing computational cost, because the input-layer and hidden-layer weights need not be adjusted | [31,45,46,47,48] |
| CCNN | Starts with only the input and output layers, without a hidden layer | A constructive neural network that addresses the problem of determining potential neurons that are not relevant to the output layer | [49] |
| MNNs | A special feedforward network; the neural network with the maximum similarity between the inputs and the cluster centroids is chosen | Addressing low prediction accuracy | [30,50] |
| RNNs | Developed alongside deep learning | Capturing long-term dependence that feedforward networks do not capture | [12,31,38,51,52] |
| LSTMs | Structure similar to RNNs; a memory cell state is added to the hidden layer | Addressing the well-known vanishing gradient problem of RNNs | [15,26,45,53,54] |
| TLRN | Structure similar to MLPs, with local recurrent connections in the hidden layer | Reducing the influence of noise, with the advantage of adaptive memory depth | [55] |
| NARX | A subclass of RNNs whose recurrent connections come from the output | Solving the problem of long-term dependence | [12] |
| Elman | A context layer that stores internal states is added to the traditional three layers | Useful in dynamic system modeling because of the context layer | [3] |
| ESN | Unlike the recurrent networks above, its three layers are the input, reservoir, and readout layers | Overcoming the problems of local minima and vanishing gradients | [3] |
| RESN | Based on the ESN structure, which has a large, sparsely connected reservoir | Overcoming the ill-posed problem in the ESN | [3] |
| Hybrid methods | The combination of conventional or preprocessing methods with ANNs; the internal integration of ANN methods or | Exploring the advantages of each method | [56] |
| CNN | Input, convolution, fully connected, and output layers | An emerging method applied to dissolved oxygen prediction | [57] |
| SODBN | Based on the structure of the DBN, whose visible and hidden layers are stacked sequentially | Investigating the problem of dynamically determining the DBN structure | [58] |
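The GRNN row describes a pattern layer and a summation layer placed between the input and output layers. In Specht's formulation this amounts to Nadaraya-Watson kernel regression. A minimal sketch of that structure is shown below, assuming a single scalar output, Gaussian kernels with one shared smoothing parameter `sigma`, and Euclidean distance. The function name `grnn_predict` is hypothetical and is not taken from any of the reviewed studies.

```python
import math
from typing import Sequence


def grnn_predict(x_train: Sequence[Sequence[float]],
                 y_train: Sequence[float],
                 x_query: Sequence[float],
                 sigma: float = 1.0) -> float:
    """Predict a scalar output with a GRNN (Nadaraya-Watson form).

    Pattern layer: one Gaussian unit centred on each training sample.
    Summation layer: a weighted sum of the targets and a plain sum of the
    pattern activations. Output layer: the ratio of the two sums.
    """
    if sigma <= 0.0:
        raise ValueError("sigma must be positive")
    weighted_sum = 0.0  # summation neuron: sum_i w_i * y_i
    plain_sum = 0.0     # summation neuron: sum_i w_i
    for xi, yi in zip(x_train, y_train):
        # Squared Euclidean distance from the query to stored pattern xi
        d2 = sum((q - p) ** 2 for q, p in zip(x_query, xi))
        w = math.exp(-d2 / (2.0 * sigma ** 2))  # pattern-layer activation
        weighted_sum += w * yi
        plain_sum += w
    if plain_sum == 0.0:
        # Every kernel underflowed: the query is far from all training samples
        raise ValueError("query too far from training data for this sigma")
    return weighted_sum / plain_sum
```

For example, with `x_train = [[0.0], [1.0], [2.0]]` and `y_train = [0.0, 1.0, 2.0]`, a query at `[1.0]` receives symmetric weights from its two neighbours, so the prediction is very close to 1.0. The only parameter to tune is `sigma`, and there is no iterative training. This is one reason GRNNs are often tried on the small datasets listed in the tables below.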

| Water Quality Variables | Categories | Unit | Major Sensors | Research Scenarios |
|---|---|---|---|---|
| DO | chemical | mg/L | ✓ | river, lake, reservoir, WWTP, ponds, coastal waters, creek, drain |
| BOD | chemical | mg/L | - | river, lake, WWTP, mine water, experimental system |
| COD | chemical | mg/L | - | river, lake, reservoir, WWTP, groundwater, mine water |
| WT | physical | °C | ✓ | river, lake, ponds, catchment, stream, coastal waters |
| Chl-a | biological | μg/L | ✓ | lake, reservoir, surface water, coastal waters |
| pH | physical | none | ✓ | river, lake, WWTP, stream, coastal waters |
| SS | physical | mg/L | - | river, stream, coastal waters, creek, catchment |
| EC | physical | μS/cm | ✓ | river, lake, reservoir, groundwater, stream |
| TP | physical | μg/L | - | river, lake, WWTP |
| NH3-N | chemical | mg/L | ✓ | river, lake, reservoir, groundwater, experimental system |
| Tur | physical | FNU | ✓ | river, stream |
| NO3 | chemical | mg/L | - | river, groundwater, catchment, wells, aquifer, experimental system |
| TDS | physical | mg/L | - | river, groundwater, drain |
| S | physical | psu | ✓ | groundwater, coastal waters |
| TN | chemical | mg/L | - | lake, WWTP, coastal waters |
| B | physical | mg/L | - | river |
| TH | physical | mg/L | - | river |
| TOC | chemical | mg/L | - | river |
| TSS | physical | mg/L | - | river |
| COD Mn | chemical | mg/L | - | river |
| NO2 | chemical | mg/L | - | groundwater |
| P | physical | mg/L | - | experimental system |
| SD | physical | cm | - | lake |

| Categories | Authors (Year) | Methods | Scenario(s) | Time Step | Dataset (Samples) |
|---|---|---|---|---|---|
| Feedforward | [39] | GRNN, BPNN, RBFNN | lake | weekly | 28 (6 months) |
| | [40] | ANN(MLP), GRNN | coastal waters | No details | 32 (5 months) |
| | [59] | BPNN | river | No details | 39 (3 days) |
| | [158] | ANN | mine water | No details | 73 |
| | [97] | ANN | groundwater | No details | 97 |
| | [106] | ANN(MLP) | surface water | No details | 110 |
| | [159] | MLP | river | No details | 110 (8 hours) |
| | [130] | ANN(MLP) | plain | No details | 122 |
| | [128] | ANN | groundwater | monthly | 124 (1 year) |
| | [80] | ANN(MLP) | stream | No details | 132 (11 months) |
| | [79] | ANN(MLP) | basin | fortnightly | 144 (1 year) |
| | [131] | ANN(MLP) | river | monthly | 144 (12 years) |
| | [121] | RBFNN | river | weekly | 144 |
| | [24] | GRNN, ANN(MLP), RBFNN, MLR | river | monthly | More than 151 (6 years) |
| | [42] | GRNN, MLR | Open-source data | No details | 159 (9 years) |
| | [160] | ANN(MLP) | river | monthly | 164 (over 6 years) |
| | [107] | ANN | reservoir | No details | 180 (3 years) |
| | [22] | ANN | system | No details | 195 (4 years) |
| | [161] | ANN(MLP), RBFNN | river | monthly | 200 (17 years) |
| | [139] | ANN | river | No details | 200 (16 years) |
| | [93] | ANN | river | No details | 232 (3 years) |
| | [63] | CCNN, MLP | river | semi-monthly | 232 (12 years) |
| | [122] | ANN | river | No details | 252 (21 years) |