Modern Aspects of Cyber-Security Training and Continuous Adaptation of Programmes to Trainees

Abstract

1. Introduction

2. Materials and Methods

2.1. Modern Cyber-Security Training Platforms

- Network layer tools (e.g., intrusion detection systems, firewalls, honeypots/honeynets);

- Infrastructure layer tools (e.g., security monitors, as well as passive and active penetration testing tools such as configuration testing and SSL/TLS testing);

- Application layer tools (e.g., security monitors, code analysis, as well as passive and active penetration testing tools such as authentication testing, database testing, session management testing, data validation and injection testing).

2.2. Teaching Cyber-Security

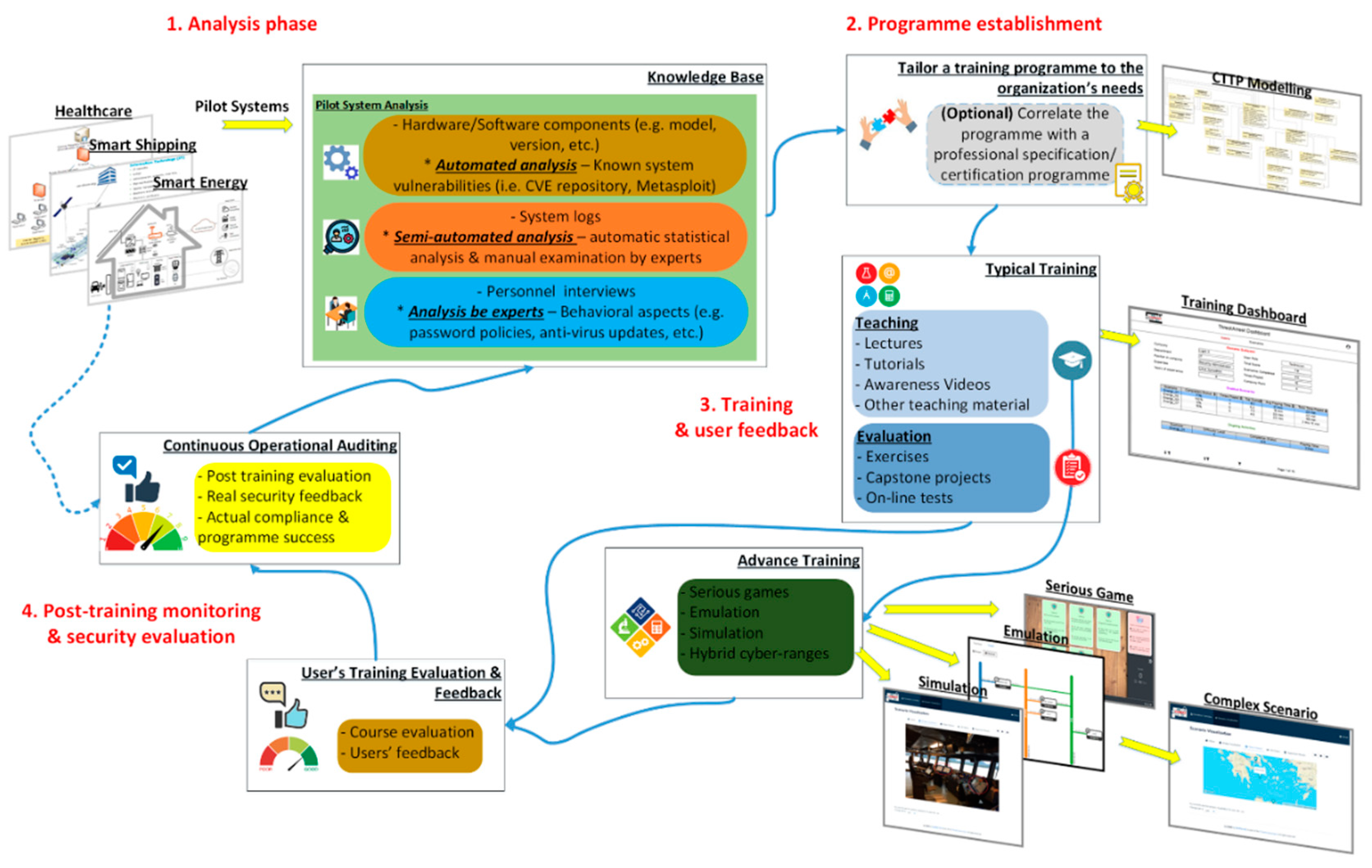

2.3. The Building-Blocks of the THREAT-ARREST Cyber-Security Training Framework

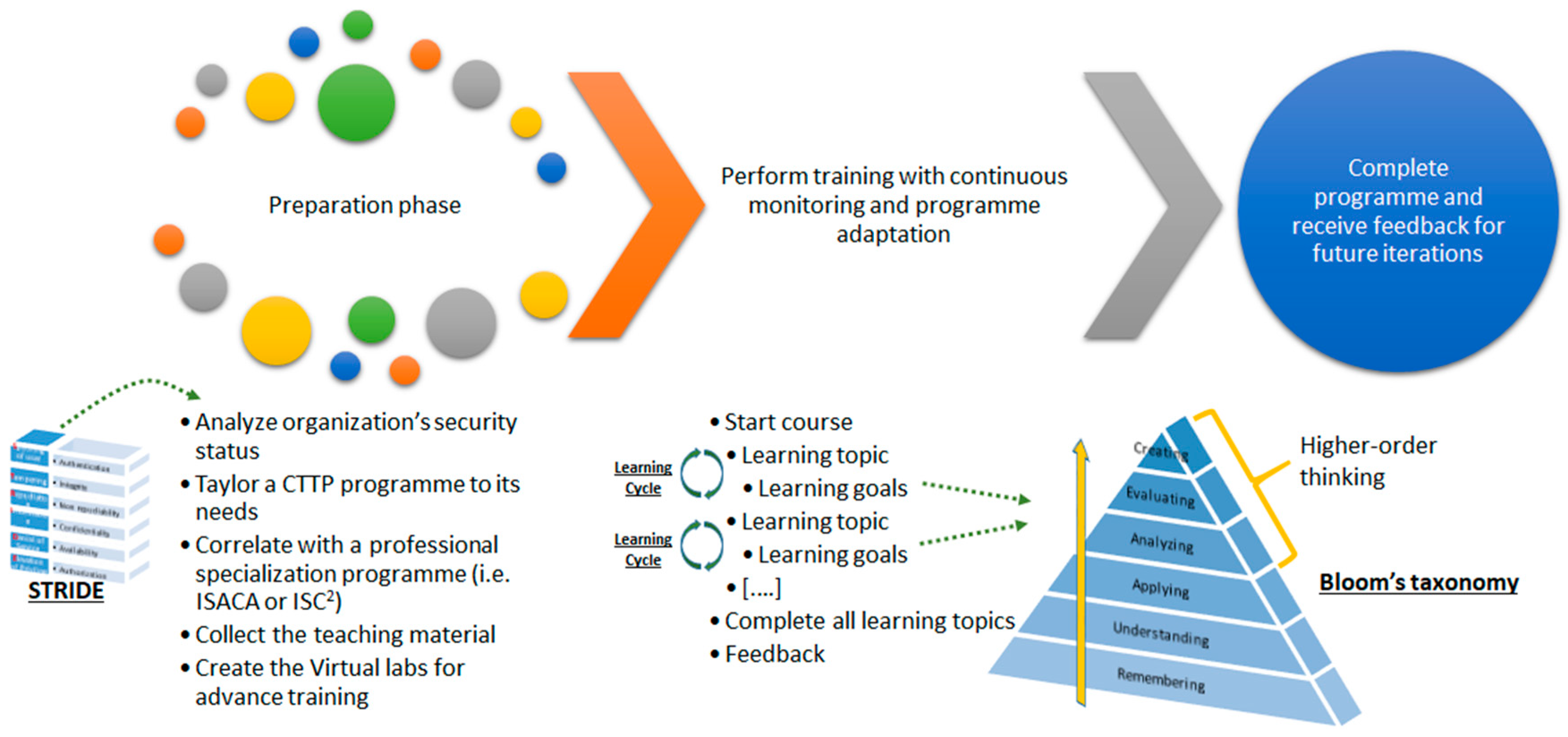

2.3.1. CTTP Modelling

Initial Analysis of a Pilot System

CTTP Programme Establishment

Training and User Feedback

Post-Training Monitoring and Security Evaluation

3. Results

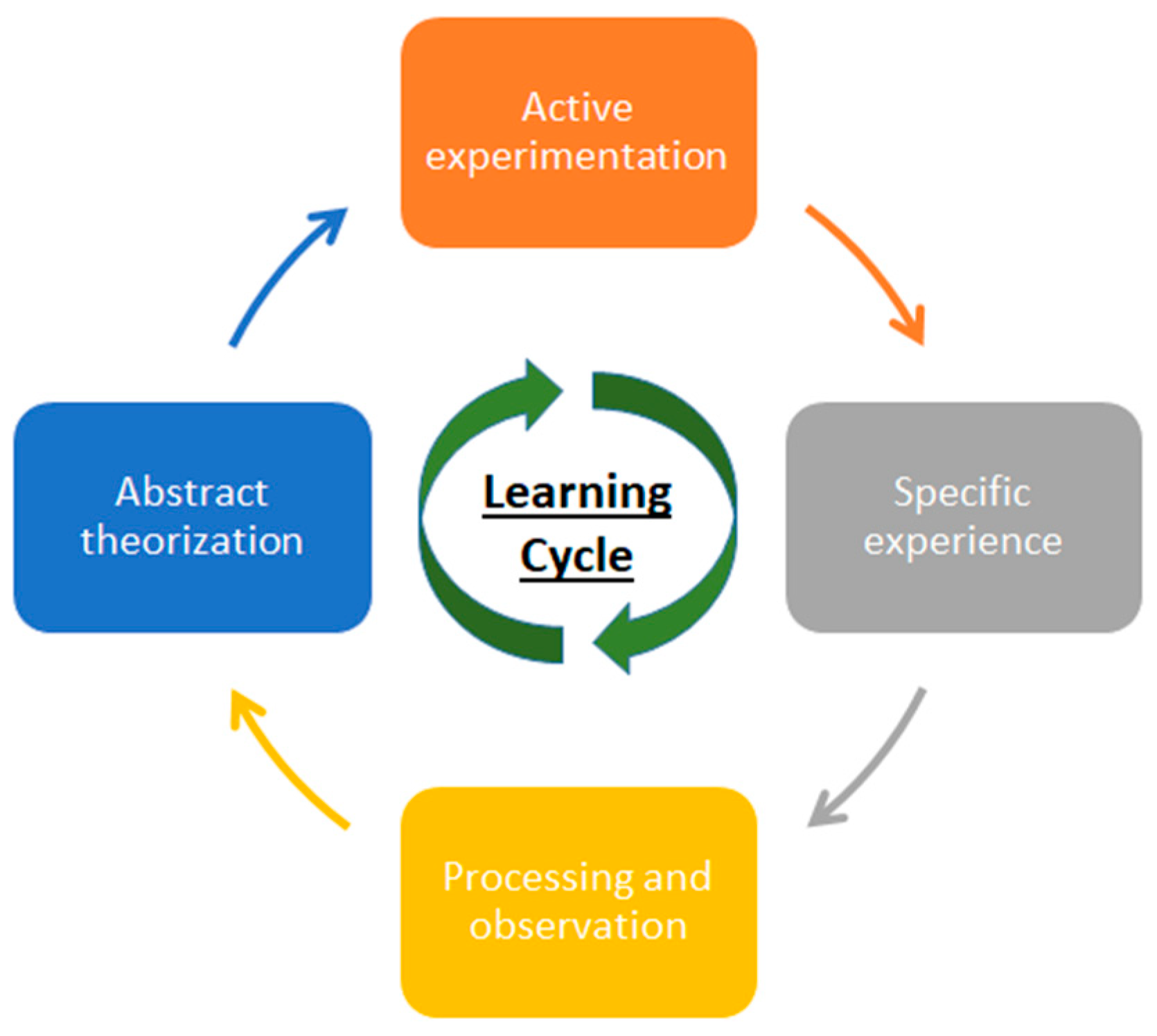

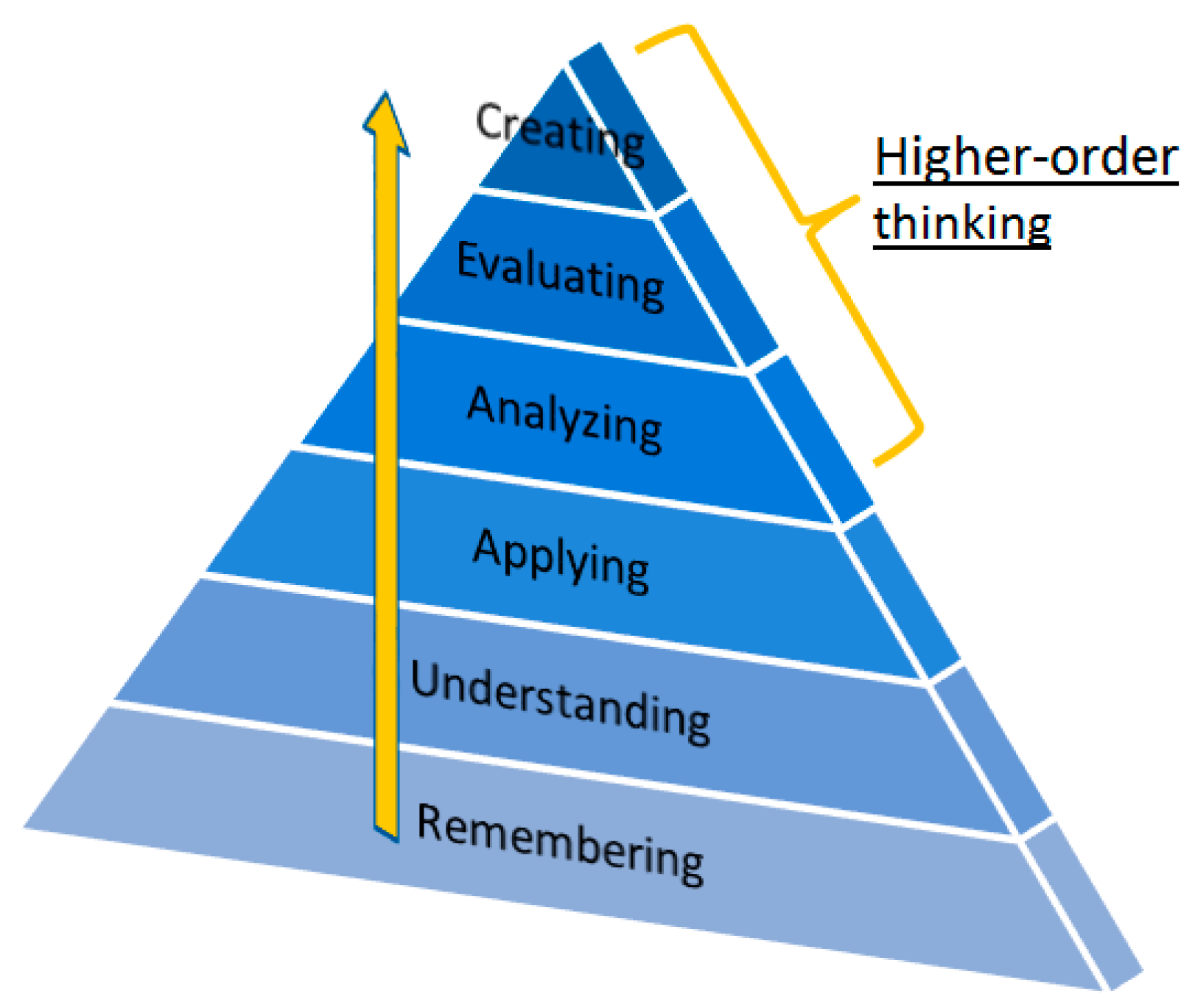

3.1. Modelling of the Learning Process

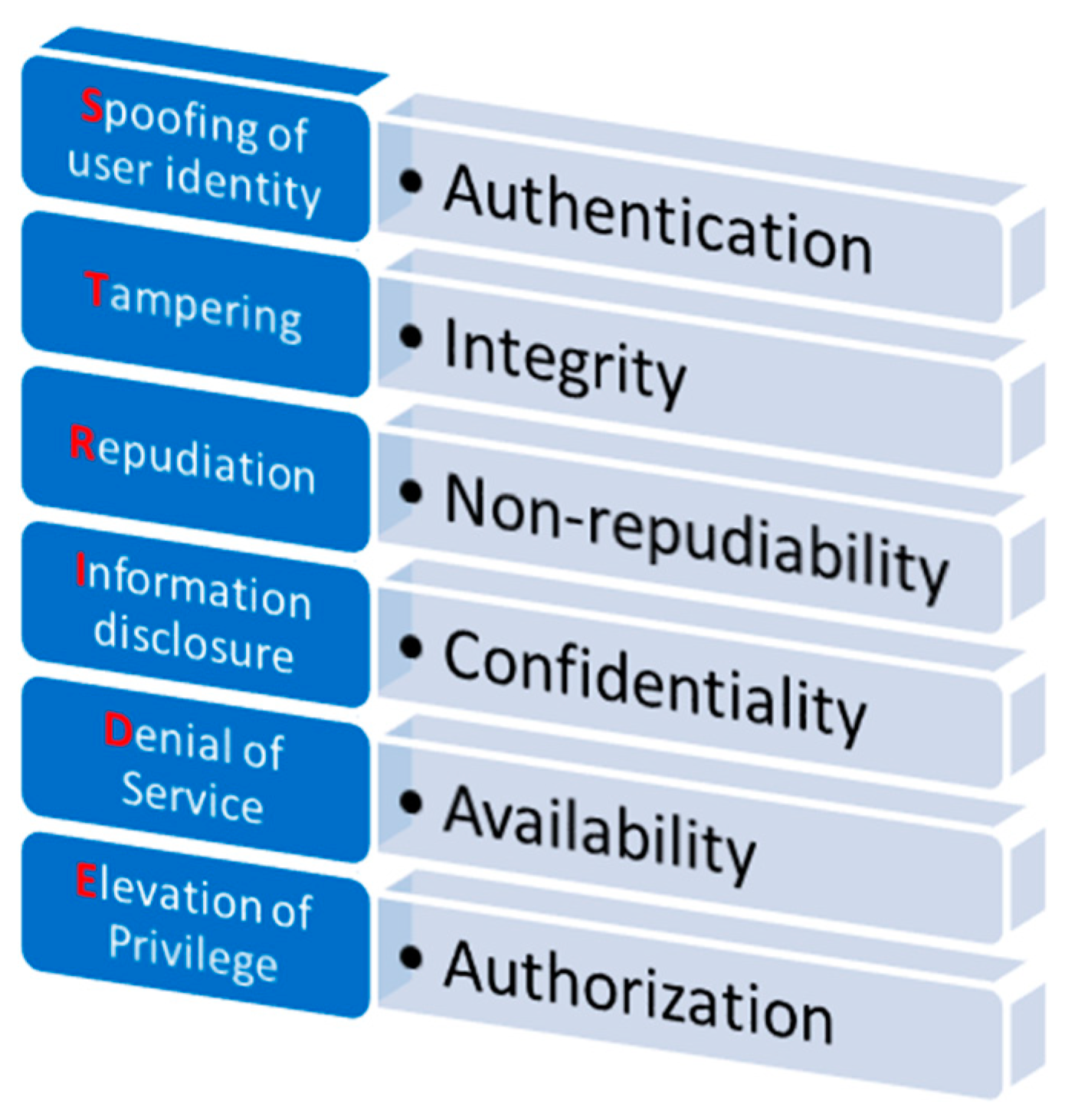

3.1.1. Security Modelling

3.1.2. Training Programme Preparation

3.1.3. Continuous Trainee Assessment and Dynamic Adaptation of the Training Process

- Foundation: the trainee has covered the first layer. He/she knows the main theoretical background of the educational topic.

- Practitioner: the trainee has progressed through layers 2–3. He/she has practical knowledge of the application and operation of the underlying concepts.

- Intermediate: the trainee has reached layers 4–5. He/she has hands-on experience and technical knowledge of the deployment, management, and correlation of the various learning subjects.

- Expert: the trainee has reached the top layer 6. He/she has complete knowledge of the educational topic and is able to design, develop, and administer all aspects of the involved subject.
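The mapping between completed layers and the four trainee levels listed above can be sketched as a small lookup. This is only an illustrative sketch: the names `TraineeLevel` and `level_for_layer` are hypothetical and are not part of the platform, and it assumes that layers are numbered 1 to 6 and that a trainee's level is determined by the highest layer completed.

```python
from enum import Enum


class TraineeLevel(Enum):
    """The four levels named in the list above."""
    FOUNDATION = "Foundation"
    PRACTITIONER = "Practitioner"
    INTERMEDIATE = "Intermediate"
    EXPERT = "Expert"


def level_for_layer(highest_completed_layer: int) -> TraineeLevel:
    """Map the highest completed layer (assumed to be 1-6) to a trainee level."""
    if not 1 <= highest_completed_layer <= 6:
        raise ValueError("layer must be between 1 and 6")
    if highest_completed_layer == 1:
        return TraineeLevel.FOUNDATION      # layer 1
    if highest_completed_layer <= 3:
        return TraineeLevel.PRACTITIONER    # layers 2-3
    if highest_completed_layer <= 5:
        return TraineeLevel.INTERMEDIATE    # layers 4-5
    return TraineeLevel.EXPERT              # layer 6
```

For example, under these assumptions a trainee whose highest completed layer is 4 would be classified as `TraineeLevel.INTERMEDIATE`.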

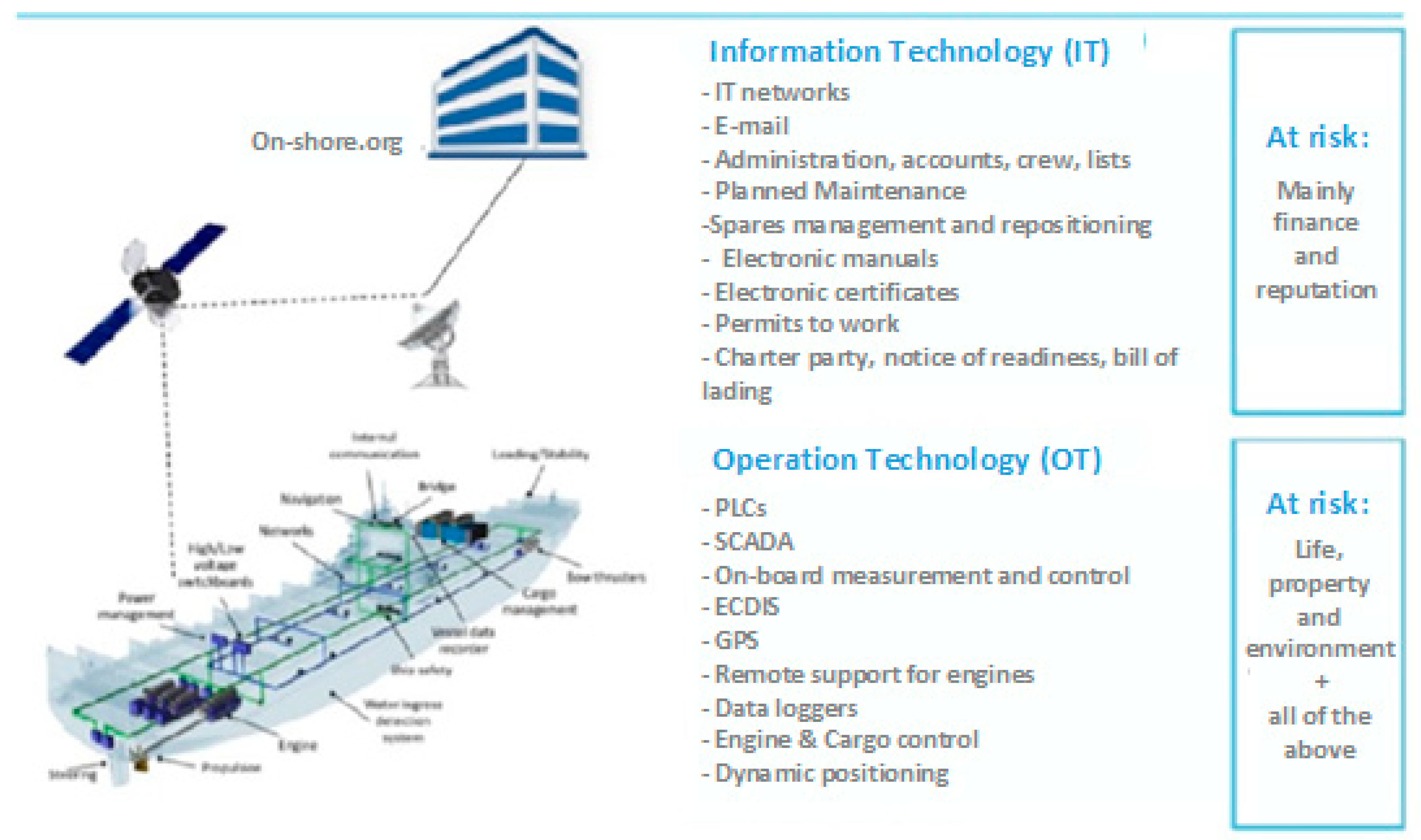

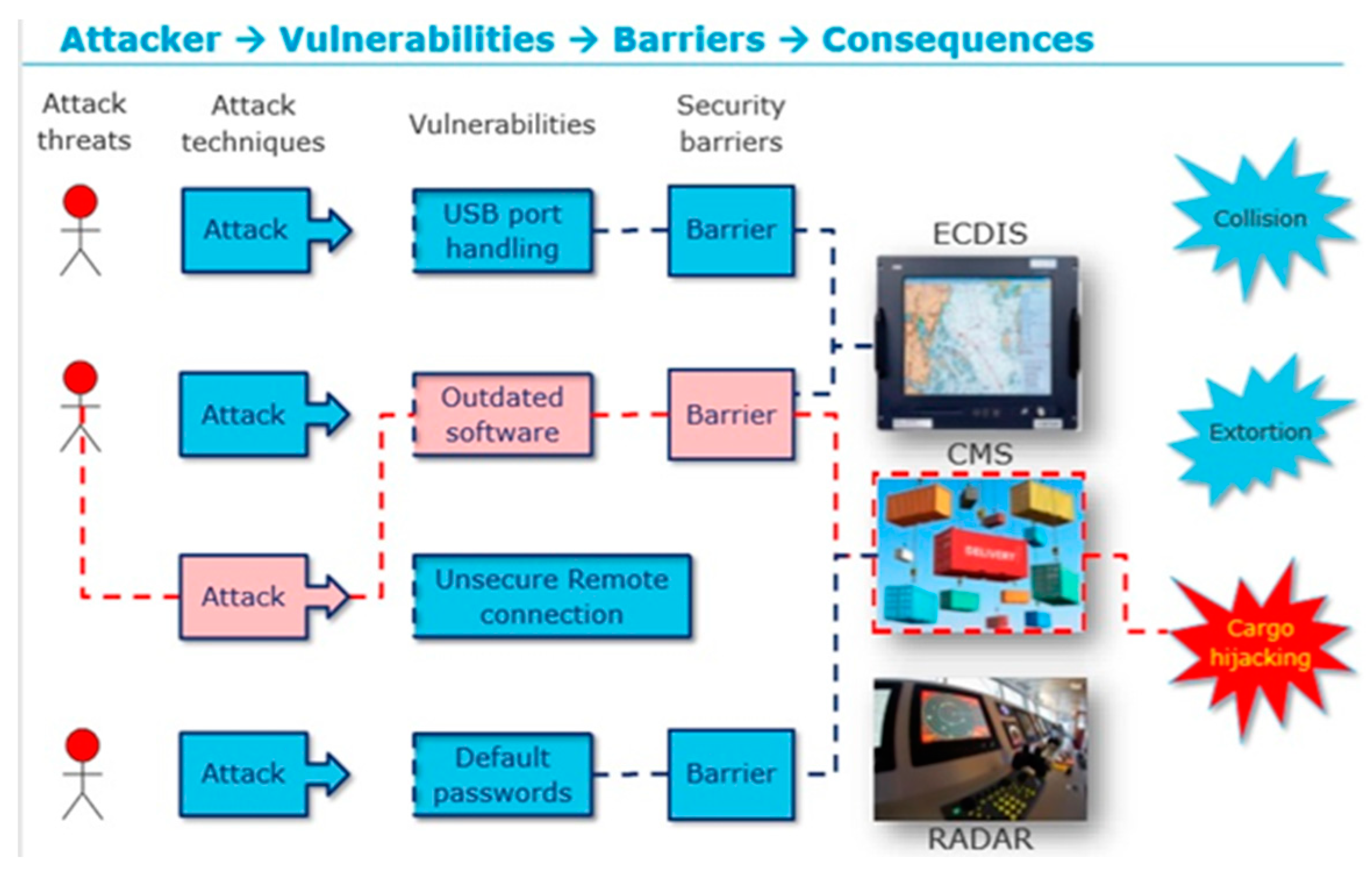

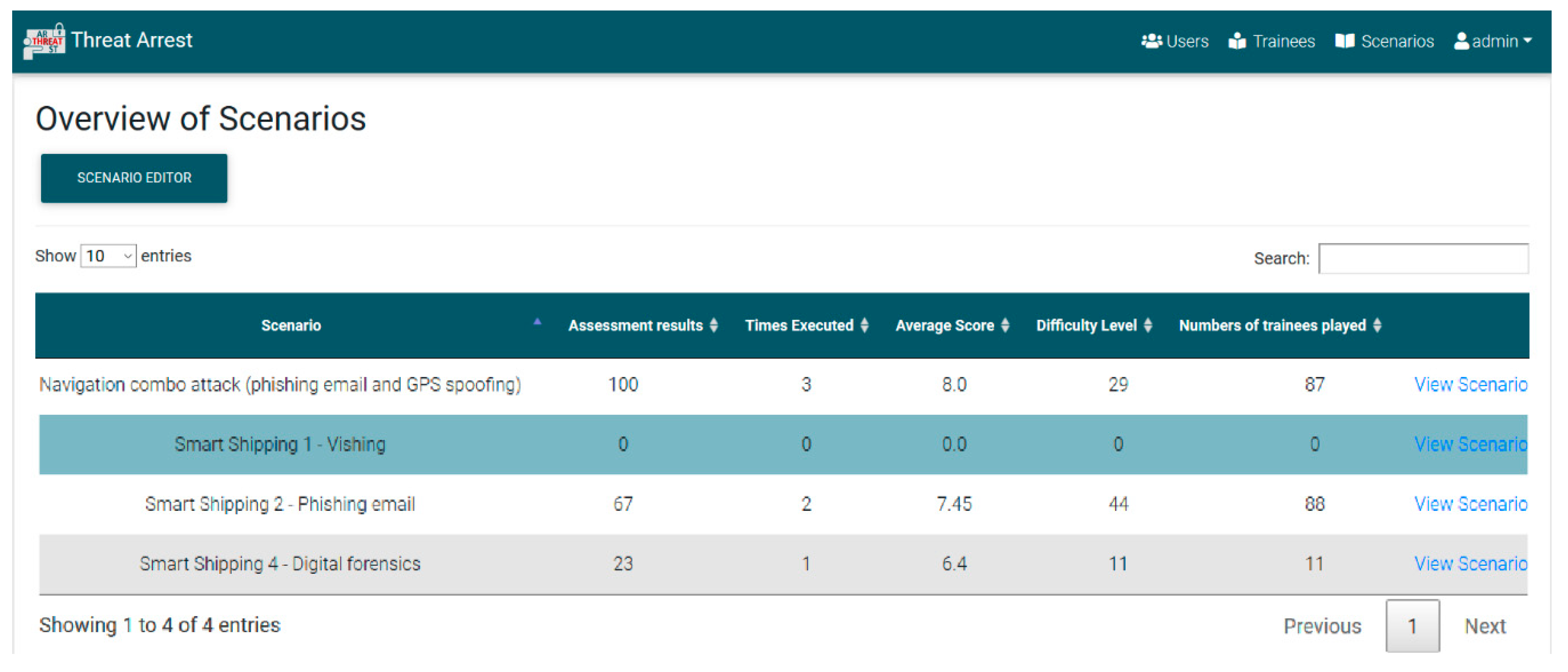

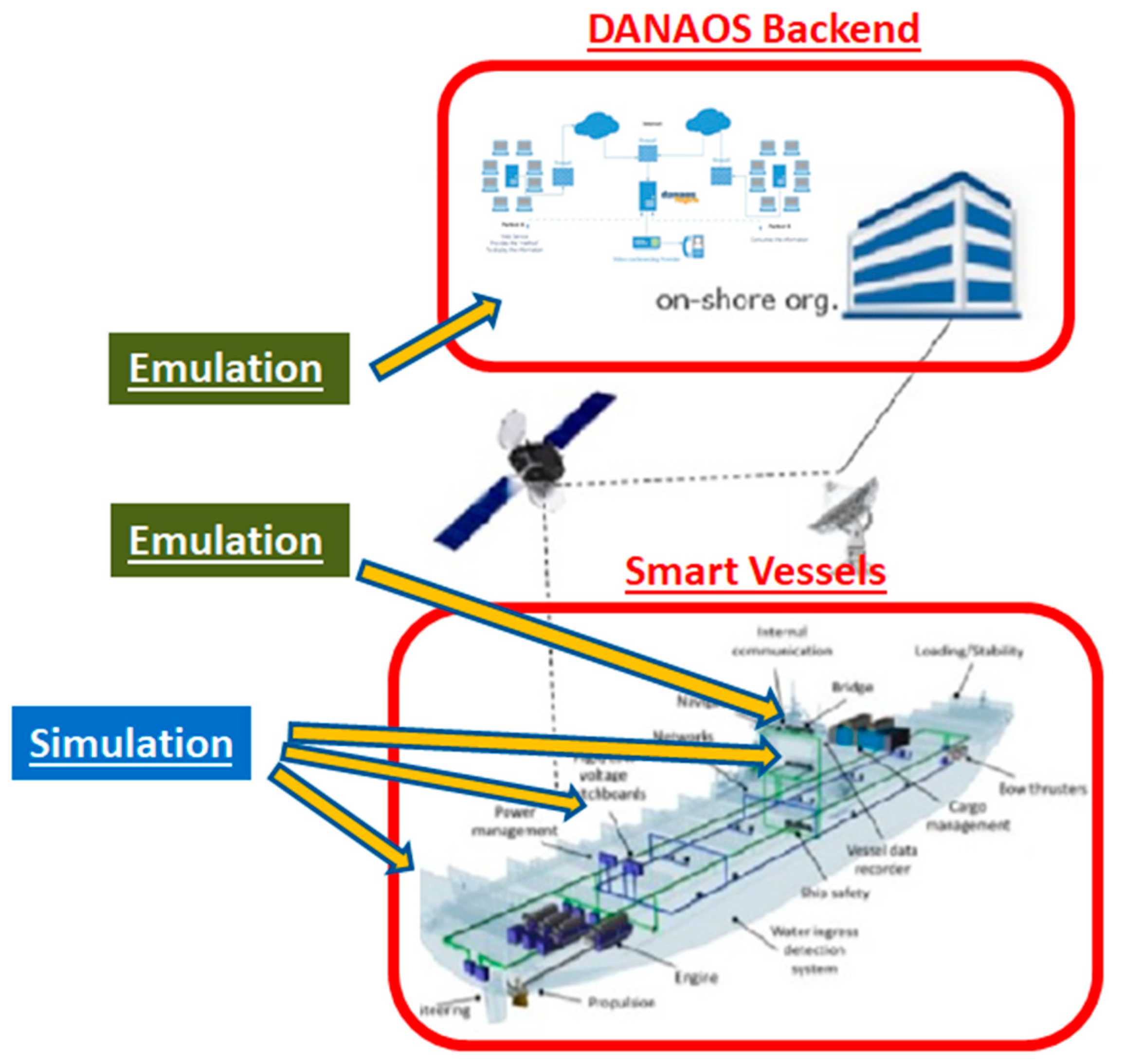

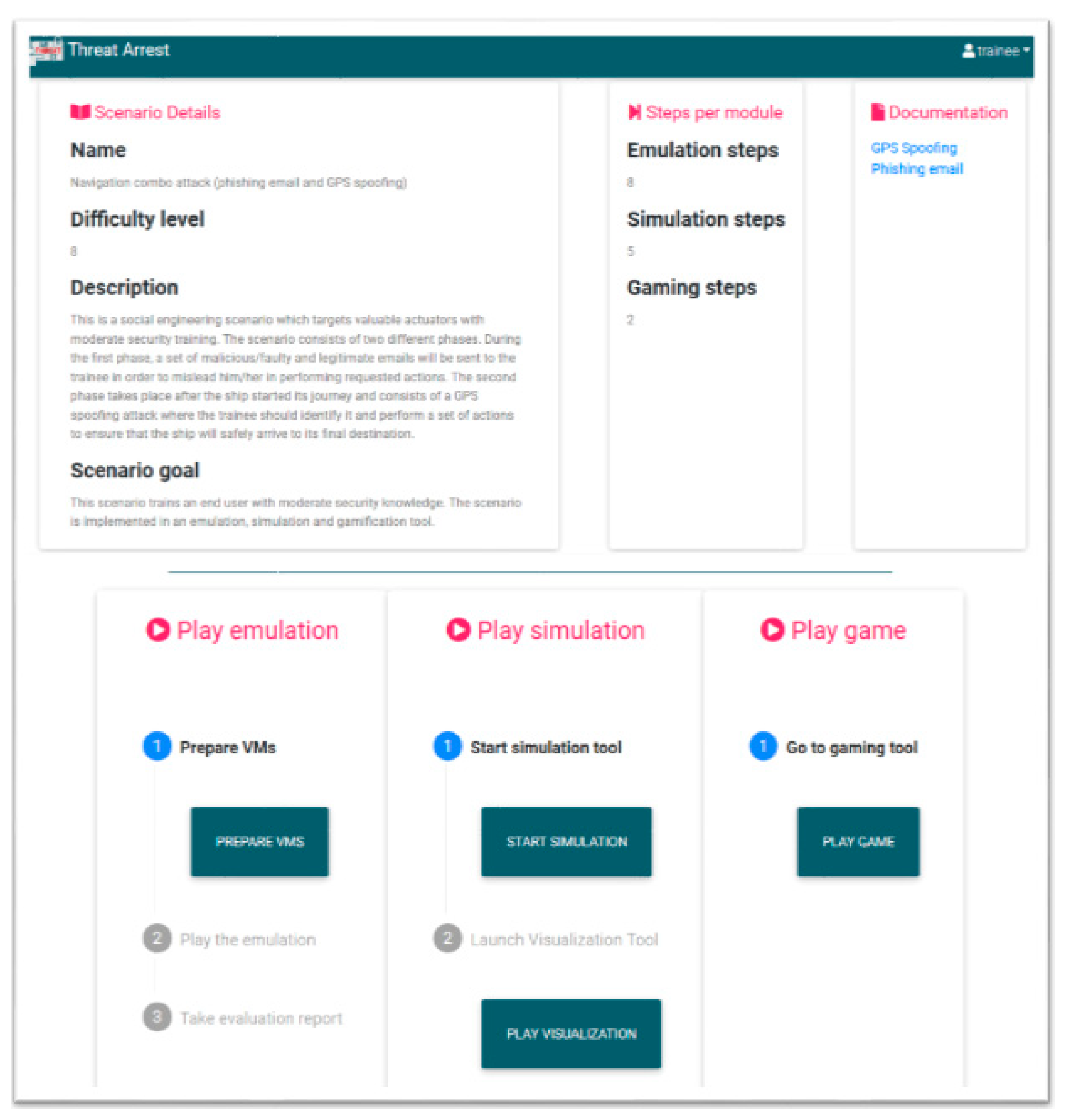

4. The Smart Shipping Use Case

4.1. Description of the Training Programme

4.2. Learning Outcome of the Training Module

- On-board personnel including the master, officers and crew.

- Shore-side personnel, who support the management and operation of the ship.

- Risks related to emails and how to behave in a safe manner (examples are phishing attacks where the user clicks on a link to a malicious site);

- Risks related to Internet usage, including social media, chat forums and cloud-based file storage where data movement is less controlled and monitored;

- Risks related to the use of personal devices (these devices may be missing security patches and controls, such as anti-virus, and may transfer the risk to the environment to which they are connected);

- Risks related to installing and maintaining software on company hardware using infected hardware (removable media) or software (infected package);

- Risks related to poor software and data security practices where no anti-virus checks or authenticity verifications are performed;

- Safeguarding user information, passwords and digital certificates;

- Cyber risks in relation to the physical presence of non-company personnel, e.g., where third-party technicians are left to work on equipment without supervision;

- Detecting suspicious activity or devices and how to report if a possible cyber incident is in progress (examples of this are strange connections that are not normally seen or someone plugging in an unknown device on the ship network);

- Awareness of the consequences or impact of cyber incidents on the safety and operations of the ship.

- An unresponsive or slow to respond system;

- Unexpected password changes or authorized users being locked out of a system;

- Unexpected errors in programs, including failure to run correctly or programs running unexpectedly;

- Unexpected or sudden changes in available disk space or memory;

- Emails being returned unexpectedly;

- Unexpected network connectivity difficulties;

- Frequent system crashes;

- Abnormal hard drive or processor activity;

- Unexpected changes to browser, software or user settings, including permissions.

4.3. Teaching and Learning Strategies

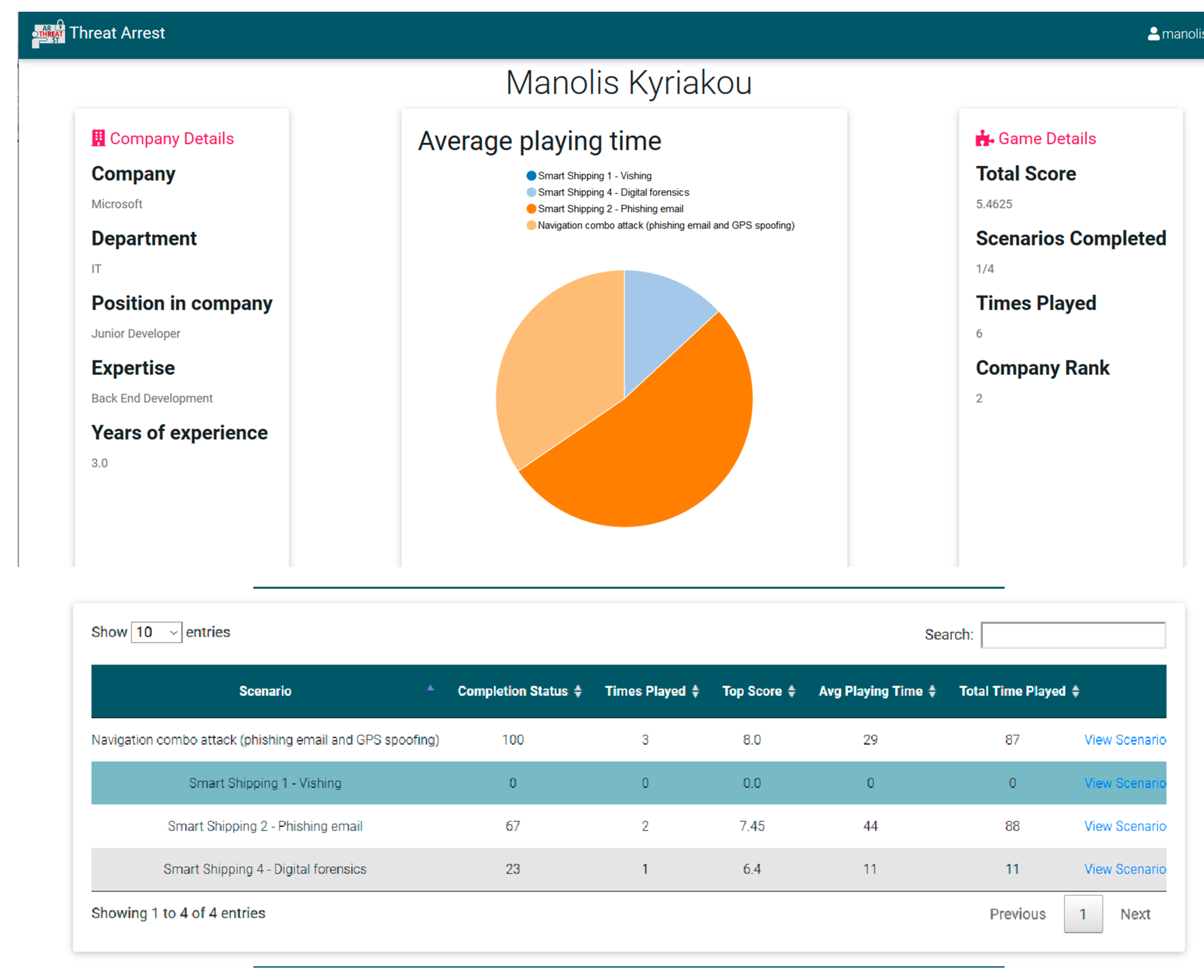

4.4. Student Participation

- On-board personnel including the master, officers and crew.

- Shore-side personnel, who support the management and operation of the ship.

4.5. Overview of Assessments and Training Levels

Application Example of the STRIDE Model and the Bloom Taxonomy

4.6. Study Plan (Learning Schedule)

4.6.1. Basic Training

- Has consumed the main teaching material;

- Has passed the training evaluation (e.g., exercises, exams) with an adequate score;

- Has passed a game, which contains all the involved learning topics of the learning unit, with an adequate score.
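The three criteria above can be read as a conjunction of conditions. The following is a minimal sketch under that reading. The names `UnitProgress`, `unit_completed`, and `PASS_MARK` are hypothetical, scores are assumed to be normalised to the range 0 to 1, and the threshold of 0.6 is an illustrative assumption, since the text only requires an "adequate score".

```python
from dataclasses import dataclass

# Hypothetical threshold: the source only speaks of an "adequate score".
PASS_MARK = 0.6


@dataclass
class UnitProgress:
    """A trainee's progress on one learning unit (scores assumed in [0, 1])."""
    material_consumed: bool
    evaluation_score: float  # exercises, exams
    game_score: float        # game covering all topics of the unit


def unit_completed(progress: UnitProgress, pass_mark: float = PASS_MARK) -> bool:
    """Return True only if all three criteria are met."""
    return (
        progress.material_consumed
        and progress.evaluation_score >= pass_mark
        and progress.game_score >= pass_mark
    )
```

Treating the criteria as jointly required means that a high game score cannot compensate for a failed evaluation. A programme that allows such compensation would need a different rule.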

4.6.2. Advanced Training

4.7. Resources Required to Complete the Training

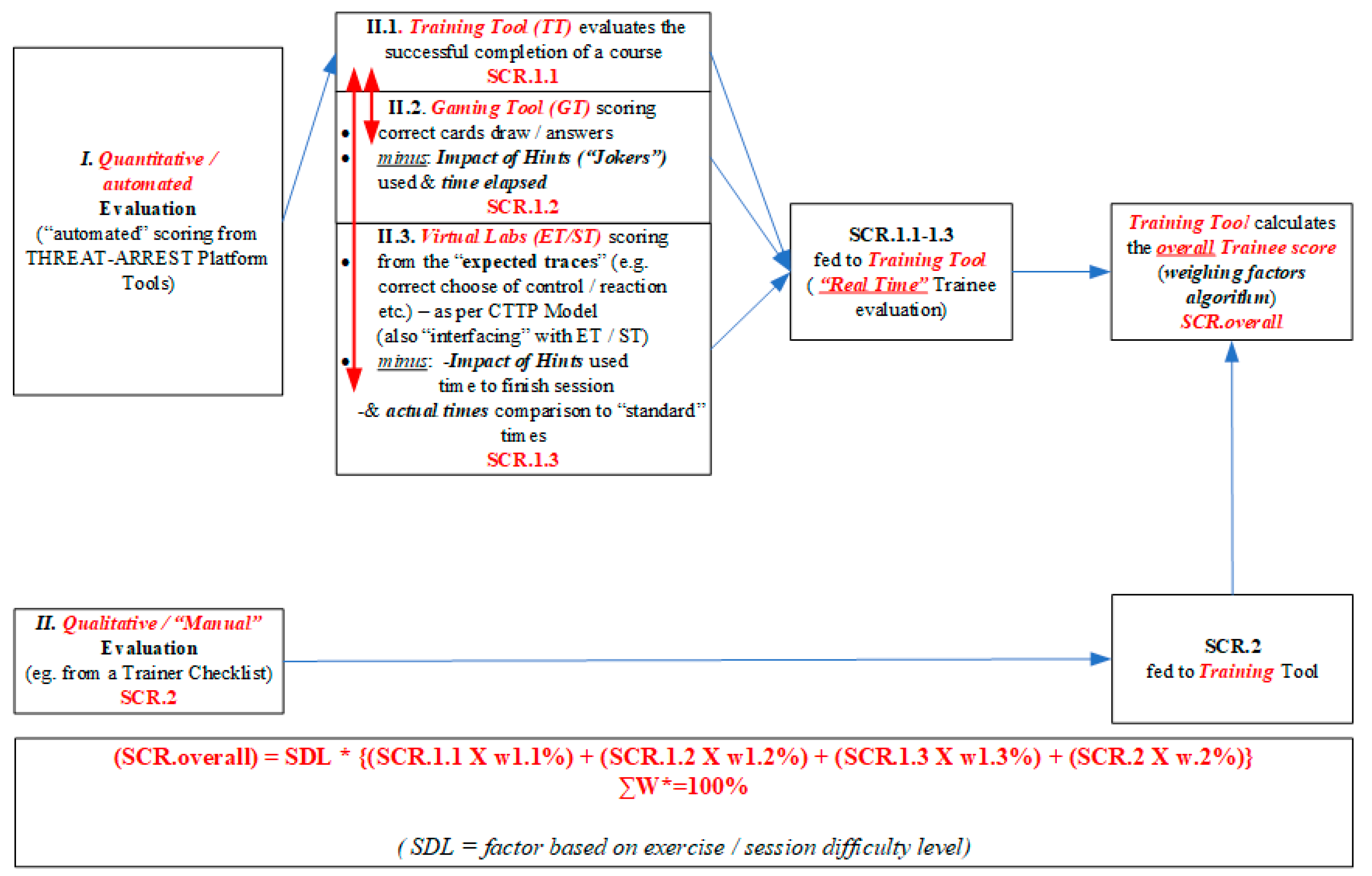

- A quantitative (automated) scoring, based on the THREAT-ARREST platform’s tools and the relevant information derived from the CTTP Models. It can be divided into three sub-scores, stemming from:

  - The Training Tool;

  - The Gamification Tool;

  - The virtual labs with the Emulation and Simulation Tools.

- A qualitative (manual) scoring, e.g., when the trainee answers a questionnaire.
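One possible way to combine these scores is sketched below. The source does not specify an aggregation rule, so everything here is an assumption made for illustration: the names `QuantitativeScores` and `overall_score`, the normalisation of all scores to the range 0 to 1, the equal weighting of the three quantitative sub-scores, and the 70/30 split between the quantitative and qualitative parts.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class QuantitativeScores:
    """Automated sub-scores, each assumed to be normalised to [0, 1]."""
    training_tool: float
    gamification_tool: float
    virtual_labs: float  # emulation and simulation exercises


def overall_score(
    quantitative: QuantitativeScores,
    qualitative: Optional[float] = None,
    quantitative_weight: float = 0.7,  # hypothetical weight, not from the source
) -> float:
    """Combine the automated sub-scores with an optional manual score."""
    # Assumption: the three automated sub-scores contribute equally.
    automated = (
        quantitative.training_tool
        + quantitative.gamification_tool
        + quantitative.virtual_labs
    ) / 3
    if qualitative is None:
        # No questionnaire was answered: rely on the automated part alone.
        return automated
    return quantitative_weight * automated + (1 - quantitative_weight) * qualitative
```

Keeping the manual score optional reflects that a questionnaire is given only as an example of when qualitative scoring applies.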

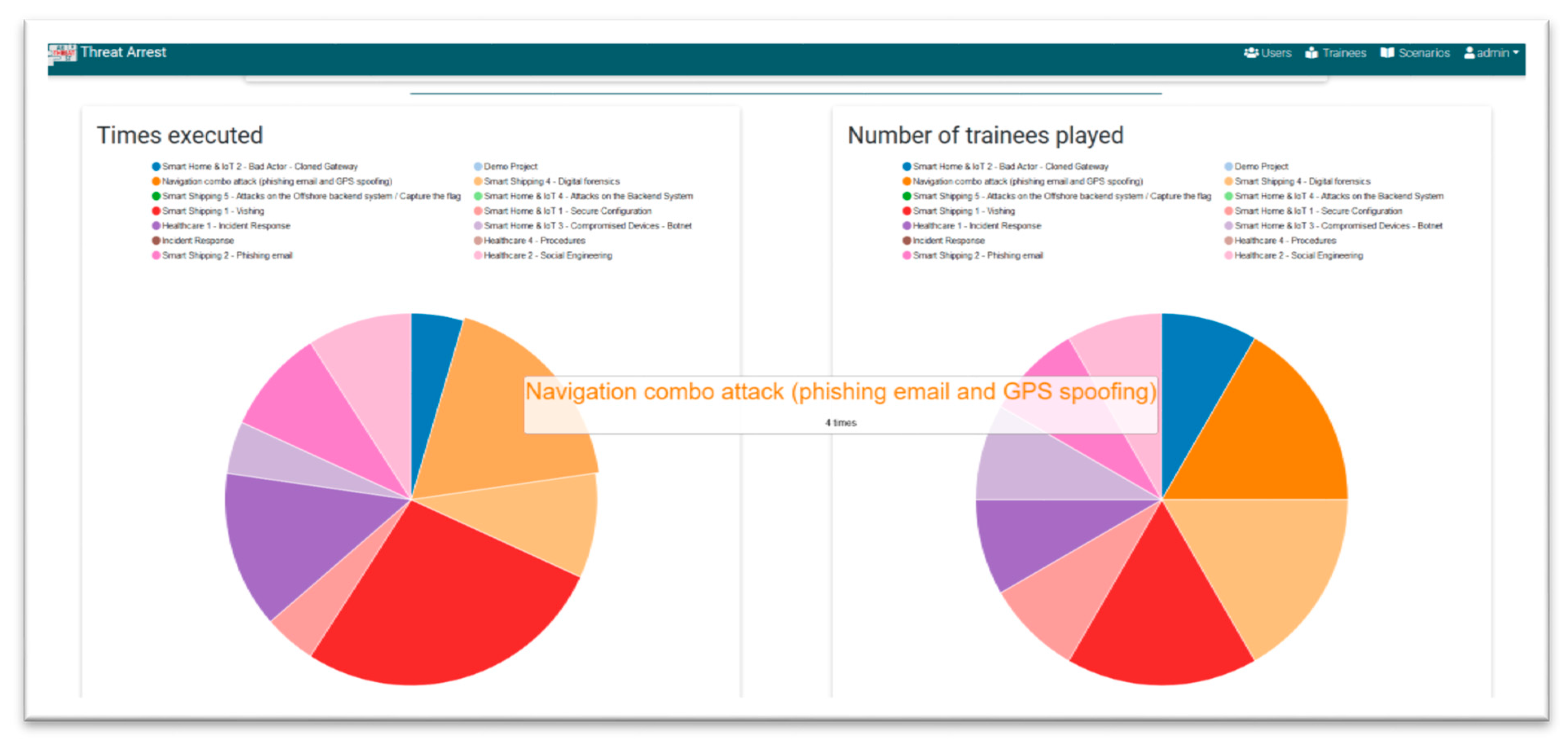

4.8. Benchmarking of the Module

5. Discussion

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Lin, J.; Yu, W.; Zhang, N.; Yang, X.; Zhang, H.; Zhao, W. A survey of Internet of Things: Architecture, enabling technologies, security and privacy, and applications. IEEE Internet Things J. 2017, 4, 1125–1142. [Google Scholar] [CrossRef]

- Hatzivasilis, G.; Fysarakis, K.; Soultatos, O.; Askoxylakis, I.; Papaefstathiou, I.; Demetriou, G. The Industrial Internet of Things as an enabler for a Circular Economy Hy-LP: A novel IIoT Protocol, evaluated on a Wind Park’s SDN/NFV-enabled 5G Industrial Network. In Computer Communications—Special Issue on Energy-Aware Design for Sustainable 5G Networks; Elsevier: Amsterdam, The Netherlands, 2018; Volume 119, pp. 127–137. [Google Scholar]

- Habibi, J.; Midi, D.; Mudgerikar, A.; Bertino, E. Heimdall: Mitigating the Internet of Insecure Things. IEEE Internet Things J. 2017, 4, 968–978. [Google Scholar] [CrossRef]

- Hatzivasilis, G.; Soultatos, O.; Ioannidis, S.; Verikoukis, C.; Demetriou, G.; Tsatsoulis, C. Review of Security and Privacy for the Internet of Medical Things (IoMT). In Proceedings of the 1st International Workshop on Smart Circular Economy (SmaCE), Santorini Island, Greece, 30 May 2019; pp. 1–8. [Google Scholar]

- Hatzivasilis, G.; Soultatos, O.; Ioannidis, S.; Spanoudakis, G.; Katos, V.; Demetriou, G. MobileTrust: Secure Knowledge Integration in VANETs. ACM Trans. Cyber-Phys. Syst. 2020, 4, 1–25. [Google Scholar] [CrossRef]

- Khandelwal, S. United airlines hacked by sophisticated hacking group. The Hacker News, 30 July 2015. [Google Scholar]

- Hirschfeld, J.D. Hacking of government computers exposed 21.5 million people. The New York Times, 9 July 2015. [Google Scholar]

- Santa, I. A Users’ Guide: How to Raise Information Security Awareness; ENISA: Heraklion, Greece, 2010; pp. 1–140. [Google Scholar]

- Manifavas, C.; Fysarakis, K.; Rantos, K.; Hatzivasilis, G. DSAPE—Dynamic Security Awareness Program Evaluation. In International Conference on Human Aspects of Information Security, Privacy, and Trust; Springer: Cham, Switzerland, 2014; pp. 258–269. [Google Scholar]

- Kish, D.; Carpenter, P. Forecast Snapshot: Security Awareness Computer-Based Training, Worldwide. 2017. Gartner Research, ID G00324277, March 2017. Available online: https://www.gartner.com/en/documents/3629840/forecast-snapshot-security-awareness-computer-based-trai (accessed on 24 July 2020).

- SANS: Online Cyber Security Training. 2000–2020. Available online: https://www.sans.org/online-security-training/ (accessed on 24 July 2020).

- CYBERINTERNACADEMY: Complete Cybersecurity Course Review on Cyberinernacademy. 2017–2020. Available online: https://www.cyberinternacademy.com/complete-cybersecurity-course-guide-review/ (accessed on 24 July 2020).

- StationX: Online Cyber Security & Hacking Courses. 1996–2020. Available online: https://www.stationx.net/ (accessed on 24 July 2020).

- Cybrary: Develop Security Skills. 2016–2020. Available online: https://www.cybrary.it/ (accessed on 24 July 2020).

- AwareGO: Security Awareness Training. 2011–2020. Available online: https://www.awarego.com/ (accessed on 24 July 2020).

- BeOne Development: Security Awareness Training. 2013–2020. Available online: https://www.beonedevelopment.com/en/security-awareness/ (accessed on 24 July 2020).

- ISACA: CyberSecurity Nexus (CSX) Training Platform. 1967–2020. Available online: https://cybersecurity.isaca.org/csx-certifications/csx-training-platform (accessed on 24 July 2020).

- Kaspersky: Kaspersky Security Awareness. 1997–2020. Available online: https://www.kaspersky.com/enterprise-security/security-awareness (accessed on 24 July 2020).

- CyberBit: Cyber Security Training Platform. 2019–2020. Available online: https://www.cyberbit.com/blog/security-training/cyber-security-training-platform/ (accessed on 24 July 2020).

- Bloom, B. Taxonomy of educational objectives: The classification of educational goals. In Handbook I: Cognitive Domain; David McKay Company: New York, NY, USA, 1956. [Google Scholar]

- Johnstone, M.N. Threat modelling with STRIDE and UML. In Proceedings of the 8th Australian Information Security Management Conference (AISM), Perth Western, Australia, 30 November 2010; pp. 18–27. [Google Scholar]

- Biggs, J. Teaching for Quality Learning at University: What the Student Does, 4th ed.; Open University Press: Maidenhead, UK, 2011; pp. 1–416. [Google Scholar]

- Sims, R. R: Kolb’s Experiential Learning Theory: A Framework for Assessing Person-Job Interaction. Acad. Manag. Rev. 1983, 8, 501–508. [Google Scholar] [CrossRef]

- Othonas, S.; Fysarakis, K.; Spanoudakis, G.; Koshutanski, H.; Damiani, E.; Beckers, K.; Wortmann, D.; Bravos, G.; Ioannidis, M. The TREAT-ARREST Cyber-Security Training Platform. In Proceedings of the 1st Model-driven Simulation and Training Environments for Cybersecurity (MSTEC), Luxembourg, 27 September 2019. [Google Scholar]

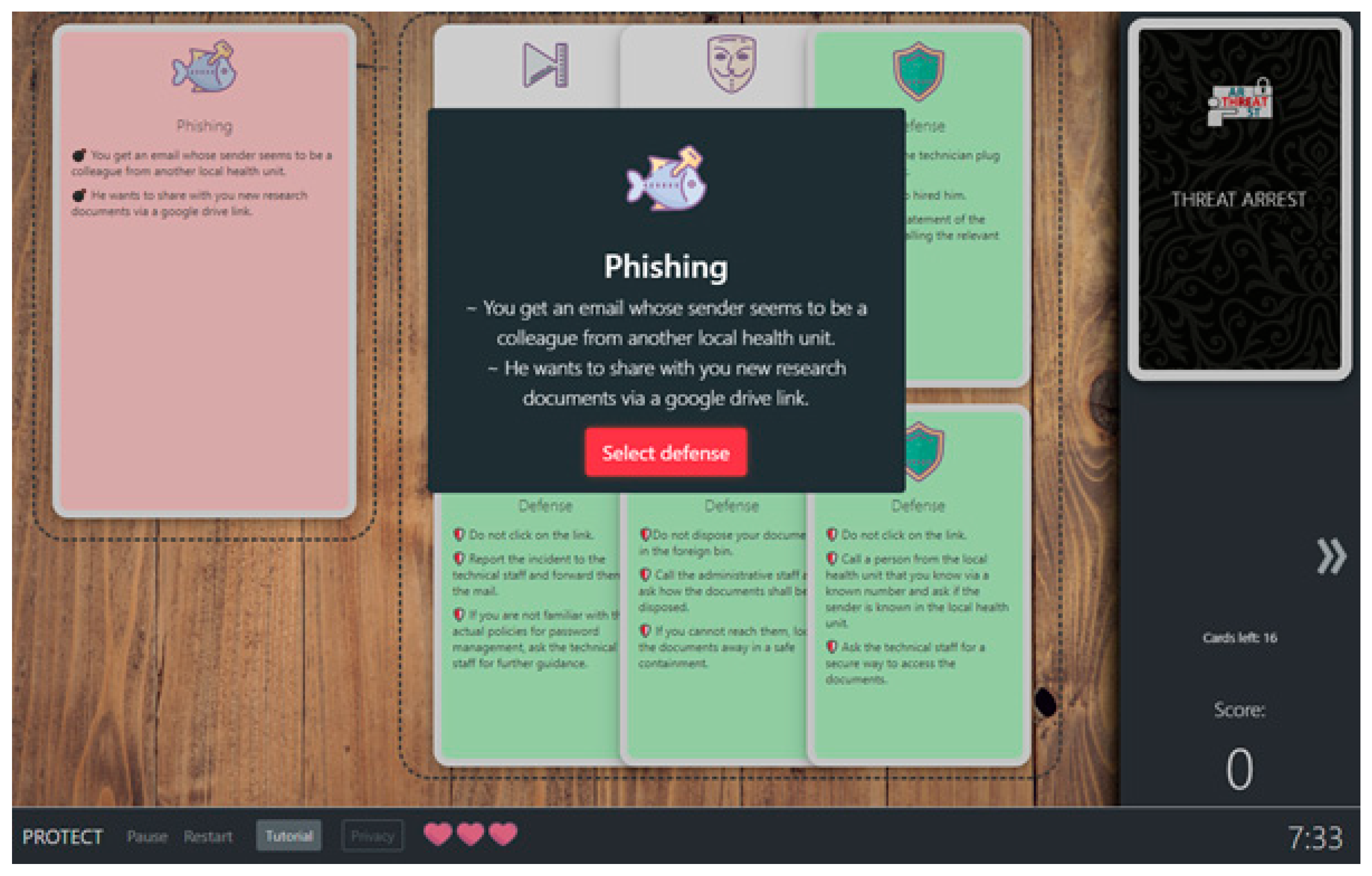

- Goeke, L.; Quintanar, A.; Beckers, K.; Pape, S. PROTECT—An Easy Configurable Serious Game to Train Employees Against Social Engineering Attacks. In Proceedings of the 1st Model-Driven Simulation and Training Environments for Cybersecurity (MSTEC), Luxembourg, 27 September 2019. [Google Scholar]

- Beckers, K.; Pape, S.; Fries, V. HATCH: Hack and trick capricious humans—A serious game on social engineering. In Proceedings of the 30th International BCS Human Computer Interaction (HCI) Conference Fusion, Bournemouth, UK, 11–15 July 2016; pp. 1–3. [Google Scholar]

- Braghin, C.; Cimato, S.; Damiani, E.; Frati, F.; Mauri, L.; Riccobene, E. A model driven approach for cyber security scenarios deployment. In Proceedings of the 1st Model-Driven Simulation and Training Environments for Cybersecurity (MSTEC), Luxembourg, 27 September 2019. [Google Scholar]

- Somarakis, I.; Smyrlis, M.; Fysarakis, K.; Spanoudakis, G. Model-driven Cyber Range Training—The Cyber Security Assurance Perspective. In Proceedings of the 1st Model-Driven Simulation and Training Environments for Cybersecurity (MSTEC), Luxembourg, 27 September 2019. [Google Scholar]

- Hautamäki, J.; Karjalainen, M.; Hämäläinen, T.; Häkkinen, P. Cyber security exercise: Literature review to pedagogical methodology. 13th annual International Technology. In Proceedings of the Education and Development Conference, Valencia, Spain, 11–13 March 2019; pp. 3893–3898. [Google Scholar]

- McDaniel, L.; Talvi, E.; Hay, B. Capture the Flag as Cyber Security Introduction. In Proceedings of the 49th Hawaii International Conference on System Sciences (HICSS), Koloa, HI, USA, 5–8 January 2016; pp. 5479–5486. [Google Scholar]

- James, J.E.; Morsey, C.; Phillips, J. Cybersecurity education: A holistic approach to teaching security. In Issues in Information Systems; Maria, E.C., Ed.; IACIS: Leesburg, VA, USA, 2016; Volume 17, pp. 150–161. [Google Scholar]

- ISO 22398: Societal Security—Guidelines for Exercises. Available online: https://www.iso.org/standard/50294.html (accessed on 24 July 2020).

- Arabo, A.; Serpell, M. Pedagogical Approach to Effective Cybersecurity Teaching. In Transactions on Edutainment XV; Springer: Berlin/Heidelberg, Germany, 2019; Volume 11345, pp. 129–140. [Google Scholar]

- Freitas, S.; Oliver, M. How can exploratory learning with games and simulations within the curriculum be most effectively evaluated? Comput. Educ. 2006, 46, 249–264. [Google Scholar] [CrossRef]

- Israel, M.; Lash, T. From classroom lessons to exploratory learning progressions: Mathematics + computational thinking. Interact. Learn. Environ. 2019, 28, 362–382. [Google Scholar] [CrossRef]

- Mann, L.; Chang, R.L.; Chandrasekaran, S.; Coddington, A.; Daniel, S.; Cook, E.; Crossin, E.; Cosson, B.; Turner, J.; Mazzurco, A.; et al. From problem-based learning to practice-based education: A framework for shaping future engineers. Eur. J. Eng. Educ. 2020, 1–21. [Google Scholar] [CrossRef]

- Scheponik, T.; Sherman, A.T.; Delatte, D.; Phatak, D.; Oliva, L.; Thompson, J.; Herman, G.L. How Students Reason about Cybersecurity Concepts. In Proceedings of the Frontiers in Education Conference (FIE), Erie, PA, USA, 12–15 October 2016; pp. 1–5. [Google Scholar]

- Ericsson, K.A. Deliberate practice and acquisition of expert performance: A general overview. Acad. Emerg. Med. 2008, 15, 988–994. [Google Scholar] [CrossRef] [PubMed]

- Miller, G.E. The assessment of clinical skills/competence/performance. Acad. Med. 1990, 65, 63–67. [Google Scholar] [CrossRef] [PubMed]

- Karjalainen, M.; Kokkonen, T.; Puuska, S. Pedagogical Aspects of Cyber Security Exercises. In Proceedings of the IEEE European Symposium on Security and Privacy Workshops, Stockholm, Sweden, 17–19 June 2019; pp. 103–108. [Google Scholar]

- Kick, J. Cyber Exercise Playbook. The MITRE Corporation. Available online: https://www.mitre.org/sites/default/files/publications/pr_14-3929-cyber-exercise-playbook.pdf (accessed on 24 July 2020).

- Lif, P.; Sommestad, T.; Granasen, D. Development and evaluation of information elements for simplified cyber-incident reports. In Proceedings of the International Conference On Cyber Situational Awareness, Data Analytics and Assessment (Cyber SA), Glasgow, UK, 11–12 June 2018; pp. 1–10. [Google Scholar]

- Said, S.E. Pedagogical Best Practices in Higher Education National Centers of Academic Excellence/Cyber Defense Centers of Academic Excellence in Cyber Defense. Ph.D. Thesis, Union University, Tennessee, TN, USA, May 2018. [Google Scholar]

- Athauda, R.; AlKhaldi, T.; Pranata, I.; Conway, D.; Frank, C.; Thorne, W.; Dean, R. Design of a Technology-Enhanced Pedagogical Framework for a Systems and Networking Administration course incorporating a Virtual Laboratory. In Proceedings of the Frontiers in Education Conference (FIE), San Jose, CA, USA, 3–6 October 2018; pp. 1–5. [Google Scholar]

- Pohl, M. Learning to Think—Thinking to Learn: Models and Strategies to Develop a Classroom Culture of Thinking, 1st ed.; Hawker Brownlow Education: Cheltenham, Australasia, 2000; pp. 1–98. [Google Scholar]

- Švábenský, V.; Vykopal, J.; Čermák, M.; Laštovička, M. Enhancing cybersecurity skills by creating serious games. In Proceedings of the 23rd Annual ACM Conference on Innovation and Technology in Computer Science Education (ITiCSE), Larnaca, Cyprus, 2–4 July 2018; pp. 194–199. [Google Scholar]

- Jin, G.; Tu, M. Evaluation of Game-Based Learning in Cybersecurity Education for High School Students. J. Educ. Learn. 2018, 12, 150–158. [Google Scholar] [CrossRef]

- Taylor-Jackson, J.; McAlaney, J.; Foster, J.; Bello, A.; Maurushat, A.; Dale, J. Incorporating Psychology into Cyber Security Education: A Pedagogical Approach. In Proceedings of the AsiaUSEC’20, Financial Cryptography and Data Security (FC), Sabah, Malaysia, 14 February 2020; pp. 1–15. [Google Scholar]

- Shah, V.; Kumar, A.; Smart, K. Moving Forward by Looking Backward: Embracing Pedagogical Principles to Develop an Innovative MSIS Program. J. Inf. Syst. Educ. 2018, 29, 139–156. [Google Scholar]

- Knapp, K.J.; Maurer, C.; Plachkinove, M. Maintaining a Cybersecurity Curriculum: Professional Certifications as Valuable Guidance. J. Inf. Syst. Educ. 2017, 28, 101–114. [Google Scholar]

- Shafiq, H.; Kamal, A.; Ahmad, S.; Rasool, G.; Iqbal, S. Threat modelling methodologies: A survey. Sci. Int. 2014, 26, 1607–1609. [Google Scholar]

- Anderson, L.W.; Krathwohl, D.R.; Airasian, P.W.; Cruikshank, K.A.; Mayer, R.E.; Pintrich, P.R.; Raths, J.; Wittrock, M.C. A Taxonomy for Learning, Teaching, and Assessing: A Revision of Bloom’s Taxonomy of Educational Objectives; Reference and Research Book News: Dublin, OH, USA, 2001; Volume 16, pp. 1–336. [Google Scholar]

- Bird, J.; Kim, F. Survey on application security programs and practices. In A SANS Analyst Survey; SANS Institute: Bethesda, MD, USA, 2014; pp. 1–24. [Google Scholar]

- DANAOS Shipping Company: DANAOSone Platform. DANAOS Management Consultants S.A. Available online: https://web2.danaos.gr/maritime-software-solutions/danaosone-platform/ (accessed on 24 July 2020).

- Trustwave. Security Testing Practices and Priorities: An Osterman Research Survey Report; Osterman Research: Seattle, WA, USA, 2016; pp. 1–15. [Google Scholar]

- IMO. SOLAS Chapter XI-2—International Ship and Port Facility Security Code (ISPS Code); International Maritime Organization (IMO): London, UK, 2004. [Google Scholar]

- CIS: Center of Internet Security. Available online: https://www.cisecurity.org/ (accessed on 24 July 2020).

- ESCO: Results of Simulation-Based Competence Development Survey. European Cyber Security Organisation, 2019–2020 Report. Available online: https://echonetwork.eu/report-results-of-simulation-based-competence-development-survey/ (accessed on 24 July 2020).

- Aaltola, K.; Taitto, P. Utilising Experiential and Organizational Learning Theories to Improve Human Performance in Cyber Training. Inf. Secur. Int. J. 2019, 43, 123–133. [Google Scholar] [CrossRef]

| Feature | A | B | C | D | E | F |
|---|---|---|---|---|---|---|
| Automatic security vulnerability analysis of a pilot system | Y | N | N | N | N | N |
| Multi-layer modelling | Y | P | Y | Y | Y | P |
| Continuous security assurance | Y | N | N | Y | Y | N |
| Serious gaming | Y | N | Y | Y | N | P |
| Realistic simulation of cyber systems | Y | P | Y | Y | Y | N |
| Combination of emulated and real equipment | Y | N | P | Y | N | N |
| Programme runtime evaluation | Y | N | N | Y | Y | Y |
| Programme runtime adaptation | Y | N | Y | Y | N | P |

| # | Description | Trainee Type | Security Expertise | Platform Tools |
|---|---|---|---|---|
| 1 | Navigation combo attack (phishing email and GPS spoofing) | Captain | Highly privileged actor with low/moderate security knowledge | |
| 2 | Vishing (social engineering) | Crew/Offshore officers | Non-security actors with low access privileges | |
| 3 | Attacks on the Offshore system | IT Administrators of the shipping company | Highly privileged actors with moderate/high security knowledge | |
| 4 | Digital Forensics | The organization’s security engineers | Security experts | |

| STRIDE Property | Bloom Taxonomy Layer | Description |
|---|---|---|
| Tampering/Integrity | Remembering | Introductory lesson on cryptography |
| | Understanding | Exercises with the educational CrypTool 2 |
| | Applying | Practice with PGP (sign/verify emails) |
| | Analyzing/Evaluating | Emulated scenario where the trainee has to verify emails’ integrity with PGP and send signed responses to the back office |
| | Creating | Act as the back office employee or the attacker and send the emails of the emulated scenario to other trainees |
| Spoofing/Authentication | Remembering | Lesson on social engineering and phishing attacks |
| | Understanding | Review of actual phishing email examples and play a tailored PROTECT game |
| | Applying | Classify email examples as legitimate or malicious |
| | Analyzing/Evaluating | Emulated scenario where the trainee must audit emails (e.g., the sender’s email address, the email’s content, PGP verification) and justify whether they are legitimate, faulty, or malicious |
| | Creating | Act as the back office employee or the attacker and send the emails of the emulated scenario to other trainees |
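The table above pairs each STRIDE property with an ordered sequence of Bloom layers, which suggests a simple way of selecting a trainee's next activity. The sketch below illustrates that idea only. The names `BLOOM_ORDER`, `LEARNING_PATHS`, and `next_activity` are hypothetical, the activity descriptions are abbreviated from the table, and the strictly sequential progression through the layers is an assumption.

```python
from typing import Optional, Set, Tuple

# Bloom layers in the order they appear in the table.
BLOOM_ORDER = [
    "Remembering",
    "Understanding",
    "Applying",
    "Analyzing/Evaluating",
    "Creating",
]

# Abbreviated activity descriptions, keyed by STRIDE property and Bloom layer.
LEARNING_PATHS = {
    "Tampering/Integrity": {
        "Remembering": "Introductory cryptography lesson",
        "Understanding": "Exercises with an educational cryptography tool",
        "Applying": "Practice with PGP (sign/verify emails)",
        "Analyzing/Evaluating": "Emulated scenario: verify email integrity with PGP",
        "Creating": "Play the back office employee or the attacker",
    },
    "Spoofing/Authentication": {
        "Remembering": "Lesson on social engineering and phishing",
        "Understanding": "Review phishing examples and play a PROTECT game",
        "Applying": "Classify emails as legitimate or malicious",
        "Analyzing/Evaluating": "Emulated scenario: audit and justify emails",
        "Creating": "Play the back office employee or the attacker",
    },
}


def next_activity(
    stride_property: str, completed_layers: Set[str]
) -> Optional[Tuple[str, str]]:
    """Return the first (layer, activity) not yet completed, or None if done."""
    path = LEARNING_PATHS[stride_property]
    for layer in BLOOM_ORDER:
        if layer not in completed_layers:
            return layer, path[layer]
    return None
```

For a trainee who has completed only the "Remembering" layer of the "Tampering/Integrity" path, this sketch would select the "Understanding" activity next.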

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Hatzivasilis, G.; Ioannidis, S.; Smyrlis, M.; Spanoudakis, G.; Frati, F.; Goeke, L.; Hildebrandt, T.; Tsakirakis, G.; Oikonomou, F.; Leftheriotis, G.; et al. Modern Aspects of Cyber-Security Training and Continuous Adaptation of Programmes to Trainees. Appl. Sci. 2020, 10, 5702. https://doi.org/10.3390/app10165702
