1. Introduction

Dams can bring significant socio-economic benefits under safe operating conditions. However, a dam accident can cause a catastrophic disaster [1,2,3]. In fact, most dam accidents do not occur suddenly; they develop through a gradual process in which quantitative changes accumulate into a qualitative change [4]. If suitable prediction models are established for dam monitoring data and the data are analyzed in a timely manner, potential problems in the structural behavior of a dam can be identified early, and accidents can thus be avoided.

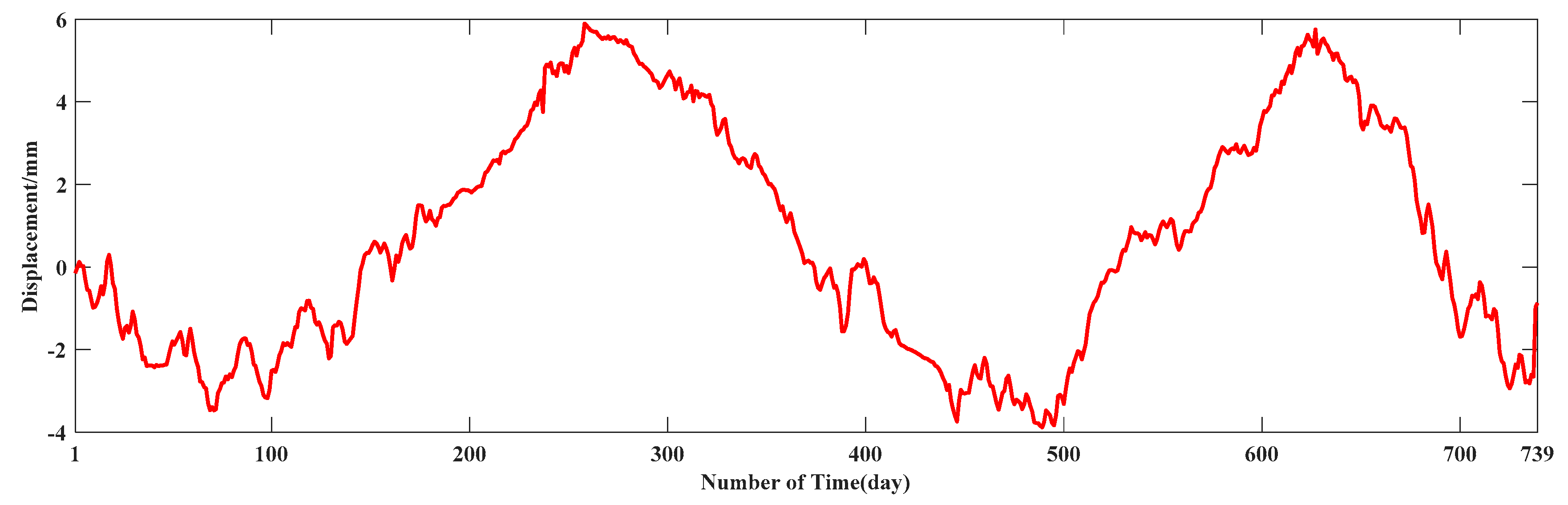

As a key indicator in dam safety monitoring, deformation monitoring data objectively reflect the structural state and safety condition of a dam and are one of the important bases for assessing the safety of dam projects [5]. During the actual service of a dam, deformation monitoring data usually form complex, nonstationary, and nonlinear time series. Accurately predicting future dam deformation (DD) from historical deformation monitoring data is therefore an important research topic [6,7]. The models commonly used for DD prediction are deterministic models, statistical models, neural network models, and hybrid models [7,8,9,10,11,12,13].

The deterministic models explain DD based on the physical laws of loads, material properties, and stress–strain relationships [

6,

14], requiring as accurate as possible material parameters, geometry, and operating conditions of the dam and its foundation, which are difficult to achieve in actual engineering [

15,

16]. In contrast, a statistical model is more easily implemented [

13]. It is a more commonly used data-driven approach for DD monitoring [

17]. Although the statistical model is simple and effective, it cannot capture the nonlinear characteristics of the DD time series, thus affecting the accuracy of deformation prediction [

14]. In comparison, a neural network model has unique advantages in dealing with nonlinear problems [

18,

19]. In the field of DD prediction, the neural network models have good and robust prediction performance. This so-called ‘black box’ method can build a data-driven model based on the historical deformation data of dams, thereby achieving high-precision prediction of deformation without knowing detailed environmental information, avoiding complex data handling and modeling processes. Among them, the artificial neural networks (ANN) [

20] and support vector machines (SVM) [

21] are the most commonly used methods for the nonlinear problems. However, these models have some limitations [

5]. An ANN model based on gradient descent requires multiple iterations to correct the relevant parameters, which is a time-consuming process and the model tends to fall into local minima. Although an SVM overcomes the shortcomings of the ANN model, its kernel function parameters are difficult to choose [

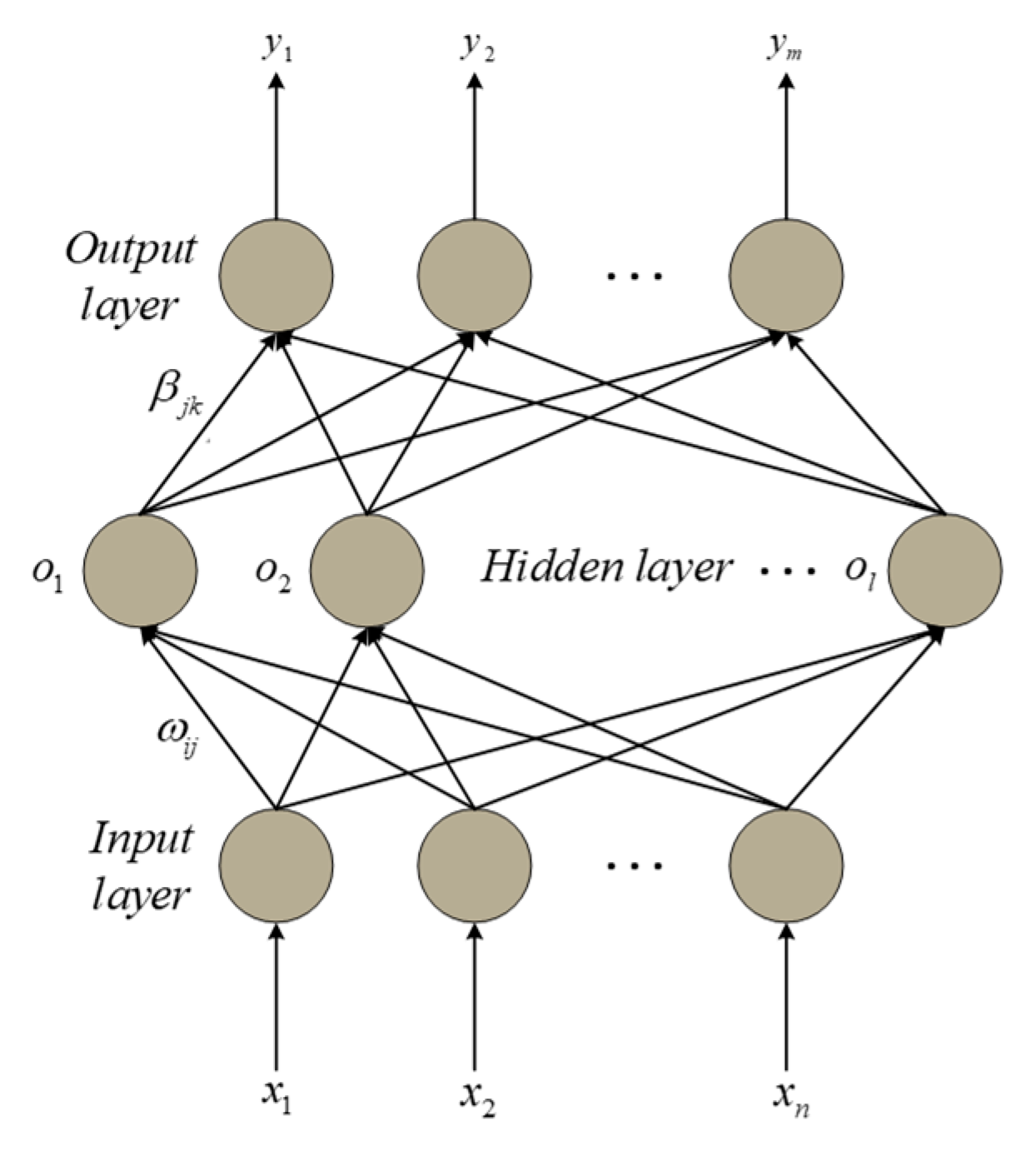

1]. Therefore, in this paper, the extreme learning machine (ELM) [

22,

23,

24] with efficient regression capabilities is chosen as the basic model for DD prediction to address the drawbacks of the above models [

25,

26]. It randomly generates connection weights between the input and hidden layers and thresholds for neurons in the hidden layer without adjusting during the training process. The conditions for the unique optimal solution of the ELM output are the determined activation function and the hidden layer parameters. Compared with traditional methods, ELMs have the advantages of fast learning speed and good generalization performance [

27].
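To make the ELM training procedure concrete, the following is a minimal NumPy sketch of a single-hidden-layer ELM regressor. It assumes a sigmoid activation, input weights and biases drawn uniformly from [-1, 1], and the illustrative class name `ELMRegressor`; these choices are not necessarily the configuration used in this study. The output weights are obtained in a single step with the Moore–Penrose pseudoinverse (`numpy.linalg.pinv`), which is what removes the iterative weight correction an ANN needs.

```python
import numpy as np


class ELMRegressor:
    """Minimal single-hidden-layer ELM for regression (illustrative sketch)."""

    def __init__(self, n_hidden=20, seed=0):
        self.n_hidden = n_hidden
        self.rng = np.random.default_rng(seed)

    @staticmethod
    def _sigmoid(z):
        return 1.0 / (1.0 + np.exp(-z))

    def _hidden(self, X):
        # Hidden-layer output matrix H = g(X W + b)
        return self._sigmoid(X @ self.W + self.b)

    def fit(self, X, y):
        X = np.asarray(X, dtype=float)
        y = np.asarray(y, dtype=float)
        # Assumption: input weights and biases ~ U(-1, 1); they are never updated
        self.W = self.rng.uniform(-1.0, 1.0, size=(X.shape[1], self.n_hidden))
        self.b = self.rng.uniform(-1.0, 1.0, size=self.n_hidden)
        H = self._hidden(X)
        # Output weights: minimum-norm least-squares solution via the pseudoinverse
        self.beta = np.linalg.pinv(H) @ y
        return self

    def predict(self, X):
        return self._hidden(np.asarray(X, dtype=float)) @ self.beta
```

Because the hidden-layer parameters are random, fits with different seeds give slightly different predictions; this is the kind of model randomness that the conclusions identify as a remaining error source.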

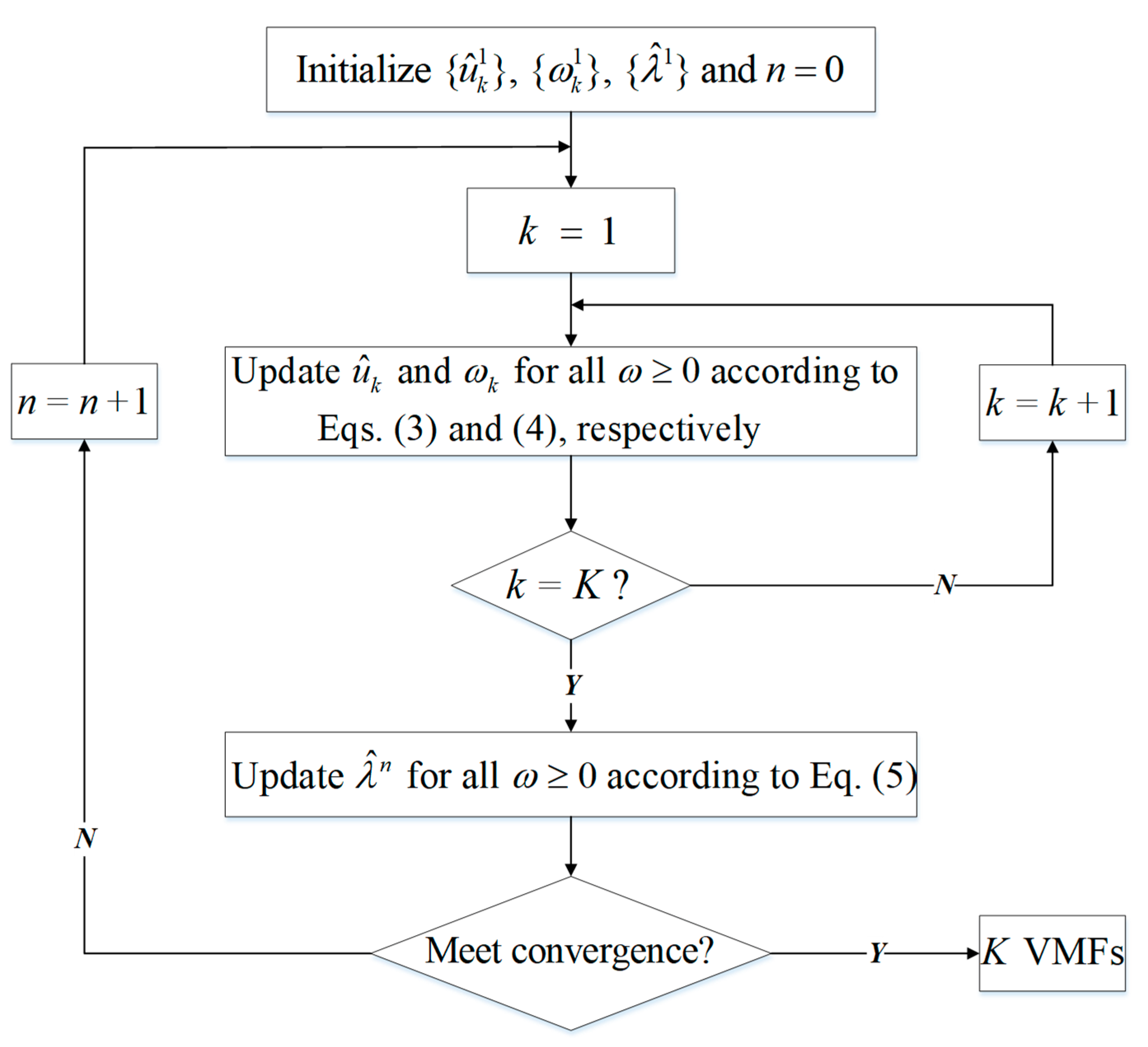

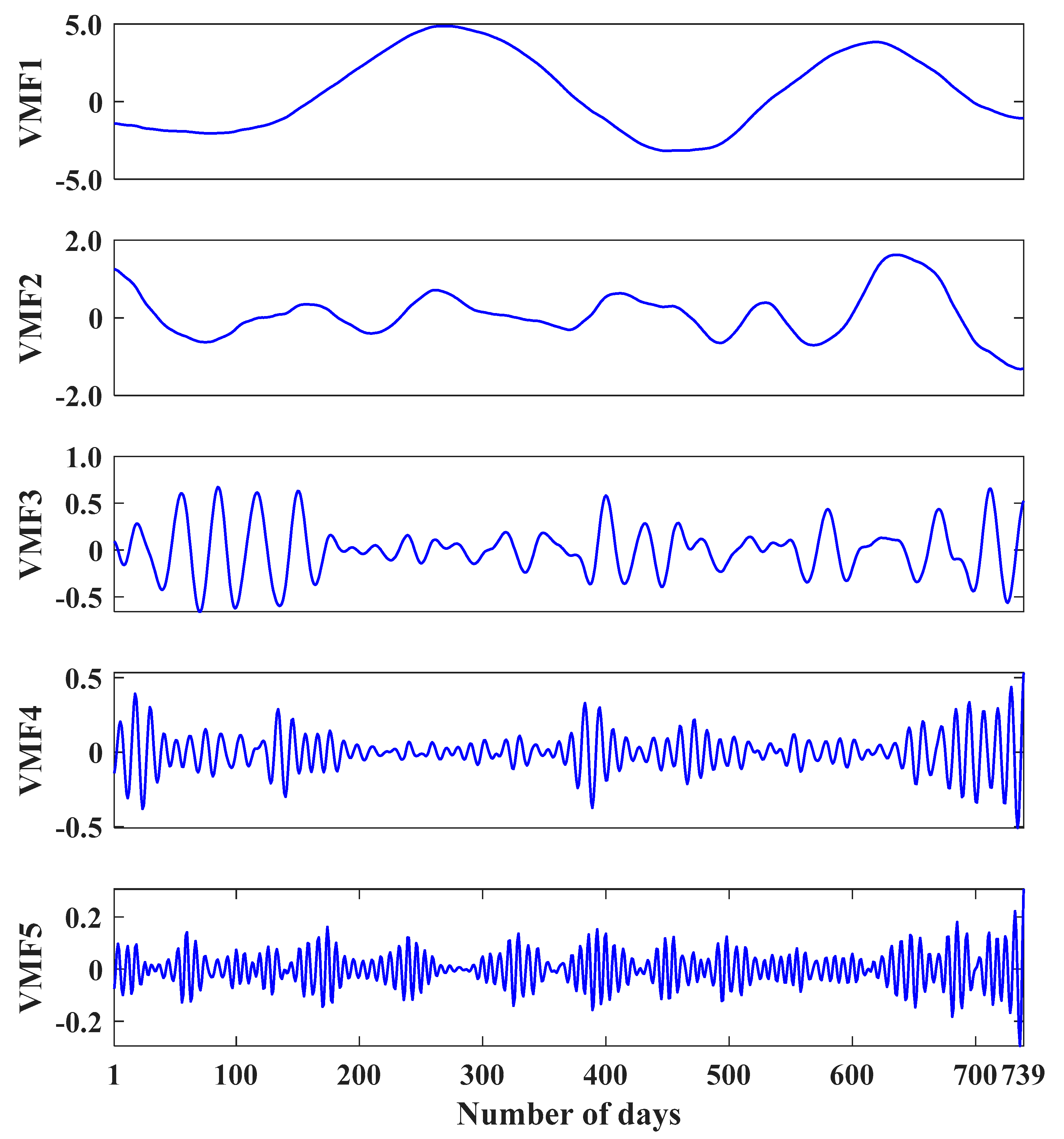

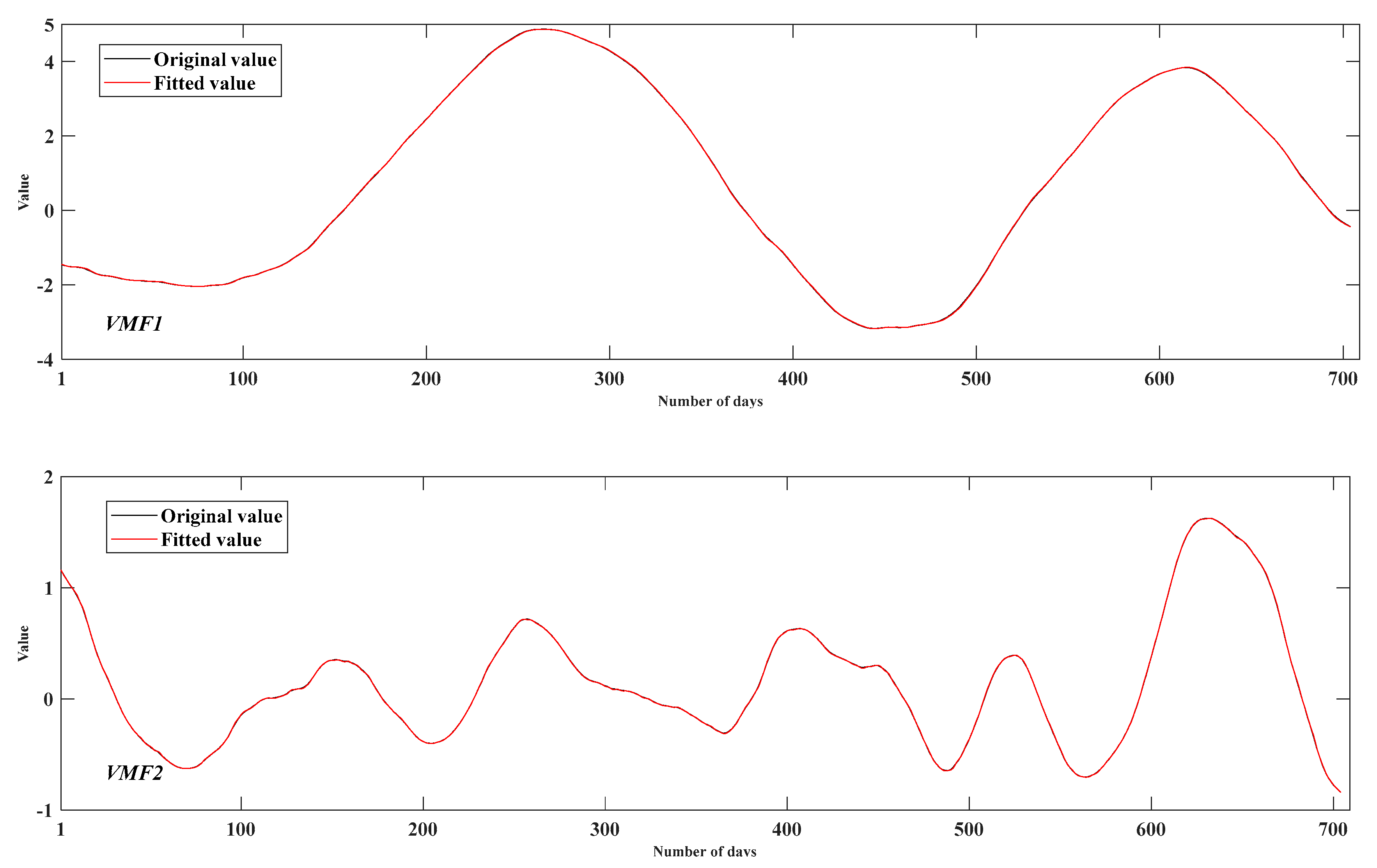

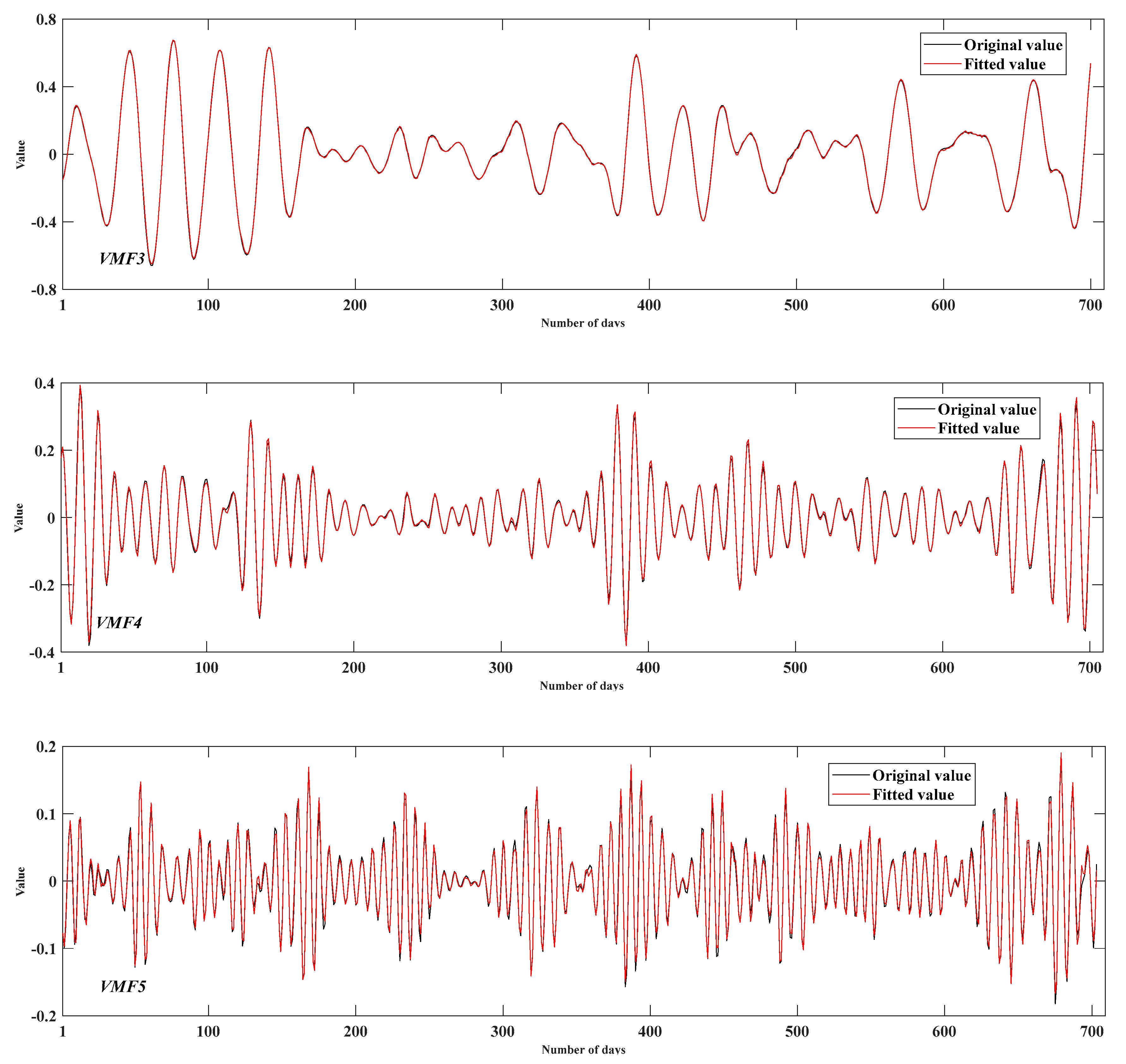

Although the ELM model has advantages for handling nonlinear problems, the strong volatility of deformation data inevitably has a negative impact on the prediction results. Therefore, before predicting DD, the time series should be preprocessed by a signal decomposition technique, which extracts useful information from the original data and improves the prediction accuracy of data-driven models. Wavelet decomposition (WD) and empirical mode decomposition (EMD) are two common signal decomposition techniques [1,28,29,30,31] and are widely used to process strongly fluctuating data. However, both have drawbacks: in wavelet transforms, the wavelet basis and the decomposition scale are difficult to choose, and the basic disadvantage of EMD is its lack of a mathematical foundation. To address these issues, the variational mode decomposition (VMD) algorithm [32] is introduced in this study. Unlike EMD, WD, and similar methods, VMD is built on a well-defined mathematical model: it decomposes the original data into a set of variational mode functions (VMFs), each fluctuating around its own center frequency [33,34,35], with a better decomposition effect and higher robustness [36,37,38]. The value of VMD for prediction has been confirmed in several fields, such as energy power generation prediction [39], carbon price prediction [40], runoff prediction [41], container throughput prediction [42], and air quality index prediction [43]. However, to the best of the authors’ knowledge, its application to DD prediction has not been extensively explored, so this study provides a reference for the use of VMD in dam safety monitoring.
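The VMD update loop can be sketched as follows. This is a simplified illustration of the alternating update scheme of VMD as formulated by Dragomiretskiy and Zosso, applied to the one-sided spectrum from `numpy.fft.rfft`; it omits the boundary mirroring used in reference implementations, and the function name `vmd` and the defaults (`alpha=2000`, `tau=0`, `tol`, `max_iter`) are assumptions chosen for illustration, not the settings of this study. Each mode is updated by a Wiener-type filter centered on its current center frequency, and each center frequency is then moved to the power-weighted mean frequency of its mode.

```python
import numpy as np


def vmd(signal, K, alpha=2000.0, tau=0.0, tol=1e-7, max_iter=500):
    """Simplified VMD sketch on the one-sided spectrum (no boundary mirroring).

    Returns (modes, center_freqs) sorted by center frequency, with frequencies
    in cycles per sample. Default parameter values are illustrative assumptions.
    """
    f = np.asarray(signal, dtype=float)
    T = f.size
    f_hat = np.fft.rfft(f)              # spectrum on non-negative frequencies
    freqs = np.fft.rfftfreq(T)          # 0 ... 0.5 cycles per sample
    u_hat = np.zeros((K, freqs.size), dtype=complex)
    omega = 0.5 / K * np.arange(K)      # uniform initial center frequencies
    lam = np.zeros(freqs.size, dtype=complex)  # Lagrange multiplier (dual variable)

    for _ in range(max_iter):
        u_prev = u_hat.copy()
        for k in range(K):
            # Wiener-filter update of mode k given the current other modes
            others = u_hat.sum(axis=0) - u_hat[k]
            u_hat[k] = (f_hat - others + lam / 2) / (1 + 2 * alpha * (freqs - omega[k]) ** 2)
            # Center frequency = power-weighted mean frequency of mode k
            power = np.abs(u_hat[k]) ** 2
            omega[k] = np.sum(freqs * power) / max(np.sum(power), 1e-300)
        # Dual ascent on the reconstruction constraint (no effect when tau = 0)
        lam = lam + tau * (f_hat - u_hat.sum(axis=0))
        change = np.sum(np.abs(u_hat - u_prev) ** 2) / max(np.sum(np.abs(u_prev) ** 2), 1e-300)
        if change < tol:
            break

    modes = np.fft.irfft(u_hat, n=T, axis=1)  # back to the time domain
    order = np.argsort(omega)
    return modes[order], omega[order]
```

With `tau=0` the constraint is not enforced exactly, so for real monitoring data `x - modes.sum(axis=0)` is generally nonzero; that difference corresponds to the error sequence (ER) discussed below.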

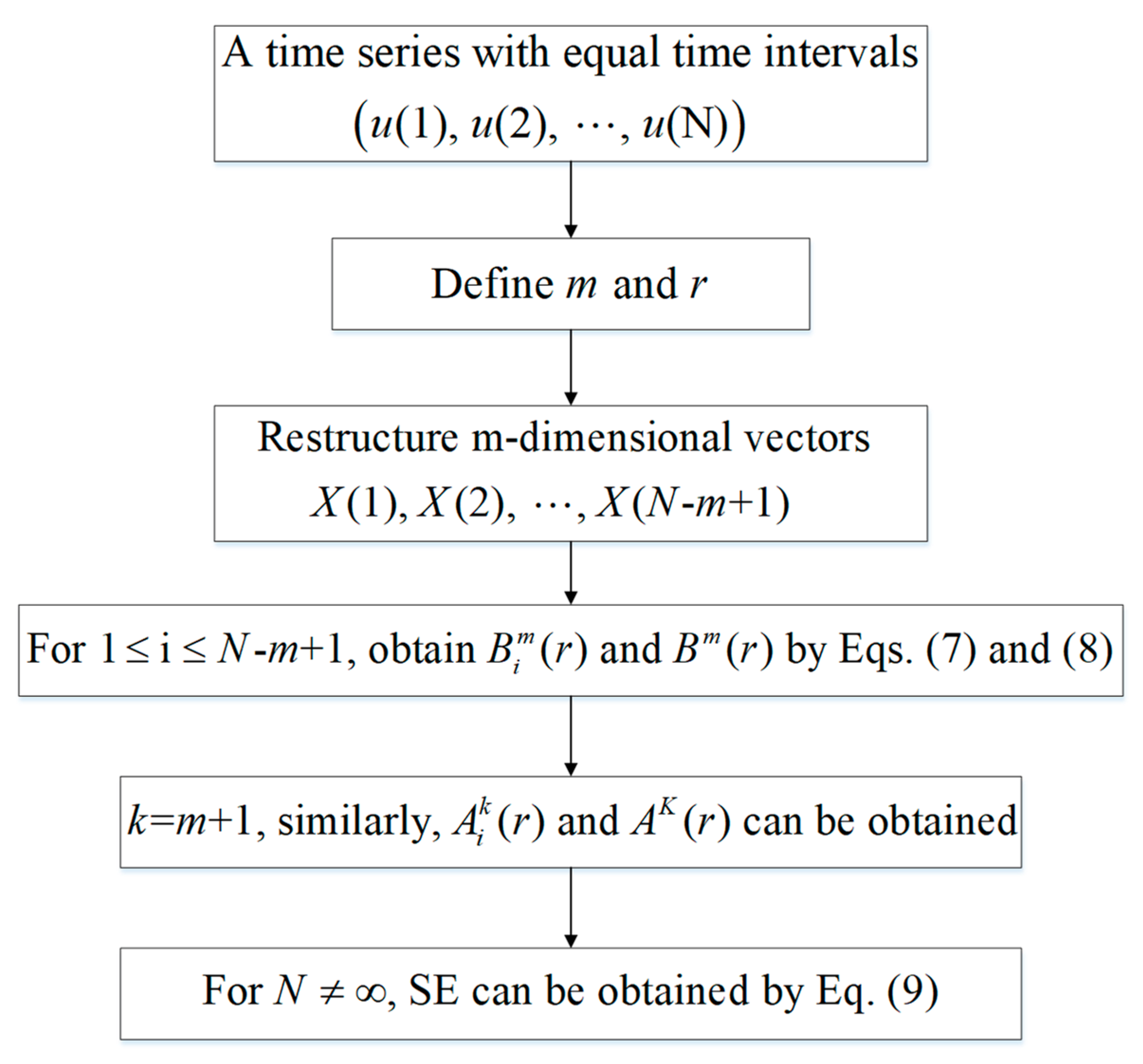

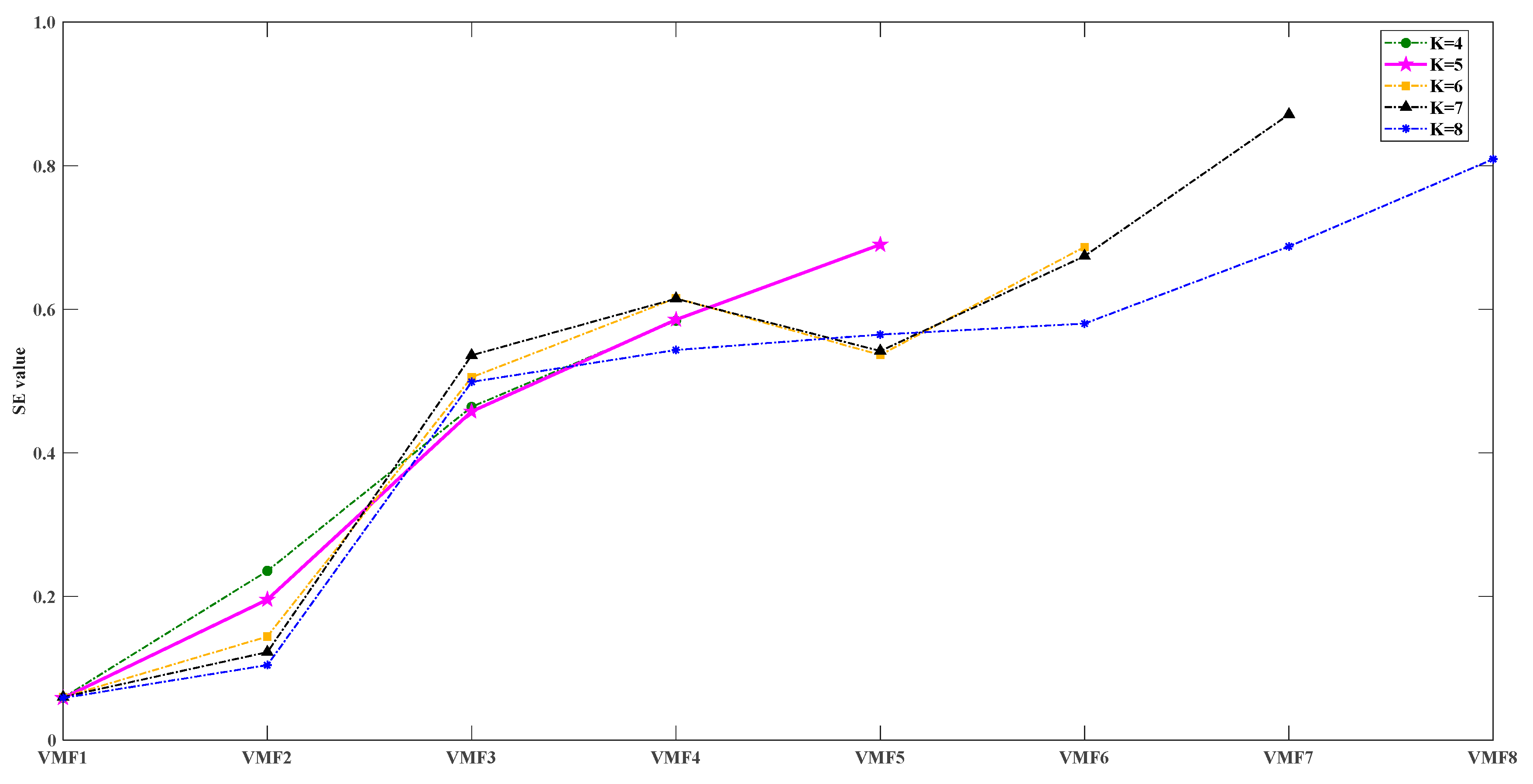

In practical applications, the decomposition effect of VMD is affected by the decomposition modulus K. If the K value is too small, the obtained VMFs will lose information; on the contrary, it will lead to excessive decomposition and even make the decomposition results worse [

44,

45]. In paper [

41], the decomposition modulus was determined by central frequency. Here, we propose a more efficient method for determining the decomposition modulus—sample entropy (SE) method. The SE can characterize the complexity of the time series, and when the time series is perturbed and the uncertainty of its state value increases, the value of the SE will also increase accordingly [

46]. If two or more subsequences have similar SE values, modal confusion occurs in the decomposition. Therefore, in this paper, we choose the maximum K value that allows a large difference between the SE values of any two subsequences as the final decomposition modulus of the VMD. In addition, there is an error sequence (ER) between the sum of the subsequences obtained by the VMD algorithm and the original sequence. In other words, the sum of the VMFs is not equal to the original sequence [

47]. Since the ER contains the true fluctuation characteristics of the original sequence, considering only VMFs will not fully reflect the randomness, which will lead to distortion of the prediction results to some extent. In this paper, the ER of the DD after decomposition is extracted, and because the sequence is approximately noisy, it cannot be modeled directly using the machine learning model to obtain good prediction results. To solve this problem, the authors of paper [

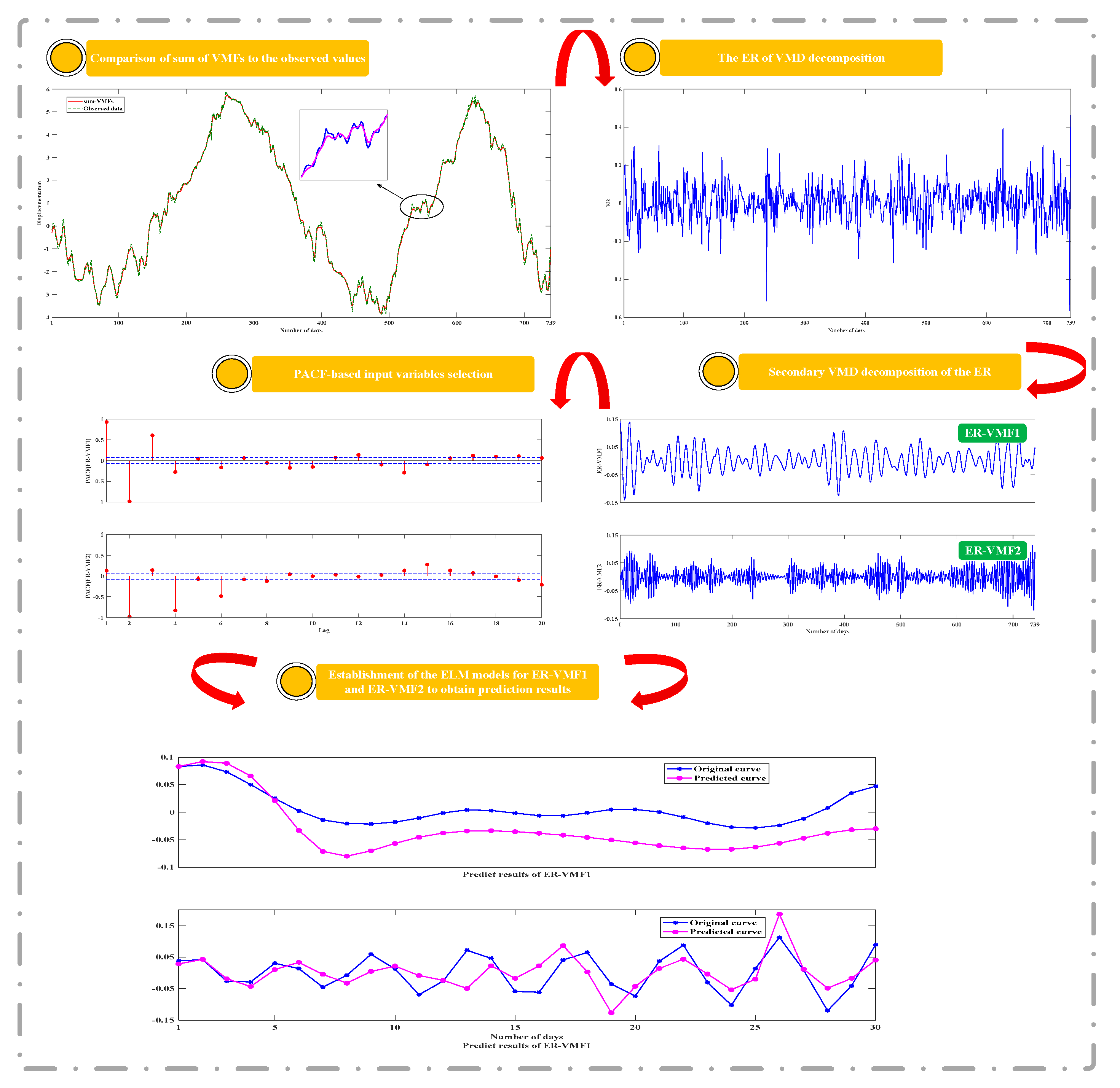

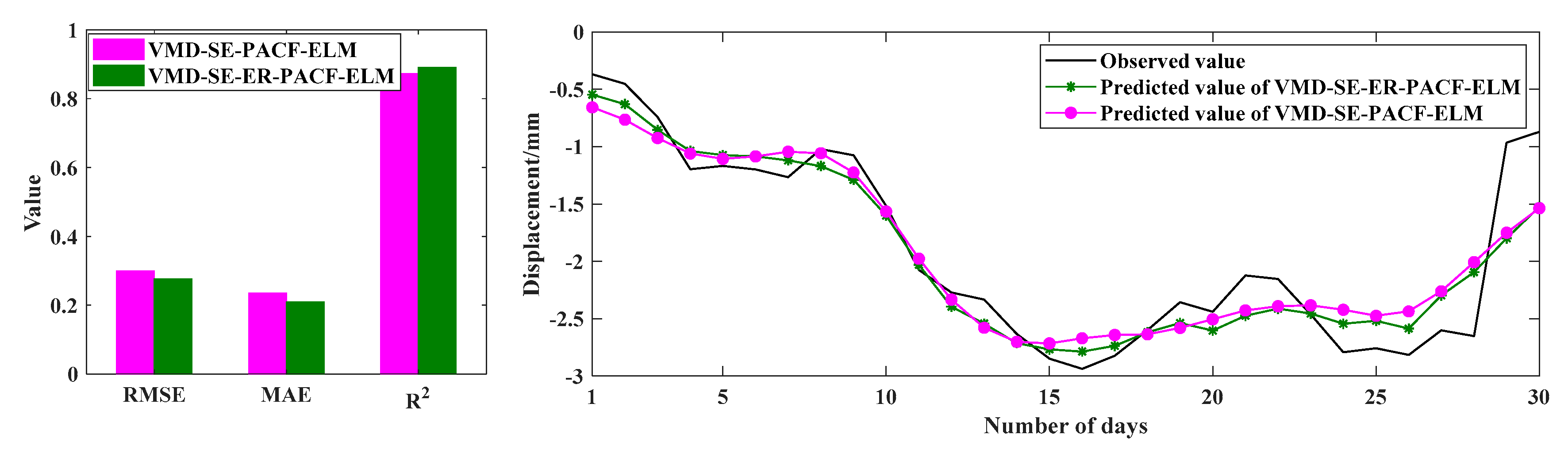

47] proposed the random number method for point prediction of ER, but the uncertainty of the results obtained by this method is significant. Therefore, we innovatively propose a VMD-based secondary decomposition for the ER in order to dig deeper into the temporal features embedded in it. The results show that the prediction obtained by considering the ER is closer to the observed values and has more practical engineering significance.
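The SE criterion for choosing K can be sketched as below. The sample entropy follows the usual definition SampEn = -ln(A/B), where B and A count template pairs of length m and m + 1 within Chebyshev distance r; the template length `m=2` and tolerance `r = 0.2 × std` are common defaults assumed here, not values taken from this study. `select_k` is a hypothetical helper that accepts any decomposition callable (for example, a VMD routine) and a threshold `min_gap` for what counts as a "large" difference between SE values, which the text does not quantify.

```python
import itertools

import numpy as np


def sample_entropy(x, m=2, r_factor=0.2):
    """SampEn = -ln(A / B) with Chebyshev distance and r = r_factor * std(x)."""
    x = np.asarray(x, dtype=float)
    n = x.size
    r = r_factor * np.std(x)

    def count_matches(length):
        # Use the same n - m template start points for lengths m and m + 1
        templates = np.array([x[i:i + length] for i in range(n - m)])
        count = 0
        for i in range(len(templates) - 1):
            dist = np.max(np.abs(templates[i + 1:] - templates[i]), axis=1)
            count += int(np.sum(dist <= r))  # self-matches are excluded
        return count

    B = count_matches(m)
    A = count_matches(m + 1)
    return -np.log(A / B) if A > 0 and B > 0 else np.inf


def select_k(signal, decompose, k_values, min_gap=0.1):
    """Hypothetical helper: largest K whose components have pairwise SE gaps >= min_gap."""
    best = None
    for K in k_values:
        se = [sample_entropy(c) for c in decompose(signal, K)]
        if not np.all(np.isfinite(se)):
            continue  # undefined SE: treat this K as unusable
        gaps = [abs(a - b) for a, b in itertools.combinations(se, 2)]
        if gaps and min(gaps) >= min_gap:
            best = K if best is None else max(best, K)
    return best
```

In the proposed model, `decompose` would be a VMD routine, and the same selection could be repeated for the secondary decomposition of the ER.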

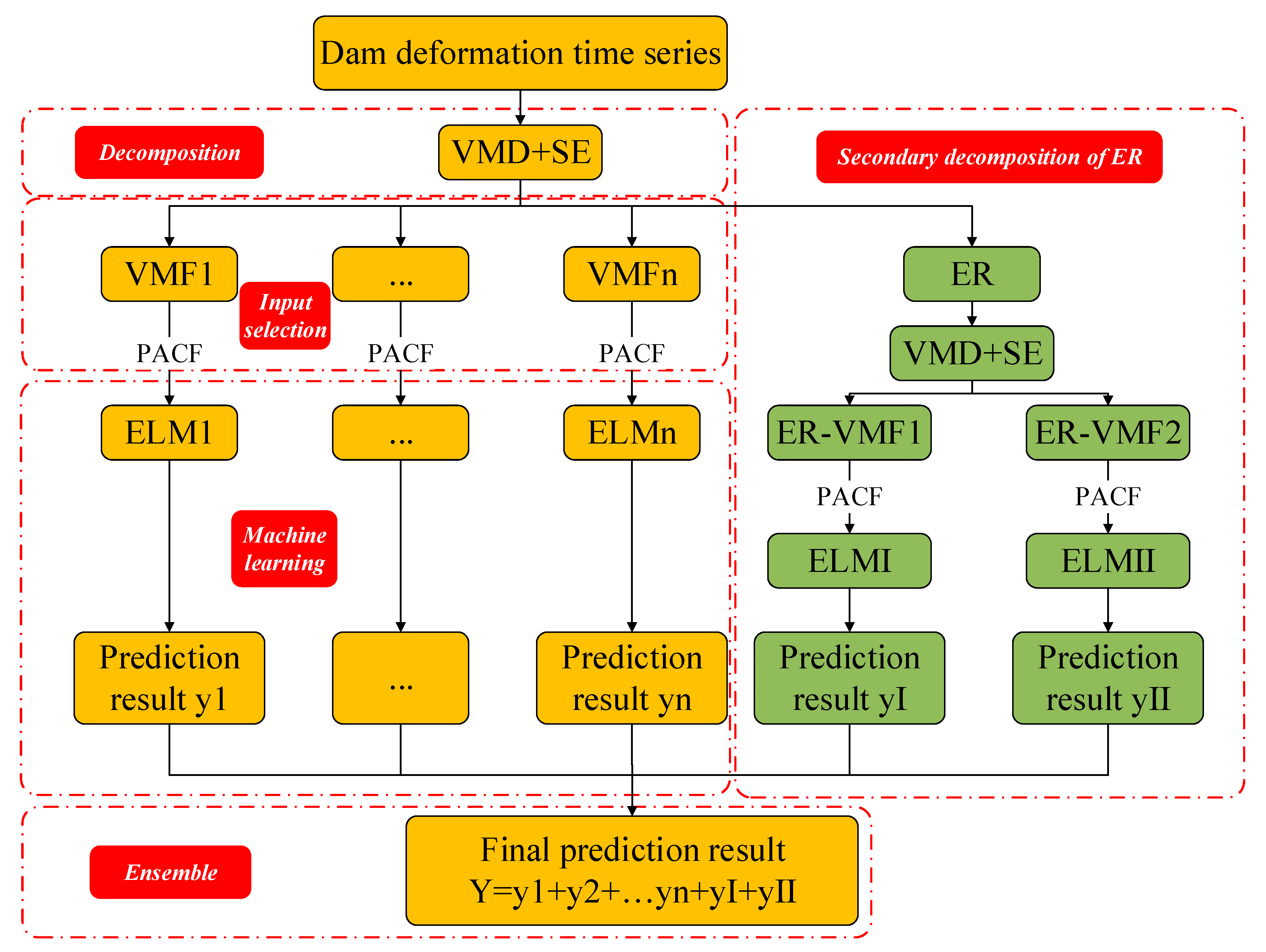

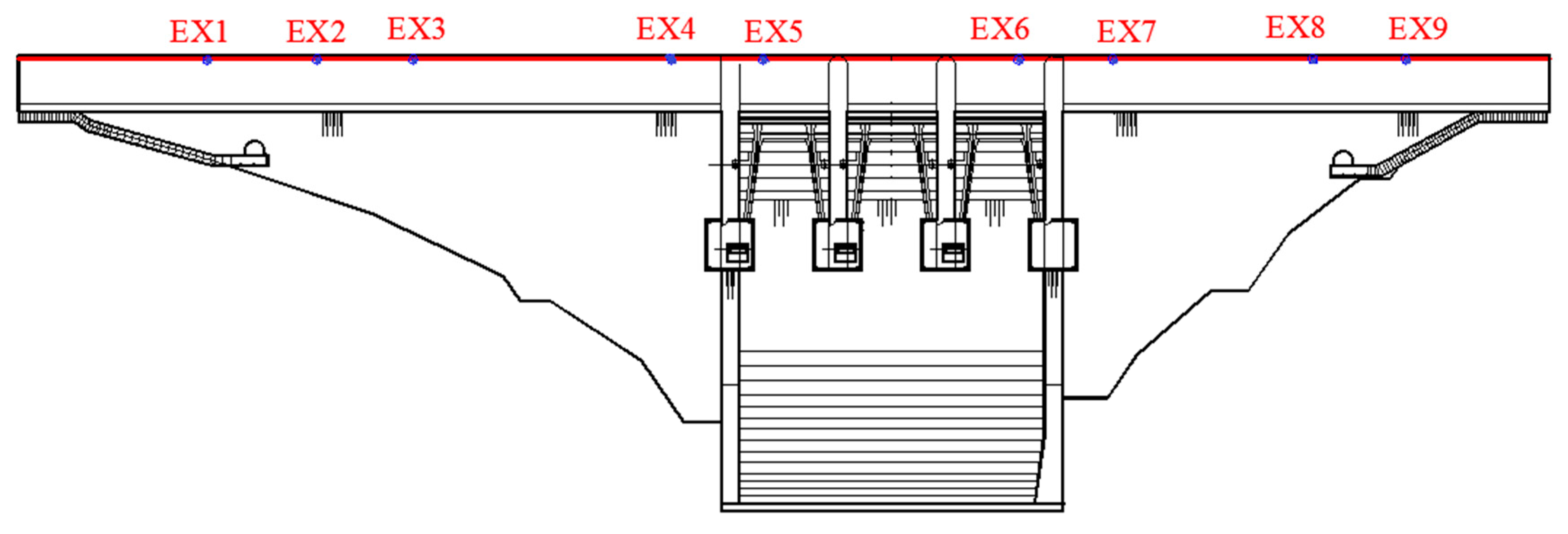

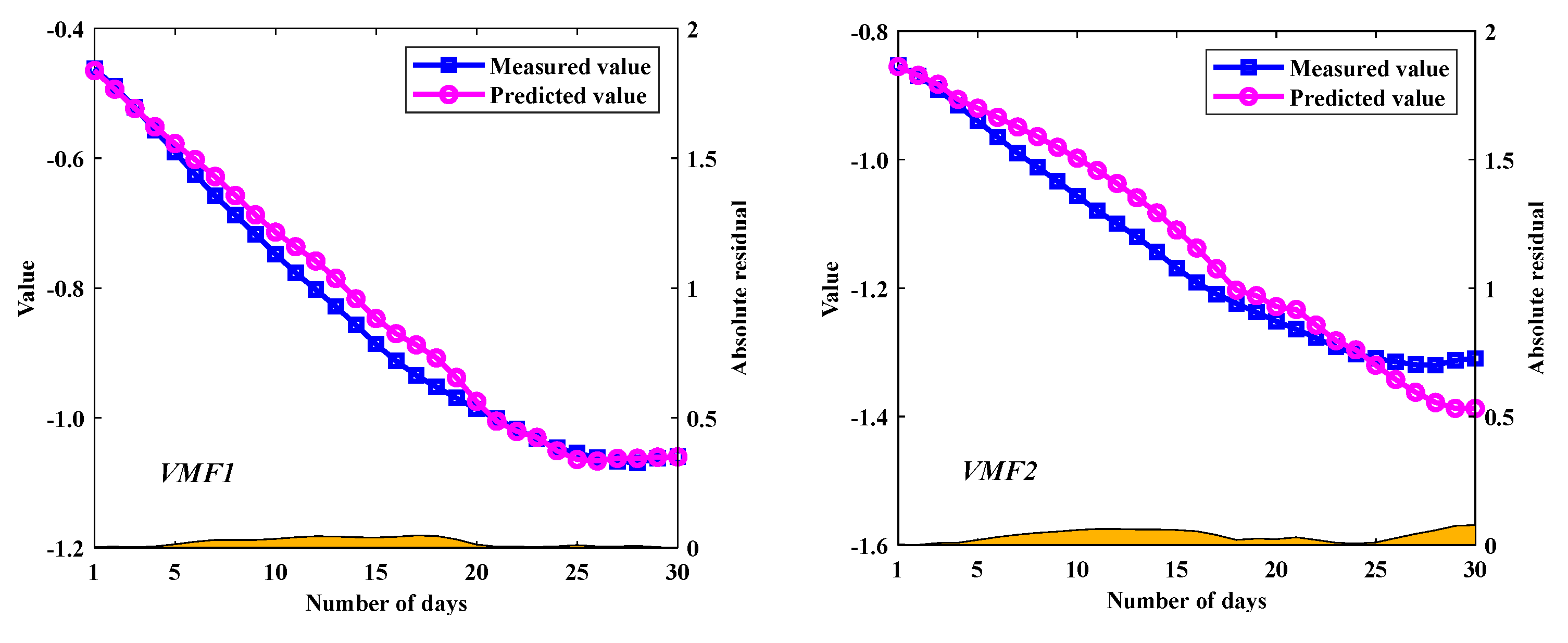

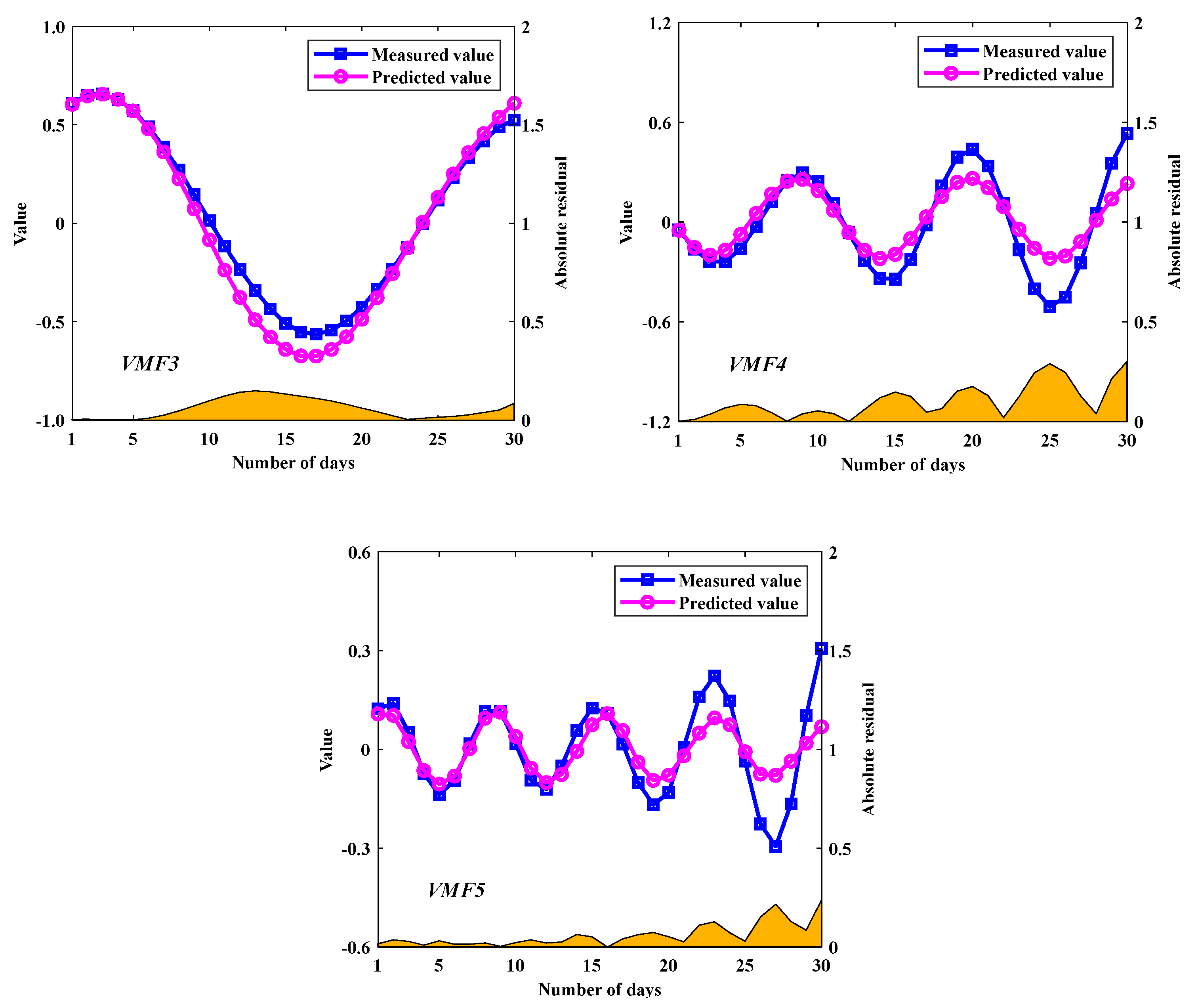

In summary, a hybrid model for DD prediction, namely VMD-SE-ER-PACF-ELM, is proposed, which makes full use of the advantages of VMD, SE, the partial autocorrelation function (PACF), and the ELM neural network. First, the VMD-SE method decomposes a deformation sequence into K VMFs with good characteristics and an ER; the same method is then applied to the ER to obtain a series of subsequences. Second, the PACF method is used to determine the input variables of each subsequence. Then, an ELM model is trained for each subsequence. Finally, the trained ELMs are applied to their corresponding subsequences, and the sum of the predictions of all components gives the final DD prediction. The proposed model is also compared with other prediction models and validated over different prediction periods.
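The PACF-based input selection can be illustrated with the following sketch, which computes the sample PACF by the Durbin–Levinson recursion and keeps the lags whose PACF lies outside the approximate 95% confidence band ±1.96/√N. The band, the maximum lag `nlags=10`, and the helper names are assumptions for illustration; libraries such as statsmodels provide equivalent PACF routines. `lagged_matrix` then builds the ELM input matrix and target vector for one subsequence; in the full model, the forecasts of all components would simply be summed.

```python
import numpy as np


def sample_acf(x, nlags):
    x = np.asarray(x, dtype=float) - np.mean(x)
    denom = np.dot(x, x)
    return np.array([np.dot(x[: x.size - k], x[k:]) / denom for k in range(nlags + 1)])


def pacf_durbin_levinson(x, nlags):
    """Sample PACF via the Durbin-Levinson recursion; pacf[k] = phi_kk."""
    rho = sample_acf(x, nlags)
    phi = np.zeros((nlags + 1, nlags + 1))
    pacf = np.zeros(nlags + 1)
    pacf[0] = 1.0
    for k in range(1, nlags + 1):
        num = rho[k] - np.dot(phi[k - 1, 1:k], rho[k - 1:0:-1])
        den = 1.0 - np.dot(phi[k - 1, 1:k], rho[1:k])
        phi[k, k] = num / den
        for j in range(1, k):
            phi[k, j] = phi[k - 1, j] - phi[k, k] * phi[k - 1, k - j]
        pacf[k] = phi[k, k]
    return pacf


def select_lags(x, nlags=10):
    """Lags whose PACF exceeds the assumed 95% band 1.96 / sqrt(N)."""
    pacf = pacf_durbin_levinson(x, nlags)
    bound = 1.96 / np.sqrt(len(x))
    return [k for k in range(1, nlags + 1) if abs(pacf[k]) > bound]


def lagged_matrix(x, lags):
    """Inputs x[t - k] for k in lags, target x[t], for t = max(lags) .. N - 1."""
    x = np.asarray(x, dtype=float)
    p = max(lags)
    X = np.column_stack([x[p - k: len(x) - k] for k in lags])
    return X, x[p:]
```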

The rest of this paper is organized as follows. Section 2 briefly introduces the methods mentioned above. Section 3 introduces the proposed model and the performance evaluation indicators. Section 4 presents a case study and discusses the results, and Section 5 presents the conclusions of the study.

5. Conclusions

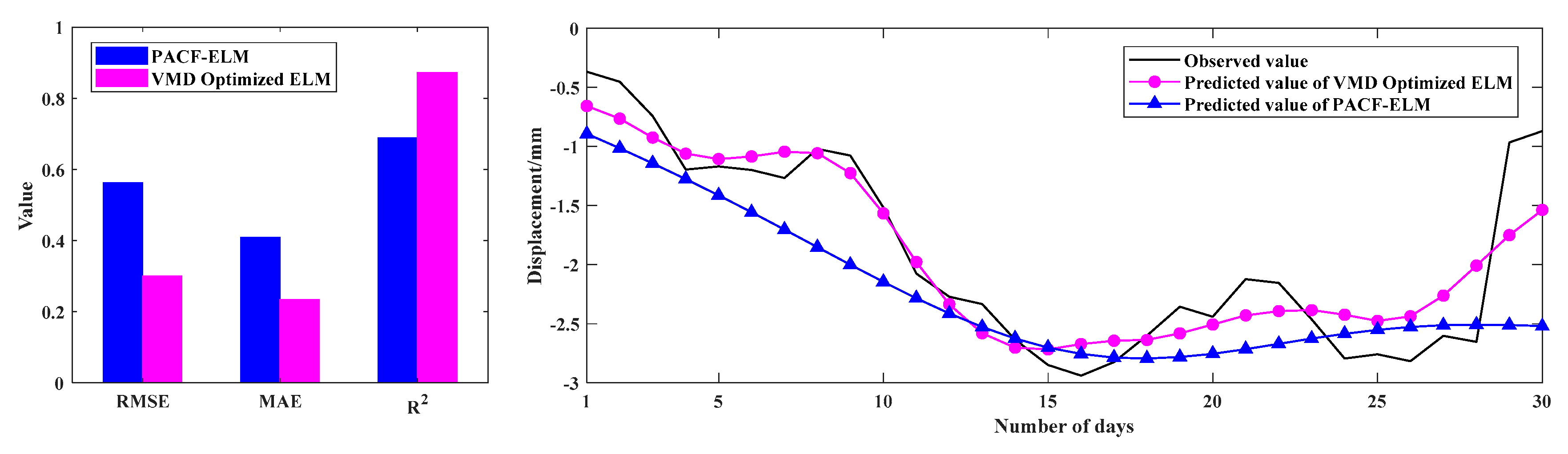

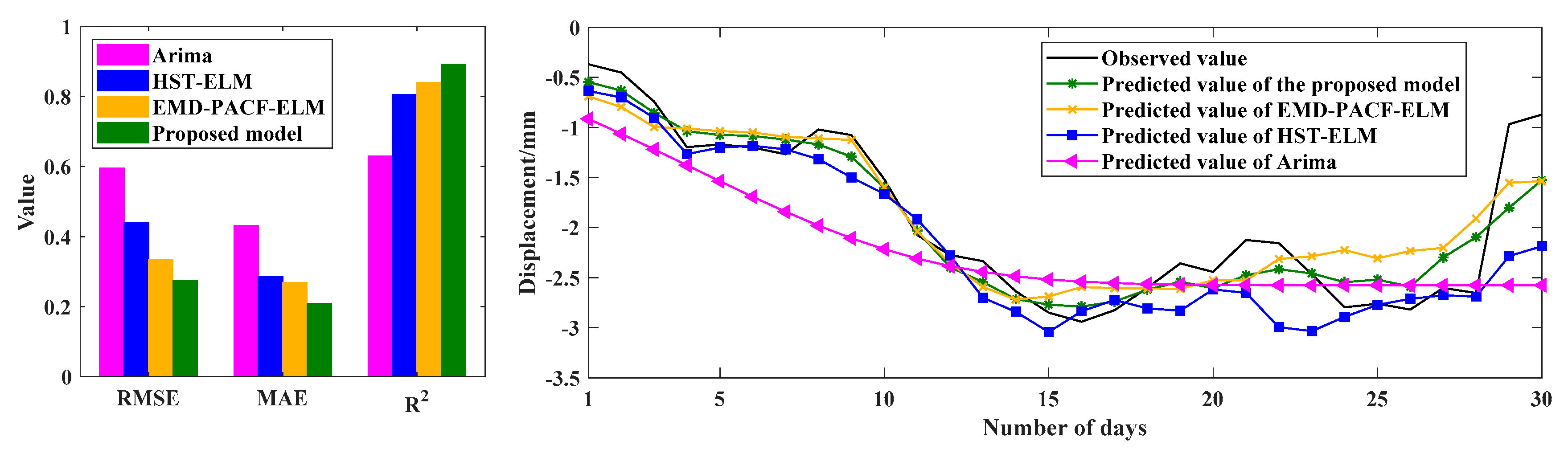

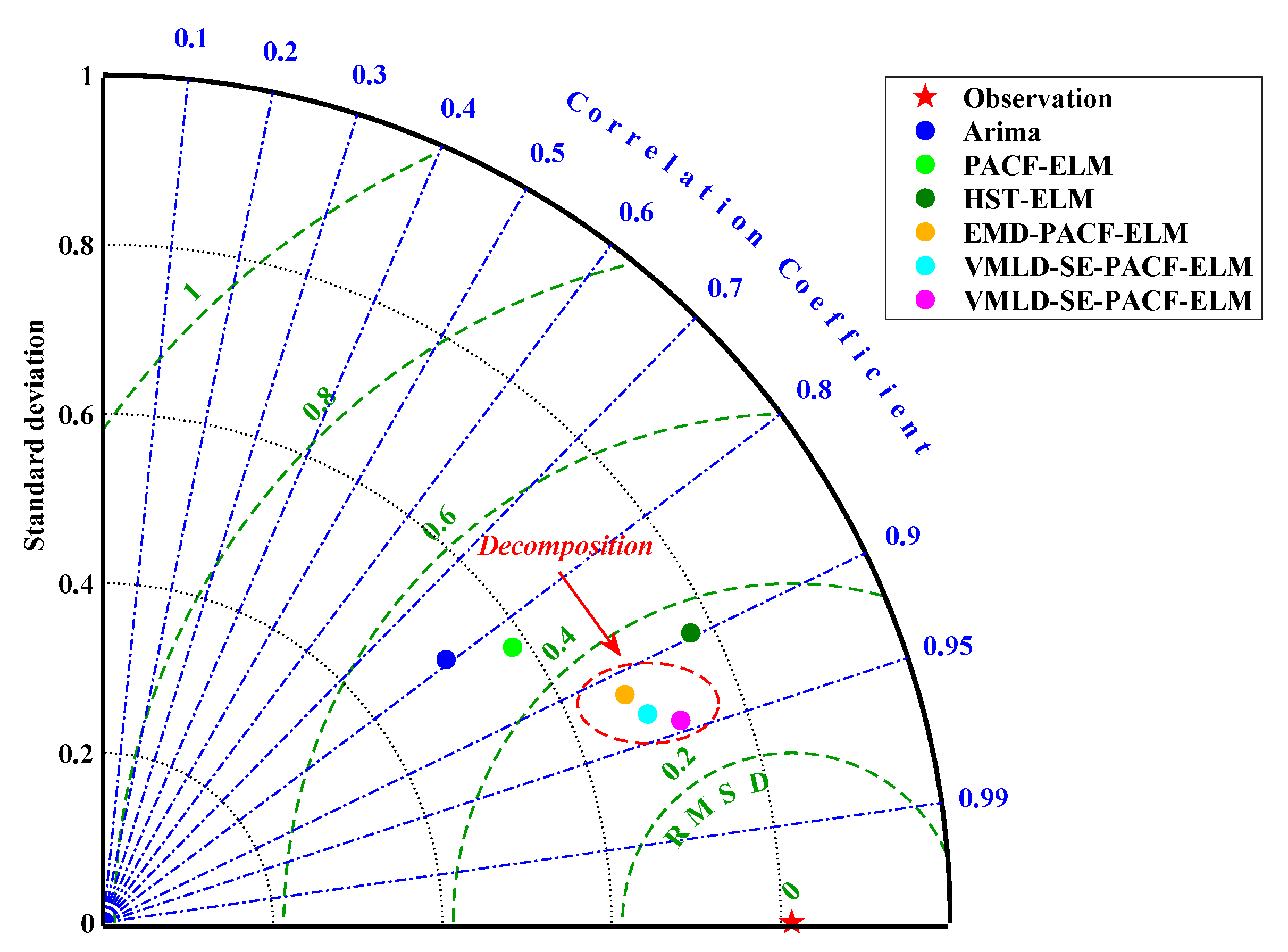

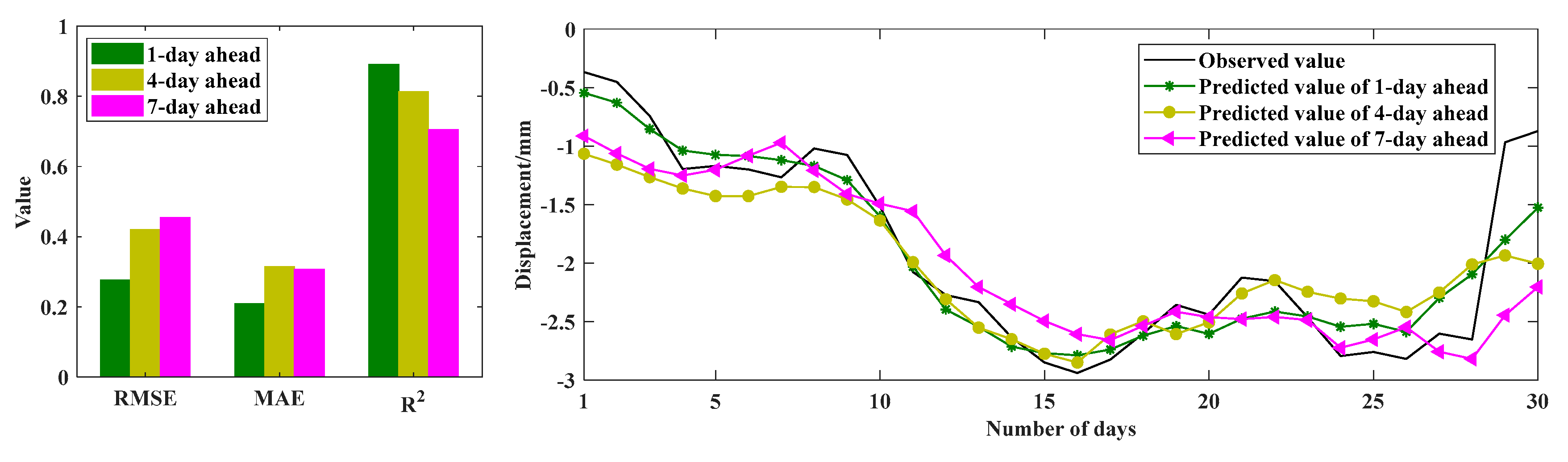

To improve the prediction performance for nonstationary DD, a novel hybrid model based on the decomposition-composition framework, namely VMD-SE-ER-PACF-ELM, is proposed. Its main elements are as follows: (1) The VMD algorithm decomposes the original deformation sequence into a number of subsequences with good characteristics, improving prediction performance. (2) The SE method quantifies the complexity of each subsequence in order to select an appropriate decomposition modulus. (3) A secondary VMD decomposition of the ER brings the predicted values closer to the actual deformation characteristics. (4) The PACF method analyzes the characteristics of each subsequence to extract the input variables. (5) ELM models predict the subsequences, and their combination forms the final prediction. The prediction performance of the VMD-SE-ER-PACF-ELM model is compared with that of the ARIMA, PACF-ELM, HST-ELM, VMD-PACF-ELM, and VMD-SE-PACF-ELM models, using RMSE, MAE, R², and Taylor diagrams as performance evaluation indicators.
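For reference, the evaluation indicators can be computed as sketched below. R² is taken here as the coefficient of determination, 1 - SS_res/SS_tot, which is an assumption since this section does not define it. The hypothetical helper `taylor_stats` returns the quantities a Taylor diagram displays: the standard deviations of observations and predictions, their correlation coefficient, and the centered RMS difference.

```python
import numpy as np


def rmse(y_obs, y_pred):
    y_obs, y_pred = np.asarray(y_obs, float), np.asarray(y_pred, float)
    return float(np.sqrt(np.mean((y_obs - y_pred) ** 2)))


def mae(y_obs, y_pred):
    y_obs, y_pred = np.asarray(y_obs, float), np.asarray(y_pred, float)
    return float(np.mean(np.abs(y_obs - y_pred)))


def r2(y_obs, y_pred):
    # Assumption: coefficient of determination, 1 - SS_res / SS_tot
    y_obs, y_pred = np.asarray(y_obs, float), np.asarray(y_pred, float)
    ss_res = np.sum((y_obs - y_pred) ** 2)
    ss_tot = np.sum((y_obs - y_obs.mean()) ** 2)
    return float(1.0 - ss_res / ss_tot)


def taylor_stats(y_obs, y_pred):
    """Std of observations and predictions, correlation, centered RMS difference."""
    y_obs, y_pred = np.asarray(y_obs, float), np.asarray(y_pred, float)
    std_obs, std_pred = np.std(y_obs), np.std(y_pred)
    corr = np.corrcoef(y_obs, y_pred)[0, 1]
    centered = (y_pred - y_pred.mean()) - (y_obs - y_obs.mean())
    crmsd = np.sqrt(np.mean(centered ** 2))
    return std_obs, std_pred, corr, crmsd
```

The Taylor diagram works because these quantities satisfy crmsd² = std_pred² + std_obs² - 2·std_pred·std_obs·corr, so a single point encodes all three.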

The results show that the proposed VMD-SE-ER-PACF-ELM model achieves the best prediction performance among all the models considered. It can effectively predict nonstationary and nonlinear time series and significantly improves prediction accuracy. In addition, its performance was analyzed for different prediction periods: prediction accuracy decreases as the prediction period becomes longer, which can provide prior knowledge for the normal operation and early warning of dam projects.

Although the proposed method gives good prediction results, some prediction errors remain. In this paper, errors arising from the uncontrolled randomness of the ELM model are the main cause of the prediction errors, and this needs to be addressed in future research. In addition, the spatial relationships between measurement points [51] will be introduced into the prediction to analyze the overall deformation behavior of dams.