1. Introduction

Assistive communication systems can improve, to some extent, the lives of people with severe disabilities such as amyotrophic lateral sclerosis (ALS), motor neuron diseases (MND), cerebral palsy (CP), spinal cord injury (SCI), and stroke with intubation [1]. When basic human functions such as movement and language are lost, the result is serious difficulty and inconvenience in daily life. According to the world report on disability produced by the World Health Organization [2], the proportion of the world’s population over 60 years old will increase from 11% to 22%, and an estimated 15% of the world’s population will be living with a disability by 2050. Once patients lose the capabilities of movement and language, they cannot communicate smoothly with the world. The disabled person cannot conveniently express his or her opinions, and the caregiver in turn cannot understand the person’s needs. Arguments and misunderstandings therefore arise easily between the disabled person and the caregiver and leave the disabled person in a bad mood. Over a long period, a strained relationship between the two can lead to regrettable outcomes. Hence, it is very important to develop an assistive communication system that improves the communication capability of the disabled person.

“Communication” is a process of exchanging opinions, transmitting messages, and establishing relationships. Effective communication depends on a good medium or tool, and the parties to communication include not only humans but also machines. In general, the medium of communication can be language, text, movement, expression, or other interactive information. Many severe disabilities, such as ALS, MND, CP, SCI, and stroke, cause people to lose their abilities of speech and movement permanently; therefore, they cannot communicate with people directly, nor can they manage their own daily lives as they wish. Gradually, daily life becomes colorless and meaningless for the disabled.

In this paper, the severely disabled are people, such as those with quadriplegia, whose serious diseases or handicaps impair both movement and speech. They lie in bed year-round and retain only very limited motor functions, such as blinking the eyes, twitching a cheek, or twitching a finger. What they can do every day is watch the ceiling and ruminate. They can neither move by themselves nor communicate with others, and these limitations make it very difficult for them to communicate with people or machines. However, because the sensory nerves and the autonomic nervous system are unaffected, the severely disabled may retain the capabilities of hearing, sight, touch, smell, taste, thinking, and cognition. In short, solving the communication problem is the most urgent issue, and doing so would help the severely disabled live more meaningfully and happily.

In December 2014, the assistive context-aware toolkit (ACAT) platform was developed by the Intel research team and the famous astrophysicist Stephen Hawking, a patient with ALS. Because Intel has released the source code of the program and the related software on its website, researchers around the world can freely access information about ACAT [3] to implement more effective assistive communication systems. Currently, the main functions of Intel ACAT include keyboard simulation, word prediction, and speech synthesis. ACAT helps the severely disabled with movement and speech problems control a computer to communicate with the outside world, including editing and managing documents, navigating the web, writing, and accessing email. Nevertheless, physical conditions differ from one disabled person to another; thus, ACAT may not be suitable for everyone with ALS or other severe disabilities. For example, some severely disabled subjects are not accustomed to wearing a sensor on the cheek or to waiting for a scan before making an input.

Augmentative and alternative communication (AAC) helps people with speech challenges or language disorders find effective and appropriate ways to express their basic needs. Many AAC systems have been implemented to help the disabled communicate with other people or with devices [4,5,6,7]. Most off-the-shelf AAC devices are standalone systems that are portable, simple, and convenient, and they support brief communication through short messages. One AAC device has a communication board with text and icons that produces speech when the user touches the command sheets, and it is used to help people with speech disabilities [4]. A tongue drive system was proposed to enable people with severe disabilities to access their environment [5]. Discrete breathing patterns have been identified to represent pre-defined words and help the disabled express their needs [6]. An AAC app was implemented that uses speech symbol technology to let users express their thoughts, needs, and ideas [7]. Unfortunately, the AAC systems mentioned above are suitable only for slightly disabled people who have speech disorders but retain controllable movement, such as patients with CP or mild stroke. Thus, it is important for the disabled to select a suitable and easy-to-use communication interface.

Recently, studies in areas such as home automation, environmental monitoring, and the internet of things (IoT) have been widely promoted to improve the quality of life [8,9,10,11,12]. Many wireless communication technologies are used to solve smart home and home automation control problems. Some papers [13,14,15,16] discussed the application of IoT, Bluetooth, and the X-10 protocol to environmental condition monitoring and smart home systems for the disabled. In addition to wireless communication technologies, some papers discuss algorithms, such as different neural network designs and artificial intelligence embedded in the decision mechanism of the system control core, to make the smart home system more intelligent [17,18]. Moreover, needs vary with the disability; each disabled person may need a different assistive input device. Hence, different command input media have been discussed, such as hands-free voice control [19] and the brain–computer interface (BCI) communication pathway [20,21]. Shinde et al. developed an Arduino-based application, comprising an Android application, a wireless module, and Arduino devices, to control smart appliances [22]. Rathi et al. proposed a hand gesture recognition algorithm for a gesture-based human–machine interface that can operate all smart home appliances [23]. Rawat et al. adopted automated speech recognition to help the severely disabled operate computers and home appliances without clicking buttons [24]. Gagan used the Intel Galileo Board to acquire information including temperature, humidity, gas, smoke, motion, and fire so that the user can then control the home appliances [25]. However, most of the related research targets able-bodied or slightly disabled people, and fewer papers address communication and home automation solutions for the severely disabled. In [8], a wireless home automation system is proposed for people who are deaf or mute and for people with Alzheimer’s, but these subjects, despite their speech, hearing, and memory problems, retain normal motor function and can operate home appliances easily. Therefore, developing a dedicated assistive device for home automation, environmental monitoring, or computer input is an important issue for the severely disabled.

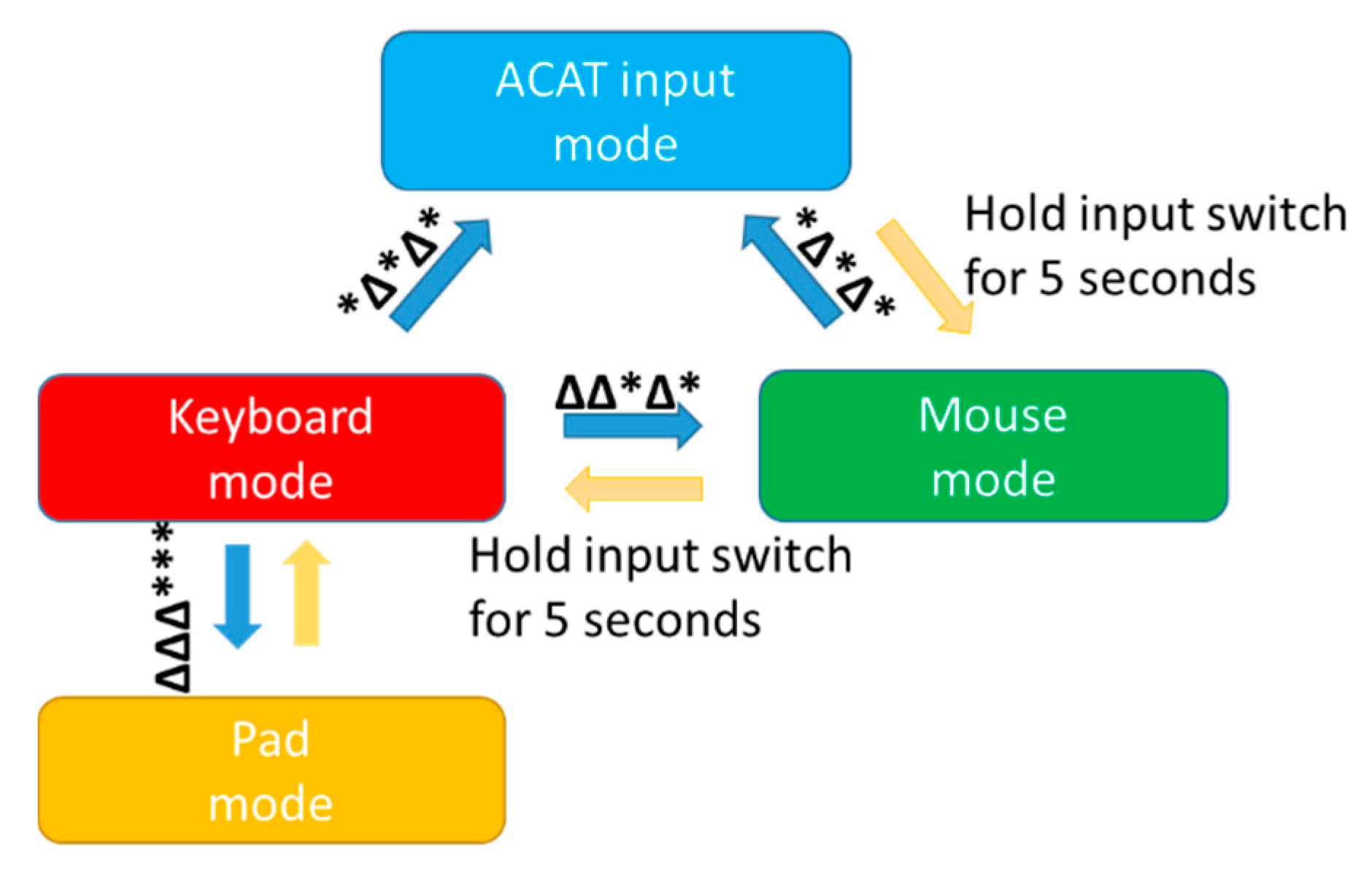

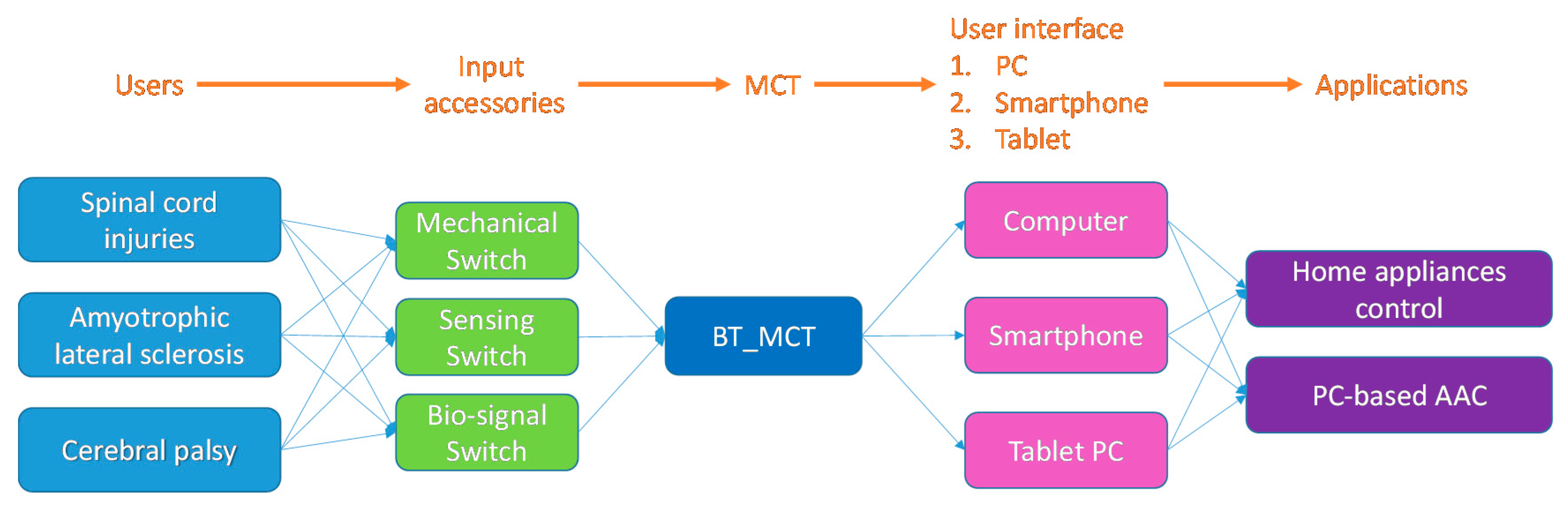

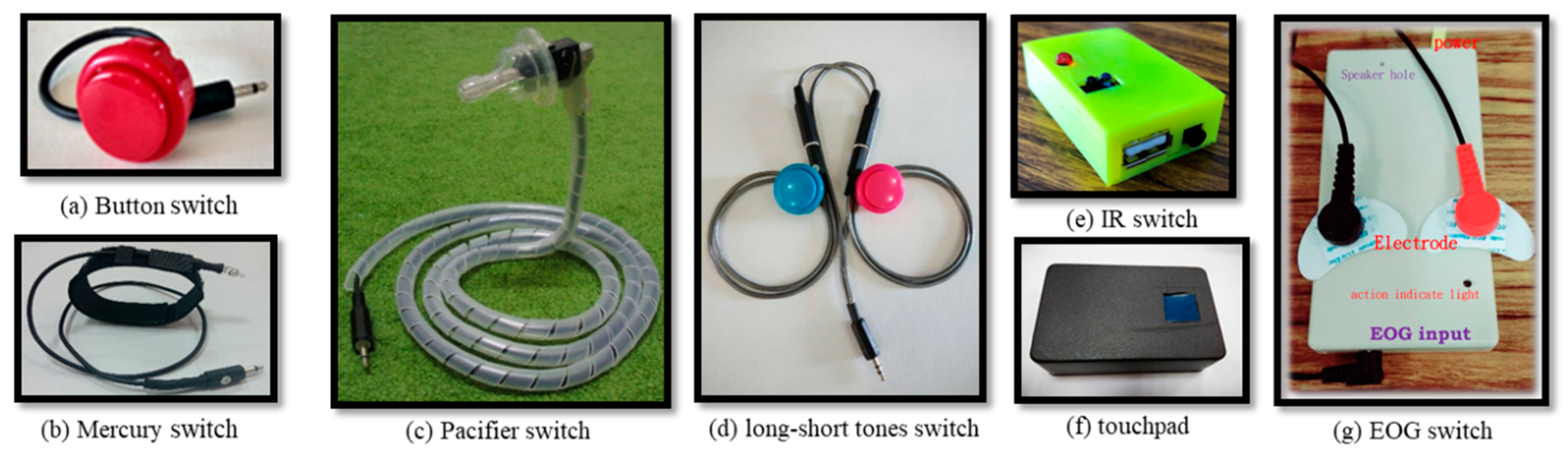

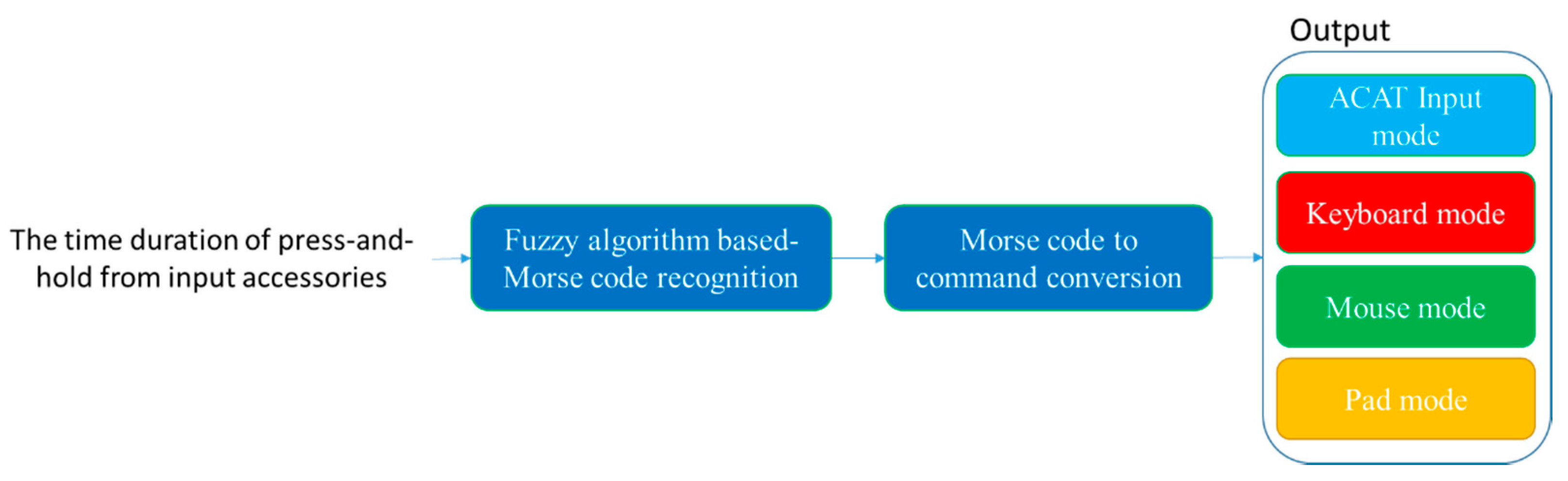

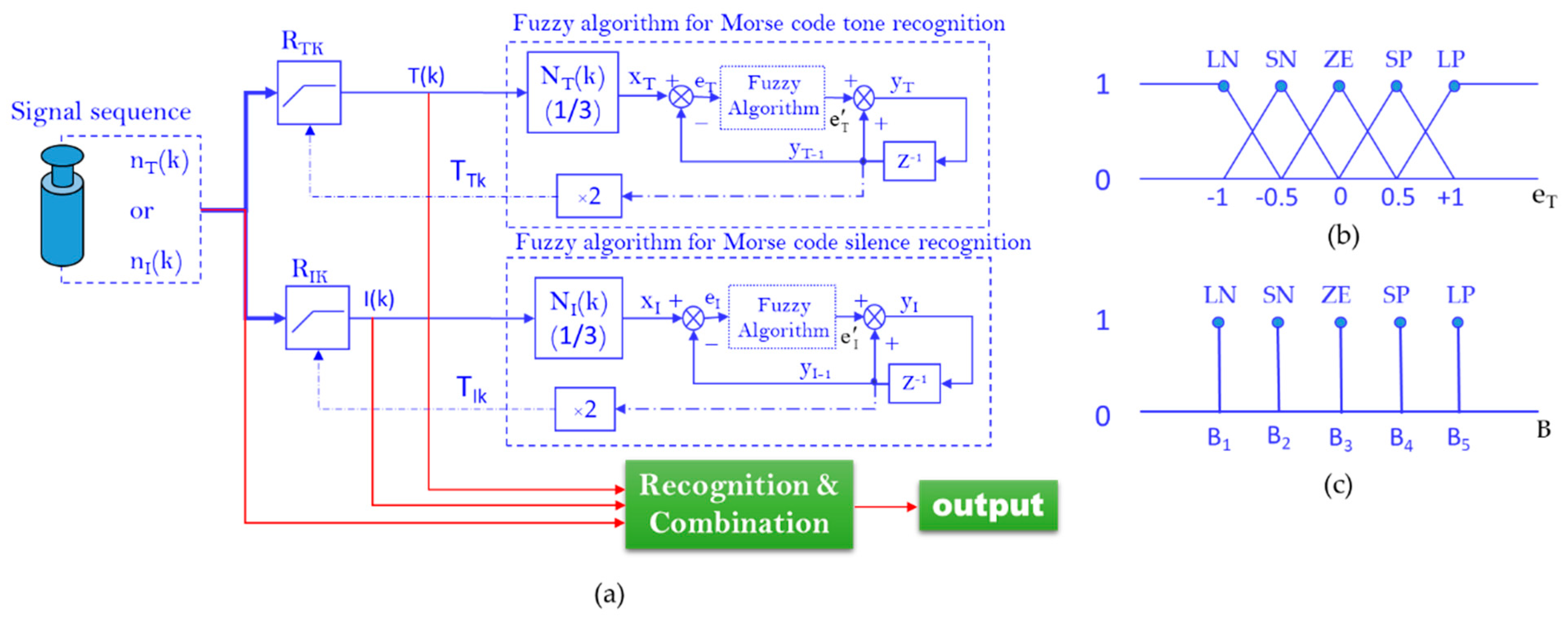

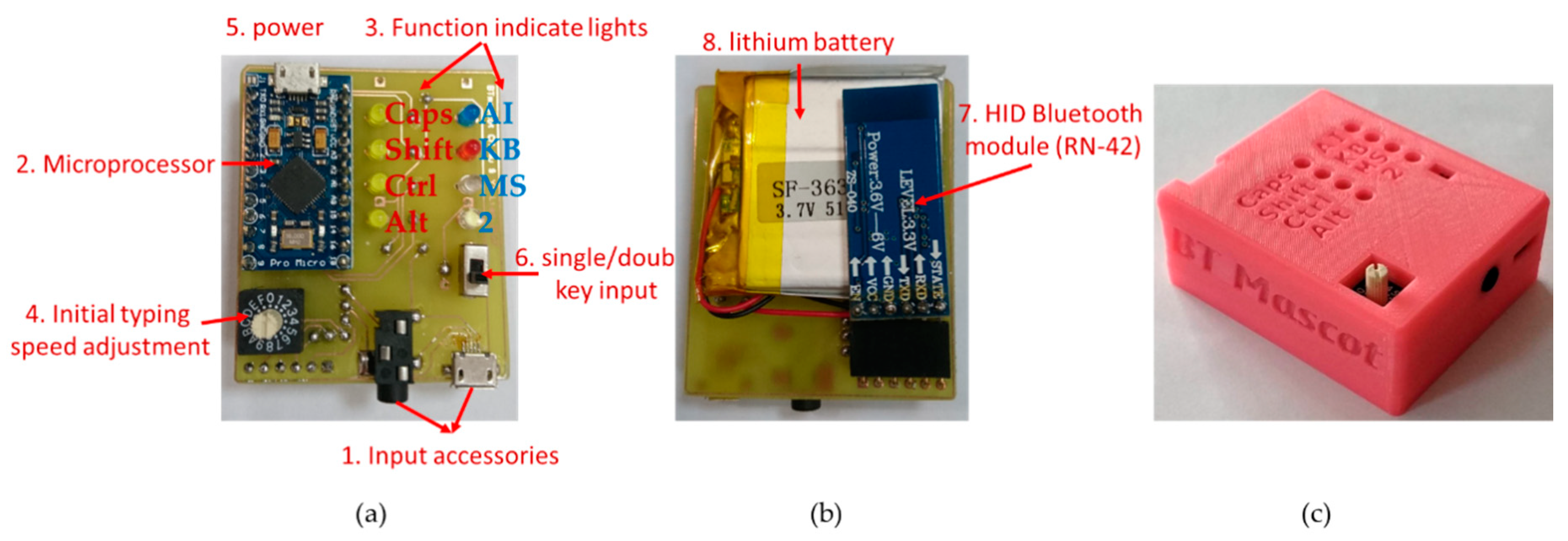

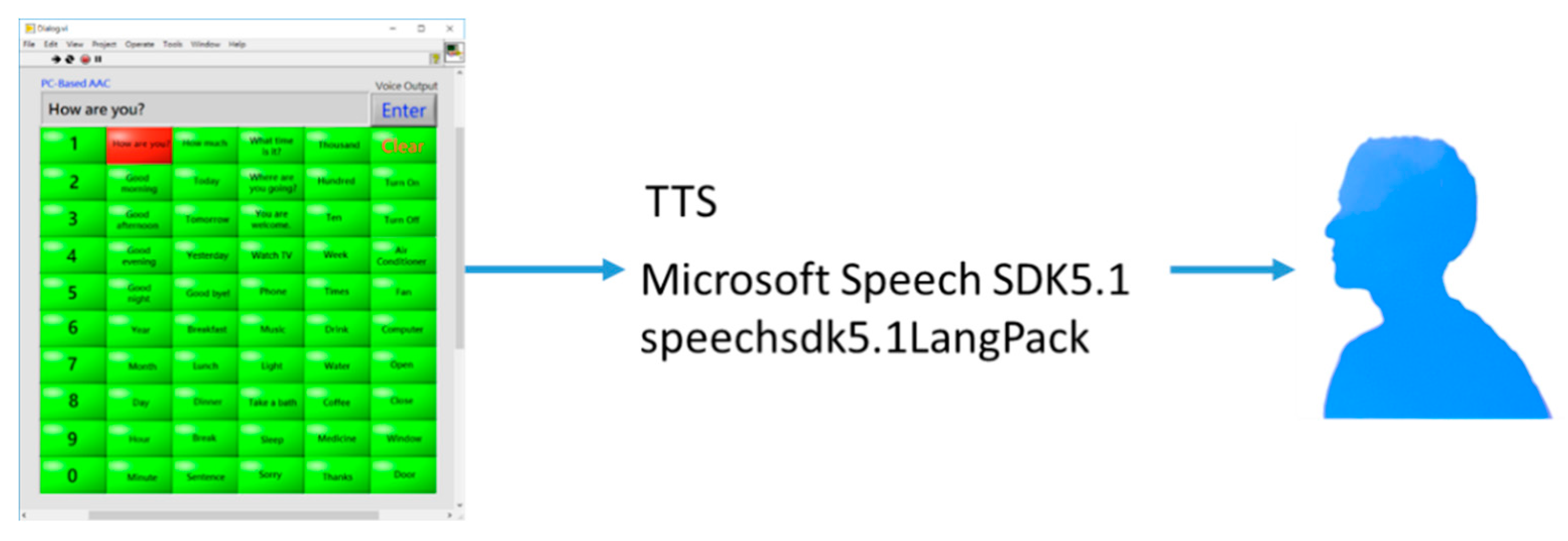

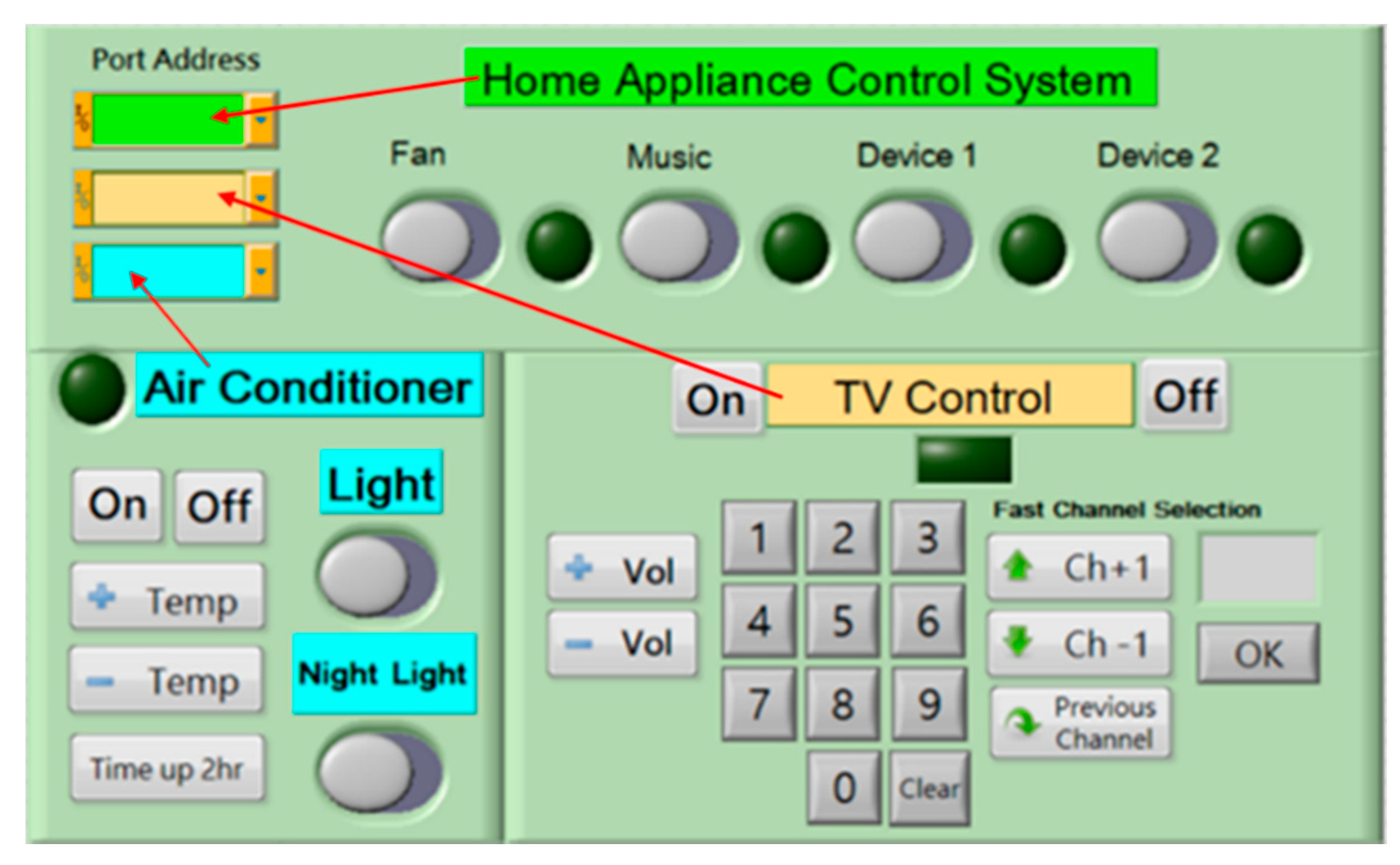

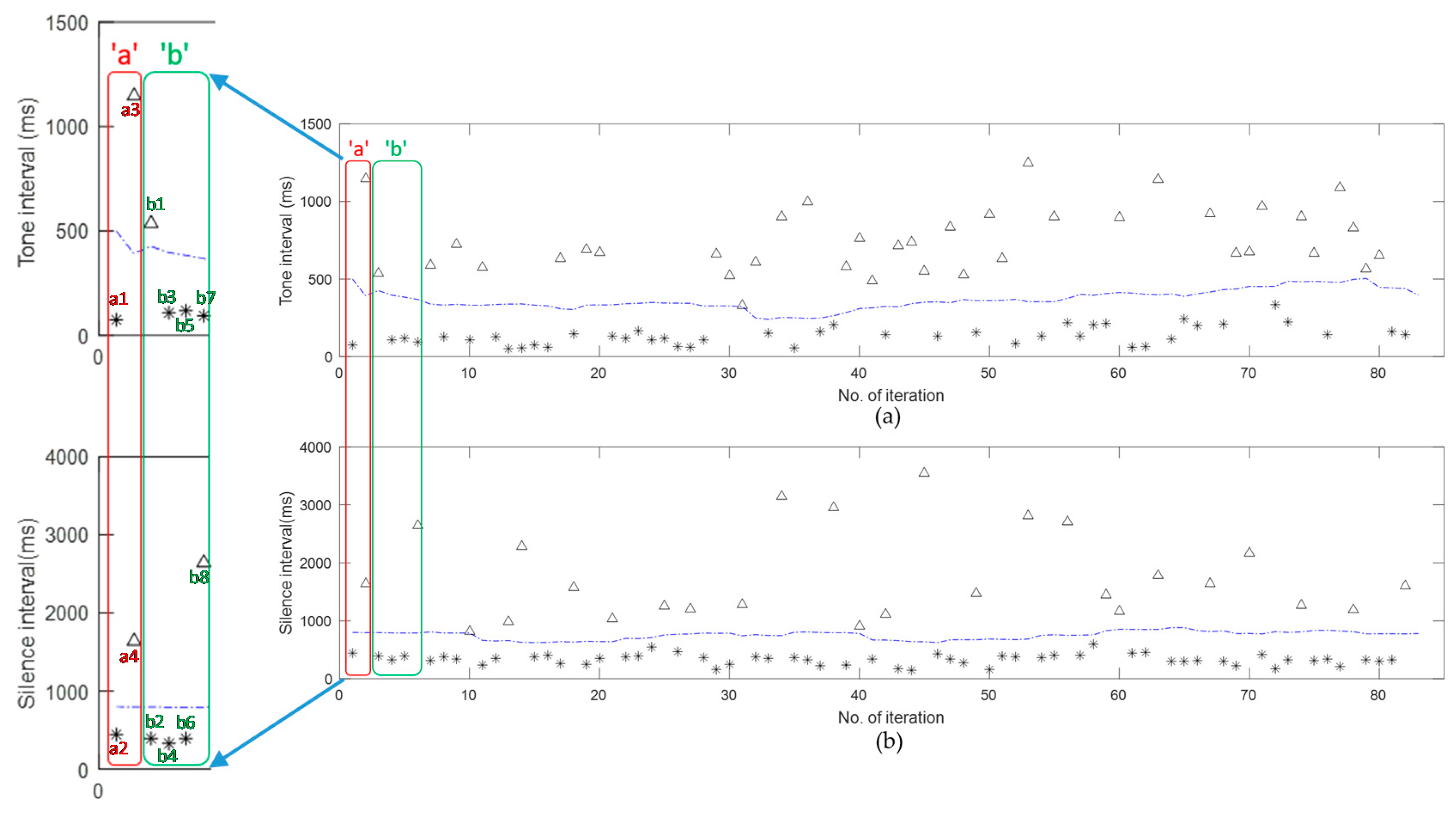

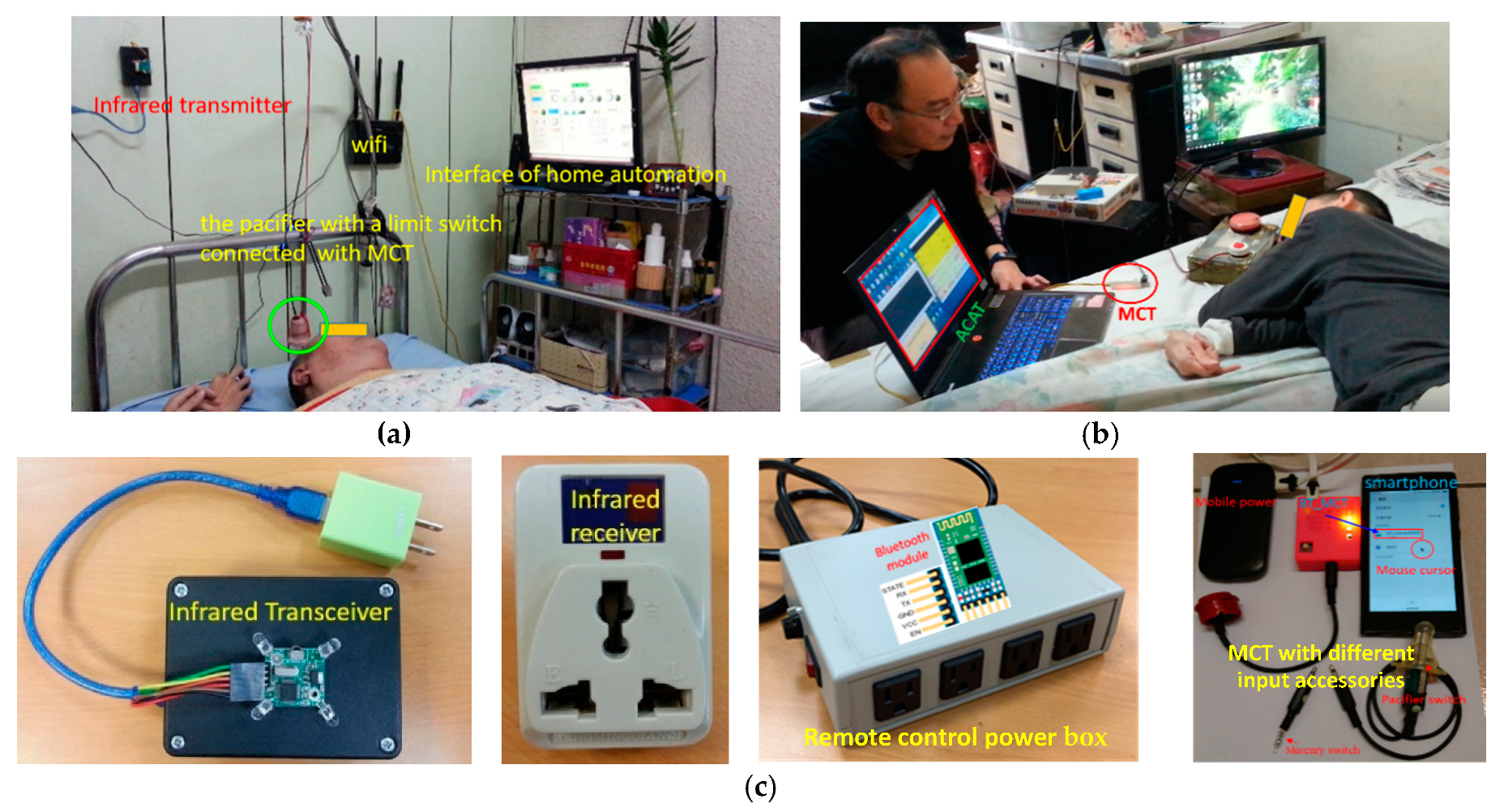

In this study, a wireless home assistive system (WHAS), including a Morse code translator (MCT) and human–machine interfaces, is developed to help the severely disabled. To meet the special physical requirements and limitations of the severely disabled, several types of assistive input accessories are adopted, such as mechanical switches, sensing switches, and bio-signal switches. The MCT is developed to help the severely disabled input commands and messages. In the MCT, Morse code represents the commands in a simple form, and a fuzzy algorithm-based Morse code recognition method is developed to identify the commands accurately. To assist the severely disabled in communicating with humans or machines, human–machine interfaces including a personal computer-based augmentative and alternative communication (PC-based AAC) interface and a home appliance control interface are developed. With the WHAS, the severely disabled therefore have additional ways to communicate with others and can do more things on their own in daily life, including entertainment.
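To make the idea of single-switch Morse code input concrete, the following minimal sketch decodes a sequence of switch presses into text. It is an illustration only and does not reproduce the fuzzy recognition algorithm of the MCT: it substitutes a simple fixed-threshold rule, and the function name `decode_presses`, the `unit` parameter, and the threshold of two time units are assumptions introduced here.

```python
# International Morse code table (letters only, for brevity).
MORSE_TO_CHAR = {
    ".-": "A", "-...": "B", "-.-.": "C", "-..": "D", ".": "E",
    "..-.": "F", "--.": "G", "....": "H", "..": "I", ".---": "J",
    "-.-": "K", ".-..": "L", "--": "M", "-.": "N", "---": "O",
    ".--.": "P", "--.-": "Q", ".-.": "R", "...": "S", "-": "T",
    "..-": "U", "...-": "V", ".--": "W", "-..-": "X", "-.--": "Y",
    "--..": "Z",
}


def decode_presses(presses, unit=0.3):
    """Decode (press_seconds, following_gap_seconds) pairs into text.

    Hypothetical fixed-threshold rule (not the paper's fuzzy method):
    a press shorter than 2 units is a dot, otherwise a dash; a gap of
    2 units or more ends the current character.
    """
    text = []
    symbol = ""
    for press, gap in presses:
        symbol += "." if press < 2 * unit else "-"
        if gap >= 2 * unit:
            # Unknown dot/dash sequences are rendered as "?".
            text.append(MORSE_TO_CHAR.get(symbol, "?"))
            symbol = ""
    if symbol:
        # Flush a character that was not followed by a long gap.
        text.append(MORSE_TO_CHAR.get(symbol, "?"))
    return "".join(text)


# Three short presses, three long presses, three short presses.
sos = [(0.2, 0.2), (0.2, 0.2), (0.2, 1.0),
       (0.9, 0.2), (0.9, 0.2), (0.9, 1.0),
       (0.2, 0.2), (0.2, 0.2), (0.2, 1.0)]
print(decode_presses(sos))  # SOS
```

A fixed threshold of this kind assumes a steady typing rhythm, which a severely disabled user may not be able to maintain; this is the kind of variability that an adaptive or fuzzy recognition scheme is intended to tolerate.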

The remainder of this paper is organized as follows. Section 2 describes the proposed WHAS, including MCT and the human–machine interface. To evaluate the proposed approach, Section 3 presents the results of a series of experiments, and some discussions are also described. Finally, the conclusions and possible improvements for the future development of this work are discussed in Section 4.