Performance Evaluation of Autonomous Driving Control Algorithm for a Crawler-Type Agricultural Vehicle Based on Low-Cost Multi-Sensor Fusion Positioning

Abstract

1. Introduction

2. Autonomous Driving Control Algorithm

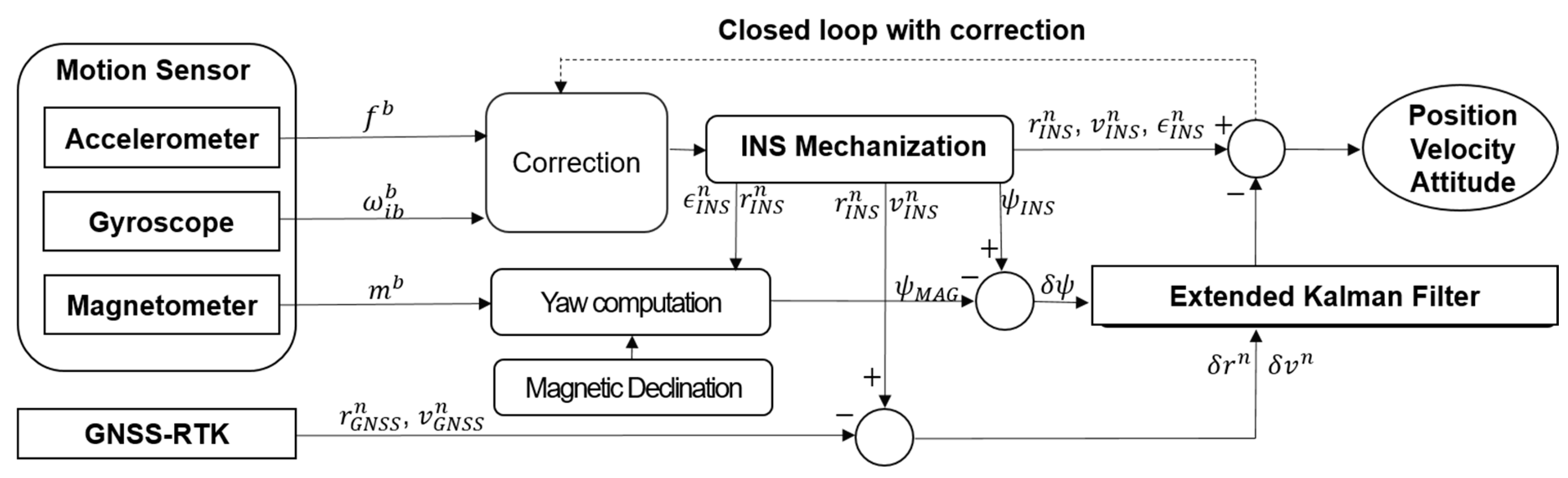

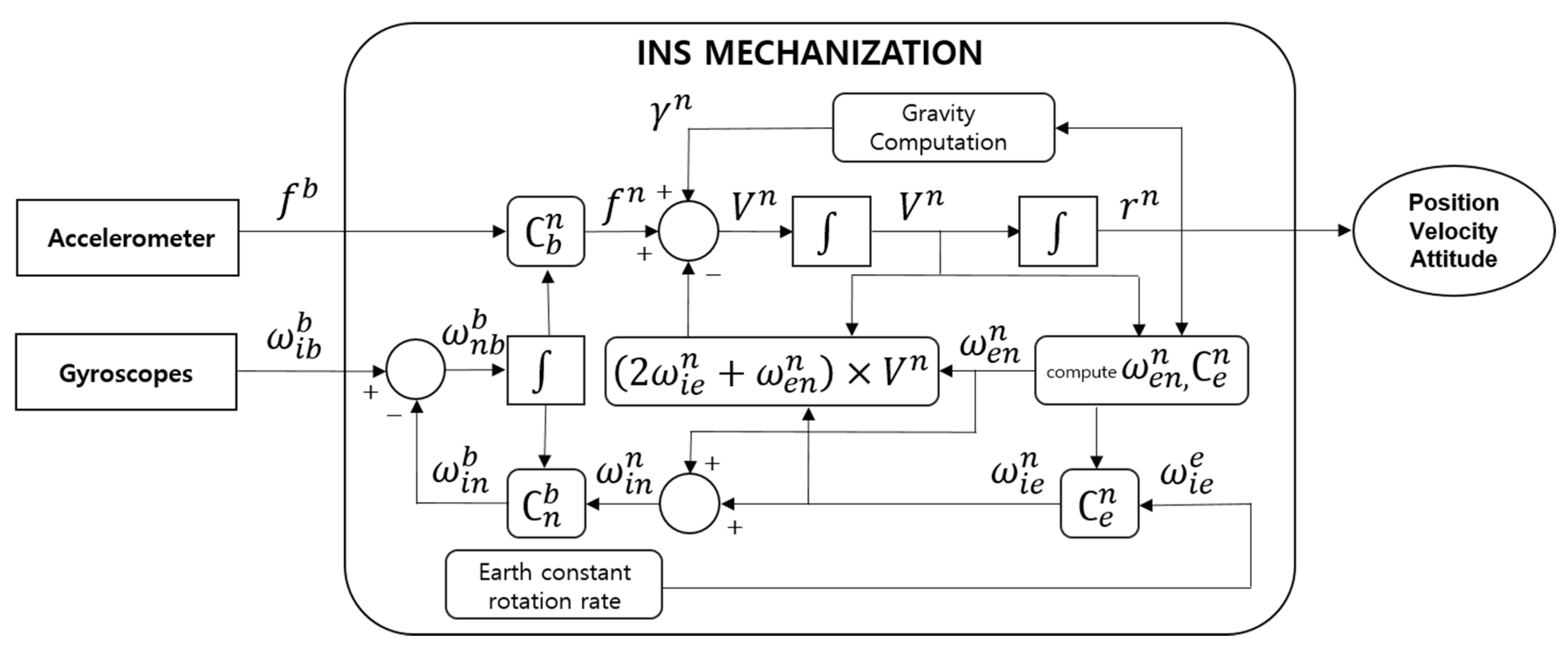

2.1. GNSS-RTK/Motion Sensor Integrated Positioning Algorithm

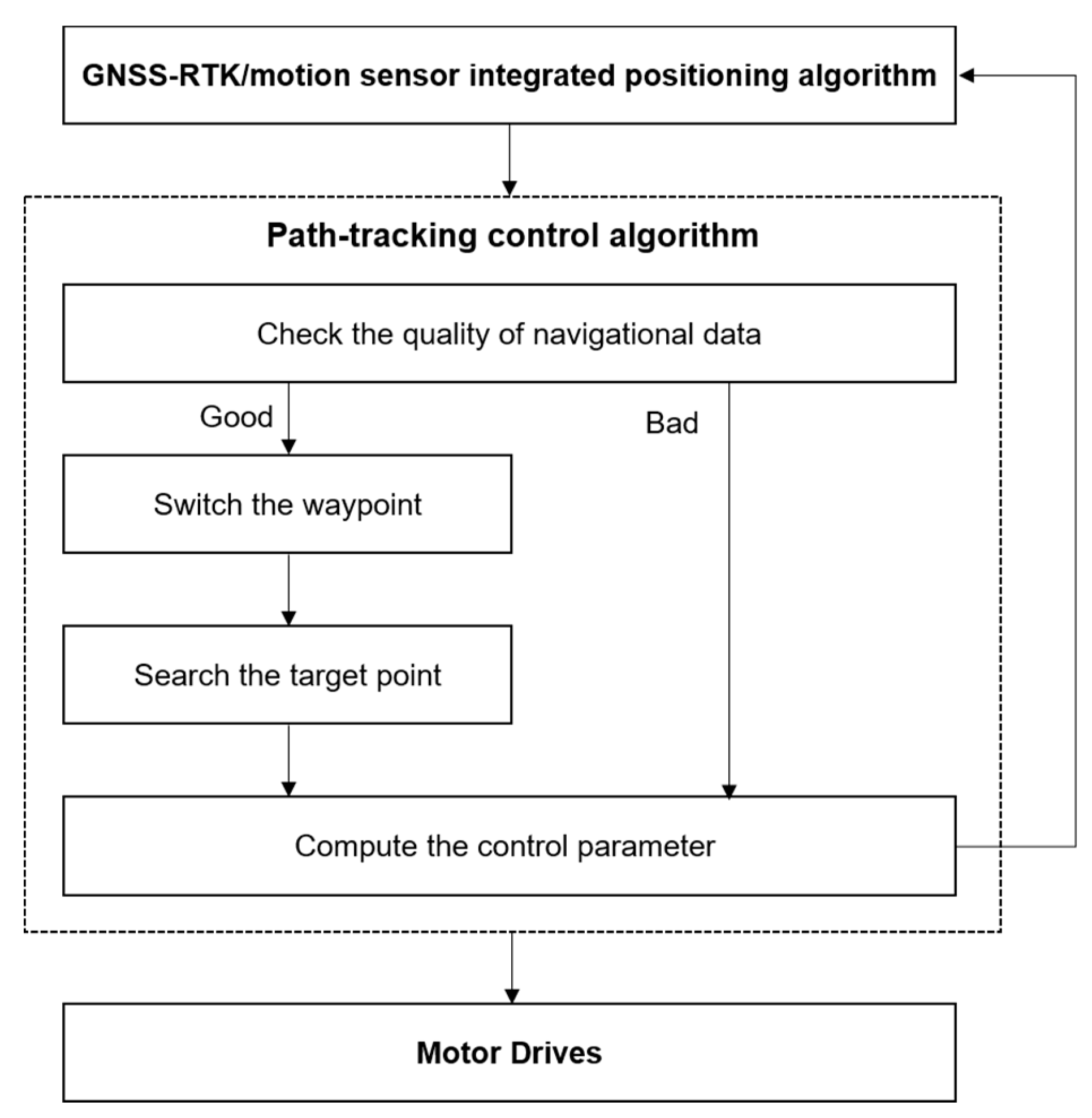

2.2. Path-Tracking Control Algorithm

3. Performance Evaluation of Autonomous Driving Control Algorithm

3.1. Test Description

3.2. Performance Evaluation

4. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Korea Rural Economic Institute. Agricultural Outlook 2015; Korea Rural Economic Institute: Naju-si, Korea, 2015; pp. 27–28.

- Alberto-Rodriguez, A.; Neri-Muñoz, M.; Ramos-Fernández, J.C.; Márquez-Vera, M.A.; Ramos-Velasco, L.E.; Díaz-Parra, O.; Hernández-Huerta, E. Review of control on agricultural robot tractors. Int. J. Comb. Optim. Probl. Inform. 2020, 11, 9–20.

- FUTURE FARMING. Available online: https://www.futurefarming.com/Machinery/Articles/2019/11/21-autonomous-tractor-projects-around-the-world-501448E/ (accessed on 30 June 2020).

- MAQUINAC. Available online: https://maquinac.com/2017/12/john-deere-presenta-tractor-autonomo-mas-potente-la-marca/ (accessed on 30 June 2020).

- MAQUINAC. Available online: https://maquinac.com/2016/08/case-ih-lanzo-su-primer-tractor-autonomo/ (accessed on 30 June 2020).

- YANMAR. Available online: https://www.yanmar.com/global/about/technology/technical_review/2019/0403_1.html (accessed on 30 June 2020).

- NEW HOLLAND AGRICULTURE. Available online: https://agriculture.newholland.com/apac/en-nz/about-us/whats-up/news-events/2017/new-holland-nhdrive-concept-autonomous-tractor (accessed on 30 June 2020).

- O’Connor, M.; Bell, T.; Elkaim, G.; Parkinson, B. Automatic steering of farm vehicles using GPS. In Proceedings of the 3rd International Conference on Precision Agriculture, Minneapolis, MN, USA, 23–26 June 1996.

- Stoll, A.; Kutzbach, H.D. Guidance of a forage harvester with GPS. Precis. Agric. 2000, 2, 281–291.

- Gan-Mor, S.; Clark, R.L.; Upchurch, B.L. Implement lateral position accuracy under RTK-GPS tractor guidance. Comput. Electron. Agric. 2007, 59, 31–38.

- Han, J.H.; Park, C.H.; Park, Y.J.; Kwon, J.H. Preliminary Results of the Development of a Single-Frequency GNSS RTK-Based Autonomous Driving System for a Speed Sprayer. J. Sens. 2019, 2019, 4687819.

- Spangenberg, M. Safe Navigation for Vehicles. Ph.D. Thesis, Toulouse University, Toulouse, France, 2009.

- Noguchi, N.; Reid, J.F.; Zhang, Q.; Will, J.D.; Ishii, K. Development of robot tractor based on RTK-GPS and gyroscope. In Proceedings of the 2001 ASAE Annual Meeting, Sacramento, CA, USA, 29 July–1 August 2001.

- Takai, R.; Yang, L.; Noguchi, N. Development of a crawler-type robot tractor using RTK-GPS and IMU. Eng. Agric. Environ. Food 2014, 7, 143–147.

- Xiang, Y.; Juan, D.U.; Duanyang, G.; Chengqian, J. Development of an automatically guided rice transplanter using RTK-GNSS and IMU. IFAC-PapersOnLine 2018, 51, 374–378.

- Han, J.H.; Park, C.H.; Hong, C.K.; Kwon, J.H. Performance Analysis of Two-Dimensional Dead Reckoning Based on Vehicle Dynamic Sensors during GNSS Outages. J. Sens. 2017, 2017, 9802610.

- Jekeli, C. Inertial Navigation Systems with Geodetic Applications; Walter de Gruyter: Berlin, Germany, 2001; pp. 101–138.

- Solimeno, A. Low-Cost INS/GPS Data Fusion with Extended Kalman Filter for Airborne Applications. Master’s Thesis, Universidade Técnica de Lisboa, Lisbon, Portugal, 2007.

- Shin, E. Accuracy Improvement of Low Cost INS/GPS for Land Applications. Master’s Thesis, The University of Calgary, Calgary, AB, Canada, 2001.

- Won, D.; Ahn, J.; Sung, S.; Heo, M.; Im, S.H.; Lee, Y.J. Performance improvement of inertial navigation system by using magnetometer with vehicle dynamic constraints. J. Sens. 2015, 2015, 435062.

- Godha, S.; Cannon, M.E. GPS/MEMS INS integrated system for navigation in urban areas. GPS Solut. 2007, 11, 193–203.

- Jensen, T.M. Waypoint-Following Guidance Based on Feasibility Algorithms. Master’s Thesis, Norwegian University of Science and Technology, Trondheim, Norway, 2011.

- u-blox ZED-F9P Module. Available online: https://www.u-blox.com/en/product/zed-f9p-module (accessed on 22 April 2020).

- Xsens MTi 1-Series. Available online: https://www.xsens.com/products/mti-1-series (accessed on 22 April 2020).

- Raspberry Pi 4. Available online: https://www.raspberrypi.org/products/raspberry-pi-4-model-b (accessed on 22 April 2020).

| Reference | Sensor | Target Vehicle | Error | Country |
|---|---|---|---|---|
| O’Connor et al. [8] | 4-antenna carrier-phase GPS system | Tractor | Less than 2.5 cm | United States |
| Stoll and Kutzbach [9] | GNSS-RTK | Automatic steering system | 2.5–6.9 cm | Germany |
| Gan-Mor et al. [10] | GNSS-RTK | Tractor | Centimeters | Israel |
| Han et al. [11] | Single-frequency GNSS-RTK | Speed sprayer | Centimeters | Korea |
| Noguchi et al. [13] | GNSS-RTK, a fiber optic gyroscope (FOG), and an IMU | Autonomous driving robot | 3 cm | Japan |
| Takai et al. [14] | GNSS-RTK and an IMU | Crawler-type tractor | 5 cm | Japan |
| Xiang et al. [15] | GNSS-RTK and an IMU | Rice transplanter | Less than 10 cm | China |

| Item | Contents |
|---|---|
| Drive system | Crawler |
| Dimension (length × width × height, mm) | 2183 × 1300 × 1241 |
| Engine | 48 V AC motor |
| Crawler track (length × width × tread, mm) | 1100 × 200 × 1100 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Han, J.-h.; Park, C.-h.; Kwon, J.H.; Lee, J.; Kim, T.S.; Jang, Y.Y. Performance Evaluation of Autonomous Driving Control Algorithm for a Crawler-Type Agricultural Vehicle Based on Low-Cost Multi-Sensor Fusion Positioning. Appl. Sci. 2020, 10, 4667. https://doi.org/10.3390/app10134667
