3.1. Definition of the Entities and How They Relate

In a group decision-making (GDM) problem, a set of decision-makers must jointly choose one alternative from a set of alternatives. There is also a set of criteria, and every criterion is related to every alternative. The decision matrix is formed by the relation between the criteria and the alternatives.

Definition 1. Let the decision matrix be defined over:

the set of criteria;

the set of alternatives.

Rule 1. Every criterion is related to every alternative, so each entry of the decision matrix is the value of a given criterion in a given alternative. No alternative may have values for criteria that are not defined in the problem.

Definition 2. A criterion consists of:

an identifier of the particular criterion;

the type of the criterion (Numeric, Boolean or Classificatory);

the greatness associated with the criterion (Maximization, Minimization or Subjective).

Our running example, choosing a restaurant, has nine criteria, defined as follows:

; //minutes

; //dollars

; //minutes

; //good, ok or poor

; //dollars

; //true or false

; //true or false

; //thai, italian or american

. //true or false

Definition 3. An alternative consists of an identifier of the particular alternative.

We can now define the desirable set of alternatives:

In our example, the decision matrix is as follows:

As the example shows, although criterion values can be Numeric, Boolean or Classificatory, they are all interpreted internally as Numeric. When the criterion type is Boolean, the values true and false are mapped to numbers; when the criterion type is Classificatory, the scale is converted into corresponding numeric values (in our example, one for each of good, ok and poor); and when the criterion greatness is Subjective, the value of the criterion in every alternative is always 1 (as is the case for the criterion cuisineStyle).
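The conversion of criterion values into numbers can be sketched as follows. This is a minimal illustration, not the authors' implementation: the Boolean mapping (true to 1, false to 0), the criterion name `quality` and the numeric values chosen for good/ok/poor are assumptions, since the exact values are not reproduced here. Only the rule that Subjective criteria are always instantiated as 1 comes from the text.

```python
from dataclasses import dataclass
from enum import Enum


class CriterionType(Enum):
    NUMERIC = "Numeric"
    BOOLEAN = "Boolean"
    CLASSIFICATORY = "Classificatory"


class Greatness(Enum):
    MAXIMIZATION = "Maximization"
    MINIMIZATION = "Minimization"
    SUBJECTIVE = "Subjective"


@dataclass(frozen=True)
class Criterion:
    identifier: str
    ctype: CriterionType
    greatness: Greatness
    # Label -> number pairs for Classificatory criteria (values are hypothetical).
    scale: tuple = ()


# ASSUMPTION: true -> 1 and false -> 0.
BOOLEAN_VALUES = {True: 1.0, False: 0.0}


def to_numeric(criterion: Criterion, raw) -> float:
    """Interpret a raw criterion value (Numeric, Boolean or Classificatory) as a number."""
    if criterion.greatness is Greatness.SUBJECTIVE:
        return 1.0  # Subjective criteria are always instantiated as 1
    if criterion.ctype is CriterionType.BOOLEAN:
        return BOOLEAN_VALUES[bool(raw)]
    if criterion.ctype is CriterionType.CLASSIFICATORY:
        return dict(criterion.scale)[raw]
    return float(raw)


# Usage with hypothetical criteria: "quality" uses the good/ok/poor scale.
quality = Criterion("quality", CriterionType.CLASSIFICATORY, Greatness.MAXIMIZATION,
                    scale=(("poor", 1.0), ("ok", 2.0), ("good", 3.0)))
cuisine = Criterion("cuisineStyle", CriterionType.CLASSIFICATORY, Greatness.SUBJECTIVE)
print(to_numeric(quality, "ok"), to_numeric(cuisine, "thai"))
```

Keeping type and greatness as separate enums mirrors Definition 2, where they are independent attributes: cuisineStyle is Classificatory in type but Subjective in greatness, so the greatness check runs first.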

Most MCDA methods consider only the decision-makers’ preferences on alternatives and criteria, i.e., they treat the decision process as a fully rational one. However, many human particularities affect decision processes and are important to them. According to Richard Thaler, winner of the 2017 Nobel Prize in Economics, much more should be considered, and a decision process should not be seen only from a rational perspective. In this work, we follow that vision: we consider a heterogeneous group of decision-makers and, consequently, a set of attributes that models each decision-maker according to his/her own particularities, interests and intentions.

Next, we introduce the definition of a decision-maker and the definitions associated with the representation of decision-makers.

Definition 4. Let the group be a set of decision-makers.

Definition 5. Let an alternative weight be the weight or preference given by a decision-maker to a certain alternative.

Rule 2. A decision-maker can define a set of alternative weights.

Definition 6. Let a criterion weight be the weight or preference given by a decision-maker to a certain criterion.

Rule 3. A decision-maker can define a set of criterion weights.

Several strategies can be applied to help decision-makers express their preferences regarding alternatives and criteria [25,31,32]. One of the preference structures most used in GDM problems defined under uncertainty is the so-called fuzzy preference relation [33], but there are others, such as the multiplicative preference relation [34] and the linguistic preference relation [35]. In this work, we follow the approach presented in [36], which consists of rating each preference individually on an adaptation of the Visual Analog Scale; nevertheless, because the alternatives and criteria are aligned on the screen, decision-makers unconsciously compare them visually with each other. As we will see later, the decision-makers’ preferences regarding alternatives and criteria are used to define the importance of the alternatives and criteria according to a specific scale. Decision-makers are not obliged to define a preference for every alternative and criterion; however, alternatives and criteria without a defined preference are internally considered as 0.

Definition 7. A decision-maker consists of:

an identifier of the particular decision-maker;

the set of decision-makers that the decision-maker considers credible;

the Concern for Self value of the decision-maker’s chosen style of behavior;

the Concern for Others value of the decision-maker’s chosen style of behavior;

the decision-maker’s expertise level.

Besides the unique identifier and the preferences for alternatives and criteria, each decision-maker can define the set of decision-makers (from among those involved in the decision process) that he/she considers credible in a specific decision problem. Credibility is multifold, i.e., a decision-maker can identify another decision-maker as credible for different reasons, such as reputation, expertise level and/or hierarchy. Following the behavior styles proposed in [15], which can be used to model decision-makers according to their intentions, we can represent the decision-maker’s concern with attaining his/her own objectives (Concern for Self) and the other decision-makers’ objectives (Concern for Others). This is possible because the authors of [15] found solid “operating values” for the five behavior styles (see Table 2). The proposed behavior styles vary along four dimensions, two of which are Concern for Self and Concern for Others. The “operating values” resulted from a questionnaire in which participants were asked to rate the five behavior styles on the four dimensions. The objective was to understand whether participants shared a similar perception of the meaning of each behavior style. The results showed high levels of agreement, which allowed standard values to be defined for each behavior style.

Lastly, it is possible to define an expertise level for each decision-maker. The decision-maker’s expertise level is expressed in a [0, 1] interval, where 0 means a very low level of expertise and 1 stands for a very high level of expertise.

For our example, let us consider three decision-makers:

;

;

.

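Definition 8. Let the set of alternative weights of a set of decision-makers gather the alternative weights defined by each of its decision-makers.

Definition 9. Let the set of criterion weights of a set of decision-makers gather the criterion weights defined by each of its decision-makers.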

Definition 10. Let the alternatives preference matrix relate each alternative to the corresponding evaluation made by each decision-maker.

Definition 11. Let the criteria preference matrix relate each criterion to the corresponding evaluation made by each decision-maker.
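Definitions 10 and 11 describe the same structure, so one helper can build either matrix. The sketch below assumes rows are alternatives (or criteria) and columns are decision-makers. It applies the rule stated earlier that a missing preference is treated as 0. The identifiers `a1`, `dm1` and the weights are hypothetical.

```python
def preference_matrix(items, prefs_by_dm):
    """Relate each item (alternative or criterion) to each decision-maker's evaluation.

    items: ordered list of alternative (or criterion) ids, giving the matrix rows.
    prefs_by_dm: decision-maker id -> {item id: weight}; dict order gives the columns.
    Items without a defined preference are treated as 0.
    """
    dms = list(prefs_by_dm)
    return [[prefs_by_dm[dm].get(item, 0.0) for dm in dms] for item in items]


# Hypothetical identifiers and weights, for illustration only.
prefs = {"dm1": {"a1": 0.4, "a3": 0.8}, "dm2": {"a1": 0.5, "a2": 0.5, "a3": 0.9}}
print(preference_matrix(["a1", "a2", "a3"], prefs))
# [[0.4, 0.5], [0.0, 0.5], [0.8, 0.9]]
```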

So far, we have presented the main entities and values used in our example. Next, we show how to process these data in order to derive new knowledge, reduce the decision problem’s complexity and generate appropriate recommendations.

3.2. Forecasting the Importance of Each Alternative/Criterion from the Perspective of Each Decision-Maker

The decision-makers’ preferences are no more than a numerical evaluation of alternatives and criteria. They reveal which alternatives and criteria are more and less preferred, but they say little about how important an alternative/criterion is to a specific decision-maker. To forecast the importance of each alternative/criterion from the perspective of each decision-maker, we first readjust the decision-makers’ preferences according to the context, i.e., we include the irrational side of the decision in the computation. To do so, we weigh each decision-maker’s preferences regarding the alternatives with his/her Concern for Self, Concern for Others and expertise level, and with the preferences of the decision-makers he/she marked as credible.

Definition 12. The readjustment that relates the decision-maker’s style of behavior, expertise level and the decision-makers he/she considers credible is defined as follows:

where:

the first term is the sum of the weights given to the alternative by each of the decision-makers considered credible;

the second term is the number of credible decision-makers considered;

the third term is the inverse of the decision-maker’s expertise level.

Each original preference is combined with the decision-maker’s Concern for Self and expertise level, while the average preference given to the same alternative by the decision-makers he/she marked as credible is combined with the decision-maker’s Concern for Others and inverse expertise level. After this readjustment, the new value reflects not only the decision-makers’ preferences but also the context and their motivations.
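The readjustment can be illustrated with the hypothetical sketch below. The exact formula of Definition 12 is not reproduced here, so the weighted-sum form is an assumption. It follows only the pairing described in the text: own preference with Concern for Self and expertise, and the credible decision-makers' average with Concern for Others and inverse expertise. The inverse expertise is also assumed to be `1 - expertise`. The `DecisionMaker` fields mirror Definition 7, while the numeric values in the usage are illustrative and are not the Table 2 operating values.

```python
from dataclasses import dataclass, field


@dataclass
class DecisionMaker:
    identifier: str
    concern_self: float    # Concern for Self of the chosen behavior style
    concern_others: float  # Concern for Others of the chosen behavior style
    expertise: float       # expertise level in [0, 1]
    credible: set = field(default_factory=set)   # ids of decision-makers deemed credible
    weights: dict = field(default_factory=dict)  # alternative id -> preference


def readjust(dm, alternative, group):
    """HYPOTHETICAL readjustment of dm's preference for `alternative`.

    Assumed form: CS * expertise * own + CO * (1 - expertise) * avg_credible.
    """
    own = dm.weights.get(alternative, 0.0)  # undefined preferences count as 0
    others = [group[c].weights.get(alternative, 0.0) for c in dm.credible]
    avg_credible = sum(others) / len(others) if others else 0.0
    inverse_expertise = 1.0 - dm.expertise
    return (dm.concern_self * dm.expertise * own
            + dm.concern_others * inverse_expertise * avg_credible)


# Illustrative values only.
group = {
    "dm1": DecisionMaker("dm1", 1.0, 0.5, 0.8, {"dm2"}, {"a1": 0.5}),
    "dm2": DecisionMaker("dm2", 0.5, 0.5, 0.5, set(), {"a1": 1.0}),
}
print(round(readjust(group["dm1"], "a1", group), 6))  # 0.8*0.5 + 0.5*0.2*1.0
```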

After the decision-makers’ preferences are readjusted, we examine the difference between the alternative/criterion with the highest evaluation and the one with the lowest evaluation, since we believe the evaluation range used by a decision-maker can be an important indicator.

Definition 13. Let a function return the difference (according to the preferences defined by a decision-maker) between the maximum and minimum weights in a set of weights, i.e., a list of alternative or criterion weights. Applied to a decision-maker’s alternative weights, it returns the difference between the alternative with the highest weight and the alternative with the lowest weight; applied to the criterion weights, it returns the difference between the criterion with the highest weight and the criterion with the lowest weight. The result is classified into one of the five levels presented in Table 3, which yields the corresponding level value.

Definition 14. Let a function return the level value corresponding to a given difference, according to Table 3.

The level value offers another view of the preferences defined by the decision-makers. Suppose one decision-maker defined the preferences [0.4, 0.4, 0.8] and another defined the preferences [0.5, 0.5, 0.9], both for the same three alternatives, respectively. Can we say that the second decision-maker likes these alternatives more than the first one does? We believe not, because people perceive the world around them differently. This is why we try to interpret each decision-maker’s configuration in terms of meaning.
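The two functions of Definitions 13 and 14 can be sketched as below. The range computation follows the text directly. The cut-points, however, are placeholders: Table 3 defines five levels, but its boundaries are not reproduced here, so `LEVEL_CUTS` is purely illustrative. The usage reproduces the example above: both preference lists have the same range, so both decision-makers fall in the same level.

```python
from bisect import bisect_right

# PLACEHOLDER cut-points standing in for the Table 3 boundaries (illustrative only).
LEVEL_CUTS = (0.1, 0.3, 0.5, 0.7)


def weight_range(weights):
    """Difference between the highest and the lowest weight in a list (Definition 13)."""
    return max(weights) - min(weights)


def level(rng, cuts=LEVEL_CUTS):
    """Map a range to one of five levels, 1..5 (Definition 14, with assumed cuts)."""
    return bisect_right(cuts, rng) + 1


dm_a = [0.4, 0.4, 0.8]
dm_b = [0.5, 0.5, 0.9]
print(weight_range(dm_a), weight_range(dm_b))  # both ranges are 0.4
print(level(weight_range(dm_a)), level(weight_range(dm_b)))
```

`bisect_right` returns how many cut-points are less than or equal to the range, so adding 1 gives a level between 1 and 5.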

After identifying the level value, we can execute Algorithm 1 to find the predicted importance that each criterion and alternative has for each decision-maker. Note that this prediction is based on the readjusted preferences, which consider the decision problem as a whole.

Algorithm 1 is also used to classify the importance of each criterion, but in that case it is applied to each decision-maker’s criterion weights, i.e., the weights considered belong to the set of criterion weights. Once the predicted importance of each alternative and of each criterion to each decision-maker has been identified, these values can be classified (the meaning of each value is presented in Table 4).

| Algorithm 1. Alternatives’ importance classification algorithm. |

| 1: foreach |

| 2: foreach |

| 3: |

| 4: while do |

| 5: |

| 6: end while |

| 7: |

| 8: end foreach |

| 9: end foreach |

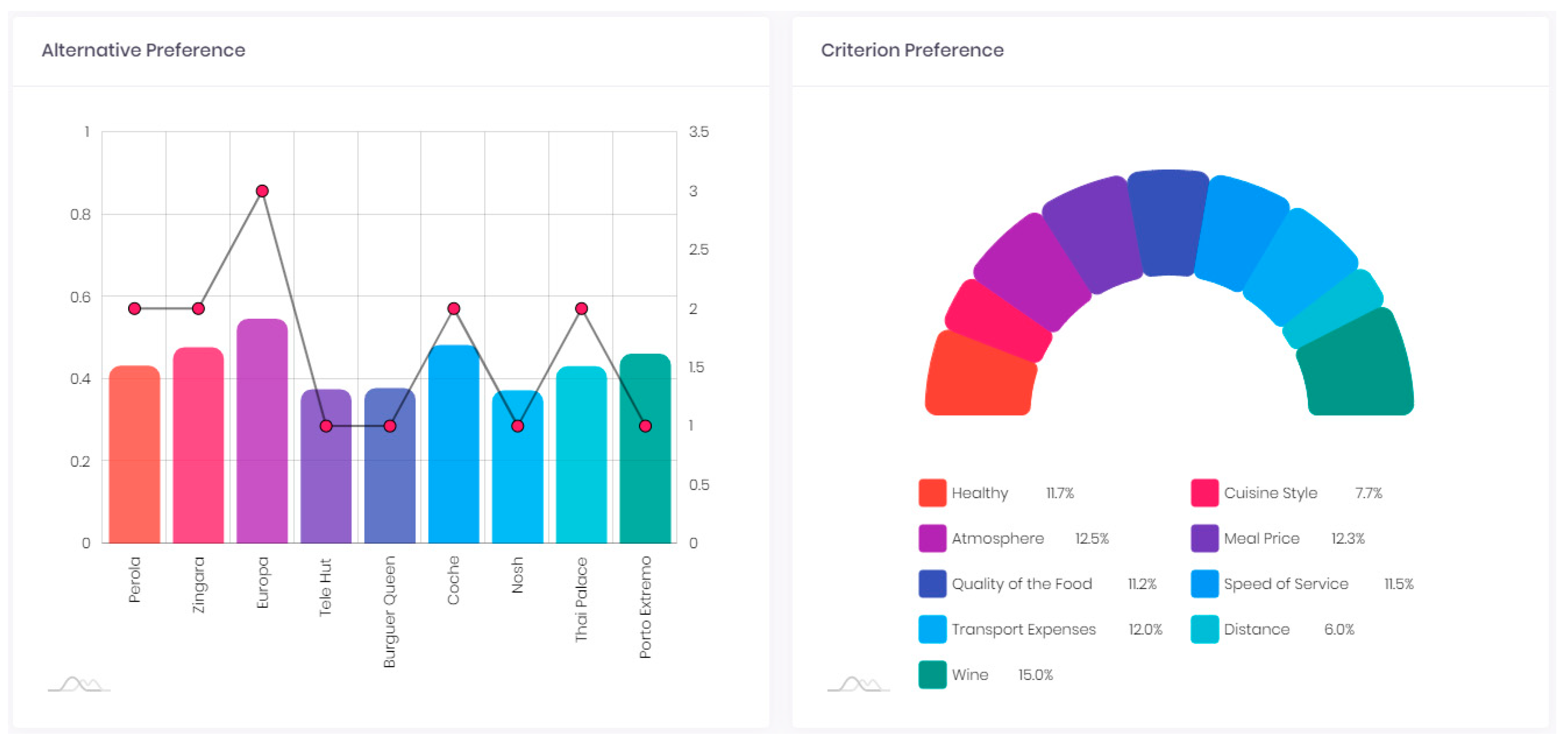

Returning to our example, Table 5 presents the predicted importance of the decision problem’s alternatives from the perspective of each decision-maker.

Table 6 presents the predicted importance of the decision problem’s criteria from the perspective of each decision-maker.

3.3. Recommending Alternatives to Reject and as Solution

With the predicted importance values calculated, we now have the data needed by the recommendation methods, i.e., the methods that recommend alternatives to reject, in order to reduce the decision problem’s complexity, and that recommend an alternative as the solution.

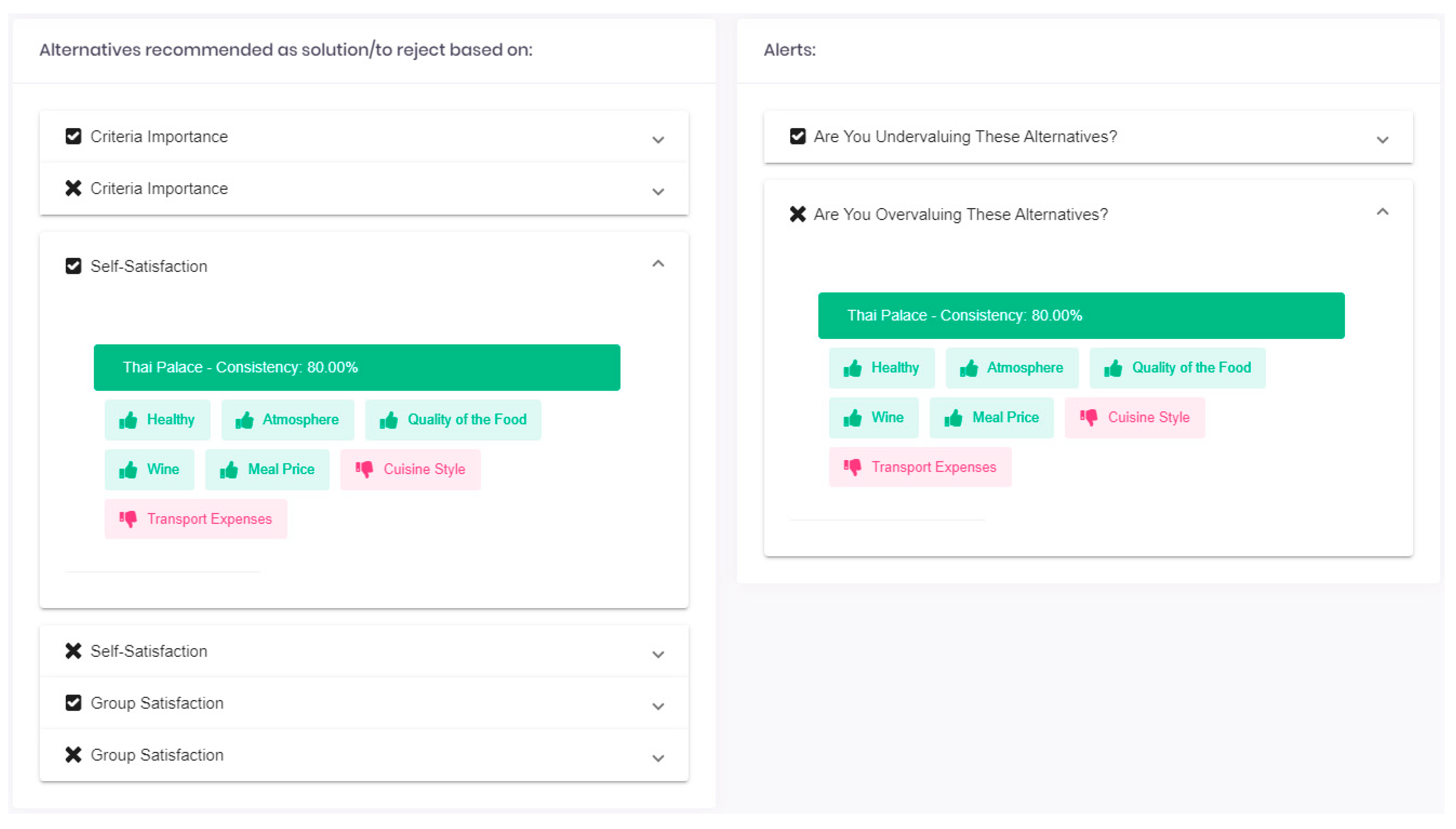

3.3.1. Recommending Based on the Alternatives/Criteria Predicted Importance

The first strategy consists of recommending alternatives to reject, and alternatives to propose as the solution, based on the predicted importance values.

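Definition 15. Let the alternatives importance set of a decision-maker contain the predicted importance values of all the alternatives to that decision-maker.

Definition 16. Let the criteria importance set of a decision-maker contain the predicted importance values of all the criteria to that decision-maker.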

Definition 17. Let the alternatives evaluation matrix relate each alternative to its predicted importance for each decision-maker.

After defining the evaluation matrix, we can execute Algorithm 2, which selects the alternatives with the worst classification as candidates for rejection, based on their importance values.

| Algorithm 2. Algorithm for selecting the alternatives candidate to rejection. |

| 1: |

| 2: while do |

| 3: foreach |

| 4: |

| 5: foreach |

| 6: if then |

| 7: end foreach |

| 8: if then insert into |

| 9: end foreach |

| 10: |

| 11: end while |

Algorithm 2 iterates through the evaluation matrix, checking the importance values of all alternatives in order to find at least one alternative whose importance has the lowest possible value (which corresponds to the Insignificant classification) for all the decision-makers. If no such alternative is found, the algorithm iterates again with a higher threshold, and the process is repeated until at least one alternative is found. In the worst-case scenario, every alternative is classified as Very Important by at least one of the decision-makers; in that case, all alternatives are selected.
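The threshold-raising loop just described can be sketched as follows. This is our reading of the prose, not a transcription of Algorithm 2. It assumes the Table 4 scale runs from 1 (Insignificant) to 5 (Very Important), with 4 and 5 being Important and Very Important. It also assumes that "has the current value for all decision-makers" means "is at most the current threshold". The evaluation matrix is represented as a dict from a hypothetical alternative id to one importance value per decision-maker.

```python
INSIGNIFICANT, VERY_IMPORTANT = 1, 5  # assumed ends of the Table 4 scale


def rejection_candidates(evaluation):
    """Select alternatives rated at or below the current threshold by every decision-maker.

    evaluation: alternative id -> list of predicted importance values (one per decision-maker).
    The threshold starts at Insignificant and is raised until something is selected.
    """
    threshold = INSIGNIFICANT
    selected = []
    while not selected and threshold <= VERY_IMPORTANT:
        for alternative, importances in evaluation.items():
            if all(i <= threshold for i in importances):
                selected.append(alternative)
        threshold += 1
    return selected


# Hypothetical importance values for three decision-makers.
print(rejection_candidates({"a1": [1, 2, 1], "a2": [1, 1, 1], "a3": [5, 4, 3]}))  # ['a2']
print(rejection_candidates({"a1": [2, 3], "a2": [2, 2]}))  # ['a2'] after one raise
```

The `threshold <= VERY_IMPORTANT` guard only matters for an empty matrix. Otherwise, once the threshold reaches Very Important, every alternative satisfies the test, which matches the worst case described above.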

In our example, the Algorithm 2 output is: .

If the intention is to select an alternative to propose as the solution, we can execute Algorithm 3, which selects the alternatives with the best classification as candidate solutions, based on their importance values.

Algorithm 3 iterates through the evaluation matrix, checking the importance values of all alternatives in order to find at least one alternative whose importance has the highest possible value (which corresponds to the Very Important classification) for all the decision-makers.

| Algorithm 3. Algorithm for selecting the alternatives as candidate solutions. |

| 1: |

| 2: while do |

| 3: foreach |

| 4: |

| 5: foreach |

| 6: if then |

| 7: end foreach |

| 8: if then insert into |

| 9: end foreach |

| 10: |

| 11: end while |

If no such alternative is found, the algorithm iterates again with a lower threshold, and the process is repeated until at least one alternative is found. In the worst-case scenario, every alternative is classified as Insignificant by at least one of the decision-makers; in that case, all alternatives are selected.
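Algorithm 3 mirrors Algorithm 2, with the threshold lowered instead of raised. The sketch below makes the same assumptions as a reading of the prose: a 1 (Insignificant) to 5 (Very Important) scale, an "at least the current threshold" test, and hypothetical alternative ids.

```python
INSIGNIFICANT, VERY_IMPORTANT = 1, 5  # assumed ends of the Table 4 scale


def solution_candidates(evaluation):
    """Select alternatives rated at or above the current threshold by every decision-maker.

    evaluation: alternative id -> list of predicted importance values (one per decision-maker).
    The threshold starts at Very Important and is lowered until something is selected.
    """
    threshold = VERY_IMPORTANT
    selected = []
    while not selected and threshold >= INSIGNIFICANT:
        for alternative, importances in evaluation.items():
            if all(i >= threshold for i in importances):
                selected.append(alternative)
        threshold -= 1
    return selected


print(solution_candidates({"a1": [5, 4, 5], "a2": [5, 5, 5]}))  # ['a2']
print(solution_candidates({"a1": [4, 4], "a2": [3, 5]}))  # ['a1'] after one decrease
```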

In our example, the Algorithm 3 output is: .

There may be cases where several alternatives are added to the list of rejection candidates or to the list of candidate solutions. For those cases, we propose a consistency method that recommends rejecting the least consistent alternative(s) or proposing the most consistent alternative(s).

For each criterion, the consistency method starts by computing the difference between the importance of the criterion to an alternative and the predicted importance of that criterion to each decision-maker. It considers only the decision-makers for whom the predicted importance of the criterion is higher than 3 (i.e., Important or Very Important). These differences are then added, and the result is averaged over the decision-makers (see Definitions 22 and 23). Next, we show how the importance of a criterion to an alternative is calculated.

First, we normalize the decision matrix to make the criterion values comparable with each other, i.e., to obtain the relevance of each criterion in each alternative. Normalization helps to understand what makes an alternative better than another.

Definition 18. Let the normalized decision matrix be the normalized version of the decision matrix. The normalized decision matrix for our example is presented in Table 7.

The next step applies to the normalized decision matrix a process similar to the one used to define the importance of the alternatives and criteria to each decision-maker. For each criterion, we calculate the difference between the highest and the lowest value of that criterion across the alternatives. We then use Table 3 to find the level value, followed by Algorithm 4 to define the importance levels.

| Algorithm 4. The relevance that the value of each criterion has in alternative based on an overall appreciation of the criterion value in all alternatives. |

| 1: foreach |

| 2: foreach |

| 3: |

| 4: while do |

| 5: |

| 6: end while |

| 7: |

| 8: end foreach |

| 9: end foreach |

Table 8 presents the importance of each criterion to the existing alternatives.

Definition 19. Let the criteria importance set of an alternative contain the importance values of all the criteria to that alternative.

After defining the criteria importance for all the alternatives, we can now proceed to the definition of the consistency method.

Definition 20. Let a function return the difference between the importance of a criterion to an alternative and the importance of the same criterion to a decision-maker.

As mentioned above, the consistency method only considers these differences when the predicted importance of the criterion to the decision-maker is higher than 3. After calculating the criteria consistency for each decision-maker, we can define a consistency matrix for each decision-maker.

Definition 21. Let the consistency matrix of a decision-maker hold the consistency value of each criterion for each alternative.

Definition 22. Let a function return the sum of all consistency values of an alternative for a decision-maker. Applying this function, for each decision-maker, to the alternatives in the list of rejection candidates gives:

;

;

.

Applying the same function, for each decision-maker, to the alternatives in the list of candidate solutions gives:

;

;

;

;

;

.

Definition 23. Let a function return the average, over all decision-makers, of the sums defined in Definition 22. In our example, this average identifies the alternative proposed for rejection. Regarding the candidate solutions, two alternatives would be proposed as possible solutions.
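Definitions 20 to 23 can be combined into the following sketch. Only the criteria that a decision-maker rates above 3 contribute, the differences are summed per decision-maker, and the sums are averaged over all decision-makers. Two points are assumptions, not taken from the text. First, the difference is signed (alternative importance minus decision-maker importance). Second, the least consistent alternative is the one with the lowest average and the most consistent is the one with the highest. Criterion and alternative ids are hypothetical.

```python
def consistency_sum(alt_importance, dm_importance, threshold=3):
    """Definitions 20 and 22: summed differences for one alternative and one decision-maker.

    alt_importance / dm_importance: criterion id -> importance (1..5).
    Only criteria the decision-maker rates above `threshold` are counted.
    """
    return sum(alt_importance[c] - imp
               for c, imp in dm_importance.items() if imp > threshold)


def average_consistency(alt_importance, dms_importance):
    """Definition 23: mean of the consistency sums over all decision-makers."""
    sums = [consistency_sum(alt_importance, dm) for dm in dms_importance]
    return sum(sums) / len(sums)


def pick(candidates, alt_importances, dms_importance, reject=True):
    """ASSUMPTION: reject the lowest average, propose the highest; ties are all returned."""
    scores = {a: average_consistency(alt_importances[a], dms_importance) for a in candidates}
    best = min(scores.values()) if reject else max(scores.values())
    return [a for a, s in scores.items() if s == best]


alts = {"a1": {"c1": 2, "c2": 5}, "a2": {"c1": 5, "c2": 4}}
dms = [{"c1": 5, "c2": 4}, {"c1": 3, "c2": 5}]
print(average_consistency(alts["a1"], dms), average_consistency(alts["a2"], dms))  # -1.0 -0.5
print(pick(["a1", "a2"], alts, dms), pick(["a1", "a2"], alts, dms, reject=False))
```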

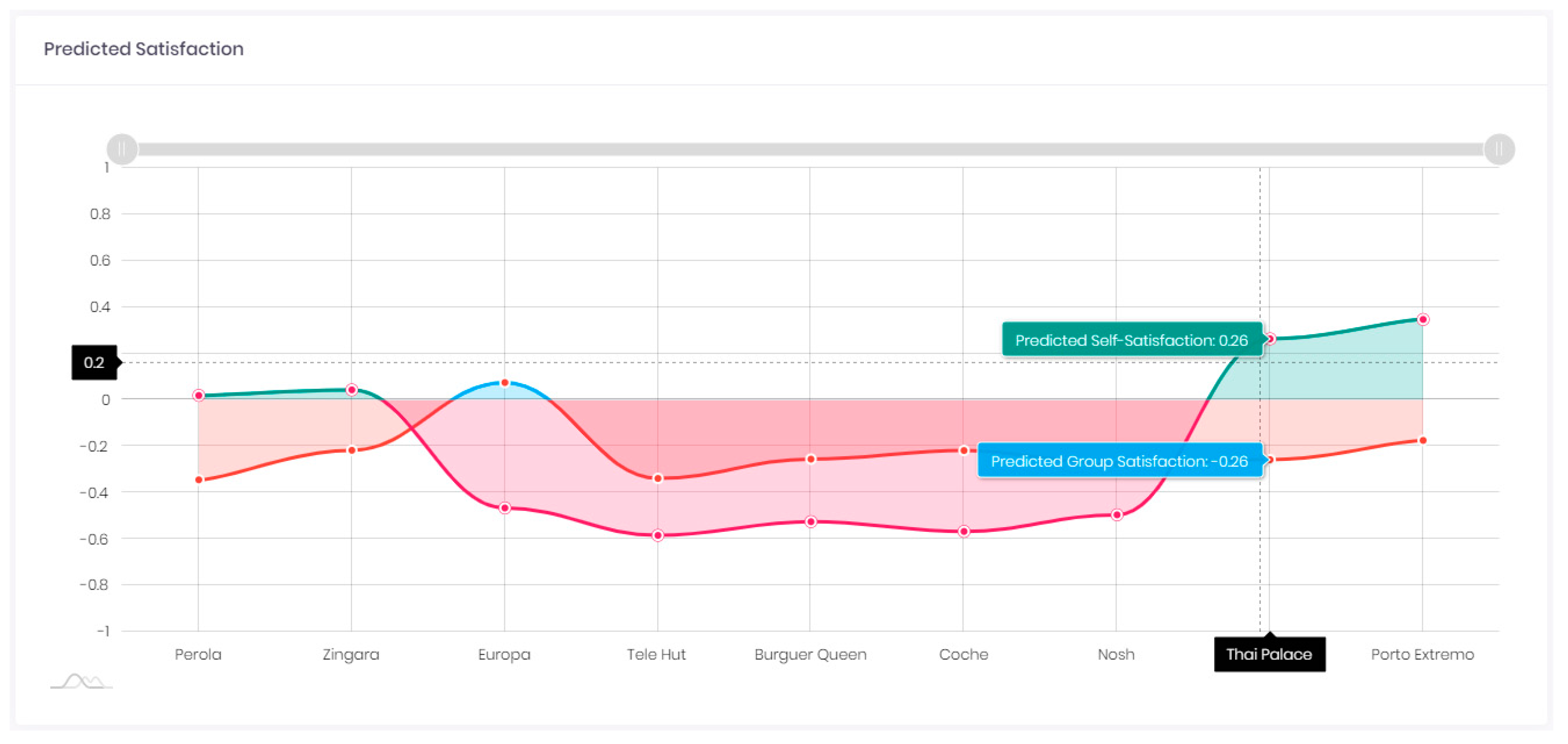

3.3.2. Recommending Based on the Prediction of Decision Satisfaction

So far, we have presented all the entities and definitions needed to implement an MCDA method capable of proposing solutions and recommending alternatives to reject. Fundamentally, the method performs these tasks by deducing the reasons behind its proposals/recommendations. However, it is also essential to predict how the decision quality is perceived by each decision-maker and by the group. For instance, decision-makers who find a certain solution unsatisfactory may be advised to review inconsistent assessments or to share with the group the reasons why certain criteria are more important to them. Discussing the importance of the criteria may lead decision-makers to consider other perspectives and redefine their preferences, promoting consensus. Next, we introduce a set of definitions intended to predict the decision quality through the analysis of the decision-makers’ satisfaction. As several studies show, the decision-makers’ satisfaction can be a strong indicator of decision quality [37,38]. Satisfaction analysis reveals the impact of a certain choice at a certain instant, according to the context of that instant. The context comprises not only the social context and the current dynamics but also the decision-makers’ sentiments regarding a possible solution, given the knowledge they have at that instant. Therefore, we adapted to this framework a simpler version of the model proposed in [38], which predicts the decision-makers’ satisfaction (their perception of the decision quality). The satisfaction scale used is presented in Table 9.

Definition 24. Let a function return the predicted satisfaction of a decision-maker regarding an alternative without including the decision-maker’s style of behavior. The computation uses the decision-maker’s most preferred alternative according to the initial weights, i.e., before the readjustment performed in Definition 12.

Definition 25. Let a function return the average satisfaction of the group of decision-makers.

Definition 26. Let a function return the predicted satisfaction of a decision-maker regarding an alternative, including the decision-maker’s Concern for Self and Concern for Others.

Definition 27. Let a function return the decision-makers’ predicted satisfaction regarding an alternative.