Agglomerative Clustering and Residual-VLAD Encoding for Human Action Recognition

Abstract

1. Introduction

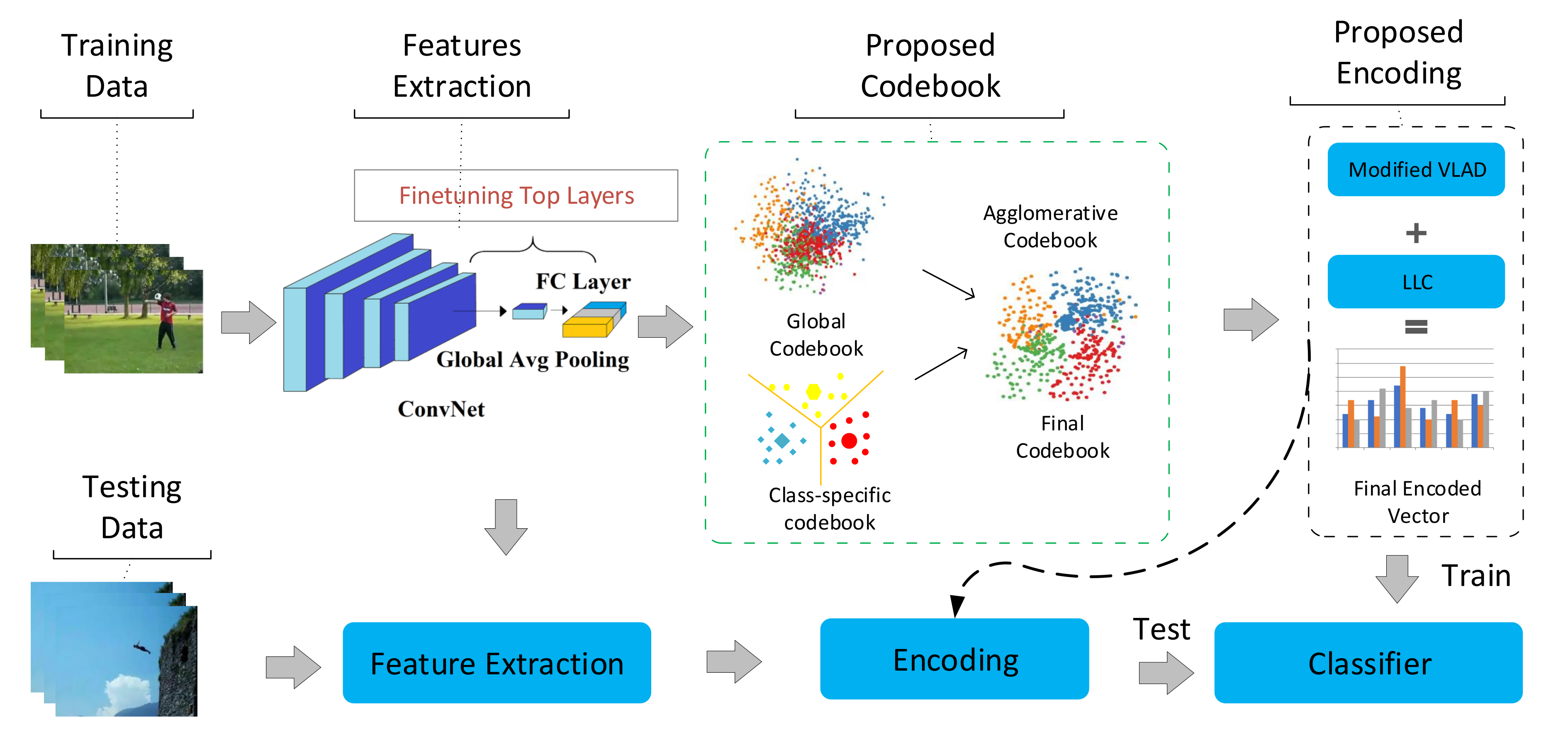

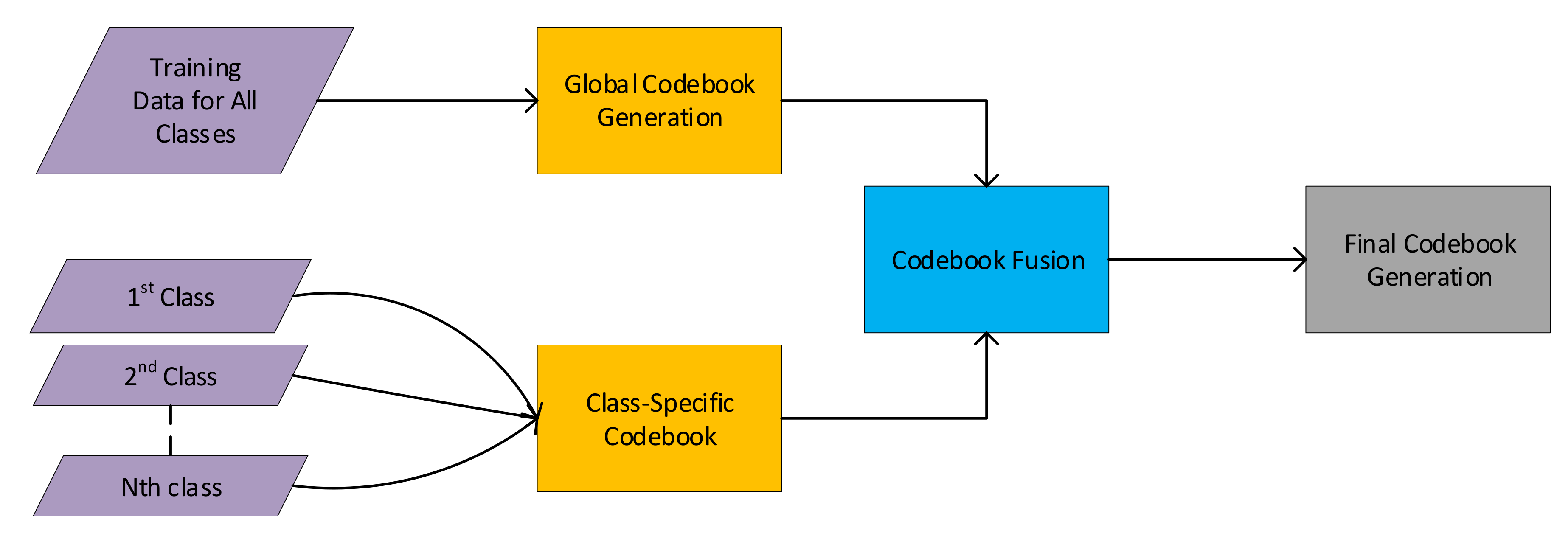

- First, we propose an agglomerative codebook that combines the benefits of a global codebook with those of class-specific codebooks. The resulting codebook provides discriminative codewords for the feature encoding step.

- Second, we propose a modified Vector of Locally Aggregated Descriptors (VLAD), termed Residual-VLAD (R-VLAD), which captures higher-order statistics by computing the difference vector between each feature descriptor and the mean of its nearest codeword. It requires fewer computational resources than VLAD because the encoded vector is smaller.

- Third, to further enhance the R-VLAD vector, we form a hybrid feature vector by fusing the locality-based descriptor with R-VLAD. The final encoded vectors are L2-normalized and serve as the input to the classifier.
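
For reference, the standard VLAD encoding of Jégou et al. [23], which R-VLAD modifies, can be written as below. The symbols $x_i$ (descriptor), $c_k$ (codeword), $\mathrm{NN}(\cdot)$ (nearest-codeword assignment), $K$ (codebook size), and $D$ (descriptor dimension) are notation assumed here for illustration. The specific R-VLAD modification is not reproduced.

$$
v_k = \sum_{i \,:\, \mathrm{NN}(x_i) = c_k} \left( x_i - c_k \right), \qquad k = 1, \dots, K
$$

$$
v = \left[ v_1^{\top}, \dots, v_K^{\top} \right]^{\top} \in \mathbb{R}^{KD}, \qquad \hat{v} = \frac{v}{\lVert v \rVert_2}
$$

Each $v_k$ accumulates the residuals of the descriptors assigned to codeword $c_k$, and the concatenated vector is L2-normalized before classification.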

2. Proposed Methodology

2.1. Deep Feature Extraction

2.1.1. Spatio-Temporal ResNet (ST-ResNet)

2.1.2. ResNeXt-101

2.2. Agglomerative Codebook Generation

| Algorithm 1: Agglomerative Clustering |

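
The body of Algorithm 1 is not available in this text, so the following is only a minimal sketch of one plausible reading of the contribution statement: a global codebook learned on all training descriptors is stacked with one codebook per action class. The function names `kmeans` and `build_combined_codebook`, the parameters `k_global` and `k_class`, the random initialisation, and the toy data are all assumptions made for illustration. Any merging or pruning step the actual algorithm may perform is omitted.

```python
import numpy as np


def kmeans(features, k, n_iter=20, seed=0):
    """Plain Lloyd k-means; returns a (k, d) array of centroids."""
    rng = np.random.default_rng(seed)
    # Initialise centroids with k distinct training descriptors.
    centroids = features[rng.choice(len(features), size=k, replace=False)].copy()
    for _ in range(n_iter):
        # Assign each descriptor to its nearest centroid (squared Euclidean distance).
        dists = ((features[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
        labels = dists.argmin(axis=1)
        for j in range(k):
            members = features[labels == j]
            if len(members) > 0:  # keep the old centroid if a cluster empties
                centroids[j] = members.mean(axis=0)
    return centroids


def build_combined_codebook(features, class_ids, k_global, k_class):
    """Stack one global codebook with one codebook per action class (assumed scheme)."""
    parts = [kmeans(features, k_global)]
    for cls in np.unique(class_ids):
        parts.append(kmeans(features[class_ids == cls], k_class))
    return np.vstack(parts)


# Toy data: 3 classes, 40 descriptors each, 8 dimensions.
rng = np.random.default_rng(1)
class_ids = np.repeat(np.arange(3), 40)
features = rng.normal(size=(120, 8)) + class_ids[:, None]
codebook = build_combined_codebook(features, class_ids, k_global=6, k_class=4)
```

With these toy settings the combined codebook holds 6 global plus 3 × 4 class-specific codewords. In practice the descriptors would come from the deep networks of Section 2.1 rather than from random data.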

2.3. Feature Encoding

2.3.1. Vector Quantization (VQ)

2.3.2. VLAD Super Vector

2.3.3. Locality Constrained Coding

2.3.4. Proposed Residual-VLAD (R-VLAD)

2.3.5. Proposed Hybrid Feature Vector

| Algorithm 2: Hybrid Feature Vector (HFV) Encoding |
|---|
| Input: Features of a video and agglomerative codebook C |
| Output: Encoded vector HFV |
| Step 1: Compute R-VLAD, where c represents the nearest codeword. |
| Step 2: Compute LLC. |
| Step 3: Fuse the R-VLAD and LLC descriptors. |
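
The three steps of Algorithm 2 can be sketched as follows, under several stated assumptions. The R-VLAD equation is not available in this text, so Step 1 uses plain VLAD-style residual sums as a stand-in and does not reproduce the reduced vector size claimed for R-VLAD. Step 2 uses the approximated k-nearest-codeword LLC solver with max pooling from Wang et al. [29]. Step 3 assumes that fusion means concatenation followed by a single L2 normalization. All function names, `knn`, `reg`, and the toy data are hypothetical.

```python
import numpy as np


def residual_aggregate(X, C):
    """Step 1 stand-in: sum (x - c) over descriptors whose nearest codeword is c."""
    d2 = ((X[:, None, :] - C[None, :, :]) ** 2).sum(axis=2)
    nearest = d2.argmin(axis=1)
    V = np.zeros_like(C, dtype=float)
    for k in range(len(C)):
        assigned = X[nearest == k]
        if len(assigned) > 0:
            V[k] = (assigned - C[k]).sum(axis=0)
    return V.ravel()


def llc_code(X, C, knn=5, reg=1e-4):
    """Step 2: approximated LLC codes, max-pooled over all descriptors."""
    d2 = ((X[:, None, :] - C[None, :, :]) ** 2).sum(axis=2)
    codes = np.zeros((len(X), len(C)))
    for i, x in enumerate(X):
        idx = np.argsort(d2[i])[:knn]        # knn nearest codewords
        Z = C[idx] - x                       # shift local bases to the origin
        G = Z @ Z.T                          # local covariance, knn x knn
        G += (reg * np.trace(G) + 1e-12) * np.eye(knn)  # regularise
        w = np.linalg.solve(G, np.ones(knn))
        codes[i, idx] = w / w.sum()          # enforce the sum-to-one constraint
    return codes.max(axis=0)


def hybrid_feature_vector(X, C, knn=5):
    """Step 3 (assumed): concatenate both descriptors, then L2-normalize."""
    fused = np.concatenate([residual_aggregate(X, C), llc_code(X, C, knn)])
    return fused / max(np.linalg.norm(fused), 1e-12)


# Toy example: 50 descriptors of dimension 8, codebook of 16 codewords.
rng = np.random.default_rng(0)
X = rng.normal(size=(50, 8))
C = rng.normal(size=(16, 8))
hfv = hybrid_feature_vector(X, C)
```

Here the residual part contributes 16 × 8 values and the LLC part 16 values, so the fused vector has 144 entries and unit L2 norm. Whether the two parts should be normalized separately before fusion is a design choice this sketch leaves open.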

3. Experimental Results and Discussion

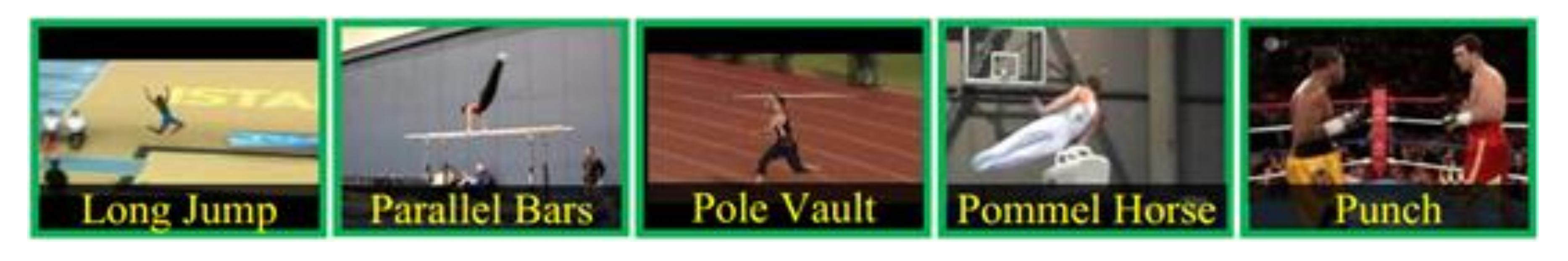

3.1. Datasets

3.2. Implementation Details

3.3. Performance Evaluation

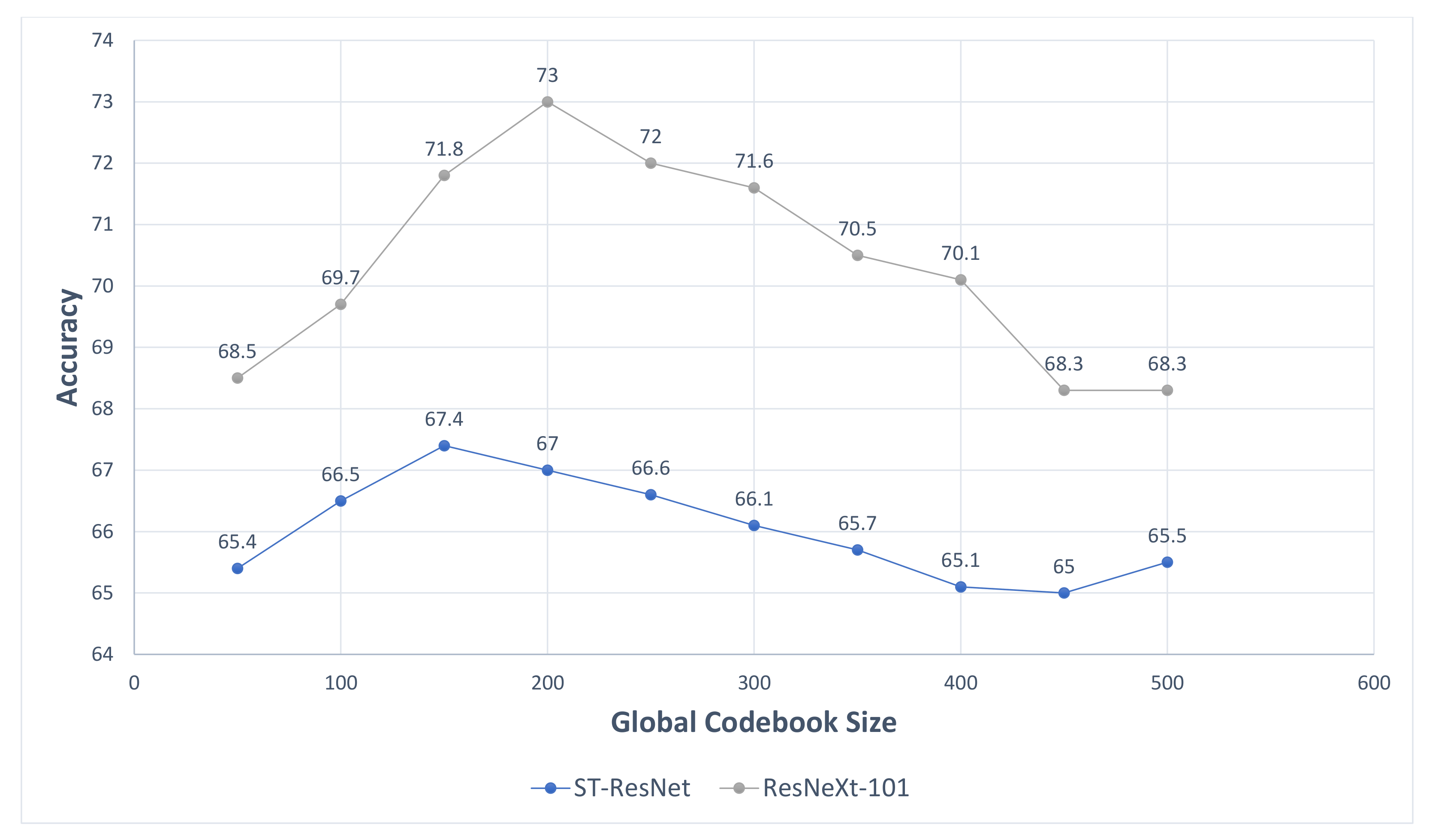

3.3.1. Codebook Size

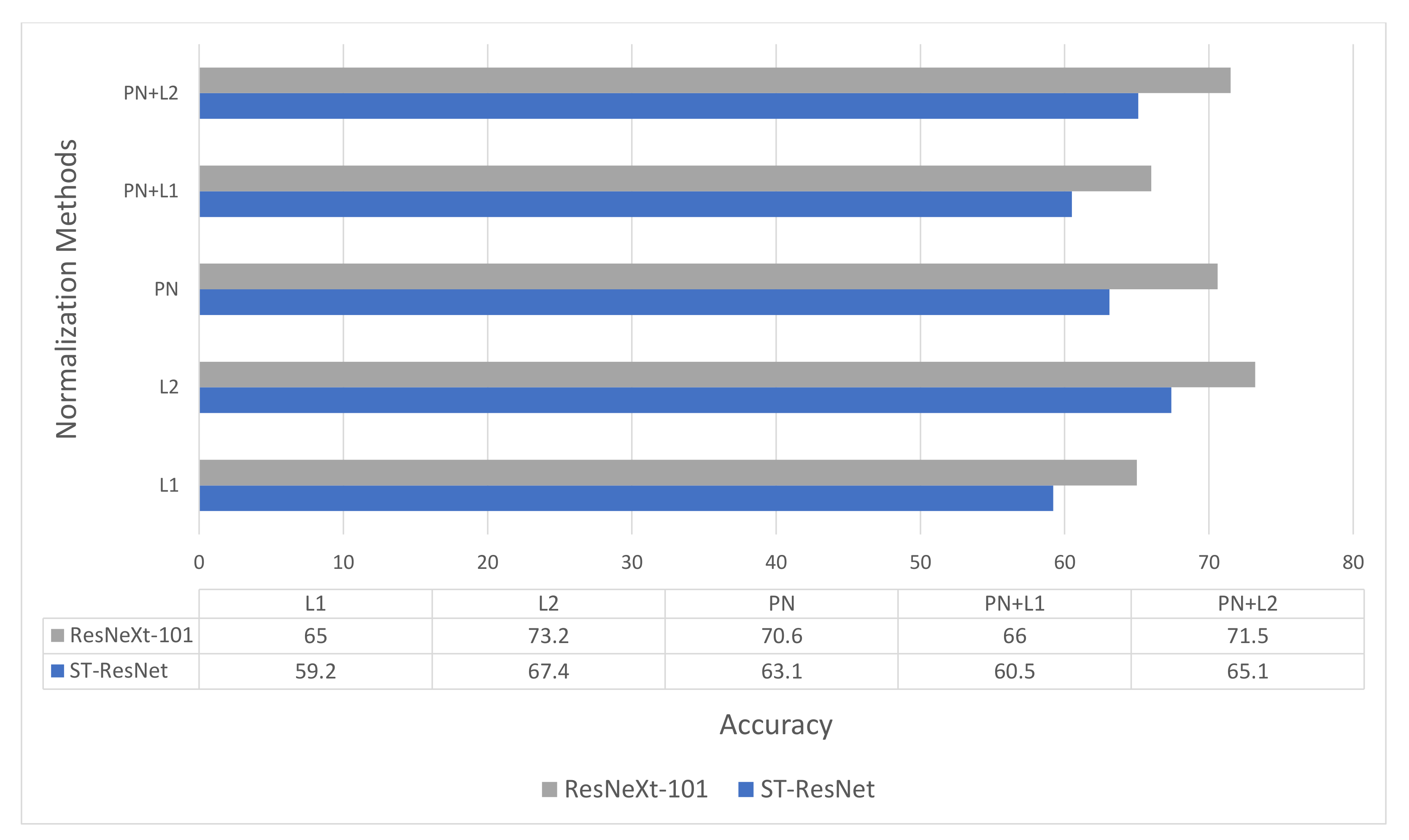

3.3.2. The Encoding Parameters and Normalization Scheme

3.4. Comparison with Other Encoding Schemes

3.5. Comparison with State-of-the-Art

4. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| BOW | Bag-of-Words |
| C3D | Convolutional 3D Network |
| ConvNet | Convolutional Neural Network |
| HFV | Hybrid Feature Vector |
| LLC | Locality-Constrained Linear Coding |
| MBH | Motion Boundary Histograms |
| R-VLAD | Residual-Vector of Locally Aggregated Descriptors |
| SCN | Spatial Convolutional Network |
| TCN | Temporal Convolutional Network |
| VQ | Vector Quantization |

References

1. Kong, Y.; Fu, Y. Human Action Recognition and Prediction: A Survey. arXiv 2018, arXiv:1806.11230.

2. Laptev, I.; Marszalek, M.; Schmid, C.; Rozenfeld, B. Learning realistic human actions from movies. In Proceedings of the 2008 IEEE Conference on Computer Vision and Pattern Recognition, Anchorage, AK, USA, 23–28 June 2008; pp. 1–8.

3. Dollár, P.; Rabaud, V.; Cottrell, G.; Belongie, S. Behavior recognition via sparse spatio-temporal features. In Proceedings of the 2005 IEEE International Workshop on Visual Surveillance and Performance Evaluation of Tracking and Surveillance, Beijing, China, 15–16 October 2005; pp. 65–72.

4. Dalal, N.; Triggs, B.; Schmid, C. Human detection using oriented histograms of flow and appearance. In Proceedings of the European Conference on Computer Vision, Graz, Austria, 7–13 May 2006; pp. 428–441.

5. Laptev, I. On space-time interest points. Int. J. Comput. Vis. 2005, 64, 107–123.

6. Scovanner, P.; Ali, S.; Shah, M. A 3-dimensional SIFT descriptor and its application to action recognition. In Proceedings of the 15th ACM International Conference on Multimedia, Bavaria, Germany, 24–29 September 2007; pp. 357–360.

7. Wang, H.; Ullah, M.M.; Klaser, A.; Laptev, I.; Schmid, C. Evaluation of local spatio-temporal features for action recognition. In Proceedings of the British Machine Vision Conference, London, UK, 7–10 September 2009.

8. Wang, H.; Schmid, C. Action recognition with improved trajectories. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, Australia, 3–6 December 2013; pp. 3551–3558.

9. Raptis, M.; Soatto, S. Tracklet descriptors for action modeling and video analysis. In Proceedings of the European Conference on Computer Vision, Crete, Greece, 5–11 September 2010; pp. 577–590.

10. Karpathy, A.; Toderici, G.; Shetty, S.; Leung, T.; Sukthankar, R.; Li, F.F. Large-scale video classification with convolutional neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 1725–1732.

11. Varol, G.; Laptev, I.; Schmid, C. Long-term temporal convolutions for action recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 1510–1517.

12. Donahue, J.; Anne Hendricks, L.; Guadarrama, S.; Rohrbach, M.; Venugopalan, S.; Saenko, K.; Darrell, T. Long-term recurrent convolutional networks for visual recognition and description. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 2625–2634.

13. Simonyan, K.; Zisserman, A. Two-stream convolutional networks for action recognition in videos. Adv. Neural Inf. Process. Syst. 2014, 27, 568–576.

14. Ji, S.; Xu, W.; Yang, M.; Yu, K. 3D convolutional neural networks for human action recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 35, 221–231.

15. Tran, D.; Bourdev, L.; Fergus, R.; Torresani, L.; Paluri, M. Learning spatiotemporal features with 3D convolutional networks. In Proceedings of the IEEE International Conference on Computer Vision, Las Condes, Chile, 11–18 December 2015; pp. 4489–4497.

16. Choutas, V.; Weinzaepfel, P.; Revaud, J.; Schmid, C. PoTion: Pose motion representation for action recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7024–7033.

17. Karaman, S.; Seidenari, L.; Bagdanov, A.; Del Bimbo, A. L1-regularized logistic regression stacking and transductive CRF smoothing for action recognition in video. In Proceedings of the ICCV Workshop on Action Recognition With a Large Number of Classes, Sydney, Australia, 7 December 2013; Volume 13, pp. 14–20.

18. Peng, X.; Wang, L.; Cai, Z.; Qiao, Y.; Peng, Q. Hybrid super vector with improved dense trajectories for action recognition. ICCV Workshops 2013, 13, 109–125.

19. Uijlings, J.R.; Duta, I.C.; Rostamzadeh, N.; Sebe, N. Realtime video classification using dense HOF/HOG. In Proceedings of the International Conference on Multimedia Retrieval, Glasgow, Scotland, 1–4 April 2014; pp. 145–152.

20. Bishop, C.M. Pattern Recognition and Machine Learning; Springer: Berlin, Germany, 2006.

21. Zhou, X.; Yu, K.; Zhang, T.; Huang, T.S. Image classification using super-vector coding of local image descriptors. In Proceedings of the European Conference on Computer Vision, Crete, Greece, 5–11 September 2010; pp. 141–154.

22. Huang, Y.; Huang, K.; Yu, Y.; Tan, T. Salient coding for image classification. CVPR 2011, 1753–1760.

23. Jégou, H.; Douze, M.; Schmid, C.; Pérez, P. Aggregating local descriptors into a compact image representation. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; pp. 3304–3311.

24. Feichtenhofer, C.; Pinz, A.; Wildes, R.P. Spatiotemporal multiplier networks for video action recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4768–4777.

25. Hara, K.; Kataoka, H.; Satoh, Y. Can spatiotemporal 3D CNNs retrace the history of 2D CNNs and ImageNet? In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6546–6555.

26. Xie, S.; Girshick, R.; Dollár, P.; Tu, Z.; He, K. Aggregated residual transformations for deep neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1492–1500.

27. Arthur, D.; Vassilvitskii, S. k-means++: The advantages of careful seeding. In Proceedings of the Eighteenth Annual ACM-SIAM Symposium on Discrete Algorithms. Society for Industrial and Applied Mathematics, New Orleans, LA, USA, 7–9 January 2007; pp. 1027–1035.

28. Lloyd, S. Least squares quantization in PCM. IEEE Trans. Inf. Theory 1982, 28, 129–137.

29. Wang, J.; Yang, J.; Yu, K.; Lv, F.; Huang, T.; Gong, Y. Locality-constrained linear coding for image classification. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; pp. 3360–3367.

30. Kuehne, H.; Jhuang, H.; Garrote, E.; Poggio, T.; Serre, T. HMDB: A large video database for human motion recognition. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 7 November 2011; pp. 2556–2563.

31. Soomro, K.; Zamir, A.R.; Shah, M. UCF101: A dataset of 101 human actions classes from videos in the wild. arXiv 2012, arXiv:1212.0402.

32. Smeulders, A.; Gemert, J.; Veenman, C.; Geusebroek, J. Visual word ambiguity. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 1271–1283.

33. Perronnin, F.; Sánchez, J.; Mensink, T. Improving the Fisher kernel for large-scale image classification. In Proceedings of the European Conference on Computer Vision, Crete, Greece, 5–11 September 2010; pp. 143–156.

34. Duta, I.C.; Ionescu, B.; Aizawa, K.; Sebe, N. Spatio-temporal vector of locally max pooled features for action recognition in videos. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 3205–3214.

35. Duta, I.C.; Ionescu, B.; Aizawa, K.; Sebe, N. Spatio-temporal VLAD encoding for human action recognition in videos. In Proceedings of the International Conference on Multimedia Modeling, Reykjavik, Iceland, 4–6 January 2017; Volume 64, pp. 365–378.

36. Girdhar, R.; Ramanan, D.; Gupta, A.; Sivic, J.; Russell, B. ActionVLAD: Learning spatio-temporal aggregation for action classification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 971–980.

37. Tran, D.; Ray, J.; Shou, Z.; Chang, S.; Paluri, M. ConvNet architecture search for spatiotemporal feature learning. arXiv 2017, arXiv:1708.05038.

38. Sun, L.; Jia, K.; Yeung, D.; Shi, B.E. Human action recognition using factorized spatio-temporal convolutional networks. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 4597–4605.

39. Qiu, Z.; Yao, T.; Mei, T. Learning spatio-temporal representation with pseudo-3D residual networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 5533–5541.

40. Kar, A.; Rai, N.; Sikka, K.; Sharma, G. AdaScan: Adaptive scan pooling in deep convolutional neural networks for human action recognition in videos. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 3376–3385.

41. Wang, L.; Xiong, Y.; Wang, Z.; Qiao, Y.; Lin, D.; Tang, X.; Van Gool, L. Temporal segment networks: Towards good practices for deep action recognition. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; pp. 20–36.

42. Wang, L.; Koniusz, P.; Huynh, D.Q. Hallucinating iDT descriptors and I3D optical flow features for action recognition with CNNs. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 8698–8708.

| Encoding Scheme | UCF101, ST-ResNet | UCF101, ResNeXt-101 | HMDB51, ST-ResNet | HMDB51, ResNeXt-101 |
|---|---|---|---|---|
| VQ | 90.3 | 92.5 | 59.6 | 65.2 |
| SA [32] | 90.2 | 91.3 | 62.4 | 67.4 |
| VLAD [23] | 92.9 | 93.8 | 64.5 | 68.1 |
| Fisher Vector [33] | 91.0 | 90.1 | 64.2 | 64.3 |
| Proposed R-VLAD | 93.7 | 94.0 | 65.0 | 69.8 |
| Proposed HFV | 94.3 | 96.2 | 67.2 | 72.6 |

| Features | Method | UCF101 | HMDB51 |
|---|---|---|---|
| Two-Stream CNN | iDT + ST-VLAD [35] | 91.5 | 67.6 |
| | LTC [11] | 92.7 | 67.2 |
| | iDT + ActionVLAD [36] | 93.6 | 69.8 |
| | iDT + VLMPF [34] | 94.3 | 73.1 |
| | Two-Stream [10] | 88.0 | 59.4 |
| | ST-ResNet [24] | 93.4 | 66.4 |
| | Proposed HFV-ST-ResNet | 94.3 | 67.2 |
| Single-Stream 3D CNN | C3D [15] | 82.3 | 51.6 |
| | Res3D [37] | 85.8 | 54.9 |
| | FstCN [38] | 88.1 | 59.1 |
| | P3D [39] | 88.6 | - |
| | iDT + C3D AdaScan [40] | 93.2 | 66.9 |
| | TSN [41] | 94.2 | 69.4 |
| | ResNeXt-101 [25] | 94.5 | 70.2 |
| | Proposed HFV-ResNeXt-101 | 96.2 | 72.6 |
| Two-Stream 3D CNN | I3D + PoTion [16] | 98.2 | 80.9 |
| | I3D + IDT Hallucination [42] | - | 82.4 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Butt, A.M.; Yousaf, M.H.; Murtaza, F.; Nazir, S.; Viriri, S.; Velastin, S.A. Agglomerative Clustering and Residual-VLAD Encoding for Human Action Recognition. Appl. Sci. 2020, 10, 4412. https://doi.org/10.3390/app10124412
