1. Introduction

The color of an object determined by a machine visual system (MVS) such as a digital camera is based on the amount of light reflected from it. In contrast, the human visual system (HVS) determines the color of an object by taking into account the details of the surrounding environment and changes in overall illumination [1]. The HVS automatically compensates for changes in illumination and readily recognizes the original color of the object. This feature of the HVS is called color constancy and has been studied for a long time [2,3]. Owing to the inconsistency between the HVS and the MVS under various illumination conditions, a machine cannot obtain the same image as a human. These inconsistencies also cause algorithmic errors in functions such as color separation, pattern recognition and object tracking. Therefore, to improve the performance of an MVS, it is important to understand the color constancy of the HVS.

The Retinex concept by Land and McCann [4,5,6,7] combines the functions of the retina and the cortex, and explains how the HVS perceives color. If S is defined as the value of an image in the spatial domain, the image value in the Retinex model mainly depends on two factors: the amount of illumination projected onto the object in the image and the amount of illumination reflected by the object in the image. Based on these two components, S can be constructed with the reflectance function R and illumination function L [8] as follows:

$$S = R \cdot L,$$

where $0 < R < 1$ (reflectivity) and $0 < L < \infty$ (illumination effect).

The HVS can recognize both the illumination component and the reflectance component, and is able to discount the illumination component. This is called color constancy. Therefore, the HVS can recognize the constant color of an object while ignoring the changing illumination. In low-light image enhancement, removing the illumination component from the image allows dark areas to show the original color of the object, which can increase the success rate of other algorithms on the machine. In addition, because edges and texture can be improved, reconstructing the reflectance component can have an effect similar to super resolution.
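To make the multiplicative model described above concrete, the following minimal sketch builds a small synthetic image from a reflectance and an illumination and shows that the product becomes a sum in the log domain. The array values, the variable names, and the sign convention of the logarithms (`r = log R`, `l = log L`) are assumptions chosen for illustration, not taken from the paper.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical 4x4 scene: reflectance in (0, 1), smooth positive illumination.
R = rng.uniform(0.1, 0.9, size=(4, 4))
L = np.linspace(20.0, 200.0, 16).reshape(4, 4)

# Observed image under the multiplicative Retinex model.
S = R * L

# In the log domain the product becomes a sum: s = r + l (assumed convention).
s, r, l = np.log(S), np.log(R), np.log(L)

# If the illumination were known exactly, removing it recovers the reflectance.
R_recovered = np.exp(s - l)
```

In practice the illumination is unknown, which is why the decomposition has to be posed as an optimization problem with priors on both components.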

Motivated by the color perception characteristics of the HVS, Retinex theory is used to enhance high-contrast images, allowing more detail and color to be observed in low-light areas [8,9,10,11,12,13]. In addition, Retinex theory can be used effectively for shadow removal [14]. A representative Retinex method is the model that applies regularization to the total variation of the reflectance function [8,15]. Recently, a Retinex model [16] has been proposed for effective image enhancement using the sparsity of reflectance in the gradient domain and the sparsity of illumination in the frequency domain. The Retinex model in [17] performs image enhancement using sparse coding, a method that represents an image over a basis given by a dictionary.

However, such conventional Retinex methods have a problem: when the illumination changes rapidly, details in complex areas of the image are blurred or not properly reproduced, or illumination and reflectance cannot be accurately decomposed. In most Retinex models, illumination is assumed to be smooth and reflectance to carry the detailed structure. Therefore, if the reflectance function can be generated more accurately, the details of complex areas can be well preserved even when the illumination changes rapidly, and thus the illumination and reflectance of the image can be accurately separated. The sparse source separation Retinex model [16], which considers sparsity, assumes that the reflectance component is sparse in the frequency domain. An image with a complex region (high rank) has a large number of detailed components. In such a case, the decomposition of reflectance is not properly performed, and an error in the reflectance function affects the illumination function, which results in the improper decomposition of reflectance and illumination. The Retinex model [17] using sparse coding has the advantage that the reflectance component can be expressed by the dictionary in greater detail. Sparse coding represents each local patch as a linear combination of dictionary atoms weighted by sparse coefficients, so the dictionary is a local patch dictionary. A drawback of dictionary-based sparse coding approaches is that important spatial structures of the signal of interest may be lost because of its subdivision into mutually independent patches. Further, the patches (atoms) of dictionaries learned using this approach are often redundant and contain shifted versions of the same features. As a result, a reflectance dictionary must be constructed for each image and cannot serve as a robust general dictionary. A new method that accurately generates the components of the reflectance function is therefore needed to overcome these limitations.

In this paper, we propose an image enhancement method based on Retinex theory using convolutional sparse coding (CSC). Our approach builds on recent advances in CSC and reconstruction techniques. We show that the CSC reconstruction technique yields higher-quality results for high-contrast and complex images than the existing patch-based sparse reconstruction techniques. In addition, we observe that CSC provides a general dictionary that is particularly well suited to the different types of high-contrast and complex signals present in the reflectance function. The advantage of a general dictionary is that when an arbitrary image is input, the reconstruction can be performed immediately with the learned dictionary without learning a new one. We use singular value decomposition (SVD) in CSC to construct a more compact dictionary in limited memory. In addition, because the dictionary acts as a reflectance basis for general images, it retains only the information that fits this basis. Based on this fact, we can improve low-light images through our proposed method. Additionally, the halo artifact that commonly occurs in Retinex-based reconstruction can be reduced by our proposed method. These points are detailed in Section 4. Therefore, we pose the Retinex image enhancement problem as a CSC problem and derive the necessary formulations to solve it. We make the following contributions:

We show that the reflectance function of the Retinex model can be learned through a CSC dictionary, leading to efficient image enhancement through the expression of various nonlinear image shapes.

We propose that the reflectance function can be reconstructed using a general dictionary trained with CSC on a large dataset.

We use SVD in CSC to construct a more compact dictionary in limited memory.

The remainder of this paper is organized as follows. Section 2 briefly introduces the traditional Retinex model, sparse coding for the Retinex model, and basic CSC. Section 3 discusses our CSC Retinex algorithm, focusing on the proposed objective function and the general reflectance function dictionary learned with CSC. The experimental results are presented and analyzed in Section 4. Finally, Section 5 summarizes this paper.

3. Proposed Method

In this section, we describe the proposed CSC Retinex algorithm, focusing on the proposed objective function and the general reflectance function dictionary learned with CSC. First, a new reflectance function using CSC is described. Then, we develop an objective function which combines illumination and reflectance functions. Next, we introduce a method to minimize the objective function. Finally, the architecture of the Retinex model is depicted.

3.1. Proposed Reflectance Function

The reflectance function of the proposed Retinex model aims to find the best basis that preserves detailed structures and features. Therefore, the proposed reflectance function should generate the most appropriate basis dictionary from the example image, with negligible redundancy among the dictionary patches. As mentioned in Section 2.3, CSC is based on an image decomposition into spatially-invariant convolutional features. Compared to the atoms of a patch-based dictionary, the learned filters of our CSC scheme (Figure 1c) show a much richer variance (e.g., they span a larger range of orientations), which leads to better reconstructions.

The components of the reflectance function of the proposed Retinex model are as follows.

In this paper, CSC is used as given in Equation (8) to construct the reflectance function. We therefore learn dictionaries and coefficient maps through CSC, and the reflectance is generated through the convolution of the dictionary and the coefficient map. When a component of the reflectance function is generated through convolution, various nonlinear shapes of an image can be expressed, including both local and global features. For this reason, the reflectance can be expressed even in areas more complex than the conventional sparse coding method can handle, so that the illumination can be accurately decomposed in the objective function. Learning in CSC takes longer than in the previous method, but once the dictionary has been learned and stored, the reflectance function component can be generated quickly in the test stage.
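The statement that the reflectance is generated through the convolution of the dictionary and the coefficient map can be illustrated with a small synthesis sketch. It assumes circular (periodic) boundary conditions and evaluates the sum of convolutions with the FFT; the function name `csc_synthesize` and the toy filter are hypothetical and serve only to show the structure of the model, not the trained dictionary.

```python
import numpy as np

def csc_synthesize(filters, coeff_maps):
    """Return sum_k d_k * z_k using circular 2-D convolution via the FFT.

    filters:    array of shape (K, fh, fw), the dictionary filters d_k
    coeff_maps: array of shape (K, H, W), the sparse coefficient maps z_k
    """
    _, H, W = coeff_maps.shape
    out = np.zeros((H, W))
    for d, z in zip(filters, coeff_maps):
        D = np.fft.fft2(d, s=(H, W))  # zero-pad the filter to the image size
        Z = np.fft.fft2(z)
        out += np.real(np.fft.ifft2(D * Z))
    return out

# One 2x2 filter and two nonzero coefficients: the same filter is placed at
# two locations, which a patch dictionary would need two shifted atoms for.
filters = np.array([[[1.0, 2.0], [3.0, 4.0]]])
coeffs = np.zeros((1, 6, 6))
coeffs[0, 2, 3] = 1.0
coeffs[0, 0, 0] = 0.5
recon = csc_synthesize(filters, coeffs)
```

Because a single filter can be reused at every position through its coefficient map, the dictionary does not need to store shifted copies of the same feature.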

We can reconstruct the reflectance component by using CSC to learn a general dictionary from a large dataset. In sparse coding, each image patch is sparsely expressed as a linear combination of atoms from a specially selected dictionary. However, the number of cases that such a linear combination can express is limited, and since the dictionary patches of sparse coding are highly redundant, this number is reduced further. In CSC, each image patch can be sparsely expressed using a convolution of atoms from a specially selected dictionary. Convolution can express more cases than a linear combination; moreover, the redundancy of the CSC dictionary is lower than that of sparse coding, which allows even more cases to be expressed (Figure 1). Therefore, if we generate a general dictionary sufficiently learned from a large dataset through CSC, an appropriate reflectance component can be reconstructed even for an untrained test image. This is advantageous compared to the SCR model, which has to generate a dictionary for each image. To do this, we construct a basis dictionary using SVD.

3.2. Proposed Objective Function

In the proposed method, CSC is applied to the Retinex model to effectively decompose the image’s illumination and reflectance. The main idea of the CSC Retinex model is to search the appropriate basis in advance for the reflectance function, and then decompose the illumination by identifying more detailed structures or features in the reflectance function. Therefore, the key step is to construct a dictionary for expressing the reflectance component of the input image.

Our model is based on the following assumptions:

In general, since the illumination function is spatially smooth, it can be expressed as the regularization term.

The reflectance function is generated by CSC, as described in Section 3.1.

Based on the reflectivity, the constraints and are added.

We consider the following energy function for Retinex to simulate and explain how the HVS perceives color:

here,

,

and

are positive numbers for regularization parameters.

In our proposed model, the reflectance can be better represented by a trained dictionary than in the SCR model, and the first and second terms in Equation (9) can be interpreted as the regularization terms for the reflectance r. In addition, by applying an iterative algorithm in our proposed model, we construct a dictionary that can derive the optimal reflectance each time the algorithm is repeated. Therefore, an alternating minimization method [8] is used to solve Equation (9), as described in Section 3.3.

3.3. Proposed Retinex Model

As mentioned in Section 3.2, an alternating minimization method [8] is used to solve Equation (9). The basic pseudo-code of the proposed CSC Retinex model is given in Algorithm 1.

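| Algorithm 1: Basic Pseudo-Code for Proposed CSC Retinex Model. |

- 1: Initialize the parameters, and let $l^{0}$ be the initial illumination function
- 2: At the $k$th iteration:
- 3: Given $l^{k}$, compute $r^{k+1}$ by solving the reflectance sub-problem (Section 3.3.1)
- 4: And update $r^{k+1}$ by using the reflectance constraint
- 5: Given $r^{k+1}$, compute $l^{k+1}$ by solving the illumination sub-problem (Section 3.3.2)
- 6: And update $l^{k+1}$ by using the illumination constraint
- 7: Go back to step 2 until the stopping criteria for $r$ and $l$ are both satisfied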
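The control flow of Algorithm 1 can be sketched as a generic alternating minimization loop. This is only a skeleton under stated assumptions: the sub-problem solvers are passed in as functions, the initial illumination is taken as `l = s`, the stopping test uses the relative change of each variable, and the demonstration uses a toy quadratic energy with the log-domain constraints `r <= 0` and `l >= s`. None of these choices is confirmed by the text, and the toy energy is not Equation (9).

```python
import numpy as np

def alternating_minimization(s, solve_r, solve_l, update_r, update_l,
                             tol=1e-4, max_iter=100):
    """Alternate between the reflectance and illumination sub-problems."""
    l = s.copy()                # assumed initial illumination l^0 = s
    r = np.zeros_like(s)
    for k in range(max_iter):
        r_new = update_r(solve_r(l, s))          # steps 3 and 4
        l_new = update_l(solve_l(r_new, s), s)   # steps 5 and 6
        # Relative change of each variable, used as the stopping test (step 7).
        dr = np.linalg.norm(r_new - r) / max(np.linalg.norm(r_new), 1e-12)
        dl = np.linalg.norm(l_new - l) / max(np.linalg.norm(l_new), 1e-12)
        r, l = r_new, l_new
        if dr <= tol and dl <= tol:
            break
    return r, l, k + 1

# Toy quadratic energy (NOT Equation (9)):
#   E(r, l) = 0.5*||r + l - s||^2 + 0.5*a*||r||^2 + 0.5*b*||l - m||^2
a, b = 1.0, 1.0
rng = np.random.default_rng(0)
s = rng.uniform(1.0, 2.0, size=(8, 8))
m = s + 1.0                                      # hypothetical illumination prior

r, l, n_iter = alternating_minimization(
    s,
    solve_r=lambda l, s: (s - l) / (1.0 + a),    # closed-form minimizer in r
    solve_l=lambda r, s: (s - r + b * m) / (1.0 + b),  # closed-form minimizer in l
    update_r=lambda r: np.minimum(r, 0.0),       # assumed constraint r <= 0
    update_l=lambda l, s: np.maximum(l, s),      # assumed constraint l >= s
)
```

Passing the solvers as arguments keeps the loop independent of how each sub-problem is actually solved, so a CSC-based reflectance step and an FFT-based illumination step could be substituted without changing the iteration logic.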

3.3.1. Reflectance Function Sub-Problem

From line 3 of Algorithm 1, the reflectance sub-problem can be written as

here, the formula for finding the dictionary

and coefficient map

through CSC Equation (

7) is as follows.

where,

. Equation (11) can be solved using the consensus alternating direction method of multipliers (ADMM) [21,22].

and

can be expressed in a small N blocks matrix

,

. Here,

represents the

i th data block along with its respective filters

. The filter

d sub-problem through ADMM is as follows [

21].

The calculation in Algorithm 2 follows the ADMM method for CSC [21]; however, we propose a new method to solve the least-squares problem in line 3. The least-squares solution in line 3 is as follows.

where † denotes the conjugate transpose, and

denotes the identity matrix. Let

. Then, we can solve the least-squares problem in line 3 through SVD. The diagonal entries

of

are called the singular values of

. The columns of

are called left singular vectors. Moreover, the columns of

are called right singular vectors. And

h is the rank of

. Then,

can be calculated as

. We can use SVD to select only the important parts of large datasets and update the dictionary through it. Since we have limited memory (dictionary filter size and number), it is particularly important to construct a general dictionary for selecting and compressing only important information from large datasets.
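The idea of solving a least-squares problem through the SVD, keeping only the leading singular components, can be sketched as follows. This is a generic illustration for a plain problem `min_x ||A x - b||`, not the exact regularized system of the filter update; the function name `lstsq_svd` and the `rank` argument (playing the role of the rank h) are assumptions made for the example.

```python
import numpy as np

def lstsq_svd(A, b, rank=None, rcond=1e-10):
    """Least-squares solution of A x = b from the (optionally truncated) SVD."""
    U, sigma, Vh = np.linalg.svd(A, full_matrices=False)
    if rank is None:
        # Numerical rank: number of singular values above a relative threshold.
        rank = int(np.sum(sigma > rcond * sigma[0]))
    U_h, s_h, Vh_h = U[:, :rank], sigma[:rank], Vh[:rank, :]
    # x = V_h diag(1/sigma) U_h^H b, i.e. the pseudo-inverse applied to b.
    return Vh_h.conj().T @ ((U_h.conj().T @ b) / s_h)

rng = np.random.default_rng(1)
A = rng.standard_normal((8, 3))
b = rng.standard_normal(8)

x_full = lstsq_svd(A, b)             # uses all singular components
x_rank1 = lstsq_svd(A, b, rank=1)    # keeps only the dominant component
x_ref = np.linalg.lstsq(A, b, rcond=None)[0]
```

With the full rank the result agrees with a standard least-squares solver, while a smaller `rank` keeps only the dominant directions, which is the sense in which the SVD selects the most important information.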

SVD is known to be a robust and reliable method for solving the least-squares problem, although it is computationally expensive. Nevertheless, we use SVD to obtain the most general basis dictionary, which means that the dictionary constituting the reflectance is built on a basis in the same way as in SVD. Therefore, the best reflectance component

can be obtained from the dictionary and coefficient map in CSC.

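| Algorithm 2: CSC Reflectance function ADMM for the Filters d. |

- 1: while Not Converged do
- 2: for $i = 1$ to N do
- 3: solve the least-squares problem for the filters $d_i$ of the $i$th block
- 4: end for
- 5: update the consensus filters
- 6: for $i = 1$ to N do
- 7: update the dual variable of the $i$th block
- 8: end for
- 9: end while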

3.3.2. Illumination Function Sub-Problem

From line 5 of Algorithm 1, the illumination sub-problem can be written as

Since Equation (13) is an $\ell_2$-norm problem, it can be solved by differentiating with respect to l over the whole image domain and setting the derivative to zero. The resulting linear system can then be solved efficiently through a fast Fourier transform (FFT) [8,17].
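The following sketch shows how a quadratic smoothing problem of this kind can be solved in closed form with the FFT. It assumes a generic energy `0.5*alpha*||grad l||^2 + 0.5*beta*||l - t||^2` with periodic boundary conditions, where `t` stands for the current data term; the exact form and weights of Equation (13) are not restated here, so `alpha`, `beta`, and `t` are placeholders.

```python
import numpy as np

def solve_smooth_illumination(t, alpha, beta):
    """Minimize 0.5*alpha*||grad l||^2 + 0.5*beta*||l - t||^2 (periodic BCs).

    Setting the derivative to zero gives (beta*I + alpha*G^T G) l = beta*t,
    which is diagonal in the Fourier domain.
    """
    H, W = t.shape
    wy = 2.0 * np.pi * np.fft.fftfreq(H)
    wx = 2.0 * np.pi * np.fft.fftfreq(W)
    # Eigenvalues of G^T G for first-order finite differences.
    lap = (2.0 - 2.0 * np.cos(wy))[:, None] + (2.0 - 2.0 * np.cos(wx))[None, :]
    l_hat = beta * np.fft.fft2(t) / (beta + alpha * lap)
    return np.real(np.fft.ifft2(l_hat))

rng = np.random.default_rng(2)
t = rng.uniform(size=(16, 16))
alpha, beta = 5.0, 1.0
l_smooth = solve_smooth_illumination(t, alpha, beta)
```

The solve costs two FFTs and a pointwise division, which is why this sub-problem is cheap compared with the dictionary-based reflectance step.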

4. Experimental Results

In this section, we present numerical results to illustrate the effectiveness of the proposed model and algorithm. In addition, we verify the algorithm through a comparative analysis of our proposed method and the SCR method. Both methods are applied to color images through the HSV (Hue, Saturation and Value) Retinex model. HSV Retinex reduces color changes by applying the Retinex algorithm only to the value channel of the HSV color space and then converting the result back to the RGB domain.
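The HSV Retinex pipeline described above (process only the value channel, then convert back to RGB) can be sketched with the Python standard library. The function `enhance_value_channel` and its `enhance` callback are hypothetical names; a square root is used as a stand-in for the actual Retinex enhancement, and pixel values are assumed to lie in [0, 1].

```python
import colorsys
import numpy as np

def enhance_value_channel(rgb, enhance):
    """Apply `enhance` to the V channel only; hue and saturation are kept."""
    rgb = np.asarray(rgb, dtype=float)
    flat = rgb.reshape(-1, 3)
    hsv = np.array([colorsys.rgb_to_hsv(*px) for px in flat])
    v = hsv[:, 2].reshape(rgb.shape[:2])
    # Keep the enhanced value channel inside the valid range.
    hsv[:, 2] = np.clip(enhance(v), 0.0, 1.0).ravel()
    out = np.array([colorsys.hsv_to_rgb(*px) for px in hsv])
    return out.reshape(rgb.shape)

rng = np.random.default_rng(3)
rgb = rng.uniform(0.05, 0.95, size=(4, 5, 3))

unchanged = enhance_value_channel(rgb, lambda v: v)   # identity on V
brighter = enhance_value_channel(rgb, np.sqrt)        # stand-in enhancement
```

The per-pixel loop is slow and only meant to make the channel handling explicit; a vectorized color conversion would be used for real images.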

We note that the reflectance image obtained from Retinex is usually an over-enhanced image. Therefore, we add a Gamma correction operation after the decomposition. Suppose $L$ is the illumination function obtained from Algorithm 1, and $S$ is the initial image; then the reflectance function will be given by $R = S / L$. The Gamma correction of $L$ with an adjusting parameter $\gamma$ is defined as $L' = W (L / W)^{1/\gamma}$. In this experiment, we set $\gamma$ to a commonly used value. $W$ is the white value (it is equal to 255 in an 8-bit image, and also equal to 255 in the value channel of an HSV image), and the final result is given as $S' = R \cdot L'$.
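The Gamma correction step can be sketched as follows, assuming the common form in which the illumination is normalized by the white value W, raised to the power 1/gamma, and recombined with the reflectance. The default `gamma=2.2` is a widely used value chosen here as an assumption, and the function name and the `eps` guard are hypothetical.

```python
import numpy as np

def gamma_correct_and_recombine(S, L, gamma=2.2, W=255.0, eps=1e-6):
    """Recombine reflectance with a Gamma-corrected illumination.

    Assumed form: R = S / L, L' = W * (L / W)**(1 / gamma), S' = R * L'.
    """
    S = np.asarray(S, dtype=float)
    L = np.clip(np.asarray(L, dtype=float), eps, W)  # avoid division by zero
    R = S / L
    L_gamma = W * (L / W) ** (1.0 / gamma)
    return np.clip(R * L_gamma, 0.0, W)

# Hypothetical dark 2x2 value channel under a uniform, dim illumination.
S = np.array([[10.0, 20.0], [30.0, 40.0]])
L = np.full((2, 2), 64.0)

enhanced = gamma_correct_and_recombine(S, L)
identity = gamma_correct_and_recombine(S, L, gamma=1.0)
```

With `gamma=1` the illumination is left unchanged and the input is returned, while larger values brighten regions where the estimated illumination is low.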

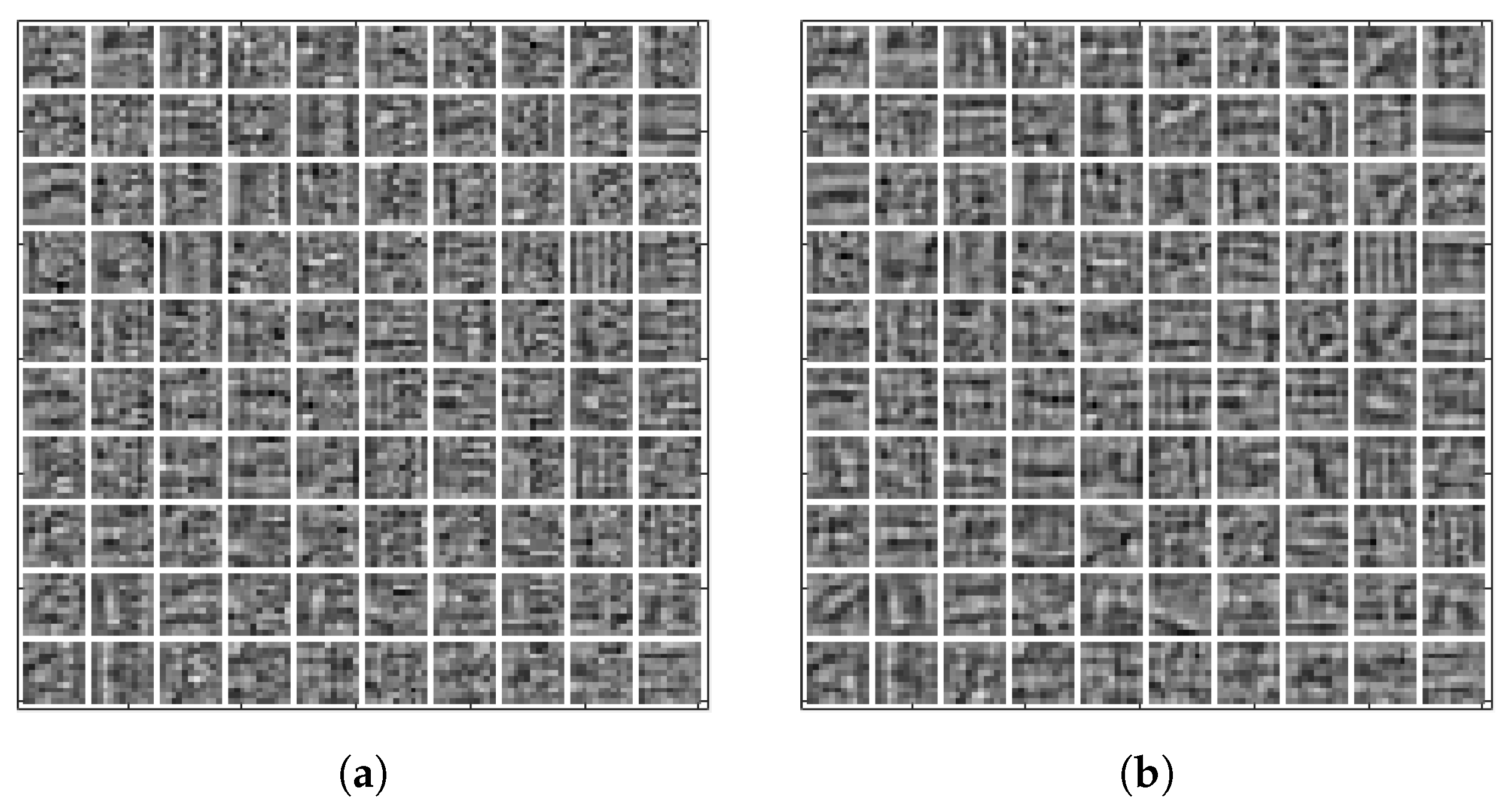

We use the following parameter values to compare the two methods in the test:

,

,

. For the stopping criteria, we set

in Algorithm 1. We verified the algorithm using MATLAB R2019a. When the dictionary to be learned is small, the calculation of the proposed method completes faster. With a larger dictionary, the reflectance function can capture more complex structure, but the computational complexity increases; therefore, an appropriate dictionary size must be chosen. In this paper, we experimentally set the size of the dictionary to

and use 100 filters (

), as seen in

Figure 3. To generate the general dictionary for large-scale image data, we use 2000 images from ImageNet [23]. As seen here, Figure 3a without SVD and Figure 3b with SVD are similar in appearance, but the dictionary with SVD includes more general features that yield better reconstruction results (Section 4.1 and Section 4.2). With SVD, the dictionary also looks simpler and closer to a basis. We collected training and test images for our experiment from [24,25], which are publicly available.

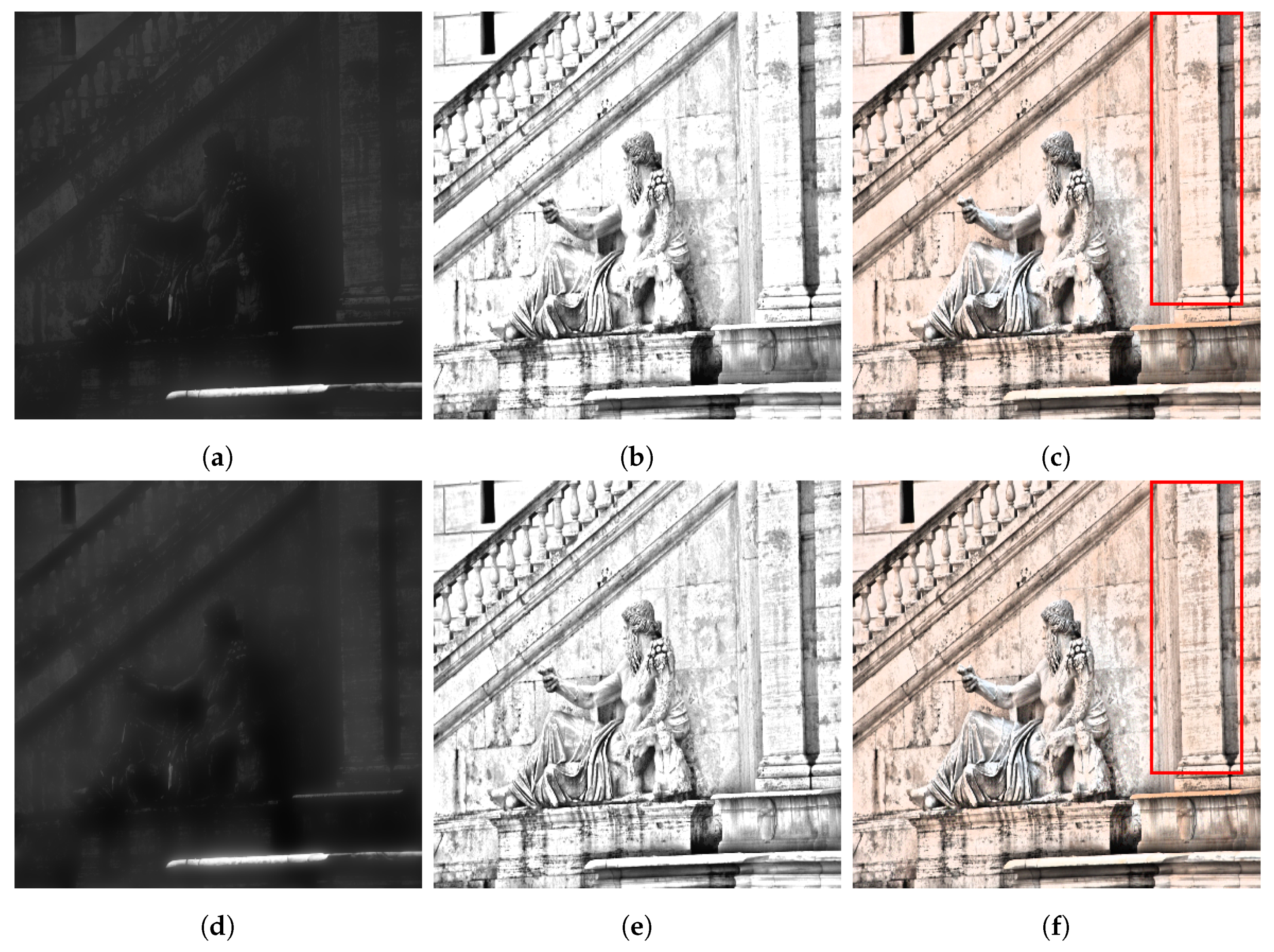

4.1. Real Image

As a first experiment with a real image, we test an image with a complex structure using a dictionary learned from an image with a simple structure. Comparing the sparse coding dictionary with the CSC dictionary shows that the reflectance dictionary of CSC is superior. Using the dictionary learned from Figure 4a, the reflectance and enhanced images are obtained through CSC and through sparse coding. Because the single image used for training differs from the image used for testing, neither CSC nor sparse coding separates illumination and reflectance well. Although the separation through CSC is not complete, it still yields better results than sparse coding. The reflectance in Figure 4b and the enhanced image in Figure 4c obtained through CSC show that the details of the cat’s hair and texture are better restored.

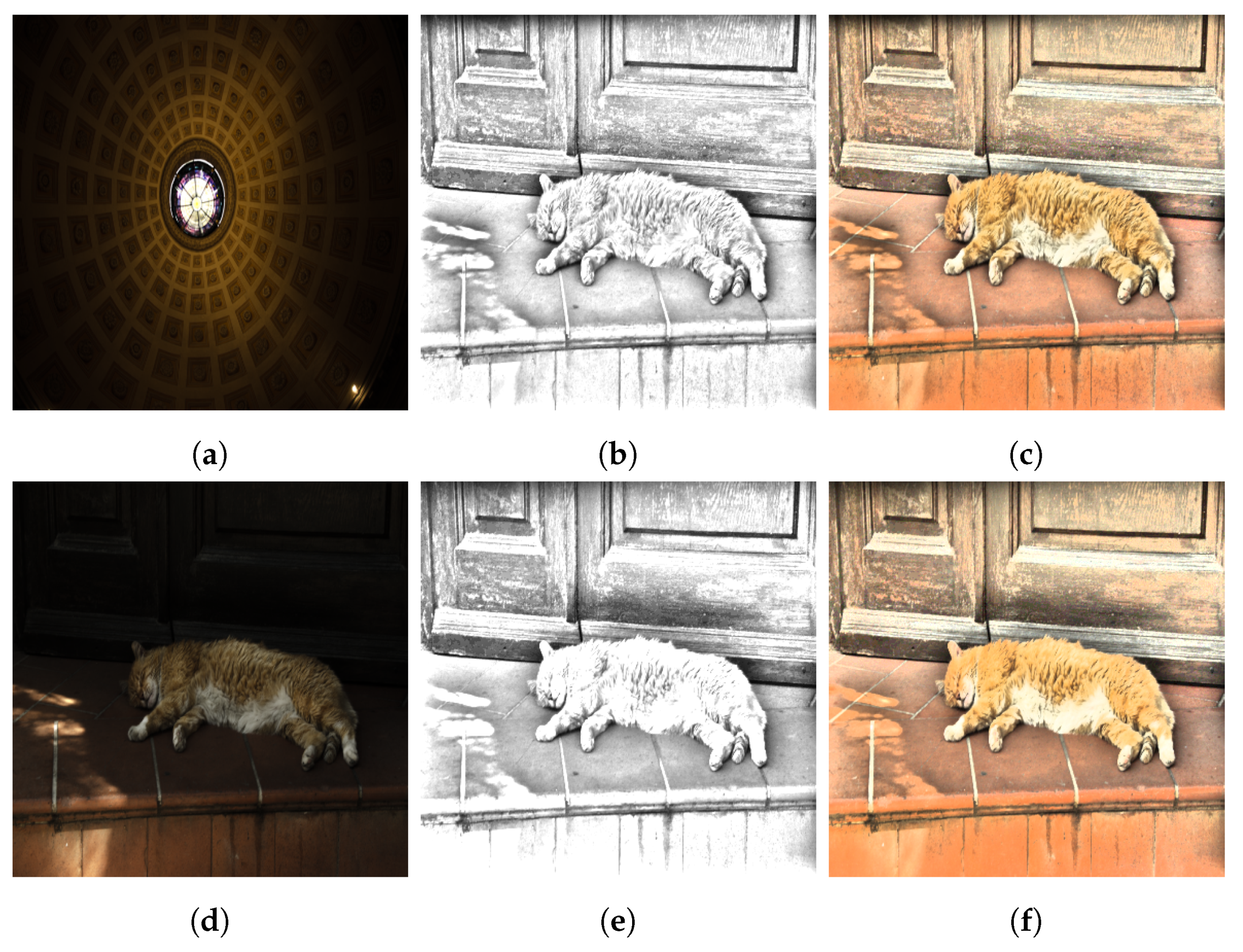

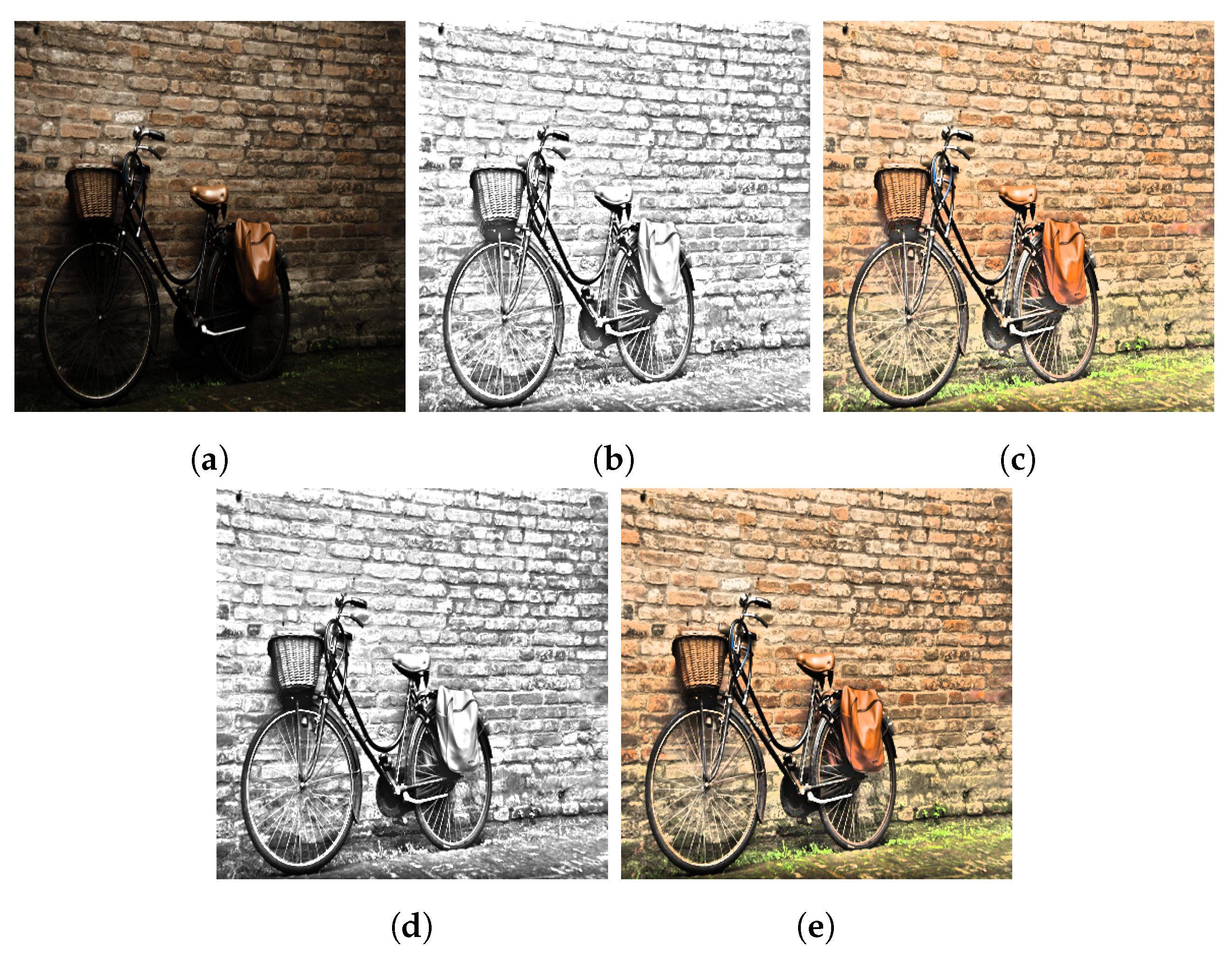

Second, Figure 5 compares the results of sparse coding on a single image, CSC on a single image, CSC with a general dictionary generated from a large dataset, SVD-CSC on a single image, and SVD-CSC with a general dictionary generated from a large dataset. These comparisons contrast the performance of the general dictionary generated from large datasets with that of a single-image dictionary. Figure 1a is an original image used as the training and test image for single image sparse coding, single image CSC, and single image SVD-CSC. In addition, it is used as a test image for the general dictionary. As shown in Figure 5c, the result of using SVD-CSC on a single image has better image contrast than the result using a general dictionary. However, this difference is minimal, and Figure 5e, which uses the general dictionary in SVD-CSC, also shows good results. Figure 5e using the SVD-CSC general dictionary looks almost the same as Figure 5b using the CSC single dictionary. This is because SVD is not used for training in large datasets, and thus, important basis data is not extracted and used in the iteration. Therefore, only the cost function value of the reflectance function itself is minimized, and local reflectance differences are not preserved.

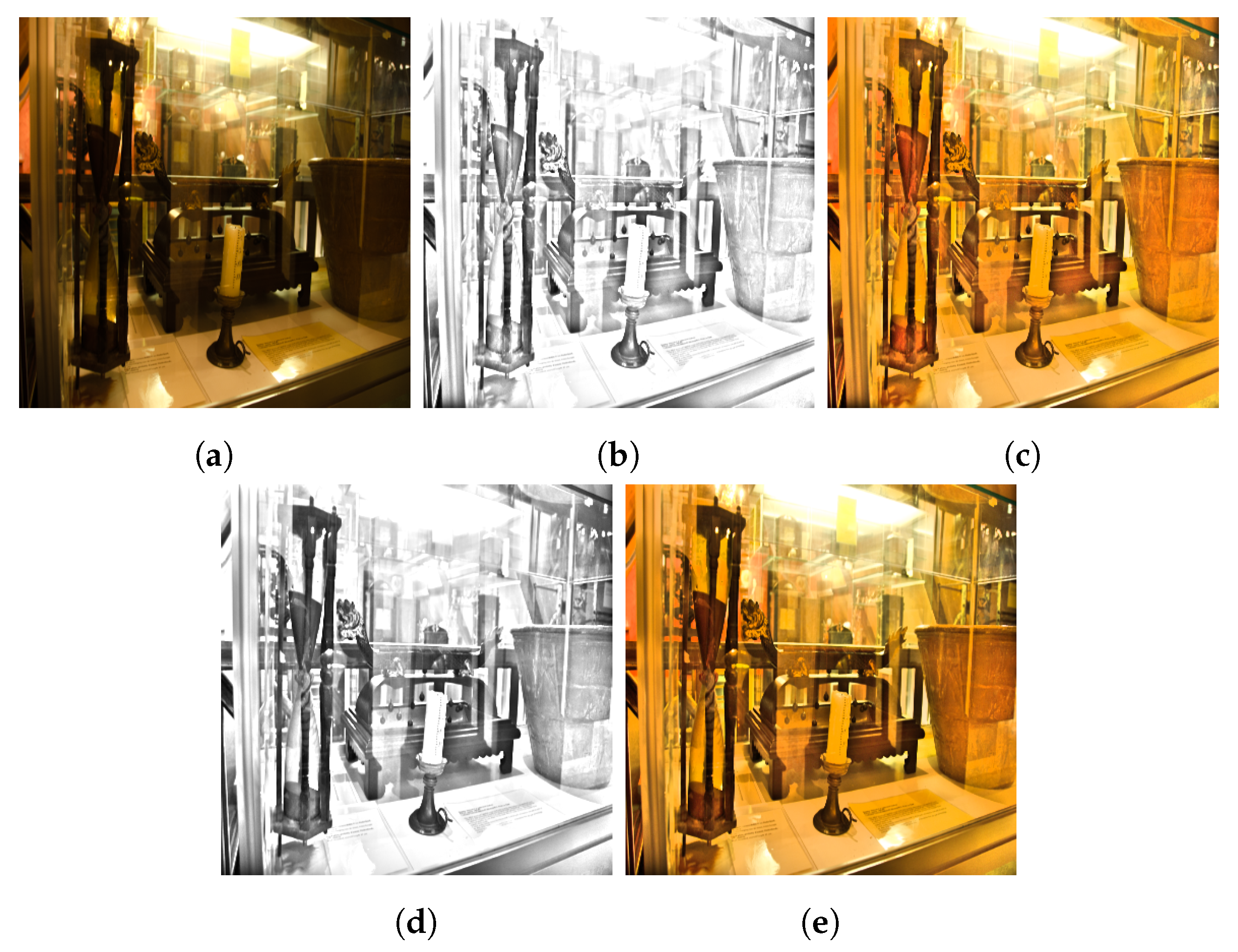

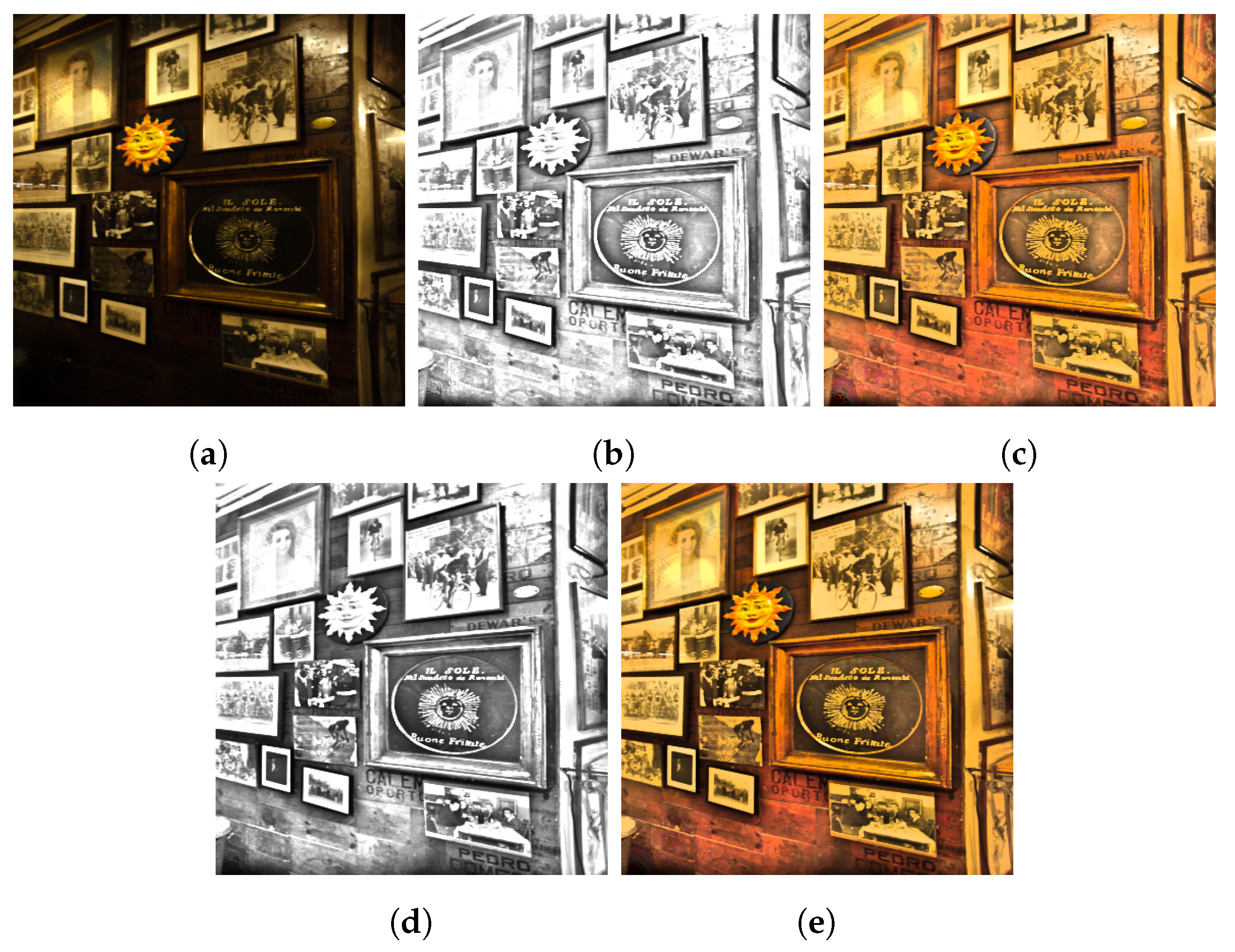

Third, we compare the SVD-CSC method with the CSC method without SVD. We show through this comparison that a dictionary using SVD can be more general than the one without SVD. As mentioned before, both dictionaries were trained with ImageNet [

23] datasets. In

Figure 6,

Figure 7 and

Figure 8, the results of the general dictionary through SVD-CSC have better image quality than those without SVD. In particular, the SVD-CSC shows better local reflectance than the CSC, when looking at the wheels of the bicycle in

Figure 6, the hourglass and candlestick in

Figure 7, and the frames in

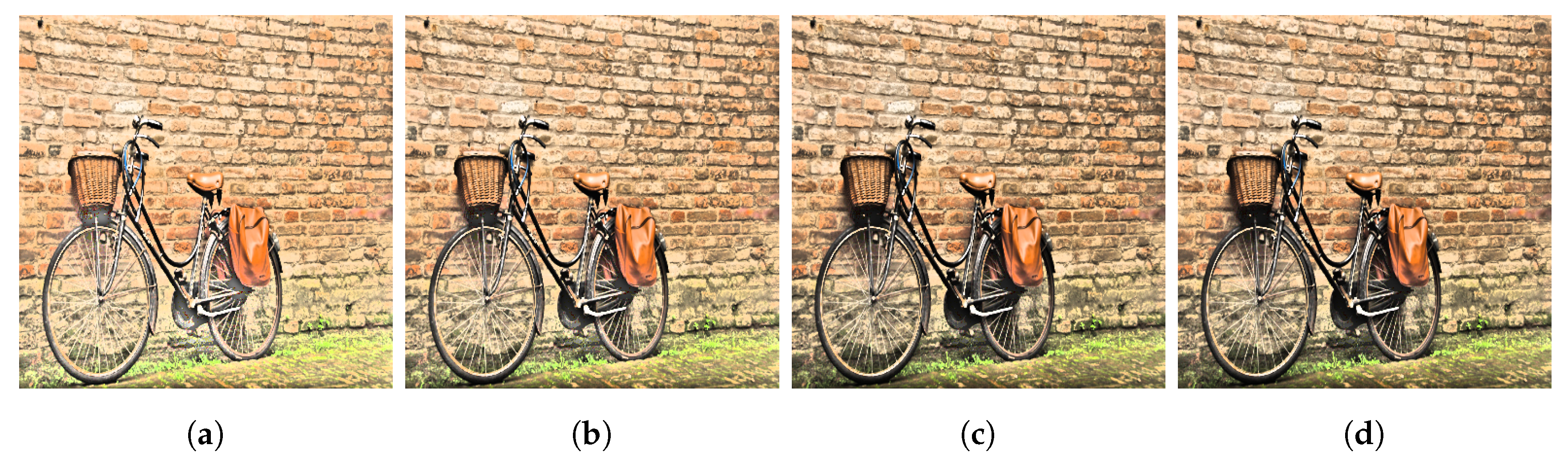

Figure 8. As mentioned earlier, this difference is even more pronounced in the absence of SVD, especially in terms of local reflection, because dictionaries do not contain important information, but are only directed at minimizing the energy in the least-squares problem. On the other hand, SVD-CSC generates a dictionary using SVD to decompose on the best basis in the least-squares problem.

Additionally, we examine the effect of SVD-CSC by reconstructing the reflectance from a dictionary of limited memory. For this purpose, we compare the results when the allowable memory is altered by changing the size of the filter. If the size of the filter is reduced, the elements constituting the dictionary are reduced. Consequently, each filter compresses and expresses information more clearly than when the filter size is large, and when reconstructing reflectance from such information, each filter must have more important information such as the basis of SVD than the preceding one to obtain better reconstruction results. In

Figure 9a, it is seen that when using

filters, the large data CSC does not express the local reflectance well. Meanwhile, the large data SVD-CSC in

Figure 9b does not express perfect local reflectance, but it shows better results than the large data CSC. When using

filters in

Figure 9c,d, both large data CSC and large data SVD-CSC have sufficient information, and hence, they show similar results. Therefore, the proposed large data SVD-CSC method can achieve better reconstruction results by constructing a compact dictionary with limited memory.

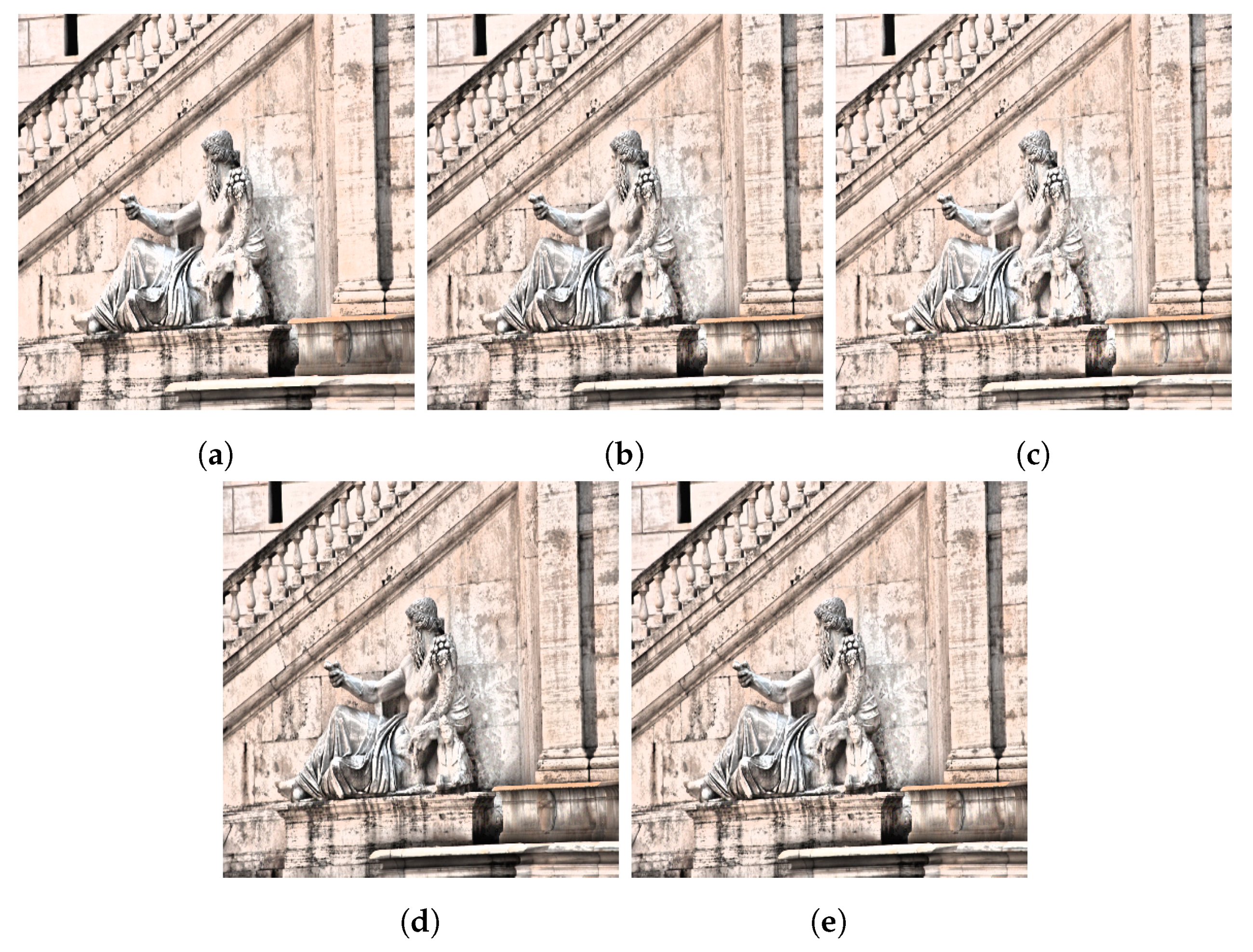

In Figure 5, Figure 6, Figure 7 and Figure 8, we show the image enhancement effect on low-light images. Finally, we show that high-quality reflectance images can be obtained by removing the halo artifact from the image reconstruction. A common problem with Retinex-based algorithms is the appearance of a halo artifact around high-contrast areas owing to the rapid change in illumination. The halo artifact is shown in the red box in Figure 10. As shown in Figure 10c, the halo artifact usually appears because of the smoothness condition imposed on the illumination in the Retinex model [16]. However, in Figure 10e, our proposed method reduces this artifact because the reflectance can be reconstructed in more detail. Therefore, our proposed method can reduce the halo artifact while using limited memory in these reconstruction applications, and can acquire high-quality images with enhanced edges and texture, similar to super resolution.

Objective Evaluation

A blind image quality assessment called the natural image quality evaluator (NIQE) [26] was used to evaluate the enhanced results. A lower NIQE value represents a higher image quality. Since NIQE evaluates only the naturalness of an image, we also used a color image assessment called the autoregressive-based image sharpness metric (ARISM) [27]. In Table 1, the large data SVD-CSC method had lower NIQE/ARISM scores than the sparse coding and large data CSC methods; thus, our method shows a good balance and performance. In addition, the large data SVD-CSC method performs better than or similarly to the single image CSC, and slightly worse than the single image SVD-CSC, without a significant difference. Therefore, the objective evaluation shows that the general dictionary of the large data SVD-CSC method performs robustly in most cases.

We reconstruct the reflectance from the limited memory dictionary illustrated in Figure 5, Figure 6, Figure 7 and Figure 8 to confirm the effect of SVD-CSC. The smaller the filter size of the dictionary, the higher the compression ratio, which degrades the reflectance reconstruction and leads to poor results. Nevertheless, our proposed SVD-CSC method maintains good objective performance. In

Table 2, when the filter size is

, CSC and SVD-CSC express reflectance with sufficient information, and both show similar results that are good. However, when the filter size is

, the performance of the CSC is greatly reduced, whereas the SVD-CSC shows similar performance to the

filter CSC. Therefore, it can be seen that the proposed SVD-CSC can be effectively applied when the compression ratio is high.

4.2. Synthesized Image

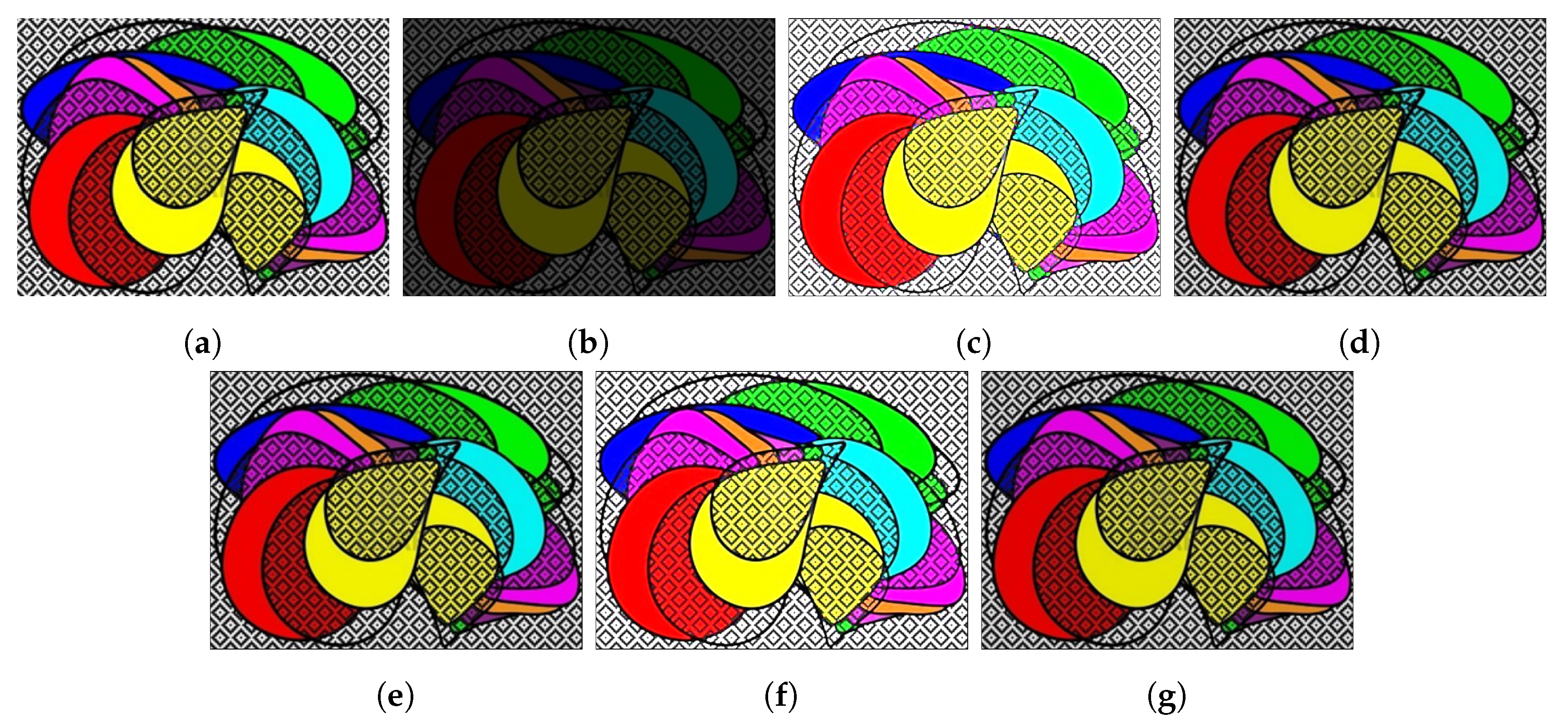

We compare the performance of the proposed SVD-CSC and other methods with a synthesized image.

Figure 11 shows the results of image tests using an image with uniformly dark colors and the same degrees of illumination. From

Figure 11b, image enhancement results shown in

Figure 11c–g were obtained using sparse coding, single image CSC, single image SVD-CSC, large data CSC, and large data SVD-CSC methods, respectively. In

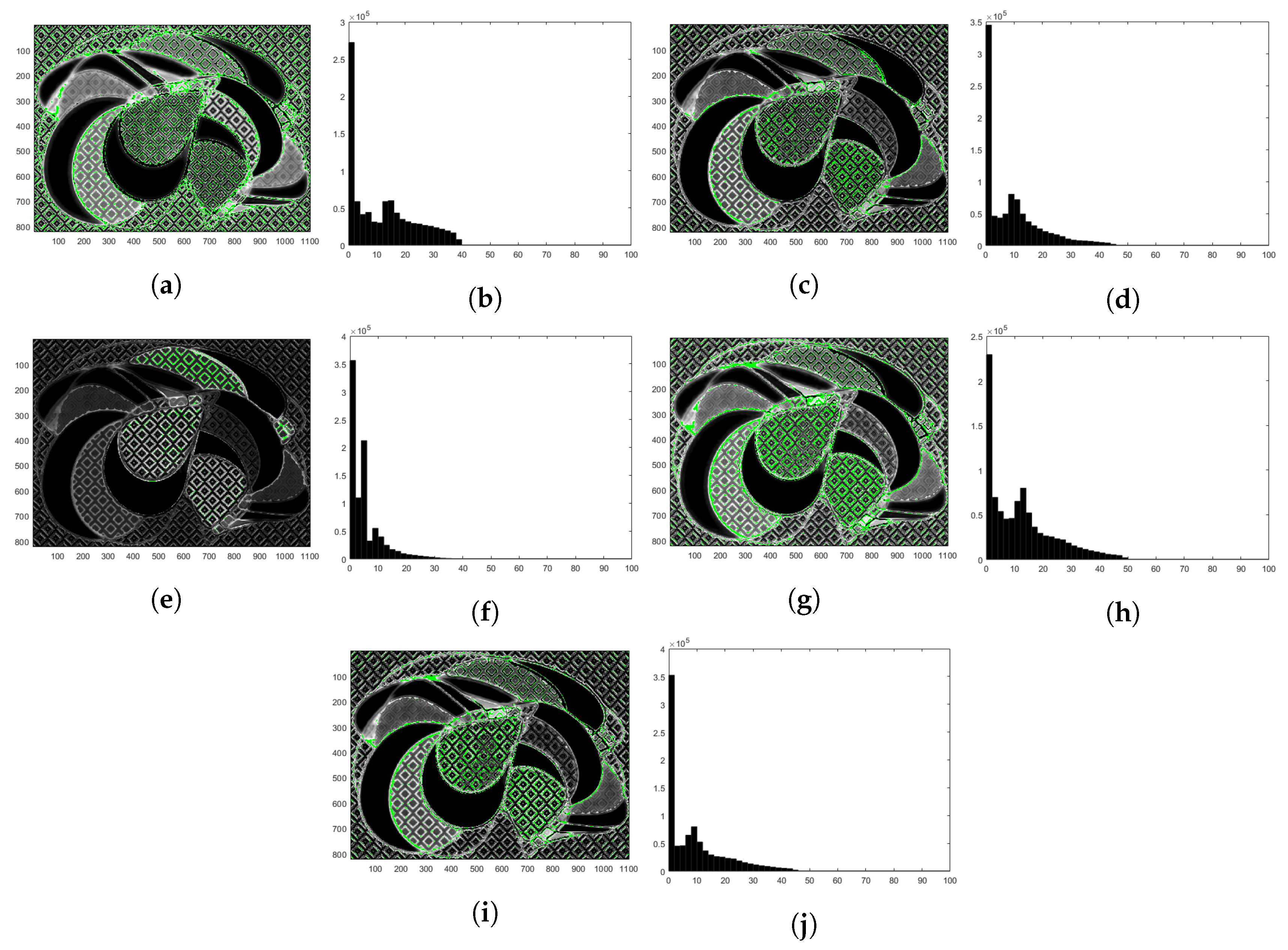

Figure 12, we compare the methods using the S-CIELAB color metric [

28], which includes a spatial processing step, and is useful and efficient for measuring color reproduction errors in digital images. We show the S-CIELAB errors between

Figure 11a and the sparse coding results in

Figure 11c, between

Figure 11a and the single image CSC results in

Figure 11d, between

Figure 11a and the single image SVD-CSC results in

Figure 11e, between

Figure 11a and the large data CSC results in

Figure 11f, and between

Figure 11a and the large data SVD-CSC results in

Figure 11g.

Figure 12a,c,e,g,i show a green color when the S-CIELAB error exceeds 30 units.

Figure 12b,d,f,h,j show the histogram distribution of the S-CIELAB error. The S-CIELAB error histogram distributions give the numbers of pixels per error unit. In this test, the S-CIELAB error values for the proposed method (SVD-CSC) indicate that 8.1% of the image exceeded 30 units, whereas 22.9%, 7.9%, 6.4%, and 13.8% of the image exceeded 30 units with sparse coding, single image CSC, single image SVD-CSC, and large data CSC respectively. Quantitatively, the error of single image SVD-CSC is the least, but the dictionary created in this method cannot be used in general. On the other hand, in the case of the large data SVD-CSC, the learned dictionary can be used robustly in general, with performance close to that of the single image SVD-CSC.
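The two summaries reported above, the percentage of pixels whose error exceeds 30 units and the histogram of pixels per error unit, can be computed from any per-pixel error map as sketched here. The S-CIELAB error itself is not implemented; `error_map` is assumed to be an already computed array, and the small example values are made up for illustration.

```python
import numpy as np

def exceedance_percentage(error_map, threshold=30.0):
    """Percentage of pixels whose error is strictly above `threshold`."""
    error_map = np.asarray(error_map, dtype=float)
    return 100.0 * np.count_nonzero(error_map > threshold) / error_map.size

def error_histogram(error_map, bin_width=1.0):
    """Number of pixels per error unit (bins of width `bin_width` from 0)."""
    error_map = np.asarray(error_map, dtype=float)
    upper = max(np.ceil(error_map.max() / bin_width) * bin_width, bin_width)
    edges = np.arange(0.0, upper + bin_width, bin_width)
    counts, edges = np.histogram(error_map, bins=edges)
    return counts, edges

# Made-up 2x3 error map: two of the six pixels exceed 30 units.
errors = np.array([[5.0, 12.0, 31.0],
                   [45.0, 29.9, 30.0]])
pct = exceedance_percentage(errors)
counts, edges = error_histogram(errors)
```

Reporting both quantities is useful because the percentage gives a single comparable number per method, while the histogram shows how the errors are distributed below and above the threshold.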