Novel Online Optimized Control for Underwater Pipe-Cleaning Robots

Abstract

1. Introduction

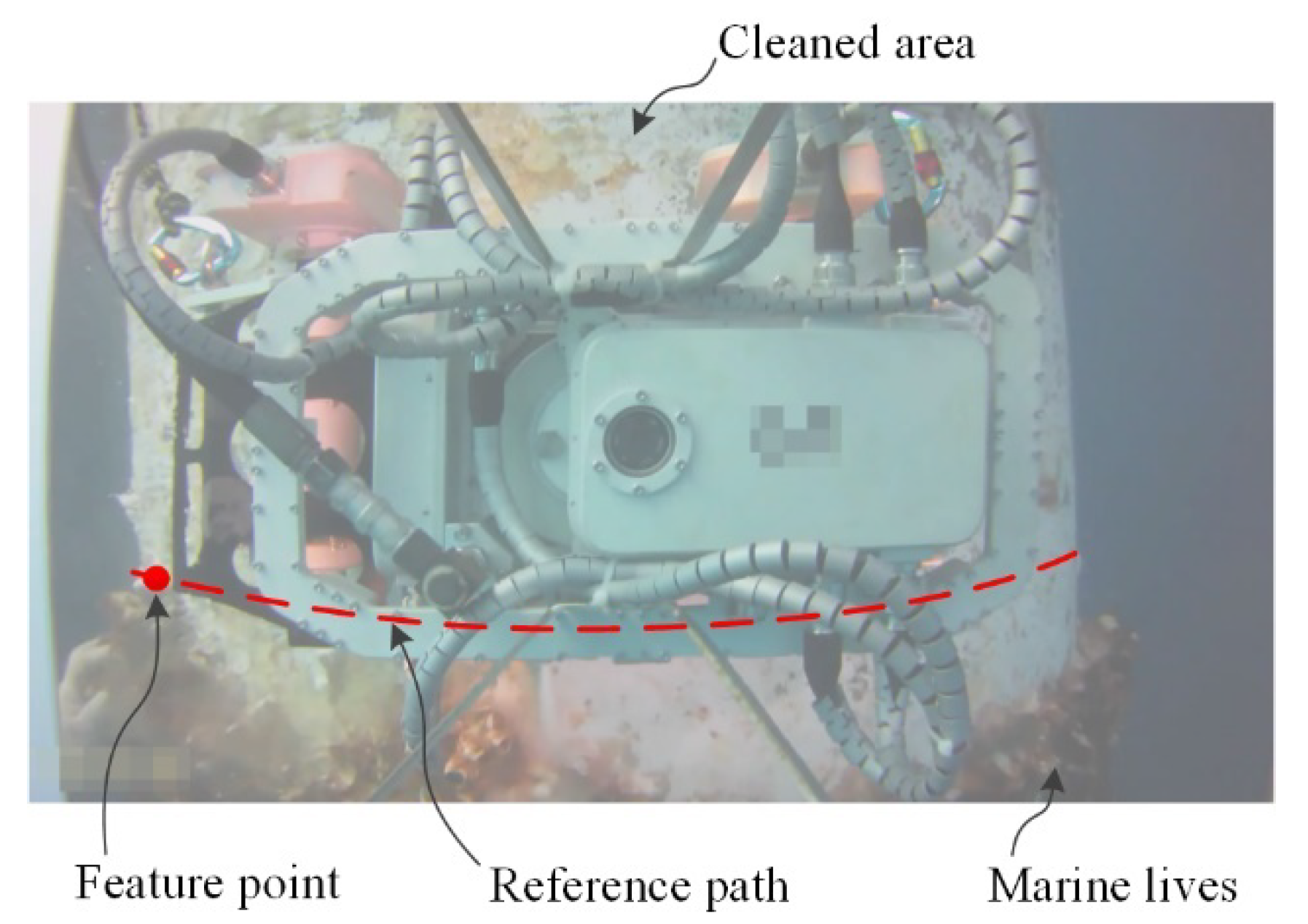

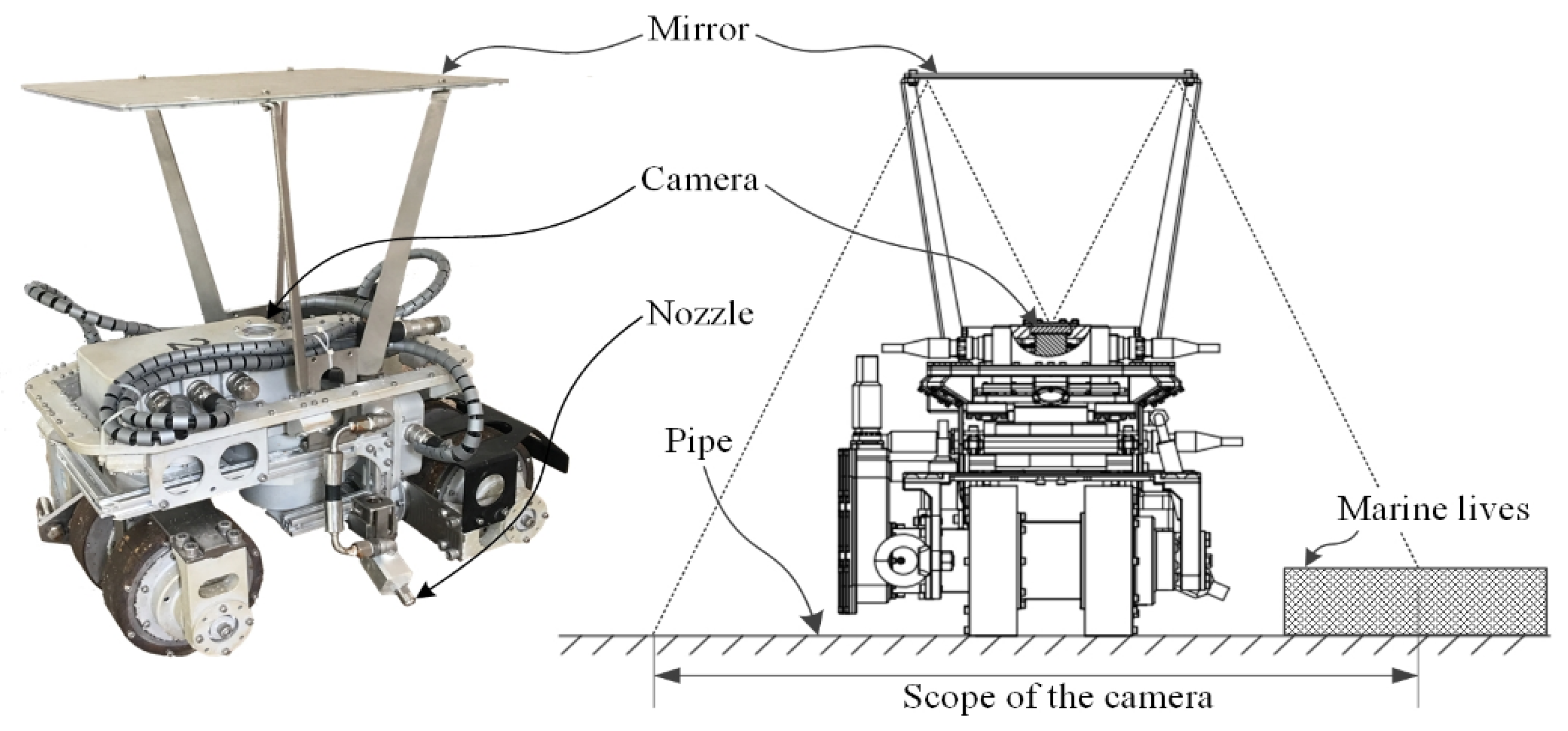

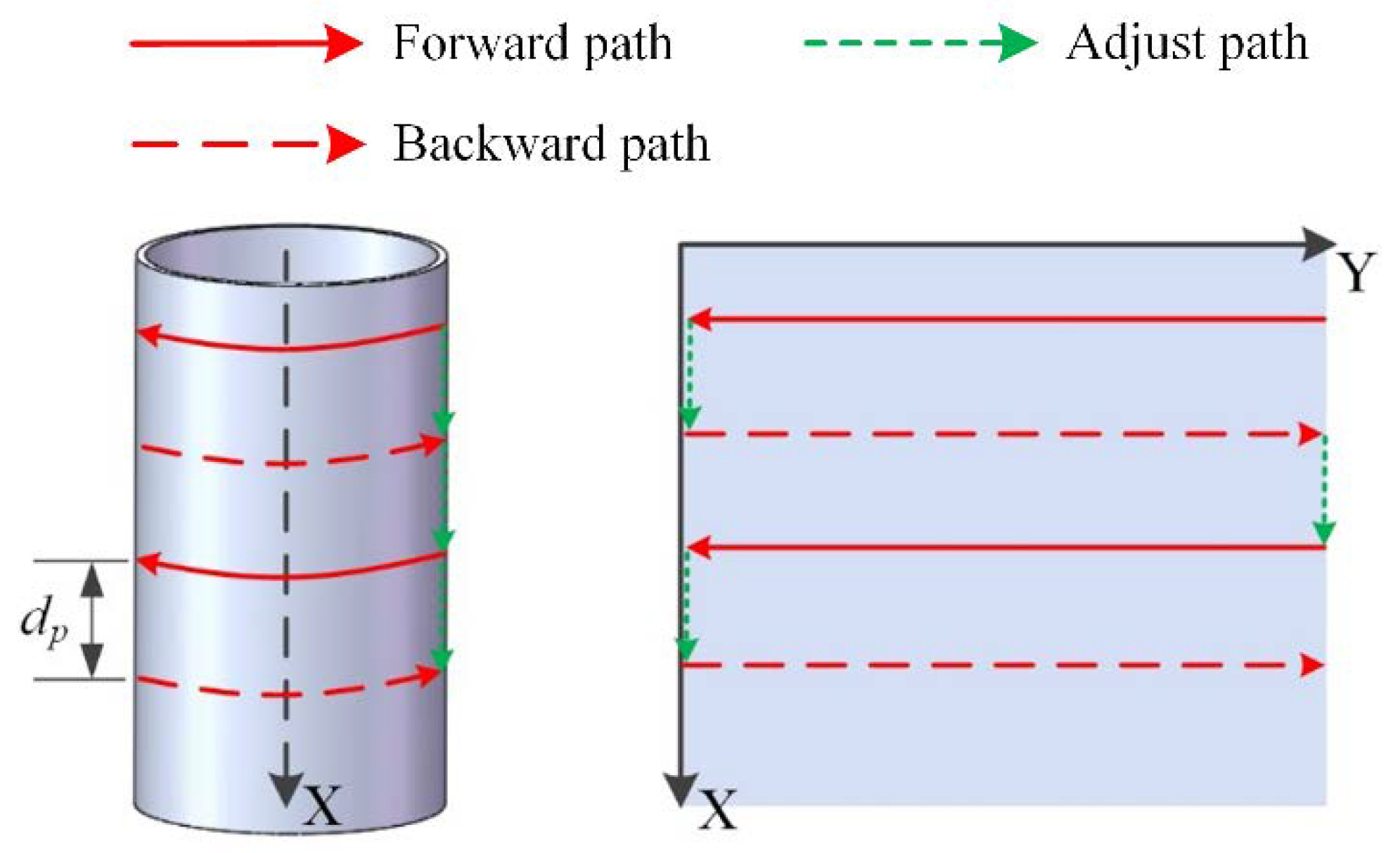

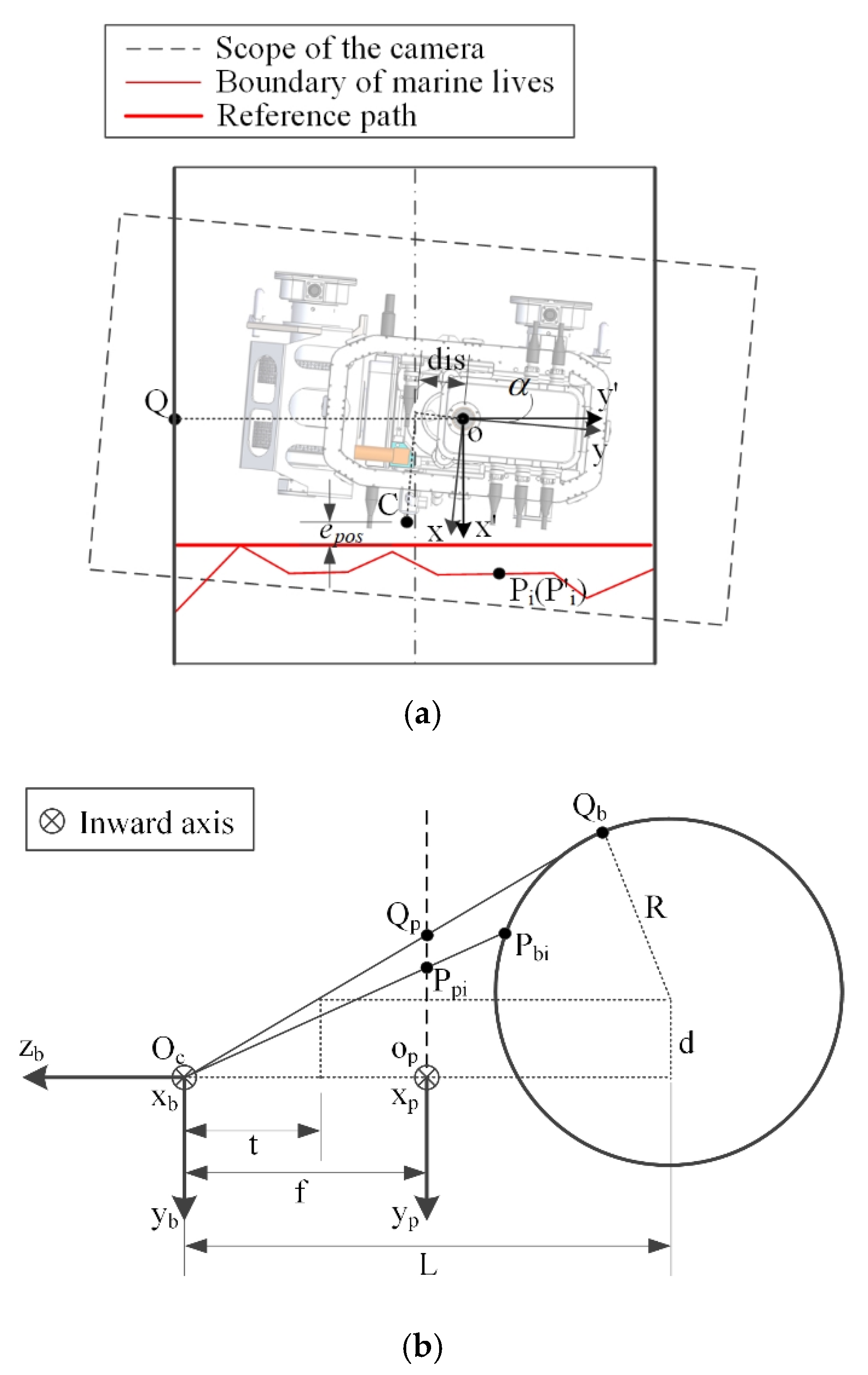

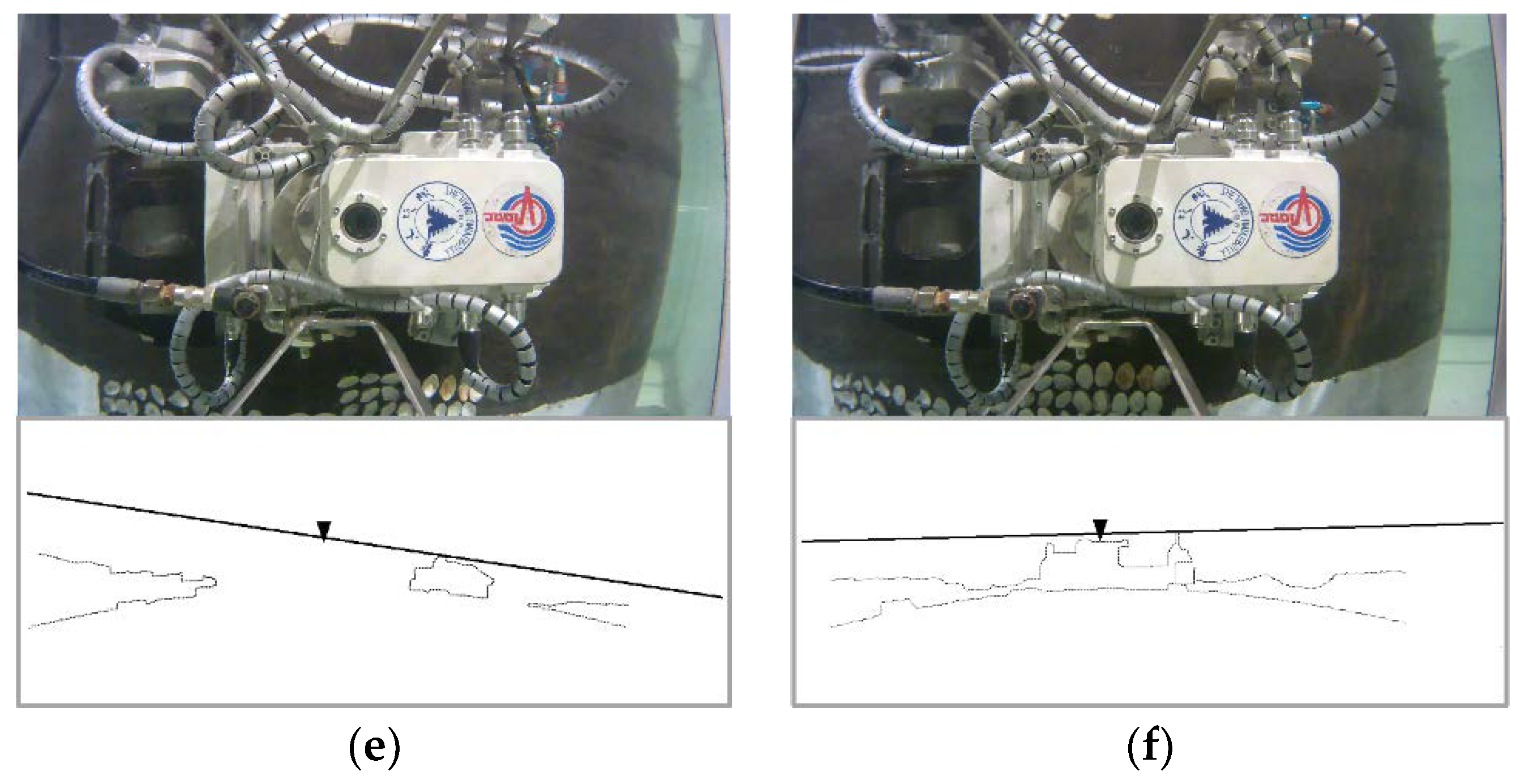

2. Catadioptric Panoramic Image-Based Vision Localization

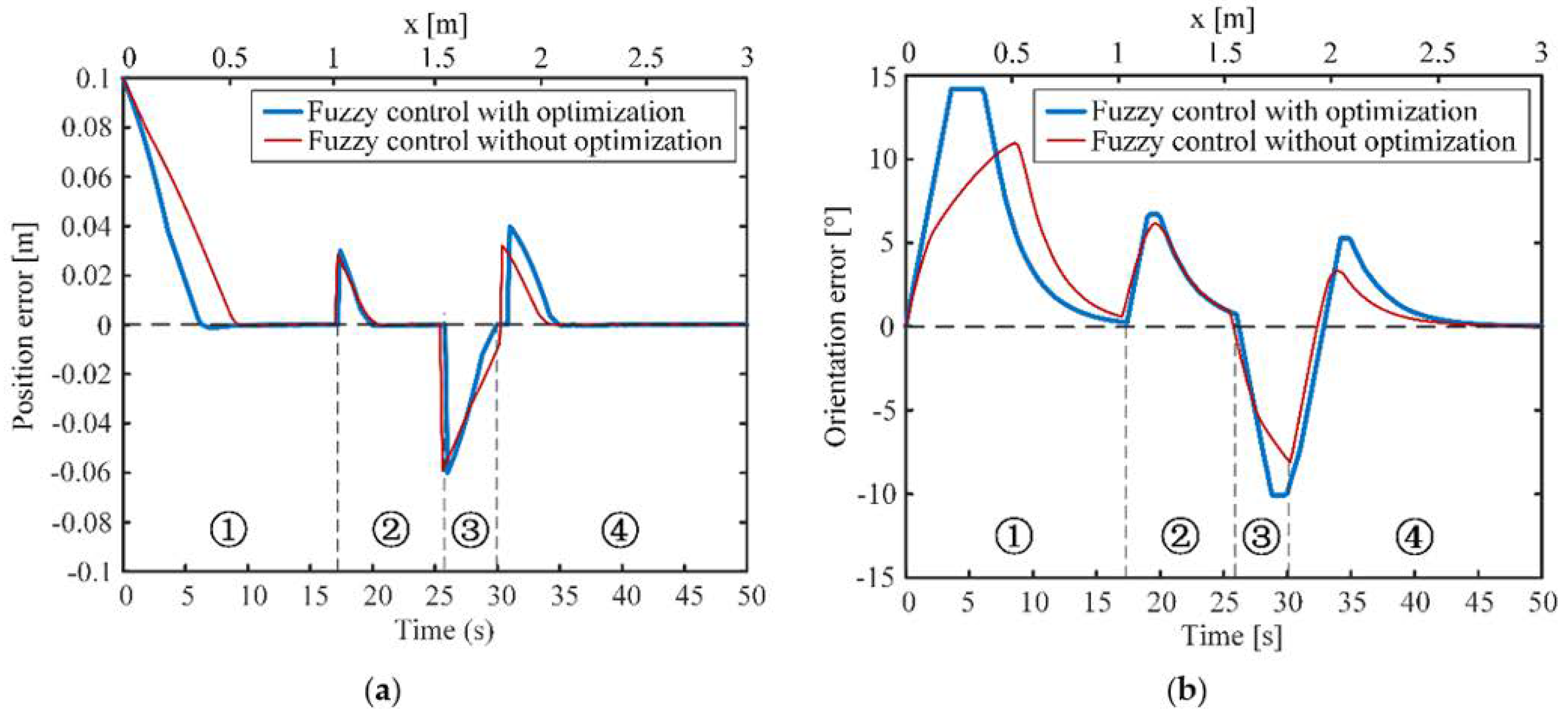

2.1. Prototype Description

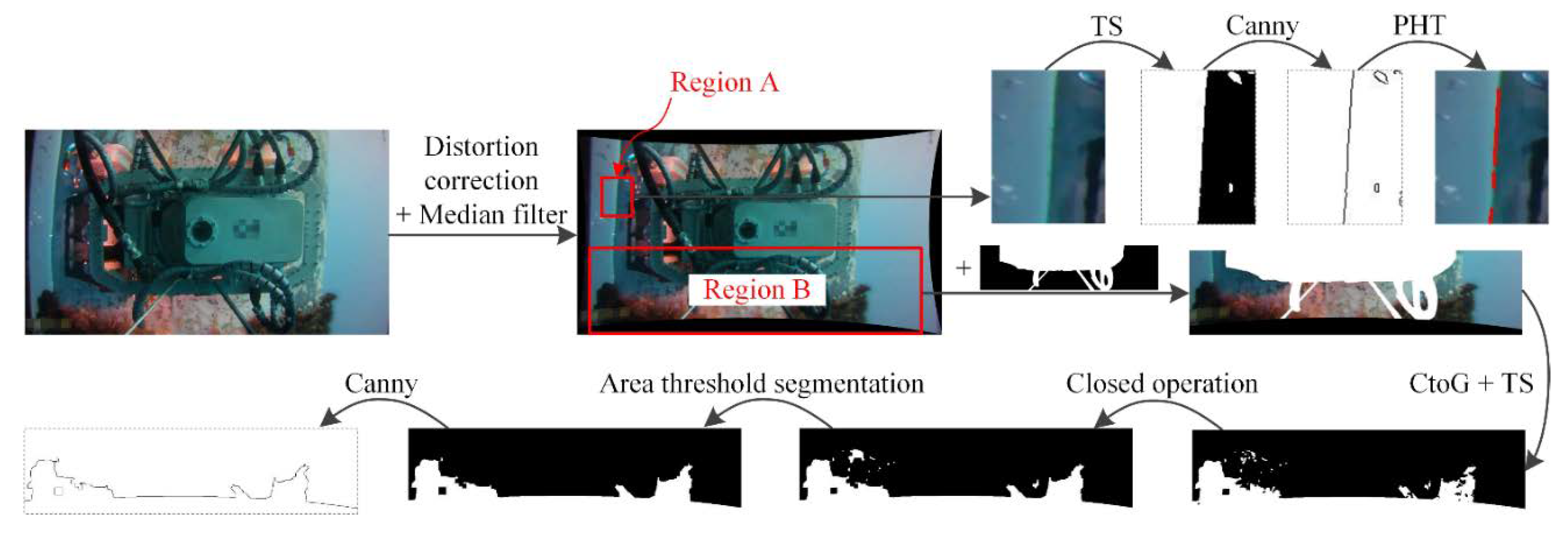

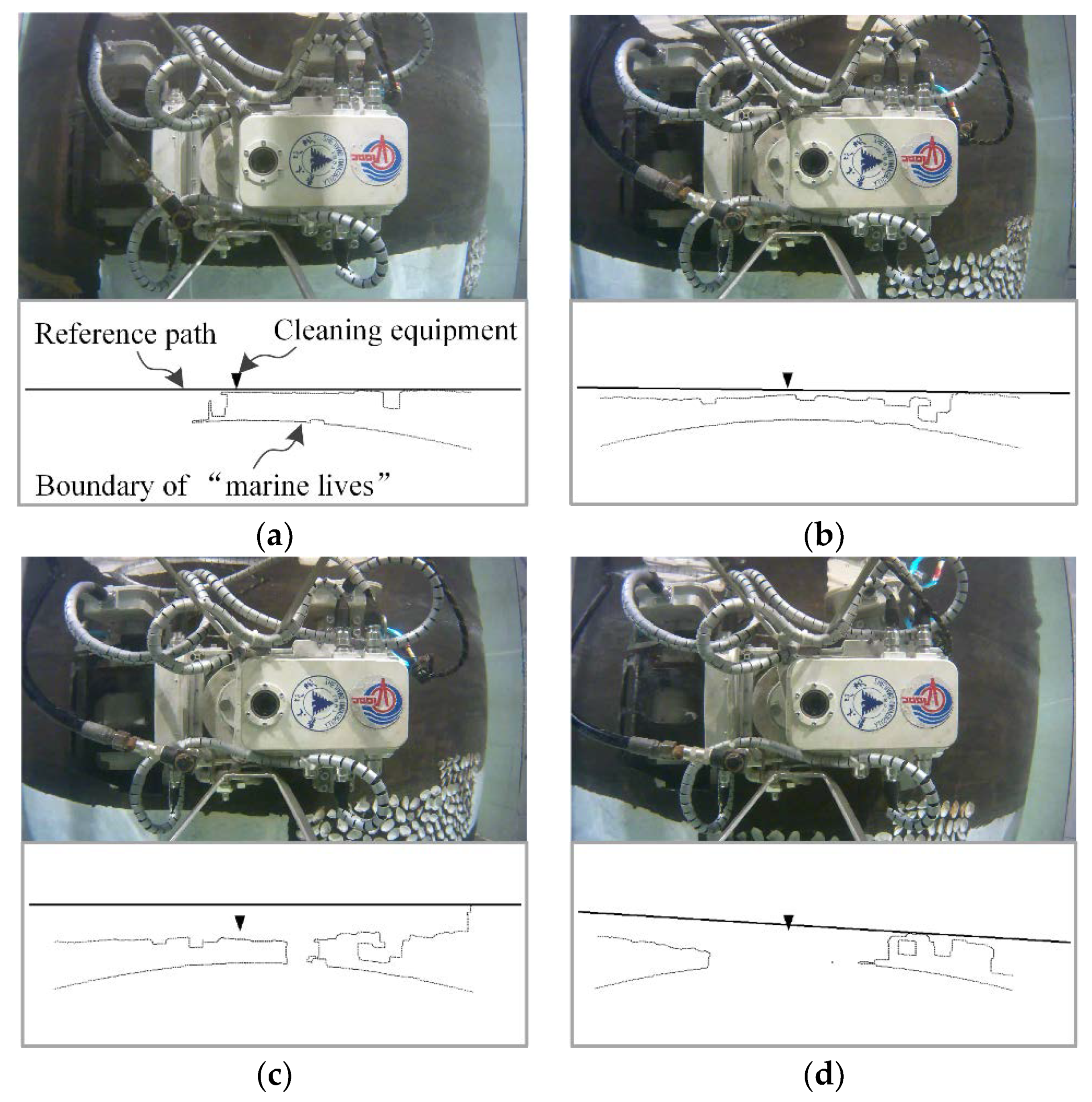

2.2. Feature Extraction

2.3. Projection Transformation Rules

2.4. Deviation Calculation

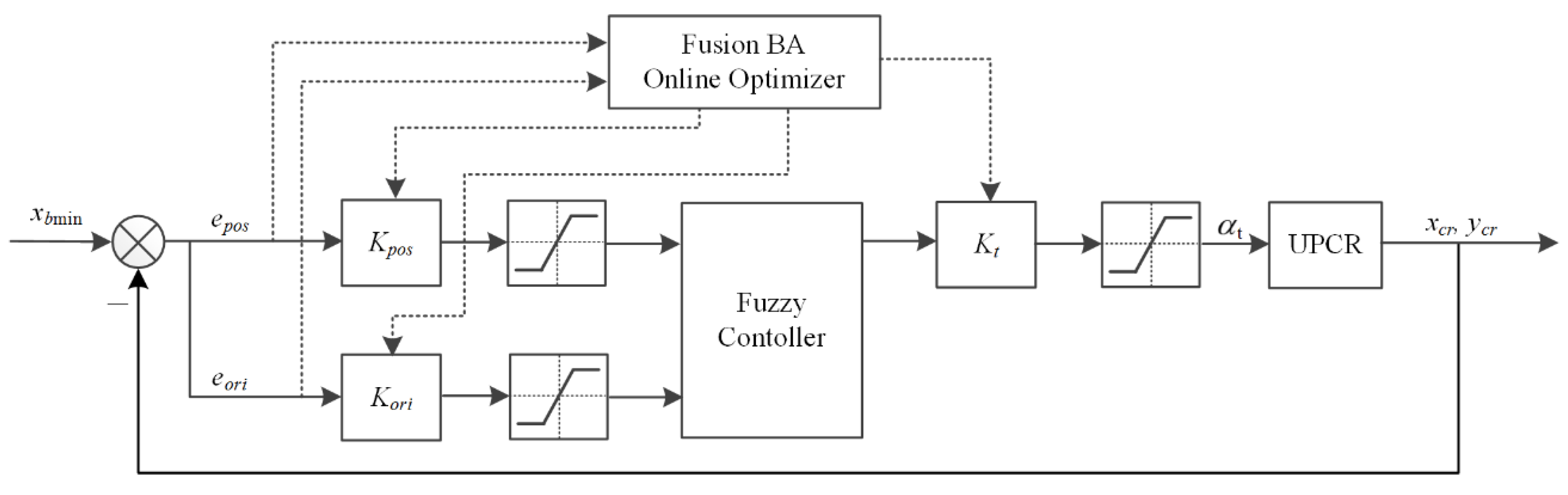

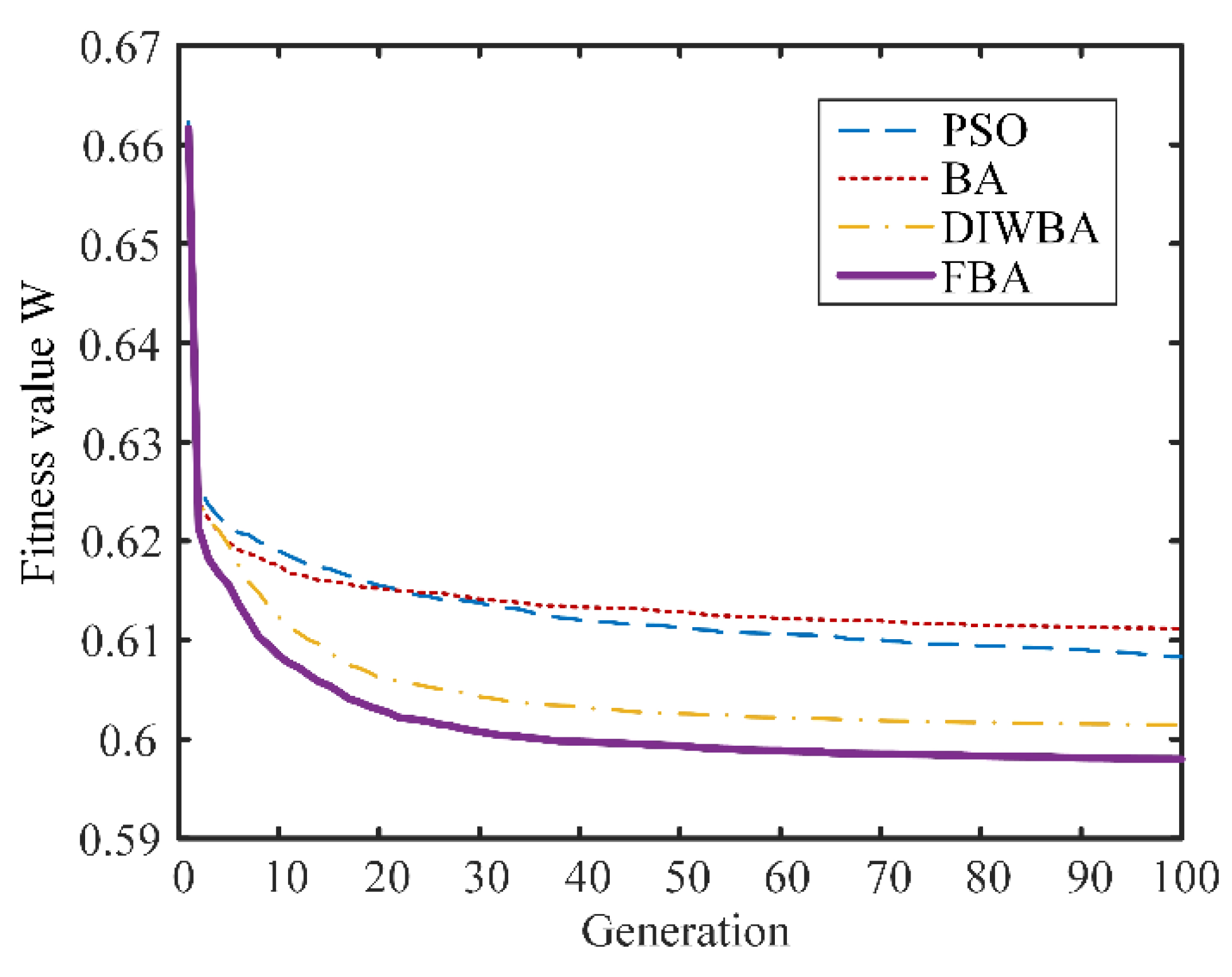

3. Fusion Bat Algorithm-Optimized Fuzzy Controller

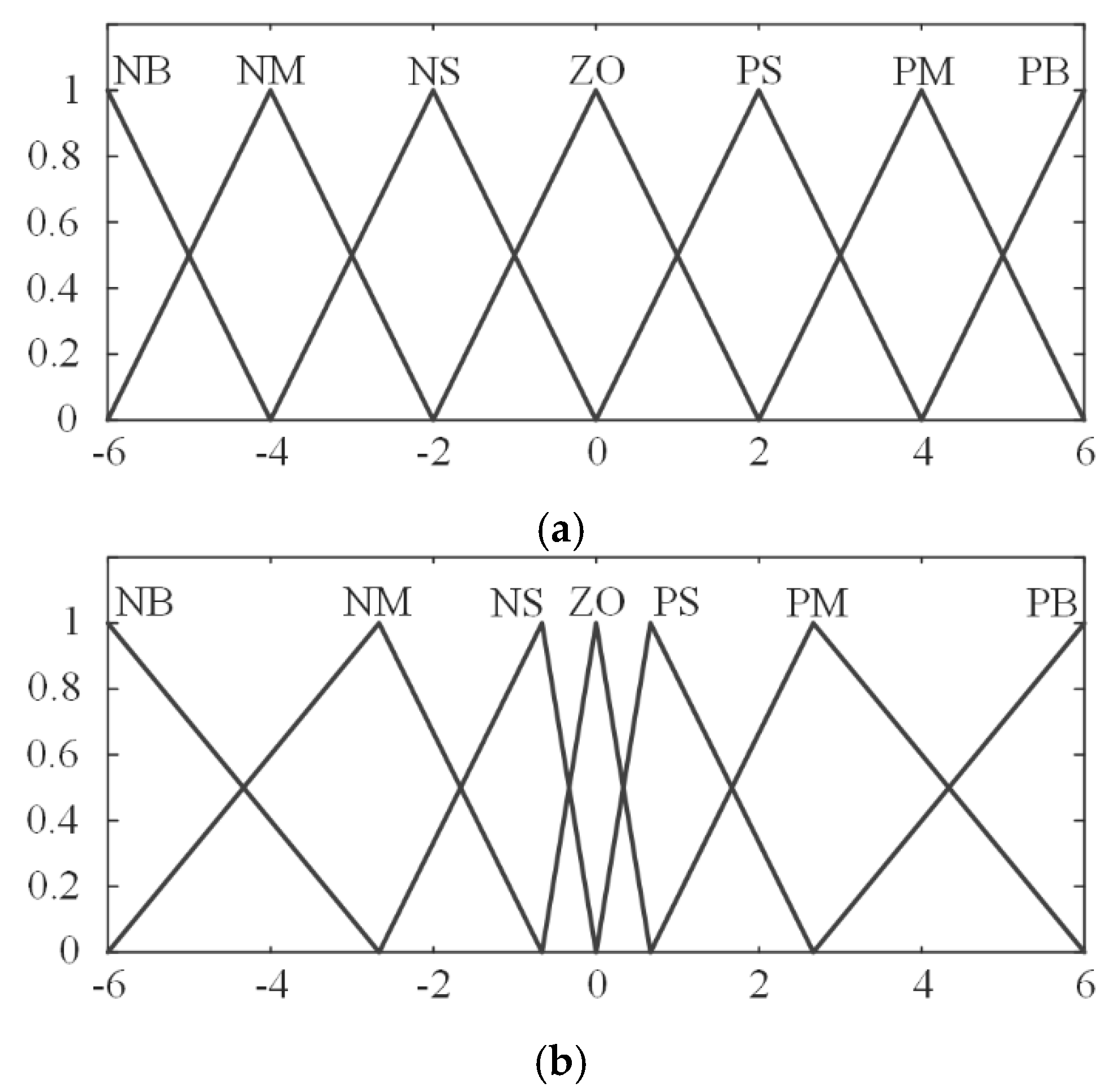

3.1. Fuzzy Controller Design

3.2. Bat Algorithm Optimization

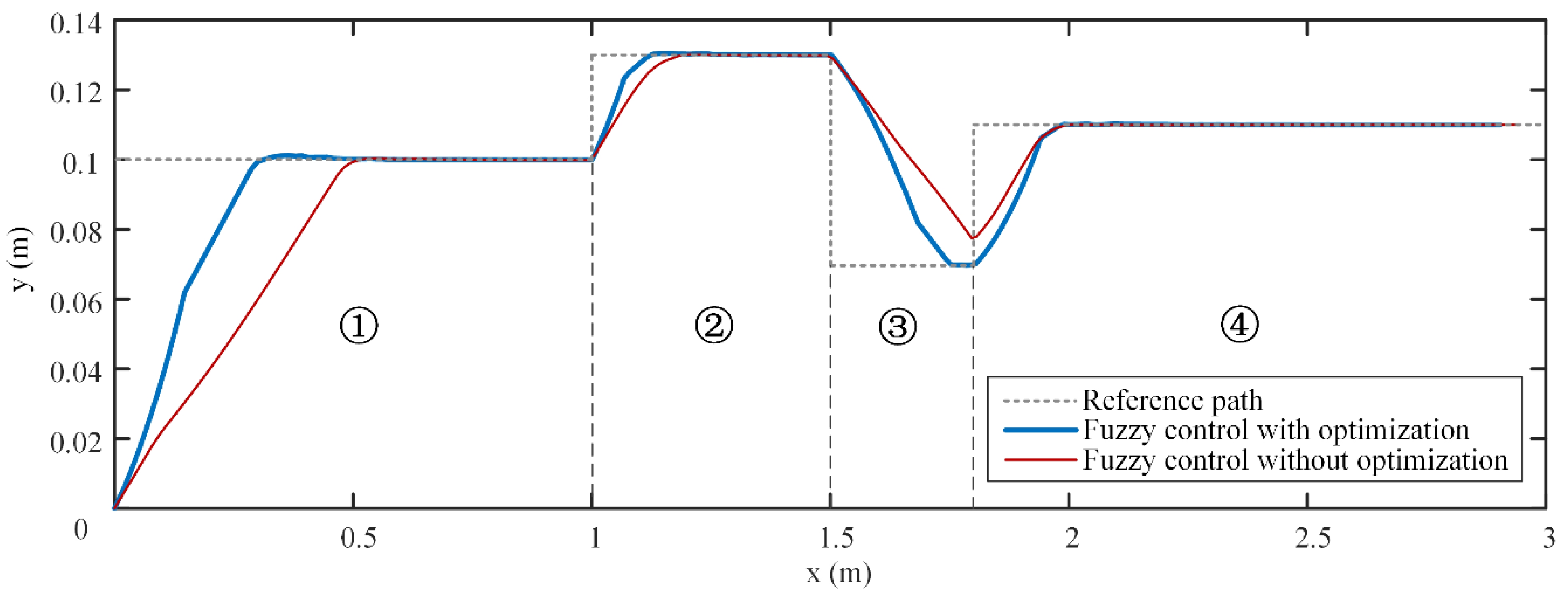

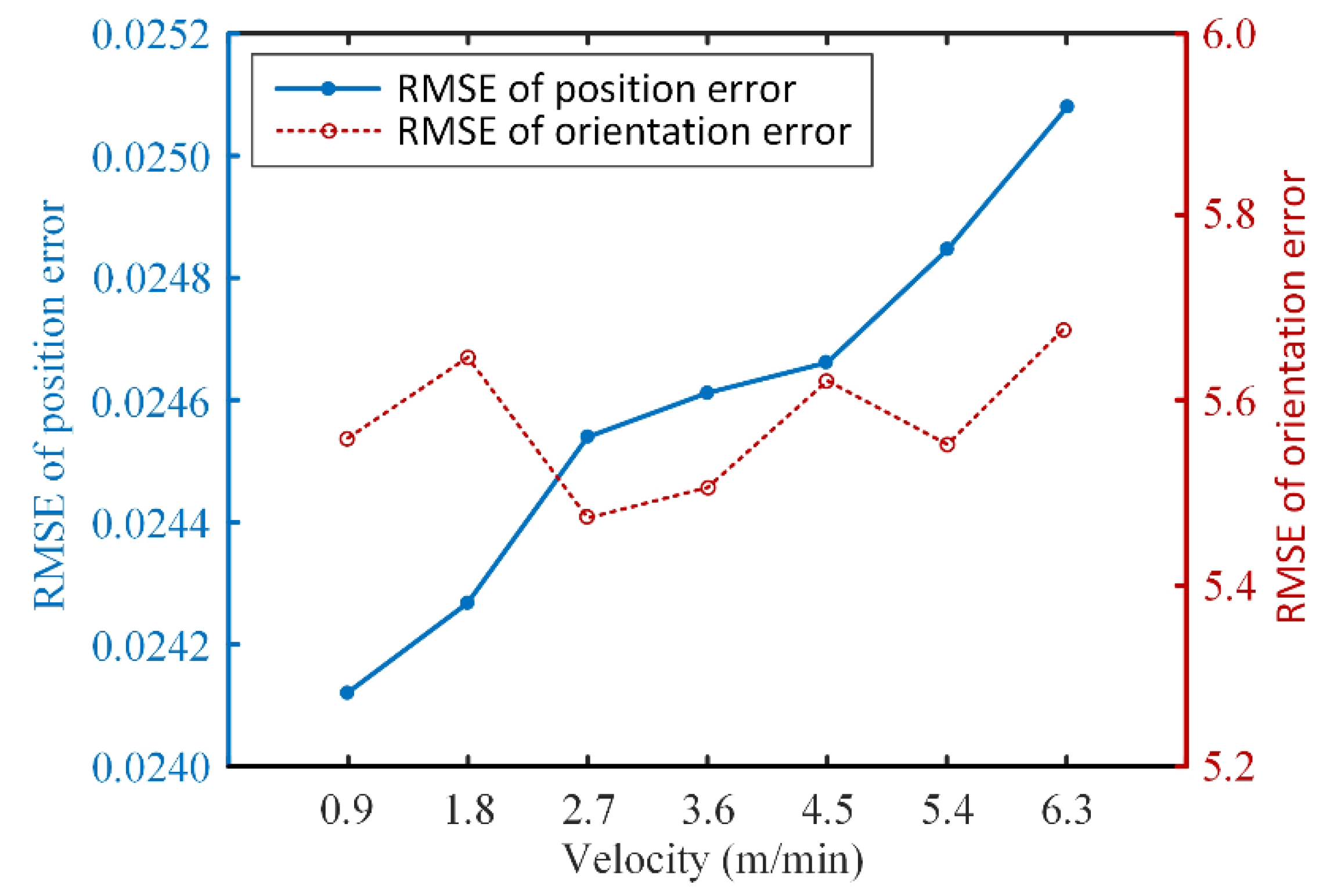

4. Simulations and Results

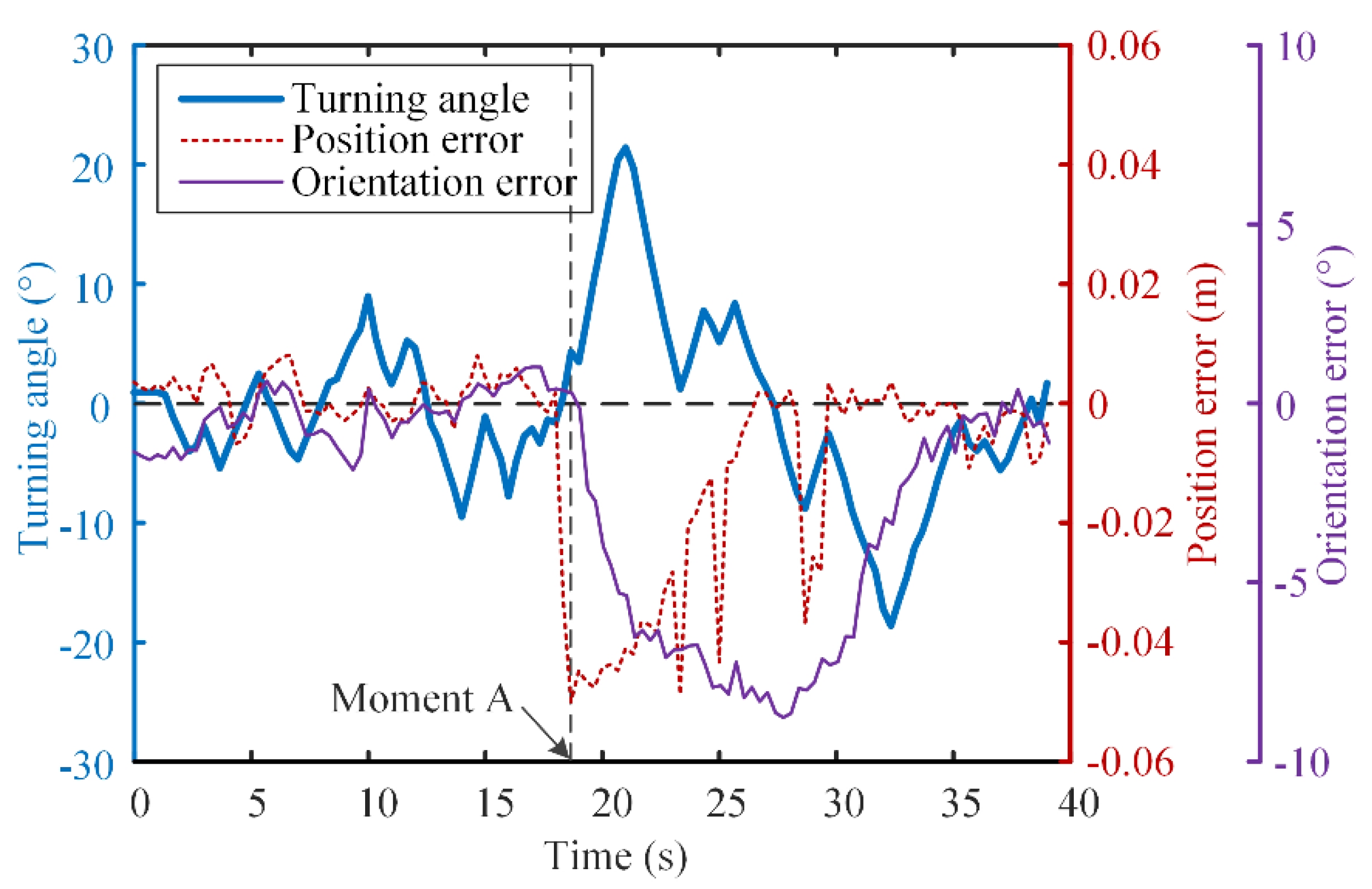

5. Experiment and Results

6. Conclusions

Author Contributions

Funding

Conflicts of Interest


| eori (rows) vs. epos (columns); cells give αt | NB | NM | NS | ZO | PS | PM | PB |
|---|---|---|---|---|---|---|---|
| NB | ZO | NS | NM | NB | NB | NB | NB |
| NM | ZO | ZO | NS | NM | NM | NB | NB |
| NS | PS | ZO | ZO | NS | NM | NM | NB |
| ZO | PM | PS | PS | ZO | NS | NS | NM |
| PS | PB | PM | PM | PS | ZO | ZO | NS |
| PM | PB | PB | PM | PM | PS | ZO | ZO |
| PB | PB | PB | PB | PB | PM | PS | ZO |
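The rule table above can be encoded as a plain lookup structure. The following is a minimal sketch, not the authors' implementation: it assumes that rows are indexed by the linguistic label of eori, columns by the label of epos, and that each cell holds the label of the output αt. The names `LABELS`, `RULE_ROWS`, and `lookup_rule` are hypothetical, and fuzzification and defuzzification are deliberately left out because the membership functions are not given here.

```python
# Linguistic labels used for both inputs and the output, in column order.
LABELS = ["NB", "NM", "NS", "ZO", "PS", "PM", "PB"]

# Assumption: keys are e_ori labels (table rows); each list is ordered by
# e_pos label (table columns); entries are the output label for alpha_t.
RULE_ROWS = {
    "NB": ["ZO", "NS", "NM", "NB", "NB", "NB", "NB"],
    "NM": ["ZO", "ZO", "NS", "NM", "NM", "NB", "NB"],
    "NS": ["PS", "ZO", "ZO", "NS", "NM", "NM", "NB"],
    "ZO": ["PM", "PS", "PS", "ZO", "NS", "NS", "NM"],
    "PS": ["PB", "PM", "PM", "PS", "ZO", "ZO", "NS"],
    "PM": ["PB", "PB", "PM", "PM", "PS", "ZO", "ZO"],
    "PB": ["PB", "PB", "PB", "PB", "PM", "PS", "ZO"],
}


def lookup_rule(e_ori: str, e_pos: str) -> str:
    """Return the output label for one (e_ori, e_pos) label pair."""
    if e_ori not in RULE_ROWS or e_pos not in LABELS:
        raise ValueError(f"unknown label pair: {e_ori!r}, {e_pos!r}")
    return RULE_ROWS[e_ori][LABELS.index(e_pos)]


if __name__ == "__main__":
    # Print the consequent for a few label pairs.
    for pair in [("ZO", "ZO"), ("NB", "PB"), ("PB", "NB")]:
        print(pair, "->", lookup_rule(*pair))
```

Keeping the table as data rather than as nested conditionals makes it easy to check each row against the printed table and to swap in a different rule base without touching the lookup logic.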

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Chen, Y.; Liu, S.; Fan, J.; Yang, C. Novel Online Optimized Control for Underwater Pipe-Cleaning Robots. Appl. Sci. 2020, 10, 4279. https://doi.org/10.3390/app10124279