1. Introduction

Public transport (also known as public transportation, public transit, or mass transit) is a system of group travel for passengers that is typically run on a schedule, operates on established routes, and charges a posted fee for each trip. Bus operation is a major component of public transport, and predicting bus passenger demand has long been a research focus. Real-time passenger flow forecasting (observing the passenger flow over a short period and predicting the passenger number for the following period) is also an important part of the bus operation system. To address passenger flow prediction, and real-time prediction in particular, this paper proposes a passenger flow prediction model based on history and real-time data (HRPFP).

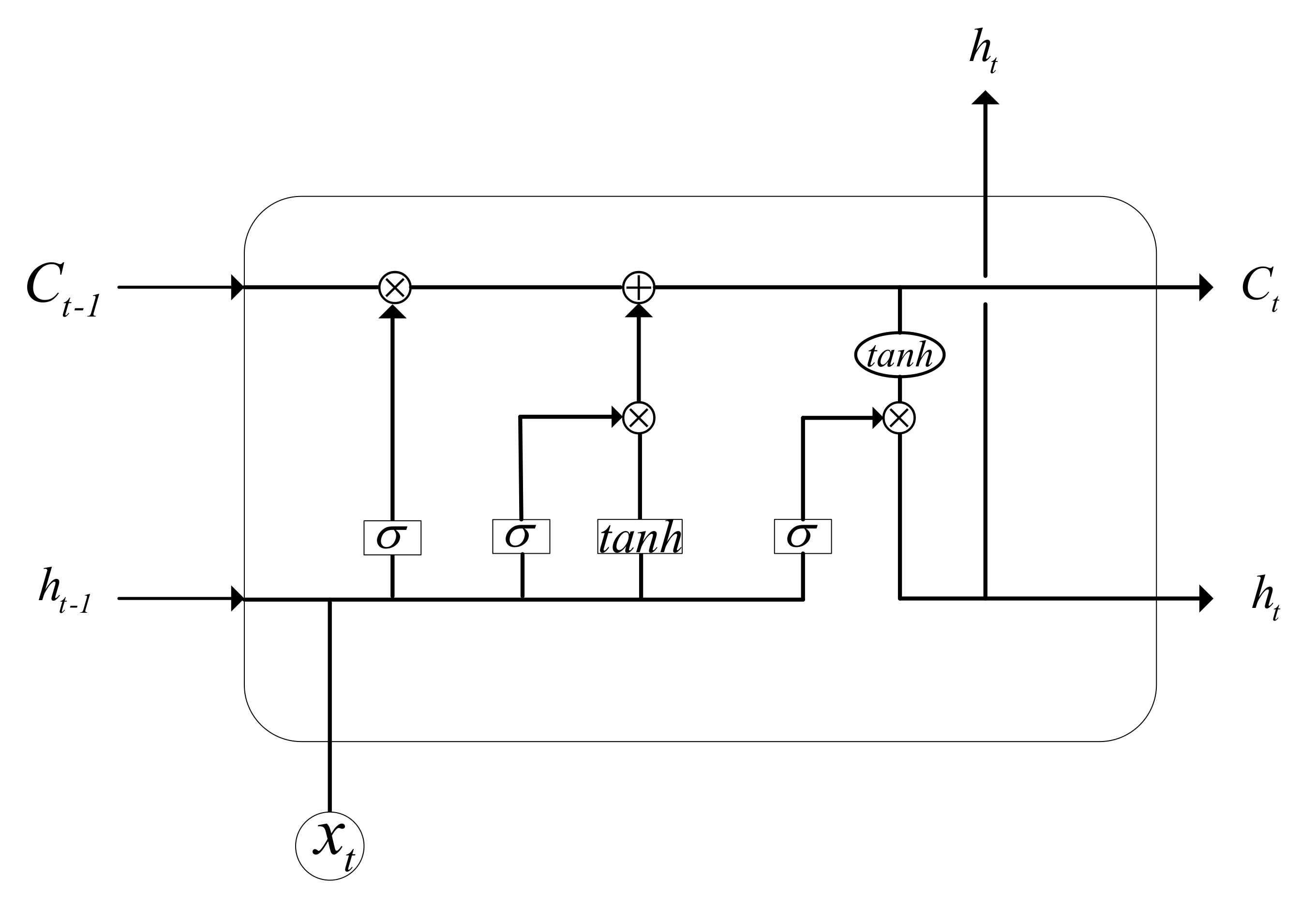

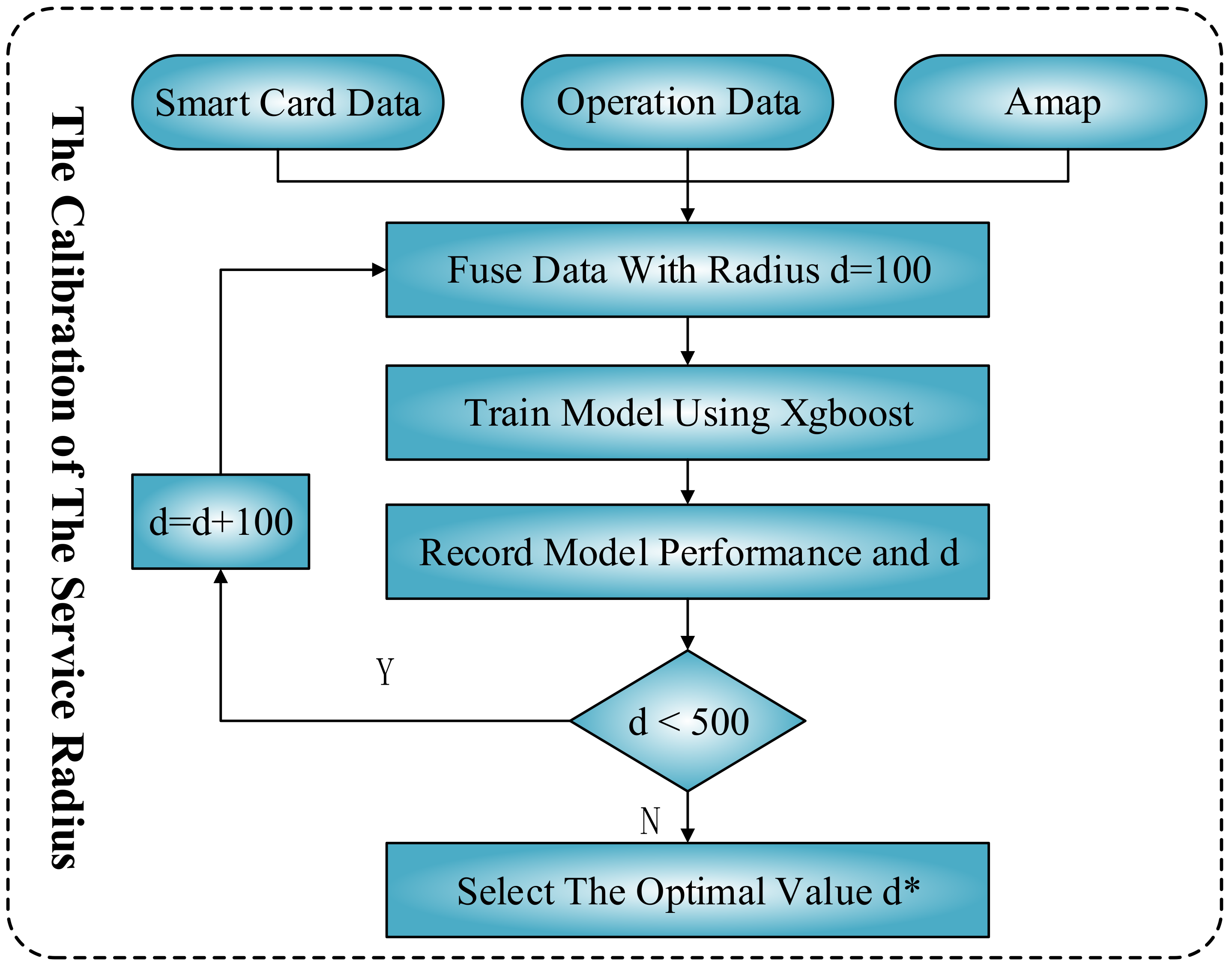

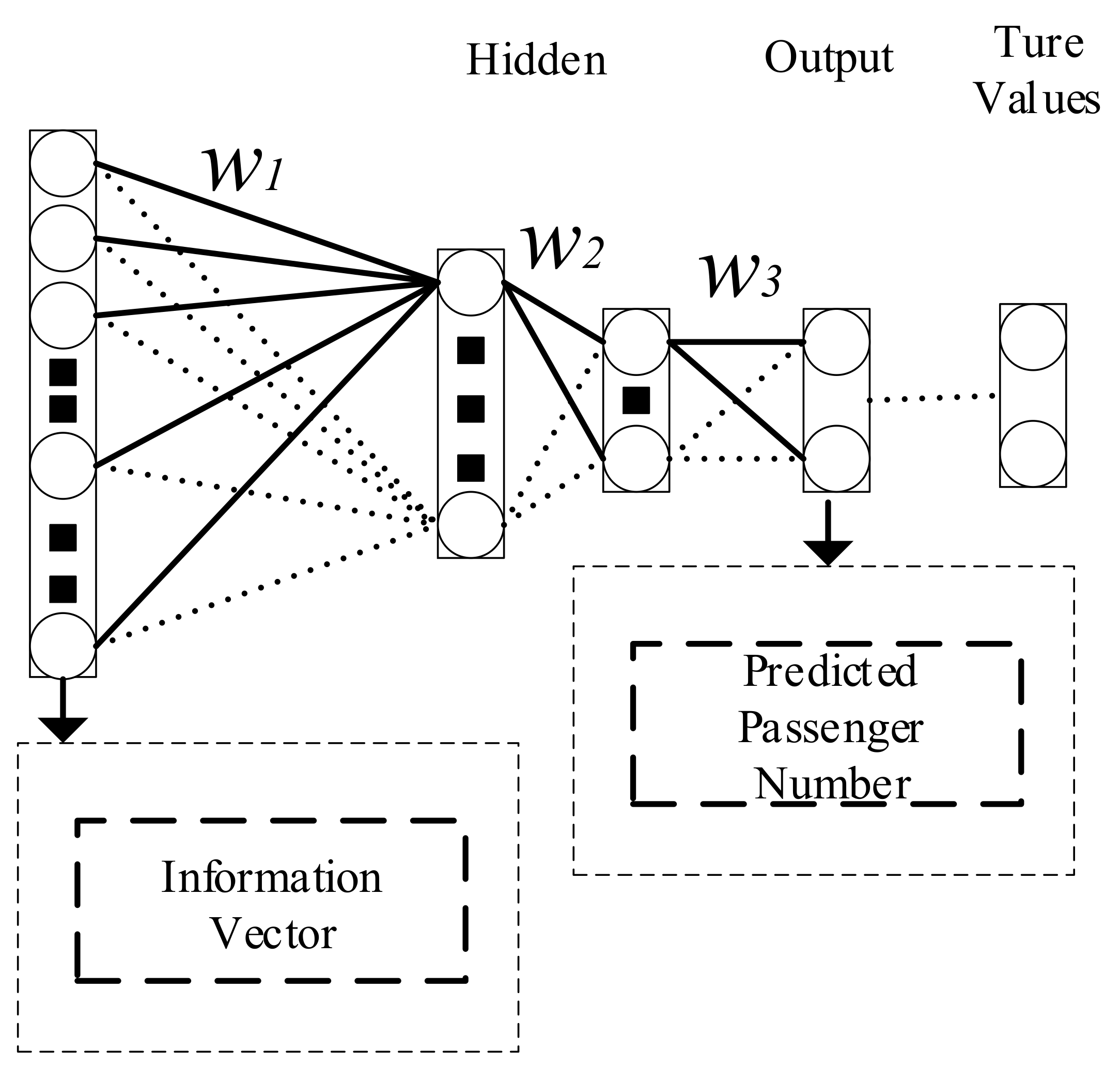

The HRPFP model contains four parts: the feature extraction part, the history information encoding part, the real-time information encoding part, and the decoding part.

In the feature extraction part, we use the Xgboost algorithm to combine the smart card data with points of interest (POIs). A POI is a specific point location that someone may find useful or interesting. We use the Amap application program interface to obtain the number of POIs of each type around a given bus station. The smart card data and the POIs are fused through a radius around the station, and we use the Xgboost algorithm to determine the value of this radius because it is fast.

In the history information encoding part, we use the long short-term memory (LSTM) structure to encode the history smart card data collected by automatic fare collection (AFC) systems. We arrange the history data by date to form a time series. The length of the sequence is 7, which means that we use the passenger flow data of a given period on the previous seven days to predict the passenger number in the same period of the target day.

In the real-time information encoding part, we also use the LSTM structure to encode the real-time smart card data collected by sensors. The real-time data sequence is indexed by time section and has a length of 4, which means that we use the passenger flow data of the previous four time periods to predict the passenger number of the next two time periods on a given day.

In the decoding part, we use a four-layer neural network to calculate the passenger number in the next two time periods. Because the output is a single value, we choose a multi-layer neural network instead of an LSTM to speed up training. In comparative experiments, the HRPFP model achieves better accuracy than the existing LSTM neural network and other baseline methods.
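The two sequence layouts described above can be illustrated with a minimal sketch. It only shows how the two encoder inputs could be assembled; the nested-dictionary layout `counts[day][period]`, the function names, and the toy numbers are assumptions made for illustration and are not taken from the paper.

```python
from typing import Dict, List, Tuple

HISTORY_DAYS = 7      # history sequence length stated in the text
REALTIME_PERIODS = 4  # real-time sequence length stated in the text

# Assumed layout: counts[day][period] -> passenger number at one station.
Counts = Dict[int, Dict[int, float]]


def history_sequence(counts: Counts, day: int, period: int) -> List[float]:
    """Same time period on the previous seven days, oldest first."""
    return [counts[d][period] for d in range(day - HISTORY_DAYS, day)]


def realtime_sequence(counts: Counts, day: int, period: int) -> List[float]:
    """The four time periods immediately before `period` on the same day."""
    return [counts[day][p] for p in range(period - REALTIME_PERIODS, period)]


def build_inputs(counts: Counts, day: int, period: int) -> Tuple[List[float], List[float]]:
    """Return (history input, real-time input) for one prediction target."""
    return (history_sequence(counts, day, period),
            realtime_sequence(counts, day, period))


if __name__ == "__main__":
    # Synthetic toy data: days 1..8, periods 0..7, value = 10 * day + period.
    toy = {d: {p: float(10 * d + p) for p in range(8)} for d in range(1, 9)}
    hist, rt = build_inputs(toy, day=8, period=4)
    print(hist)  # values for period 4 on days 1..7
    print(rt)    # values for periods 0..3 on day 8
```

In this sketch the history sequence is indexed by date and the real-time sequence by time section, mirroring the two encoders; each sequence would then be fed to its own LSTM encoder.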

The structure of this paper is as follows. Section 1 gives a brief introduction to our research purpose and the structure of the HRPFP model. Section 2 reviews recent research methods in this area. Section 3 describes the HRPFP model framework and its sub-models. Results of the experiments are provided in Section 4. Conclusions are given in Section 5.

2. Related Work

In this part, we mainly introduce three kinds of methods used as baselines in our experiments, the achievements researchers have made with them, and their shortcomings. This part also explains why we increase the data dimensions with POI data and use two LSTM-based encoder structures in our model.

In recent years, machine learning and deep learning methods have been used in passenger flow prediction. One major family of methods is time series analysis and Kalman filtering. These methods treat the passenger flow on different dates as sequences or signals and look for the regularity behind the data by processing them. M. Milinkovic et al. [1] used a seasonal autoregressive integrated moving average (SARIMA) model to predict railway passenger flow. R. Gummadi et al. [2] chose a time series model to forecast the passenger flow for a transit bus station. M. Ni et al. [3] proposed a linear regression and SARIMA model to predict subway passenger flow. C. Li et al. [4] proposed a spatial–temporal correlation prediction model of passenger flow based on non-linear regression and time series data. S. V. Kumar et al. [5] used Kalman filtering to predict traffic flow. P. Jiao et al. [6] used three revised Kalman filtering models to predict short-term rail transit passenger flow. These methods use few feature dimensions and train efficiently. However, they may not perform well in some cases precisely because so few feature dimensions are considered. In multi-station passenger flow forecasting for a line, a separate model must be trained for each station, which is very complicated.

Another major method is the support vector machine (SVM). This method maps low-dimensional data to a high-dimensional space through a kernel function and transforms non-linear problems into linear ones, so it can effectively consider the influence of multiple factors on a model. M. Tang et al. [7] used linear regression and a wavelet support vector approach to forecast passenger flow. Y. Sun et al. [8] used a wavelet-SVM method to predict short-term subway passenger flow in Beijing. R. Liu et al. [9] used wavelet transform and kernel extreme method to predict short-term passenger flow. C. Li et al. [10] chose clustering-based support vector regression to forecast bus passenger flow. These studies all achieved good results in passenger flow prediction using the SVM. However, due to the existence of outliers, this method has a large error in some cases.

Neural networks and deep learning are also applied to passenger flow prediction. In a well-known machine learning paper, M. Spyros et al. [11] compared different prediction methods on stock market data. Their article shows that when the data dimension was small, the prediction accuracy of LSTM was the best, and LSTM performed better than other basic machine learning methods. J. Guo et al. [12] proposed a combined method using support vector regression (SVR) and an LSTM neural network to predict abnormal passenger flow. F. Toqué et al. [13] used LSTM neural networks to predict travel demand based on smart card data and demonstrated the effectiveness of these forecasting approaches. S. Sunardi et al. [14] presented a useful way of observing the domestic passenger travel series using LSTM. Y. Liu et al. [15] built a deep learning architecture for metro passenger flow prediction. H. Zhao et al. [16] used LSTM to obtain the passenger numbers for a bus line, taking weather information in Guangzhou city into account to improve prediction performance. However, in other cities such as Beijing, weather information changes little. Therefore, weather information is not a good feature for all big cities. Y. Han et al. [17] compared different LSTM gradient calculation methods in passenger number prediction, and based on their research we choose the Adam algorithm in this paper. J. Zhang et al. [18] described the importance of real-time passenger flow prediction and used a linear method to predict the real-time bus passenger number. K. Pasini et al. and M. Farahani et al. [19,20] used LSTM-based encoder structures to predict passenger numbers and concluded that an encoder structure can improve accuracy. That is the main reason we use two encoder structures in our model.

To our knowledge, deep learning performs well in image recognition and natural language processing, where there are thousands of feature dimensions. For passenger flow forecasting, the smart card data is usually large in scale, but it usually has fewer than 10 dimensions. Therefore, in our opinion, increasing the dimensions of bus smart card data as much as possible is very important for improving prediction accuracy. A passenger prediction model can learn the regularity of the passenger number and predict it for the next few days based on the history data. In addition, a passenger prediction model also needs to predict the passenger number for the next few time periods based on the real-time data. Real-time passenger number prediction plays an important part in providing early warning of a sudden large passenger flow rush. This is the major motivation for this paper.

4. Results and Discussion

The training dataset covered October 1, 2015 to October 25, 2015. The test dataset covered October 26, 2015 to October 30, 2015. The two bus lines studied were 685 and 56008. Line 56008 has a large passenger volume because it is a major bus line on the third ring road. Line 685 is a normal line with a relatively small passenger volume. We chose these two lines to evaluate the performance of the HRPFP model at different scales. These two lines are shown in Figure 6.

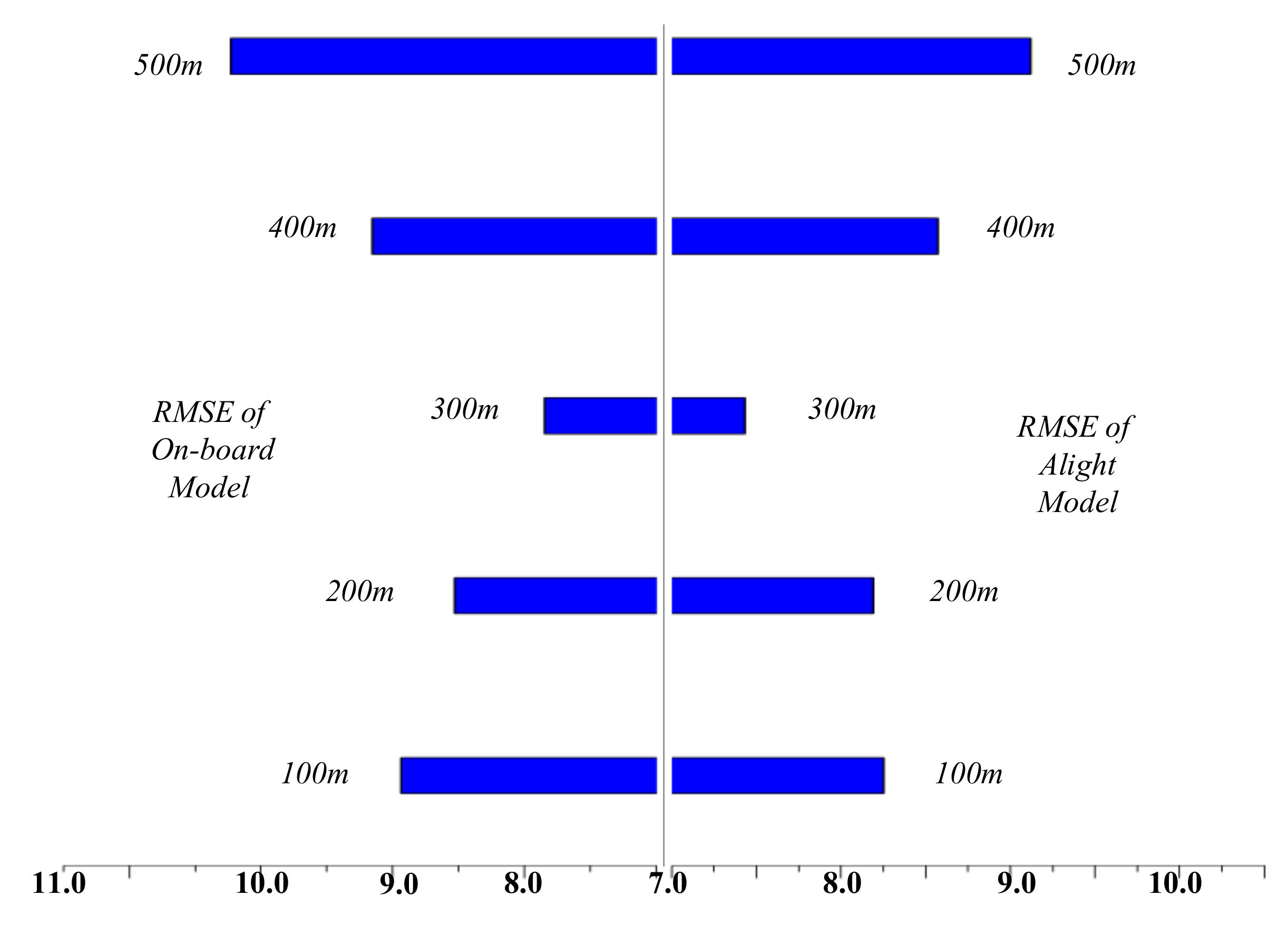
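The date-based split described above can be sketched as follows. The date boundaries come from the text; the record layout `(date, payload)` and the function name `split_by_date` are assumptions made for illustration.

```python
from datetime import date
from typing import Iterable, List, Tuple

# Date ranges as stated in the text (inclusive on both ends).
TRAIN_START, TRAIN_END = date(2015, 10, 1), date(2015, 10, 25)
TEST_START, TEST_END = date(2015, 10, 26), date(2015, 10, 30)

# Assumed record layout: (travel date, any payload such as a passenger count).
Record = Tuple[date, object]


def split_by_date(records: Iterable[Record]) -> Tuple[List[Record], List[Record]]:
    """Split records into training and test sets by travel date."""
    train, test = [], []
    for rec in records:
        if TRAIN_START <= rec[0] <= TRAIN_END:
            train.append(rec)
        elif TEST_START <= rec[0] <= TEST_END:
            test.append(rec)
    return train, test


if __name__ == "__main__":
    # Synthetic records, one per day of October 2015.
    october = [(date(2015, 10, d), d) for d in range(1, 32)]
    train, test = split_by_date(october)
    print(len(train), len(test))
```

Splitting by date rather than at random keeps every test day strictly after the training days, which matches a forecasting setting.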

Here, we chose the root mean square error (RMSE) as an evaluation for the service radius configuration part. The RMSE can be calculated by Equation (19).

where

M is the total number of samples,

is the true value, and

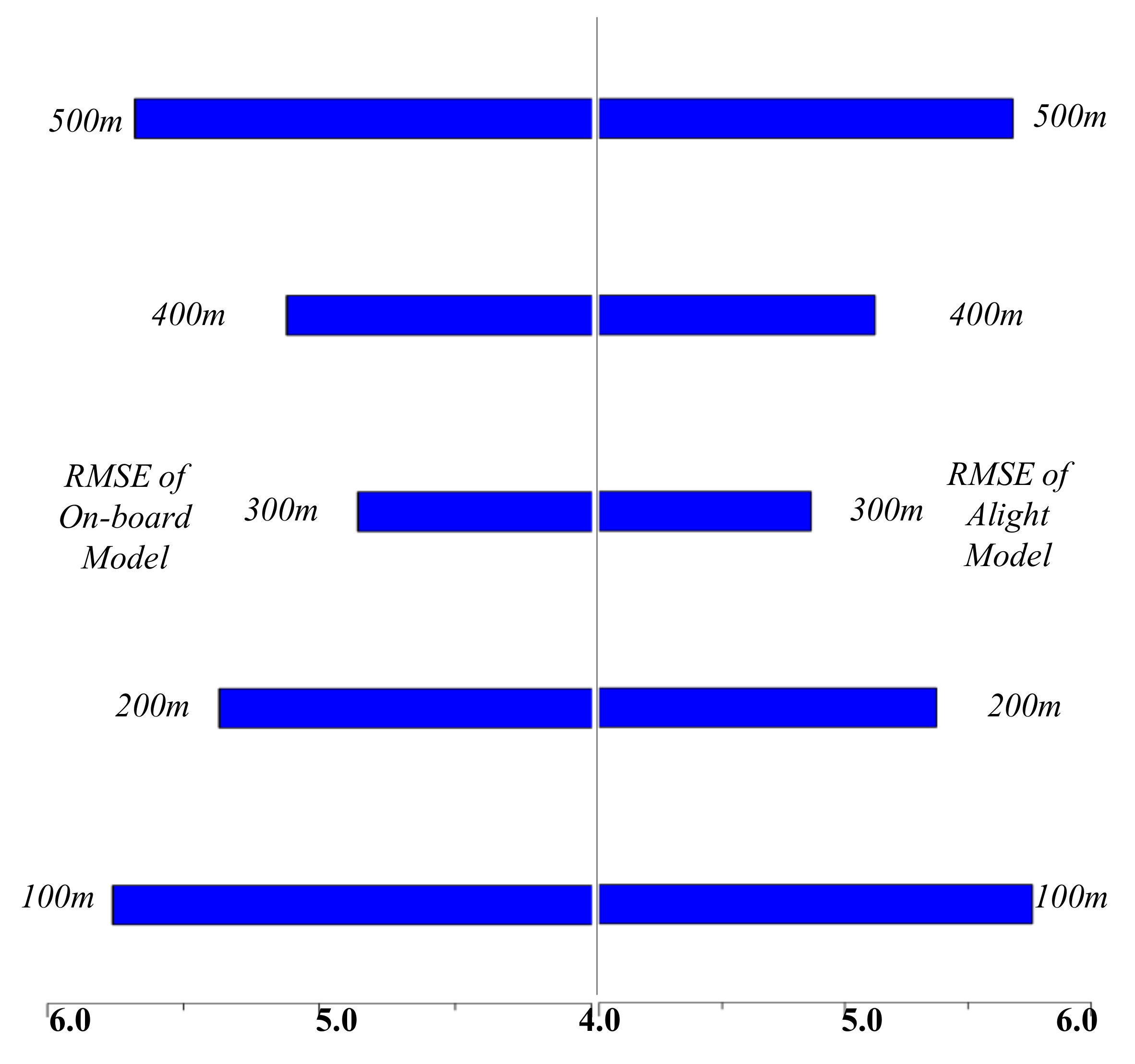

is the predicted value. For line 56008, the optimal parameters of the predicted model are as follows: the maximum tree depth was 4 layers, the learning rate was 0.02, the maximum tree size was 1500, and the optimal distance was 300 m. For line 685, the maximum tree depth was 3 layers, the learning rate was 0.01, the maximum size of the tree was 800, and the optimal distance was 300 m. The evaluation of prediction model for line 56008 under different distances is shown in

Figure 7. When the distance was 500 m, the RMSE of these models was close to 10, and when the distance was 300 m, the RMSE of the passenger flow prediction models was about 7.7. The evaluation of the prediction model for line 685 under different distances is shown in

Figure 8. When the distance was 100 m, the RMSE of these models was the largest which was close to 5.5, and when the distance was 300 m, the RMSE of the passenger flow prediction models was about 4.5.
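As a minimal sketch of this selection step, the code below computes the standard RMSE and picks the candidate distance with the lowest value. The function names are assumptions, and the dictionaries hold only the approximate RMSE values quoted in the text, not the full curves from the figures.

```python
import math
from typing import Dict, Sequence


def rmse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Root mean square error over M paired samples."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    squared = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    return math.sqrt(squared / len(y_true))


def best_radius(rmse_by_radius: Dict[int, float]) -> int:
    """Return the candidate radius (in metres) with the smallest RMSE."""
    return min(rmse_by_radius, key=rmse_by_radius.get)


if __name__ == "__main__":
    # Approximate values quoted in the text (radius in metres -> RMSE).
    line_56008 = {300: 7.7, 500: 10.0}
    line_685 = {100: 5.5, 300: 4.5}
    print(best_radius(line_56008), best_radius(line_685))
```

In practice each RMSE entry would come from training the Xgboost model on features built with that radius and evaluating it on held-out data.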

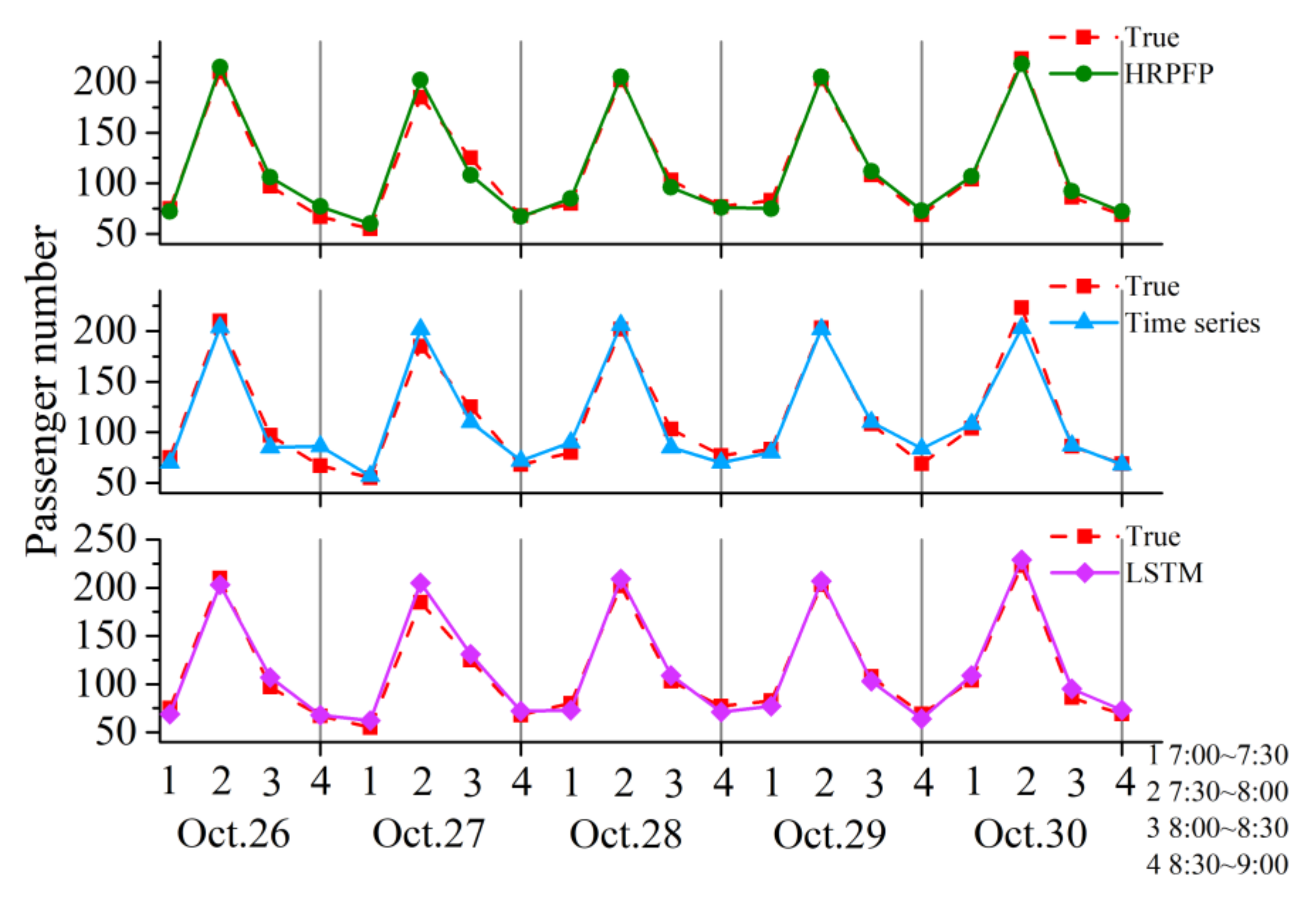

Analysis shows that if the input of the real-time data encoding part is set to the zero vector $\mathbf{0}$, the model reduces to an LSTM sequence model. To verify the performance of the HRPFP model, we selected the time series model and the LSTM model for comparison. The two selected stations were the major on-board stations of line 56008 and line 685. The major on-board station of line 56008 was the 8th station. The passenger numbers predicted by the different models from 7:00 to 9:00 on the test dates are shown in Figure 9. The predicted values of the time series model stayed around the history average value and showed an obvious periodic characteristic. The predicted values of the LSTM model and the HRPFP model varied from day to day, which means that these two models can reflect the daily passenger flow trend. The HRPFP model performed better than the LSTM and time series models at this station.

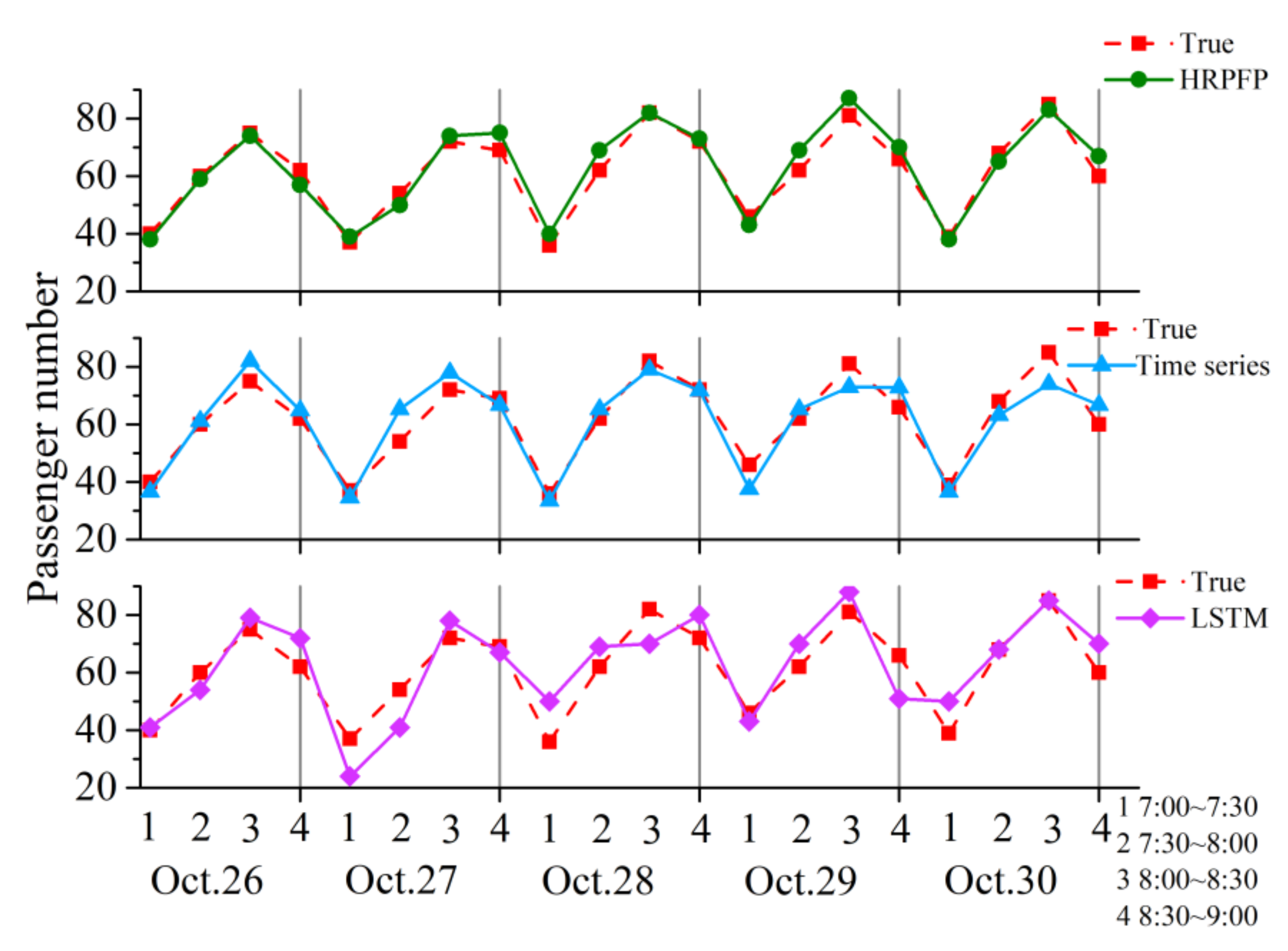

For the same time periods, the passenger numbers predicted by the different models for the major on-board station (the 7th station) of line 685 are shown in Figure 10. Because the passenger number at this station is small, the passenger data fluctuated strongly. Therefore, the time series model cannot find the regularity effectively, and the LSTM model can find only part of it. The HRPFP model was more accurate than the other two models due to the addition of POI features and, especially, the real-time data information.

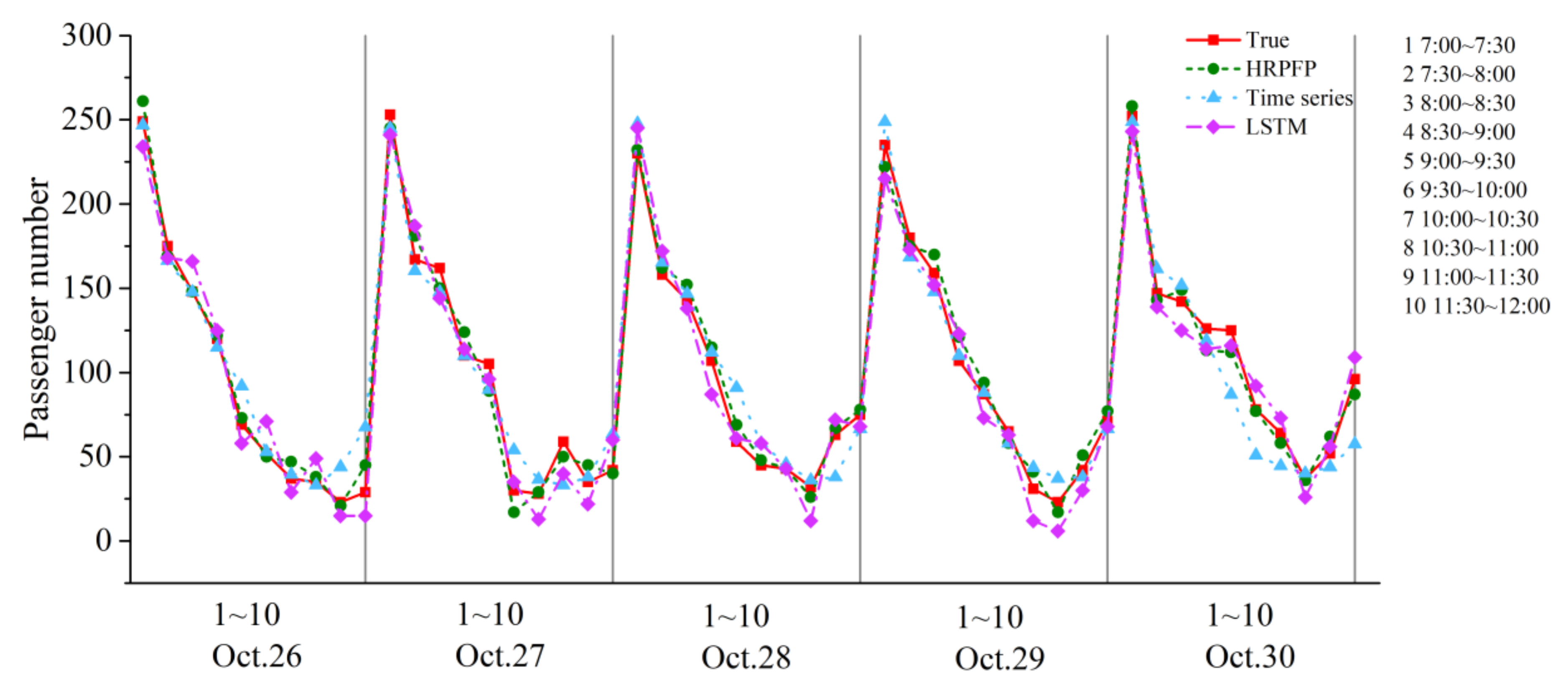

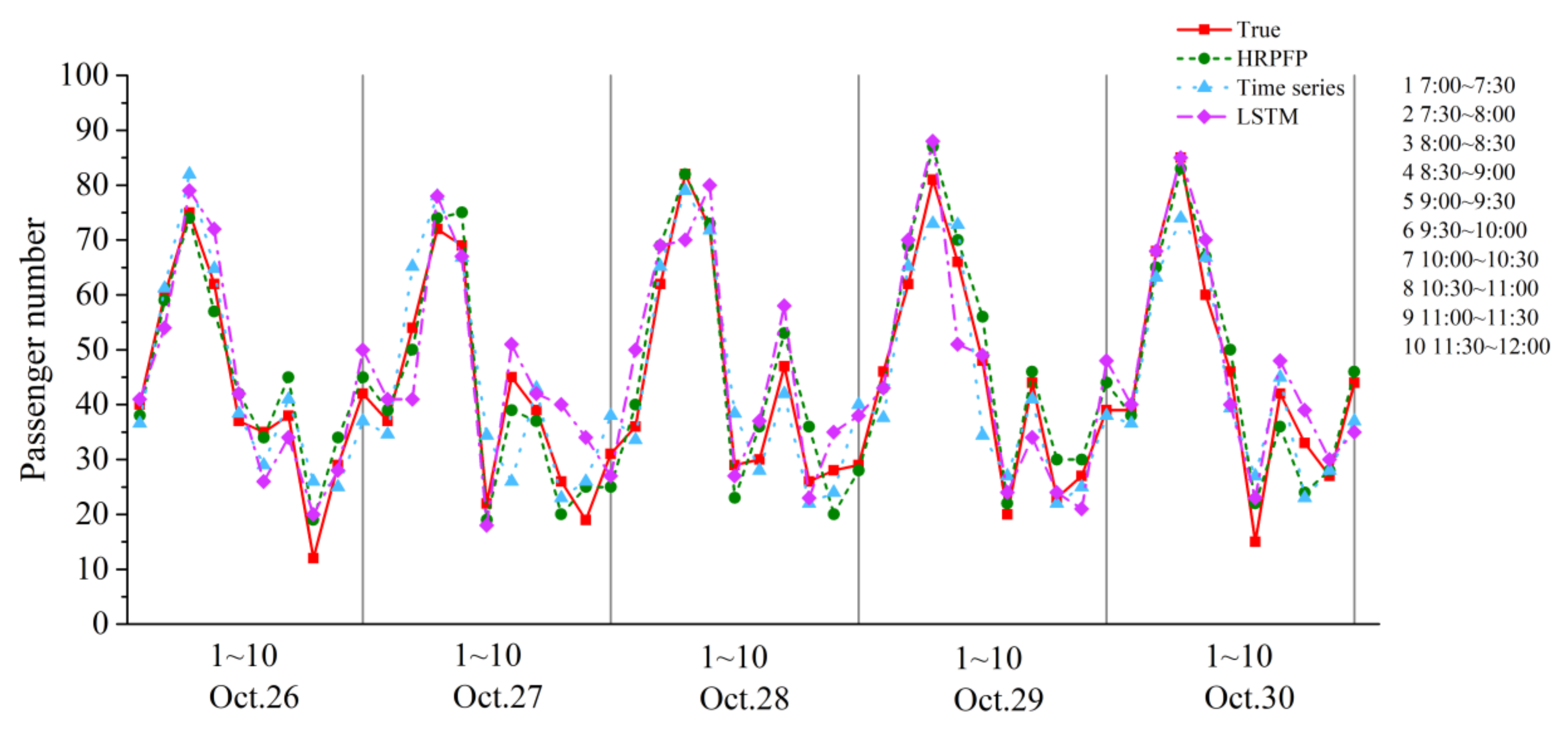

We extended the time period to 7:00 to 12:00. From Figure 11, we can see that, due to the irregular fluctuation of daily passenger flow from 10:00 to 12:00, the performance of the HRPFP model was significantly better than that of the LSTM model and the time series model at the 8th station of line 56008. The performance of the HRPFP model was also the best at the 7th station of line 685, as shown in Figure 12. However, the time series model performed slightly better than the LSTM model at the 7th station of line 685. We believe the major reason is that the amplitude of passenger flow changes at this station was smaller than at the 8th station of line 56008, so the LSTM model overfitted.

Besides the comparison with these two sequence models at a single station, we also compared the HRPFP model with the Xgboost model and the SVM model in bus passenger flow prediction for whole lines. Using the time series model for line-level prediction requires establishing a separate prediction model for each station. This process is too complicated, so we did not choose the time series model as a baseline for line-level prediction. Besides RMSE, we also used the mean absolute error (MAE) and the maximum error (MaxError) to evaluate the different methods. The MAE can be calculated by Equation (20):

$$\mathrm{MAE} = \frac{1}{M}\sum_{i=1}^{M}\left|y_i - \hat{y}_i\right| \tag{20}$$

where $M$ is the total number of samples, $y_i$ is the true value, and $\hat{y}_i$ is the predicted value. The MaxError can be calculated by Equation (21):

$$\mathrm{MaxError} = \max_{1 \le i \le M}\left|y_i - \hat{y}_i\right| \tag{21}$$

We used the MaxError as one of our evaluation standards because the SVM model usually has a greater error at stations with a large passenger number. These stations are very important for bus operations, so this error cannot be ignored. From Table 1, we can see that the LSTM and HRPFP models had a smaller MaxError than the other two methods. The performance of the HRPFP model was the best for both line 56008 and line 685.
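The two additional metrics can be written as a minimal sketch using their standard definitions. The function names and the toy numbers are assumptions for illustration; they are not values from Table 1.

```python
from typing import Sequence


def _check(y_true: Sequence[float], y_pred: Sequence[float]) -> None:
    """Reject empty or mismatched inputs before computing a metric."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("inputs must be non-empty and of equal length")


def mae(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean absolute error: average of |true - predicted|."""
    _check(y_true, y_pred)
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def max_error(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Maximum error: the single largest |true - predicted|."""
    _check(y_true, y_pred)
    return max(abs(t - p) for t, p in zip(y_true, y_pred))


if __name__ == "__main__":
    # Synthetic toy values for three stations.
    true_counts = [10.0, 20.0, 30.0]
    predicted = [12.0, 18.0, 33.0]
    print(mae(true_counts, predicted), max_error(true_counts, predicted))
```

The MAE averages errors over all stations, while the MaxError is driven by the single worst station, which is why it highlights large misses at busy stations.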

We also analyzed the sensitivity of each model mentioned above. The sensitivity analysis experiment was based on the real data from October 27, 2015. We assumed that the passenger flow data of the 8th station of line 56008 from 7:00 to 8:00 had changed slightly from the real value, and then recalculated the passenger number predicted by each model based on this change. The results are shown in Table 2. The LSTM model indexed by date was the least sensitive: its predicted value did not change as the real-time data changed. The predictions of the SVM model and the Xgboost model changed slightly. The predicted value of the HRPFP model changed significantly as the data were updated. Therefore, the HRPFP model is more sensitive to real-time data. When the passenger number changes suddenly in a time period, the HRPFP model reflects this change, which means that it performs better in real-time passenger flow prediction.
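The perturbation procedure can be illustrated with a minimal sketch. The two predictors below are hypothetical stand-ins, not the trained models from the experiment: one ignores the real-time input, as a date-indexed sequence model does, and the other blends both inputs. All names and numbers are assumptions.

```python
from statistics import mean
from typing import Callable, List, Sequence

# A predictor maps (history sequence, real-time sequence) to one value.
Predictor = Callable[[Sequence[float], Sequence[float]], float]


def sensitivity(predict: Predictor, history: Sequence[float],
                realtime: Sequence[float], delta: float) -> float:
    """Change in the prediction when every real-time value shifts by delta."""
    shifted: List[float] = [x + delta for x in realtime]
    return predict(history, shifted) - predict(history, realtime)


def history_only(history: Sequence[float], realtime: Sequence[float]) -> float:
    """Stand-in for a date-indexed model: real-time input is ignored."""
    return mean(history)


def blended(history: Sequence[float], realtime: Sequence[float]) -> float:
    """Stand-in for a model that uses both inputs with equal weight."""
    return 0.5 * mean(history) + 0.5 * mean(realtime)


if __name__ == "__main__":
    hist = [40.0] * 7                 # synthetic seven-day history
    rt = [30.0, 35.0, 40.0, 45.0]     # synthetic four-period real-time data
    print(sensitivity(history_only, hist, rt, delta=10.0))
    print(sensitivity(blended, hist, rt, delta=10.0))
```

A predictor that never reads the real-time input returns the same value before and after the perturbation, which corresponds to the behavior reported for the date-indexed LSTM model.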

5. Conclusions

Based on the LSTM structure, this paper proposes a new passenger flow prediction model that considers real-time data. The model combines point of interest data with smart card data to increase the dimension of the dataset. We then use the date as the index of one LSTM structure to produce a history information vector, and the time section as the index of another LSTM structure to produce a real-time information vector. With these two information vectors, the bus passenger number can be predicted more accurately after a decoding process. In comparison with other models, the HRPFP model achieves higher accuracy in passenger flow prediction. Due to the addition of the real-time encoding part, the HRPFP model is also more sensitive to real-time data. Therefore, when a sudden passenger flow change occurs, the HRPFP model can provide an early warning based on the data collected in real time, provide more accurate data for adjusting the bus plan, and make the bus system more stable. In the future, we will try to find more suitable sequence lengths for both the history data encoding part and the real-time encoding part to make our research more complete. We hope this research can help bus operations and improve the stability of the bus system.