Digital Twin and Virtual Reality Based Methodology for Multi-Robot Manufacturing Cell Commissioning

Abstract

Featured Application


1. Introduction

2. Related Work

2.1. The Concept of the Digital Twin

2.2. Robot Simulators

2.3. Digital Twins and Robots

3. Proposed Approach

- What are the costs in terms of money, time, safety, etc., of the current manual process?

- Is the use of robots technically feasible for the tasks?

- Will the robot work in isolation or collaboratively with humans?

- What are the costs of the new automated or semi-automated process? Which costs are reduced and which ones are increased?

- Is the new process cost-effective?

- Does the automation reduce risks and enhance safety?
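One common way to make the cost-effectiveness question concrete is a simple payback period: the investment divided by the annual savings. The sketch below assumes that the costs asked about in the first and fourth questions have already been reduced to one annual figure per process. The class name, the function name and all numbers are hypothetical. A real assessment would also discount cash flows and account for safety and quality effects.

```python
from dataclasses import dataclass


@dataclass
class CostScenario:
    """Annual operating cost of one version of the process (any currency)."""
    name: str
    annual_cost: float


def payback_years(investment: float, current: CostScenario, proposed: CostScenario) -> float:
    """Simple, undiscounted payback period: investment / annual savings.

    Returns infinity when the proposed process saves nothing,
    i.e. it never pays back the investment.
    """
    annual_savings = current.annual_cost - proposed.annual_cost
    if annual_savings <= 0:
        return float("inf")
    return investment / annual_savings


# Hypothetical figures, for illustration only.
manual = CostScenario("manual", 120_000.0)
semi_automated = CostScenario("semi-automated", 80_000.0)
print(payback_years(100_000.0, manual, semi_automated))  # 2.5
```

With these made-up figures, the automated cell saves 40,000 per year and recovers a 100,000 investment in 2.5 years.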

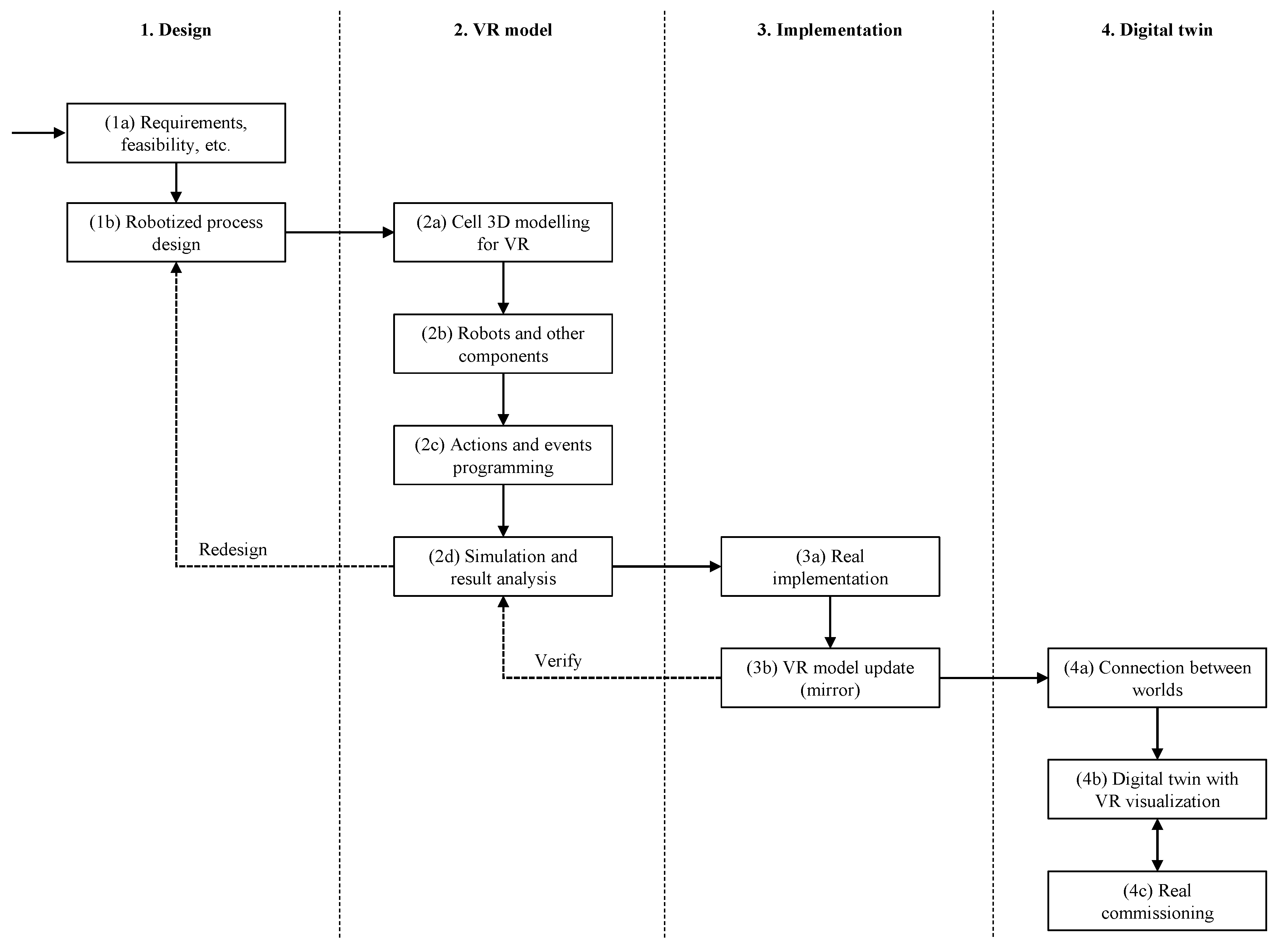

1. Design:
    - (a) Requirements, feasibility, etc. Analysis of the requirements of the new process, studying costs, technical solutions, the number and type of robots, etc.
    - (b) Robotized process design. Design and selection of the flowchart, the components, the layout, etc.
2. VR model:
    - (a) Cell 3D modelling for VR. 3D reconstruction or modelling of the environment to create an immersive VR experience.
    - (b) Robots and other components. The elements of the cell (robots and others) are also included in the VR model.
    - (c) Actions and events programming. For the experience to be immersive, actions and events must happen as they would in the real world.
    - (d) Simulation and result analysis. The designed process and cell are simulated and studied in the virtual environment to verify whether the result fulfills the requirements. This is the virtual commissioning. If a redesign is necessary at this point, the process goes back to step (1b).
3. Implementation:
    - (a) Real implementation. Once the process and the robot-based automation solution have been tested virtually, the real implementation takes place.
    - (b) VR model update (mirror). If the real implementation departs in any way from the original design, the virtual model must be updated to keep the mirror, and the simulation is repeated by going back to step (2d).
4. Digital twin:
    - (a) Connection between worlds. Sensors are installed for real-time data communication between the real world and the virtual model, creating the digital twin.
    - (b) Digital twin with VR visualization. The real actions and events are visualized in the digital twin, which is also used for operator training.
    - (c) Real commissioning. The real manufacturing process runs while being mirrored in its digital twin.
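The methodology is essentially a sequence of steps with two feedback loops: a redesign loop from virtual commissioning back to (1b), and a mirror-update loop from (3b) back to (2d). The sketch below is a hypothetical encoding of that flow. Steps are labelled with the phase number (1 Design, 2 VR model, 3 Implementation, 4 Digital twin) and the sub-step letter, matching the references (1b) and (2d) in the text. The function names and the counters that decide when a loop fires are assumptions made for illustration.

```python
from typing import List, Optional

# Hypothetical encoding of the methodology: phase number + sub-step letter.
STEPS = ["1a", "1b", "2a", "2b", "2c", "2d", "3a", "3b", "4a", "4b", "4c"]


def next_step(step: str, redesign_needed: bool = False, design_changed: bool = False) -> Optional[str]:
    """Return the step that follows `step`, or None after real commissioning."""
    if step == "2d" and redesign_needed:
        return "1b"  # virtual commissioning failed: redesign the process
    if step == "3b" and design_changed:
        return "2d"  # real cell differs from the design: update mirror, re-simulate
    i = STEPS.index(step)
    return STEPS[i + 1] if i + 1 < len(STEPS) else None


def run(redesigns: int = 0, implementation_changes: int = 0) -> List[str]:
    """Trace the visited steps, given how many times each feedback loop fires."""
    trace: List[str] = []
    step: Optional[str] = STEPS[0]
    while step is not None:
        trace.append(step)
        redesign = step == "2d" and redesigns > 0
        changed = step == "3b" and implementation_changes > 0
        redesigns -= int(redesign)
        implementation_changes -= int(changed)
        step = next_step(step, redesign, changed)
    return trace


print(run(redesigns=1))
```

This is a simplification. After a loop, the trace simply continues in order, so returning to (2d) also revisits (3a). In practice, only the differences between the design and the real cell would be implemented again.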

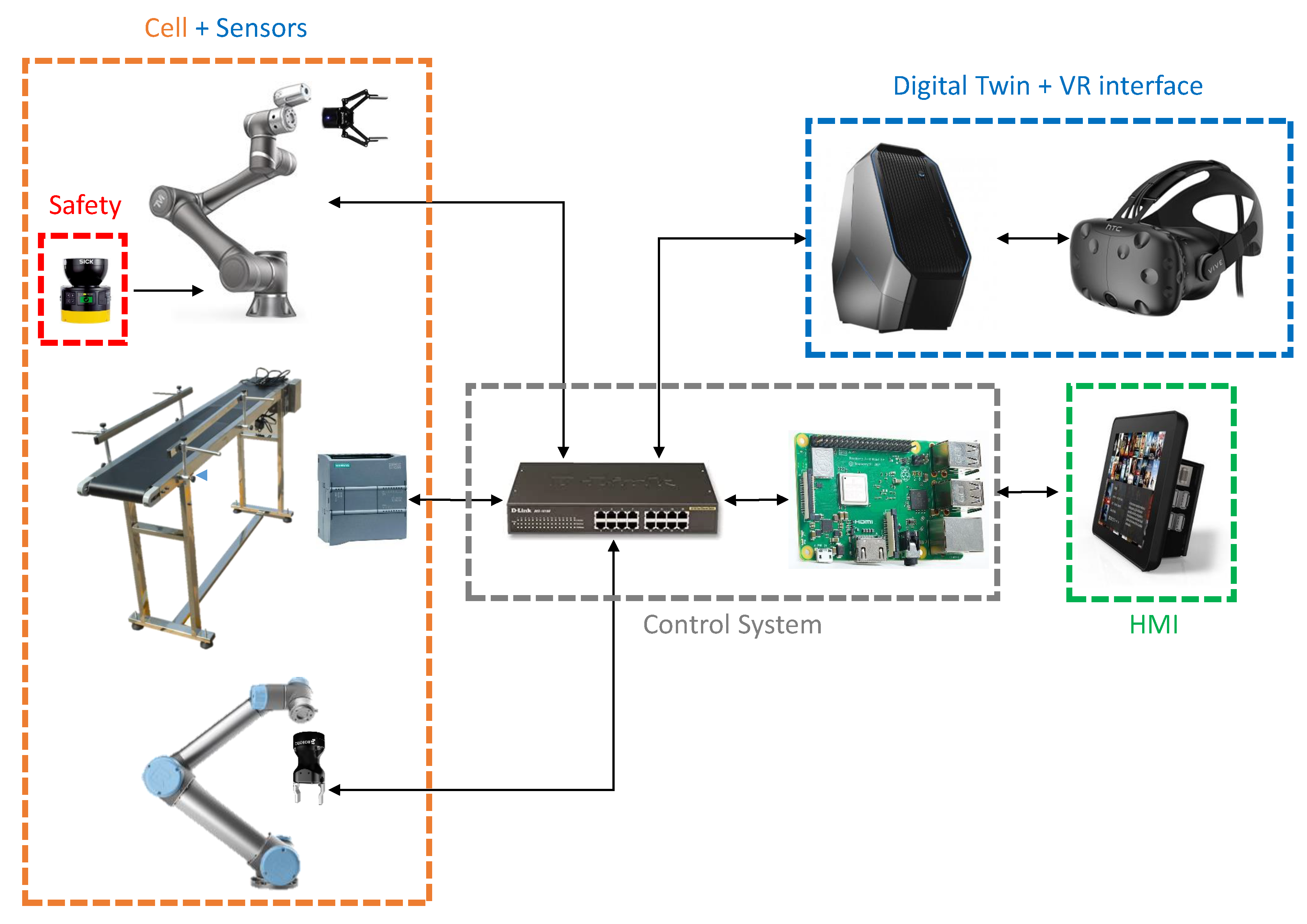

3.1. System Architecture

- Control system. The cell is managed by the control system with a graphical user interface.

- Robots and other cell components. The cell may be composed of one or several robots and other elements, such as conveyor belts and automatic tools. As in a standard solution, each robot is controlled and commanded by its own controller.

- Safety system. Because operators can enter the working area of the robots, the safety system is aimed at avoiding collisions between operators and robots. When there is a potential risk of collision, the robot controller receives an alert signal to reduce speed or to stop, depending on the distance between the human and the robot.

- HMI. During operation, users interact with the system through an HMI connected to the cell control system instead of dealing with the specific console of each robot.

- Digital twin. The real cell is mirrored in a virtual space.

- Sensors. To feed the digital twin with real-time information, additional sensors are installed in the cell.

- Virtual reality interface. The VR interface permits an immersive visualization of the digital twin.
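The distance-dependent reaction of the safety system can be sketched as a two-threshold rule: reduce speed inside an outer zone and stop inside an inner zone. The zone radii and the reduced-speed fraction below are hypothetical placeholders, not values from the source. In practice, they are derived from a risk assessment and from standards such as ISO 13855 and ISO/TS 15066. The logic also runs in certified safety hardware (the scanner and the safety inputs of the robot controller), not in application code like this.

```python
from enum import Enum


class SpeedCommand(Enum):
    """Command for the robot controller; values are speed override fractions."""
    FULL = 1.0
    REDUCED = 0.25  # hypothetical reduced-speed override
    STOP = 0.0


def safety_command(distance_m: float, slow_zone_m: float = 1.5, stop_zone_m: float = 0.5) -> SpeedCommand:
    """Map the measured human-robot distance to a speed command.

    The default zone radii are hypothetical; real values must come from
    a risk assessment of the specific cell.
    """
    if not 0.0 < stop_zone_m < slow_zone_m:
        raise ValueError("expected 0 < stop_zone_m < slow_zone_m")
    if distance_m <= stop_zone_m:
        return SpeedCommand.STOP     # human too close: stop the robot
    if distance_m <= slow_zone_m:
        return SpeedCommand.REDUCED  # human in the collaborative area: slow down
    return SpeedCommand.FULL


print([safety_command(d).name for d in (2.0, 1.0, 0.3)])  # ['FULL', 'REDUCED', 'STOP']
```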

3.2. The Virtual Interface

- Scan the real scenario. First, a dense 3D point cloud is obtained with a 3D reconstruction scanner. The scanner of the mentioned reference can be used, but other scanners are also suitable.

- Process the resulting 3D point cloud and apply filters. The point cloud is processed and filtered with the 3D scanner's software to reduce noise and the number of points, ensuring a consistent point density that facilitates the next step.

- Model the point cloud to render the virtual environment. The point cloud is modeled to create a 3D reconstruction with the real dimensions of the environment, which provides the immersive effect for the user.

- Implement the elements’ behavior and the human interaction. Finally, the virtual scene is completed by configuring the physical behavior of the elements (animations, movements, events, actions, etc., so that virtual elements act like the real ones) and by adding a set of virtual buttons, floating text charts, etc., to enable immersive user interaction and data visualization.
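In the described workflow, the point cloud is filtered with the scanner's own software. To illustrate what "reducing noise and the number of points" typically involves, the sketch below implements two common filters in NumPy as a swapped-in illustration, not as the scanner vendor's algorithm. Voxel-grid downsampling yields a roughly uniform density. Statistical outlier removal discards isolated noisy points. The parameter values are hypothetical. The brute-force neighbour search only suits small clouds, and libraries such as PCL or Open3D provide optimized equivalents.

```python
import numpy as np


def voxel_downsample(points: np.ndarray, voxel_size: float) -> np.ndarray:
    """Replace all points that fall in the same cubic voxel by their centroid."""
    keys = np.floor(points / voxel_size).astype(np.int64)
    _, inverse = np.unique(keys, axis=0, return_inverse=True)
    inverse = inverse.reshape(-1)  # keep it 1-D across NumPy versions
    n_voxels = int(inverse.max()) + 1
    sums = np.zeros((n_voxels, points.shape[1]))
    np.add.at(sums, inverse, points)  # accumulate coordinates per voxel
    counts = np.bincount(inverse, minlength=n_voxels)
    return sums / counts[:, None]


def remove_statistical_outliers(points: np.ndarray, k: int = 8, std_ratio: float = 2.0) -> np.ndarray:
    """Drop points whose mean distance to their k nearest neighbours is unusually large."""
    # Brute-force O(n^2) distance matrix: fine for a sketch, not for a full scan.
    dists = np.linalg.norm(points[:, None, :] - points[None, :, :], axis=-1)
    dists.sort(axis=1)
    mean_knn = dists[:, 1:k + 1].mean(axis=1)  # column 0 is the point itself
    threshold = mean_knn.mean() + std_ratio * mean_knn.std()
    return points[mean_knn <= threshold]


# Synthetic stand-in for a scan (hypothetical): a unit cube of points plus one stray reading.
rng = np.random.default_rng(0)
cloud = np.vstack([rng.uniform(0.0, 1.0, size=(2000, 3)), [[10.0, 10.0, 10.0]]])
filtered = remove_statistical_outliers(voxel_downsample(cloud, voxel_size=0.1))
print(len(cloud), "->", len(filtered))
```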

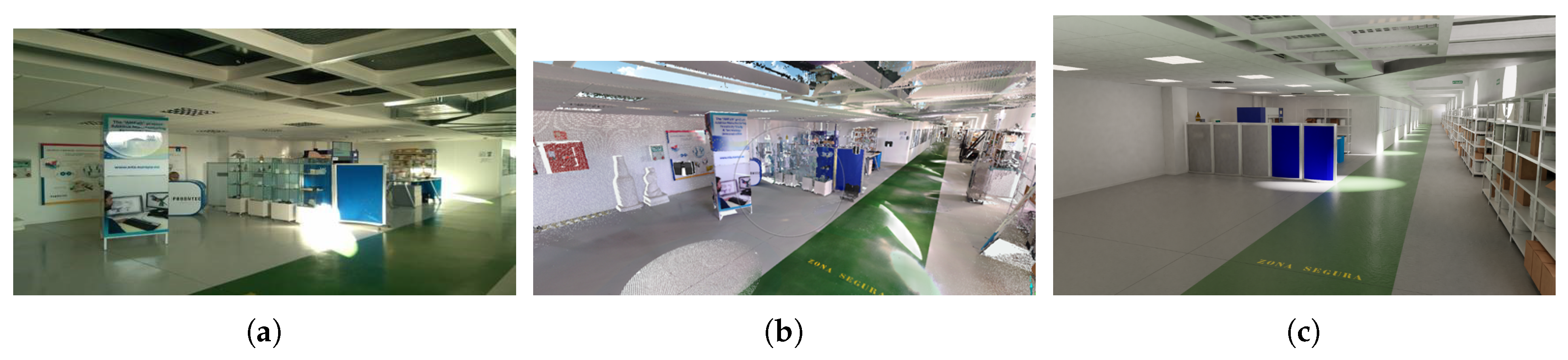

4. Use Case

4.1. Design

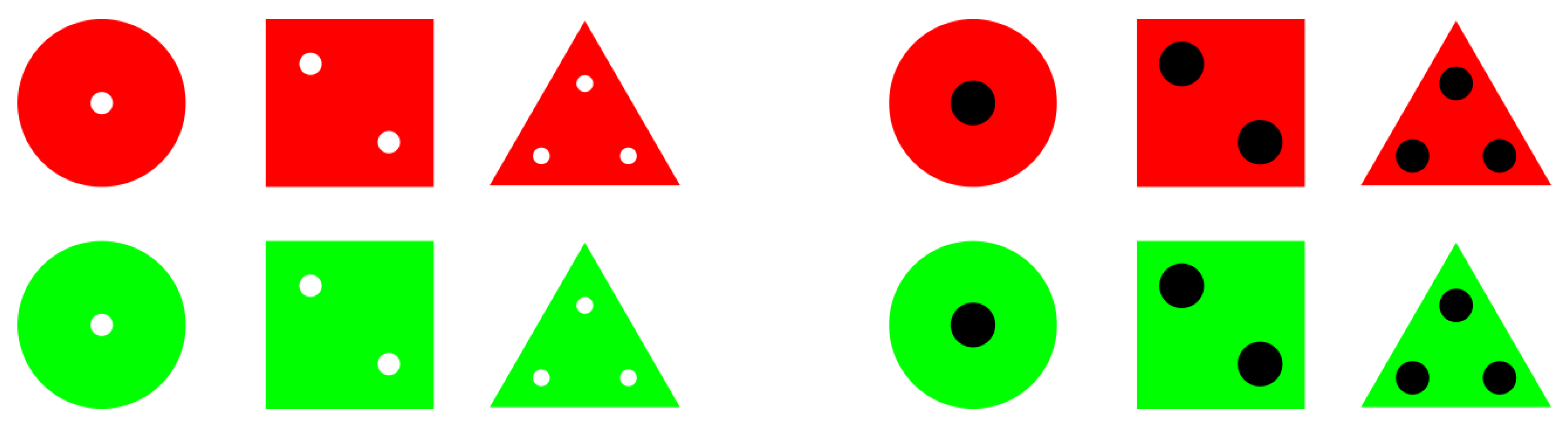

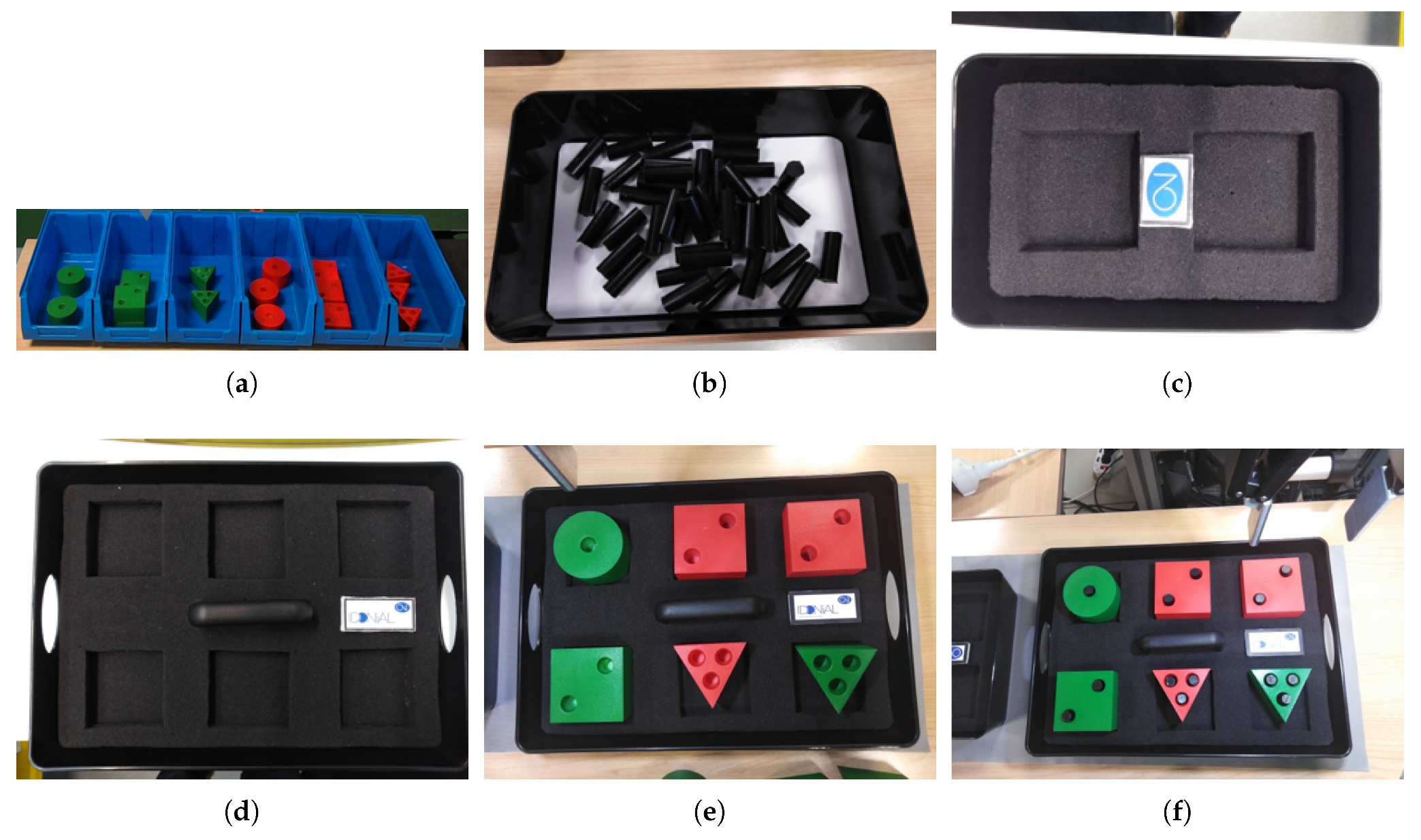

- Batch preparation and individual inspection:
    - (a) Once the operator and the robot are at the assembly table, the system indicates to the operator the batch and the first part to take.
    - (b) Following the instructions, the operator puts the part in the buffer.
    - (c) The robot checks whether the part is correct using an on-board camera [43]. If the part is correct (type, dimensions, and color), it is picked and placed in the tray. If not, the robot puts the wrong part away.
    - (d) The process is repeated for all the parts of the batch. For wrong parts, the program keeps a list of pending parts: as long as a part remains on this list, it is requested again, and it is removed from the list only when it is correctly detected in the buffer.
- Covers assembly and batch inspection:
    - (a) When all the positions of the tray template are filled, the robot verifies again that all the parts are the required ones and that they are in the right positions.
    - (b) The operator puts the covers inside the holes.
    - (c) The robot verifies whether all the covers have been placed. If not, it notifies the operator that the covers are not correct.
- Batch distribution:
    - (a) The robot takes the tray and places it on the conveyor belt for delivery.
    - (b) At the other end of the conveyor, another robot receives the tray with the assembled parts.
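The pending-parts logic of steps (c) and (d), together with the re-request at the end of the batch described in Section 4.2, can be sketched as a queue. This is a hypothetical model, not the authors' control code. The `Part` fields, the equality test standing in for the on-board camera inspection, the operator callback and the `max_requests` guard are all assumptions made for illustration.

```python
from collections import deque
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass(frozen=True)
class Part:
    """Hypothetical part description checked by the vision system."""
    kind: str
    size_mm: int
    color: str


def prepare_batch(batch: List[Part], operator_places: Callable[[Part], Part],
                  max_requests: int = 100) -> Tuple[List[Part], List[Part]]:
    """Hypothetical batch-preparation loop; returns (parts in tray, discarded parts)."""
    pending = deque(batch)  # parts still to be placed correctly in the tray
    tray: List[Part] = []
    discarded: List[Part] = []
    requests = 0
    while pending:
        if requests == max_requests:
            raise RuntimeError("batch not completed within max_requests")
        requests += 1
        requested = pending.popleft()      # HMI indicates which part to take
        seen = operator_places(requested)  # operator puts a part in the buffer
        if seen == requested:              # stand-in for the camera inspection
            tray.append(seen)              # robot picks it and places it in the tray
        else:
            discarded.append(seen)         # robot puts the wrong part away
            pending.append(requested)      # request it again at the end of the batch
    return tray, discarded


batch = [Part("A", 20, "red"), Part("B", 30, "blue"), Part("C", 25, "green")]
# Hypothetical mistake: the first time a blue B is requested, a red B is brought.
mistakes = {Part("B", 30, "blue"): Part("B", 30, "red")}


def operator(requested: Part) -> Part:
    return mistakes.pop(requested, requested)


tray, discarded = prepare_batch(batch, operator)
print([p.kind for p in tray])  # ['A', 'C', 'B']
```

Because the wrong part is re-queued rather than re-requested immediately, the operator keeps working through the batch and B is asked for again only after C.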

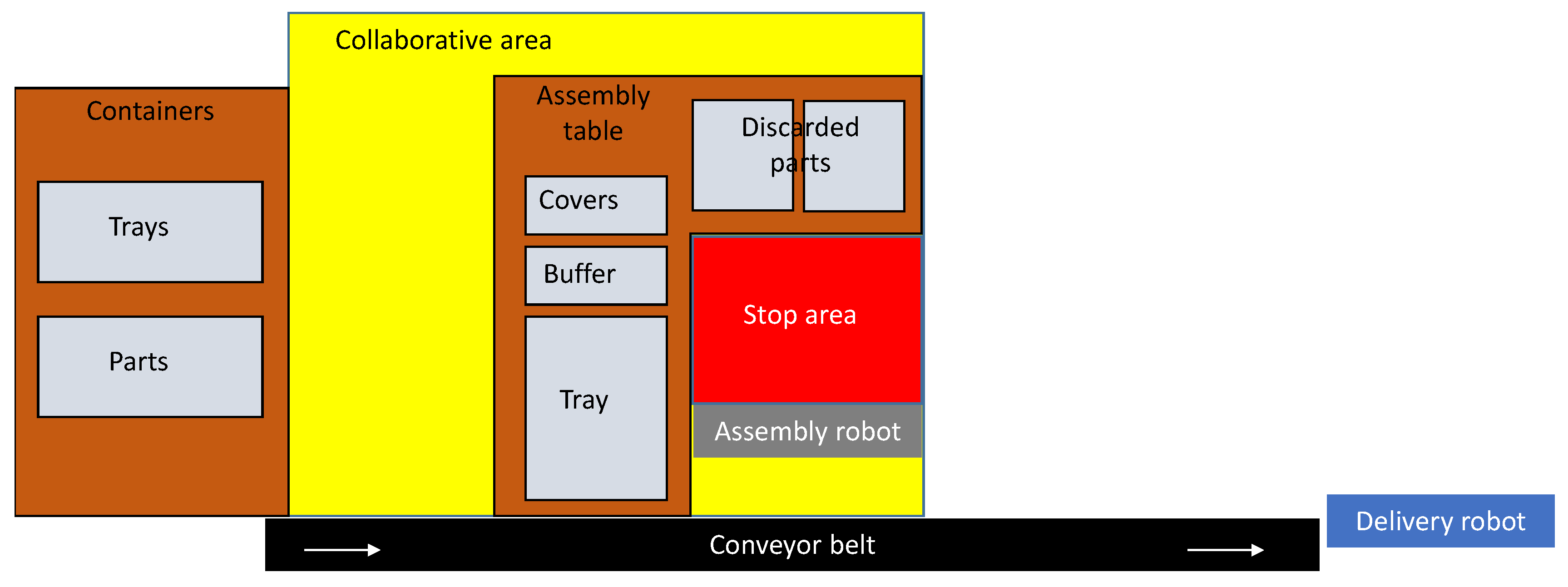

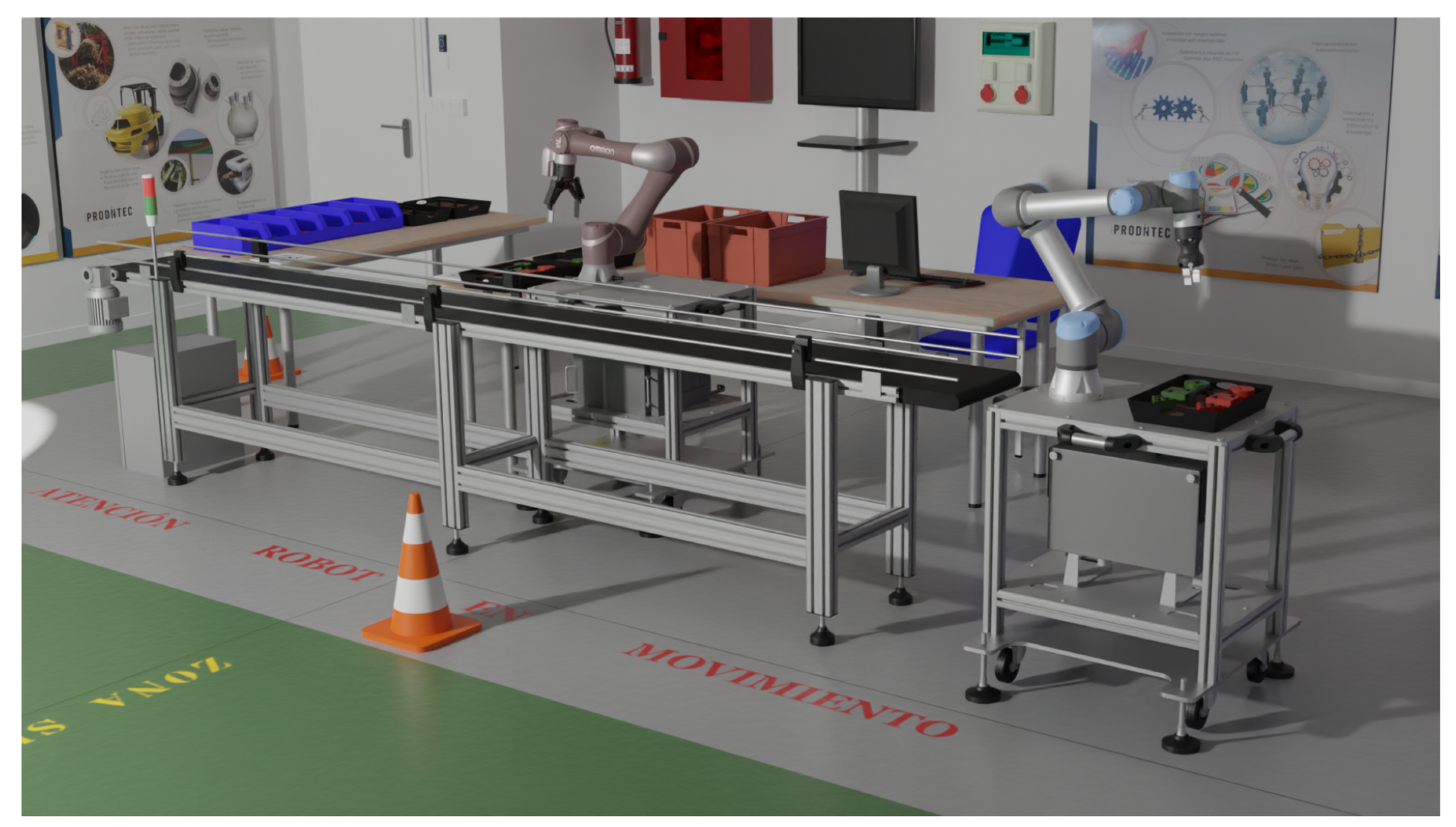

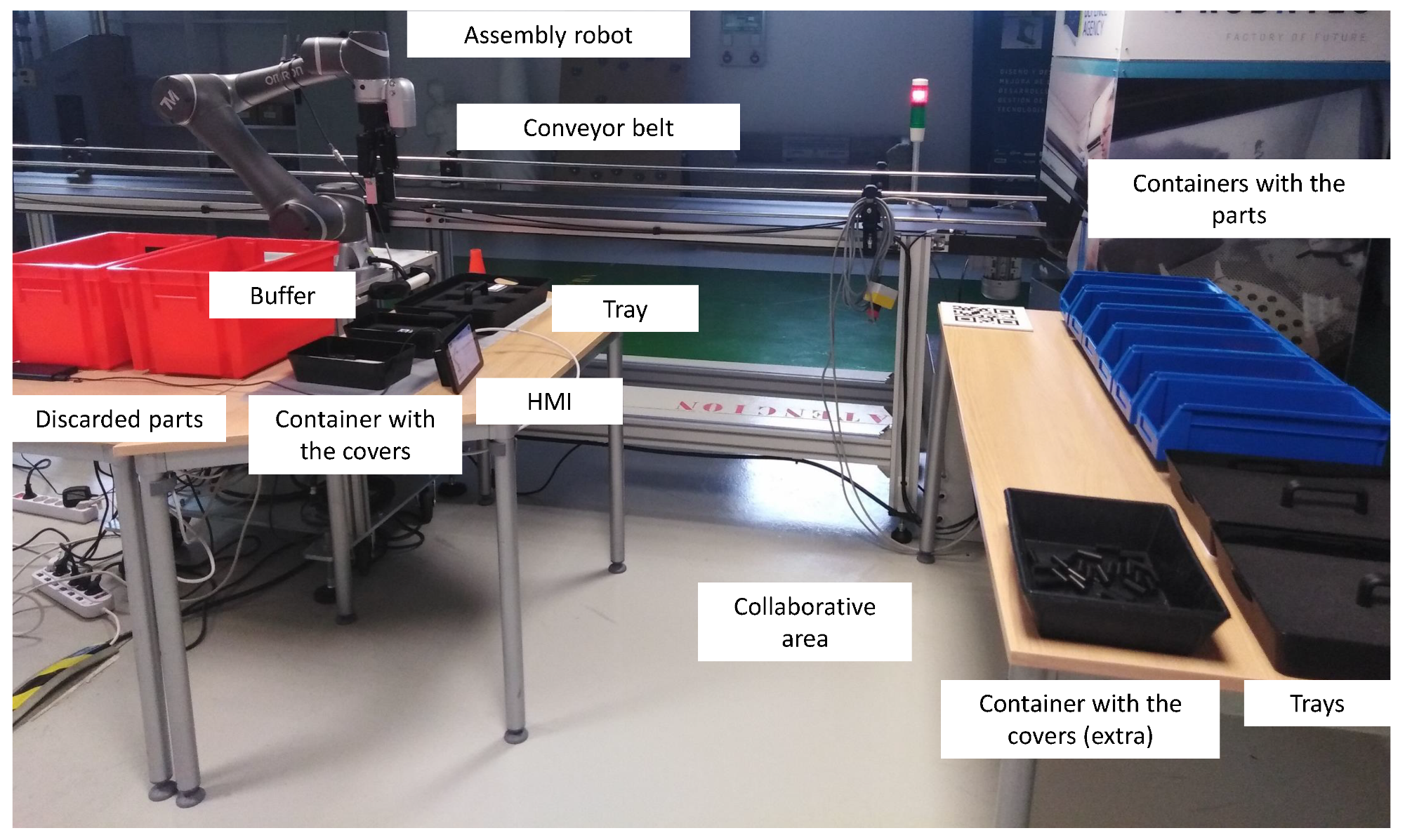

- A table for the containers of parts and trays.

- The assembly robot.

- An assembly table with the containers of covers and discarded parts as well as a buffer where the operator will put the parts which the robot will move to the tray.

- A vision system for part location and inspection.

- A conveyor belt where the robot will place the tray once completed.

- The delivery robot, which waits for the tray at the other end of the conveyor.

- Control system. The control unit of the cell is a Raspberry Pi 3 Model B+.

- Robots and other cell components. Two lightweight collaborative robots with grippers have been selected: the Omron TM5-900 and the Universal Robots UR5e. The first is used in the assembly operation because it has an on-board vision system, which is used for the inspection; its tool is the Robotiq 2F-140 gripper. The second robot delivers the trays, with the Robotiq Hand-E gripper as its tool. The conveyor belt was built in-house and is controlled by a Siemens S7-1200 PLC. It also includes presence sensors to track the position of the trays.

- Safety system. The movements of the operators inside the working area of the robots are monitored by a SICK microScan3 Core safety laser scanner. If the operator is in the collaborative area, the robot controller receives an alert signal to reduce speed; if he/she is too close to the robot, the robot controller receives an alert signal to stop.

- HMI. A touch screen connected to the control system enables human interaction: the operator is told which operations and actions to perform and receives feedback from the system on his/her performance.

- Digital twin. The PC which hosts the virtual mirror of the real cell is connected to the cell network to receive the real-time information.

- Sensors. The real-time information about movements, events, etc. is provided by the safety system, the presence sensors, the robots, the inspection systems, etc.

- Virtual reality interface. Among the available commercial hardware, the HTC Vive headset was selected. Unity3D is used as the VR engine.

4.2. VR Model

- Efficiency and optimization:

- The parts are inspected before the covers are assembled, which avoids spending time and wasting materials on wrong parts.

- The operator receives instructions to continue his/her tasks in parallel with the robot’s tasks, so there is no need to wait.

- The buffer avoids bottlenecks.

- The robot automatically discards the wrong parts, so the operator does not have to wait for the inspection result.

- If a part is pending, it is requested again at the end of the batch, which increases process flexibility and avoids confusing the operator.

- If all the tasks are done manually without any automation, the operator spends time on repetitive tasks, the inspection is subjective, and materials are wasted, among other inefficiencies. This is far from optimal.

- Reduction of movements and transportation:

- Containers and trays are close to the operator in order to reduce movements.

- The conveyor belt transports the completed batches from the assembly area to the delivery area, avoiding the need for a mobile platform or an automatic guided vehicle (AGV).

- Possibility of future extensions:

- New workstations can be added.

- Augmented reality could be used as the HMI instead of the current screen. This requires placing QR codes in the real scenario; their possible locations have been studied in the virtual scenario, as can be seen in Figure 9a.

4.3. Implementation

4.4. Digital Twin

- Manufacturing orders. They are directly transmitted to the digital twin at the beginning of the assembly process.

- Operators. Their positions are monitored by the safety system and are therefore transmitted to the digital twin.

- Robots. The control system executes predetermined (previously programmed) movements, which are communicated to the digital twin.

- Inspection results. The control system receives the results of the part and batch inspections, so they can be communicated to and replicated in the digital twin.

- Conveyor belt. The PLC which controls the movement of the conveyor is connected to the cell control system. It captures the data from the presence sensors, the speed, etc. This information is transmitted to the digital twin.
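The five data flows listed above can be pictured as messages that the cell control system forwards to the PC hosting the digital twin. The sketch below assumes a JSON message with hypothetical `source` and `data` fields and shows only how such messages could be dispatched into a twin state. The source does not specify the message format or the transport. In the described cell, the twin is rendered in Unity3D, so this Python code only illustrates the data model, not the actual implementation.

```python
import json
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class TwinState:
    """Latest known state of the real cell, as mirrored in the digital twin."""
    order: str = ""
    operator_xy: Tuple[float, float] = (0.0, 0.0)
    robot_joints: Dict[str, List[float]] = field(default_factory=dict)
    inspections: List[Tuple[str, bool]] = field(default_factory=list)
    conveyor: Dict[str, object] = field(default_factory=dict)


def apply_message(state: TwinState, raw: str) -> None:
    """Update the twin from one message.

    Hypothetical schema: {"source": <str>, "data": {...}}.
    """
    msg = json.loads(raw)
    source, data = msg["source"], msg["data"]
    if source == "order":          # manufacturing order at the start of assembly
        state.order = data["id"]
    elif source == "safety":       # operator position from the safety system
        state.operator_xy = (data["x"], data["y"])
    elif source == "robot":        # current joint positions of one robot
        state.robot_joints[data["name"]] = data["joints"]
    elif source == "inspection":   # part or batch inspection result
        state.inspections.append((data["target"], data["ok"]))
    elif source == "plc":          # conveyor speed and presence sensors
        state.conveyor.update(data)
    else:
        raise ValueError(f"unknown message source: {source!r}")


state = TwinState()
for raw in [
    '{"source": "order", "data": {"id": "ORD-001"}}',
    '{"source": "safety", "data": {"x": 1.2, "y": 0.4}}',
    '{"source": "robot", "data": {"name": "TM5-900", "joints": [0.0, -0.5, 1.2, 0.0, 1.57, 0.0]}}',
    '{"source": "inspection", "data": {"target": "batch", "ok": true}}',
    '{"source": "plc", "data": {"speed": 0.2, "tray_at_end": true}}',
]:
    apply_message(state, raw)
print(state.order, state.operator_xy)  # ORD-001 (1.2, 0.4)
```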

5. Results and Discussion

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| 3D | Three-Dimensional |
| AGV | Automatic Guided Vehicle |
| CPPS | Cyber-Physical Production Systems |
| DT | Digital Twin |
| HMI | Human-Machine Interface |
| PLC | Programmable Logic Controller |
| QR | Quick Response |
| ROS | Robot Operating System |
| VR | Virtual Reality |

References

1. Ohno, T. Toyota Production System: Beyond Large-Scale Production; CRC Press: Boca Raton, FL, USA, 1988.
2. Henning, K. Recommendations for Implementing the Strategic Initiative INDUSTRIE 4.0; National Academy of Science and Engineering: Munich, Germany, 2013.
3. Kolberg, D.; Zühlke, D. Lean automation enabled by industry 4.0 technologies. IFAC-PapersOnLine 2015, 48, 1870–1875.
4. Lee, J.; Bagheri, B.; Kao, H.A. A cyber-physical systems architecture for industry 4.0-based manufacturing systems. Manuf. Lett. 2015, 3, 18–23.
5. Pérez, L.; Rodríguez-Jiménez, S.; Rodríguez, N.; Usamentiaga, R.; García, D.F.; Wang, L. Symbiotic human–robot collaborative approach for increased productivity and enhanced safety in the aerospace manufacturing industry. Int. J. Adv. Manuf. Technol. 2020, 106, 851–863.
6. Vanderborght, B. Unlocking the Potential of Industrial Human–Robot Collaboration; Technical Report; Publications Office of the European Union: Brussels, Belgium, 2019.
7. Grieves, M.; Vickers, J. Digital twin: Mitigating unpredictable, undesirable emergent behavior in complex systems. In Transdisciplinary Perspectives on Complex Systems; Springer: Berlin/Heidelberg, Germany, 2017; pp. 85–113.
8. Boschert, S.; Rosen, R. Digital twin—The simulation aspect. In Mechatronic Futures; Springer: Berlin/Heidelberg, Germany, 2016; pp. 59–74.
9. Schluse, M.; Rossmann, J. From simulation to experimentable digital twins: Simulation-based development and operation of complex technical systems. In Proceedings of the 2016 IEEE International Symposium on Systems Engineering (ISSE), Edinburgh, UK, 3–5 October 2016; pp. 1–6.
10. Rosen, R.; Von Wichert, G.; Lo, G.; Bettenhausen, K.D. About the importance of autonomy and digital twins for the future of manufacturing. IFAC-PapersOnLine 2015, 48, 567–572.
11. Havard, V.; Jeanne, B.; Lacomblez, M.; Baudry, D. Digital twin and virtual reality: A co-simulation environment for design and assessment of industrial workstations. Prod. Manuf. Res. 2019, 7, 472–489.
12. Zhu, Z.; Liu, C.; Xu, X. Visualisation of the Digital Twin data in manufacturing by using Augmented Reality. Procedia CIRP 2019, 81, 898–903.
13. Cimino, C.; Negri, E.; Fumagalli, L. Review of digital twin applications in manufacturing. Comput. Ind. 2019, 113, 103130.
14. Yan, Z.; Fabresse, L.; Laval, J.; Bouraqadi, N. Building a ROS-based testbed for realistic multi-robot simulation: Taking the exploration as an example. Robotics 2017, 6, 21.
15. Schleich, B.; Anwer, N.; Mathieu, L.; Wartzack, S. Shaping the digital twin for design and production engineering. CIRP Ann. 2017, 66, 141–144.
16. Tao, F.; Sui, F.; Liu, A.; Qi, Q.; Zhang, M.; Song, B.; Guo, Z.; Lu, S.C.Y.; Nee, A. Digital twin-driven product design framework. Int. J. Prod. Res. 2019, 57, 3935–3953.
17. Tao, F.; Cheng, J.; Qi, Q.; Zhang, M.; Zhang, H.; Sui, F. Digital twin-driven product design, manufacturing and service with big data. Int. J. Adv. Manuf. Technol. 2018, 94, 3563–3576.
18. Zhuang, C.; Liu, J.; Xiong, H. Digital twin-based smart production management and control framework for the complex product assembly shop-floor. Int. J. Adv. Manuf. Technol. 2018, 96, 1149–1163.
19. Wang, J.; Ye, L.; Gao, R.X.; Li, C.; Zhang, L. Digital Twin for rotating machinery fault diagnosis in smart manufacturing. Int. J. Prod. Res. 2019, 57, 3920–3934.
20. Robotic Industries Association. Digital Twins and Virtual Commissioning in Industry 4.0. Available online: https://www.robotics.org/content-detail.cfm/Industrial-Robotics-Tech-Papers/Digital-Twins-and-Virtual-Commissioning-in-Industry-4-0/content_id/8173 (accessed on 3 April 2020).
21. Rocca, R.; Rosa, P.; Sassanelli, C.; Fumagalli, L.; Terzi, S. Integrating Virtual Reality and Digital Twin in Circular Economy Practices: A Laboratory Application Case. Sustainability 2020, 12, 2286.
22. Bevilacqua, M.; Bottani, E.; Ciarapica, F.E.; Costantino, F.; Di Donato, L.; Ferraro, A.; Mazzuto, G.; Monteriù, A.; Nardini, G.; Ortenzi, M.; et al. Digital Twin Reference Model Development to Prevent Operators’ Risk in Process Plants. Sustainability 2020, 12, 1088.
23. Pan, Z.; Polden, J.; Larkin, N.; Van Duin, S.; Norrish, J. Recent progress on programming methods for industrial robots. In Proceedings of the ISR 2010 (41st International Symposium on Robotics) and ROBOTIK 2010 (6th German Conference on Robotics), Munich, Germany, 7–9 June 2010; pp. 1–8.
24. Penttilä, S.; Lund, H.; Ratava, J.; Lohtander, M.; Kah, P.; Varis, J. Virtual Reality Enabled Manufacturing of Challenging Workpieces. Procedia Manuf. 2019, 39, 22–31.
25. Pérez, L.; Diez, E.; Usamentiaga, R.; García, D.F. Industrial robot control and operator training using virtual reality interfaces. Comput. Ind. 2019, 109, 114–120.
26. Visual Components. Visual Components: 3D Manufacturing Simulation and Visualization Software—Design the Factories of the Future. Available online: https://www.visualcomponents.com (accessed on 10 April 2020).
27. Siemens. Engineer Automated Production Systems Using Robotics and Automation Simulation. Available online: https://www.plm.automation.siemens.com/global/es/products/manufacturing-planning/robotics-automation-simulation.html (accessed on 10 April 2020).
28. RoboDK. Simulate Robot Applications. Available online: https://robodk.com (accessed on 8 May 2020).
29. Roldán, J.J.; Peña-Tapia, E.; Garzón-Ramos, D.; de León, J.; Garzón, M.; del Cerro, J.; Barrientos, A. Multi-Robot Systems, Virtual Reality and ROS: Developing a new generation of operator interfaces. In Robot Operating System (ROS); Springer: Berlin/Heidelberg, Germany, 2019; pp. 29–64.
30. Kousi, N.; Gkournelos, C.; Aivaliotis, S.; Giannoulis, C.; Michalos, G.; Makris, S. Digital twin for adaptation of robots’ behavior in flexible robotic assembly lines. Procedia Manuf. 2019, 28, 121–126.
31. Malik, A.A.; Bilberg, A. Digital twins of human robot collaboration in a production setting. Procedia Manuf. 2018, 17, 278–285.
32. Ma, X.; Tao, F.; Zhang, M.; Wang, T.; Zuo, Y. Digital twin enhanced human-machine interaction in product lifecycle. Procedia CIRP 2019, 83, 789–793.
33. Bilberg, A.; Malik, A.A. Digital twin driven human–robot collaborative assembly. CIRP Ann. 2019, 68, 499–502.
34. Aivaliotis, P.; Georgoulias, K.; Arkouli, Z.; Makris, S. Methodology for enabling digital twin using advanced physics-based modelling in predictive maintenance. Procedia CIRP 2019, 81, 417–422.
35. Vachálek, J.; Bartalský, L.; Rovný, O.; Šišmišová, D.; Morháč, M.; Lokšík, M. The digital twin of an industrial production line within the industry 4.0 concept. In Proceedings of the 2017 21st International Conference on Process Control (PC), Strbske Pleso, Slovakia, 6–9 June 2017; pp. 258–262.
36. Zhang, C.; Zhou, G.; He, J.; Li, Z.; Cheng, W. A data- and knowledge-driven framework for digital twin manufacturing cell. Procedia CIRP 2019, 83, 345–350.
37. Burghardt, A.; Szybicki, D.; Gierlak, P.; Kurc, K.; Pietruś, P.; Cygan, R. Programming of Industrial Robots Using Virtual Reality and Digital Twins. Appl. Sci. 2020, 10, 486.
38. Kuts, V.; Otto, T.; Tähemaa, T.; Bondarenko, Y. Digital Twin based synchronised control and simulation of the industrial robotic cell using Virtual Reality. J. Mach. Eng. 2019, 19.
39. Zhang, J.; Fang, X. Challenges and key technologies in robotic cell layout design and optimization. Proc. Inst. Mech. Eng. Part C J. Mech. Eng. Sci. 2017, 231, 2912–2924.
40. Burdea, G.C.; Coiffet, P. Virtual Reality Technology; John Wiley & Sons: Hoboken, NJ, USA, 2003.
41. Wang, L.; Keshavarzmanesh, S.; Feng, H.Y.; Buchal, R.O. Assembly process planning and its future in collaborative manufacturing: A review. Int. J. Adv. Manuf. Technol. 2009, 41, 132.
42. European Factories of the Future Research Association. Factories of the Future: Multi-Annual Roadmap for the Contractual PPP under Horizon 2020; Publications Office of the European Union: Brussels, Belgium, 2013.
43. Pérez, L.; Rodríguez, Í.; Rodríguez, N.; Usamentiaga, R.; García, D.F. Robot guidance using machine vision techniques in industrial environments: A comparative review. Sensors 2016, 16, 335.

| Criterion | Robot Manufacturers | Commercial Sim. Tools with VR | DT Based on VR |
|---|---|---|---|
| Low investment | 2 | 1 | 3 |
| Multi-robot | 1 | 3 | 3 |
| Human-robot collab. | 1 | 1 | 3 |
| Immersive | 1 | 3 | 3 |
| Customization | 1 | 2 | 3 |
| Training | 1 | 2 | 3 |
| Versatility | 1 | 2 | 3 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Pérez, L.; Rodríguez-Jiménez, S.; Rodríguez, N.; Usamentiaga, R.; García, D.F. Digital Twin and Virtual Reality Based Methodology for Multi-Robot Manufacturing Cell Commissioning. Appl. Sci. 2020, 10, 3633. https://doi.org/10.3390/app10103633
