Exploration of the Application of Virtual Reality and Internet of Things in Film and Television Production Mode

Abstract

1. Introduction

2. Literature Review

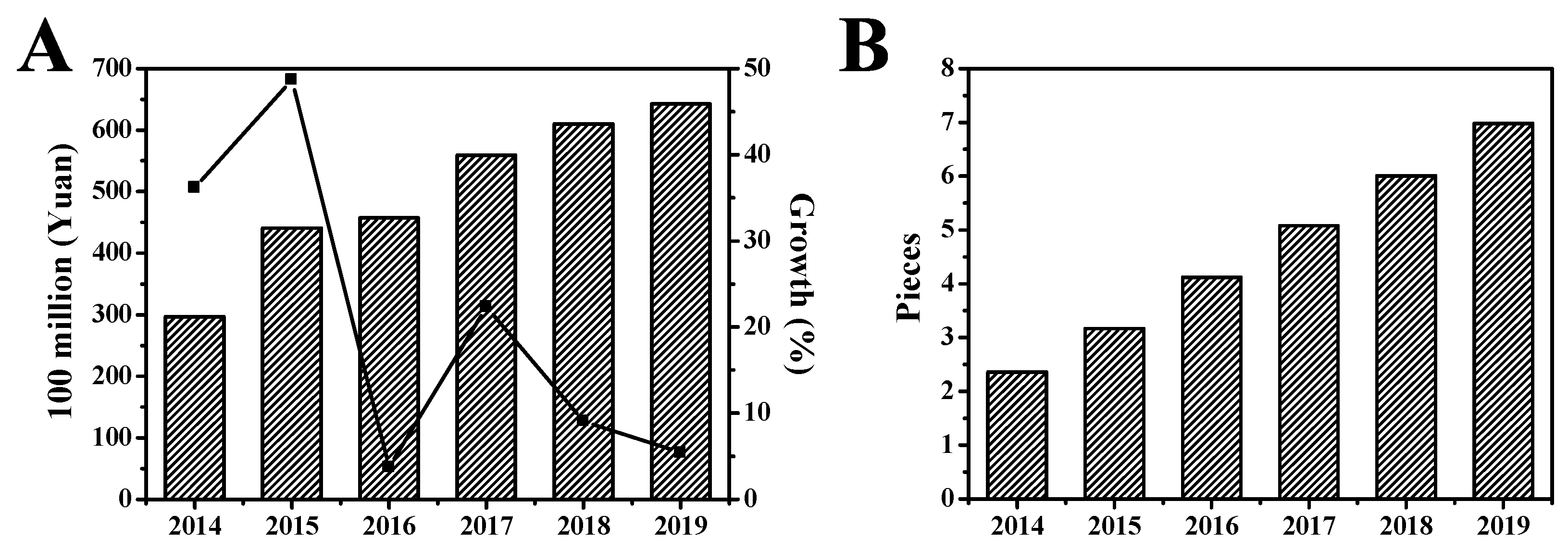

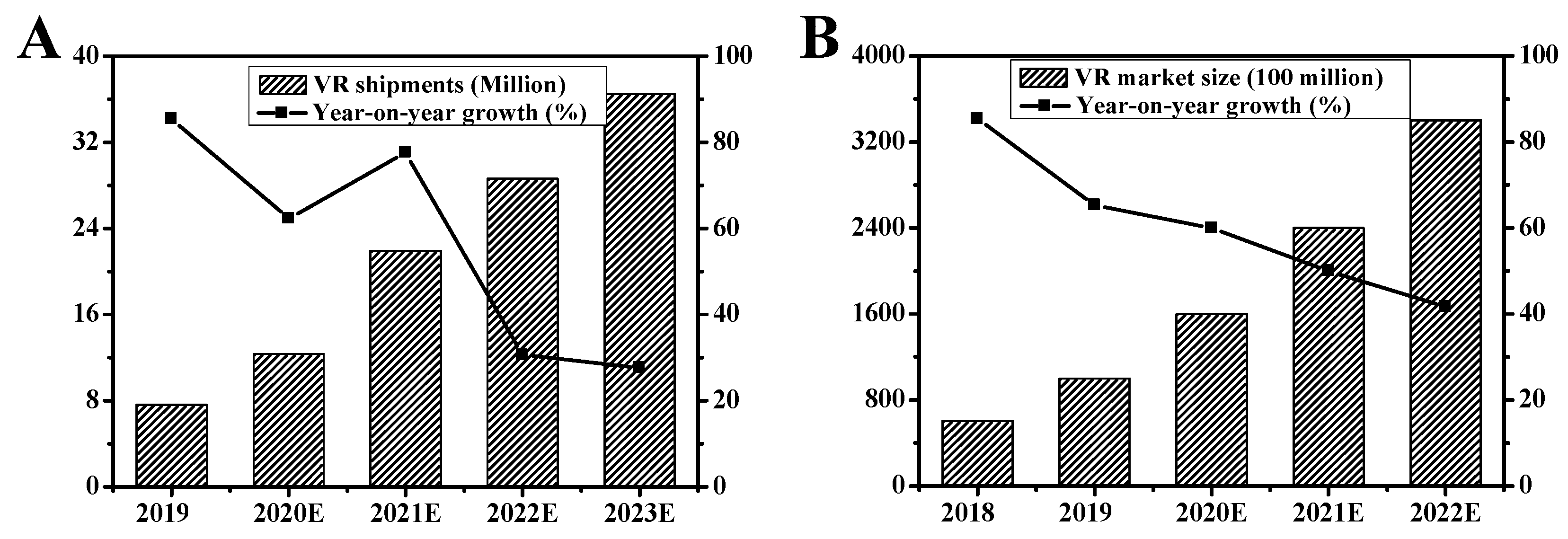

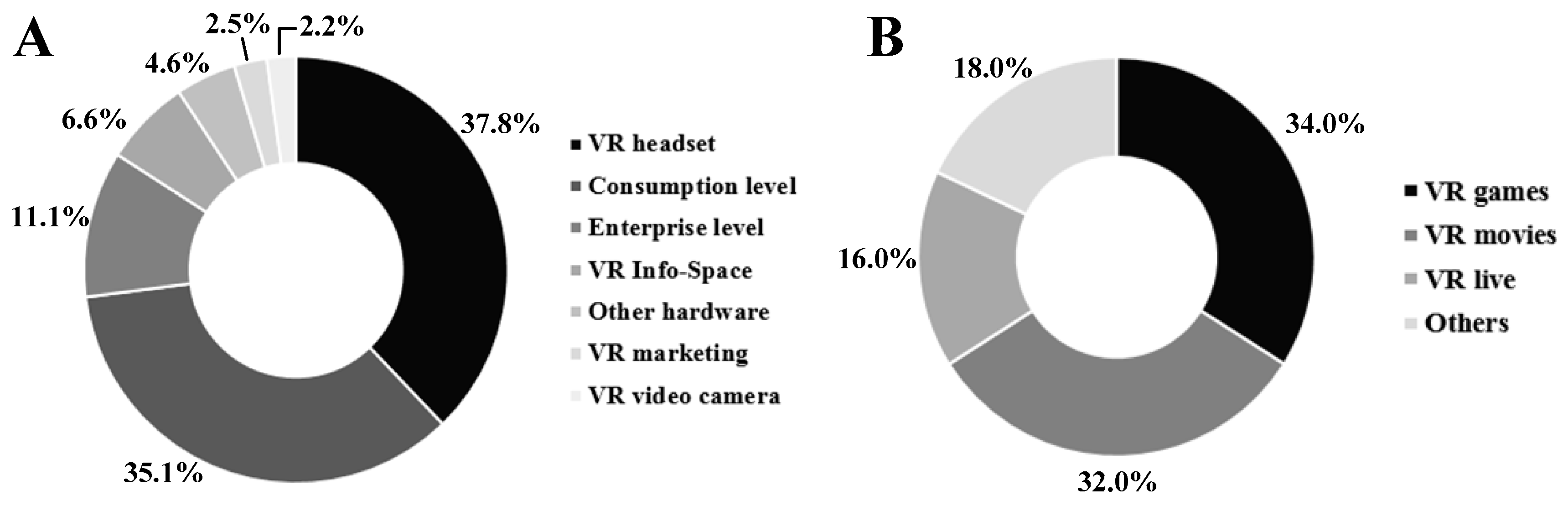

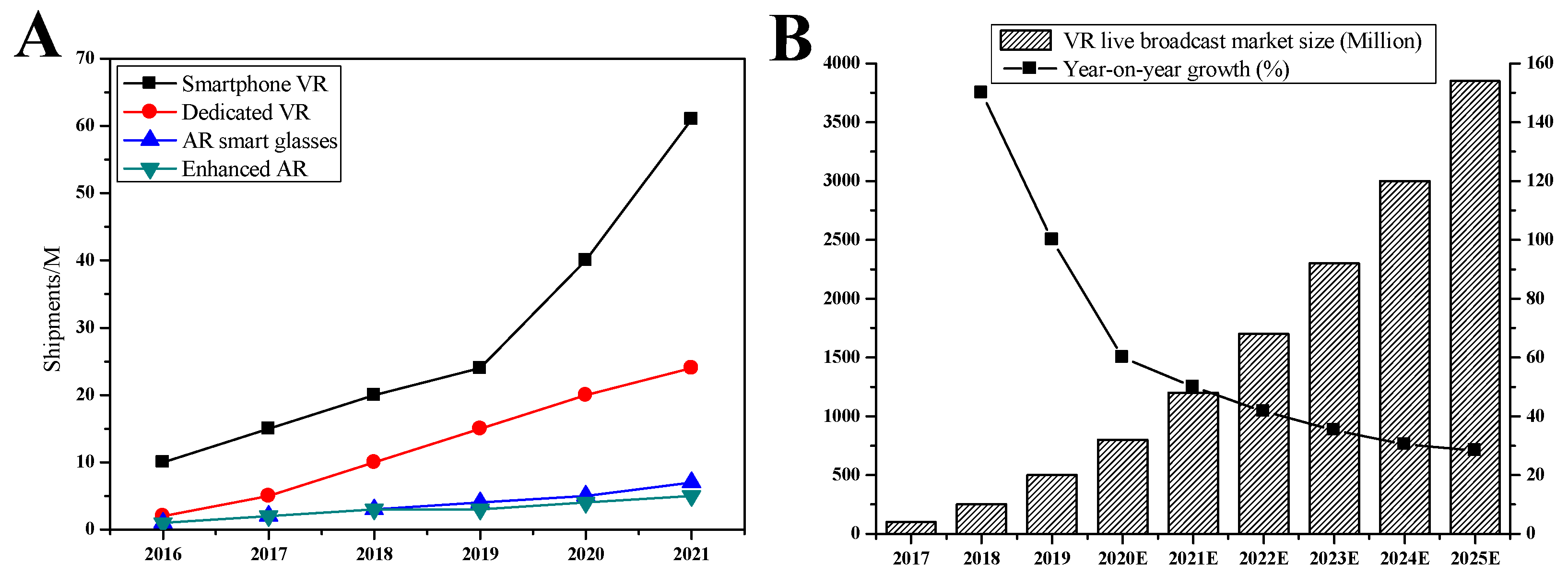

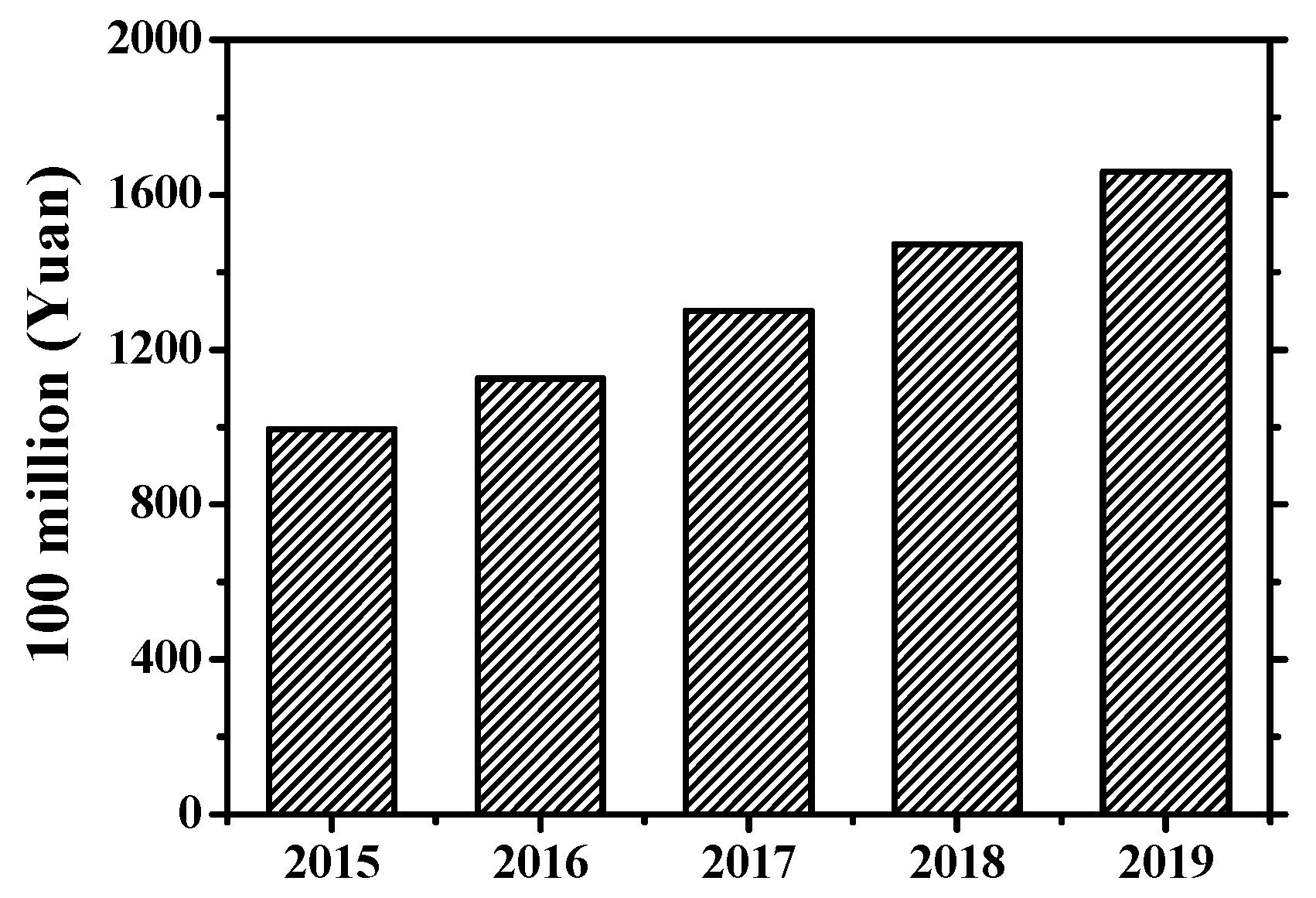

2.1. The Application and Development of VR Technology in Film and Television

2.2. The Application and Development of IoT Technology in Film and Television

3. Proposed Method

3.1. Video Data Crawling Based on Theme Crawler

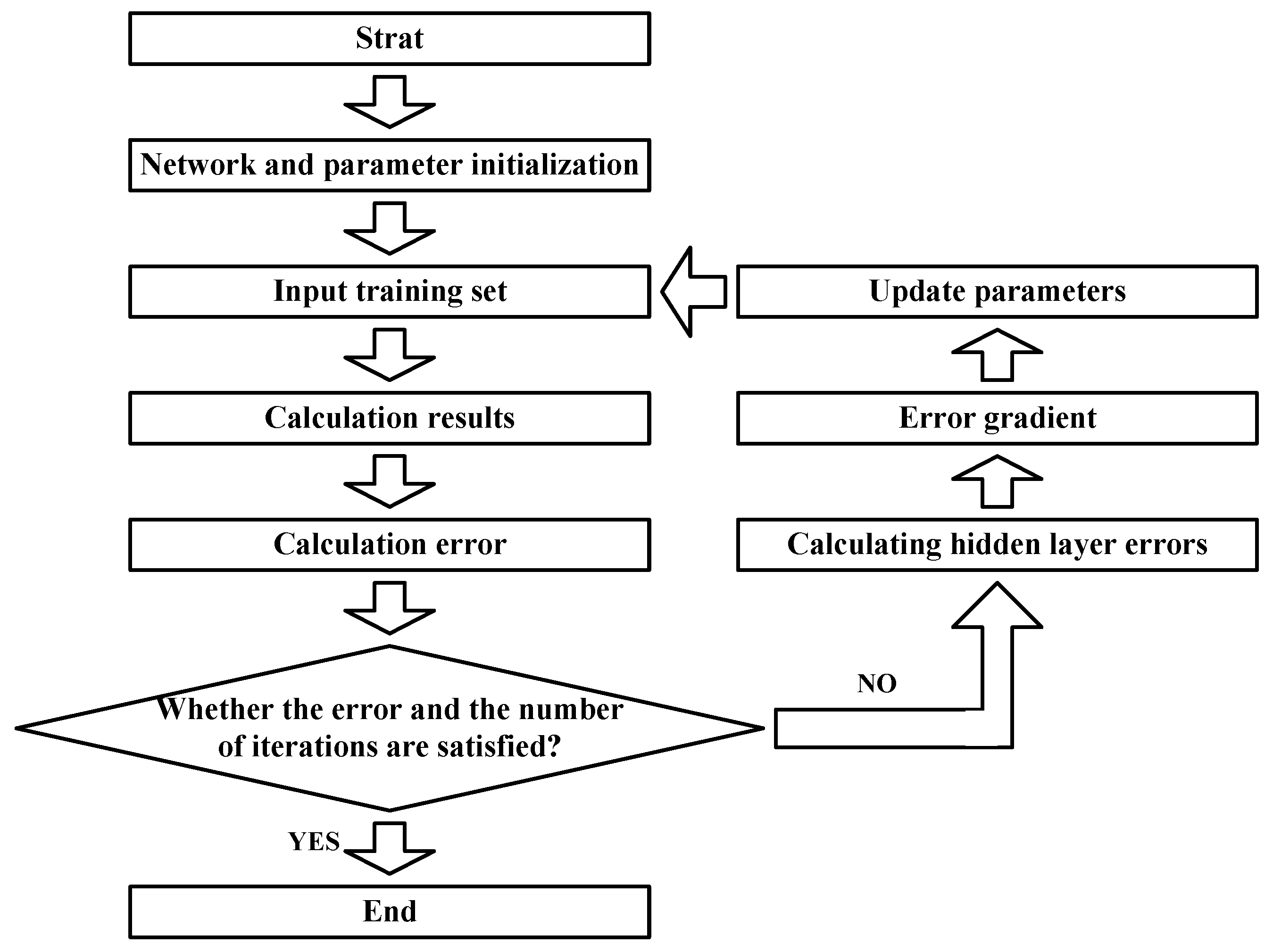

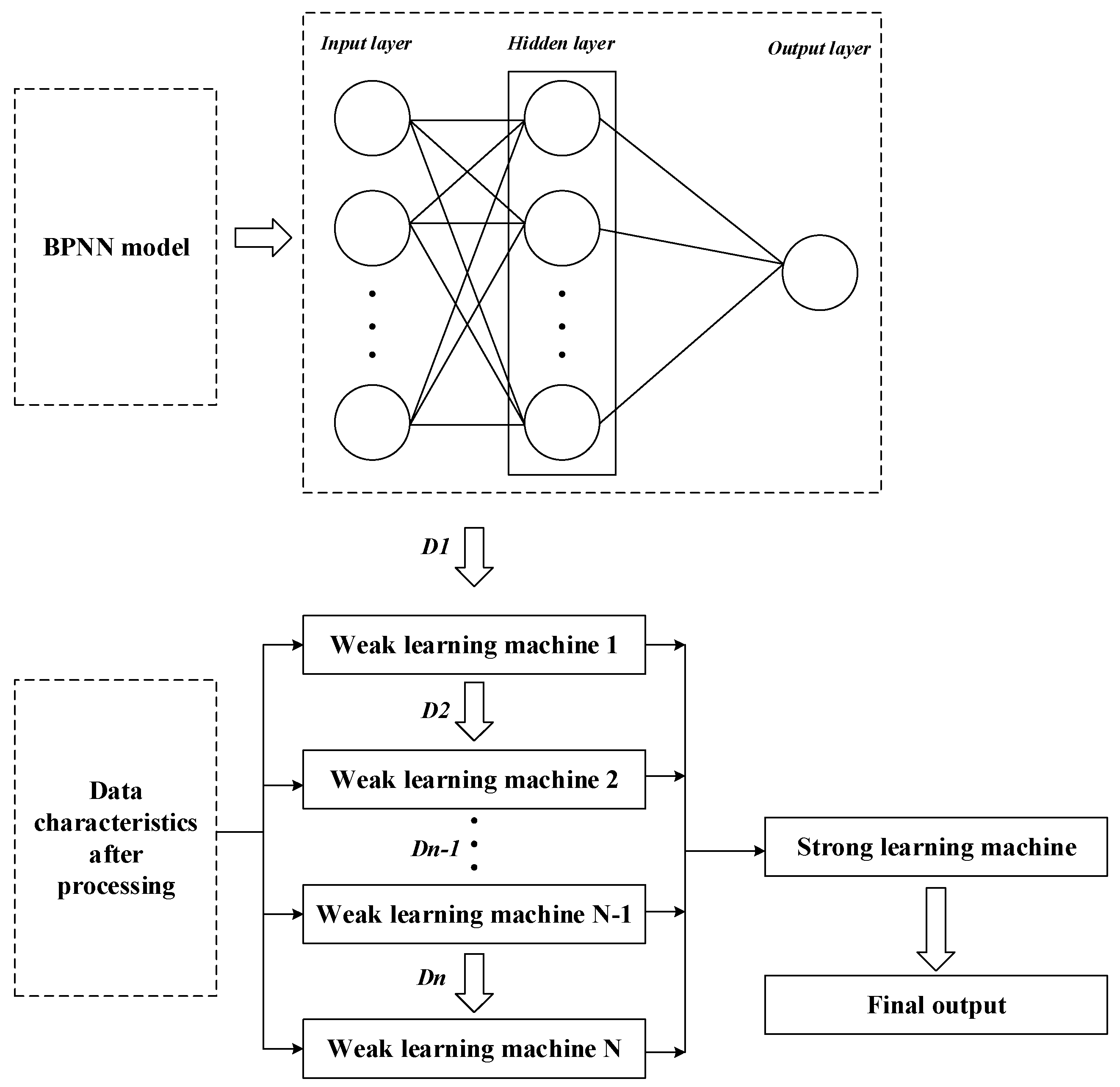

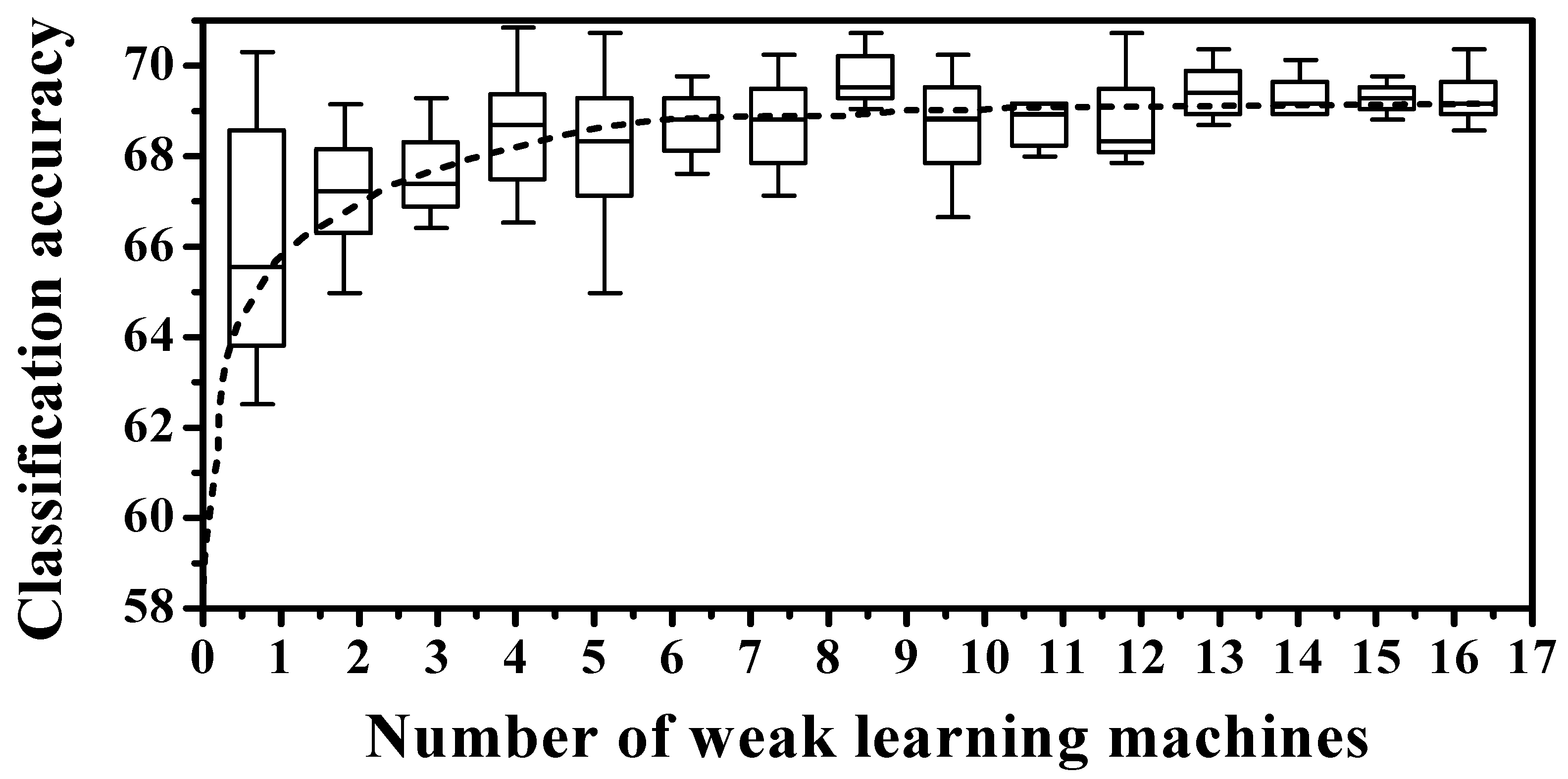
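A theme (focused) crawler differs from a general crawler in one way: it scores each fetched page for topical relevance and expands only the links of relevant pages, visiting the most promising URLs first. The following is a minimal best-first sketch of that idea, not the authors' crawler. Several parts are assumptions chosen for illustration: the keyword set `TOPIC_KEYWORDS`, the scoring function `relevance`, the threshold `min_score`, and the in-memory `pages` graph that stands in for real HTTP fetching.

```python
import heapq
import re

# Hypothetical topic vocabulary; the paper's actual theme keywords are not given.
TOPIC_KEYWORDS = frozenset({"vr", "virtual", "reality", "360", "film", "video"})


def relevance(text, keywords=TOPIC_KEYWORDS):
    """Fraction of topic keywords occurring in the page text (a simple relevance score)."""
    tokens = set(re.findall(r"[a-z0-9]+", text.lower()))
    return len(tokens & keywords) / len(keywords)


def focused_crawl(pages, seeds, max_pages=10, min_score=0.2):
    """Best-first crawl over an in-memory link graph.

    pages maps url -> (text, outgoing_links) and replaces real page fetching.
    Returns the URLs judged relevant, in the order they were visited.
    """
    frontier = [(-1.0, url) for url in seeds]  # heapq is a min-heap, so scores are negated
    heapq.heapify(frontier)
    seen = set(seeds)
    relevant = []
    while frontier and len(relevant) < max_pages:
        _, url = heapq.heappop(frontier)
        if url not in pages:
            continue  # dead link
        text, links = pages[url]
        score = relevance(text)
        if score < min_score:
            continue  # off-topic page: its links are not expanded
        relevant.append(url)
        for link in links:
            if link not in seen:
                seen.add(link)
                # Children inherit the parent's relevance as their crawl priority.
                heapq.heappush(frontier, (-score, link))
    return relevant


# Hypothetical link graph: page "d" is only reachable through the off-topic page "b".
pages = {
    "a": ("VR film and 360 video production", ["b", "c"]),
    "b": ("cooking recipes", ["d"]),
    "c": ("virtual reality video review", []),
    "d": ("VR film festival", []),
}
print(focused_crawl(pages, ["a"]))  # prints ['a', 'c']
```

Because page "b" falls below the threshold, its outgoing link to "d" is never followed. This pruning is what keeps a theme crawler on topic, and it is also why a crawler of this kind can miss relevant pages that are reachable only through off-topic ones.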

3.2. Prediction of the Development Trend of VR Film and Television Based on the AdaBoost-BP Algorithm

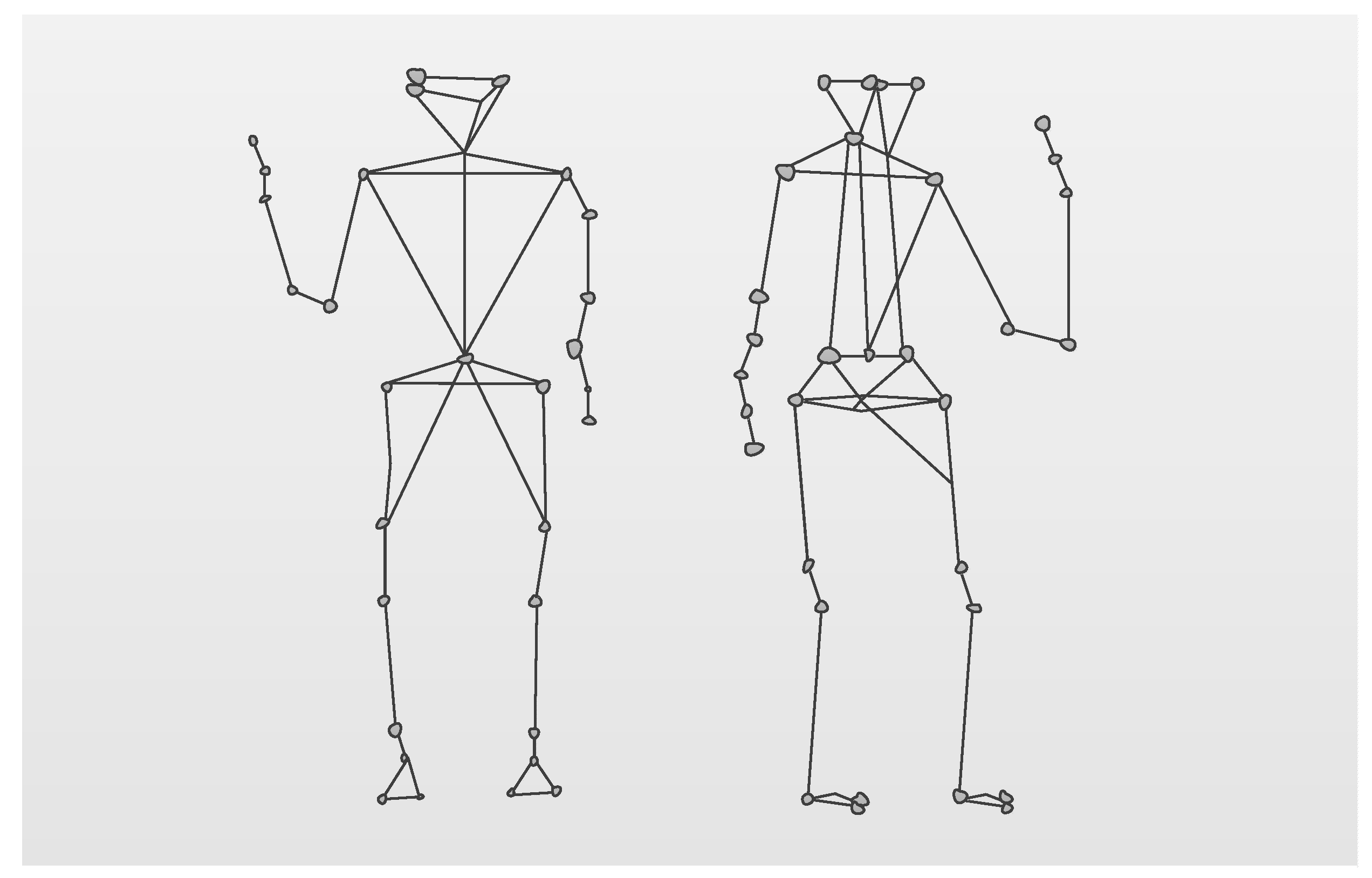

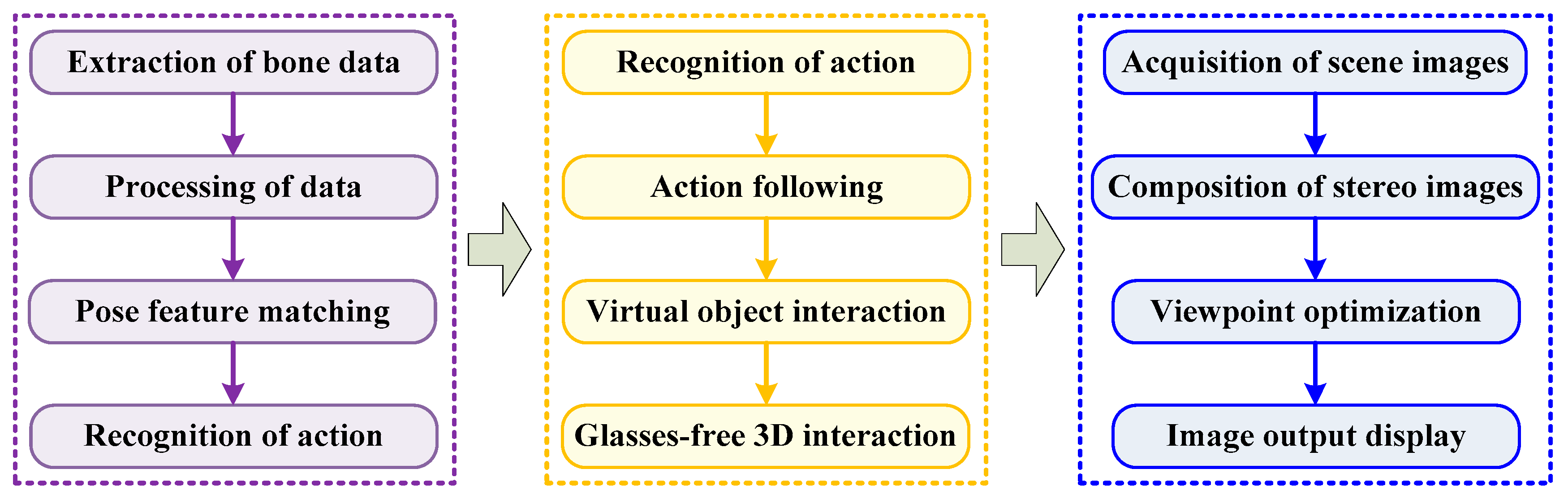

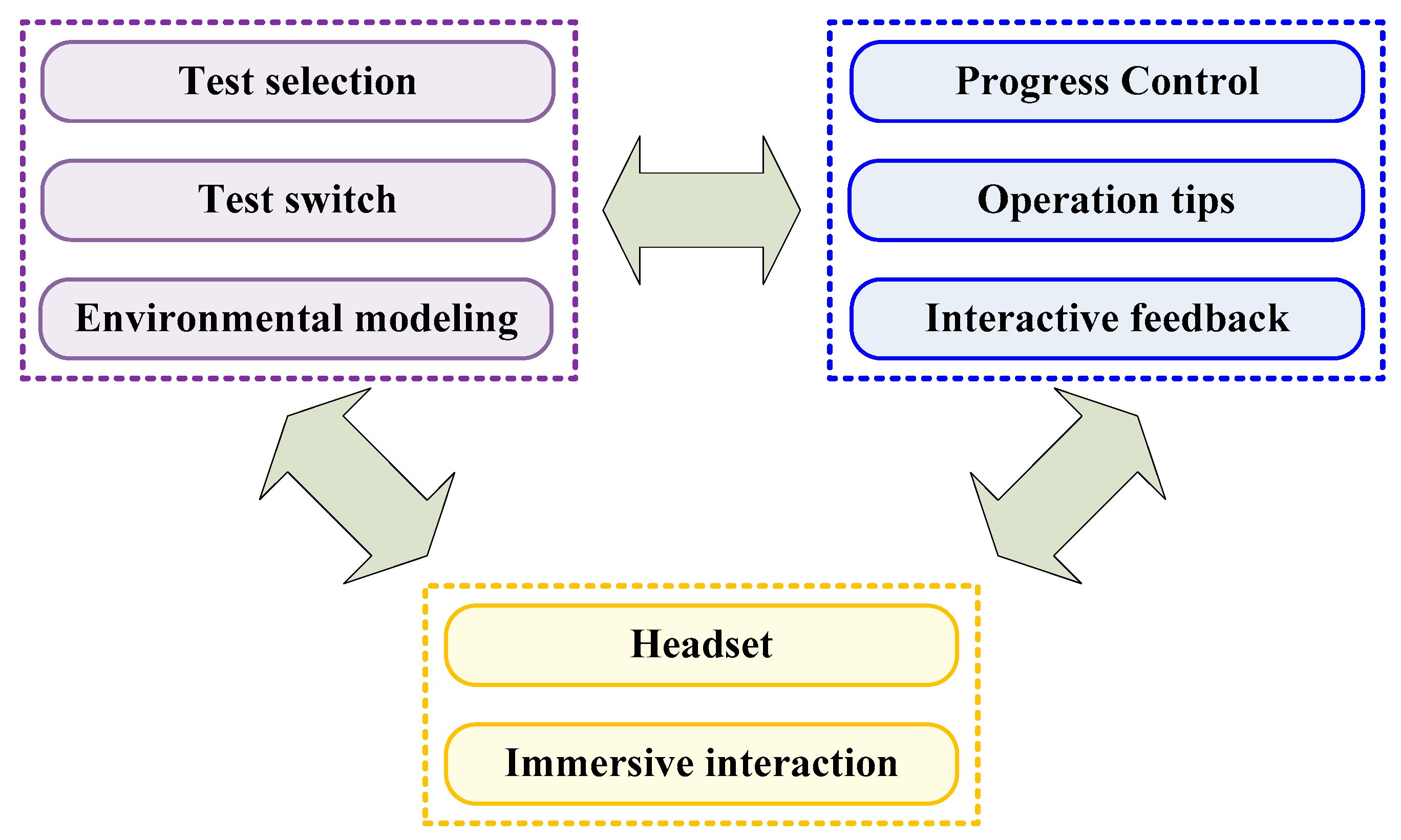

3.3. Implementation of Interactive Applications Based on IoT Technology

4. Results

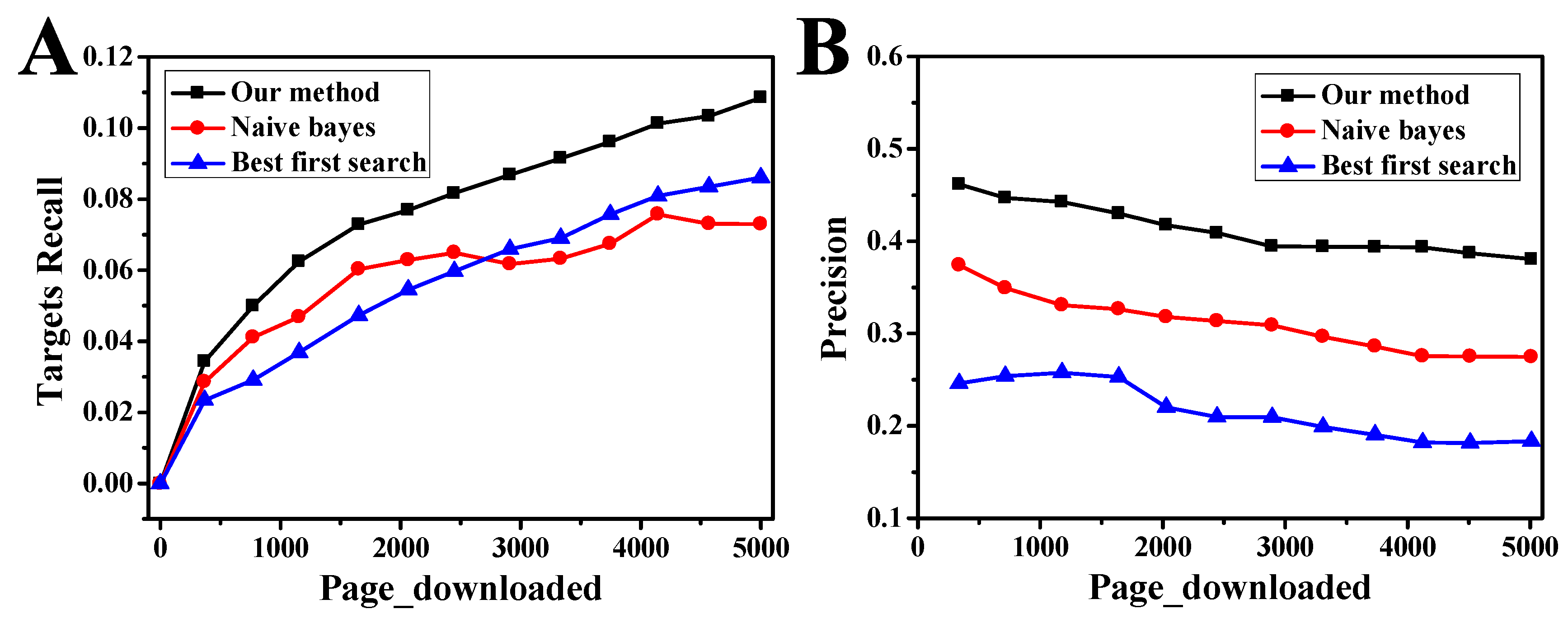

4.1. Validation of Crawler Tools and Prediction Models

4.2. Analysis of External Feature Set Prediction Results of VR Film and Television Data

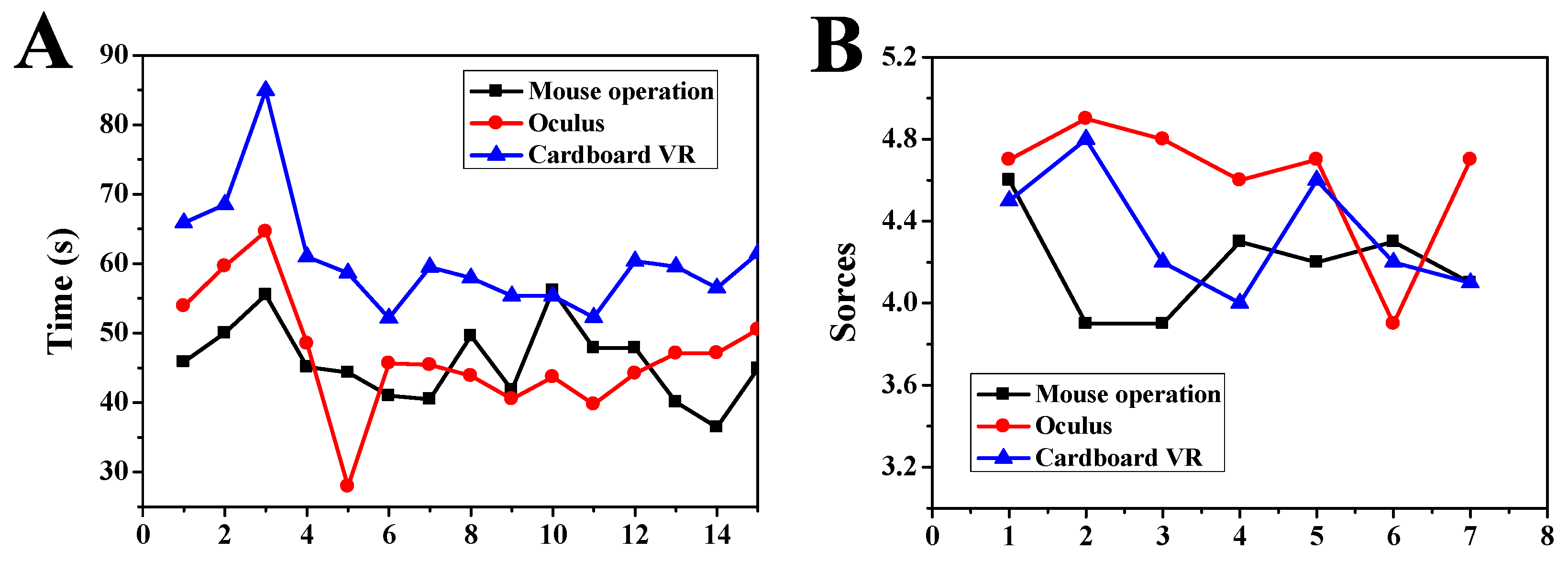

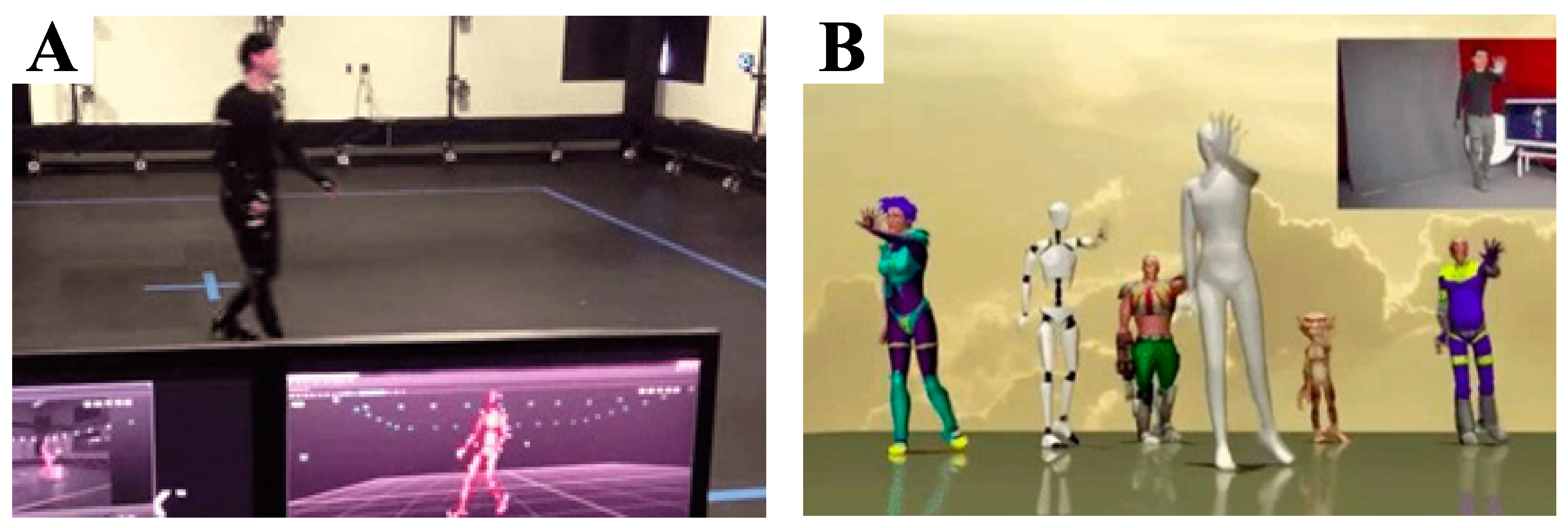

4.3. The Application Verification of VR Human–Computer Interaction Based on IoT Technology

5. Discussion

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Geng, J.; Chai, C.S.; Jong, M.S.Y.; Luk, E.T.H. Understanding the pedagogical potential of Interactive Spherical Video-based Virtual Reality from the teachers’ perspective through the ACE framework. Interact. Learn. Environ. 2019, 1–16.

- Yang, J.R.; Tan, F.H. Classroom Education Using Animation and Virtual Reality of the Great Wall of China in Jinshanling: Human Subject Testing. In Didactics of Smart Pedagogy; Springer: Cham, Switzerland, 2019; pp. 415–431.

- García-Pereira, I.; Vera, L.; Aixendri, M.P.; Portalés, C.; Casas, S. Multisensory Experiences in Virtual Reality and Augmented Reality Interaction Paradigms. In Smart Systems Design, Applications, and Challenges; IGI Global: Hershey, PA, USA, 2020; pp. 276–298.

- Tang, J.; Zhang, X. Hybrid Projection For Encoding 360 VR Videos. In Proceedings of the 2019 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), Osaka, Japan, 23–27 March 2019; pp. 440–447.

- Wu, J.; Guo, R.; Wang, Z.; Zeng, R. Integrating spherical video-based virtual reality into elementary school students’ scientific inquiry instruction: Effects on their problem-solving performance. Interact. Learn. Environ. 2019, 1–14.

- Choe, N.; Zhao, H.; Qiu, S.; So, Y. A sensor-to-segment calibration method for motion capture system based on low cost MIMU. Measurement 2019, 131, 490–500.

- Liu, Q.; Qian, G.; Meng, W.; Ai, Q.; Yin, C.; Fang, Z. A new IMMU-based data glove for hand motion capture with optimized sensor layout. Int. J. Intell. Robot. Appl. 2019, 3, 19–32.

- Deiss, D.; Irace, C.; Carlson, G.; Tweden, K.S.; Kaufman, F.R. Real-world safety of an implantable continuous glucose sensor over multiple cycles of use: A post-market registry study. Diabetes Technol. Ther. 2020, 22, 48–52.

- Jones, S.; Dawkins, S. The sensorama revisited: Evaluating the application of multi-sensory input on the sense of presence in 360-degree immersive film in virtual reality. Augment. Real. Virtual Real. 2018, 7, 183–197.

- Zhang, F.; Wei, Q.; Xu, L. An fast simulation tool for fluid animation in vr application based on gpus. Multimed. Tools Appl. 2019, 1–24.

- Yu, J. A light-field journey to virtual reality. IEEE Multimed. 2017, 24, 104–112.

- Xu, Q.; Ragan, E.D. Effects of character guide in immersive virtual reality stories. In International Conference on Human-Computer Interaction; Springer: Cham, Switzerland, 2019; pp. 375–391.

- Wang, Y. Analysis of VR (Virtual Reality) Movie Sound Production Process. Adv. Motion Pict. Technol. 2017, 1, 22–28.

- Ben-Daya, M.; Hassini, E.; Bahroun, Z. Internet of things and supply chain management: A literature review. Int. J. Prod. Res. 2019, 57, 4719–4742.

- Hebling, E.D.; Partesotti, E.; Santana, C.P.; Figueiredo, A.; Dezotti, C.G.; Botechia, T.; Cielavin, S. MovieScape: Audiovisual Landscapes for Silent Movie: Enactive Experience in a Multimodal Installation. In Proceedings of the 9th International Conference on Digital and Interactive Arts, Braga, Portugal, 23–25 October 2019; pp. 1–7.

- Yu, T.; Zhao, J.; Huang, Y.; Li, Y.; Liu, Y. Towards Robust and Accurate Single-View Fast Human Motion Capture. IEEE Access 2019, 7, 85548–85559.

- Takahashi, K.; Mikami, D.; Isogawa, M.; Sun, S.; Kusachi, Y. Easy Extrinsic Calibration of VR System and Multi-camera Based Marker-Less Motion Capture System. In Proceedings of the 2019 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct), Beijing, China, 10–18 October 2019; pp. 83–88.

- Protopapadakis, E.; Voulodimos, A.; Doulamis, A.; Camarinopoulos, S.; Doulamis, N.; Miaoulis, G. Dance pose identification from motion capture data: A comparison of classifiers. Technologies 2018, 6, 31.

- Rose, T.; Nam, C.S.; Chen, K.B. Immersion of virtual reality for rehabilitation—Review. Appl. Ergon. 2018, 69, 153–161.

- Jinnai, Y.; Fukunaga, A. On hash-based work distribution methods for parallel best-first search. J. Artif. Intell. Res. 2017, 60, 491–548.

- Feng, X.; Li, S.; Yuan, C.; Zeng, P.; Sun, Y. Prediction of slope stability using naive bayes classifier. KSCE J. Civ. Eng. 2018, 22, 941–950.

- Choi, J.; Lee, K.K.; Choi, J. Determinants of User Satisfaction with Mobile VR Headsets: The Human Factors Approach by the User Reviews Analysis and Product Lab Testing. Int. J. Contents 2019, 15, 1–9.

- Rothe, S.; Brunner, H.; Buschek, D.; Hußmann, H. Spaceline: A Way of Interaction in Cinematic Virtual Reality. In Proceedings of the Symposium on Spatial User Interaction, Berlin, Germany, 13–14 October 2018; p. 179.

- Rothe, S.; Tran, K.; Hussmann, H. Positioning of Subtitles in Cinematic Virtual Reality. In Proceedings of the ICAT-EGVE, 2018, Limassol, Cyprus, 7–9 November 2018; pp. 1–8.

- Johnston, A.P.; Rae, J.; Ariotti, N.; Bailey, B.; Lilja, A.; Webb, R.; McGhee, J. Journey to the centre of the cell: Virtual reality immersion into scientific data. Traffic 2018, 19, 105–110.

- Hudson, S.; Matson-Barkat, S.; Pallamin, N.; Jegou, G. With or without you? Interaction and immersion in a virtual reality experience. J. Bus. Res. 2019, 100, 459–468.

- Shen, C.-W.; Ho, J.-T.; Ly, P.T.M.; Kuo, T.-C. Behavioural intentions of using virtual reality in learning: Perspectives of acceptance of information technology and learning style. Virtual Real. 2019, 23, 313–324.

- Shen, C.-W.; Luong, T.-H.; Ho, J.-T.; Djailani, I. Social media marketing of IT service companies: Analysis using a concept-linking mining approach. Ind. Mark. Manag. 2019.

- Shen, C.-W.; Min, C.; Wang, C.-C. Analyzing the trend of O2O commerce by bilingual text mining on social media. Comput. Hum. Behav. 2019, 101, 474–483.

| Field Name | Field Type | Field Length | Nullable | Description |
|---|---|---|---|---|
| ID | NUMBER | 10 | Yes | Record ID |
| RULE_NEME | VARCHAR | 200 | Yes | Name of the information extraction rule |
| RULE_TITLE | VARCHAR | 200 | Yes | Extraction rule for the article title |
| RULE_ABSTRA | VARCHAR | 200 | Yes | Extraction rule for the article abstract |
| RULE_IMG | VARCHAR | 200 | Yes | Extraction rule for the article figures |
| RULE_AUTHOR | VARCHAR | 200 | Yes | Extraction rule for the article authors |
| RULE_CONTENT | VARCHAR | 200 | Yes | Extraction rule for the article body text |
| CREATE_USER_ID | NUMBER | 10 | Yes | Creator ID |
| CREATE_TIME | DATETIME | 8 | No | Creation time |
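The rule table above stores one extraction rule per article field. The sketch below is a hedged illustration of how such a table could be created and used. It assumes that each `RULE_*` column holds a regular expression with a single capture group, although the source does not say what rule syntax is used (XPath or CSS selectors are equally plausible). The table name `extraction_rule`, the SQLite type mapping and the helper `apply_rule` are all hypothetical. Only the column names come from the table.

```python
import re
import sqlite3

# Column names follow the table above; the SQLite types are an assumed mapping
# (NUMBER -> INTEGER, VARCHAR -> TEXT, DATETIME -> ISO-8601 TEXT).
SCHEMA = """
CREATE TABLE IF NOT EXISTS extraction_rule (
    ID             INTEGER PRIMARY KEY,  -- assumed to be the primary key
    RULE_NEME      TEXT,
    RULE_TITLE     TEXT,
    RULE_ABSTRA    TEXT,
    RULE_IMG       TEXT,
    RULE_AUTHOR    TEXT,
    RULE_CONTENT   TEXT,
    CREATE_USER_ID INTEGER,
    CREATE_TIME    TEXT NOT NULL          -- the only non-nullable column
)
"""


def apply_rule(conn, rule_id, html):
    """Apply the stored title/author rules (assumed to be regexes) to an HTML page."""
    row = conn.execute(
        "SELECT RULE_TITLE, RULE_AUTHOR FROM extraction_rule WHERE ID = ?", (rule_id,)
    ).fetchone()
    if row is None:
        raise KeyError(rule_id)
    result = {}
    for field, pattern in zip(("title", "author"), row):
        match = re.search(pattern, html, re.S) if pattern else None
        result[field] = match.group(1).strip() if match else None
    return result


conn = sqlite3.connect(":memory:")
conn.execute(SCHEMA)
conn.execute(
    "INSERT INTO extraction_rule (ID, RULE_NEME, RULE_TITLE, RULE_AUTHOR, "
    "CREATE_USER_ID, CREATE_TIME) VALUES (?, ?, ?, ?, ?, ?)",
    (1, "demo rule", r"<h1>(.*?)</h1>", r'<span class="author">(.*?)</span>',
     7, "2020-05-01 00:00:00"),
)
html = '<h1> A VR Short Film </h1><span class="author">Q. Song</span>'
result = apply_rule(conn, 1, html)
print(result)  # prints {'title': 'A VR Short Film', 'author': 'Q. Song'}
```

Storing rules as data rather than code means a new site layout can be supported by inserting a row, with no change to the crawler itself.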

| Weak Learner ID | Root Mean Squared Error | Weight |
|---|---|---|
| 1 | 0.35 | 0.11 |
| 2 | 0.39 | 0.10 |
| 3 | 0.38 | 0.10 |
| 4 | 0.42 | 0.10 |
| 5 | 0.39 | 0.99 |
| 6 | 0.41 | 0.98 |
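The table lists a root mean squared error and a weight for each weak BP learner, but it does not show how the weights were obtained or how the learners are combined. The sketch below shows one common AdaBoost formulation. It is an assumption for illustration, not confirmed by the source, and it is not expected to reproduce the table's values. In this formulation, a learner's weighted error rate is the total sample weight on predictions whose absolute error exceeds a tolerance `tol`. Its weight is $a_t = \tfrac{1}{2}\ln\frac{1-e_t}{e_t}$, and sample weights are multiplied by $e^{a_t}$ (miss) or $e^{-a_t}$ (hit) and then renormalized. The final prediction is the $a_t$-weighted average of the learners' outputs. The helper names, `tol`, and the sample data are hypothetical, and training the BP networks themselves is omitted.

```python
import math


def learner_weight(error_rate):
    """AdaBoost learner weight a_t = 0.5 * ln((1 - e_t) / e_t)."""
    if not 0.0 < error_rate < 1.0:
        raise ValueError("error rate must lie strictly between 0 and 1")
    return 0.5 * math.log((1.0 - error_rate) / error_rate)


def weighted_error_rate(preds, targets, sample_weights, tol):
    """Total (normalized) sample weight on predictions whose absolute error exceeds tol."""
    return sum(w for p, y, w in zip(preds, targets, sample_weights) if abs(p - y) > tol)


def reweight(preds, targets, sample_weights, tol, alpha):
    """Scale misses by exp(alpha) and hits by exp(-alpha), then renormalize to sum to 1."""
    raw = [w * math.exp(alpha if abs(p - y) > tol else -alpha)
           for p, y, w in zip(preds, targets, sample_weights)]
    total = sum(raw)
    return [w / total for w in raw]


def ensemble_predict(learner_preds, alphas):
    """Strong predictor: alpha-weighted average of the weak learners' outputs per sample."""
    total = sum(alphas)
    n_samples = len(learner_preds[0])
    return [sum(a * preds[i] for a, preds in zip(alphas, learner_preds)) / total
            for i in range(n_samples)]


# One boosting round with hypothetical numbers.
targets = [0.10, 0.20, 0.30, 0.40]
sample_w = [0.25] * 4
preds_1 = [0.10, 0.20, 0.35, 0.40]  # hypothetical outputs of one trained BP network
e1 = weighted_error_rate(preds_1, targets, sample_w, tol=0.02)  # one miss -> 0.25
a1 = learner_weight(e1)                                          # 0.5 * ln(3)
sample_w = reweight(preds_1, targets, sample_w, tol=0.02, alpha=a1)
```

After reweighting, the missed sample carries half of the total weight. The next BP network is therefore trained with more emphasis on the samples the previous one predicted poorly. Learners with lower error receive larger $a_t$ and dominate the weighted average.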

| | Sample 1 | Sample 2 | Sample 3 | Sample 4 | Sample 5 |
|---|---|---|---|---|---|
| Expected values | 0.11 | 0.17 | 0.09 | 0.13 | 0.16 |
| Predicted values | 0.09 | 0.15 | 0.07 | 0.12 | 0.11 |
| Error values | 0.15 | 0.14 | 0.09 | 0.06 | 0.18 |

| Motion | Wave | Leaning | Squat | Raising Hands | Stepping |
|---|---|---|---|---|---|
| Recognition accuracy | 90.26% | 100.00% | 98.17% | 98.26% | 90.87% |

| Criterion | Video Stream Template Matching | DTW | FSM | Proposed Method |
|---|---|---|---|---|
| Recognition accuracy | 86.37% | 95.97% | 95.33% | 91.37% |
| Speed | Slow | Relatively fast | Relatively fast | Fast |
| Offline training | Yes | Yes | No | No |
| Extensibility | Poor | Relatively poor | Good | Good |
| Applicable motions | 2D, simple | Complex, continuous, standard | Simple, continuous, definition | Simple, continuous/non-continuous |
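DTW (dynamic time warping), one of the baselines compared above, recognizes a motion by aligning the observed sequence of body features against stored templates and picking the template with the smallest alignment cost. The recorded templates are why the table marks it as needing offline training. The sketch below shows the classic DTW recurrence with nearest-template classification. It is not the authors' baseline implementation. The feature choice (a single hip-height value per frame) and the template values are hypothetical.

```python
import math


def dtw_distance(seq_a, seq_b):
    """Classic dynamic time warping distance between two sequences of feature tuples.

    The local cost is the Euclidean distance between frames; the warping path may
    advance in either sequence or in both at once (insertion, deletion, match).
    """
    n, m = len(seq_a), len(seq_b)
    inf = float("inf")
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = math.dist(seq_a[i - 1], seq_b[j - 1])
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]


def classify(sequence, templates):
    """Return the label of the template with the smallest DTW distance to the sequence."""
    return min(templates, key=lambda label: dtw_distance(sequence, templates[label]))


# Hypothetical templates: normalized hip height per frame.
templates = {
    "squat": [(1.0,), (0.7,), (0.5,), (0.7,), (1.0,)],
    "stepping": [(1.0,), (1.0,), (1.0,), (1.0,)],
}
# A slower squat with extra frames; DTW absorbs the difference in speed.
observed = [(1.0,), (0.9,), (0.7,), (0.5,), (0.5,), (0.7,), (1.0,)]
print(classify(observed, templates))  # prints squat
```

The quadratic cost table per template explains why template-based matching scales poorly as the motion vocabulary grows, consistent with the "Relatively poor" extensibility listed for DTW.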

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Song, Q.; Wook, Y.S. Exploration of the Application of Virtual Reality and Internet of Things in Film and Television Production Mode. Appl. Sci. 2020, 10, 3450. https://doi.org/10.3390/app10103450

Song Q, Wook YS. Exploration of the Application of Virtual Reality and Internet of Things in Film and Television Production Mode. Applied Sciences. 2020; 10(10):3450. https://doi.org/10.3390/app10103450

Chicago/Turabian Style: Song, Qian, and Yoo Sang Wook. 2020. "Exploration of the Application of Virtual Reality and Internet of Things in Film and Television Production Mode" Applied Sciences 10, no. 10: 3450. https://doi.org/10.3390/app10103450

APA Style: Song, Q., & Wook, Y. S. (2020). Exploration of the Application of Virtual Reality and Internet of Things in Film and Television Production Mode. Applied Sciences, 10(10), 3450. https://doi.org/10.3390/app10103450