A Review of Convolutional Neural Network Applied to Fruit Image Processing

Abstract

1. Introduction

- To the best of our knowledge, the present paper is the first study that extensively reviews the application of CNN-based models to fruit image processing.

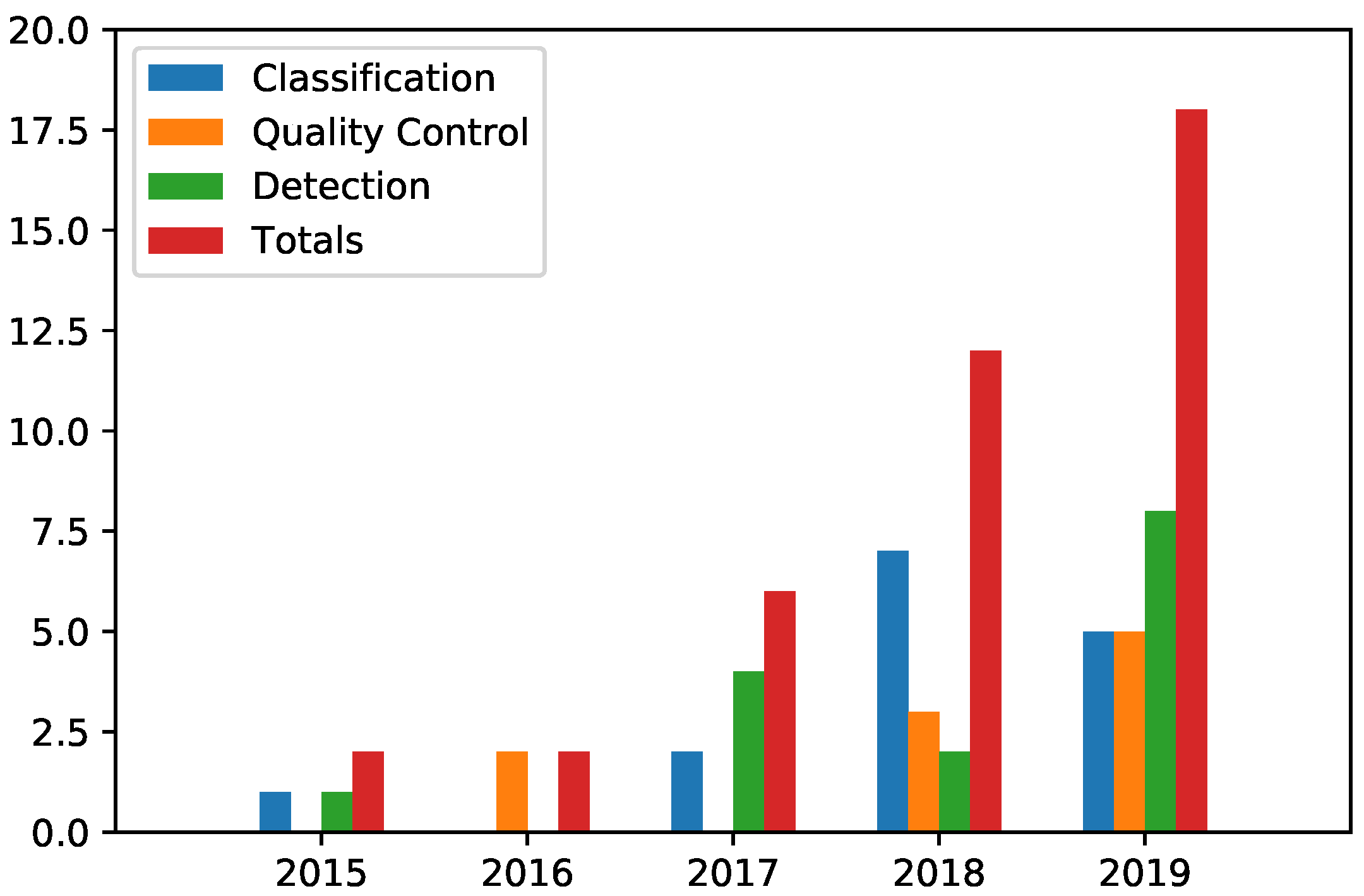

- Our study covers very recent literature from 2015 to the present, due to the novelty of the use of CNNs in the studied area.

- We summarize the main aspects, properties, and results of the collected works on three main areas of the agri-food industry related to fruit classification, fruit quality control, and fruit detection.

- To give a better understanding of how CNN models are implemented, we present a theoretical background on CNNs and also provide two practical examples of CNN models for fruit classification.

2. Preliminaries

3. Background on Convolutional Neural Networks

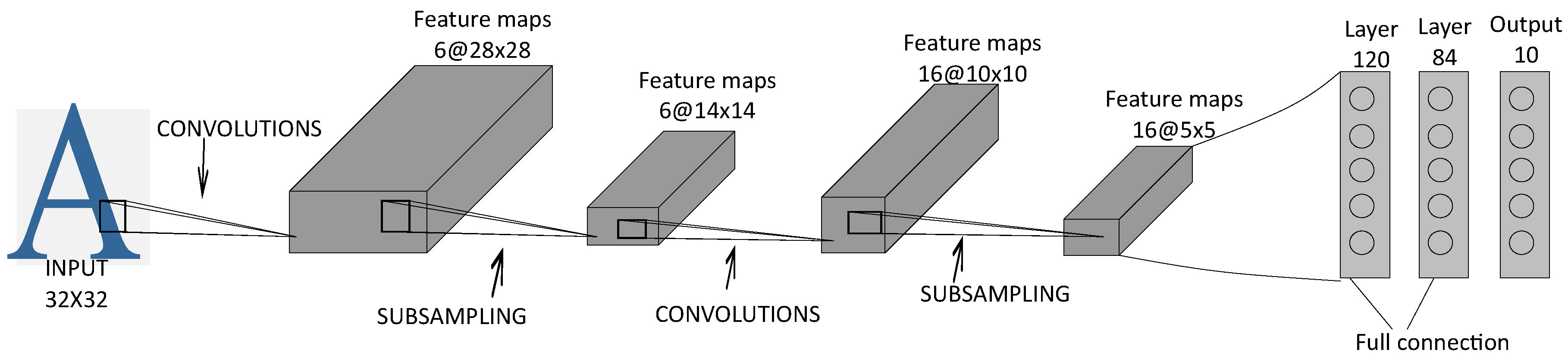

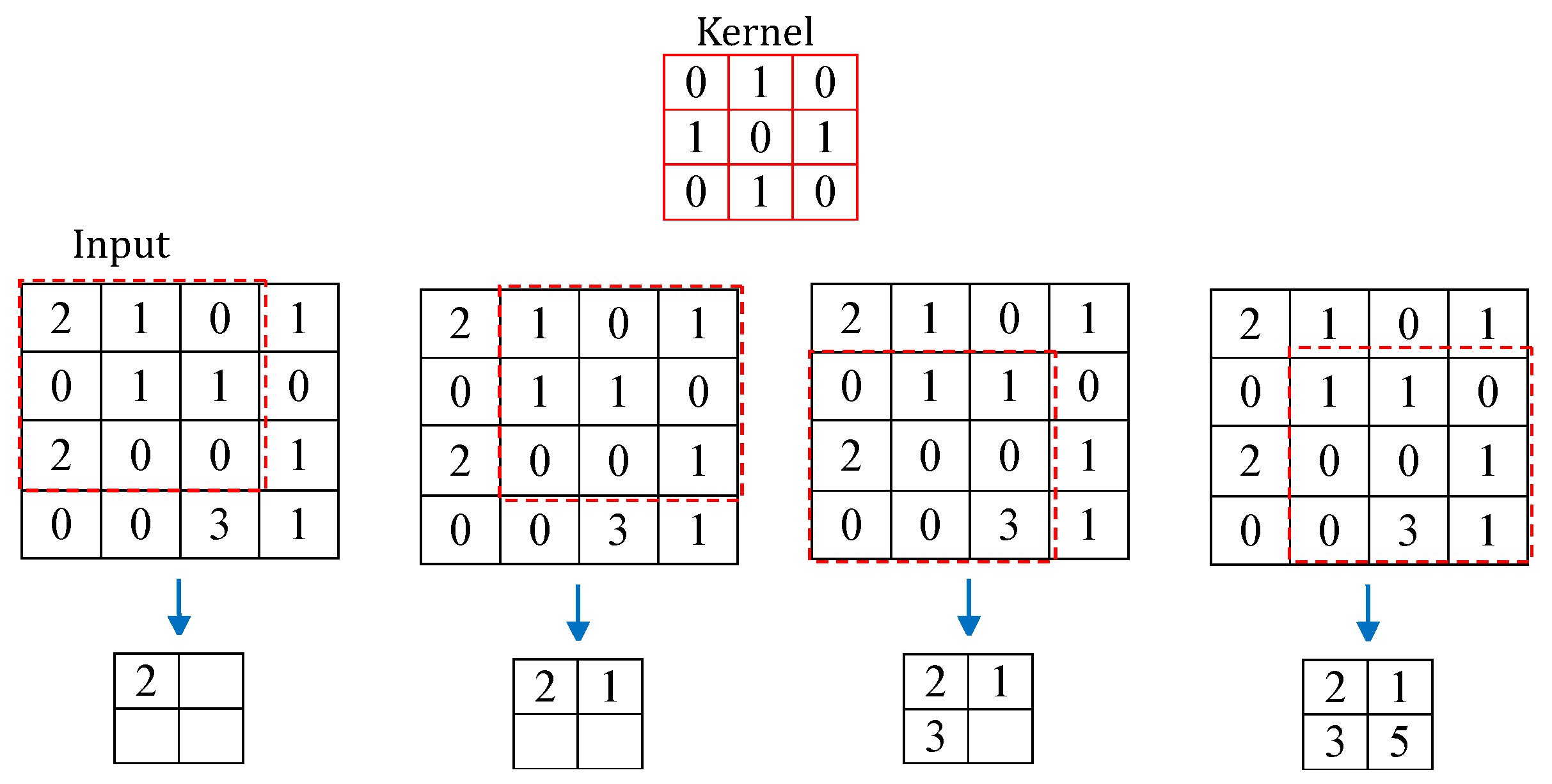

3.1. CNN Architecture

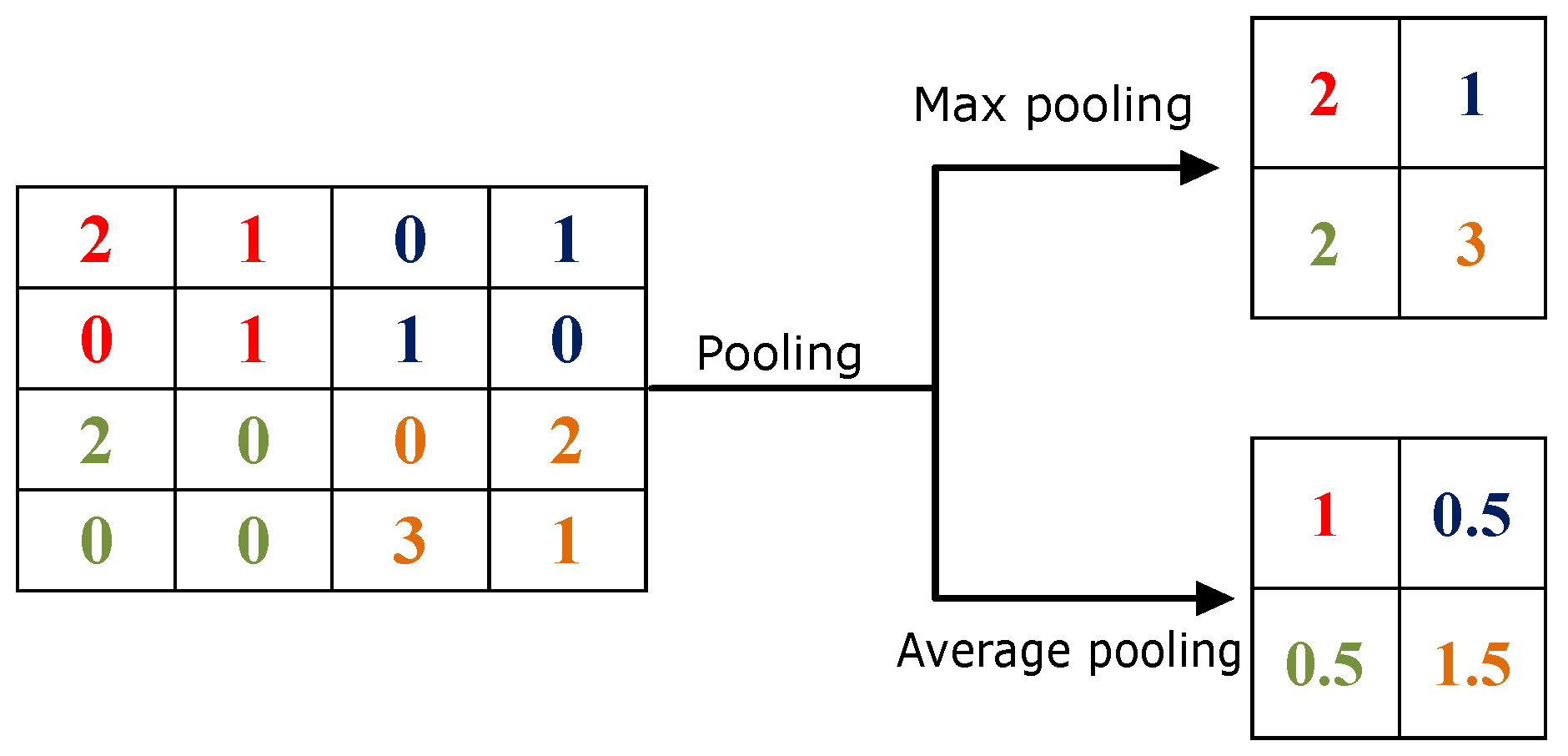

- Max pooling: it calculates the maximum value for each patch of the input [48,49]. The max-pooling layer slides a filter over the feature map and preserves the maximum value of each patch. Mathematically, for a pooling region $R_{i,j}$ of the feature map $x$, it has the form $y_{i,j} = \max_{(p,q) \in R_{i,j}} x_{p,q}$. Commonly, $2 \times 2$ filters are applied with a stride of 2 in a max-pooling layer, which downsamples the input by 2 along each spatial dimension and discards 75% of the convolutional outputs.
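The max-pooling operation described above can be sketched in a few lines of NumPy. This is only an illustrative sketch, not the implementation used in the reviewed works: the function name `max_pool2d`, the 2 x 2 window, and the toy 4 x 4 feature map are assumptions chosen for the example.

```python
import numpy as np


def max_pool2d(feature_map, size=2, stride=2):
    """Slide a size x size window over a 2-D feature map and keep each patch's maximum."""
    height, width = feature_map.shape
    out_h = (height - size) // stride + 1
    out_w = (width - size) // stride + 1
    pooled = np.empty((out_h, out_w), dtype=feature_map.dtype)
    for i in range(out_h):
        for j in range(out_w):
            patch = feature_map[i * stride:i * stride + size,
                                j * stride:j * stride + size]
            pooled[i, j] = patch.max()
    return pooled


# Toy 4 x 4 feature map (hypothetical values).
feature_map = np.array([[1, 3, 2, 0],
                        [4, 6, 5, 1],
                        [7, 2, 9, 8],
                        [0, 1, 3, 4]])
pooled = max_pool2d(feature_map)
discarded = 1 - pooled.size / feature_map.size

print(pooled)      # 2 x 2 map holding the maximum of each patch
print(discarded)   # fraction of input values that are dropped
```

With a stride of 2 and a 2 x 2 window, each output value summarizes four inputs, which is why three quarters of the values are dropped.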

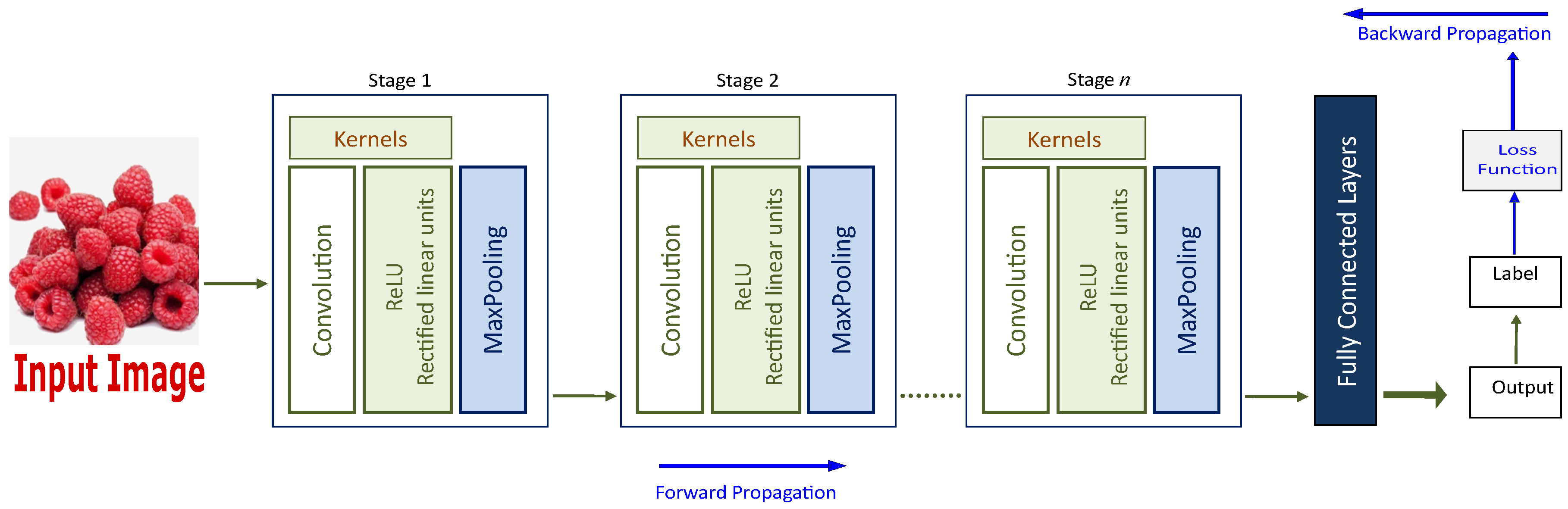

3.2. Training Process of CNN

- Select a training dataset of images, usually fed to the network in smaller batches.

- Pass each batch through the network and obtain the output.

- Compute the error between the given labels and the output predictions by using a loss function L.

- Propagate the error throughout the network by the backpropagation algorithm.

- Update the weights W to minimize the error.

- Repeat until convergence or until an iteration limit is reached.

- Define the CNN architecture: this consists of establishing the number of layers of each type, as well as the size and number of filters for each layer. The architecture design always depends on the objective of the CNN.

- Loss function: it measures the difference between the given ground-truth labels and the outputs of the network. Typically, the Mean Squared Error function is applied, and it is given by $L = \frac{1}{N}\sum_{i=1}^{N} (y_i - \hat{y}_i)^2$, where $y_i$ is the ground-truth label, $\hat{y}_i$ is the network output, and $N$ is the number of samples. Hence, L must be minimized by finding the contribution of each weight to the error and optimizing the weights accordingly. The gradient descent algorithm is widely adopted for this minimization; it relies on the partial derivative of the loss function with respect to each weight. The parameter update is then formulated as follows [19]: $W \leftarrow W - \eta \frac{\partial L}{\partial W}$, where $\eta$ denotes the learning rate. Thus, the learning rate is a very important hyper-parameter and must be set before starting the training process. It should be noted that a lower learning rate can give a more accurate result, but the network may take longer to train.

- Training dataset: the available data is generally divided into three subsets: a training set to train the network, a validation set to evaluate the model during the training process, and a testing set to evaluate the final trained model. Most CNN frameworks require that all training data have the same shape (i.e., dimensions). Therefore, pre-processing and normalizing the data is the first step before the training process.
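The training steps listed above (batching, forward pass, loss computation, gradient computation, weight update, and repetition until convergence) can be illustrated with a minimal NumPy sketch. To keep the example short and runnable, a single linear layer stands in for a full CNN, so the gradient is written out by hand instead of being obtained by backpropagation through several layers. The synthetic data, the 70/15/15 split, and all hyper-parameter values are assumptions made for illustration only.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic stand-in data: 300 samples, 5 features, noisy linear targets.
X = rng.normal(size=(300, 5))
true_W = np.array([1.5, -2.0, 0.5, 0.0, 3.0])
y = X @ true_W + 0.01 * rng.normal(size=300)

# Training, validation, and test subsets (the 70/15/15 ratio is an assumption).
X_train, y_train = X[:210], y[:210]
X_val, y_val = X[210:255], y[210:255]
X_test, y_test = X[255:], y[255:]


def mse(W, X, y):
    """Mean squared error between the predictions X @ W and the labels y."""
    return np.mean((X @ W - y) ** 2)


W = np.zeros(5)          # weights to be learned
learning_rate = 0.05     # hyper-parameter fixed before training starts
batch_size = 30
max_epochs = 200         # iteration limit
tolerance = 1e-6         # threshold for the convergence check

previous_val_loss = np.inf
for epoch in range(max_epochs):
    order = rng.permutation(len(X_train))
    for start in range(0, len(order), batch_size):
        batch = order[start:start + batch_size]
        predictions = X_train[batch] @ W                      # forward pass
        error = predictions - y_train[batch]
        gradient = 2 * X_train[batch].T @ error / len(batch)  # dL/dW for the MSE loss
        W = W - learning_rate * gradient                      # gradient-descent update
    val_loss = mse(W, X_val, y_val)                           # monitor on validation set
    if abs(previous_val_loss - val_loss) < tolerance:         # stop once the loss settles
        break
    previous_val_loss = val_loss

test_loss = mse(W, X_test, y_test)                            # final evaluation
print(f"stopped after {epoch + 1} epochs, test MSE = {test_loss:.5f}")
```

In a real CNN the hand-written gradient line would be replaced by the automatic differentiation of a deep learning framework, but the loop structure stays the same.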

3.3. Transfer Learning with CNN

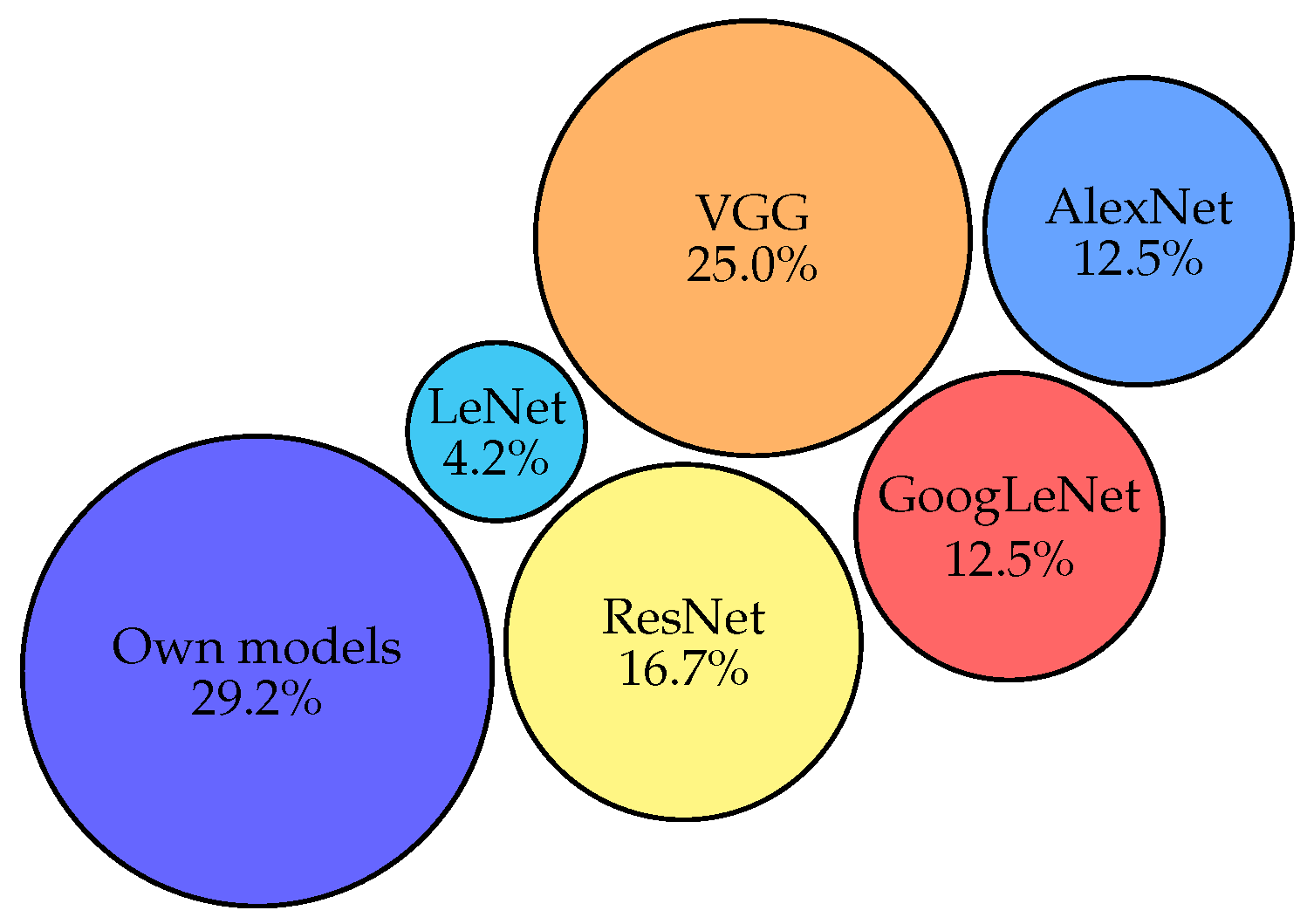

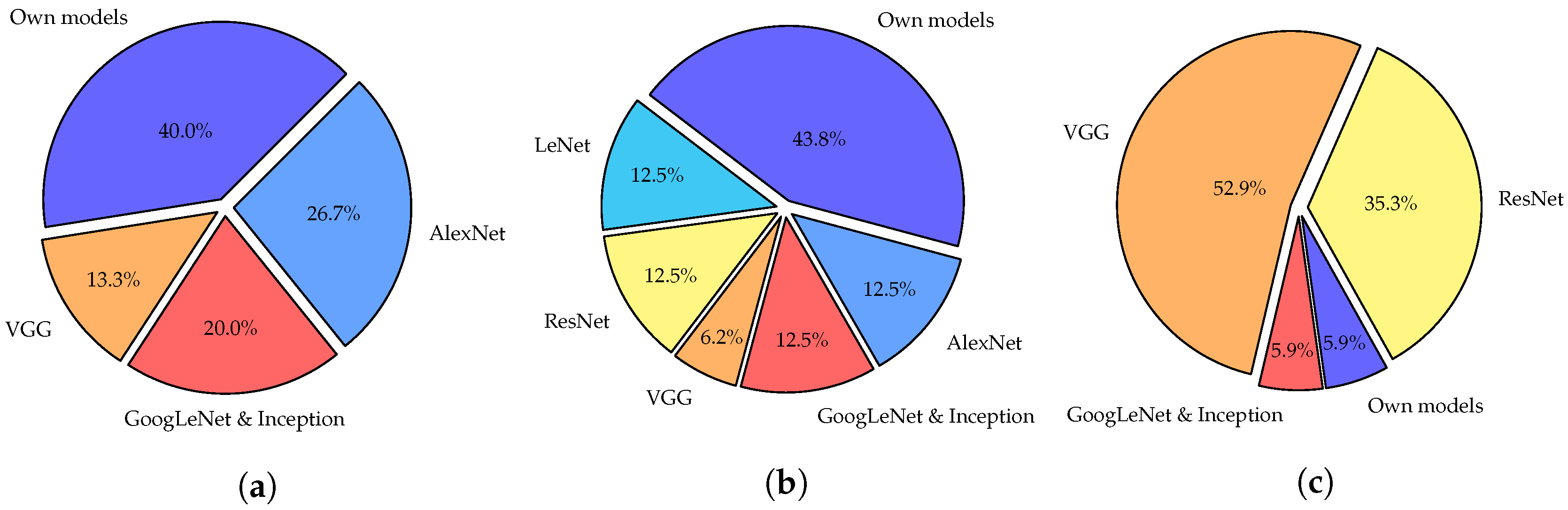

4. CNN-Based Approaches for Fruit Classification Tasks

5. CNN-Based Approaches for Fruit Quality Control Tasks

6. CNN-Based Approaches for Fruit Detection

7. Discussion on the Review of CNN-Based Approaches for Fruit Image Processing

Challenges and Future Research Directions

- Size of the datasets: the dataset must be sufficiently large and well labeled to train the CNN, to address overfitting problems, and to perform the assigned task efficiently. Therefore, preparing the dataset is one of the activities that requires the most time and effort when applying CNNs. Although the authors have proposed a wide variety of databases, not all of them are available; for this reason, the reproducibility of all studies is not entirely guaranteed. In addition, in many cases the databases are collected specifically for the task at hand.

- Search of CNN parameters: choosing the number of layers and filters when proposing a CNN architecture for a specific problem, as well as determining the parameters and hyperparameters of the model, remains a relevant problem that is commonly solved by trial-and-error tuning until the best settings are found, which is very time-consuming for very deep models. At this point, pre-trained CNN models are a great help, since they can be taken as the basic design of other CNNs. Besides, other recent approaches, such as the Multi-layer Extreme Learning Machine [98], could be evaluated with the aim of reducing the computation time for tuning network parameters and the amount of data needed for training.

- Multi-fruit classification: in the fruit classification studies, we found that no evaluation has been carried out with multiple types of fruit in the same image; the studies are limited to images with a single kind of fruit, either individually or grouped. Thus, the challenge is to design a CNN model for the simultaneous detection and classification of different kinds of fruit.

- Pre-processing of fruit images for quality control: almost all the quality control works were carried out under laboratory conditions using sensors that are not ready for real conditions. Hence, extensive pre-processing procedures are required in all cases, making them very hard to implement efficiently in real-world scenarios.

8. Deep Learning Frameworks and CNN-Based Examples

8.1. CNN Frameworks

- TensorFlow [99]: an open-source ML library developed by Google, which provides a collection of workflows to develop and train models using Python, C++, JavaScript, or Java.

- Caffe [52]: Convolutional Architecture for Fast Feature Embedding (Caffe) is a DL framework developed by Berkeley AI Research (BAIR) at UC Berkeley. It is open-source, under a BSD license. It is written in C++, with a Python interface.

- Theano [100]: a Python library that allows users to define, optimize, and evaluate mathematical expressions involving multi-dimensional arrays efficiently. It has been one of the most used CPU and GPU mathematical compilers, especially in machine learning.

- PyTorch [101]: an ML library based on Torch and Caffe2, which is used by Facebook and IBM, among others. It provides a Python user interface, whereas the original Torch library used the Lua programming language. It is open-source and well supported on major cloud platforms, providing frictionless development and easy scaling.

- MATLAB Deep Learning Toolbox [102]: a MATLAB toolbox that provides a framework for designing and implementing deep neural networks with algorithms, pre-trained models, and apps. It can exchange models with TensorFlow and PyTorch, and also import models from TensorFlow-Keras and Caffe.

- MatConvNet [103]: a MATLAB toolbox implementing CNNs for computer vision applications. It can run state-of-the-art CNN models and provides pre-trained CNNs for image classification, segmentation, face recognition, and text detection.

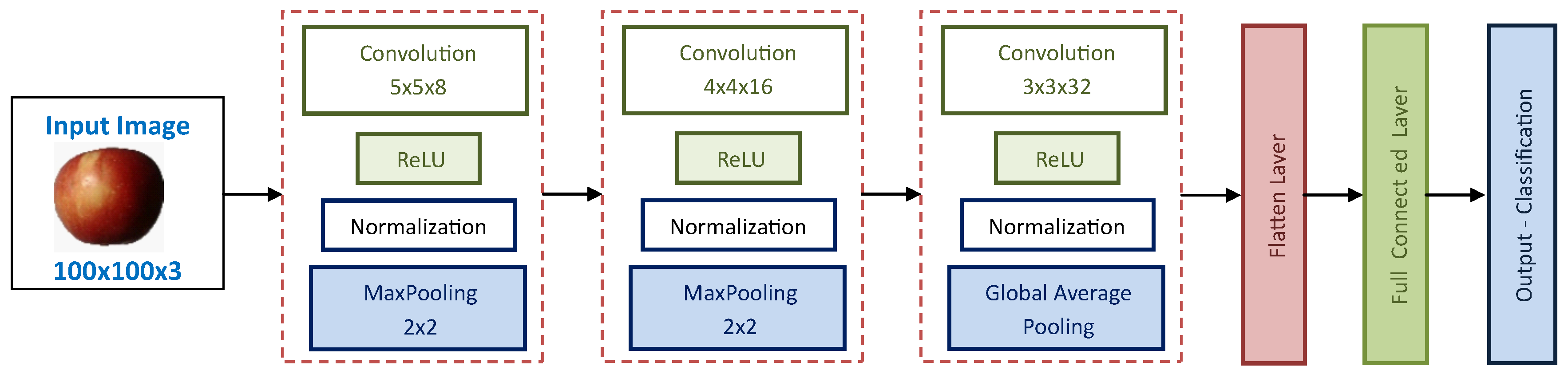

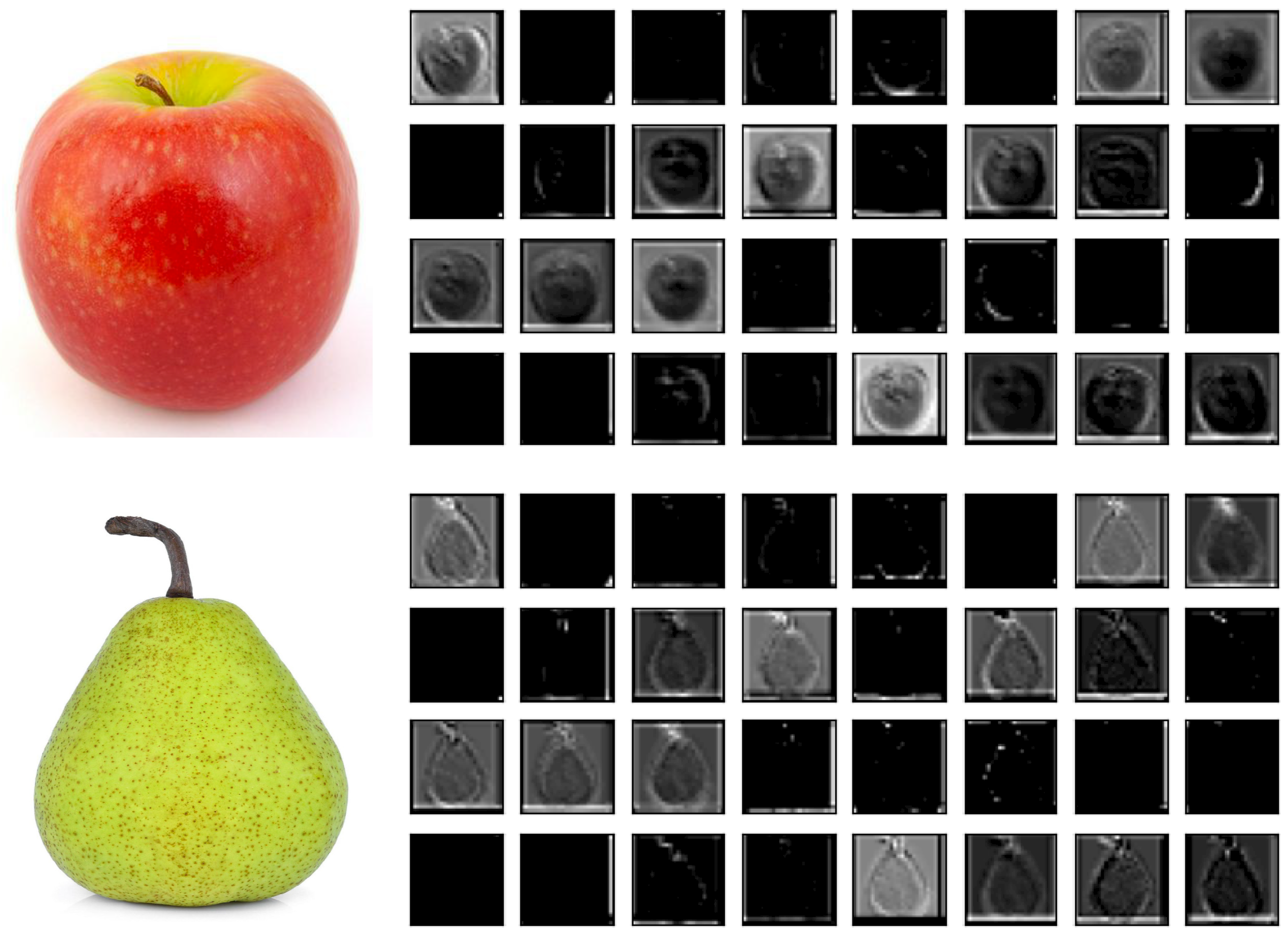

8.2. CNN-Based Examples for Fruit Classification

8.2.1. Example of Fruit Classification

8.2.2. Example of Fruit Quality Classification

- Rotation within a fixed range of degrees.

- Width and/or height shifting by a fraction of the image dimensions.

- Zooming of the image within a fixed range.

- Horizontal and/or vertical flipping.
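Two of the augmentation operations listed above, flipping and shifting, can be sketched with plain NumPy as follows. This is a hedged illustration only: the function name `random_flip_and_shift`, the 10% maximum shift, and the zero-filling of vacated pixels are assumptions, and rotation and zoom are left out because they need an interpolation routine.

```python
import numpy as np


def random_flip_and_shift(image, rng, max_shift_fraction=0.1):
    """Return a randomly flipped and shifted copy of an H x W x C image.

    max_shift_fraction is an illustrative value, not taken from the text.
    """
    augmented = image.copy()
    if rng.random() < 0.5:
        augmented = augmented[:, ::-1]      # horizontal flip
    if rng.random() < 0.5:
        augmented = augmented[::-1, :]      # vertical flip

    height, width = augmented.shape[:2]
    max_dy = int(max_shift_fraction * height)
    max_dx = int(max_shift_fraction * width)
    dy = int(rng.integers(-max_dy, max_dy + 1))   # vertical shift in pixels
    dx = int(rng.integers(-max_dx, max_dx + 1))   # horizontal shift in pixels

    shifted = np.zeros_like(augmented)      # vacated pixels are zero-filled
    src_rows = slice(max(0, -dy), min(height, height - dy))
    dst_rows = slice(max(0, dy), min(height, height + dy))
    src_cols = slice(max(0, -dx), min(width, width - dx))
    dst_cols = slice(max(0, dx), min(width, width + dx))
    shifted[dst_rows, dst_cols] = augmented[src_rows, src_cols]
    return shifted


# Hypothetical 100 x 100 RGB image filled with random pixel values.
rng = np.random.default_rng(42)
image = rng.integers(0, 256, size=(100, 100, 3), dtype=np.uint8)
batch = [random_flip_and_shift(image, rng) for _ in range(4)]
print([augmented.shape for augmented in batch])
```

Each call returns a new variant with the same shape as the input, so the augmented images can be mixed directly with the original training data.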

9. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Abdullahi, H.S.; Sheriff, R.; Mahieddine, F. Convolution neural network in precision agriculture for plant image recognition and classification. In Proceedings of the IEEE 2017 Seventh International Conference on Innovative Computing Technology (Intech), Porto, Portugal, 12–13 July 2017; pp. 1–3.

2. Annabel, L.S.P.; Annapoorani, T.; Deepalakshmi, P. Machine Learning for Plant Leaf Disease Detection and Classification–A Review. In Proceedings of the IEEE 2019 International Conference on Communication and Signal Processing (ICCSP), Chennai, India, 4–6 April 2019; pp. 538–542.

3. Agarwal, M.; Kaliyar, R.K.; Singal, G.; Gupta, S.K. FCNN-LDA: A Faster Convolution Neural Network model for Leaf Disease identification on Apple’s leaf dataset. In Proceedings of the IEEE 2019 12th International Conference on Information & Communication Technology and System (ICTS), Surabaya, Indonesia, 18 July 2019; pp. 246–251.

4. Perez, R.M.; Cheein, F.A.; Rosell-Polo, J.R. Flexible system of multiple RGB-D sensors for measuring and classifying fruits in agri-food Industry. Comput. Electron. Agric. 2017, 139, 231–242.

5. Rocha, A.; Hauagge, D.C.; Wainer, J.; Goldenstein, S. Automatic fruit and vegetable classification from images. Comput. Electron. Agric. 2010, 70, 96–104.

6. Capizzi, G.; Sciuto, G.L.; Napoli, C.; Tramontana, E.; Woźniak, M. Automatic classification of fruit defects based on co-occurrence matrix and neural networks. In Proceedings of the IEEE 2015 Federated Conference on Computer Science and Information Systems (FedCSIS), Lodz, Poland, 13–16 September 2015; pp. 861–867.

7. Rachmawati, E.; Supriana, I.; Khodra, M.L. Toward a new approach in fruit recognition using hybrid RGBD features and fruit hierarchy property. In Proceedings of the 2017 IEEE 4th International Conference on Electrical Engineering, Computer Science and Informatics (EECSI), Yogyakarta, Indonesia, 19–21 September 2017; pp. 1–6.

8. Tao, Y.; Zhou, J. Automatic apple recognition based on the fusion of color and 3D feature for robotic fruit picking. Comput. Electron. Agric. 2017, 142, 388–396.

9. Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016.

10. Coppin, B. Artificial Intelligence Illuminated; Jones & Bartlett Learning: Burlington, MA, USA, 2004.

11. Jordan, M.I.; Mitchell, T.M. Machine learning: Trends, perspectives, and prospects. Science 2015, 349, 255–260.

12. LeCun, Y.; Bengio, Y.; Hinton, G. Deep Learning. Nature 2015, 521, 436–444.

13. Wang, W.; Siau, K. Artificial intelligence, machine learning, automation, robotics, future of work and future of humanity: A review and research agenda. J. Database Manag. 2019, 30, 61–79.

14. Samuel, A.L. Some studies in machine learning using the game of checkers. IBM J. Res. Dev. 2000, 44, 206–226.

15. Liu, W.; Wang, Z.; Liu, X.; Zeng, N.; Liu, Y.; Alsaadi, F.E. A survey of deep neural network architectures and their applications. Neurocomputing 2017, 234, 11–26.

16. Gewali, U.B.; Monteiro, S.T.; Saber, E. Machine learning based hyperspectral image analysis: A survey. arXiv 2018, arXiv:1802.08701.

17. Femling, F.; Olsson, A.; Alonso-Fernandez, F. Fruit and Vegetable Identification Using Machine Learning for Retail Applications. In Proceedings of the IEEE 2018 14th International Conference on Signal-Image Technology & Internet-Based Systems (SITIS), Las Palmas de Gran Canaria, Spain, 26–29 November 2018; pp. 9–15.

18. Singh, R.; Balasundaram, S. Application of extreme learning machine method for time series analysis. Int. J. Intell. Technol. 2007, 2, 256–262.

19. LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

20. Guo, Y.; Liu, Y.; Oerlemans, A.; Lao, S.; Wu, S.; Lew, M.S. Deep learning for visual understanding: A review. Neurocomputing 2016, 187, 27–48.

21. Zeiler, M.D.; Fergus, R. Visualizing and Understanding Convolutional Networks. In Computer Vision—ECCV 2014; Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T., Eds.; Springer International Publishing: Cham, Switzerland, 2014; pp. 818–833.

22. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. In Proceedings of the 25th International Conference on Neural Information Processing Systems—Volume 1; NIPS’12; Curran Associates Inc.: Red Hook, NY, USA, 2012; pp. 1097–1105.

23. Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; et al. Imagenet large scale visual recognition challenge. Int. J. Comput. Vis. 2015, 115, 211–252.

24. Lu, Y. Food image recognition by using convolutional neural networks (CNNs). arXiv 2019, arXiv:1612.00983.

25. Zhang, Y.D.; Dong, Z.; Chen, X.; Jia, W.; Du, S.; Muhammad, K.; Wang, S.H. Image based fruit category classification by 13-layer deep convolutional neural network and data augmentation. Multim. Tools Appl. 2019, 78, 3613–3632.

26. Steinbrener, J.; Posch, K.; Leitner, R. Hyperspectral fruit and vegetable classification using convolutional neural networks. Comput. Electron. Agric. 2019, 162, 364–372.

27. Chen, S.W.; Shivakumar, S.S.; Dcunha, S.; Das, J.; Okon, E.; Qu, C.; Taylor, C.J.; Kumar, V. Counting apples and oranges with deep learning: A data-driven approach. IEEE Robot. Autom. Lett. 2017, 2, 781–788.

28. Bargoti, S.; Underwood, J. Deep fruit detection in orchards. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Marina Bay Sands Singapore, Singapore, 29 May–3 June 2017; pp. 3626–3633.

29. Liu, F.; Snetkov, L.; Lima, D. Summary on fruit identification methods: A literature review. In Proceedings of the 2017 3rd International Conference on Economics, Social Science, Arts, Education and Management Engineering (ESSAEME 2017), Huhhot, China, 29–30 July 2017; Atlantis Press SARL: Paris, France, 2017.

30. Zhang, Y.; Wang, S.; Ji, G.; Phillips, P. Fruit classification using computer vision and feedforward neural network. J. Food Eng. 2014, 143, 167–177.

31. Zhang, Y.; Phillips, P.; Wang, S.; Ji, G.; Yang, J.; Wu, J. Fruit classification by biogeography-based optimization and feedforward neural network. Exp. Syst. 2016, 33, 239–253.

32. Wang, S.; Zhang, Y.; Ji, G.; Yang, J.; Wu, J.; Wei, L. Fruit classification by wavelet-entropy and feedforward neural network trained by fitness-scaled chaotic ABC and biogeography-based optimization. Entropy 2015, 17, 5711–5728.

33. Naik, S.; Patel, B. Machine Vision based Fruit Classification and Grading-A Review. Int. J. Comput. Appl. 2017, 170, 22–34.

34. Zhu, N.; Liu, X.; Liu, Z.; Hu, K.; Wang, Y.; Tan, J.; Huang, M.; Zhu, Q.; Ji, X.; Jiang, Y.; et al. Deep learning for smart agriculture: Concepts, tools, applications, and opportunities. Int. J. Agric. Biol. Eng. 2018, 11, 32–44.

35. Bhargava, A.; Bansal, A. Fruits and vegetables quality evaluation using computer vision: A review. J. King Saud Univ. Comput. Inf. Sci. 2018, in press. Available online: https://doi.org/10.1016/j.jksuci.2018.06.002 (accessed on 5 June 2018).

36. Sa, I.; Ge, Z.; Dayoub, F.; Upcroft, B.; Perez, T.; McCool, C. Deepfruits: A fruit detection system using deep neural networks. Sensors 2016, 16, 1222.

37. Hameed, K.; Chai, D.; Rassau, A. A comprehensive review of fruit and vegetable classification techniques. Image Vis. Comput. 2018, 80, 24–44.

38. Li, S.; Luo, H.; Hu, M.; Zhang, M.; Feng, J.; Liu, Y.; Dong, Q.; Liu, B. Optical non-destructive techniques for small berry fruits: A review. Artif. Intell. Agric. 2019, 2, 85–98.

39. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

40. Zhang, W.; Li, C.; Peng, G.; Chen, Y.; Zhang, Z. A deep convolutional neural network with new training methods for bearing fault diagnosis under noisy environment and different working load. Mech. Syst. Signal Proc. 2018, 100, 439–453.

41. Cascio, D.; Taormina, V.; Raso, G. Deep Convolutional Neural Network for HEp-2 Fluorescence Intensity Classification. Appl. Sci. 2019, 9, 408.

42. LeCun, Y.; Boser, B.E.; Denker, J.S.; Henderson, D.; Howard, R.E.; Hubbard, W.E.; Jackel, L.D. Handwritten digit recognition with a back-propagation network. In Advances in Neural Information Processing Systems; Morgan Kaufmann: Burlington, MA, USA, 1990; pp. 396–404. ISBN 1-55860-100-7.

43. LeCun, Y.; Kavukcuoglu, K.; Farabet, C. Convolutional networks and applications in vision. In Proceedings of the IEEE 2010 IEEE International Symposium on Circuits and Systems, Paris, France, 30 May–2 June 2010; pp. 253–256.

44. Dumoulin, V.; Visin, F. A guide to convolution arithmetic for deep learning. arXiv 2016, arXiv:1603.07285.

45. Yamashita, R.; Nishio, M.; Do, R.K.G.; Togashi, K. Convolutional neural networks: An overview and application in radiology. Insights Imag. 2018, 9, 611–629.

46. Nair, V.; Hinton, G.E. Rectified linear units improve restricted boltzmann machines. In Proceedings of the 27th International Conference On Machine Learning (ICML-10), Haifa, Israel, 21–24 June 2010; pp. 807–814.

47. Gu, J.; Wang, Z.; Kuen, J.; Ma, L.; Shahroudy, A.; Shuai, B.; Liu, T.; Wang, X.; Wang, G.; Cai, J.; et al. Recent advances in convolutional neural networks. Pattern Recognit. 2018, 77, 354–377.

48. Scherer, D.; Müller, A.; Behnke, S. Evaluation of pooling operations in convolutional architectures for object recognition. In International Conference on Artificial Neural Networks; Springer: Berlin, Germany, 2010; pp. 92–101.

49. Lee, C.Y.; Gallagher, P.W.; Tu, Z. Generalizing pooling functions in convolutional neural networks: Mixed, gated, and tree. Artif. Intell. Stat. 2016, 464–472.

50. Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9.

51. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

52. Jia, Y.; Shelhamer, E.; Donahue, J.; Karayev, S.; Long, J.; Girshick, R.; Guadarrama, S.; Darrell, T. Caffe: Convolutional Architecture for Fast Feature Embedding. arXiv 2014, arXiv:1408.5093.

53. Katarzyna, R.; Paweł, M. A Vision-Based Method Utilizing Deep Convolutional Neural Networks for Fruit Variety Classification in Uncertainty Conditions of Retail Sales. Appl. Sci. 2019, 9, 3971.

54. Sakib, S.; Ashrafi, Z.; Siddique, M.A.B. Implementation of Fruits Recognition Classifier using Convolutional Neural Network Algorithm for Observation of Accuracies for Various Hidden Layers. arXiv 2019, arXiv:1904.00783.

55. Mureşan, H.; Oltean, M. Fruit recognition from images using deep learning. Acta Univ. Sapientiae Inform. 2018, 10, 26–42.

56. Zhu, L.; Li, Z.; Li, C.; Wu, J.; Yue, J. High performance vegetable classification from images based on alexnet deep learning model. Int. J. Agric. Biol. Eng. 2018, 11, 217–223.

57. Hussain, I.; He, Q.; Chen, Z. Automatic fruit recognition based on dcnn for commercial source trace system. Int. J. Comput. Sci. Appl. IJCSA 2018, 8.

58. Lu, S.; Lu, Z.; Aok, S.; Graham, L. Fruit classification based on six layer convolutional neural network. In Proceedings of the 2018 IEEE 23rd International Conference on Digital Signal Processing (DSP), Shanghai, China, 19–21 November 2018; pp. 1–5.

59. Patino-Saucedo, A.; Rostro-Gonzalez, H.; Conradt, J. Tropical Fruits Classification Using an AlexNet-Type Convolutional Neural Network and Image Augmentation. In International Conference on Neural Information Processing; Springer: Berlin, Germany, 2018; pp. 371–379.

60. Wang, S.H.; Chen, Y. Fruit category classification via an eight-layer convolutional neural network with parametric rectified linear unit and dropout technique. Multim. Tools Appl. 2018, 1–17.

61. Zeng, G. Fruit and vegetables classification system using image saliency and convolutional neural network. In Proceedings of the 2017 IEEE 3rd Information Technology and Mechatronics Engineering Conference (ITOEC), Chongqing, China, 3–5 October 2017; pp. 613–617.

62. Hou, S.; Feng, Y.; Wang, Z. Vegfru: A domain-specific dataset for fine-grained visual categorization. In Proceedings of the 2017 IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 541–549.

63. Zhang, W.; Zhao, D.; Gong, W.; Li, Z.; Lu, Q.; Yang, S. Food image recognition with convolutional neural networks. In 2015 IEEE 12th Intl Conf on Ubiquitous Intelligence and Computing and 2015 IEEE 12th Intl Conf on Autonomic and Trusted Computing and 2015 IEEE 15th Intl Conf on Scalable Computing and Communications and Its Associated Workshops (UIC-ATC-ScalCom); IEEE: Piscataway, NJ, USA, 2015; pp. 690–693.

64. Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Li, F.-F. Imagenet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

65. Zhang, Y.; Wu, L. Classification of fruits using computer vision and a multiclass support vector machine. Sensors 2012, 12, 12489–12505.

66. Wang, S.; Lu, Z.; Yang, J.; Zhang, Y.; Liu, J.; Wei, L.; Chen, S.; Phillips, P.; Dong, Z. Fractional Fourier entropy increases the recognition rate of fruit type detection. BMC Plant Biol. 2016, 16.

67. Lu, Z.; Li, Y. A fruit sensing and classification system by fractional fourier entropy and improved hybrid genetic algorithm. In Proceedings of the 5th International Conference on Industrial Application Engineering (IIAE); Institute of Industrial Applications Engineers: Kitakyushu, Japan, 2017; pp. 293–299.

68. Jia, W.; Snetkov, L.; Aok, S. An effective model based on Haar wavelet entropy and genetic algorithm for fruit identification. In AIP Conference Proceedings; AIP: Melville, NY, USA, 2018; Volume 1955, pp. 040013-1–040013-4.

69. Kheiralipour, K.; Pormah, A. Introducing new shape features for classification of cucumber fruit based on image processing technique and artificial neural networks. J. Food Proc. Eng. 2017, 40, e12558.

70. Oltean, M. Fruits 360 dataset. Mendeley Data 2018.

71. Rocha, A.; Hauagge, D.C.; Wainer, J.; Goldenstein, S. Automatic produce classification from images using color, texture and appearance cues. In 2008 XXI Brazilian Symposium on Computer Graphics and Image Processing; IEEE: Piscataway, NJ, USA, 2008; pp. 3–10.

72. Matsuda, Y.; Hoashi, H.; Yanai, K. Recognition of Multiple-Food Images by Detecting Candidate Regions. In Proceedings of the 2012 IEEE International Conference on Multimedia and Expo (ICME), Melbourne, Australia, 9–13 July 2012.

73. Wu, A.; Zhu, J.; Ren, T. Detection of apple defect using laser-induced light backscattering imaging and convolutional neural network. Comput. Electric. Eng. 2020, 81, 106454.

74. Jahanbakhshi, A.; Momeny, M.; Mahmoudi, M.; Zhang, Y.D. Classification of sour lemons based on apparent defects using stochastic pooling mechanism in deep convolutional neural networks. Sci. Hortic. 2020, 263, 109133.

75. Barré, P.; Herzog, K.; Höfle, R.; Hullin, M.B.; Töpfer, R.; Steinhage, V. Automated phenotyping of epicuticular waxes of grapevine berries using light separation and convolutional neural networks. Comput. Electron. Agric. 2019, 156, 263–274.

76. Munasingha, L.V.; Gunasinghe, H.N.; Dhanapala, W.W.G.D.S. Identification of Papaya Fruit Diseases using Deep Learning Approach. In Proceedings of the 4th International Conference on Advances in Computing and Technology (ICACT2019), Kelaniya, Sri Lanka, 29–30 July 2019.

77. Ranjit, K.N.; Raghunandan, K.S.; Naveen, C.; Chethan, H.K.; Sunil, C. Deep Features Based Approach for Fruit Disease Detection and Classification. Int. J. Comput. Sci. Eng. 2019, 7, 810–817.

78. Tran, T.T.; Choi, J.W.; Le, T.T.H.; Kim, J.W. A Comparative Study of Deep CNN in Forecasting and Classifying the Macronutrient Deficiencies on Development of Tomato Plant. Appl. Sci. 2019, 9, 1601.

79. Sustika, R.; Subekti, A.; Pardede, H.F.; Suryawati, E.; Mahendra, O.; Yuwana, S. Evaluation of Deep Convolutional Neural Network Architectures for Strawberry Quality Inspection. Int. J. Eng. Technol. 2018, 7, 75–80.

80. Wang, Z.; Hu, M.; Zhai, G. Application of deep learning architectures for accurate and rapid detection of internal mechanical damage of blueberry using hyperspectral transmittance data. Sensors 2018, 18, 1126.

81. Zhang, Y.; Lian, J.; Fan, M.; Zheng, Y. Deep indicator for fine-grained classification of banana’s ripening stages. EURASIP J. Image Video Proc. 2018, 2018, 46.

82. Cen, H.; He, Y.; Lu, R. Hyperspectral imaging-based surface and internal defects detection of cucumber via stacked sparse auto-encoder and convolutional neural network. In 2016 ASABE Annual International Meeting; American Society of Agricultural and Biological Engineers: St. Joseph, MI, USA, 2016; p. 1.

83. Tan, W.; Zhao, C.; Wu, H. Intelligent alerting for fruit-melon lesion image based on momentum deep learning. Multim. Tools Appl. 2016, 75, 16741–16761.

84. Williams, H.A.; Jones, M.H.; Nejati, M.; Seabright, M.J.; Bell, J.; Penhall, N.D.; Barnett, J.J.; Duke, M.D.; Scarfe, A.J.; Ahn, H.S.; et al. Robotic kiwifruit harvesting using machine vision, convolutional neural networks, and robotic arms. Biosyst. Eng. 2019, 181, 140–156.

85. Santos, T.T.; de Souza, L.L.; dos Santos, A.A.; Avila, S. Grape detection, segmentation, and tracking using deep neural networks and three-dimensional association. Comput. Electron. Agric. 2020, 170, 105247.

86. Yu, Y.; Zhang, K.; Yang, L.; Zhang, D. Fruit detection for strawberry harvesting robot in non-structural environment based on Mask-RCNN. Comput. Electron. Agric. 2019, 163, 104846.

87. Ganesh, P.; Volle, K.; Burks, T.F.; Mehta, S.S. Deep Orange: Mask R-CNN based Orange Detection and Segmentation; 6th IFAC Conference on Sensing, Control and Automation Technologies for Agriculture AGRICONTROL 2019. IFAC-PapersOnLine 2019, 52, 70–75.

88. Liu, Z.; Wu, J.; Fu, L.; Majeed, Y.; Feng, Y.; Li, R.; Cui, Y. Improved kiwifruit detection using pre-trained VGG16 with RGB and NIR information fusion. IEEE Access 2019, 8, 2327–2336.

89. Ge, Y.; Xiong, Y.; From, P.J. Instance Segmentation and Localization of Strawberries in Farm Conditions for Automatic Fruit Harvesting; 6th IFAC Conference on Sensing, Control and Automation Technologies for Agriculture AGRICONTROL 2019. IFAC-PapersOnLine 2019, 52, 294–299.

90. Altaheri, H.; Alsulaiman, M.; Muhammad, G. Date fruit classification for robotic harvesting in a natural environment using deep learning. IEEE Access 2019, 7, 117115–117133.

91. Zapotezny-Anderson, P.; Lehnert, C. Towards Active Robotic Vision in Agriculture: A Deep Learning Approach to Visual Servoing in Occluded and Unstructured Protected Cropping Environments. IFAC-PapersOnLine 2019, 52, 120–125.

92. Lin, G.; Tang, Y.; Zou, X.; Xiong, J.; Li, J. Guava detection and pose estimation using a low-cost RGB-D sensor in the field. Sensors 2019, 19, 428.

93. Habaragamuwa, H.; Ogawa, Y.; Suzuki, T.; Shiigi, T.; Ono, M.; Kondo, N. Detecting greenhouse strawberries (mature and immature), using deep convolutional neural network. Eng. Agric. Environ. Food 2018, 11, 127–138.

94. Rahnemoonfar, M.; Sheppard, C. Deep Count: Fruit Counting Based on Deep Simulated Learning. Sensors 2017, 17, 905.

95. Bargoti, S.; Underwood, J.P. Image segmentation for fruit detection and yield estimation in apple orchards. J. Field Robot. 2017, 34, 1039–1060.

96. Stein, M.; Bargoti, S.; Underwood, J. Image based mango fruit detection, localisation and yield estimation using multiple view geometry. Sensors 2016, 16, 1915.

97. Tu, S.; Xue, Y.; Zheng, C.; Qi, Y.; Wan, H.; Mao, L. Detection of passion fruits and maturity classification using Red-Green-Blue Depth images. Biosyst. Eng. 2018, 175, 156–167.

98. Park, Y.; Yang, H.S. Convolutional neural network based on an extreme learning machine for image classification. Neurocomputing 2019, 339, 66–76.

99. Abadi, M.; Agarwal, A.; Barham, P.; Brevdo, E.; Chen, Z.; Citro, C.; Corrado, G.S.; Davis, A.; Dean, J.; Devin, M.; et al. Tensorflow: Large-scale machine learning on heterogeneous distributed systems. arXiv 2016, arXiv:1603.04467.

100. Al-Rfou, R.; Alain, G.; Almahairi, A.; Angermueller, C.; Bahdanau, D.; Ballas, N.; Bastien, F.; Bayer, J.; Belikov, A.; Belopolsky, A.; et al. Theano: A Python framework for fast computation of mathematical expressions. arXiv 2016, arXiv:1605.02688.

101. Facebook, I. PyTorch. Available online: https://pytorch.org/ (accessed on 15 January 2020).

102. MathWorks, I. Deep Learning Toolbox™—Matlab. Available online: https://www.mathworks.com/products/deep-learning.html (accessed on 22 January 2020).

103. Vedaldi, A.; Lenc, K. MatConvNet—Convolutional Neural Networks for MATLAB. In Proceedings of the 23rd ACM International Conference on Multimedia, Brisbane, Australia, 26–30 October 2015.

104. Ismail, A.; Idris, M.Y.I.; Ayub, M.N.; Por, L.Y. Investigation of Fusion Features for Apple Classification in Smart Manufacturing. Symmetry 2019, 11, 1194.

| Dataset | Data Type | CNN Model | Performance Results |
|---|---|---|---|
| ImageNet [24] | RGB Images | 5-layer CNN model | 74% without data augmentation; 90% with data augmentation |
| VegFru [25] | RGB Images | 13-layer CNN model | Accuracy 94.94% |
| Own [26] | Hyperspectral images | Modified GoogLeNet | 88.15% with Pseudo-RGB images; 85.93% with linear combinations; 92.23% with convolutional kernels |
| Own [53] | RGB Images | 9-layer CNN model | Accuracy 99.78% |
| Fruits-360 [54] | RGB Images | Proposed CNN models | Accuracy 100%; Training accuracy 99.79% |
| Fruits-360 [55] | RGB Images | AlexNet, GoogLeNet, proposed CNN models | Accuracy ∼99% all models |
| ImageNet [56] | RGB Images | AlexNet model | Accuracy 92.1% |
| Own [57] | RGB Images | Proposed CNN models | Accuracy 99% |
| Own [58] | RGB Images | 6-layer CNN model | Accuracy 91.44% |
| Supermarket Data [59] | RGB Images | Fruit-AlexNet | Accuracy of 99.56% |
| VegFru [60] | RGB Images | 8-layer CNN model | Accuracy 95.67% |
| Own [61] | RGB-image Saliency | Modified VGG | Accuracy 95.6% |
| VegFru [62] | RGB Images N/A | CBP-CNN, VGGNet, proposed HybridNet | VGGNet 77.12%–84.46%–72.32%; CBP-CNN 82.21%–87.49%–84.91%; HybridNet 83.51%–88.84%–85.78% |
| UEC-FOOD100 [63], Own | RGB Images | 5-layer CNN model | Accuracy 80.8% single fruit; Accuracy 60.9% multi-food |

| Fruit | Data Type | CNN Model | Performance Results |
|---|---|---|---|
| Apple [73] | Laser backscattering spectroscopic images | Modified AlexNet with 11 layers | Defects identification-detection accuracy 92.5% |
| Lemons [74] | RGB images | Three CNN models with 11-16-18 layers | Defects detection accuracy |
| Grapevine [75] | Image capture with the LSL | CNN model | Distribution of epicuticular waxes accuracy |
| Papaya [76] | RGB images | CNN model | Disease classification accuracy ∼92% |
| 10-class [77] | Quadtree segmentation RGB images | CNN model | Diseased region detection accuracy |
| Tomato [78] | RGB images | Inception-ResNet v2 Autoencoder | Classification of nutritional deficiencies Inception-ResNet v2 Autoencoder |
| Strawberry [79] | RGB images | AlexNet, MobileNet, GoogLeNet, VGGNet, Xception and 2-layer CNN | Quality classification: Baseline-CNN 85.61%–73.33%; AlexNet 96.48%–87.37%; GoogLeNet 91.93%–85.26%; VGGNet 96.49%–89.12%; Xception 92.63%–87.72%; MobileNet 83.51%–64.56% |
| Blueberry [80] | Hyperspectral transmittance data | ResNet and ResNeXt | Internal damage detection accuracy and F1-score ResNet, ResNeXt |
| Banana [81] | RGB images | CNN model | Classification of ripening stages accuracy |
| Cucumber [82] | Hyperspectral imaging | Stacked Sparse Auto-Encoder and CNN model | Defects detection CNN-SSAE |
| Melon [83] | Infrared video | 5-layer CNN, LeNet-5, B-LeNet-4 | Recognition of lesions on skin accuracy and recovery rate |

| Fruit | Data | CNN Model | Performance Results |
|---|---|---|---|
| Kiwi [84] | RGB images | modified VGG-16 called FCN-8S | Harvesting 51% |
| Wine grapes [85] | RGB images | modified ResNet | Segmentation F1-Score |
| Strawberry [86] | RGB images | ResNet-50 | Detection Recuperation |
| Orange [87] | RGB images | ResNet-101 | Detection |
| Kiwi [88] | RGB-D and NIR | VGG-16 | Detection |
| Strawberry [89] | RGB-D images | modified ResNet | Detection |
| Date Fruit [90] | RGB images | AlexNet and VGG-16 | 99.01–97.01%–98.59% |
| Sweet Peppers [91] | RGB-D images | modified ResNet | Training Loss Validation Loss |
| Guava [92] | RGB-D images | VGG-16 and modified GoogLeNet | Detection 98.3%–94.8% |
| Passion Fruit | RGB-D images | VGG-16 model 5 | Detection |
| Strawberry [93] | RGB images | CNN model | Detection 88.03%–77.21% |
| Tomato [94] | Synthetic images and RGB images | modified Inception-ResNet | Detection 91%–93% |
| Apple and Mangoes [28,95] | RGB images | VGG-16 | Detection F1-Score |
| Apple and Orange [27] | RGB images | Two CNN models | Segmentation: Oranges 0.813; Apples 0.838 |
| Sweet Pepper [36] | RGB and NIR images | modified VGG-16 | Detection F1-Score |
| Mangoes [96] | RGB, NIR, and LiDAR images | modified VGG-16 | Segmentation error 1.36% |

| Weight Decay | DropOut | Learning Rate | Momentum | Batch Size |
|---|---|---|---|---|
| | | | | 32 |

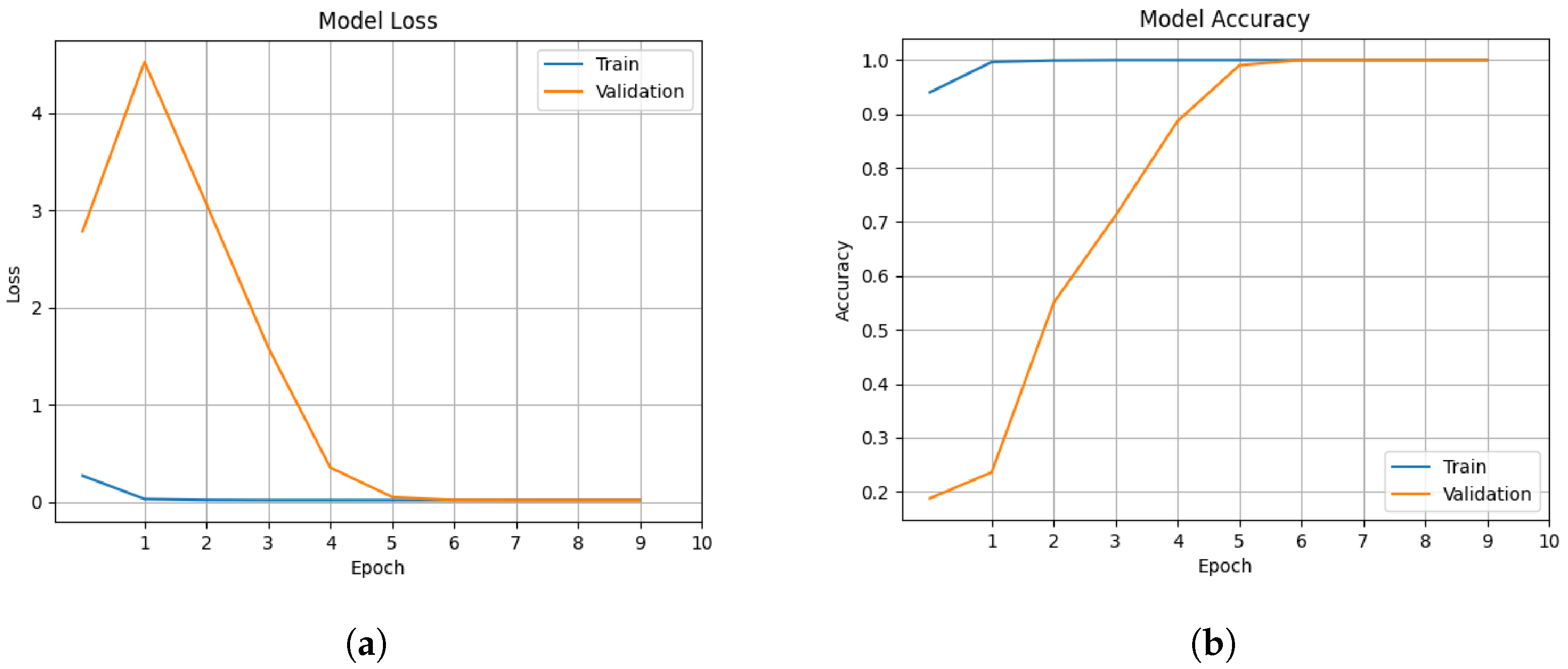

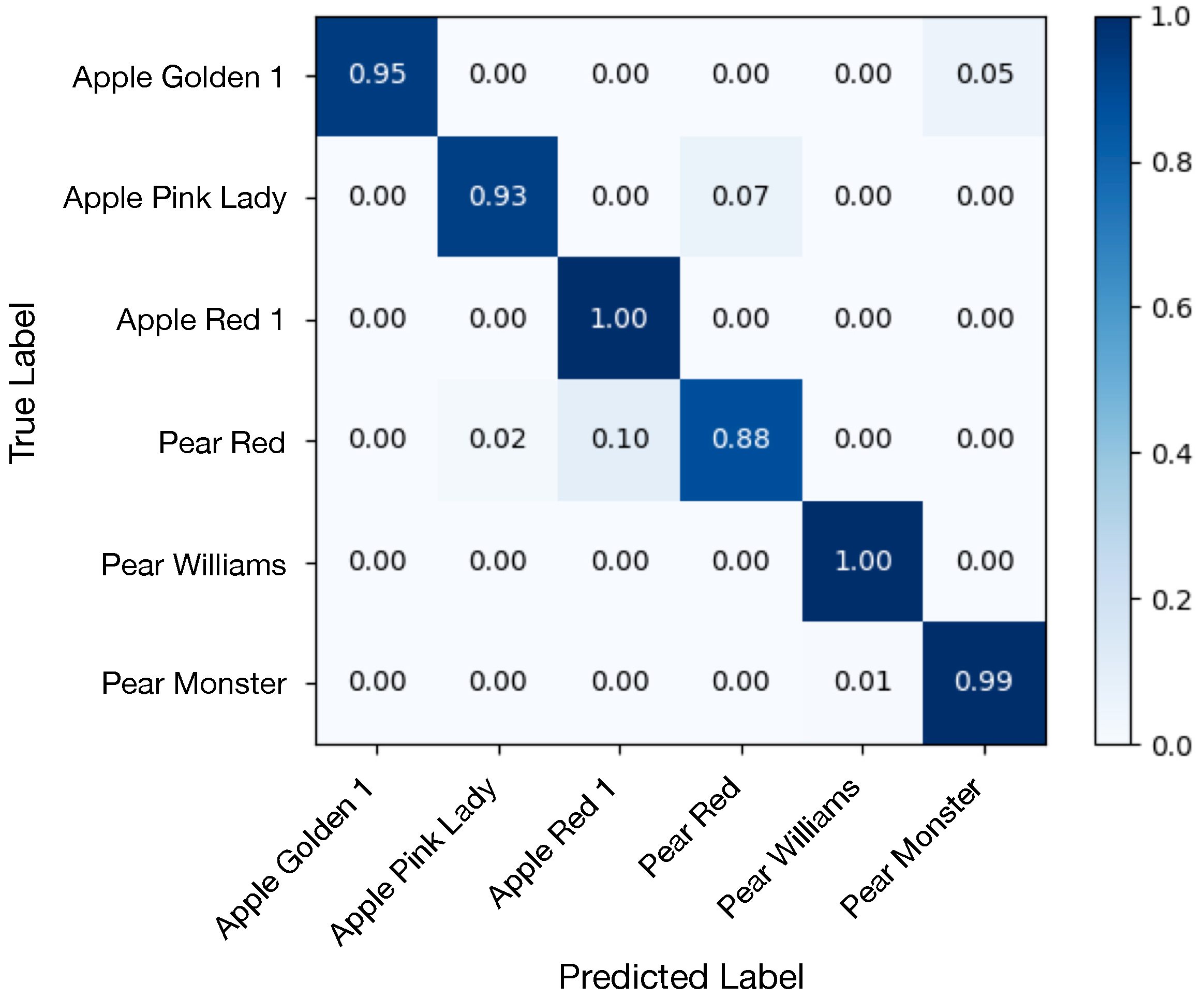

| CNN Model | Depth | Training Loss | Training Accuracy | Validation Loss | Validation Accuracy | Testing Accuracy | Testing F1-Score |
|---|---|---|---|---|---|---|---|
| Proposed example | 6 | 0.0167 | 100% | 0.0165 | 100% | 95.45% | 0.96 |
| AlexNet | 8 | 1.2 × 10⁻⁵ | 100% | 4.9 × 10⁻⁵ | 100% | 100% | 1 |
| VGG16 | 16 | 0.2067 | 96.32% | 0.2070 | 95.94% | 91.32% | 0.89 |
| MobileNet | 88 | 0.0799 | 97.45% | 0.7201 | 73.86% | 70.02% | 0.67 |
| InceptionV3 | 159 | 0.5592 | 80.49% | 1.1755 | 62.82% | 54.49% | 0.49 |
| ResNet50 | 168 | 0.1436 | 99.17% | 0.9102 | 66.69% | 57.74% | 0.48 |
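One way to read the table above is to compare training accuracy against validation and testing accuracy for each model. The following is a minimal sketch that does this arithmetic on the accuracy values copied from the table; the function name `generalization_gaps` and the idea of ranking models by this gap are illustrative assumptions, not an analysis reported by the authors.

```python
# Accuracy values (in %) copied from the table above:
# (training accuracy, validation accuracy, testing accuracy).
results = {
    "Proposed example": (100.0, 100.0, 95.45),
    "AlexNet": (100.0, 100.0, 100.0),
    "VGG16": (96.32, 95.94, 91.32),
    "MobileNet": (97.45, 73.86, 70.02),
    "InceptionV3": (80.49, 62.82, 54.49),
    "ResNet50": (99.17, 66.69, 57.74),
}


def generalization_gaps(table):
    """Return {model: (train - validation, train - test)} in percentage points.

    Hypothetical helper for illustration; a large positive gap suggests that
    the model fits the training set much better than unseen data.
    """
    return {
        name: (round(train - val, 2), round(train - test, 2))
        for name, (train, val, test) in table.items()
    }


gaps = generalization_gaps(results)

# Print models from the largest to the smallest train-test gap.
for name, (val_gap, test_gap) in sorted(
    gaps.items(), key=lambda item: item[1][1], reverse=True
):
    print(f"{name:<17} train-val: {val_gap:6.2f}  train-test: {test_gap:6.2f}")
```

Under this reading, the deeper networks in the table (MobileNet, InceptionV3, ResNet50) show the widest gaps between training and testing accuracy, while the shallower ones stay within about five percentage points.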

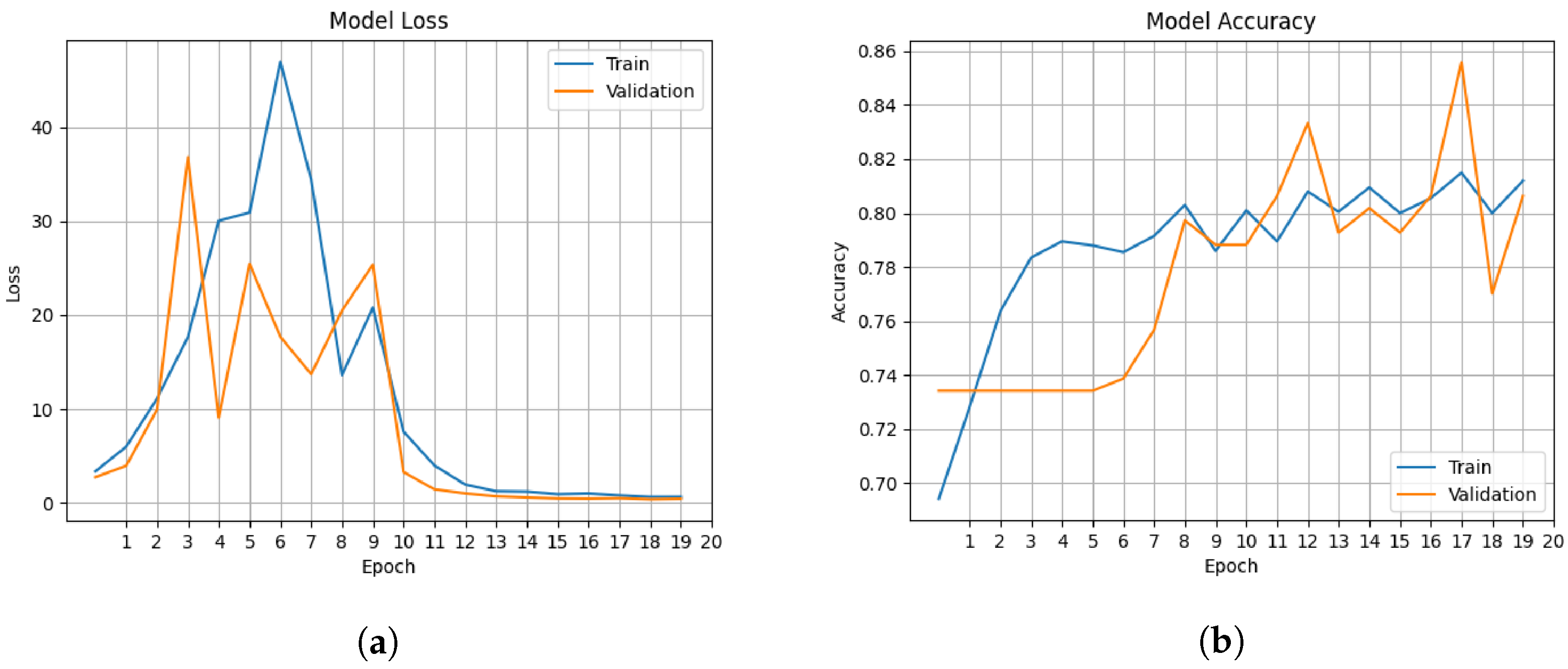

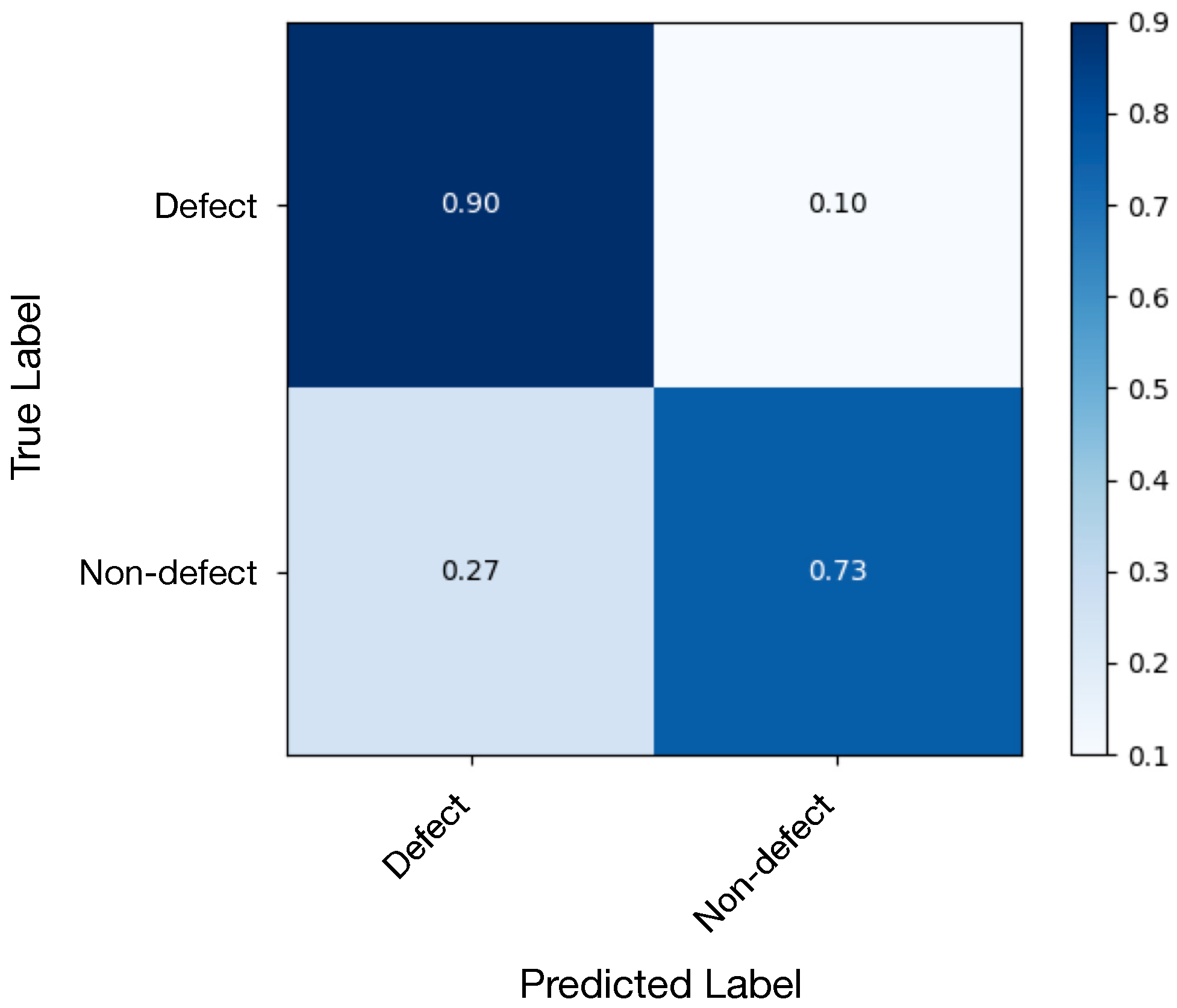

| CNN Model | Depth | Training Loss | Training Accuracy | Validation Loss | Validation Accuracy | Testing Accuracy | Testing F1-Score |
|---|---|---|---|---|---|---|---|
| Proposed example | 6 | 0.3725 | 81.34% | 0.2812 | 80.86% | 81.25% | 0.87 |
| AlexNet | 8 | 0.3592 | 90.63% | 0.2877 | 90.13% | 88.70% | 0.87 |
| VGG16 | 16 | 0.0535 | 91.95% | 0.2133 | 90.48% | 89.58% | 0.88 |
| MobileNet | 88 | 0.1529 | 91.29% | 0.9016 | 86.95% | 83.33% | 0.83 |
| InceptionV3 | 159 | 0.5628 | 71.43% | 0.6351 | 66.67% | 62.54% | 0.62 |
| ResNet50 | 168 | 0.2816 | 88.05% | 0.7477 | 64.58% | 64.29% | 0.61 |
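The tables report both accuracy and F1-score on the test set. As a reminder of how the two metrics relate, here is a minimal sketch that derives both from a confusion matrix. The 2-class matrix `toy` is hypothetical data, and macro averaging is an assumption: this part of the text does not state which averaging the reported F1-scores use.

```python
def accuracy(confusion):
    """Fraction of correctly classified samples.

    confusion[i][j] is the number of samples of true class i predicted as j.
    """
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[k][k] for k in range(len(confusion)))
    return correct / total


def per_class_f1(confusion):
    """Return the F1-score of each class, treating it as the positive class."""
    n = len(confusion)
    scores = []
    for k in range(n):
        tp = confusion[k][k]
        fp = sum(confusion[i][k] for i in range(n)) - tp  # predicted k, true != k
        fn = sum(confusion[k]) - tp                       # true k, predicted != k
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        if precision + recall:
            scores.append(2 * precision * recall / (precision + recall))
        else:
            scores.append(0.0)
    return scores


def macro_f1(confusion):
    """Unweighted mean of the per-class F1-scores (macro averaging, assumed)."""
    scores = per_class_f1(confusion)
    return sum(scores) / len(scores)


# Hypothetical confusion matrix for two fruit classes (rows: true, cols: predicted).
toy = [
    [8, 2],
    [1, 9],
]

print(f"accuracy = {accuracy(toy):.4f}")
print(f"macro F1 = {macro_f1(toy):.4f}")
```

Because F1 combines precision and recall per class, it can diverge from accuracy when classes are imbalanced or when errors concentrate in one class.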

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Naranjo-Torres, J.; Mora, M.; Hernández-García, R.; Barrientos, R.J.; Fredes, C.; Valenzuela, A. A Review of Convolutional Neural Network Applied to Fruit Image Processing. Appl. Sci. 2020, 10, 3443. https://doi.org/10.3390/app10103443
