1. Introduction

Corn is one of the most widely grown crops in the world owing to its applications in food, feed, chemical engineering, and energy [1,2,3]. Mechanization and automation of corn harvesting were fully developed in the last century. In America, Oceania, and Europe, corn kernels are usually obtained directly during harvest using grain harvesters or combined grain-stover harvesters [4]. However, in some growing regions in Asia, the moisture content at harvest is too high for direct kernel harvesting, as it would cause unacceptable kernel damage [5]. Hence, segmented harvesting is widely applied in such regions [6]. Specifically, corn ears are first snapped in the field by a harvester, as shown in Figure 1a. Then, the ears are dried until the moisture content of the kernels is suitable for threshing. However, as shown in Figure 1b, corn ears are inevitably damaged by the peeling devices. Ear damage means that kernels drop from the ears during the peeling process; the dropped kernels are not harvested, which leads to considerable harvest loss. Preliminary studies have demonstrated that corn ear damage is mainly affected by the rotation speed, surface structure, material, and installation clearance of the snapping roller and transmission blade [7,8]. Researchers believe that adjusting these working parameters can effectively reduce corn ear damage [9,10]. However, such adjustment cannot be carried out without efficient and accurate recognition of corn ear damage. In other words, recognition is the technical prerequisite for reducing damage. Therefore, it is necessary to develop an automatic, non-contact, non-destructive, and rapid method for corn ear damage recognition.

Image processing methods have been attracting increasing attention in the non-destructive detection of agricultural products [11,12]. Depending on the type of information, such methods can be divided into hyperspectral image (HSI) processing methods and natural image processing methods [13,14]. HSIs comprise dozens or hundreds of narrow spectral bands with high spatial resolution, so they can be used for object recognition [14]. Owing to the large data volume and high cost, HSI recognition is frequently employed for post-harvest detection of agricultural products. For instance, Atas et al. developed a compact machine vision system to detect aflatoxin in chilli peppers based on HSIs and machine learning [15]. Farrell et al. utilized HSIs to identify water and nitrogen availability for rainfed corn crops [16]. Natural images are acquired by ordinary cameras [17,18,19], and RGB images are their typical form [20,21]. Compared with HSIs, RGB images are more suitable for online agricultural detection because of their lower computational complexity and cost. In the field of seeding, Leemans et al. developed a machine-vision-based mechanism to measure the position of seed drills relative to the previous lines, which is used in a feedback control loop [22]. Karayel et al. utilized a high-speed camera system to measure the seed spacing and seed fall velocity of seed drills [23]. Liu et al. proposed an image processing algorithm to evaluate the performance of seed-sowing devices, including the breadth of the seed array, the coordinates of the seed array center, the distance between seed arrays, and the seed intervals and non-seed intervals of each seed array [24]. In the field of harvesting, Gunchenko et al. used unmanned aerial vehicles to acquire natural images for route planning of agricultural harvesting equipment and harvest volume measurement [25]. Plebe et al. developed an image processing system to guide the automatic harvesting of oranges [26]. Jia et al. proposed an image preprocessing method for the night vision of an apple harvesting robot [27].

In the field of corn ear harvesting, damage takes various forms in different processes, such as whole-ear breakage, kernel damage, and ear peeling damage. In the ear snapping process, a whole ear may be broken off by the header. To address this issue, Liu et al. employed a camera to acquire RGB images of ears on the conveyor belt and then proposed a YOLO-based method to identify broken ears [6]. The information on broken ears can be used to adjust the header speed. Kernel damage, in which kernels are cracked during harvesting, is also attracting research interest. Liao et al. utilized on-board hardware operation and parallel statistical look-up table mapping to extract images of kernels, on the basis of which an image processing algorithm was developed to distinguish whole and damaged kernels [28]. Another form of damage is ear peeling damage, the primary characteristic of which is the loss of kernels from ears. Unfortunately, to date, the automatic detection of ear peeling damage has not been reported.

The recognition method is the basis of online detection of ear peeling damage. Mechanical peeling causes kernels to drop randomly from ears. Hence, compared with kernel damage, ear damage usually covers a large region and is randomly distributed. These characteristics make it both necessary and possible to recognize the damage from RGB images. However, conventional image processing methods, such as image segmentation [29,30], are difficult to apply to damage recognition in corn ears, because the objective images of snapped ears always contain a great number of features. Next, we provide an example to illustrate this issue. As presented in

Figure 2a, we selected a typical objective image during snapping as the original image in this example with the spatial resolution of

, containing intact ears, damaged ears, impurities, and background. Thresholding, a well-known image segmentation method, was applied to the objective image [31,32,33,34]. The red, green, and blue bands were each tested in turn. The result is shown in Figure 2. The image is divided into numerous parts, and the damaged region is almost impossible to recognize, regardless of the band used.

In this study, we aimed to develop a peeling damage recognition method based on RGB images. Unlike conventional methods, the proposed method consists of two steps. First, we recognize the regions of corn kernels using a novel dictionary-learning-based method. Second, we recognize the ear damage in the regions outside the kernel regions using a novel thresholding method. For both steps, we also develop a post-processing method for the recognized results based on the concept of image corrosion and expansion. The practicality and accuracy of the proposed method were verified through experiments on both single-ear and multiple-ear recognition. This paper is organized as follows. Section 2 introduces the materials used in this study and the methods to recognize the corn kernel region and the ear damage region. Section 3 and Section 4 present the experimental results and the discussion, respectively. Section 5 concludes this paper.

2. Materials and Methods

In this section, we first introduce the materials used in this study. Next, we introduce the proposed recognition method, including recognition of corn kernel and recognition of ear damage.

2.1. Materials

The corn variety used was Feitian 358, harvested in Lishu, Jilin Province, China, which is located at N and E. The row spacing and the plant spacing were 600 and 269 mm, respectively. The moisture content at harvest was 27.3%. In this producing region, more than 90% of corn is harvested by segmented harvesting. The most widely used machines are JIMIG-562 harvesters. The rotation speeds of the snapping roller and the transmission blade were set to 370 and 120 rpm, respectively.

2.2. Recognition of Corn Kernel

In this section, we introduce the process of recognizing corn kernels, which is achieved by using the correlation between the original image and the learned dictionary [35]. For this purpose, we first train dictionaries for corn kernels, ear damage, and backgrounds. The outline of the proposed dictionary learning strategy is given in Algorithm 1. Without loss of generality, we primarily introduce the training process for corn kernels; the processes for ear damage and backgrounds are similar. In this method, we employ a set of corn kernel regions containing

spatial pixels in total. Then, we reshape and normalize them into training samples.

Figure 3 shows the graphical representation for the generation of kernel training samples. Specifically, let

denote the normalized collection of selected samples,

, and

,

. Each column of

denotes a sample, expressed as

, where

,

, and

represent the reflection of the pixel in the red, green, and blue bands, respectively. These samples are used to train a dictionary,

, that satisfies

where

returns the maximum absolute entry of a vector. The above constraint ensures that at least one atom of the learned dictionary is sufficiently correlated with each sample. The parameter

controls the threshold of correlation. In this study, we set

.

| Algorithm 1 Proposed dictionary learning algorithm. |

Require:, , , threshold .
1: Initialize ;
2: for to do
3:   if then
4:     ;
5:   end if
6: end for
Ensure: Learned dictionary .

|
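The symbols of Algorithm 1 are not recoverable from the text above, so the following is only a minimal sketch of one reading that is consistent with the stated constraint (each sample must have at least one atom whose absolute correlation reaches the threshold). The names `normalize_columns`, `learn_dictionary`, `samples`, and `tau`, the choice of the first sample as the initial atom, and the value `tau = 0.99` are all assumptions made for illustration, not the authors' implementation.

```python
import numpy as np

def normalize_columns(samples):
    """Scale each column (one RGB sample) to unit Euclidean norm."""
    norms = np.linalg.norm(samples, axis=0, keepdims=True)
    return samples / np.maximum(norms, 1e-12)

def learn_dictionary(samples, tau):
    """Greedy sketch (assumed reading of Algorithm 1): a sample becomes a new
    atom when no existing atom has absolute correlation of at least tau."""
    samples = normalize_columns(np.asarray(samples, dtype=float))
    atoms = [samples[:, 0]]  # assumption: start from the first sample
    for i in range(1, samples.shape[1]):
        x = samples[:, i]
        correlations = np.abs(np.stack(atoms, axis=1).T @ x)
        if correlations.max() < tau:
            atoms.append(x)
    return np.stack(atoms, axis=1)

# Toy data: three RGB samples stored as columns (rows are R, G, B).
samples = np.array([[200.0, 198.0, 60.0],
                    [180.0, 176.0, 200.0],
                    [40.0, 42.0, 50.0]])
dictionary = learn_dictionary(samples, tau=0.99)
print(dictionary.shape)
```

In this toy case the first two samples are nearly parallel, so only the first and third become atoms, and every sample still has an atom with correlation above the threshold.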

The RGB information in the objective image is assumed to be similar to that in the training samples. Therefore, we can always find an atom that is strongly correlated with the RGB data of a corn kernel pixel in objective images. Besides corn kernels, we also use the proposed algorithm to train dictionaries for ear damage and backgrounds, denoted as

and

, respectively. Based on this consideration, we recognize whether an arbitrary spatial pixel belongs to a corn kernel using Algorithm 2.

| Algorithm 2 Recognizing corn kernels using learned dictionaries. |

Require: Original RGB image , dictionaries , , and threshold , .
1: Initialize
2: for each spatial pixel do
3:   Denote ,;
4:   if and then
5:     Normalize by ;
6:     Compute , , and ;
7:     Compute , , and ;
8:     if then
9:       ;
10:     end if
11:   end if
12: end for
Ensure: Recognizing result .

|

We compute the correlation between and each atom of the dictionary and find the atom that is most correlated with . If the atom belongs to and the reflection of the red band satisfies , we regard this pixel as corn kernel. As indicated in Step 4 of Algorithm 2, two preconditions, based on prior information about corn kernels, determine whether a pixel is a candidate. These preconditions reduce the interference of dark backgrounds, shadows, etc. Through the above operations, the original RGB image is transformed into a binary image, . The recognized kernels are displayed as 1, i.e., white, while all other pixels are set to 0, i.e., black.
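The classification rule described above can be sketched as follows. The two preconditions of Step 4 are not legible in the text, so the checks used here (a minimum red value `red_min` and a minimum pixel norm `norm_min`) are hypothetical stand-ins, as are the function name, the single-atom toy dictionaries, and all numeric values.

```python
import numpy as np

def unit(vector):
    """Return the vector as a unit-norm column (a one-atom dictionary)."""
    v = np.asarray(vector, dtype=float)
    return (v / np.linalg.norm(v)).reshape(3, 1)

def recognize_kernels(image, d_kernel, d_damage, d_background,
                      red_min=100.0, norm_min=1.0):
    """Binary mask: 1 where the most correlated atom is a kernel atom.
    red_min and norm_min are assumed preconditions, not the paper's."""
    height, width, _ = image.shape
    mask = np.zeros((height, width), dtype=np.uint8)
    pixels = image.astype(float)
    for row in range(height):
        for col in range(width):
            y = pixels[row, col]
            norm = np.linalg.norm(y)
            if y[0] < red_min or norm < norm_min:
                continue  # dark background or shadow: not a candidate
            y = y / norm
            best_kernel = np.abs(d_kernel.T @ y).max()
            best_damage = np.abs(d_damage.T @ y).max()
            best_background = np.abs(d_background.T @ y).max()
            if best_kernel > max(best_damage, best_background):
                mask[row, col] = 1
    return mask

# Toy dictionaries and a 1 x 3 image: kernel-like, damage-like, dark pixel.
d_kernel = unit([220, 180, 40])
d_damage = unit([150, 60, 50])
d_background = unit([60, 60, 60])
image = np.array([[[210, 170, 45], [140, 60, 50], [30, 30, 30]]], dtype=np.uint8)
kernel_mask = recognize_kernels(image, d_kernel, d_damage, d_background)
print(kernel_mask.tolist())
```

Only the first pixel is labelled as kernel: the second is closer to the damage atom, and the third fails the assumed red-band precondition.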

Next, we provide an example of the proposed corn kernel recognition method. As presented in Figure 4a, a damaged ear was selected with the spatial size of

for our test. The dictionary was trained using the method given in Algorithm 1 based on

training samples. Then, we utilized Algorithm 2 to recognize corn kernels. The result is shown in Figure 4b. The region of kernels in Figure 4a is highly consistent with the white region in Figure 4b.

Remark 1. It should be noted that we use Algorithm 2 only to recognize corn kernels, not ear damage. This is because ear damage is close to the gaps between kernels in terms of RGB information, so the two are easily confused. Hence, we first recognize the kernels and then occupy the region of the kernels and their gaps using corroding and expanding operations. Finally, we find the damage based on RGB information within the region that is not occupied.

2.3. Recognition of Ear Damage

Corroding is used to eliminate outliers, which can also be regarded as noise. In this method, corroding is executed before expanding to avoid unexpected expansion caused by outliers. Here, we should provide the definition of the operator in advance.

Definition 1. Given a binary image , the operation with respect to a pixel , denoted as , is defined by

and we define once or .

Remark 2. In fact, the operation returns the number of 1-valued pixels, i.e., the number of white pixels, among the 8 pixels surrounding the given pixel. However, when a pixel is located at the edge of an image, the total number of surrounding pixels is 5; when a pixel is located in a corner, the total number of surrounding pixels is 3.
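As a minimal sketch of the neighbour-counting operation described in Remark 2, the function below counts the white pixels among the up-to-8 neighbours of every pixel. Treating out-of-range positions as 0 is implemented by zero padding; the function name and the toy image are assumptions for illustration.

```python
import numpy as np

def count_white_neighbors(binary):
    """For every pixel, count the 1-valued pixels among its 8 neighbours.
    Positions outside the image are treated as 0 (zero padding)."""
    binary = np.asarray(binary, dtype=np.uint8)
    padded = np.pad(binary, 1, mode="constant", constant_values=0)
    height, width = binary.shape
    counts = np.zeros((height, width), dtype=np.int32)
    for dr in (0, 1, 2):
        for dc in (0, 1, 2):
            if (dr, dc) != (1, 1):  # skip the pixel itself
                counts += padded[dr:dr + height, dc:dc + width]
    return counts

binary = np.array([[1, 1, 0],
                   [0, 1, 0],
                   [0, 0, 1]], dtype=np.uint8)
counts = count_white_neighbors(binary)
print(counts.tolist())  # [[2, 2, 2], [3, 3, 3], [1, 2, 1]]
```

With zero padding, edge and corner pixels automatically see only 5 and 3 real neighbours, matching the remark above.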

| Algorithm 3 Proposed corroding algorithm. |

Require: Binary image , threshold , maximum number of iterative cycles .
1: while termination condition is not reached do
2:   Initialize ;
3:   for each pixel do
4:     if then
5:       ;
6:     end if
7:   end for
8:   ;
9: end while
Ensure: Corroded binary image .

|

The proposed corroding algorithm is summarized in Algorithm 3. Our strategy is setting a pixel to be 1 only when more than

of its surrounding pixels are 1. The corroding process is repeated until one of two termination conditions is reached. The first is that the maximum number of iterative cycles has been reached. The second is that the output image no longer changes. The parameter

controls the threshold for corroding a pixel. A larger

encourages radical corrosion, whereas a smaller

leads to more conservative corrosion. A graphical demonstration comparing corroding operations based on different values of

is provided in

Figure 5. We randomly selected a patch of a binarized corn kernel image as the test image. The maximum number of iterative cycles was set to 5. Obviously, the outliers still remain after corroding when

. On the other hand, too many features of kernels are corroded when

. When

, the outliers can be removed and the main features of kernels still exist. Therefore, we empirically set

in this study. Experimental results on the test ear are provided in Figure 6. Figure 6a shows the binary image after the kernel recognition process, which is the image presented in Figure 4b. Figure 6b is the corroded version of Figure 6a. Most outliers have been successfully removed, and the main features of the kernels are unaffected.
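The corroding step can be sketched as below. Two points are assumptions, since the symbols in Algorithm 3 are not legible: the threshold is treated as a neighbour count `k`, and only pixels that are already white can remain white. The function names and the toy image are likewise hypothetical.

```python
import numpy as np

def count_white_neighbors(binary):
    """Count white pixels among the 8 neighbours; outside pixels count as 0."""
    padded = np.pad(binary, 1, mode="constant", constant_values=0)
    height, width = binary.shape
    counts = np.zeros((height, width), dtype=np.int32)
    for dr in (0, 1, 2):
        for dc in (0, 1, 2):
            if (dr, dc) != (1, 1):
                counts += padded[dr:dr + height, dc:dc + width]
    return counts

def corrode(binary, k, max_cycles):
    """Keep a white pixel only when more than k of its neighbours are white.
    Stops after max_cycles or when the image no longer changes."""
    current = np.asarray(binary, dtype=np.uint8)
    for _ in range(max_cycles):
        counts = count_white_neighbors(current)
        updated = ((current == 1) & (counts > k)).astype(np.uint8)
        if np.array_equal(updated, current):
            break
        current = updated
    return current

# Toy image: a 3 x 3 white block plus one isolated outlier in the corner.
image = np.zeros((6, 6), dtype=np.uint8)
image[1:4, 1:4] = 1
image[5, 5] = 1
corroded = corrode(image, k=2, max_cycles=5)
print(corroded)
```

With `k=2` the isolated pixel is removed while the block survives, because even its corner pixels have three white neighbours; a larger `k` would start to eat into the block, which is the "radical corrosion" behaviour described above.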

Next, we expand the corroded image to fill the pores and the gaps between kernels. The proposed expanding algorithm is summarized in Algorithm 4. For each pixel, all 8 of its surrounding pixels are set to 1 if more than

of its surrounding pixels are 1. Similar to the corroding algorithm, the total numbers of surrounding pixels for pixels at edges and corners are 5 and 3, respectively. The parameter

controls the threshold of expanding. A smaller

can speed up expanding. In this study, we empirically set

. Two termination conditions are checked after each iterative cycle: whether the maximum number of iterative cycles has been reached and whether the output image no longer changes.

| Algorithm 4 Proposed expanding algorithm. |

Require: Binary image , threshold , maximum number of iterative cycles .
1: while termination condition is not reached do
2:   Initialize ;
3:   for each pixel do
4:     if then
5:       Set all its surrounding pixels to 1;
6:     end if
7:   end for
8:   ;
9: end while
Ensure: Expanded binary image .

|

The expanded result of the corroded image is presented in Figure 6c. Compared with the corroded image, i.e., Figure 6b, the pores and gaps are clearly filled. Using the corroding and expanding operations, we can not only accurately determine the region of kernels but also reduce the search scope for the subsequent damage recognition.
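A minimal sketch of the expanding step follows, under the assumptions that the threshold is a neighbour count `k`, that the output of each cycle starts as a copy of the input, and that a qualifying pixel sets only its neighbours (not itself) to 1, as the text states. Names and the toy image are hypothetical.

```python
import numpy as np

def count_white_neighbors(binary):
    """Count white pixels among the 8 neighbours; outside pixels count as 0."""
    padded = np.pad(binary, 1, mode="constant", constant_values=0)
    height, width = binary.shape
    counts = np.zeros((height, width), dtype=np.int32)
    for dr in (0, 1, 2):
        for dc in (0, 1, 2):
            if (dr, dc) != (1, 1):
                counts += padded[dr:dr + height, dc:dc + width]
    return counts

def expand(binary, k, max_cycles):
    """Whenever more than k neighbours of a pixel are white, set all of that
    pixel's neighbours to 1. Stops after max_cycles or when nothing changes."""
    current = np.asarray(binary, dtype=np.uint8)
    for _ in range(max_cycles):
        qualifying = (count_white_neighbors(current) > k).astype(np.uint8)
        # A pixel turns white if it is a neighbour of any qualifying pixel.
        touched = count_white_neighbors(qualifying) > 0
        updated = ((current == 1) | touched).astype(np.uint8)
        if np.array_equal(updated, current):
            break
        current = updated
    return current

# Toy image: a 3 x 3 white ring with a one-pixel pore in the middle.
ring = np.zeros((5, 5), dtype=np.uint8)
ring[1:4, 1:4] = 1
ring[2, 2] = 0
expanded = expand(ring, k=3, max_cycles=1)
print(expanded)
```

One cycle with `k=3` fills the pore but also grows the region outward by one pixel along the sides, which illustrates why a smaller threshold speeds up expansion.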

With the previous operations, we obtain the region of corn kernels. Next, we introduce the method to recognize ear damage. The strategy is mainly based on the distinctive reflections of the kernel-dropping region in the red, green, and blue bands. We set upper and lower threshold bounds for each of the three bands. A pixel is regarded as belonging to the ear damage region only when its reflections in all three bands fall within the threshold ranges. The detailed steps are provided in Algorithm 5. The input matrix

is just the image obtained after kernel recognizing, corroding, and expanding operations (see

Figure 6c). All white pixels of

are regarded as kernels, such that they will not be considered in the process of ear damage recognizing. The results is presented in

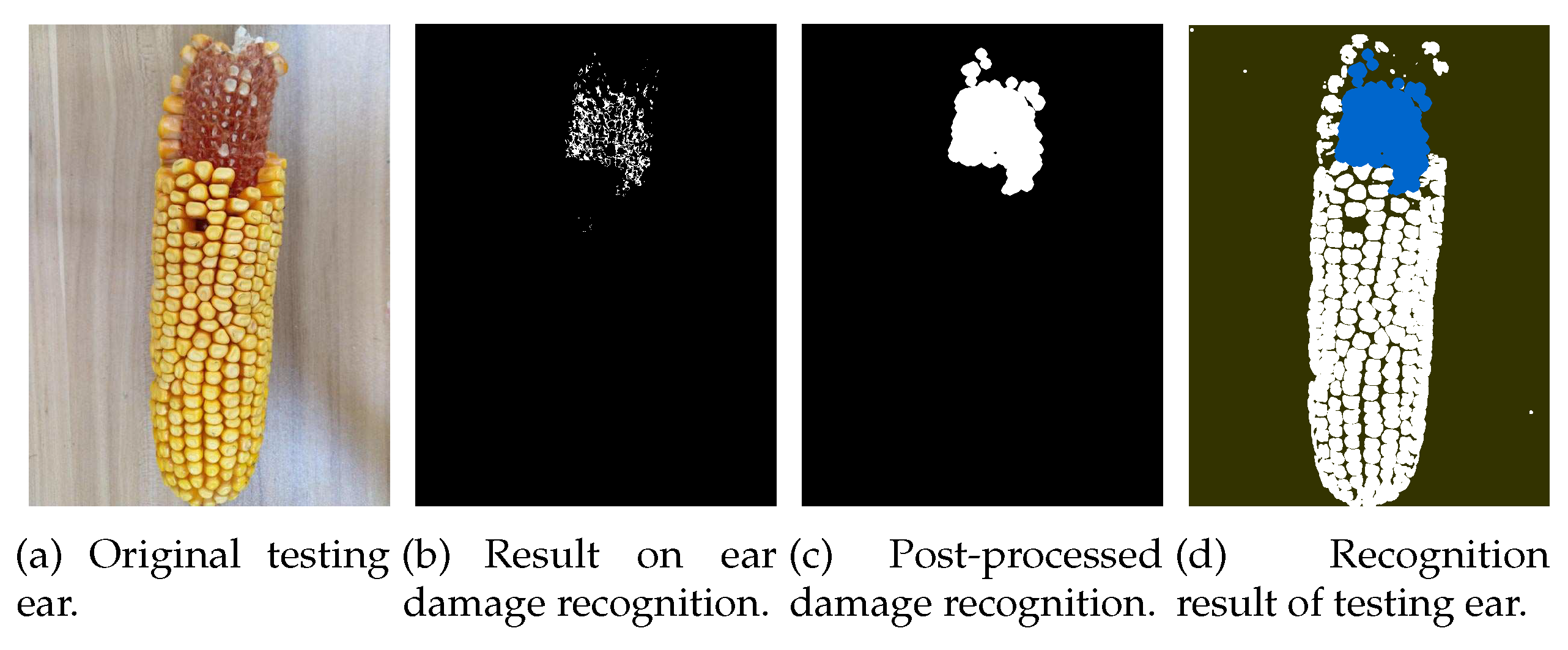

Figure 7b. We can note the recognized pixels are consistent with the actual damaged region. However, they are dispersed and cannot form a complete region. This is because many stubbles exist along the kernel-dropping region. These stubbles do not have the same RGB features as kernel-dropping region, and, therefore, they cannot be recognized directly by Algorithm 5.

| Algorithm 5 Recognizing ear damage. |

Require: Original objective RGB image , binary image .
1: Initialize ;
2: for each pixel do
3:   if then
4:     Let , , and ;
5:     if , , and then
6:       ;
7:     end if
8:   end if
9: end for
Ensure: Outputted binary image .

|
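The band-range test of Algorithm 5 can be sketched as follows. The actual threshold values are not legible in the text, so the bounds `lower` and `upper` used here are purely illustrative, as are the function name and the toy data.

```python
import numpy as np

def recognize_damage(image, kernel_mask, lower, upper):
    """Mark a pixel as ear damage when it is outside the kernel mask and
    its R, G, and B values all lie within [lower, upper] for their band."""
    image = np.asarray(image)
    lower = np.asarray(lower)
    upper = np.asarray(upper)
    in_range = np.all((image >= lower) & (image <= upper), axis=2)
    return (in_range & (np.asarray(kernel_mask) == 0)).astype(np.uint8)

# Toy 1 x 3 image: a damage-coloured pixel, the same colour inside the
# kernel mask, and a kernel-coloured pixel outside the range.
image = np.array([[[150, 60, 50], [150, 60, 50], [220, 180, 40]]], dtype=np.uint8)
kernel_mask = np.array([[0, 1, 0]], dtype=np.uint8)
damage = recognize_damage(image, kernel_mask,
                          lower=(120, 40, 30), upper=(180, 90, 80))
print(damage.tolist())  # [[1, 0, 0]]
```

Masking out the kernel region first means the range test only has to separate damage from background, which is the reduction in search scope mentioned earlier.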

To address this problem, we propose a post-processing strategy, summarized in Algorithm 6. The corrosion step and the expansion step are executed alternately until a termination condition is reached. Here, the termination condition again consists of two checks. The first is whether the maximum number of iterative cycles has been reached. The second is whether the output image is the same as the input image within an iterative cycle. The proposed strategy can be regarded as a kind of closing operation, aiming to connect scattered pixels in the damage region and eliminate isolated pixels that are misidentified. We apply Algorithm 6 to Figure 7b with

and

. The post-processed image is presented in Figure 7c. A complete region is obtained instead of scattered pixels, and this region is consistent with the damage region of the test ear. As shown in Figure 7d, we combine the results of corn kernel recognition and ear damage recognition to obtain the final recognition result, where the black, white, and blue regions represent the background, the corn kernels, and the ear damage, respectively. The outline of the complete method is summarized in Figure 8.

| Algorithm 6 Post-processing of ear damage recognizing. |

Require: Binary image obtained through ear damage recognizing , maximum number of iterative cycles .
Initialize ;
while termination condition is not reached do
  Corrode to obtain using Algorithm 3 with 1 iterative cycle;
  Expand to obtain using Algorithm 4 with 1 iterative cycle;
end while
Set ;
Ensure: Outputted binary image .

|
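The alternating scheme of Algorithm 6 can be sketched as one corroding cycle followed by one expanding cycle, repeated until the image stops changing or a cycle limit is hit. The thresholds `k_corrode` and `k_expand` are assumed to be neighbour counts, and their values, the function names, and the toy image are illustrative only.

```python
import numpy as np

def count_white_neighbors(binary):
    """Count white pixels among the 8 neighbours; outside pixels count as 0."""
    padded = np.pad(binary, 1, mode="constant", constant_values=0)
    height, width = binary.shape
    counts = np.zeros((height, width), dtype=np.int32)
    for dr in (0, 1, 2):
        for dc in (0, 1, 2):
            if (dr, dc) != (1, 1):
                counts += padded[dr:dr + height, dc:dc + width]
    return counts

def corrode_once(binary, k):
    """One corroding cycle: keep white pixels with more than k white neighbours."""
    return ((binary == 1) & (count_white_neighbors(binary) > k)).astype(np.uint8)

def expand_once(binary, k):
    """One expanding cycle: whiten the neighbours of every pixel that has
    more than k white neighbours."""
    qualifying = (count_white_neighbors(binary) > k).astype(np.uint8)
    touched = count_white_neighbors(qualifying) > 0
    return ((binary == 1) | touched).astype(np.uint8)

def post_process(binary, k_corrode, k_expand, max_cycles):
    """Alternate corroding and expanding until convergence or max_cycles."""
    current = np.asarray(binary, dtype=np.uint8)
    for _ in range(max_cycles):
        updated = expand_once(corrode_once(current, k_corrode), k_expand)
        if np.array_equal(updated, current):
            break
        current = updated
    return current

# Toy damage map: a 5 x 5 region with a missing centre pixel (a "stubble")
# and one misidentified isolated pixel in the corner.
damage = np.zeros((9, 9), dtype=np.uint8)
damage[2:7, 2:7] = 1
damage[4, 4] = 0
damage[0, 8] = 1
cleaned = post_process(damage, k_corrode=2, k_expand=5, max_cycles=10)
print(cleaned)
```

In this example the first cycle removes the isolated pixel and fills the hole, and the second cycle leaves the image unchanged, so the loop stops on the convergence condition rather than the cycle limit.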