How Deeply to Fine-Tune a Convolutional Neural Network: A Case Study Using a Histopathology Dataset

Abstract

1. Introduction

2. Literature Review

3. Methodology

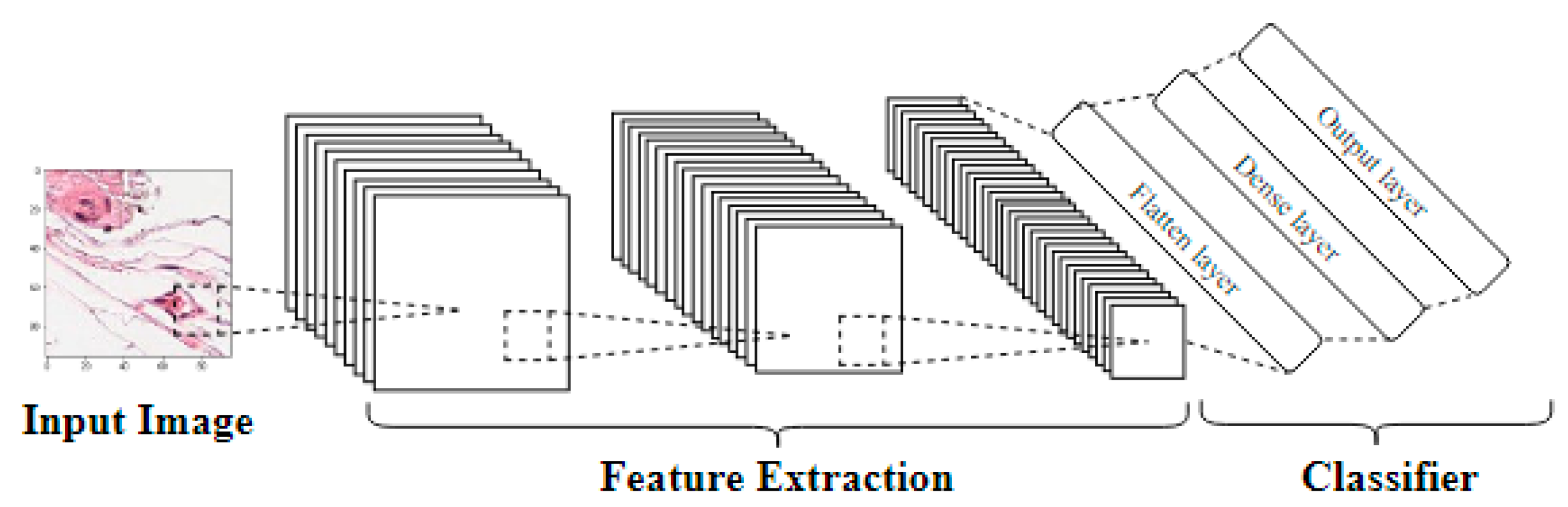

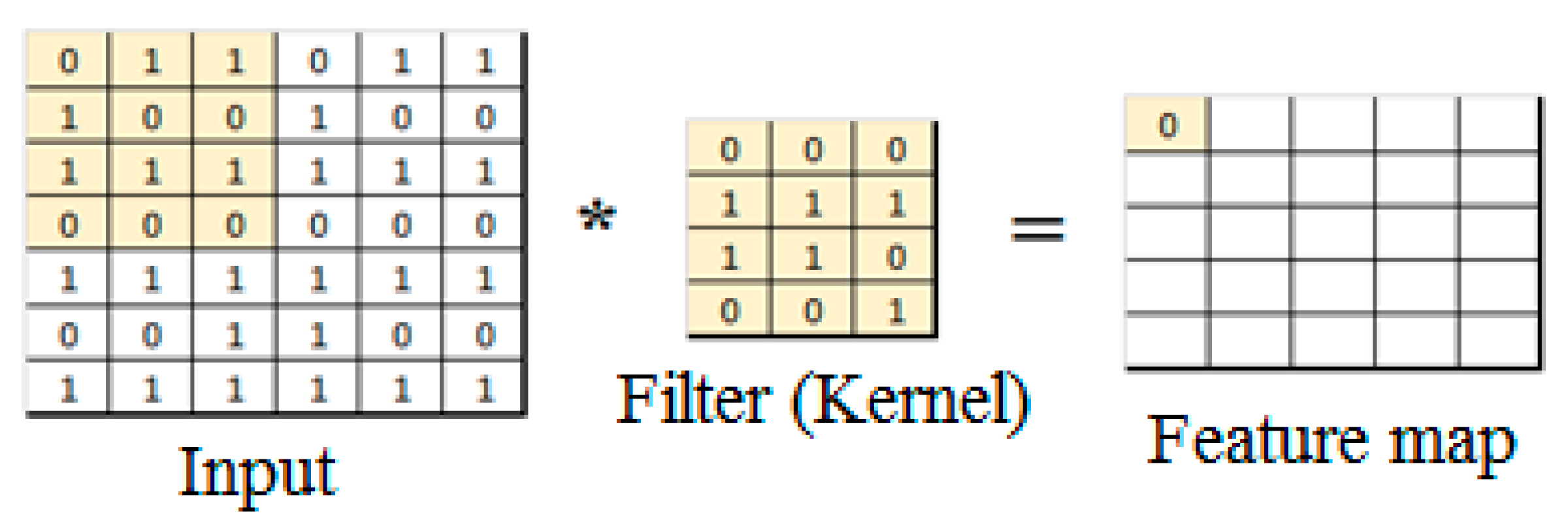

3.1. Convolutional Neural Networks

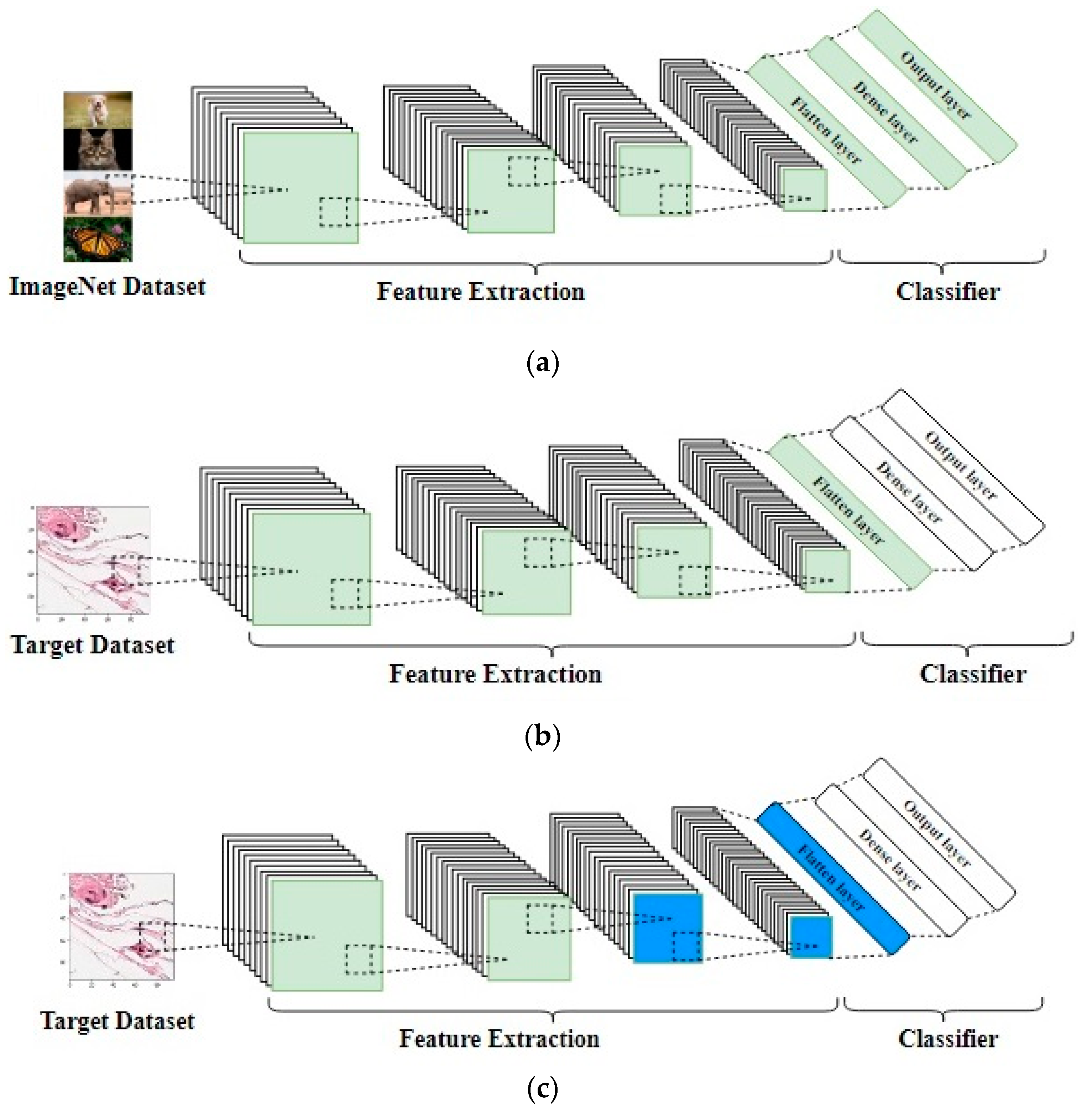

3.2. Transfer Learning

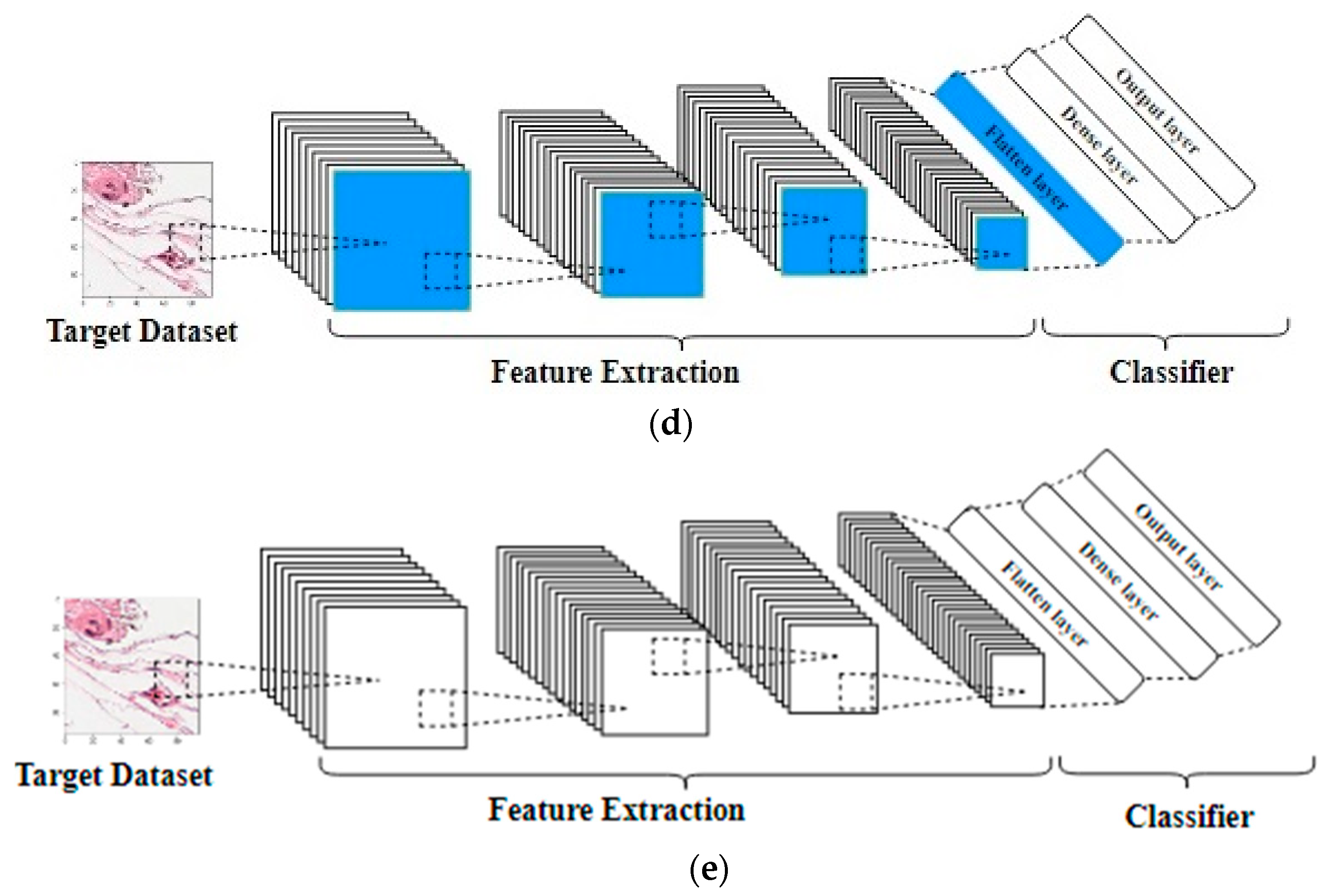

- The first technique is to freeze the source CNN’s weights (for example, weights learned on ImageNet), remove the original fully connected layers, and add a new classifier, either a new fully connected layer or a conventional machine learning classifier such as a support vector machine (SVM). In other words, the pretrained network is used as a fixed feature extractor.

- The second technique is to fine-tune the top layers of the source CNN with a very small learning rate while keeping the bottom layers frozen, under the assumption that the bottom layers learn generic features that can be reused for any kind of image dataset [16].

- The third technique is to replace the last fully connected layers with a new layer that suits the target dataset and then fine-tune the entire network’s weights, using a very small learning rate to avoid losing the source weights.

- The fourth technique is to use the CNN’s original architecture without importing any weights, that is, to initialize the weights from scratch. The point of this technique is to use a well-known architecture that has been tested on challenging datasets and proven to perform well. The different transfer learning techniques are shown in Figure 4.
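The four techniques above differ only in two choices per block: whether its weights start from the source network, and whether they are updated on the target dataset. The following is a minimal, framework-free sketch of that bookkeeping, not the authors' implementation. The block names, the technique labels, and the `first_tuned` parameter are assumptions introduced for illustration, and the sketch assumes that fine-tuning from a given block means unfreezing that block and every block above it.

```python
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical block names for a VGG16-style network (five convolutional blocks).
VGG16_BLOCKS = ["block1", "block2", "block3", "block4", "block5"]


@dataclass
class BlockPlan:
    name: str
    pretrained: bool  # True: start from source (e.g. ImageNet) weights
    trainable: bool   # True: weights are updated on the target dataset


def transfer_plan(blocks: List[str], technique: str,
                  first_tuned: Optional[str] = None) -> List[BlockPlan]:
    """Return a per-block plan for one of the four techniques.

    technique: "feature_extraction", "fine_tune_top", "fine_tune_all",
               or "from_scratch" (labels chosen for this sketch).
    first_tuned: for "fine_tune_top", the lowest block that is unfrozen;
                 it and every block above it are trained.
    """
    if technique == "feature_extraction":
        flags = [(True, False)] * len(blocks)
    elif technique == "fine_tune_top":
        if first_tuned not in blocks:
            raise ValueError("first_tuned must name one of the blocks")
        cut = blocks.index(first_tuned)
        flags = [(True, i >= cut) for i in range(len(blocks))]
    elif technique == "fine_tune_all":
        flags = [(True, True)] * len(blocks)
    elif technique == "from_scratch":
        flags = [(False, True)] * len(blocks)
    else:
        raise ValueError(f"unknown technique: {technique}")

    plan = [BlockPlan(name, p, t) for name, (p, t) in zip(blocks, flags)]
    # The replacement classifier is new in every case, so it is always trained.
    plan.append(BlockPlan("new_classifier", False, True))
    return plan


def frozen_blocks(plan: List[BlockPlan]) -> List[str]:
    """Names of the blocks whose weights stay fixed during training."""
    return [b.name for b in plan if not b.trainable]


plan = transfer_plan(VGG16_BLOCKS, "fine_tune_top", first_tuned="block4")
print(frozen_blocks(plan))  # ['block1', 'block2', 'block3']
```

In a deep learning framework, the `trainable` flag would typically map onto the per-layer freezing switch (for example, setting `layer.trainable = False` in Keras), and the small learning rate mentioned for the second and third techniques would be set on the optimizer.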

3.3. CNN Architectures

3.3.1. VGG Architectures

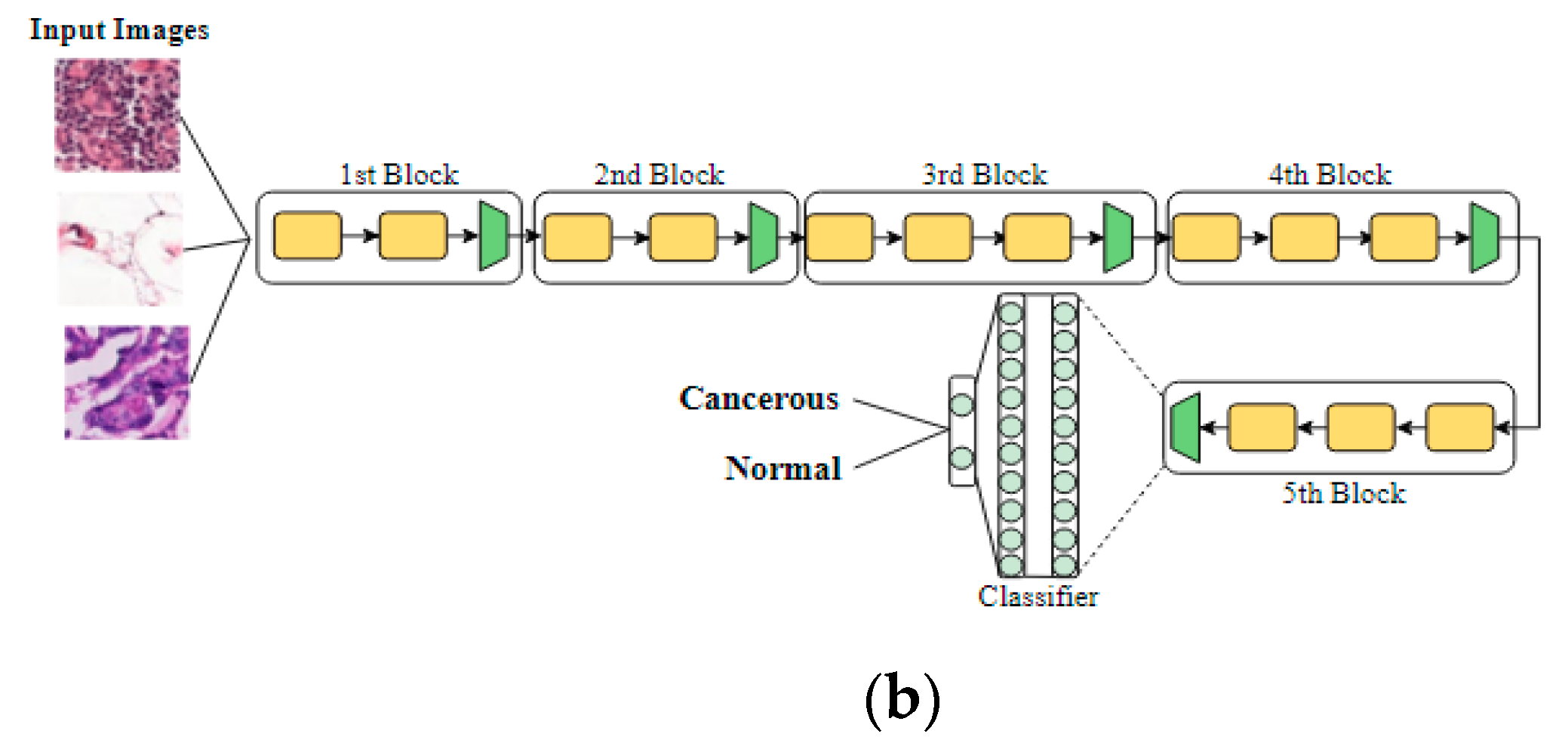

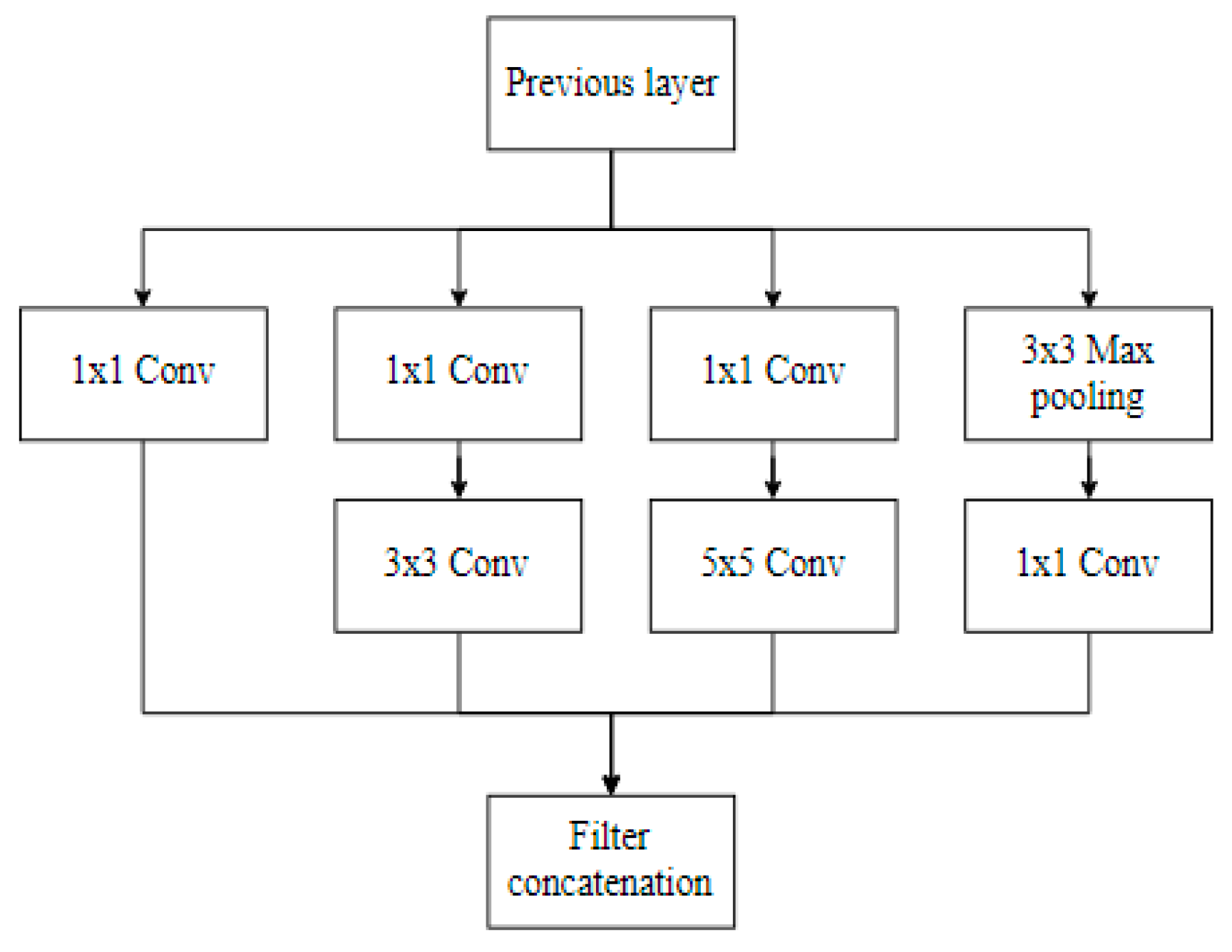

3.3.2. InceptionV3 Architecture

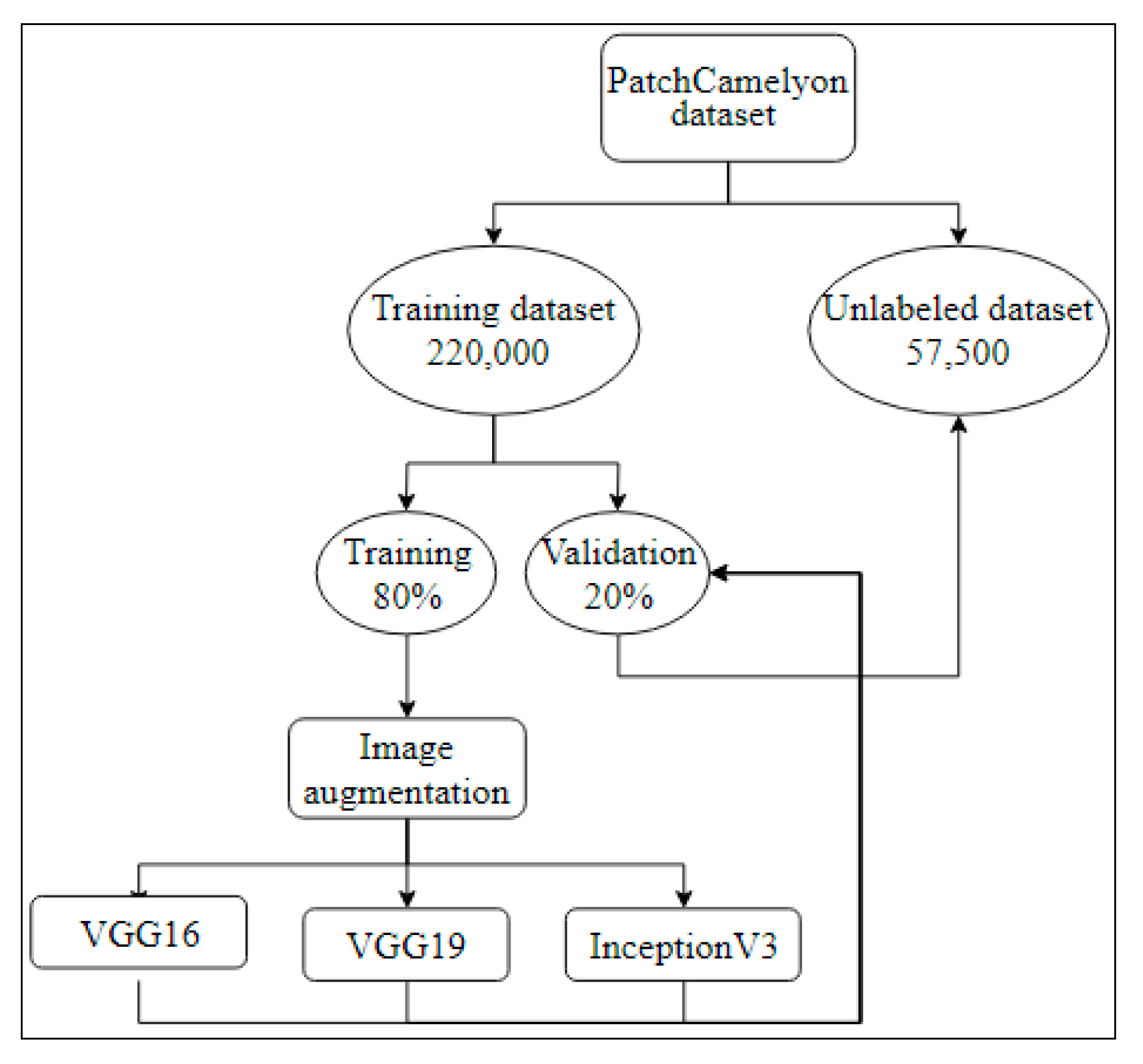

3.4. Datasets Used

3.4.1. ImageNet Dataset

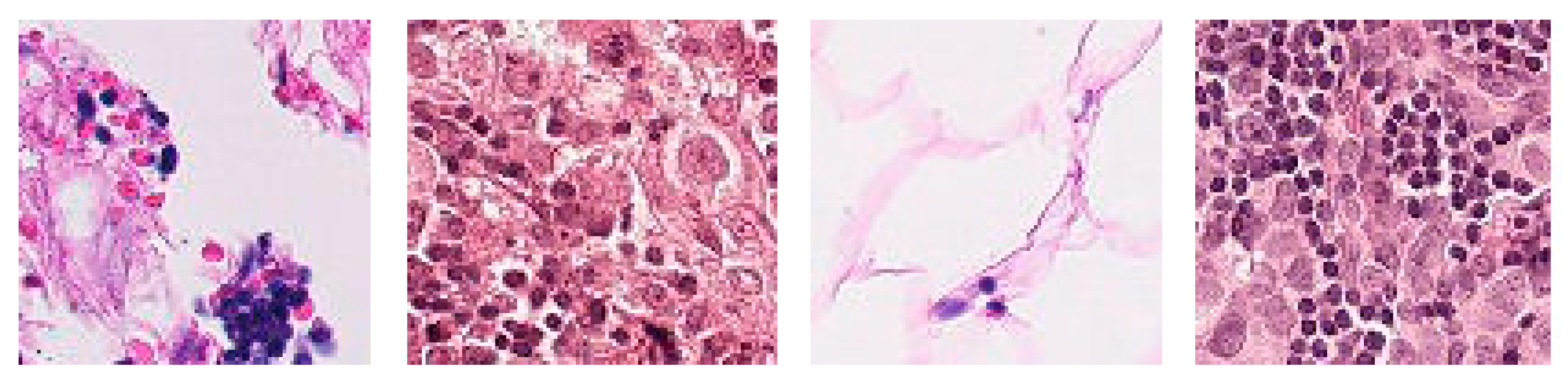

3.4.2. PatchCamelyon Histopathology Dataset

3.5. Performance Measures

3.6. Measures to Avoid Overfitting

3.6.1. Early Stopping

3.6.2. Best Model Saved

3.6.3. Dropout

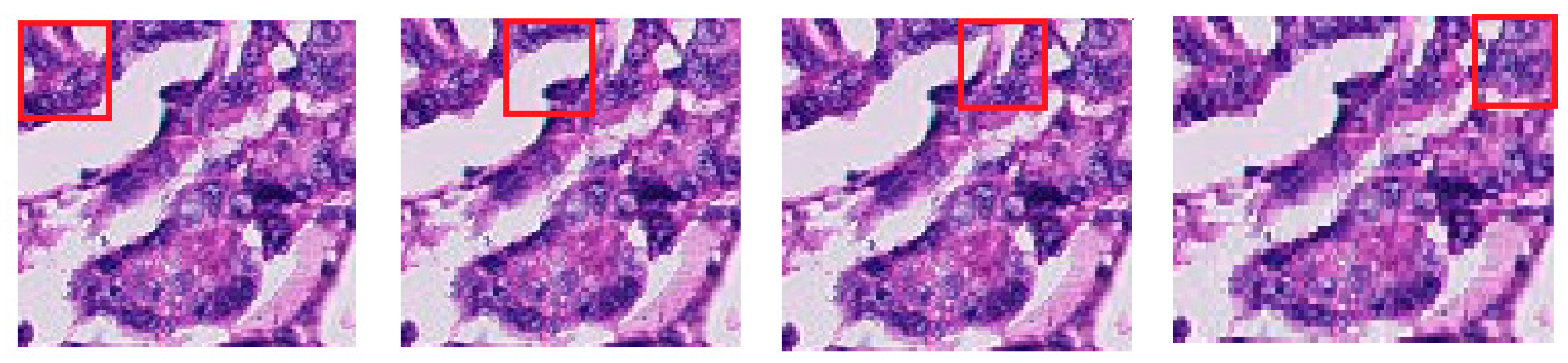

3.6.4. Image Augmentation

4. Results

4.1. Experiment Parameters

4.2. Experiment Results

4.3. Experiment Results on a Different Histopathology Dataset

5. Discussion

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Gurcan, M.N.; Boucheron, L.E.; Can, A.; Madabhushi, A.; Rajpoot, N.; Yener, B. Histopathological Image Analysis: A Review. IEEE Rev. Biomed. Eng. 2009, 2, 147–171.

- Metter, D.M.; Colgan, T.J.; Leung, S.T.; Timmons, C.F.; Park, J.Y. Trends in the US and Canadian Pathologist Workforces from 2007 to 2017. JAMA Netw. Open 2019, 2, e194337.

- LeCun, Y.; Boser, B.; Denker, J.S.; Henderson, D.; Howard, R.E.; Hubbard, W.; Jackel, L.D. Backpropagation Applied to Handwritten Zip Code Recognition. Neural Comput. 1989, 1, 541–551.

- Mohammadian, S.; Karsaz, A.; Roshan, Y.M. Comparative Study of Fine-Tuning of Pre-Trained Convolutional Neural Networks for Diabetic Retinopathy Screening. In Proceedings of the 2017 24th National and 2nd International Iranian Conference on Biomedical Engineering (ICBME), Tehran, Iran, 30 November–1 December 2017; pp. 1–6.

- Prentašić, P.; Lončarić, S. Detection of exudates in fundus photographs using convolutional neural networks. In Proceedings of the 2015 9th International Symposium on Image and Signal Processing and Analysis (ISPA), Zagreb, Croatia, 7–9 September 2015; pp. 188–192.

- Khan, N.; Abraham, N.; Hon, M. Transfer learning with intelligent training data selection for prediction of Alzheimer’s Disease. IEEE Access 2019, 7, 72726–72735.

- Farooq, A.; Anwar, S.M.; Awais, M.; Rehman, S. A deep CNN based multi-class classification of Alzheimer’s disease using MRI. In Proceedings of the 2017 IEEE International Conference on Imaging Systems and Techniques (IST), Beijing, China, 18–20 October 2017; pp. 1–6.

- Hosny, K.M.; Kassem, M.; Foaud, M.M. Classification of skin lesions using transfer learning and augmentation with Alex-net. PLoS ONE 2019, 14, e0217293.

- Harangi, B. Skin lesion classification with ensembles of deep convolutional neural networks. J. Biomed. Inform. 2018, 86, 25–32.

- Fukushima, K. Neocognitron: A self-organizing neural network model for a mechanism of pattern recognition unaffected by shift in position. Biol. Cybern. 1980, 36, 193–202.

- Hubel, D.H.; Wiesel, T.N. Ferrier lecture. Functional architecture of macaque monkey visual cortex. Proc. R. Soc. Lond. Ser. B 1977, 198, 1–59.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. Neural Inf. Process. Syst. 2012, 25.

- Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; et al. ImageNet Large Scale Visual Recognition Challenge. Int. J. Comput. Vis. 2015, 115, 211–252.

- Tajbakhsh, N.; Shin, J.Y.; Gurudu, S.R.; Hurst, R.T.; Kendall, C.B.; Gotway, M.B.; Liang, J. Convolutional Neural Networks for Medical Image Analysis: Full Training or Fine Tuning? IEEE Trans. Med. Imaging 2016, 35, 1299–1312.

- Chollet, F. Deep Learning with Python, 1st ed.; Manning Publications Co.: Greenwich, CT, USA, 2017.

- Yosinski, J.; Clune, J.; Bengio, Y.; Lipson, H. How transferable are features in deep neural networks? In Proceedings of the Advances in Neural Information Processing Systems 27, Montreal, QC, Canada, 8–13 December 2014; pp. 3320–3328.

- Sharma, S.; Mehra, D.R. Breast cancer histology images classification: Training from scratch or transfer learning? ICT Express 2018, 4, 247–254.

- Spanhol, F.; Oliveira, L.S.; Petitjean, C.; Heutte, L. A Dataset for Breast Cancer Histopathological Image Classification. IEEE Trans. Biomed. Eng. 2015, 63, 1455–1462.

- Kassani, S.H.; Kassani, P.H.; Wesolowski, M.J.; Schneider, K.A.; Deters, R. Classification of Histopathological Biopsy Images Using Ensemble of Deep Learning Networks. arXiv 2019, arXiv:1909.11870.

- Veeling, B.S.; Linmans, J.; Winkens, J.; Cohen, T.; Welling, M. Rotation Equivariant CNNs for Digital Pathology. In Medical Image Computing and Computer Assisted Intervention—MICCAI 2018; Springer: Berlin/Heidelberg, Germany, 2018; pp. 210–218.

- Bejnordi, B.E.; Veta, M.; Van Diest, P.J.; Van Ginneken, B.; Karssemeijer, N.; Litjens, G.; Van Der Laak, J.A.W.M. Diagnostic Assessment of Deep Learning Algorithms for Detection of Lymph Node Metastases in Women with Breast Cancer. JAMA 2017, 318, 2199–2210.

- Aresta, G.; Araújo, T.; Kwok, S.; Chennamsetty, S.S.; Safwan, M.; Alex, V.; Marami, B.; Prastawa, M.; Chan, M.; Donovan, M.; et al. BACH: Grand challenge on breast cancer histology images. Med. Image Anal. 2019, 56, 122–139.

- BioImaging Dataset. 2015. Available online: http://www.bioimaging2015.ineb.up.pt/dataset.html (accessed on 1 December 2019).

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2014, arXiv:1409.1556.

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv 2017, arXiv:1704.04861.

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely Connected Convolutional Networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2261–2269.

- Vesal, S.; Ravikumar, N.; Davari, A.; Ellmann, S.; Maier, A. Classification of Breast Cancer Histology Images Using Transfer Learning; Image Analysis and Recognition; Springer Nature: Berlin, Germany, 2018.

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the Inception Architecture for Computer Vision. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 2818–2826.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Deniz, E.; Sengur, A.; Kadiroğlu, Z.; Guo, Y.; Bajaj, V.; Budak, Ü. Transfer learning based histopathologic image classification for breast cancer detection. Health Inf. Sci. Syst. 2018, 6, 18.

- Ahmad, H.M.; Ghuffar, S.; Khurshid, K. Classification of Breast Cancer Histology Images Using Transfer Learning. In Proceedings of the IEEE International Bhurban Conference on Applied Sciences and Technology (IBCAST), Islamabad, Pakistan, 8–12 January 2019; Volume 16.

- Deng, J.; Dong, W.; Socher, R.; Li, L.-J.; Li, K.; Li, F.-F. ImageNet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

- Pan, S.J.; Yang, Q. A Survey on Transfer Learning. IEEE Trans. Knowl. Data Eng. 2010, 22, 1345–1359.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- Gonçalves, L.; Subtil, A.; Oliveira, M.R.; Bermudez, P.d.Z. ROC curve estimation: An overview. Revstat Stat. J. 2014, 12, 1–20.

- Fawcett, T. An introduction to ROC analysis. Pattern Recognit. Lett. 2006, 27, 861–874.

- Srivastava, N.; Hinton, G.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R. Dropout: A Simple Way to Prevent Neural Networks from Overfitting. J. Mach. Learn. Res. 2014, 15, 1929–1958.

- Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; The MIT Press: Cambridge, MA, USA, 2016.

- Bengio, Y. Practical recommendations for gradient-based training of deep architectures. In Neural Networks: Tricks of the Trade; Springer: Berlin/Heidelberg, Germany, 2012; Volume 7700, pp. 437–478.

- Breiman, L. Bagging Predictors. Mach. Learn. 1996, 24, 123–140.

- Kingma, D.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv 2014, arXiv:1412.6980.

| Paper | Dataset Name | Dataset Size | Architectures Used | Classes | Best Accuracy |
|---|---|---|---|---|---|
| Sharma et al. [17] | BreakHis [18] | 7909 | VGG16, VGG19, ResNet50 | Binary | 92.6% |
| Ahmad et al. [31] | BioImaging [23] | 260 | AlexNet, GoogLeNet, ResNet50 | Multiclass | 85% |
| Deniz et al. [30] | BreakHis [18] | 7909 | AlexNet, VGG16 | Binary | 91.37% |
| Vesal et al. [27] | BACH [22] | 400 | InceptionV3, ResNet50 | Multiclass | 97.50% |
| Kassani et al. [19] | PatchCamelyon [21]; BreakHis [18]; BACH [22]; BioImaging [23] | 327,680; 7909; 400; 249 | Ensemble of: VGG19, DenseNet, ImageNet | Binary | 94.64%; 98.13%; 95%; 83.10% |

VGG16 Test AUC Results

| Blocks | | | |
|---|---|---|---|
| Fine-Tuning 5th Block | 0.9303 | 0.9260 | 0.9212 |
| Fine-Tuning 4th Block | 0.9398 | 0.9480 | 0.9382 |
| Fine-Tuning 3rd Block | 0.9364 | 0.9603 | 0.9475 |
| Fine-Tuning 2nd Block | 0.8893 | 0.9350 | 0.9384 |
| Fine-Tuning ALL | 0.9383 | 0.9310 | 0.9404 |

VGG19 Test AUC Results

| Blocks | | | |
|---|---|---|---|
| Fine-Tuning 5th Block | 0.9082 | 0.9028 | 0.9058 |
| Fine-Tuning 4th Block | 0.9268 | 0.9266 | 0.9235 |
| Fine-Tuning 3rd Block | 0.9087 | 0.9514 | 0.9440 |
| Fine-Tuning 2nd Block | 0.8377 | 0.9480 | 0.9254 |
| Fine-Tuning ALL | 0.8669 | 0.9427 | 0.9342 |

InceptionV3 Test AUC Results

| Blocks | | | |
|---|---|---|---|
| Fine-Tuning 13th Block | 0.8250 | 0.8220 | 0.8221 |
| Fine-Tuning 12th Block | 0.8514 | 0.8466 | 0.8446 |
| Fine-Tuning 11th Block | 0.8702 | 0.8446 | 0.8274 |
| Fine-Tuning 10th Block | 0.8648 | 0.8675 | 0.8429 |
| Fine-Tuning 9th Block | 0.8526 | 0.8816 | 0.8538 |
| Fine-Tuning 8th Block | 0.8574 | 0.8637 | 0.8469 |
| Fine-Tuning 7th Block | 0.8673 | 0.8429 | 0.8907 |
| Fine-Tuning 6th Block | 0.8923 | 0.8468 | 0.8950 |
| Fine-Tuning 5th Block | 0.8680 | 0.8730 | 0.8883 |
| Fine-Tuning 4th Block | 0.8483 | 0.8335 | 0.8686 |
| Fine-Tuning 3rd Block | 0.7715 | 0.8575 | 0.8641 |
| Fine-Tuning 2nd Block | 0.7175 | 0.8636 | 0.8785 |
| Fine-Tuning ALL | 0.8071 | 0.9058 | 0.9280 |

Training from Scratch AUC Results

| Networks | | | |
|---|---|---|---|
| VGG16 | 50% | 90.55% | 85.91% |
| VGG19 | 50% | 84.77% | 85.81% |
| InceptionV3 | 84.83% | 87.93% | 81.97% |

VGG16 Test AUC Results

| Blocks | | | |
|---|---|---|---|
| Fine-Tuning 5th Block | 89.51% | 91.76% | 89.73% |
| Fine-Tuning 4th Block | 88.50% | 93.58% | 94.03% |
| Fine-Tuning 3rd Block | 86.21% | 95.39% | 95.76% |
| Fine-Tuning 2nd Block | 86.79% | 94.90% | 93.91% |
| Fine-Tuning ALL | 85.04% | 92.47% | 93.04% |

VGG19 Test AUC Results

| Blocks | | | |
|---|---|---|---|
| Fine-Tuning 5th Block | 89.14% | 90.11% | 88.68% |
| Fine-Tuning 4th Block | 87.41% | 91.67% | 93.80% |
| Fine-Tuning 3rd Block | 88.72% | 96.79% | 94.46% |
| Fine-Tuning 2nd Block | 50.00% | 95.46% | 94.39% |
| Fine-Tuning ALL | 87.29% | 95.26% | 94.30% |

InceptionV3 Test AUC Results

| Blocks | | | |
|---|---|---|---|
| Fine-Tuning 13th Block | 55.09% | 55.80% | 55.26% |
| Fine-Tuning 12th Block | 61.61% | 57.78% | 53.45% |
| Fine-Tuning 11th Block | 56.92% | 56.74% | 62.26% |
| Fine-Tuning 10th Block | 61.58% | 54.85% | 53.54% |
| Fine-Tuning 9th Block | 59.33% | 55.35% | 51.61% |
| Fine-Tuning 8th Block | 51.26% | 50.79% | 50.63% |
| Fine-Tuning 7th Block | 58.38% | 51.62% | 50.41% |
| Fine-Tuning 6th Block | 56.63% | 50.90% | 53.38% |
| Fine-Tuning 5th Block | 56.87% | 50.52% | 50.64% |
| Fine-Tuning 4th Block | 57.46% | 54.39% | 50.57% |
| Fine-Tuning 3rd Block | 52.00% | 50.00% | 50.00% |
| Fine-Tuning 2nd Block | 50.00% | 50.00% | 52.00% |
| Fine-Tuning ALL | 85.69% | 94.72% | 94.41% |

Training from Scratch AUC Results

| Networks | | | |
|---|---|---|---|
| VGG16 | 50% | 90.45% | 86.51% |
| VGG19 | 50% | 92.02% | 85.65% |
| InceptionV3 | 88.32% | 92.65% | 81.64% |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Kandel, I.; Castelli, M. How Deeply to Fine-Tune a Convolutional Neural Network: A Case Study Using a Histopathology Dataset. Appl. Sci. 2020, 10, 3359. https://doi.org/10.3390/app10103359