Automatic Pancreas Segmentation Using Coarse-Scaled 2D Model of Deep Learning: Usefulness of Data Augmentation and Deep U-Net

Abstract

1. Introduction

2. Materials and Methods

2.1. Dataset

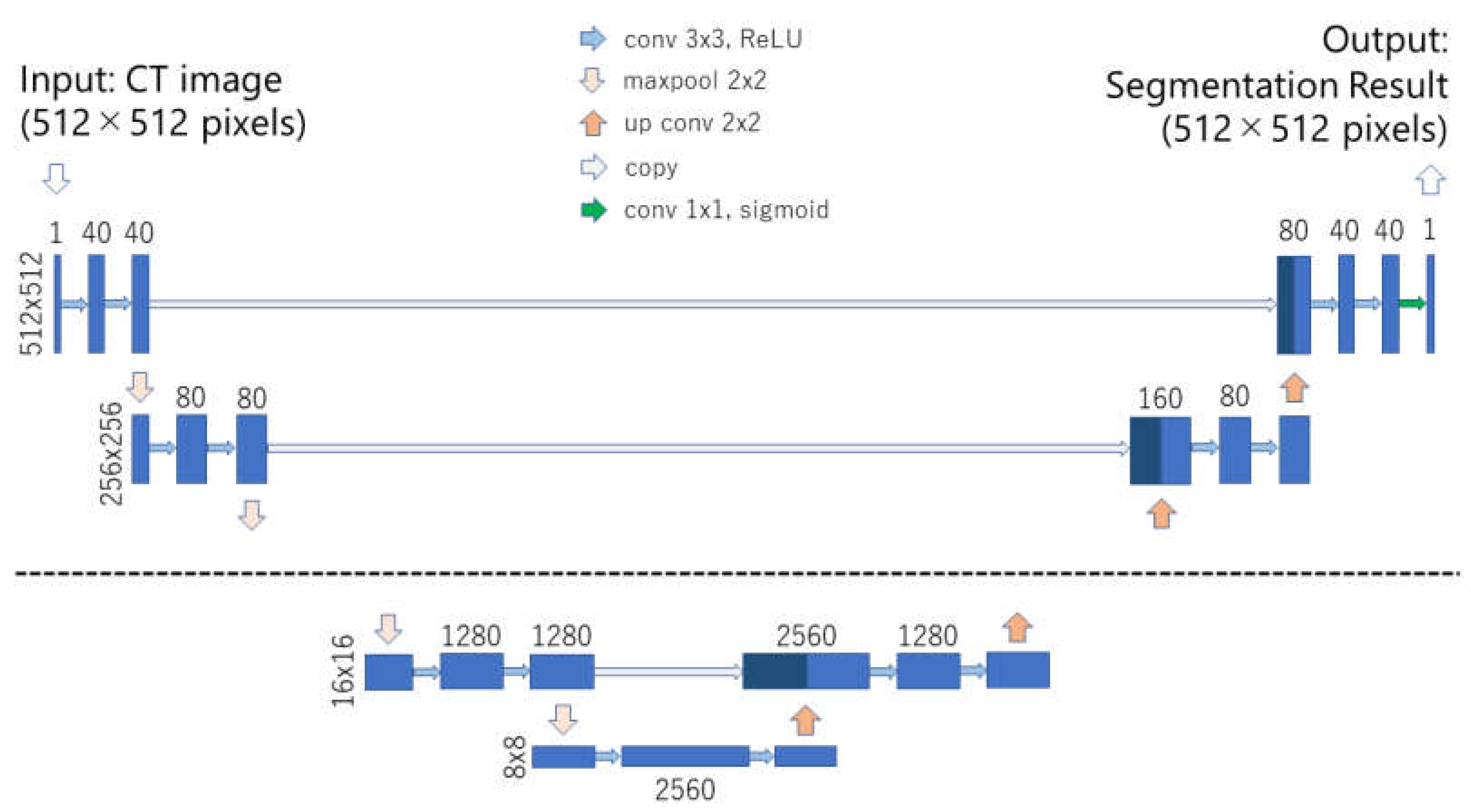

2.2. Deep Learning Model

2.3. Data Augmentation

2.4. Training

- Baseline U-net + no data augmentation,

- Baseline U-net + conventional method,

- Baseline U-net + mixup,

- Baseline U-net + RICAP,

- Baseline U-net + RICAP + mixup,

- Deep U-net + no data augmentation,

- Deep U-net + conventional method,

- Deep U-net + mixup,

- Deep U-net + RICAP,

- Deep U-net + RICAP + mixup.
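The ten combinations listed above are the Cartesian product of two network variants and five augmentation settings. The following is a minimal sketch of enumerating them. The 1-to-10 numbering is an assumption that simply mirrors the order of the list, and the names `MODELS`, `AUGMENTATIONS`, `configs`, and `pairs` are illustrative only.

```python
from itertools import combinations, product

# Network variants and augmentation settings, in the order of the list above.
MODELS = ["Baseline U-net", "Deep U-net"]
AUGMENTATIONS = [
    "no data augmentation",
    "conventional method",
    "mixup",
    "RICAP",
    "RICAP + mixup",
]

# Cartesian product: 2 models x 5 augmentation settings = 10 configurations.
# Numbering from 1 in list order is an assumption made for illustration.
configs = {
    index: f"{model} + {augmentation}"
    for index, (model, augmentation) in enumerate(
        product(MODELS, AUGMENTATIONS), start=1
    )
}

# Every unordered pair of configurations, e.g. for pairwise comparison of DSC.
pairs = list(combinations(configs, 2))

for index, name in configs.items():
    print(index, name)
print(len(pairs), "pairwise comparisons")
```

Ten configurations give 45 unordered pairs, which matches the number of rows in the pairwise comparison table in Appendix B.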

2.5. Evaluation of Pancreas Segmentation

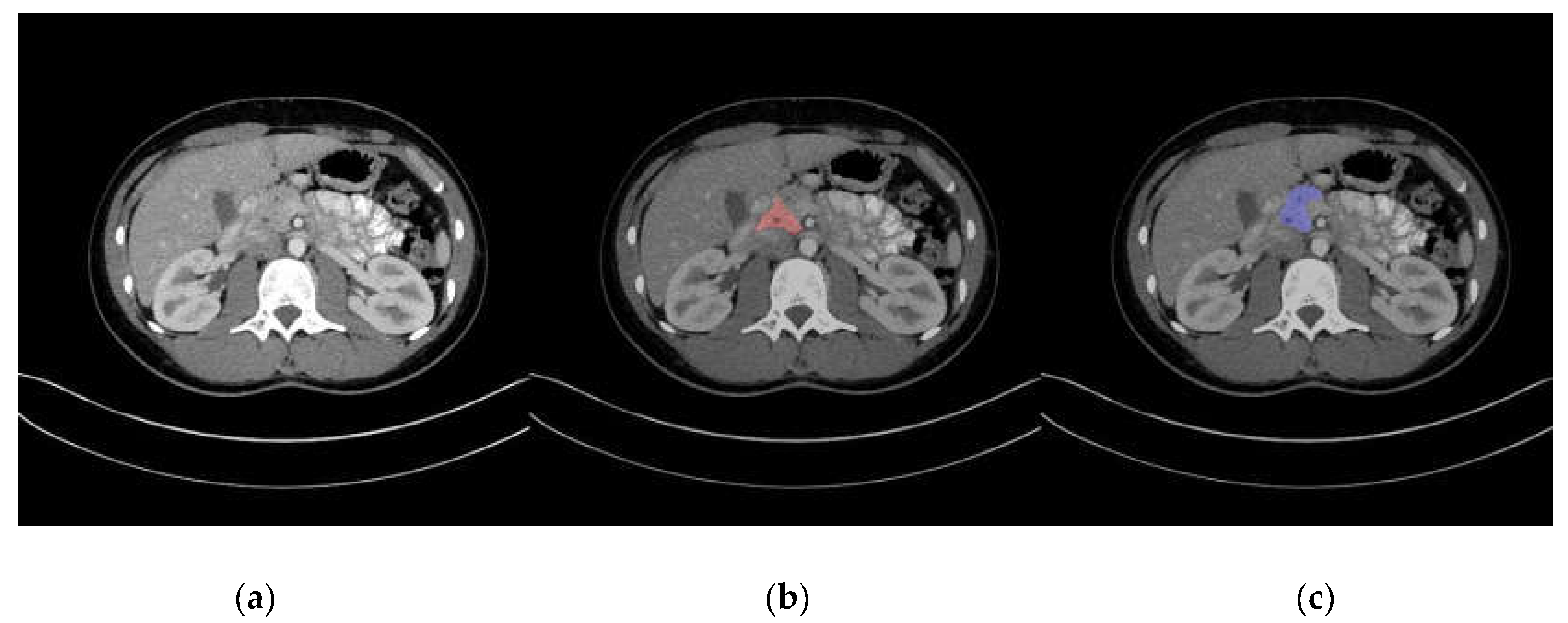

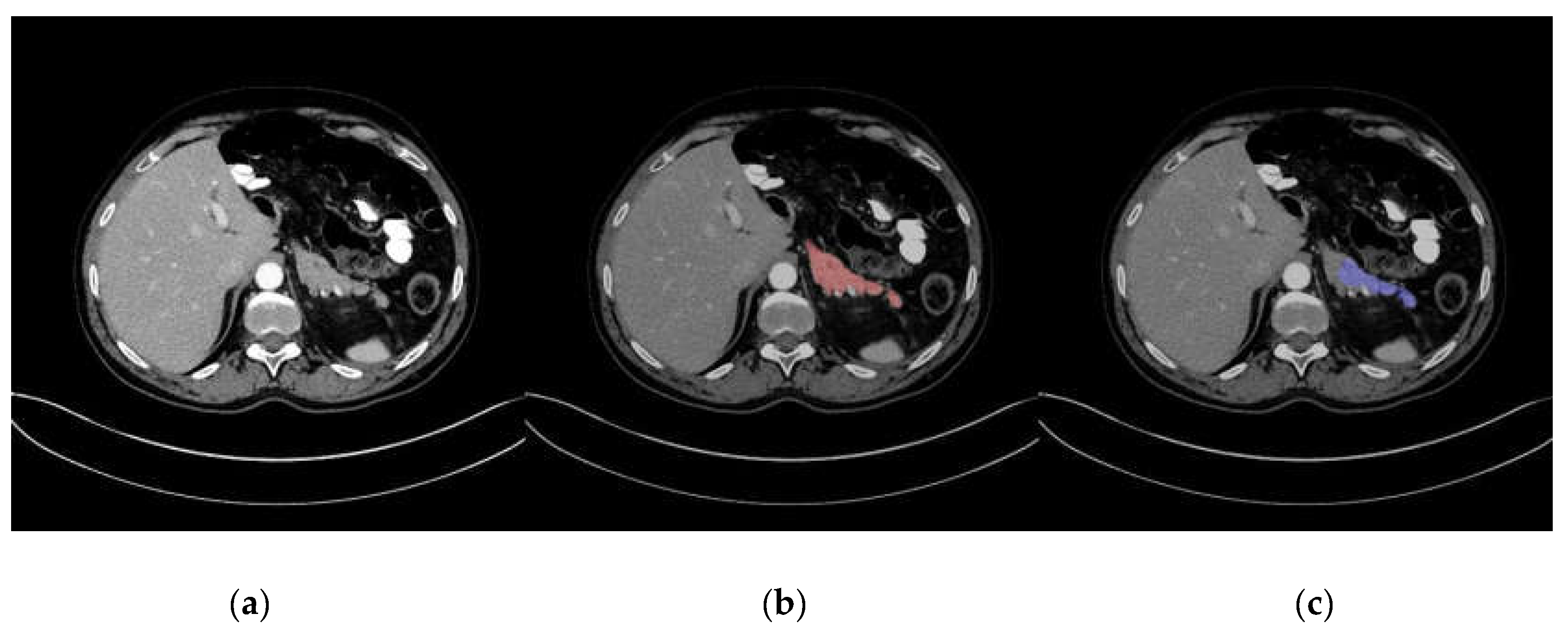
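The results tables report DSC, JI, SE, and SP. Assuming these follow the standard definitions of the Dice similarity coefficient, Jaccard index, sensitivity, and specificity for binary masks (the equations themselves are not reproduced in the text here), a minimal sketch of the computation could look like this. The function name `segmentation_metrics` and the flat-list mask representation are hypothetical choices for illustration.

```python
import math


def _ratio(numerator, denominator):
    """Divide safely; return NaN when the metric is undefined."""
    return numerator / denominator if denominator else math.nan


def segmentation_metrics(pred, label):
    """Return (DSC, JI, SE, SP) for two equally sized flat binary masks.

    pred  : predicted mask, iterable of 0/1 values (one per pixel or voxel)
    label : manually annotated mask in the same order
    """
    pred, label = list(pred), list(label)
    if len(pred) != len(label):
        raise ValueError("masks must have the same number of elements")

    # Confusion-matrix counts over all pixels/voxels.
    tp = sum(1 for p, l in zip(pred, label) if p and l)
    fp = sum(1 for p, l in zip(pred, label) if p and not l)
    fn = sum(1 for p, l in zip(pred, label) if not p and l)
    tn = sum(1 for p, l in zip(pred, label) if not p and not l)

    dsc = _ratio(2 * tp, 2 * tp + fp + fn)  # Dice similarity coefficient
    ji = _ratio(tp, tp + fp + fn)           # Jaccard index
    se = _ratio(tp, tp + fn)                # sensitivity
    sp = _ratio(tn, tn + fp)                # specificity
    return dsc, ji, se, sp


# Toy example: 8 elements, one true positive, one false positive, one false negative.
dsc, ji, se, sp = segmentation_metrics(
    [1, 1, 0, 0, 0, 0, 0, 0],
    [1, 0, 1, 0, 0, 0, 0, 0],
)
print(dsc, ji, se, sp)
```

For a single case the two overlap measures are linked by JI = DSC / (2 − DSC). Specificity counts every background element as a true negative, so when the target organ fills only a small part of the volume it tends to sit very close to 1, which is one plausible reading of the uniform 1.000 ± 0.000 values in the SP column.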

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

Appendix A

| Name of Model | 2D/3D | Coarse/Fine | Mean DSC | Data Splitting |
|---|---|---|---|---|
| Holistically Nested 2D FCN Stage-2 [11] | 2D | fine | 0.811 ± 0.073 | 4-fold CV |
| 2D FCN + Recurrent Network [13] | 2D | fine | 0.824 ± 0.067 | 4-fold CV |
| Fine-scaled Model 2D FCN [14] | 2D | fine | 0.824 ± 0.057 | 4-fold CV |
| Fine-scaled Model 3D U-net [15] | 3D | fine | 0.860 ± 0.045 | 4-fold CV |

Appendix B

| Target 1 | Target 2 | p-Value | Statistical Significance for DSC Difference |
|---|---|---|---|
| 1 | 2 | 0.727381623 | No |
| 1 | 3 | 0.560489877 | No |
| 1 | 4 | 0.921405534 | No |
| 1 | 5 | 0.037061458 | No |
| 1 | 6 | 0.727381623 | No |
| 1 | 7 | 0.148802462 | No |
| 1 | 8 | 0.553863735 | No |
| 1 | 9 | 0.012907274 | No |
| 1 | 10 | 5.45 × 10⁻⁵ | Yes |
| 2 | 3 | 0.85904175 | No |
| 2 | 4 | 0.87456599 | No |
| 2 | 5 | 0.080182031 | No |
| 2 | 6 | 0.958034301 | No |
| 2 | 7 | 0.211395881 | No |
| 2 | 8 | 0.856459499 | No |
| 2 | 9 | 0.029961825 | No |
| 2 | 10 | 0.000143632 | Yes |
| 3 | 4 | 0.422285602 | No |
| 3 | 5 | 0.057745373 | No |
| 3 | 6 | 0.668985055 | No |
| 3 | 7 | 0.331951771 | No |
| 3 | 8 | 0.85904175 | No |
| 3 | 9 | 0.033624033 | No |
| 3 | 10 | 3.72 × 10⁻⁵ | Yes |
| 4 | 5 | 0.047352438 | No |
| 4 | 6 | 0.764727204 | No |
| 4 | 7 | 0.157310432 | No |
| 4 | 8 | 0.529901132 | No |
| 4 | 9 | 0.024270868 | No |
| 4 | 10 | 0.000120757 | Yes |
| 5 | 6 | 0.067465313 | No |
| 5 | 7 | 0.649935631 | No |
| 5 | 8 | 0.067465313 | No |
| 5 | 9 | 0.580595554 | No |
| 5 | 10 | 0.031228349 | No |
| 6 | 7 | 0.227439002 | No |
| 6 | 8 | 0.784877257 | No |
| 6 | 9 | 0.028739708 | No |
| 6 | 10 | 9.60 × 10⁻⁵ | Yes |
| 7 | 8 | 0.292611693 | No |
| 7 | 9 | 0.355409719 | No |
| 7 | 10 | 0.017108607 | No |
| 8 | 9 | 0.040470933 | No |
| 8 | 10 | 5.23 × 10⁻⁵ | Yes |
| 9 | 10 | 0.185045722 | No |
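The Yes/No column in the table above cannot come from an uncorrected 0.05 cutoff, since p-values such as 0.037 are marked No. The pattern is consistent with a correction for multiple comparisons. The sketch below assumes a Bonferroni correction over the 45 pairwise tests (threshold 0.05 / 45 ≈ 0.0011). This is an assumption made for illustration and is not confirmed by the text here; any threshold between roughly 0.00015 and 0.0129 would reproduce the same column.

```python
# Assumed family-wise error rate and number of pairwise tests (45 table rows).
ALPHA = 0.05
N_COMPARISONS = 45

# Bonferroni-corrected per-test threshold (an assumption, see lead-in).
threshold = ALPHA / N_COMPARISONS

# A few (Target 1, Target 2) -> p-value entries copied from the table above.
p_values = {
    (1, 5): 0.037061458,
    (1, 9): 0.012907274,
    (1, 10): 5.45e-5,
    (2, 10): 0.000143632,
    (5, 10): 0.031228349,
    (9, 10): 0.185045722,
}

# A difference is flagged only if its p-value is below the corrected threshold.
significant = {pair: p < threshold for pair, p in p_values.items()}

for pair, flag in significant.items():
    print(pair, "Yes" if flag else "No")
```

Under this assumed threshold the six sampled rows reproduce the labels in the table: only the pairs (1, 10) and (2, 10) are flagged.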

References

1. Nakagomi, K.; Shimizu, A.; Kobatake, H.; Yakami, M.; Fujimoto, K.; Togashi, K. Multi-shape graph cuts with neighbor prior constraints and its application to lung segmentation from a chest CT volume. Med. Image Anal. 2013, 17, 62–77.

2. Seo, H.; Huang, C.; Bassenne, M.; Xiao, R.; Xing, L. Modified U-Net (mU-Net) with Incorporation of Object-Dependent High Level Features for Improved Liver and Liver-Tumor Segmentation in CT Images. IEEE Trans. Med. Imaging 2020, 39, 1316–1325.

3. Asaturyan, H.; Gligorievski, A.; Villarini, B. Morphological and multi-level geometrical descriptor analysis in CT and MRI volumes for automatic pancreas segmentation. Comput. Med. Imaging Graph. 2019, 75, 1–13.

4. Kurata, Y.; Nishio, M.; Kido, A.; Fujimoto, K.; Yakami, M.; Isoda, H.; Togashi, K. Automatic segmentation of the uterus on MRI using a convolutional neural network. Comput. Biol. Med. 2019, 114, 103438.

5. Hiasa, Y.; Otake, Y.; Takao, M.; Ogawa, T.; Sugano, N.; Sato, Y. Automated Muscle Segmentation from Clinical CT using Bayesian U-Net for Personalized Musculoskeletal Modeling. IEEE Trans. Med. Imaging 2020, 39, 1030–1040.

6. Siegel, R.L.; Miller, K.D.; Jemal, A. Cancer statistics, 2019. CA Cancer J. Clin. 2019, 69, 7–34.

7. Ardila, D.; Kiraly, A.P.; Bharadwaj, S.; Choi, B.; Reicher, J.J.; Peng, L.; Tse, D.; Etemadi, M.; Ye, W.; Corrado, G.; et al. End-to-end lung cancer screening with three-dimensional deep learning on low-dose chest computed tomography. Nat. Med. 2019, 25, 954–961.

8. National Lung Screening Trial Research Team; Aberle, D.R.; Adams, A.M.; Berg, C.D.; Black, W.C.; Clapp, J.D.; Fagerstrom, R.M.; Gareen, I.F.; Gatsonis, C.; Marcus, P.M.; et al. Reduced lung-cancer mortality with low-dose computed tomographic screening. N. Engl. J. Med. 2011, 365, 395–409.

9. Hesamian, M.H.; Jia, W.; He, X.; Kennedy, P. Deep Learning Techniques for Medical Image Segmentation: Achievements and Challenges. J. Digit. Imaging 2019, 32, 582–596.

10. Kumar, H.; DeSouza, S.V.; Petrov, M.S. Automated pancreas segmentation from computed tomography and magnetic resonance images: A systematic review. Comput. Methods Programs Biomed. 2019, 178, 319–328.

11. Roth, H.R.; Lu, L.; Lay, N.; Harrison, A.P.; Farag, A.; Sohn, A.; Summers, R.M. Spatial aggregation of holistically-nested convolutional neural networks for automated pancreas localization and segmentation. Med. Image Anal. 2018, 45, 94–107.

12. Oktay, O.; Schlemper, J.; Folgoc, L.L.; Lee, M.; Heinrich, M.; Misawa, K.; Mori, K.; McDonagh, S.; Hammerla, N.Y.; Kainz, B.; et al. Attention U-Net: Learning Where to Look for the Pancreas. In Proceedings of the 1st Conference on Medical Imaging with Deep Learning (MIDL 2018), Amsterdam, The Netherlands, 4–6 July 2018.

13. Cai, J.; Lu, L.; Xie, Y.; Xing, F.; Yang, L. Improving deep pancreas segmentation in CT and MRI images via recurrent neural contextual learning and direct loss function. In Proceedings of the MICCAI 2017, Quebec City, QC, Canada, 11–13 September 2017.

14. Zhou, Y.; Xie, L.; Shen, W.; Wang, Y.; Fishman, E.K.; Yuille, A.L. A fixed-point model for pancreas segmentation in abdominal CT scans. In Proceedings of the MICCAI 2017, Quebec City, QC, Canada, 11–13 September 2017.

15. Zhao, N.; Tong, N.; Ruan, D.; Sheng, K. Fully Automated Pancreas Segmentation with Two-stage 3D Convolutional Neural Networks. arXiv 2019, arXiv:1906.01795.

16. Zhang, H.; Cisse, M.; Dauphin, Y.N.; Lopez-Paz, D. mixup: Beyond Empirical Risk Minimization. arXiv 2017, arXiv:1710.09412.

17. Takahashi, R.; Matsubara, T.; Uehara, K. Data Augmentation using Random Image Cropping and Patching for Deep CNNs. arXiv 2018, arXiv:1811.09030.

18. Cubuk, E.D.; Zoph, B.; Mane, D.; Vasudevan, V.; Le, Q.V. AutoAugment: Learning Augmentation Policies from Data. In Proceedings of the Computer Vision and Pattern Recognition (CVPR 2019), Long Beach, CA, USA, 16–20 June 2019.

19. Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; Volume 9351, pp. 234–241.

20. Roth, H.R.; Farag, A.; Turkbey, E.B.; Lu, L.; Liu, J.; Summers, R.M. Data from Pancreas-CT. The Cancer Imaging Archive. 2016. Available online: http://doi.org/10.7937/K9/TCIA.2016.tNB1kqBU (accessed on 13 February 2020).

21. Roth, H.R.; Lu, L.; Farag, A.; Shin, H.-C.; Liu, J.; Turkbey, E.B.; Summers, R.M. DeepOrgan: Multi-level Deep Convolutional Networks for Automated Pancreas Segmentation. In Proceedings of the MICCAI 2015, Munich, Germany, 5–9 October 2015; Volume 9349, pp. 556–564.

22. Clark, K.; Vendt, B.; Smith, K.; Freymann, J.; Kirby, J.; Koppel, P.; Moore, S.; Phillips, S.; Maffitt, D.; Pringle, M.; et al. The Cancer Imaging Archive (TCIA): Maintaining and Operating a Public Information Repository. J. Digit. Imaging 2013, 26, 1045–1057.

23. Bergstra, J.; Bardenet, R.; Bengio, Y.; Kégl, B. Algorithms for Hyper-Parameter Optimization. In Proceedings of the 25th Annual Conference on Neural Information Processing Systems 2011, Granada, Spain, 12–15 December 2011; Available online: http://dl.acm.org/citation.cfm?id=2986743 (accessed on 5 May 2020).

24. Noguchi, S.; Nishio, M.; Yakami, M.; Nakagomi, L.; Togashi, K. Bone segmentation on whole-body CT using convolutional neural network with novel data augmentation techniques. Comput. Biol. Med. 2020, 121, 103767.

25. Eaton-Rosen, Z.; Bragman, F.; Ourselin, S.; Cardoso, M.J. Improving Data Augmentation for Medical Image Segmentation. In Proceedings of the 1st Conference on Medical Imaging with Deep Learning (MIDL 2018), Amsterdam, The Netherlands, 4–6 July 2018.

26. Nakai, H.; Nishio, M.; Yamashita, R.; Ono, A.; Nakao, K.K.; Fujimoto, K.; Togashi, K. Quantitative and Qualitative Evaluation of Convolutional Neural Networks with a Deeper U-Net for Sparse-View Computed Tomography Reconstruction. Acad. Radiol. 2020, 27, 563–574.

27. Sandfort, V.; Yan, K.; Pickhardt, P.J.; Summers, R.M. Data augmentation using generative adversarial networks (CycleGAN) to improve generalizability in CT segmentation tasks. Sci. Rep. 2019, 9, 16884.

28. Muramatsu, C.; Nishio, M.; Goto, T.; Oiwa, M.; Morita, T.; Yakami, M.; Kubo, T.; Togashi, K.; Fujita, H. Improving breast mass classification by shared data with domain transformation using a generative adversarial network. Comput. Biol. Med. 2020, 119, 103698.

| Radiologist | Target | Number of Score 1 | Number of Score 2 | Number of Score 3 | Number of Score 4 | Number of Score 5 |
|---|---|---|---|---|---|---|
| Radiologist 1 | manually annotated label | 0 | 0 | 0 | 4 | 78 |
| Radiologist 1 | automatic segmentation | 0 | 3 | 3 | 31 | 45 |
| Radiologist 2 | manually annotated label | 0 | 0 | 0 | 8 | 74 |
| Radiologist 2 | automatic segmentation | 0 | 2 | 6 | 42 | 32 |

| Type of Model and Data Augmentation | DSC | JI | SE | SP |
|---|---|---|---|---|
| Baseline U-net + no data augmentation | 0.686 ± 0.186 | 0.548 ± 0.186 | 0.618 ± 0.221 | 1.000 ± 0.000 |
| Baseline U-net + conventional method | 0.694 ± 0.182 | 0.556 ± 0.183 | 0.631 ± 0.220 | 1.000 ± 0.000 |
| Baseline U-net + mixup | 0.733 ± 0.106 | 0.588 ± 0.122 | 0.698 ± 0.155 | 1.000 ± 0.000 |
| Baseline U-net + RICAP | 0.699 ± 0.155 | 0.557 ± 0.169 | 0.624 ± 0.200 | 1.000 ± 0.000 |
| Baseline U-net + RICAP + mixup | 0.748 ± 0.127 | 0.611 ± 0.141 | 0.700 ± 0.176 | 1.000 ± 0.000 |
| Deep U-net + no data augmentation | 0.703 ± 0.166 | 0.563 ± 0.169 | 0.645 ± 0.201 | 1.000 ± 0.000 |
| Deep U-net + conventional method | 0.720 ± 0.171 | 0.586 ± 0.176 | 0.685 ± 0.210 | 1.000 ± 0.000 |
| Deep U-net + mixup | 0.725 ± 0.125 | 0.582 ± 0.137 | 0.694 ± 0.158 | 1.000 ± 0.000 |
| Deep U-net + RICAP | 0.740 ± 0.160 | 0.609 ± 0.169 | 0.691 ± 0.200 | 1.000 ± 0.000 |
| Deep U-net + RICAP + mixup | 0.789 ± 0.083 | 0.658 ± 0.103 | 0.762 ± 0.120 | 1.000 ± 0.000 |

| Name of Model | 2D/3D | Coarse/Fine | Mean DSC | Data Splitting |
|---|---|---|---|---|
| Holistically Nested 2D FCN Stage-1 [11] | 2D | coarse | 0.768 ± 0.111 | 4-fold CV |
| 2D FCN [13] | 2D | coarse | 0.803 ± 0.09 | 4-fold CV |
| Coarse-scaled Model 2D FCN [14] | 2D | coarse | 0.757 ± 0.105 | 4-fold CV |
| Single Model 3D U-net [12] (trained from scratch) | 3D | coarse | 0.815 ± 0.057 | 61 training and 21 test sets randomly selected |
| Single Model 3D Attention U-net [12] (trained from scratch) | 3D | coarse | 0.821 ± 0.068 | 61 training and 21 test sets randomly selected |
| Coarse-scaled Model 3D U-net [15] | 3D | coarse | 0.819 ± 0.068 | 4-fold CV |
| Proposed model | 2D | coarse | 0.789 ± 0.083 | 4-fold CV |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Nishio, M.; Noguchi, S.; Fujimoto, K. Automatic Pancreas Segmentation Using Coarse-Scaled 2D Model of Deep Learning: Usefulness of Data Augmentation and Deep U-Net. Appl. Sci. 2020, 10, 3360. https://doi.org/10.3390/app10103360
