Recommending Learning Objects with Arguments and Explanations

Abstract

1. Introduction

2. Related Work

3. Conversational Educational Recommender System (C-ERS)

3.1. Recommendation Process

- if they are facts.

- if it is not possible to be solved.

- if they are derived from other defeasible rules.

3.2. Conversational Process

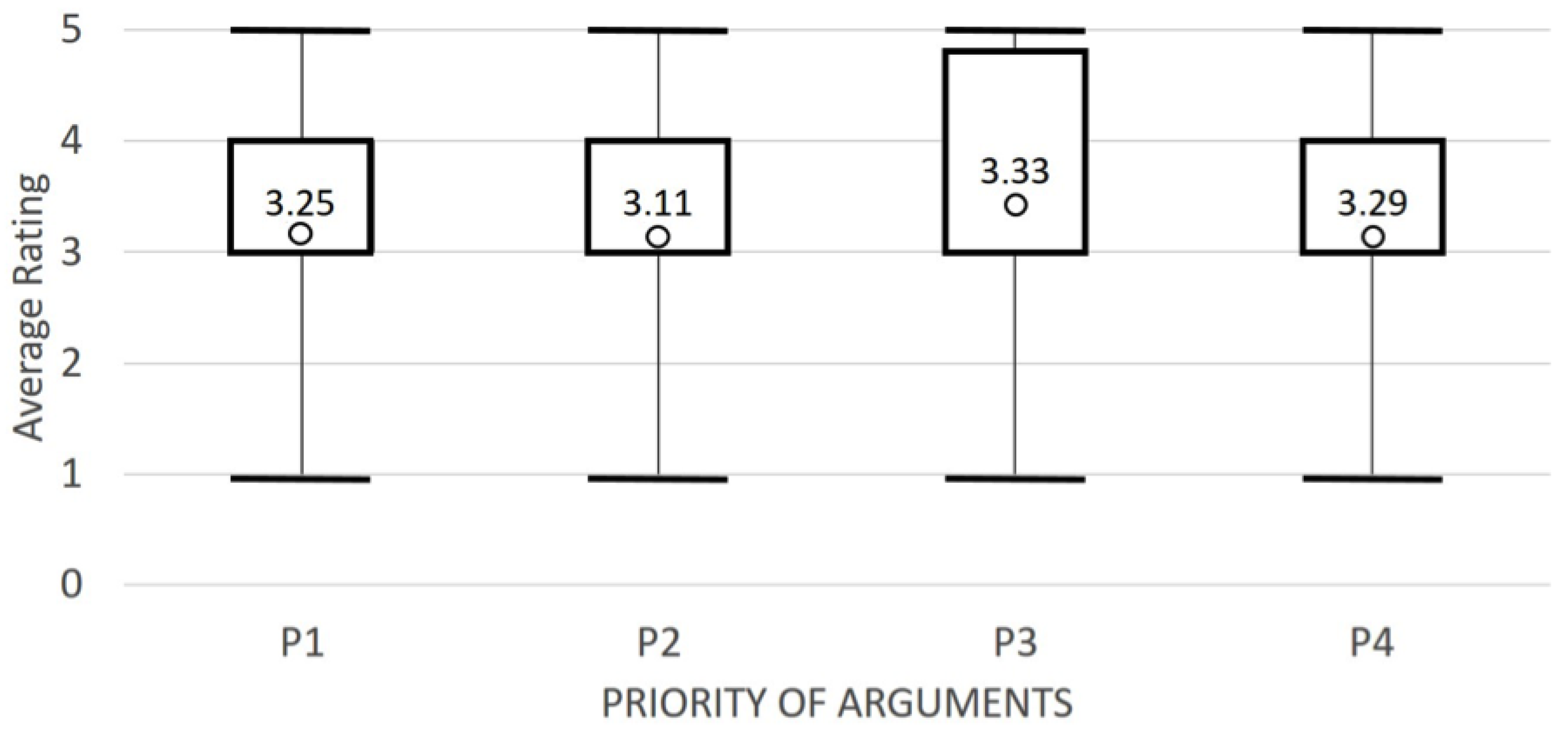

- P1: First, show arguments that suit the student profile and preferences (CONTENT-BASED ARGUMENTS): C1.1.1 > C1.1.2 > C1.2 > C2.1.1 > C2.1.2 > C3.1 > C4.1 > K1 > O1 > G1 > G2

- P2: First, show arguments that suit the profile and preferences of similar users (COLLABORATIVE ARGUMENTS): O1 > C1.1.1 > C1.1.2 > C1.2 > C2.1.1 > C2.1.2 > C3.1 > C4.1 > K1 > G1 > G2

- P3: First, show arguments that suit the usage history of the users (KNOWLEDGE-BASED ARGUMENTS): K1 > C1.1.1 > C1.1.2 > C1.2 > C2.1.1 > C2.1.2 > C3.1 > C4.1 > O1 > G1 > G2

- P4: First, show arguments that justify the format of the object (GENERAL ARGUMENTS): G1 > G2 > C1.1.1 > C1.1.2 > C1.2 > C2.1.1 > C2.1.2 > C3.1 > C4.1 > K1 > O1
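The four policies above are preference orders over the same eleven argument identifiers. As a minimal sketch (the names `POLICIES` and `order_arguments` are illustrative and not part of C-ERS), the arguments available for a given LO could be sorted by the chosen policy as follows:

```python
# Preference orders copied from policies P1-P4 above.
# CONTENT groups the content-based argument IDs, which keep the same
# relative order in every policy.
CONTENT = ["C1.1.1", "C1.1.2", "C1.2", "C2.1.1", "C2.1.2", "C3.1", "C4.1"]

POLICIES = {
    "P1": CONTENT + ["K1", "O1", "G1", "G2"],          # content-based first
    "P2": ["O1"] + CONTENT + ["K1", "G1", "G2"],       # collaborative first
    "P3": ["K1"] + CONTENT + ["O1", "G1", "G2"],       # knowledge-based first
    "P4": ["G1", "G2"] + CONTENT + ["K1", "O1"],       # general first
}


def order_arguments(policy, available):
    """Sort the available argument IDs by the policy's preference order."""
    rank = {arg: position for position, arg in enumerate(POLICIES[policy])}
    return sorted(available, key=rank.__getitem__)


# Hypothetical set of arguments that support one LO.
available = {"G1", "O1", "C3.1", "K1"}
for name in ("P1", "P2", "P3", "P4"):
    print(name, order_arguments(name, available))
```

Under this reading, the first element of the sorted list is the argument shown first, and the remaining ones are candidates if the student asks for more reasons.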

4. Evaluation

- To provide effective recommendations that suit the students’ profile and learning objectives (effectiveness).

- To persuade students to try specific LOs (persuasiveness).

- To elicit the actual preferences of the user by allowing him/her to correct the system’s assumptions (scrutability).

4.1. Methodology

- Personal data: ID, name, surname, sex, date of birth, nationality, city of residence, address, language, phone, and mail.

- Student’s educational preferences:

  - Interactivity level: high human–computer interaction LOs (preferred by nine students), medium human–computer interaction LOs (preferred by 38 students), or LOs that focus on presentation of content (preferred by three students).

  - Preferred language: all students’ mother tongue was Spanish, and thus all preferred LOs in Spanish.

  - Preferred format: was selected by nine students, by eight students, by 31 students, and ’other formats’ by two students.

- Learning style: the VARK (http://vark-learn.com/) model was followed to model the learning style of each student. The model classified 25 students as visual, 6 as auditory, 12 as readers, and 7 as kinesthetic.

- History of uses: for each LO rated by the student, the system stores its ID, the rating assigned, and the date of use.
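The profile fields listed above can be pictured as a small record type. The following is only a sketch: the class and field names are assumptions made for illustration, personal data is reduced to an ID, and the rating scale is not stated here, so the `min_rating=4` default simply mirrors the `vote ≥ 4` condition used in the recommendation rules. The tallies are the reported counts, and the check confirms that each set adds up to the same 50 students.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List


@dataclass
class UsageRecord:
    """One entry of the history of uses: LO ID, rating, and date of use."""
    lo_id: str
    rating: int
    used_on: date


@dataclass
class StudentProfile:
    """Hypothetical container for the profile data described above."""
    student_id: str
    interactivity_level: str      # "high", "medium", or "presentation"
    preferred_language: str
    preferred_format: str
    learning_style: str           # VARK: visual, auditory, reader, kinesthetic
    history: List[UsageRecord] = field(default_factory=list)

    def liked_los(self, min_rating=4):
        """IDs of LOs rated at least `min_rating` (assumed threshold)."""
        return [r.lo_id for r in self.history if r.rating >= min_rating]


# Counts reported for the participating students.
INTERACTIVITY_COUNTS = {"high": 9, "medium": 38, "presentation": 3}
VARK_COUNTS = {"visual": 25, "auditory": 6, "reader": 12, "kinesthetic": 7}

print(sum(INTERACTIVITY_COUNTS.values()), sum(VARK_COUNTS.values()))
```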

- Recommendation processes 1–2: P1 (show content-based arguments first)

- Recommendation processes 3–4: P2 (show collaborative arguments first)

- Recommendation processes 5–6: P3 (show knowledge-based arguments first)

- Recommendation processes 7–8: P4 (show general arguments first)

- Recommendation processes 9–10: random
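The schedule above amounts to a lookup from the recommendation process number to a policy. A minimal sketch, assuming processes are numbered 1 to 10 and using P4 for the general-arguments-first policy as defined in Section 3.2 (the function name is illustrative, and the sketch does not interpret what "random" means beyond returning that label):

```python
# Policy used in each of the ten recommendation processes of the evaluation.
POLICY_BY_PROCESS = {
    1: "P1", 2: "P1",   # content-based arguments first
    3: "P2", 4: "P2",   # collaborative arguments first
    5: "P3", 6: "P3",   # knowledge-based arguments first
    7: "P4", 8: "P4",   # general arguments first
}


def policy_for_process(number):
    """Return the policy label for a recommendation process (1-10)."""
    if not 1 <= number <= 10:
        raise ValueError("recommendation processes are numbered 1 to 10")
    # Processes 9 and 10 are the ones labeled 'random' in the schedule.
    return POLICY_BY_PROCESS.get(number, "random")


print([policy_for_process(n) for n in range(1, 11)])
```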

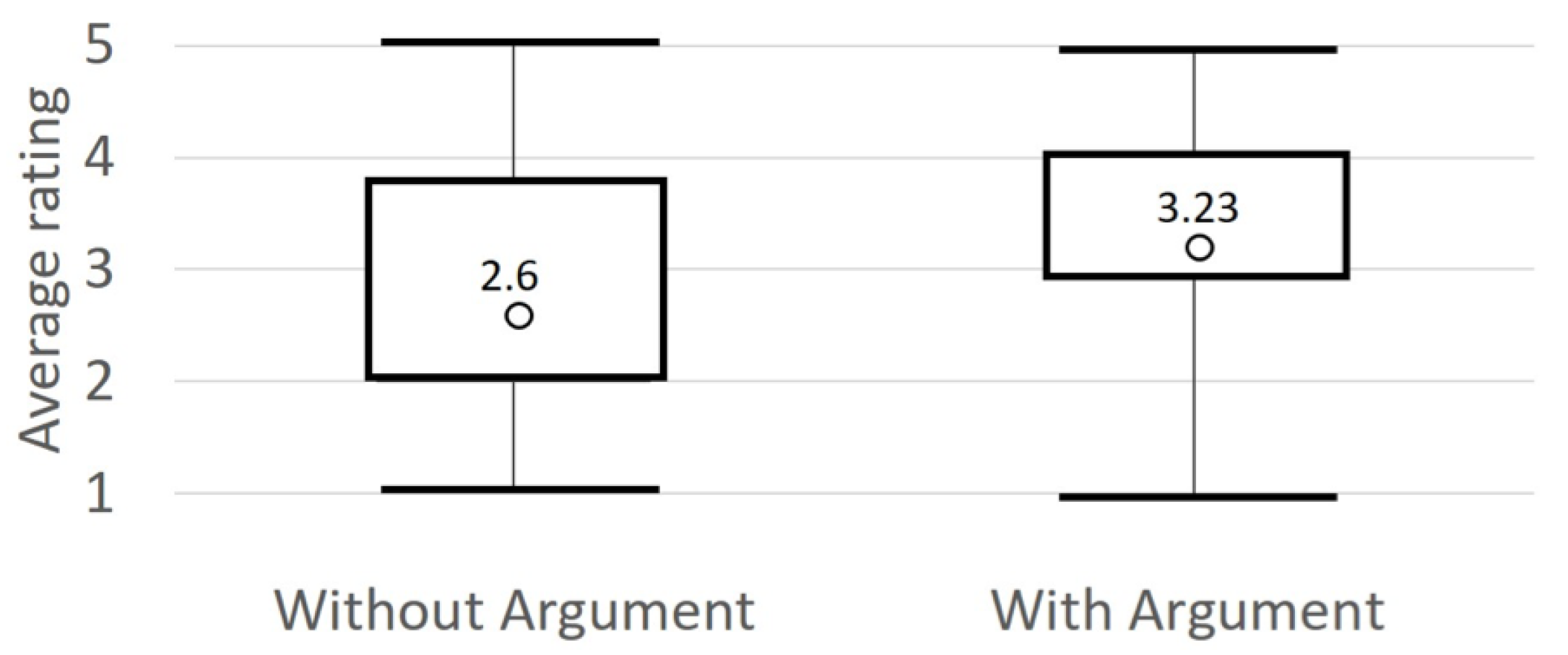

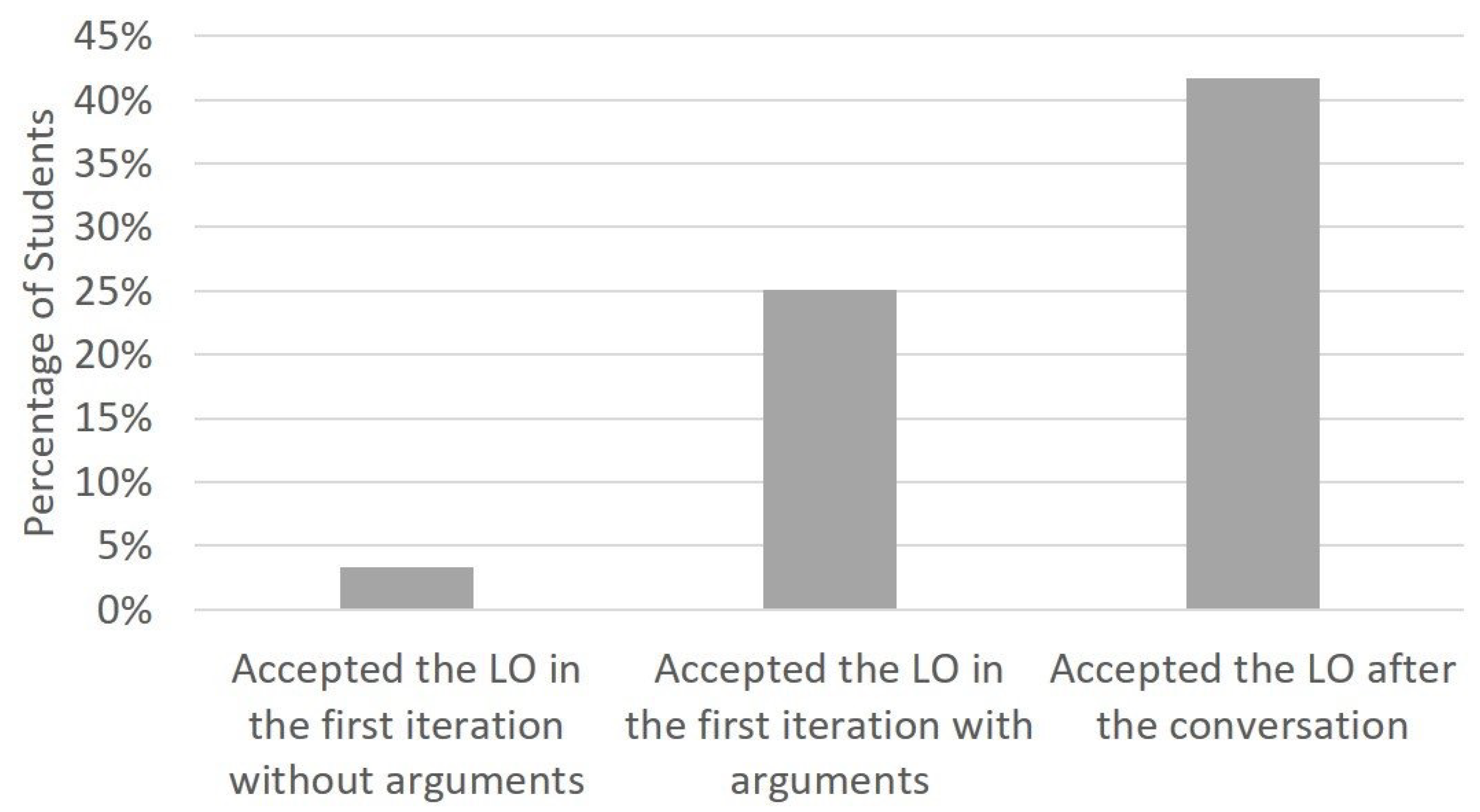

4.2. Results

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| No. | Rule |
|---|---|
| 1: | user_type(, ) |
| 2: | resource_type(, ) |
| 3: | structure(, ) |
| 4: | state(, ) |
| 5: | similarity(, ) > |
| 6: | similarity(, ) > |
| 7: | vote(, ) ≥ 4 |
| 8: | interactivity_type(, ) ← resource_type(, ) |
| 9: | appropriate_resource(, ) ← user_type(, ) ∧ resource_type(, ) |
| 10: | appropriate_interactivity(, ) ← user_type(, ) ∧ interactivity_type(, ) |
| 11: | educationally_appropriate(, ) ← appropriate_resource(, ) ∧ appropriate_interactivity(, ) |
| 12: | generally_appropriate() ← structure(, ) ∧ state(, ) |
| 13: | recommend(, ) ← educationally_appropriate(, ) ∧ generally_appropriate() |
| 14: | recommend(, ) ← similarity(, ) > ∧ vote(, ) ≥ 4 |
| 15: | recommend(, ) ← similarity(, ) > ∧ vote(, ) ≥ 4 |
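Rows 14 and 15 share one shape: a recommendation is supported when a similarity value exceeds a threshold and a related vote is at least 4. The sketch below illustrates that shape for the user-to-user case only. The threshold symbol did not survive in the table, so `threshold=0.5` is a purely hypothetical value, and the dictionary layout and function name are likewise assumptions rather than the system's actual data model.

```python
def collaborative_support(target, lo, similarity, votes, threshold=0.5, min_vote=4):
    """Users that support recommending `lo` to `target`.

    A user supports the recommendation when their similarity to `target`
    is strictly above `threshold` (hypothetical value) and they voted
    `lo` with at least `min_vote`.
    """
    return sorted(
        other
        for (user, other), score in similarity.items()
        if user == target
        and score > threshold
        and votes.get((other, lo), 0) >= min_vote
    )


# Hypothetical data: pairwise similarities and votes on one LO.
similarity = {("ana", "luis"): 0.8, ("ana", "eva"): 0.3, ("ana", "juan"): 0.9}
votes = {("luis", "lo1"): 5, ("eva", "lo1"): 5, ("juan", "lo1"): 2}

print(collaborative_support("ana", "lo1", similarity, votes))
```

In this example only one user passes both conditions: one candidate is not similar enough and another voted below 4.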

| General Rules |

| G1: recommend(user, LO) ← cost(LO) = 0 |

| Explanation: ‘This LO may interest you, since it is for free’ |

| Responses: |

| +RG1: ’Accept’ |

| ∼RG1: ’Not sure. I don’t care about the cost’ |

| −RG1: ’Reject. I don’t like it’ |

| G2: recommend(user, LO) ← quality_metric(LO) ≥ 0.7 |

| Explanation: ‘This LO may interest you, since its quality is high’ |

| Responses: |

| +RG2: ’Accept’ |

| ∼RG2: ’Not sure. I don’t care about the quality’ |

| −RG2: ’Reject. I don’t like it’ |

| Content-based Rules |

| C1: recommend(user, LO) ← educationally_appropriate(user, LO) ∧ generally_appropriate(LO) |

| C1.1: educationally_appropriate(user, LO) ← appropriate_resource(user, LO) ∧ appropriate_interactivity(user, LO) |

| C1.1.1: appropriate_resource(user, LO) ← user_type(user, type) ∧ resource_type(LO, type) |

| Explanation: ‘This LO may interest you, since it is a [RESOURCE_TYPE], which is suitable for your [LEARNING_STYLE] learning style’ |

| Responses: |

| +RC1.1.1: ’Accept’ |

| ∼RC1.1.1: ’Not sure. Show me more reasons’ |

| −RC1.1.1: ’Reject. I prefer LOs of the type [TYPE]’ |

| C1.1.2: appropriate_interactivity(user, LO) ← user_type(user, type) ∧ interactivity_type(LO, type) |

| Explanation: ‘This LO may interest you, since it requires [INTERACTIVITY_TYPE] interaction, which is suitable for your [LEARNING_STYLE] learning style’ |

| Responses: |

| +RC1.1.2: ’Accept’ |

| ∼RC1.1.2: ’Not sure. Show me more reasons’ |

| −RC1.1.2: ’Reject. I prefer LOs that require [INTERACTIVITY_TYPE] interaction’ |

| C1.2: generally_appropriate(LO) ← structure(LO, atomic) ∧ state(LO, final) |

| Explanation: ‘This LO may interest you, since it is self-contained’ |

| Responses: |

| +RC1.2: ’Accept’ |

| ∼RC1.2: ’Not sure. I do not care that the LO is not self-contained’ |

| −RC1.2: ’Reject. I don’t like it’ |

| C2: recommend(user, LO) ← educationally_appropriate(user, LO) ∧ generally_appropriate(LO) ∧ technically_appropriate(user, LO) |

| C2.1: technically_appropriate(user, LO) ← appropriate_language(user, LO) ∧ appropriate_format(LO) |

| C2.1.1: appropriate_language(user, LO) ← language_preference(user, language) ∧ object_language(LO, language) |

| Explanation: ‘This LO may interest you, since it suits your language preferences: [OBJECT_LANGUAGE]’ |

| Responses: |

| +RC2.1.1: ’Accept’ |

| ∼RC2.1.1: ’Not sure. Show me more reasons’ |

| −RC2.1.1: ’Reject. I prefer LOs in [LANGUAGE]’ |

| C2.1.2: appropriate_format(LO) ← format_preference(user, format) ∧ object_format(LO, format) |

| Explanation: ‘This LO may interest you, since it suits your format preferences: [OBJECT_FORMAT]’ |

| Responses: |

| +RC2.1.2: ’Accept’ |

| ∼RC2.1.2: ’Not sure. Show me more reasons’ |

| −RC2.1.2: ’Reject. I prefer LOs with format [OBJECT_FORMAT]’ |

| C3: recommend(user, LO) ← educationally_appropriate(user, LO) ∧ generally_appropriate(LO) ∧ updated(LO) |

| C3.1: updated(LO) ← date(LO, date) < 5 years |

| Explanation: ‘This LO may interest you, since it is updated’ |

| Responses: |

| +RC3.1: ’Accept’ |

| ∼RC3.1: ’Not sure. I do not care that the LO is not updated’ |

| −RC3.1: ’Reject. I don’t like it’ |

| C4: recommend(user, LO) ← educationally_appropriate(user, LO) ∧ generally_appropriate(LO) ∧ learning_time_appropriate(LO) |

| C4.1: learning_time_appropriate(LO) ← hours(LO) < γ |

| Explanation: ‘This LO may interest you, since it suits your learning time preferences (less than [γ] hours to use it)’ |

| Responses: |

| +RC4.1: ’Accept’ |

| ∼RC4.1: ’Not sure. I do not care about the learning time required to use it’ |

| −RC4.1: ’Reject. I don’t like it’ |

| Collaborative Rules |

| O1: recommend(user, LO) ← similarity(user, user) ∧ vote(user, LO) ≥ 4 |

| Explanation: ‘This LO may interest you, since users like you liked it’ |

| Responses: |

| +RO1: ’Accept’ |

| ∼RO1: ’Not sure. Show me more reasons’ |

| −RO1: ’Reject. I don’t like it’ |

| Knowledge-based Rules |

| K1: recommend(user, LO) ← similarity(LO, LO) ∧ vote(user, LO) ≥ 4 |

| Explanation: ‘This LO may interest you, since it is similar to another LO that you liked ([LO])’ |

| Responses: |

| +RK1: ’Accept’ |

| ∼RK1: ’Not sure. Show me more reasons’ |

| −RK1: ’Reject. I don’t like it’ |
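To make the link between a rule body and its explanation concrete, the sketch below checks four of the rules whose conditions are fully stated in the table (G1, G2, C1.2, and C3.1) against one LO and returns the matching explanations. It is plain Python rather than a defeasible logic program, the dictionary keys and function name are assumptions, and "5 years" is approximated as 5 × 365 days.

```python
from datetime import date


def applicable_arguments(lo, today):
    """Return (rule_id, explanation) pairs for rules whose body holds for `lo`."""
    arguments = []
    if lo["cost"] == 0:  # G1: cost(LO) = 0
        arguments.append(("G1", "This LO may interest you, since it is for free"))
    if lo["quality_metric"] >= 0.7:  # G2: quality_metric(LO) >= 0.7
        arguments.append(("G2", "This LO may interest you, since its quality is high"))
    if lo["structure"] == "atomic" and lo["state"] == "final":  # C1.2
        arguments.append(("C1.2", "This LO may interest you, since it is self-contained"))
    if (today - lo["date"]).days < 5 * 365:  # C3.1: less than 5 years old (approx.)
        arguments.append(("C3.1", "This LO may interest you, since it is updated"))
    return arguments


# Hypothetical learning object.
lo = {
    "cost": 0,
    "quality_metric": 0.65,
    "structure": "atomic",
    "state": "final",
    "date": date(2018, 3, 1),
}

for rule_id, explanation in applicable_arguments(lo, today=date(2020, 5, 1)):
    print(rule_id, "-", explanation)
```

Here G2 does not fire because the quality metric is below 0.7, so only three arguments are available to be ordered and shown.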

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Heras, S.; Palanca, J.; Rodriguez, P.; Duque-Méndez, N.; Julian, V. Recommending Learning Objects with Arguments and Explanations. Appl. Sci. 2020, 10, 3341. https://doi.org/10.3390/app10103341