The main task of remote sensing is to obtain a large number of measurement points that give a detailed and precise description of the surrounding area and of the objects in the scanned space. An inseparable part of this task is processing those points to obtain the input data required by the application for which the scanning was performed. The processing of remote sensing data is important in many different applications; the following subsections review how point clouds are handled and processed.

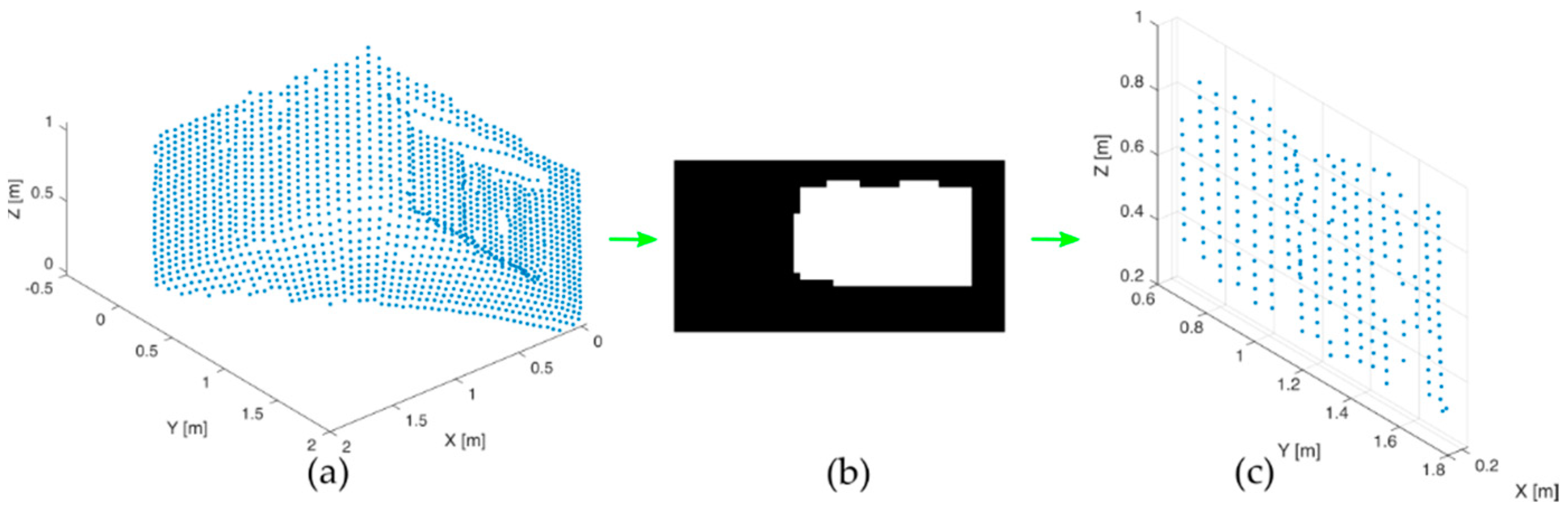

The focus of our research is point cloud processing and simplification, in particular the detection, statistical description, vectorization, and visualization of basic spatial features. This reduces the memory needed to store the physical data. For example, the individual points of a planar surface composed of a thousand points matter little for subsequent processing; what we look for instead are the vertices of the analyzed planar surface. Our contribution is an alternative way of processing point clouds. Its main advantage lies in combining the quantization of physical space points with image processing methods. This combination allows: obtaining important spatial features such as area, perimeter, and volume; the spatial and statistical description of planar surfaces, including a reduction in the number of descriptive points through vectorization of the vertices; the correction and recovery of missing spatial data; and, because a planar surface is represented as an image, an alternative way of storing and visualizing the physical points. It can also serve other researchers as an extension of the methods reviewed in the following subsection. Moreover, the presented approach is usable not only for point clouds but also for the general processing and analysis of different levels in any 3D data.
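The idea of presenting a planar surface as an image can be illustrated with a minimal sketch. It assumes that the points of one horizontal planar surface are already known, drops their (constant) z coordinate, and marks every grid cell of size `cell_size` that contains at least one point. The function names `quantize_plane` and `occupied_area` are hypothetical, and this is not the authors' implementation.

```python
def quantize_plane(points, cell_size):
    """Quantize the (x, y) coordinates of points lying on one horizontal
    planar surface into a binary occupancy image (list of rows of 0/1).

    Returns the image and the (x, y) origin of pixel (0, 0), which is
    needed to map pixels back to approximate physical coordinates.
    """
    x0 = min(p[0] for p in points)
    y0 = min(p[1] for p in points)

    def cell(value, origin):
        # index of the grid cell that contains a coordinate value
        return int((value - origin) // cell_size)

    cols = cell(max(p[0] for p in points), x0) + 1
    rows = cell(max(p[1] for p in points), y0) + 1
    image = [[0] * cols for _ in range(rows)]
    for x, y, _z in points:
        image[cell(y, y0)][cell(x, x0)] = 1
    return image, (x0, y0)


def occupied_area(image, cell_size):
    """Each occupied pixel stands for cell_size x cell_size of surface."""
    return sum(map(sum, image)) * cell_size ** 2
```

For a 1 m x 2 m floor sampled every 0.05 m (800 points), a 0.1 m grid yields an image of 20 rows and 10 columns whose 200 occupied pixels give an area of about 2 m², so the surface is stored with a quarter of the original number of samples.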

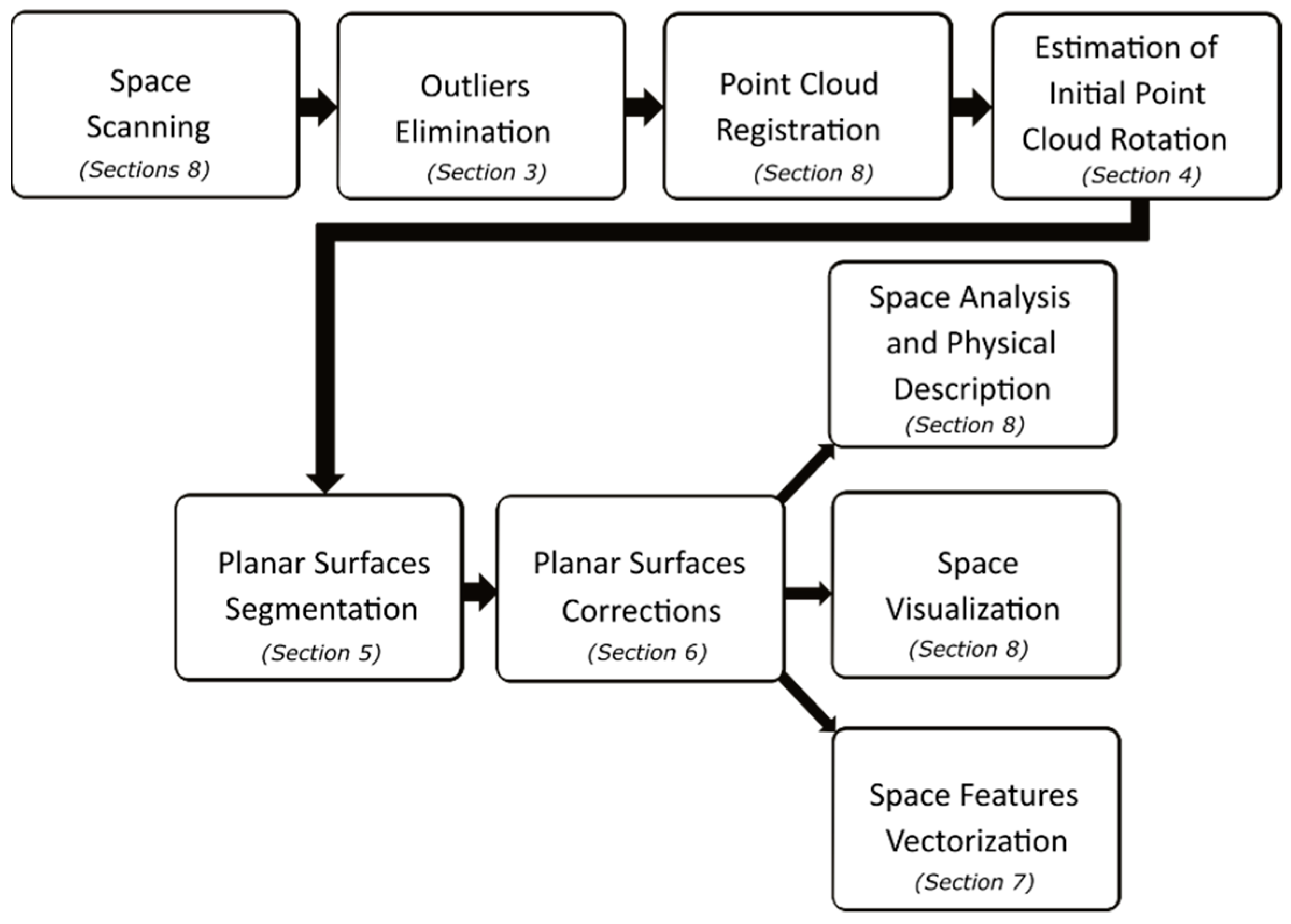

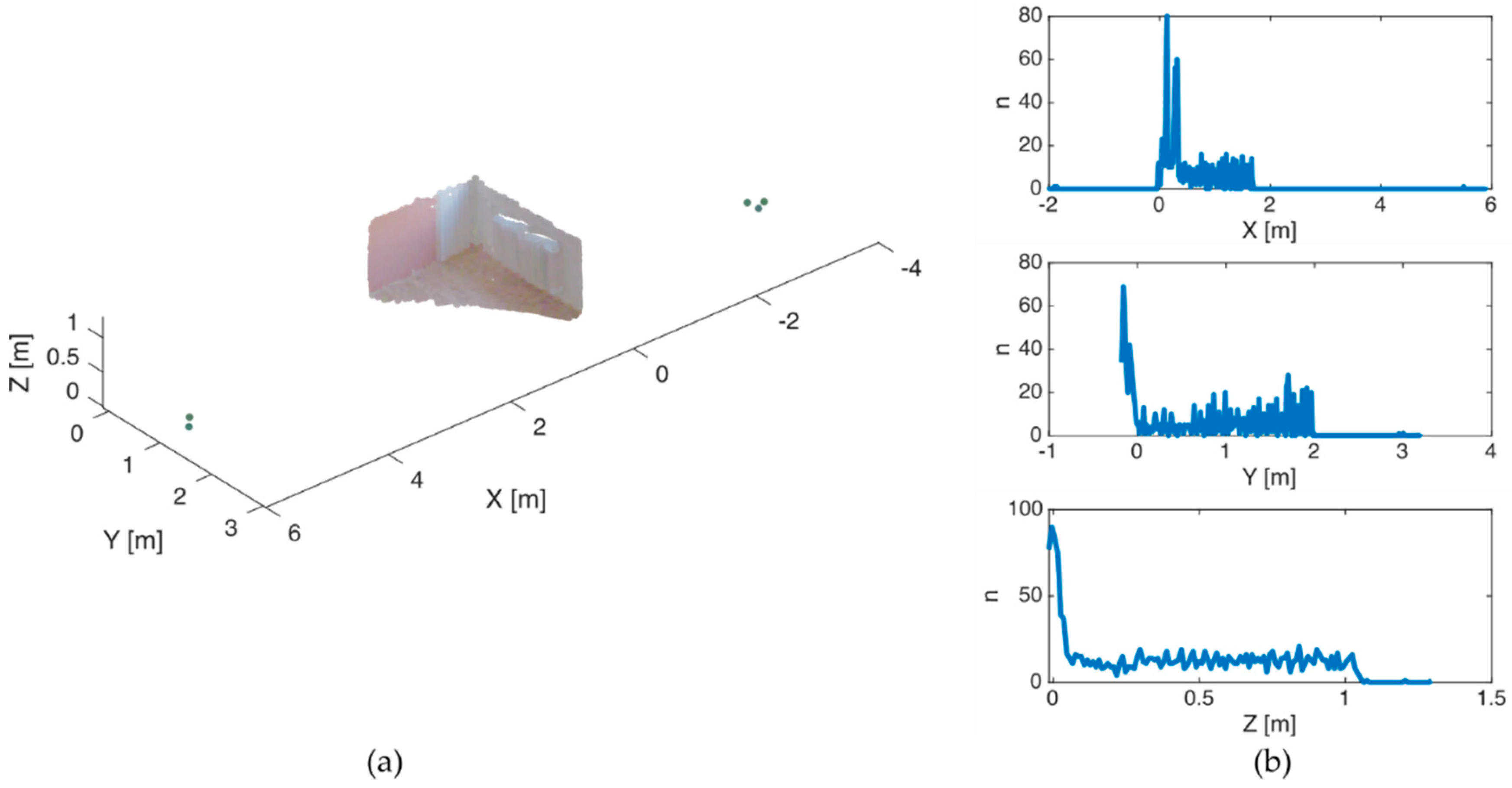

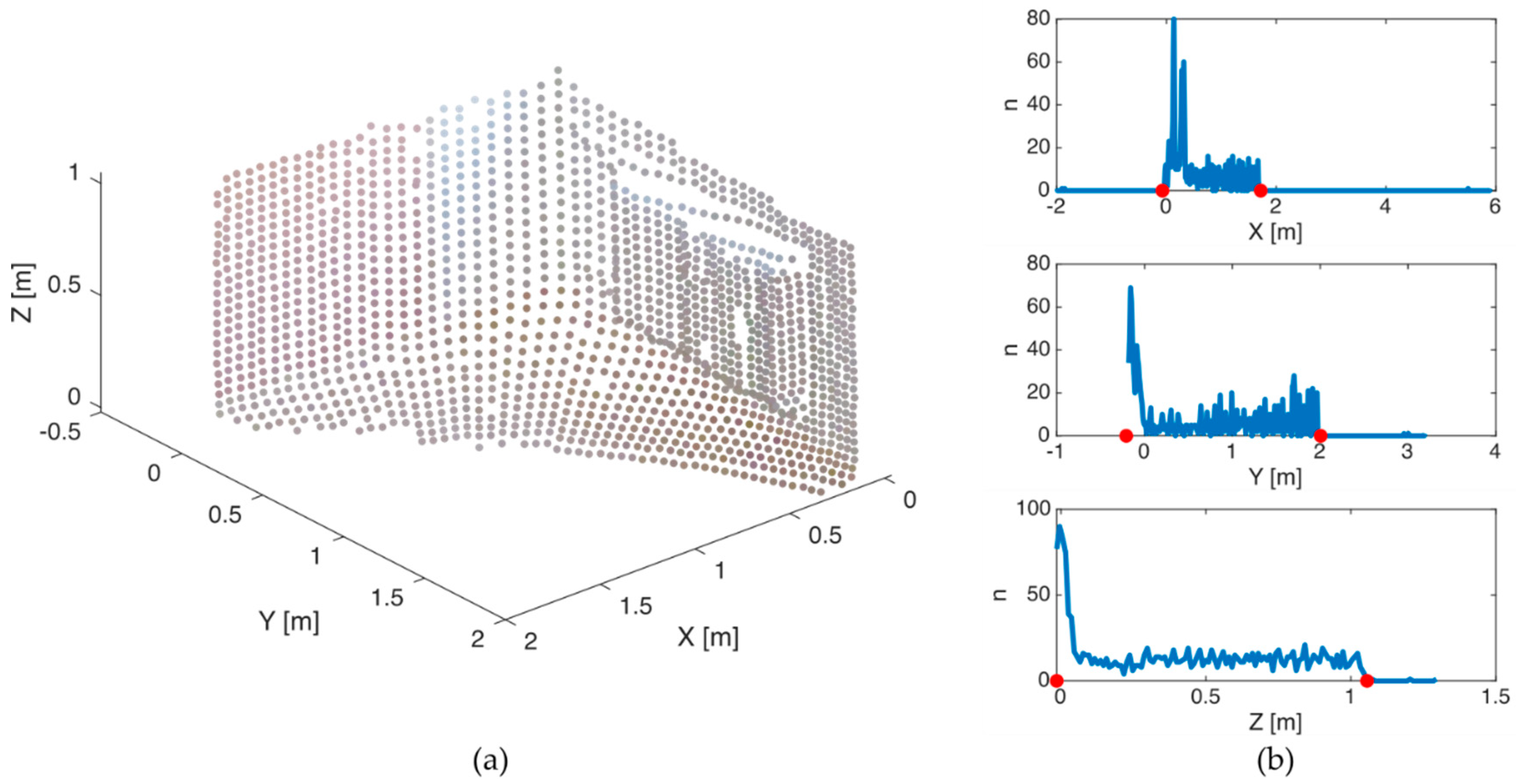

This paper presents several new methods for point cloud processing: outlier removal, estimation of the initial point cloud rotation, point cloud data correction and recovery, and vertex detection for planar surfaces. The Introduction surveys point cloud processing methods for basic object detection and segmentation, planar surface and spatial feature estimation, and their typical applications. The paper then describes our point cloud processing pipeline and presents the new methods in detail to give a comprehensive overview of the approach, including verification on real spatial data. The results section presents the processing of a complex point cloud with several rooms. The results and algorithms are also evaluated in terms of precision against the physical dimensions of the space and compared with convex hull results. Finally, recommendations for this processing approach are given.

1.1. Related Works

A wide survey of 3D surface reconstruction methods is provided in reference [1]. The authors evaluate the state-of-the-art methods, with their advantages and disadvantages, accurately and in a well-arranged way. The survey includes a table of all the methods that summarizes their main features and possible uses.

Valuable work is presented in reference [2]. The paper deals with the automatic reconstruction of fully volumetric 3D building models from oriented point clouds. The proposed method can process large, complex, real-world point clouds in unfiltered form into separate room models, including the suppression of undesired objects inside a room. Several methods for 3D modelling of an indoor environment that rely explicitly on prior knowledge of the scanner positions are discussed in reference [3]. In many software products provided by scanner manufacturers, the scanning positions are irretrievably lost. Therefore, the authors propose a method for reconstructing the original scanner positions, which works even under very unfavorable conditions. Other research focuses on estimating surface geometries directly from a point cloud, as in reference [4], which introduces a 3D surface estimation method for household objects in an area. The authors developed a Global Principal Curvature Shape Descriptor (GPCSD) for categorizing objects into groups. The main purpose is to improve manipulation planning for a robotic hand.

Researchers in reference [5] introduced a point cloud segmentation method for urban environment data. Their method is based on robust normal estimation using an octree-based hierarchical representation of the input data. The achieved results demonstrate the usability of the concept for urban point clouds, although there is room to improve the detection of some regular objects with curved surfaces. An octree-based approach combined with principal component analysis (PCA) is presented in reference [6]. Its results are compared with other detection algorithms such as the 3D Kernel-based Hough Transform (3D-KHT) and the classical Random Sample Consensus (RANSAC). This method provides accurate results, robustness to noise, and the ability to detect planes with small angle variations. Similar research in reference [7] focuses on plane segmentation of building point clouds. The proposed method, based on Density-Based Spatial Clustering of Applications with Noise (DBSCAN), also takes curvature into account to produce the final fine segmentation. DBSCAN clustering for surface segmentation is also used in reference [8]. Its results show the desired surface segmentation, but only for a simple point cloud that does not represent a real-world case. Surfaces can also be extracted from RGBD images (see reference [9]), where the proposed split-and-merge approach produces interesting visual results. Planes can be detected from depth images by the Depth-driven Plane Detection (DPD) algorithm based on region growing (see reference [10]). A Hough transform (HT) approach called D-KHT can be used for plane segmentation from these images, as shown in reference [11]. Owing to the properties of the HT, this method can detect planes with discontinuities. Ground plane detection using depth maps captured by a stereo camera is presented in reference [12]. The camera is moving, and the system compensates for its roll angle to segment the plane correctly.
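Several of the works above are compared with the classical RANSAC plane detector. A minimal sketch of that classical baseline (not of any of the cited methods) repeatedly fits a plane through three random points and keeps the plane with the most points closer than `threshold`. The function name `ransac_plane`, the fixed iteration count, and the seed are illustrative assumptions.

```python
import random


def _sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def ransac_plane(points, threshold, iterations=200, seed=0):
    """Classical RANSAC plane fit.

    Returns ((nx, ny, nz, d), inlier_indices) for the plane n.p + d = 0
    with unit normal n that has the largest number of inliers.
    """
    rng = random.Random(seed)
    best_plane, best_inliers = None, []
    for _ in range(iterations):
        p1, p2, p3 = rng.sample(points, 3)
        n = _cross(_sub(p2, p1), _sub(p3, p1))
        norm = _dot(n, n) ** 0.5
        if norm < 1e-12:  # collinear sample defines no unique plane
            continue
        n = (n[0] / norm, n[1] / norm, n[2] / norm)
        d = -_dot(n, p1)
        # inliers: points whose distance from the candidate plane is small
        inliers = [i for i, p in enumerate(points)
                   if abs(_dot(n, p) + d) <= threshold]
        if len(inliers) > len(best_inliers):
            best_plane, best_inliers = (n[0], n[1], n[2], d), inliers
    return best_plane, best_inliers
```

In practice, the detected inliers are removed and the search is repeated to find further planes, and the number of iterations is usually derived from the expected inlier ratio rather than fixed.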

In recent years, deep learning, a growing topical issue, has also been applied to point cloud segmentation. One use case is indoor boundary estimation (see reference [13]). The described method relies on depth estimation and wall segmentation to generate the exterior point cloud, and deep supervised clustering helps to fit the wall planes and obtain the resulting boundary map. In a different work, a deep learning method called PCPNet is used to estimate local 3D shape properties in point clouds (see reference [14]). The main purpose is to classify objects and shapes or to perform semantic labeling. The presented results show good estimation of normals and curvatures, even in noisy point clouds. Deep learning is likewise used for automatic building detection and segmentation from aerial images or point clouds (see reference [15]). The main focus lies in improving and preparing high-quality training data, which allows better segmentation of the detected objects. The presented results show high detection accuracy (above 90%) with RGB-lidar and fused RGB-lidar data of urban scenes. The research in reference [16] presents a new topology-based global 3D point cloud descriptor called the Signature of Topologically Persistent Points (STPP). Topological grouping improves detection robustness and increases resistance to noise, and the complementary information also improves deep learning performance. A different topological method, TopoLAB, described in reference [17], no longer uses neural networks; it focuses on a pipeline that recovers the broken topology of planar primitives during the reconstruction of complex building models. Because of scanning difficulties and variable point cloud density, some parts of a model can be missing. The proposed method allows these parts to be recovered and different levels of detail to be visualized.

With respect to personal safety and health, laser scanning plays an important role in exploring abandoned or dangerous places such as mines (see reference [18]). A similar use is the scanning of rock masses (see reference [19]), where the proposed method for planar surface extraction is able to deal with rough and complex surfaces.

For Mobile Laser Scanning (MLS), a new method for Planes detection and Segmentation based on the Planarity values of Scan profile groups (PSPS) is proposed in reference [20]. The presented results demonstrate the quality of this method in comparison with other state-of-the-art algorithms for this type of sparse point cloud; for example, the segmented planar surfaces stand out for their compactness.

3D point cloud processing is important not only for obtaining physical shapes; it can also be used to quantitatively characterize the height, width, and volume of shrubs. The authors of reference [21] analyze point clouds of different blueberry genotype groups to improve the mechanical harvesting process, using correlation to compare different shrubs. In the study described in reference [22], the researchers focus on a leaf filtering method based on manifold distance and normal estimation. The scanned leaves contain outliers and noise, and precise estimation of the shape and area of a leaf is important for obtaining the tree growth index. Ground plane segmentation also plays an important role in the analysis of asparagus harvesting (see reference [23]). The asparagus stems are scanned by a modern time-of-flight camera, which ranks as the fastest scanning device. The proposed Hyun method outperformed the classical RANSAC method in a scene with high clutter. The parametrization of a forest stand described in reference [24] is similar to the previous works. The researchers propose using, instead of the leaf area index, other spatial characteristics such as stand denseness, canopy density, crown porosity, and others. In the practical part, they found a high mutual correlation among these characteristics. The geometric characterization of vines from 3D point clouds is important in the agronomy sector (see reference [25]), where the convex hull method is used to calculate the volume of these plants. Recent research in reference [26] also deals with this method, with the aim of finding the shortest path in 2D complexes with non-positive curvature. The convex hull method is often used to determine the area and perimeter of 2D shapes.
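Because the convex hull is commonly used to obtain the area and perimeter of 2D shapes, a minimal sketch may help. It uses Andrew's monotone chain algorithm for the hull and the shoelace formula for the area; the function names are illustrative, and the input is assumed to be 2D points, for example a planar surface projected onto its own plane.

```python
import math


def convex_hull(points):
    """Andrew's monotone chain: hull vertices in counter-clockwise order,
    without repeating the first vertex. Collinear points are dropped."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts

    def cross(o, a, b):
        # z component of (a - o) x (b - o); > 0 means a left turn
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]


def polygon_area(poly):
    """Shoelace formula for a simple polygon given by its ordered vertices."""
    s = 0.0
    for (x1, y1), (x2, y2) in zip(poly, poly[1:] + poly[:1]):
        s += x1 * y2 - x2 * y1
    return abs(s) / 2.0


def polygon_perimeter(poly):
    return sum(math.dist(a, b) for a, b in zip(poly, poly[1:] + poly[:1]))
```

For an L-shaped room with corners (0, 0), (4, 0), (4, 2), (2, 2), (2, 4), and (0, 4), the hull drops the reflex corner (2, 2): its area is 14 instead of the true 12, and its perimeter is 12 + 2√2 ≈ 14.83 instead of 16. This illustrates why the convex hull over-estimates the area of non-convex surfaces.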

As shown above, point cloud processing, object detection, and segmentation are widely used in various research areas for different purposes. The right choice of processing method helps to extract the desired information, and transforming the input data into a different form can make an otherwise unknown feature easy to obtain.

1.2. Our Point Cloud Processing Contribution

Our first object detection work was an algorithm for unfolding a point cloud into a single plane while preserving height, described in reference [27]. The method works correctly for rectangular rooms with four walls, a known scanner position, and walls not occupied by many objects. Its aim was to unfold all walls, together with their objects, into a single plane. The single-plane representation allows the walls (values close to zero) to be removed and the remaining objects to be analyzed. Ultimately, this method proved to be a dead end for follow-up use: unfolding objects placed on a corner splitting line divided them into two parts, and the global position of the objects was also lost.
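The unfolding idea, and the reason why objects on a corner splitting line were divided, can be illustrated with a simplified sketch. It is not the algorithm of reference [27]: it assumes a hypothetical axis-aligned rectangular room spanning [0, width] x [0, depth] instead of a known scanner position, assigns each point to its nearest wall, and maps it to an unrolled coordinate u along the walls while keeping the height z and the distance from that wall. The function name `unfold_room` is illustrative.

```python
def unfold_room(points, width, depth):
    """Unfold points of a hypothetical axis-aligned rectangular room
    [0, width] x [0, depth] onto a single plane.

    Returns (u, z, dist) triples: u runs along the walls counter-clockwise
    starting at corner (0, 0), z keeps the height, and dist is the distance
    from the nearest wall (close to zero for points on the wall itself).
    """
    unfolded = []
    for x, y, z in points:
        dists = {"south": y, "east": width - x, "north": depth - y, "west": x}
        wall = min(dists, key=dists.get)  # nearest wall decides the mapping
        if wall == "south":
            u = x
        elif wall == "east":
            u = width + y
        elif wall == "north":
            u = width + depth + (width - x)
        else:  # west wall
            u = 2 * width + depth + (depth - y)
        unfolded.append((u, z, dists[wall]))
    return unfolded
```

Two points of one object only about 0.14 m apart near the corner (0, 0), such as (0.5, 0.4, 1) and (0.4, 0.5, 1), are assigned to the south and west walls and land at u = 0.5 and u = 13.5 in a 4 m x 3 m room, which reproduces the splitting effect described above.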

Many researchers use depth images in their work (see references [9,10,11,12,13]). Depth images contain real physical sizes and distances that can be utilized further. Our point cloud had no depth information, so we developed an algorithm for depth map construction, which we described in reference [28]. The main advantage of this algorithm lies in the ability to create a depth map from any point cloud with an arbitrary camera position and orientation. The concept of space quantization has proven useful for further spatial data processing. Moreover, these images can be processed by image processing methods and, for example, depth pixels can be transformed back into a point cloud by the reverse process. The depth image contains spatial information about the structure and quality of the analyzed surface and makes it possible to detect surfaces or other objects (see references [9,10,11,12,13]). However, a depth camera covers only the area that the camera itself sees, which is the main disadvantage for global object detection and segmentation: some objects occlude other objects and areas in the scene. For this reason, a depth image is not suitable for global space segmentation and description, but space quantization into an image combined with image processing methods offers wide possibilities for basic object detection and detailed space description.
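The depth map construction of reference [28] is not reproduced here, but the general principle of projecting a point cloud from an arbitrary camera pose, and of the reverse process, can be sketched with a standard pinhole model and a z-buffer. The camera parameters (`cam_pos`, world-to-camera rotation `R`, focal length `f` in pixels, principal point `cx`, `cy`) and the function names are assumptions made for illustration.

```python
import math


def depth_map(points, cam_pos, R, f, cx, cy, width, height):
    """Z-buffer projection of a point cloud through a pinhole camera.

    R is a 3x3 world-to-camera rotation (rows are the camera axes) and the
    camera looks along its +z axis. Returns a height x width grid of depths,
    math.inf where no point projects.
    """
    depth = [[math.inf] * width for _ in range(height)]
    for p in points:
        d = [p[i] - cam_pos[i] for i in range(3)]
        xc, yc, zc = (sum(R[r][i] * d[i] for i in range(3)) for r in range(3))
        if zc <= 0:  # point is behind the camera
            continue
        col = math.floor(f * xc / zc + cx + 0.5)  # round to nearest pixel
        row = math.floor(f * yc / zc + cy + 0.5)
        if 0 <= row < height and 0 <= col < width and zc < depth[row][col]:
            depth[row][col] = zc  # keep only the nearest point (occlusion)
    return depth


def back_project(depth, cam_pos, R, f, cx, cy):
    """Reverse process: turn every valid depth pixel back into a world point."""
    points = []
    for row, line in enumerate(depth):
        for col, zc in enumerate(line):
            if math.isinf(zc):
                continue
            pc = ((col - cx) * zc / f, (row - cy) * zc / f, zc)
            # world point = cam_pos + R^T * pc
            points.append(tuple(cam_pos[i] + sum(R[r][i] * pc[r] for r in range(3))
                                for i in range(3)))
    return points
```

Keeping only the nearest depth per pixel is exactly what removes occluded objects from the image, the limitation of a single depth view noted above.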

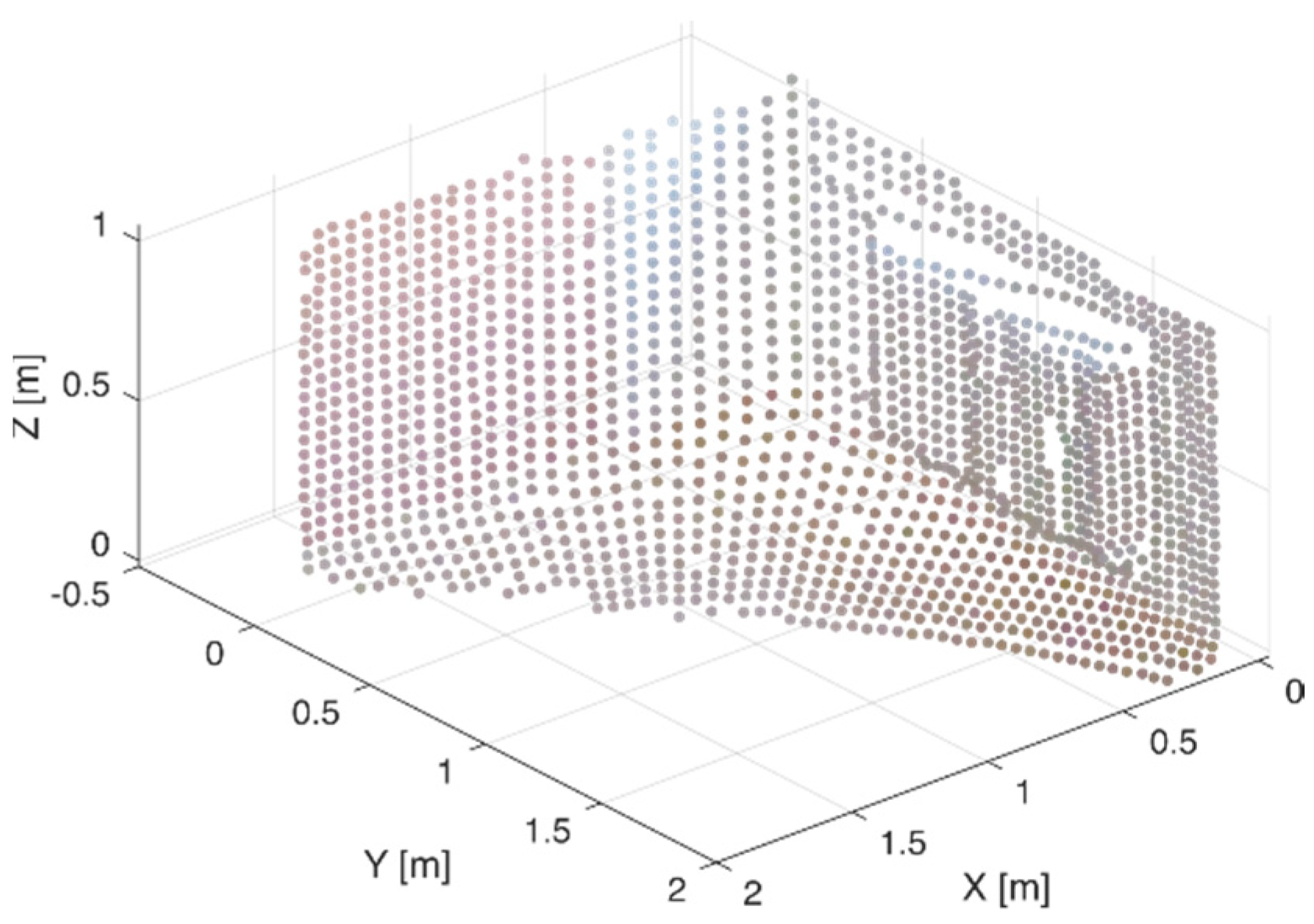

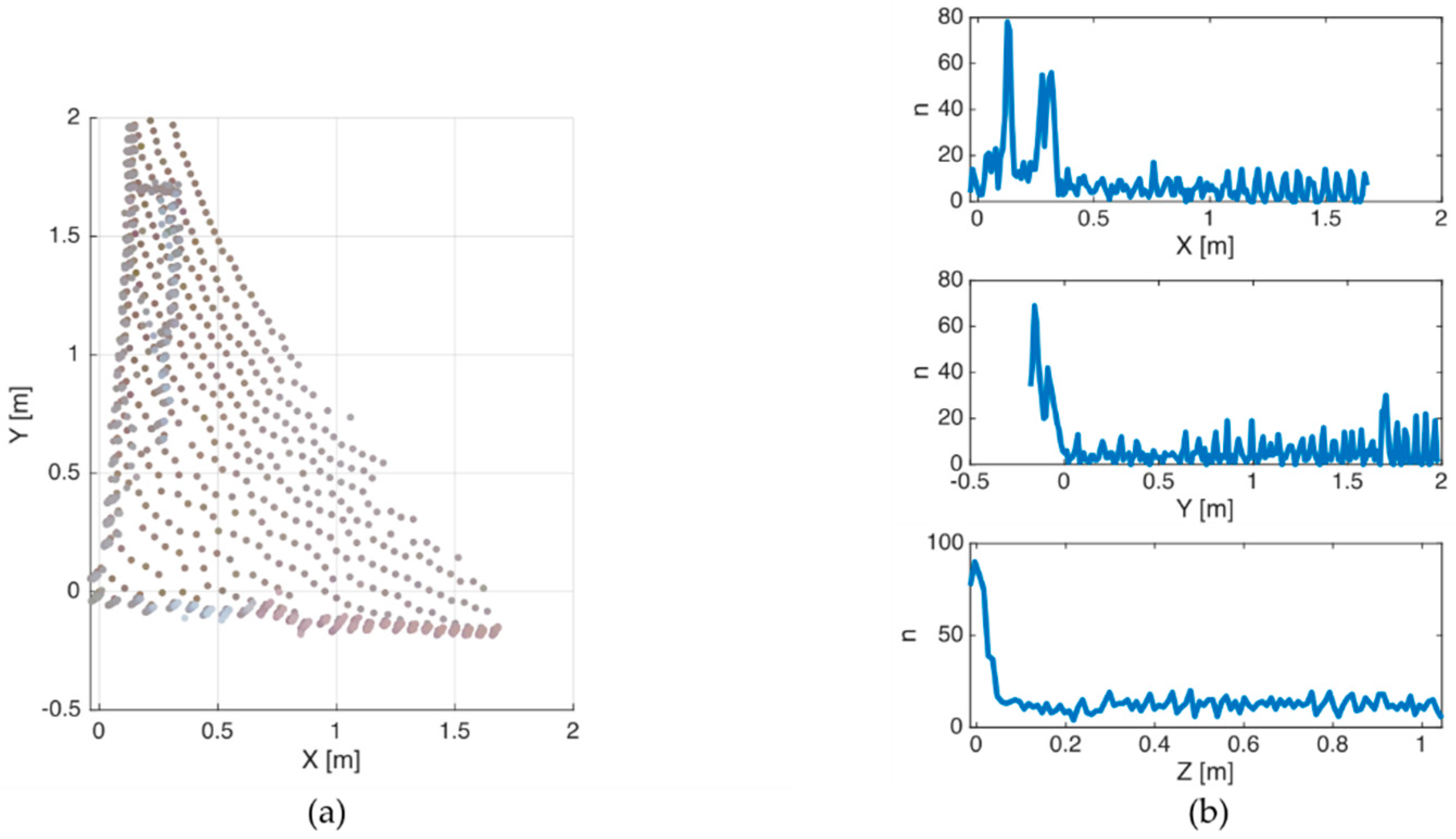

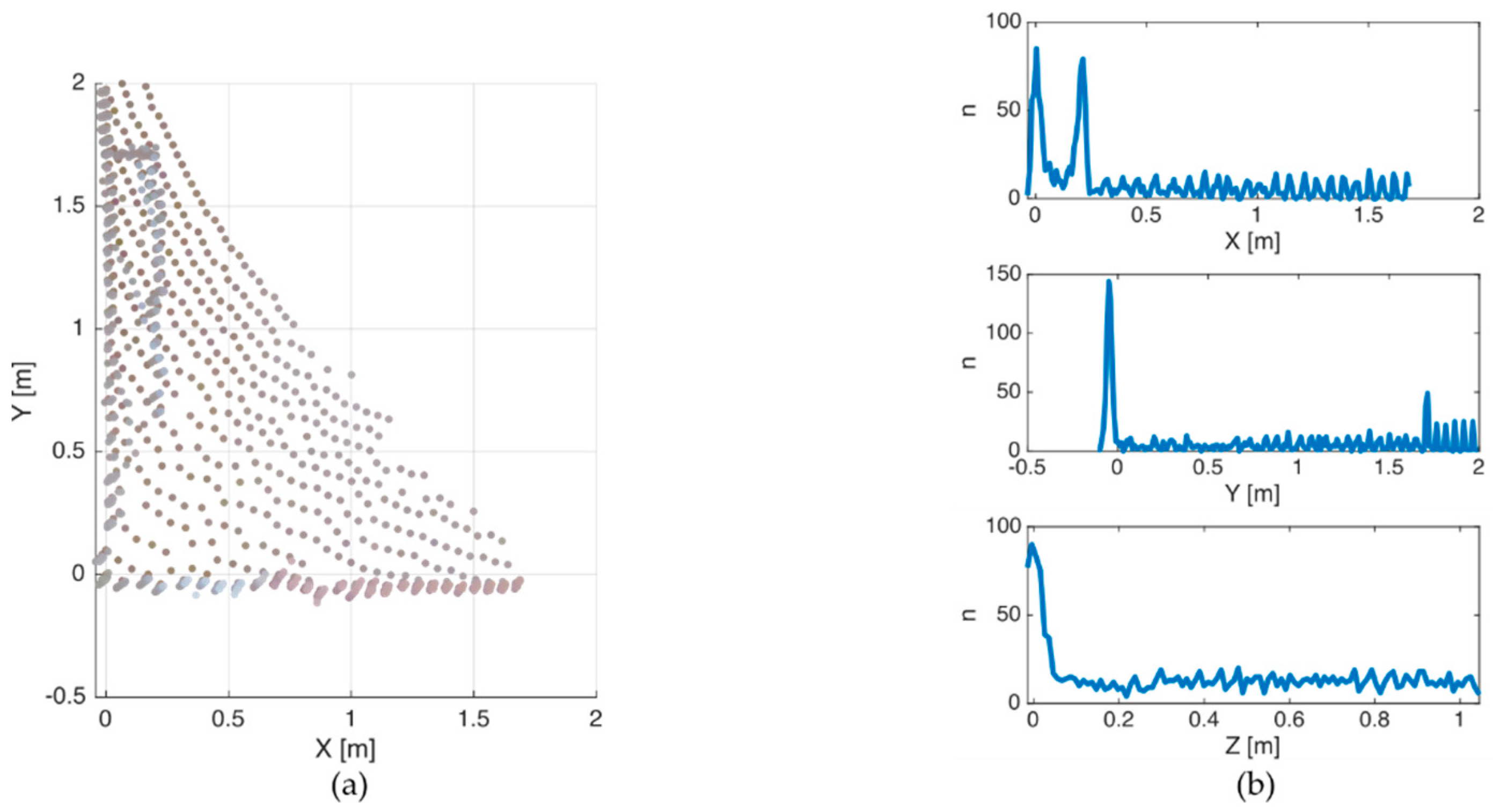

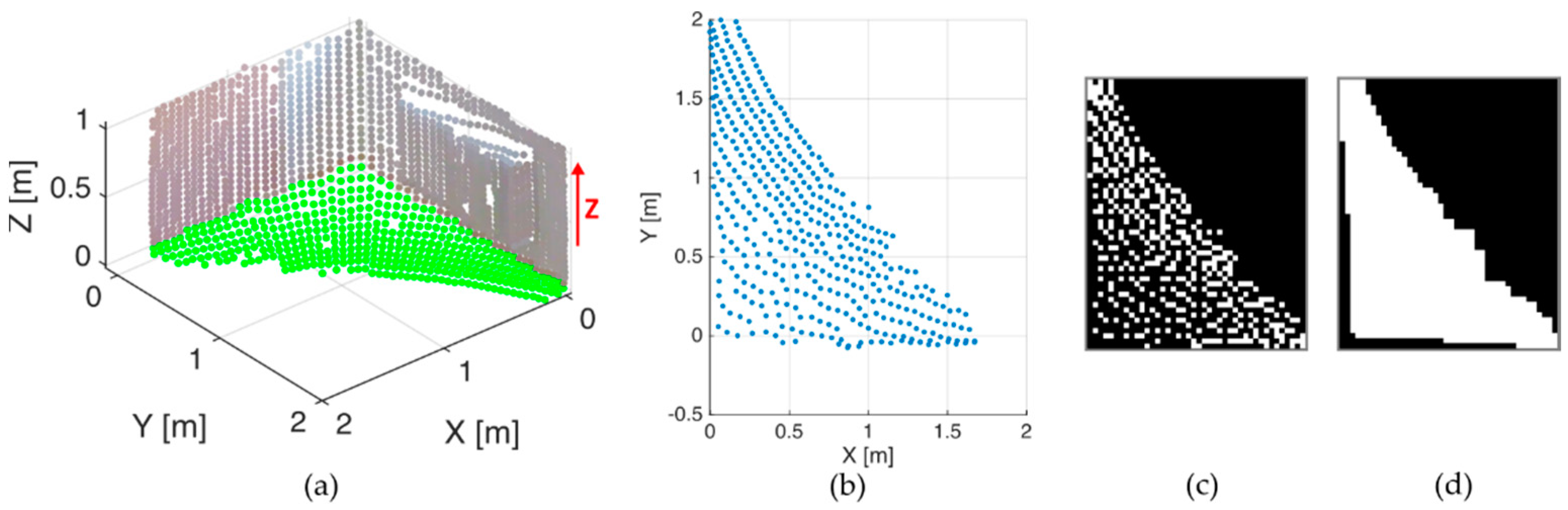

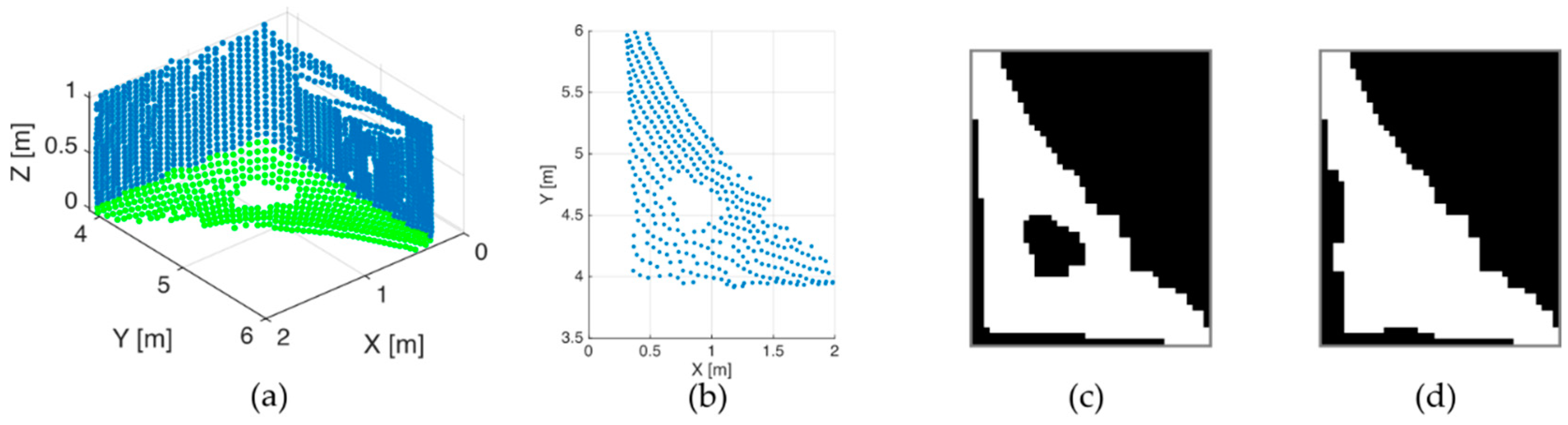

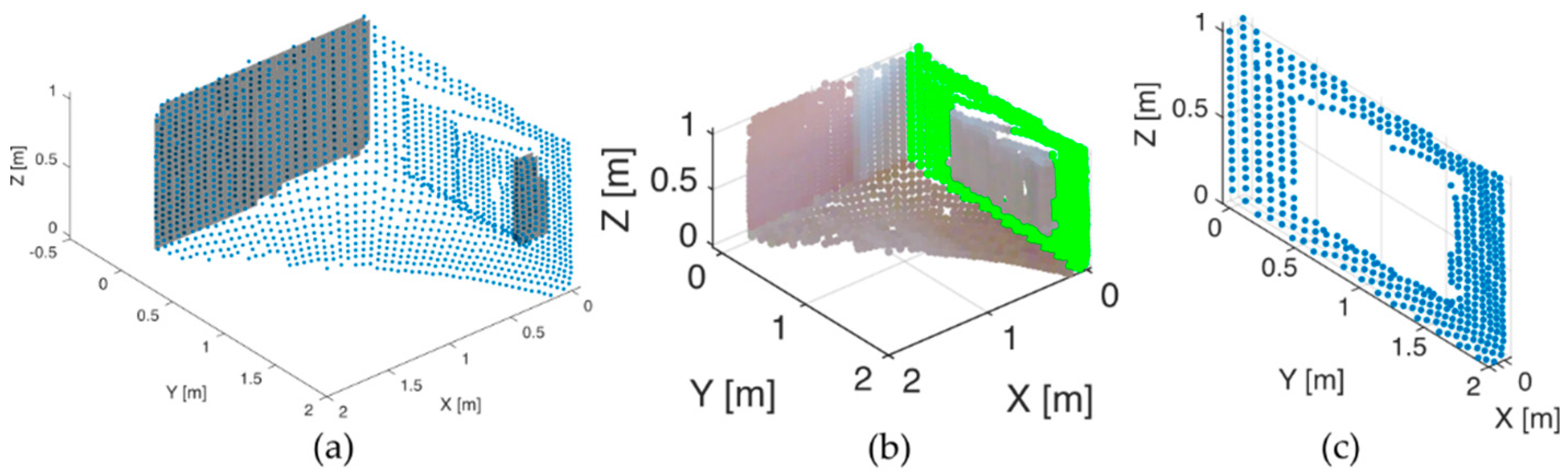

In the papers [

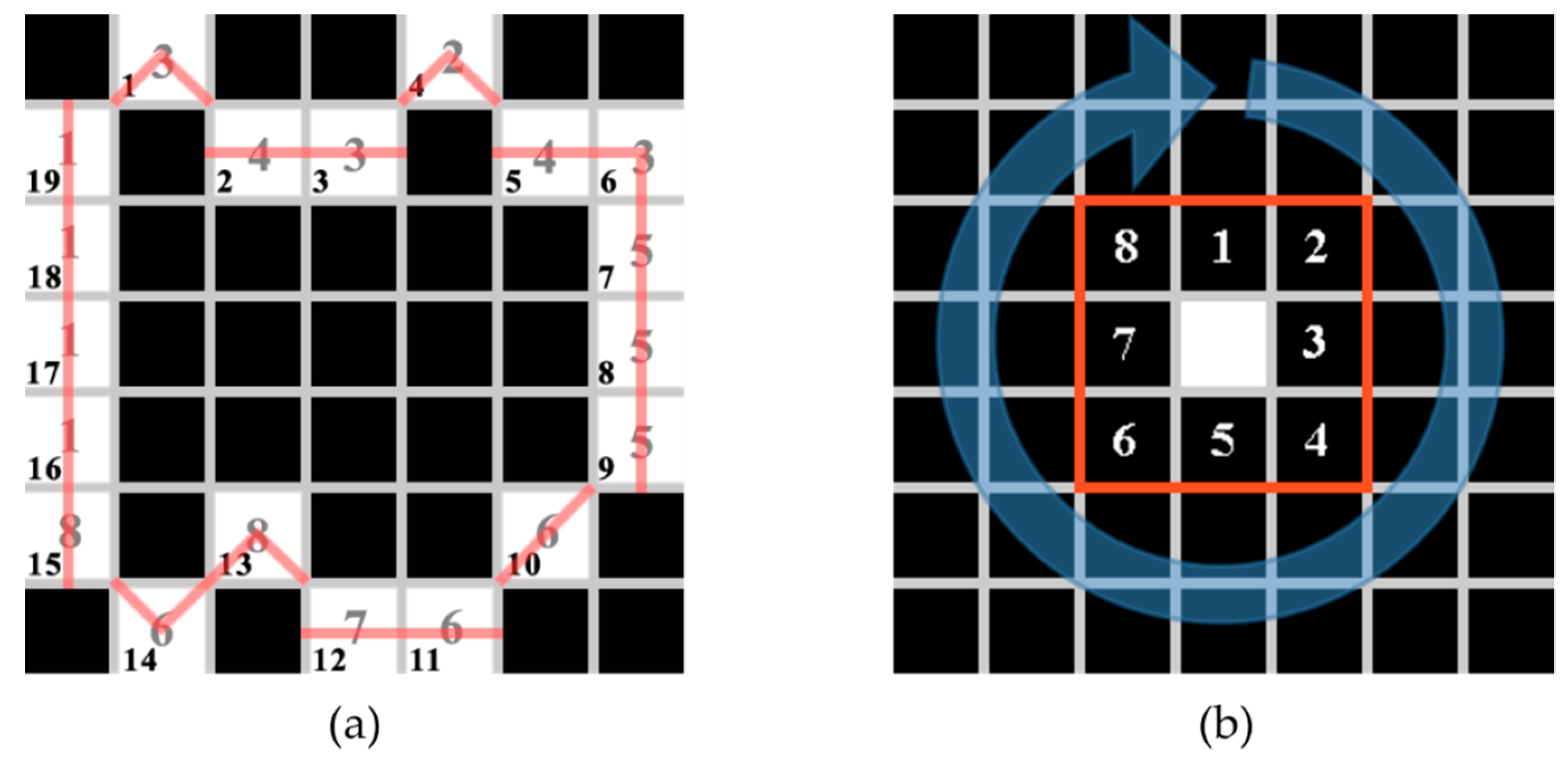

29,

30], we introduced a novel algorithm called the Level Connected Component Labeling (LCCL) for global 3D data analysis in terms of detecting global levels with high data concentration in selected space dimensions. A planar surface presence is indicated mainly by the high concentration of points in the particular dimension at the specific level. To find the high point concentration at the specific level is not sufficient for planar surface detection. Several planar surfaces can be present in one of the detected levels. Thus, we are using space quantization into an image for this purpose. This allows the use of classically connected labeling to separate individual planar surfaces. A planar surface is presented by the tool called Level Image (

), which is described in references [29,30,31,32]. For each

, its origin and rotation angle are known. The position of the surface in the selected dimension is calculated as the average of that coordinate over the points on the surface. The LCCL output also provides statistical parameters such as the mean value, variance, standard deviation, and data mode, which describe the quality of the detected planar surface.
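The two steps described above, finding levels with a high point concentration in one dimension and separating the surfaces within a level by connected component labeling, can be illustrated with a simplified sketch. It is not the LCCL implementation of references [29,30]: the histogram binning, the `min_count` threshold, the 4-connectivity, and all function names are assumptions. Only the use of classical connected component labeling and of the mean, variance, standard deviation, and mode follows the text.

```python
import statistics
from collections import deque


def dense_levels(values, bin_size, min_count):
    """Histogram one coordinate (e.g. z) and return the bin indices whose
    count reaches min_count: candidate levels with high point concentration."""
    counts = {}
    for v in values:
        b = int(v // bin_size)
        counts[b] = counts.get(b, 0) + 1
    return sorted(b for b, c in counts.items() if c >= min_count)


def label_components(image):
    """Classical 4-connected component labeling of a binary image (BFS).
    Returns (labels, count); label 0 is background, 1..count are surfaces."""
    rows, cols = len(image), len(image[0])
    labels = [[0] * cols for _ in range(rows)]
    current = 0
    for r in range(rows):
        for c in range(cols):
            if image[r][c] and not labels[r][c]:
                current += 1
                labels[r][c] = current
                queue = deque([(r, c)])
                while queue:
                    y, x = queue.popleft()
                    for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                        if (0 <= ny < rows and 0 <= nx < cols
                                and image[ny][nx] and not labels[ny][nx]):
                            labels[ny][nx] = current
                            queue.append((ny, nx))
    return labels, current


def level_statistics(values):
    """Statistics of the selected coordinate over the points of one surface."""
    return {"mean": statistics.mean(values),
            "variance": statistics.pvariance(values),
            "std": statistics.pstdev(values),
            "mode": statistics.mode(values)}
```

In a full pipeline, the points of each dense level would first be quantized into a binary image, and the statistics would then be computed separately for each labeled surface.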

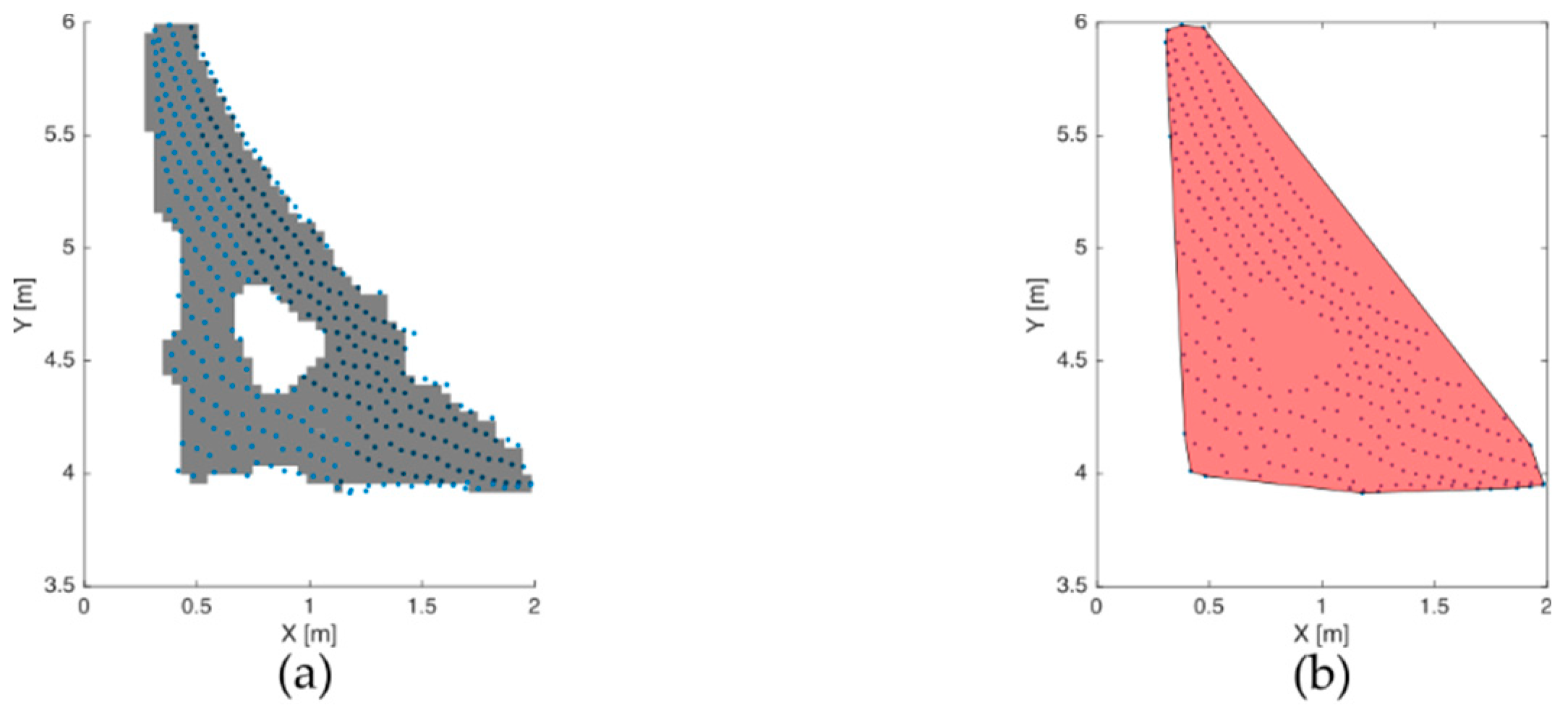

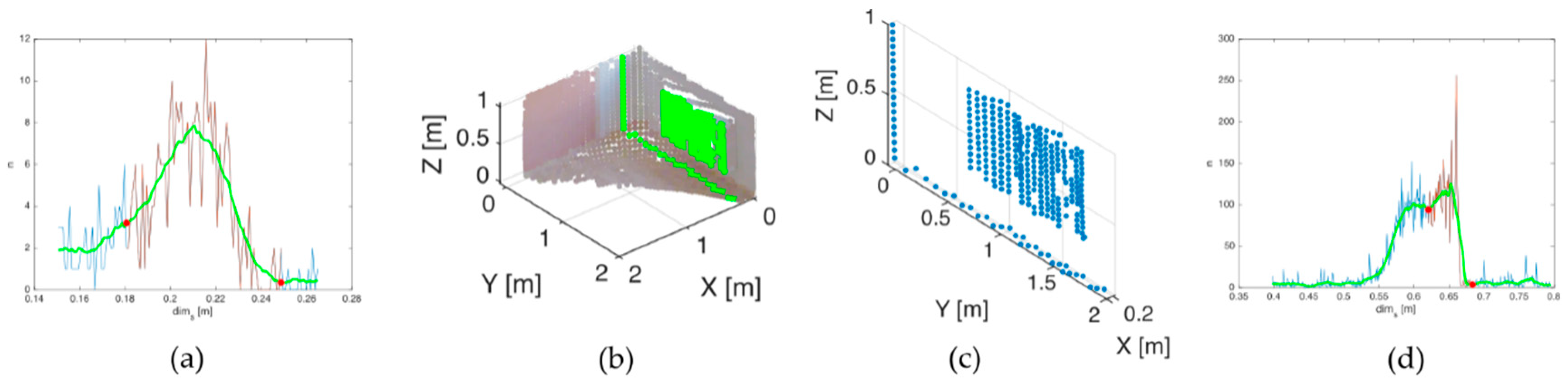

The connection of a

with image processing methods makes it possible to express the area and perimeter of a planar surface [30] or to visualize it interactively [31], including color presentation when available. One of our most recent works [32] deals with fine plane range estimation. In this paper, we present further methods for point cloud preprocessing and processing that we have not yet published; they help to decrease the number of points needed to describe a planar surface, fill gaps in point clouds, and obtain other important spatial features.
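One simple way to obtain the area and perimeter of a planar surface from its image, shown here as an assumption rather than as the method of reference [30], is to count occupied pixels for the area and the pixel edges on the surface boundary for the perimeter. The function name and the binary list-of-lists image format are illustrative.

```python
def image_area_perimeter(image, cell_size):
    """Area = occupied pixels * cell area; perimeter = number of pixel edges
    separating an occupied cell from an empty or out-of-image cell * cell size."""
    rows, cols = len(image), len(image[0])

    def occupied(r, c):
        return 0 <= r < rows and 0 <= c < cols and image[r][c] == 1

    pixels, edges = 0, 0
    for r in range(rows):
        for c in range(cols):
            if occupied(r, c):
                pixels += 1
                # every side facing a non-surface cell belongs to the boundary
                for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    if not occupied(r + dr, c + dc):
                        edges += 1
    return pixels * cell_size ** 2, edges * cell_size
```

For an L-shaped surface made of 12 unit pixels, this count gives the exact rectilinear area (12) and perimeter (16). For slanted boundaries, however, the staircase of pixel edges over-estimates the true perimeter (by a factor of √2 for a 45° edge), so a vectorized boundary is needed for precise values.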