SSNet: Learning Mid-Level Image Representation Using Salient Superpixel Network

Abstract

1. Introduction

- (1) Susceptibility to burstiness. Burstiness is the phenomenon whereby frequently occurring features, which often carry little information about the object, influence the image representation more than rarely occurring ones in natural images [10,11]. As shown in Figure 1, many similar, weakly informative features occur in the background and weaken the discriminative power of the representation. To address this, Russakovsky et al. [12] and Angelova et al. [13] use location information to separate foreground from background features before forming the image representation. These methods enhance the discriminative ability of the representation, but training an object detector is time-consuming. Ji et al. [14] and Sharma et al. [15] build the image representation by using saliency maps to weight the corresponding visual features; however, they ignore the differences between features within the detected salient regions. Normalization and pooling operations [16,17] have also been proposed to deal with burstiness. However, these usually ignore the spatial relationships between features, which are important for alleviating burstiness.

- (2) Ignoring high-order information. The traditional BoVW method and its variants usually form the image representation from first-order statistics of the features. However, high-order information can provide a more accurate representation of images [18,19], and its absence limits performance. To address this, Huang et al. [20] and Han et al. [21] use high-order information to build feature descriptors. However, simply introducing high-order information into the design of the feature descriptor contributes little to improving image classification performance. Sanchez et al. [22] and Li et al. [23] introduce second-order information in the encoding procedure, but they only use the second-order information of each visual word itself and ignore the relations between visual words. To address this, [18,19,24] introduce high-order information between visual words through the pooling strategy.
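The difference between first-order statistics and second-order relations between visual words can be seen in a minimal sketch. It assumes a coding matrix whose N rows are the codes of an image's local features over K visual words and uses plain, unweighted average pooling; the function names and toy data are hypothetical, and this is not the formulation used in [18,19,24].

```python
from typing import List

Matrix = List[List[float]]


def first_order_average_pooling(codes: Matrix) -> List[float]:
    """Average the N code vectors (rows) into one K-dimensional vector."""
    n, k = len(codes), len(codes[0])
    return [sum(row[j] for row in codes) / n for j in range(k)]


def second_order_average_pooling(codes: Matrix) -> Matrix:
    """Average of the outer products c c^T over the N codes (a K x K matrix).
    Entry (i, j) measures how strongly visual words i and j respond together."""
    n, k = len(codes), len(codes[0])
    return [[sum(row[i] * row[j] for row in codes) / n for j in range(k)]
            for i in range(k)]


# Two toy images with identical first-order statistics but different co-occurrence.
image_a = [[1.0, 1.0, 0.0], [0.0, 0.0, 1.0]]  # words 0 and 1 fire together
image_b = [[1.0, 0.0, 0.0], [0.0, 1.0, 1.0]]  # words 1 and 2 fire together

print(first_order_average_pooling(image_a))         # [0.5, 0.5, 0.5]
print(first_order_average_pooling(image_b))         # [0.5, 0.5, 0.5]
print(second_order_average_pooling(image_a)[0][1])  # 0.5
print(second_order_average_pooling(image_b)[0][1])  # 0.0
```

Both toy images have the same first-order vector, so a representation built only from it cannot separate them; the off-diagonal entries of the second-order matrix record which visual words respond together and do tell them apart.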

- (1) A fast saliency detection model based on the Gestalt grouping principle is proposed to highlight the salient regions in the image. Using this saliency strategy, only the features inside the salient regions are extracted to represent the image. As a result, interference from burstiness in the non-salient regions is weakened.

- (2) Based on the similarity and spatial relationships between features, we propose a novel weighting scheme that balances the influence of frequently and rarely occurring features and thereby reduces the side effects of burstiness.

- (3) To introduce high-order information and further reduce the influence of burstiness, we apply power normalization on top of the proposed weighted second-order pooling operation. Consequently, a more discriminative representation is obtained.
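Contributions (2) and (3) can be illustrated with a small sketch. The weighting rule below is a hypothetical stand-in, not the paper's Equation (25): it assumes each local feature has a code vector and an image position, and down-weights a feature by the number of other features that are both similar to it (cosine similarity of at least `sim_thresh`) and spatially close to it (within `radius`). The weighted pooled matrix is then compressed with element-wise signed power normalization (`alpha = 0.5`); the thresholds, function names, and toy data are illustrative assumptions.

```python
import math
from typing import List, Sequence, Tuple

Vector = List[float]
Matrix = List[List[float]]


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two code vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na > 0 and nb > 0 else 0.0


def burstiness_weights(codes: List[Vector], positions: List[Tuple[float, float]],
                       sim_thresh: float = 0.9, radius: float = 10.0) -> Vector:
    """Hypothetical rule: w_n = 1 / (1 + number of other features that are both
    similar to feature n in code space and spatially close to it)."""
    weights = []
    for n, (c_n, p_n) in enumerate(zip(codes, positions)):
        bursts = sum(
            1
            for m, (c_m, p_m) in enumerate(zip(codes, positions))
            if m != n and cosine(c_n, c_m) >= sim_thresh
            and math.dist(p_n, p_m) <= radius
        )
        weights.append(1.0 / (1 + bursts))
    return weights


def weighted_second_order_pooling(codes: List[Vector], weights: Vector) -> Matrix:
    """G = sum_n w_n c_n c_n^T / sum_n w_n (a K x K matrix)."""
    k, total = len(codes[0]), sum(weights)
    return [[sum(w * c[i] * c[j] for c, w in zip(codes, weights)) / total
             for j in range(k)] for i in range(k)]


def power_normalize(mat: Matrix, alpha: float = 0.5) -> Matrix:
    """Element-wise signed power normalization: sign(x) * |x| ** alpha."""
    return [[math.copysign(abs(x) ** alpha, x) for x in row] for row in mat]


# Four near-identical background features (a burst) and one rare feature.
codes = [[1.0, 0.0] for _ in range(4)] + [[0.0, 1.0]]
positions = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0), (50.0, 50.0)]

weights = burstiness_weights(codes, positions)             # [0.25, 0.25, 0.25, 0.25, 1.0]
uniform = weighted_second_order_pooling(codes, [1.0] * 5)  # [[0.8, 0.0], [0.0, 0.2]]
weighted = weighted_second_order_pooling(codes, weights)   # [[0.5, 0.0], [0.0, 0.5]]
normalized = power_normalize(weighted)                     # diagonal entries = sqrt(0.5)
```

Without weighting, the burst dominates the diagonal of the pooled matrix (0.8 versus 0.2); with the sketched weights, the four repeated background features and the single rare feature contribute equally, and power normalization further compresses large entries.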

2. Related Work

2.1. Research on Mid-Level Image Representation

2.2. Methods of Extracting the Off-the-Shelf CNN Feature

2.3. Research Work about Burstiness Issue

2.4. Image Representation with Second-Order Information

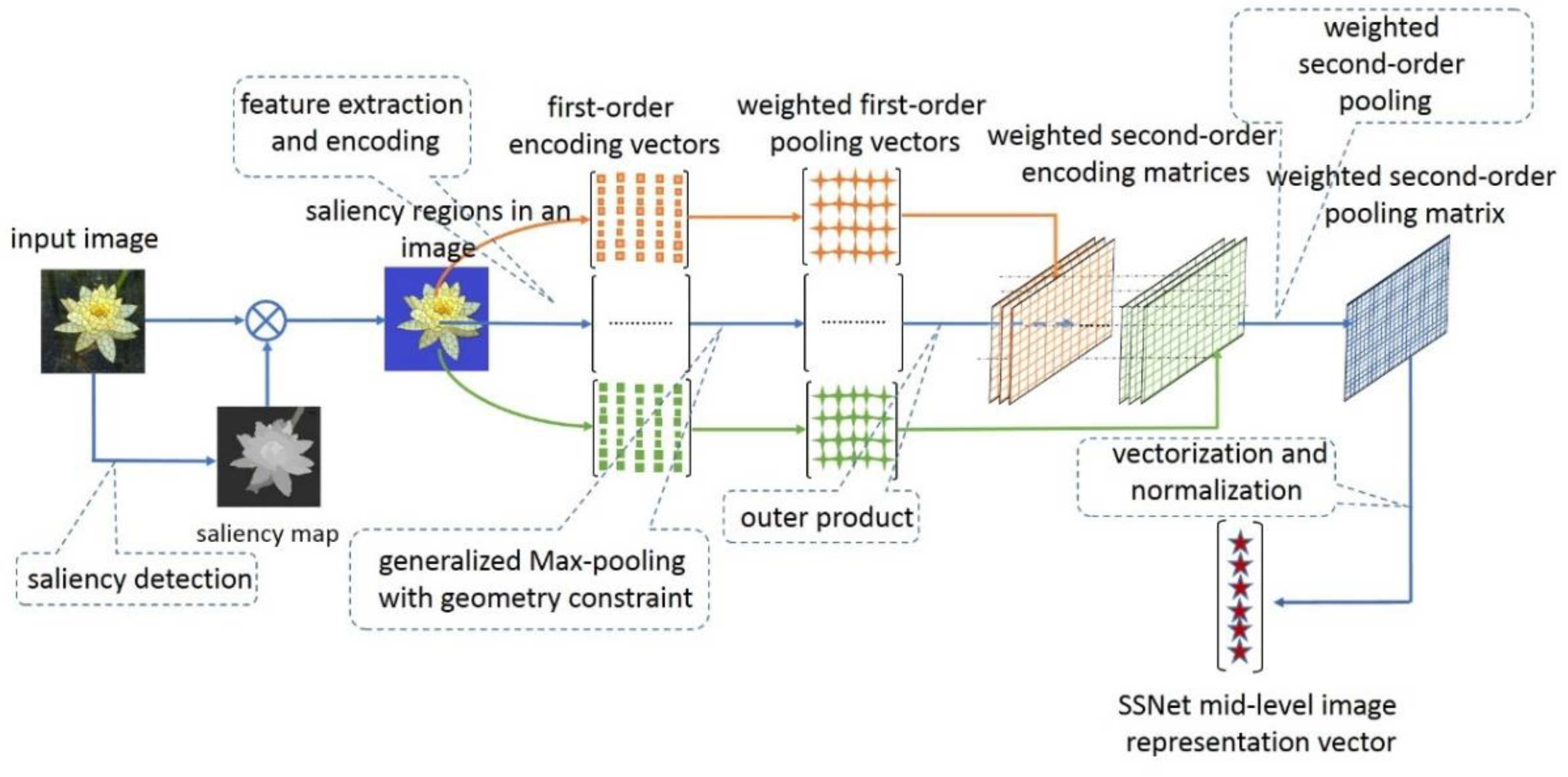

3. The Proposed Method for Image Representation

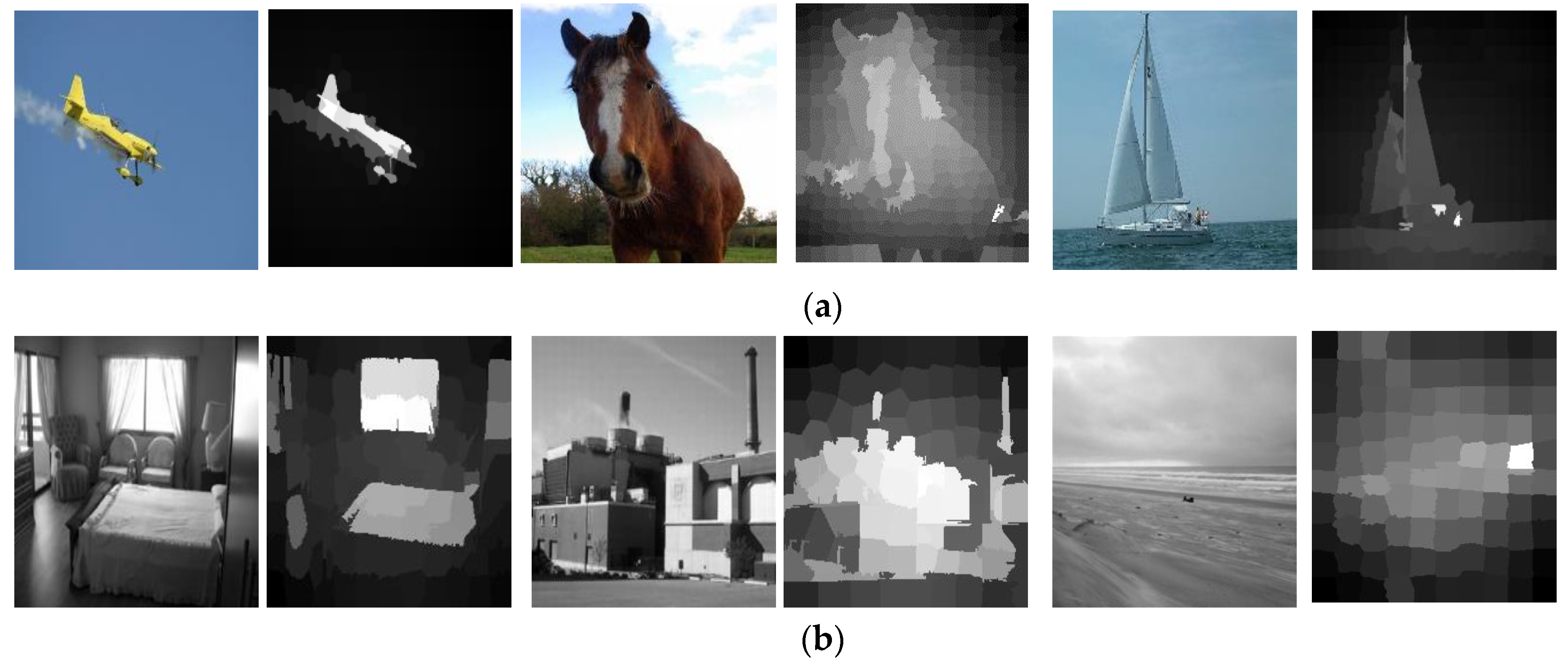

3.1. Saliency Region Detection

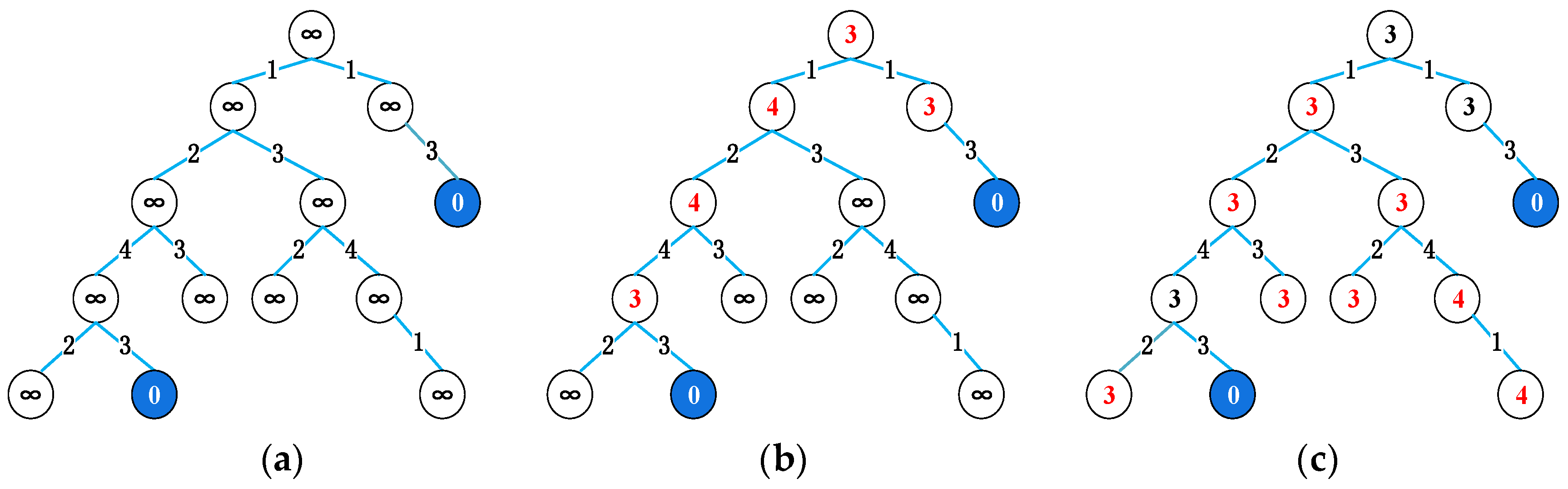

3.1.1. Measuring the Gestalt Grouping Connectedness

3.1.2. Saliency Map Generation

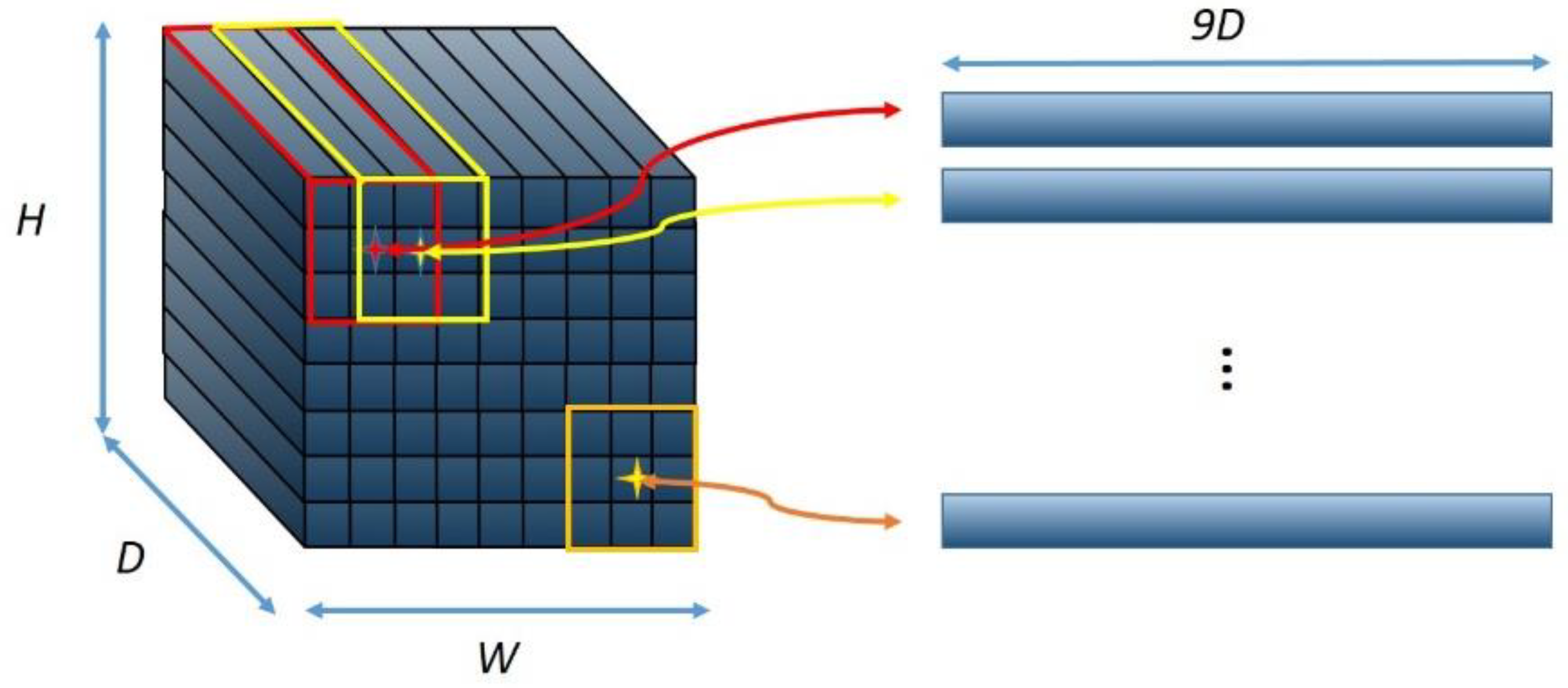

3.2. Image Representation with the Weighted Second-Order Pooling

3.2.1. The Proposed Feature Weighting Method

Generalized Max-Pooling Method

Weighting Scheme with Geometry Constraint

3.2.2. Weighted Second-Order Pooling

3.2.3. Vectorization and Normalization

3.3. The Mid-Level Image Representation Based on SSNet

| Algorithm 1: Generating the SSNet Mid-Level Image Representation |
|---|
| Input: (a) the input image; (b) the ratio between the number of chosen salient superpixels and the total number of superpixels in the image; (c) the reduced dimension of the local features; (d) the reduced dimension of the encoding vectors |
| Output: the final mid-level representation of the input image |
| 1. Compute the saliency map of the image. |
| 2. Compute S, the number of salient regions used to represent the image, using Equation (33). |
| 3. Choose the S salient regions with the highest saliency values. |
| 4. For i = 1 to S: |
| 4.1 Extract dense SIFT or CNN local features from the i-th salient region and reduce their dimensionality to the dimension given in (c). |
| 4.2 Encode the features, reduce the dimensionality of the codes to the dimension given in (d), and obtain the coding matrix of the region. |
| 4.3 Compute the weights of the codes using Equation (25). |
| 4.4 Compute the first-order pooling vector of the i-th salient region using Equation (26). |
| End for |
| 5. Compute the weighted second-order pooling matrix of the image using Equation (27) or Equation (28). |
| 6. Map or normalize the pooled matrix using Equation (29) or Equation (30). |
| 7. Obtain the final representation of the image by vectorization using Equation (31) or Equation (32). |
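The steps of Algorithm 1 after saliency detection can be sketched as follows. This is a minimal sketch under stated assumptions, not the authors' implementation: the saliency value of each superpixel and the codes and weights of each region are taken as given, and the forms used for Equation (33) (S as a rounded fraction of the superpixel count), Equation (26) (weighted average of a region's codes), Equations (27)/(28) (average or element-wise max of outer products), step 6 (element-wise signed square root), and step 7 (upper triangle) are all assumptions. Every function name is hypothetical.

```python
import math
from typing import List, Sequence

Vector = List[float]
Matrix = List[List[float]]


def num_salient_regions(ratio: float, n_superpixels: int) -> int:
    """Hypothetical stand-in for Equation (33): keep a fraction of the superpixels."""
    return max(1, round(ratio * n_superpixels))


def top_s_regions(saliency: Sequence[float], s: int) -> List[int]:
    """Step 3: indices of the S superpixels with the highest saliency values."""
    return sorted(range(len(saliency)), key=lambda i: saliency[i], reverse=True)[:s]


def region_vector(codes: Matrix, weights: Vector) -> Vector:
    """Steps 4.3-4.4 (assumed form): weighted average of one region's codes."""
    total = sum(weights)
    return [sum(w * c[j] for c, w in zip(codes, weights)) / total
            for j in range(len(codes[0]))]


def second_order(vectors: List[Vector], mode: str = "avg") -> Matrix:
    """Step 5 (assumed form): average or element-wise max of outer products p p^T."""
    k = len(vectors[0])
    outers = [[[p[i] * p[j] for j in range(k)] for i in range(k)] for p in vectors]
    if mode == "avg":
        return [[sum(o[i][j] for o in outers) / len(outers) for j in range(k)]
                for i in range(k)]
    if mode == "max":
        return [[max(o[i][j] for o in outers) for j in range(k)] for i in range(k)]
    raise ValueError(f"unknown pooling mode: {mode}")


def power_normalize(mat: Matrix, alpha: float = 0.5) -> Matrix:
    """Step 6 (one possible choice): element-wise signed power normalization."""
    return [[math.copysign(abs(x) ** alpha, x) for x in row] for row in mat]


def vectorize_upper(mat: Matrix) -> Vector:
    """Step 7 (assumed form): upper triangle of the symmetric matrix, diagonal included."""
    n = len(mat)
    return [mat[i][j] for i in range(n) for j in range(i, n)]


def ssnet_like_representation(saliency, region_codes, region_weights,
                              ratio: float, mode: str = "max") -> Vector:
    """Chain steps 2-7 for one image whose per-region codes are already computed."""
    s = num_salient_regions(ratio, len(saliency))
    chosen = top_s_regions(saliency, s)
    vectors = [region_vector(region_codes[r], region_weights[r]) for r in chosen]
    return vectorize_upper(power_normalize(second_order(vectors, mode)))


# Toy image: four superpixels with 2-dimensional codes.
saliency = [0.9, 0.1, 0.7, 0.2]
region_codes = [[[1.0, 0.0], [0.0, 1.0]], [[1.0, 1.0]], [[0.0, 4.0]], [[2.0, 2.0]]]
region_weights = [[1.0, 1.0], [1.0], [1.0], [1.0]]

print(ssnet_like_representation(saliency, region_codes, region_weights, ratio=0.5))
# → [0.5, 0.5, 4.0]  (regions 0 and 2 are kept, max pooling)
```

The max variant keeps, for each pair of code dimensions, the strongest co-response among the selected regions, so a pattern repeated across many regions is not counted repeatedly; the average variant instead yields a symmetric positive semidefinite matrix, which (after adding a small ridge term) is the kind of input a matrix-logarithm mapping expects.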

4. Experiments and Results

4.1. Experimental Setting

4.2. Benchmark Datasets

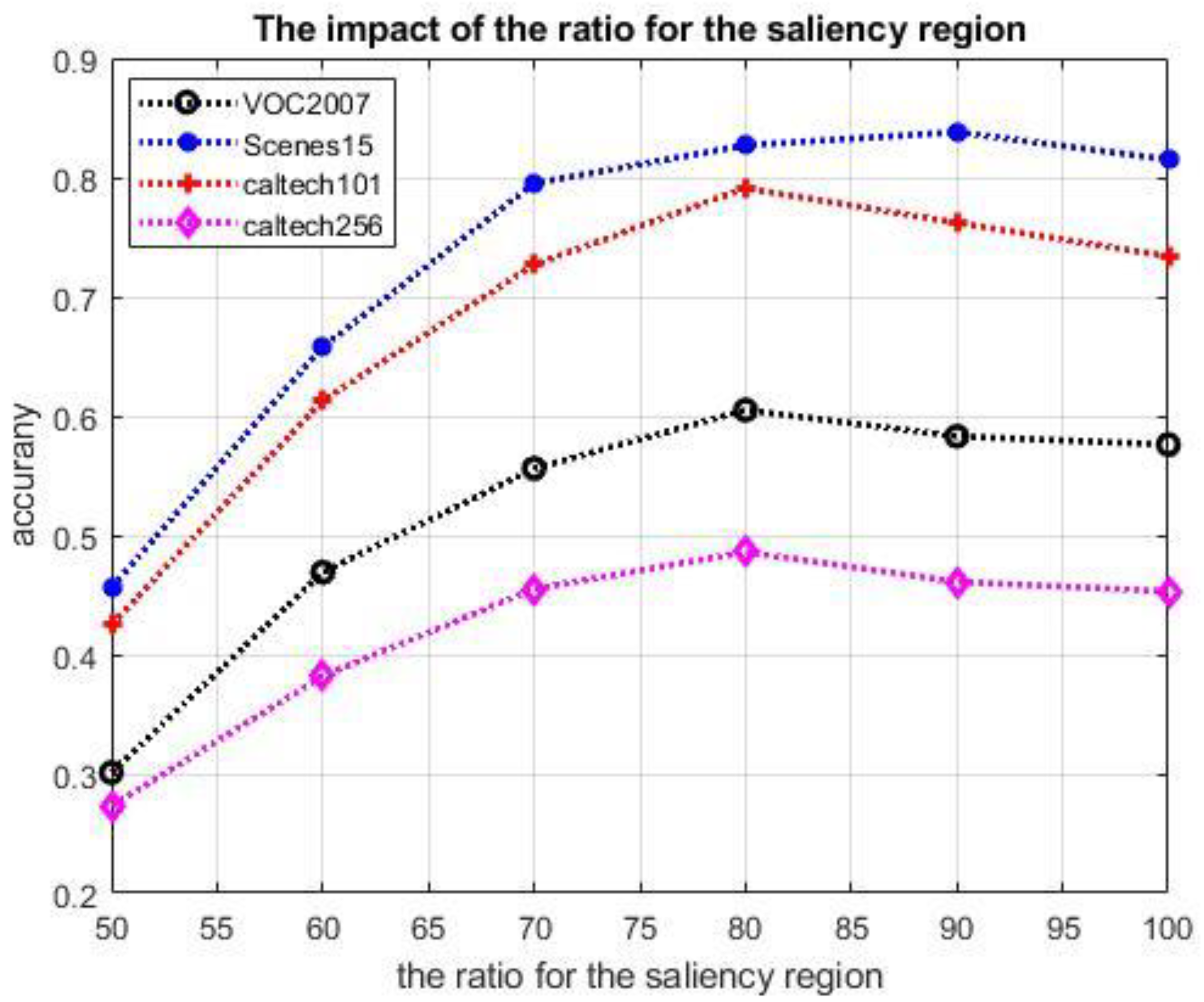

4.3. Effectiveness Evaluation of the Proposed Saliency Detection Scheme for Image Classification

4.4. Effectiveness Evaluation of Weighted Second-Order Pooling

4.5. Performance Analysis of Mid-Level Representation Based on SSNet

4.5.1. Comparison with Related BoVW Baselines

4.5.2. Comparison with the Baselines and State-of-the-Art Methods with the CNN Local Features

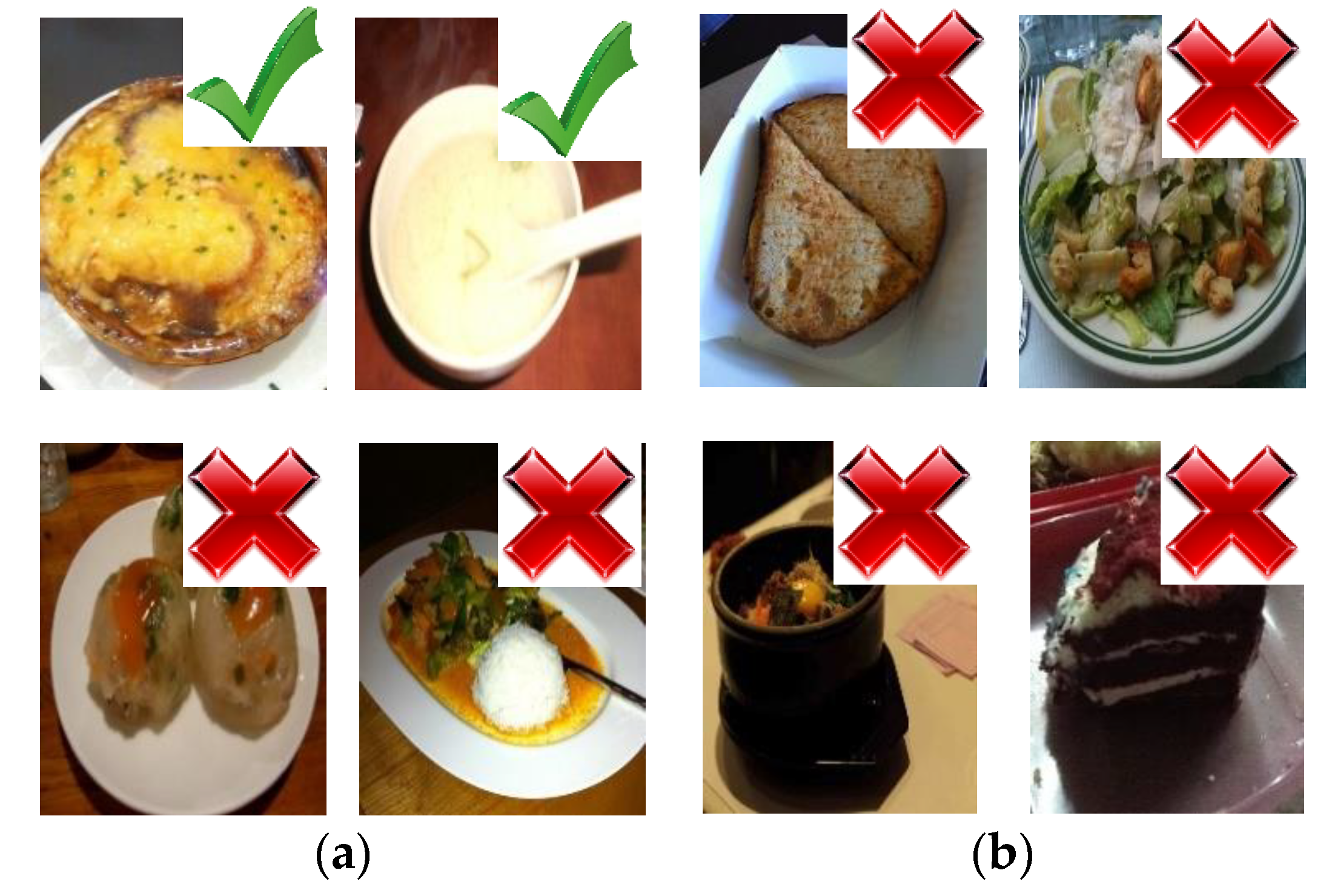

4.5.3. Performance of the SSNet Mid-Level Image Representation in Practical Applications

4.6. Limitations

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Csurka, G.; Dance, C.; Fan, L.; Willamowski, J.; Bray, C. Visual categorization with bags of keypoints. In Proceedings of the Workshop on Statistical Learning in Computer Vision, European Conference on Computer Vision, Prague, Czech Republic, 11–14 May 2004; pp. 1–22.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90.

- Yang, J.C.; Yu, K.; Gong, Y.H.; Huang, T. Linear Spatial Pyramid Matching Using Sparse Coding for Image Classification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; Volumes 1–4, pp. 1794–1801.

- Wang, J.J.; Yang, J.C.; Yu, K.; Lv, F.J.; Huang, T.; Gong, Y.H. Locality-constrained Linear Coding for Image Classification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; pp. 3360–3367.

- Jegou, H.; Douze, M.; Schmid, C.; Perez, P. Aggregating local descriptors into a compact image representation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; pp. 3304–3311.

- Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

- Lazebnik, S.; Schmid, C.; Ponce, J. Beyond bags of features: Spatial pyramid matching for recognizing natural scene categories. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, New York, NY, USA, 17–22 June 2006; pp. 2169–2178.

- Gong, Y.; Wang, L.; Guo, R.; Lazebnik, S. Multi-scale Orderless Pooling of Deep Convolutional Activation Features. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; Volume 8695, pp. 392–407.

- Liu, L.; Shen, C.; van den Hengel, A. The Treasure beneath Convolutional Layers: Cross-convolutional-layer Pooling for Image Classification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 4749–4757.

- Jegou, H.; Douze, M.; Schmid, C. On the burstiness of visual elements. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; Volume 4, pp. 1169–1176.

- Murray, N.; Jegou, H.; Perronnin, F.; Zisserman, A. Interferences in Match Kernels. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1797–1810.

- Russakovsky, O.; Lin, Y.; Yu, K.; Li, F.F. Object-Centric Spatial Pooling for Image Classification. In Proceedings of the European Conference on Computer Vision, Florence, Italy, 7–13 October 2012; Volume 7573, pp. 1–15.

- Angelova, A.; Zhu, S. Efficient object detection and segmentation for fine-grained recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 811–818.

- Ji, Z.; Wang, J.; Su, Y.; Song, Z.; Xing, S. Balance between object and background: Object-enhanced features for scene image classification. Neurocomputing 2013, 120, 15–23.

- Sharma, G.; Jurie, F.; Schmid, C. Discriminative Spatial Saliency for Image Classification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 3506–3513.

- Murray, N.; Perronnin, F. Generalized Max Pooling. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 2473–2480.

- Koniusz, P.; Yan, F.; Mikolajczyk, K. Comparison of mid-level feature coding approaches and pooling strategies in visual concept detection. Comput. Vis. Image Underst. 2013, 117, 479–492.

- Carreira, J.; Caseiro, R.; Batista, J.; Sminchisescu, C. Free-Form Region Description with Second-Order Pooling. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1177–1189.

- Koniusz, P.; Yan, F.; Gosselin, P.H.; Mikolajczyk, K. Higher-Order Occurrence Pooling for Bags-of-Words: Visual Concept Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 313–326.

- Huang, D.; Zhu, C.; Wang, Y.; Chen, L. HSOG: A Novel Local Image Descriptor Based on Histograms of the Second-Order Gradients. IEEE Trans. Image Process. 2014, 23, 4680–4695.

- Han, X.H.; Chen, Y.W.; Xu, G. High-Order Statistics of Weber Local Descriptors for Image Representation. IEEE Trans. Cybern. 2015, 45, 1180–1193.

- Sanchez, J.; Perronnin, F.; Mensink, T.; Verbeek, J. Image Classification with the Fisher Vector: Theory and Practice. Int. J. Comput. Vis. 2013, 105, 222–245.

- Li, P.; Lu, X.; Wang, Q. From Dictionary of Visual Words to Subspaces: Locality-constrained Affine Subspace Coding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 2348–2357.

- Li, P.; Zeng, H.; Wang, Q.; Shiu, S.C.K.; Zhang, L. High-Order Local Pooling and Encoding Gaussians Over a Dictionary of Gaussians. IEEE Trans. Image Process. 2017, 26, 3372–3384.

- Dalal, N.; Triggs, B. Histograms of oriented gradients for human detection. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Diego, CA, USA, 20–26 June 2005; Volume 1, pp. 886–893.

- Liu, L.; Wang, L.; Liu, X. In Defense of Soft-assignment Coding. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 2486–2493.

- van Gemert, J.C.; Veenman, C.J.; Smeulders, A.W.M.; Geusebroek, J.M. Visual Word Ambiguity. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 1271–1283.

- Boureau, Y.L.; Bach, F.; LeCun, Y.; Ponce, J. Learning Mid-Level Features For Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; pp. 2559–2566.

- Sicre, R.; Tasli, H.E.; Gevers, T. SuperPixel based Angular Differences as a mid-level Image Descriptor. In Proceedings of the International Conference on Pattern Recognition, Stockholm, Sweden, 24–28 August 2014; pp. 3732–3737.

- Yuan, J.; Wu, Y.; Yang, M. Discovery of collocation patterns: From visual words to visual phrases. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Minneapolis, MN, USA, 17–22 June 2007; Volumes 1–8, pp. 1930–1938.

- Chatfield, K.; Simonyan, K.; Vedaldi, A.; Zisserman, A. Return of the Devil in the Details: Delving Deep into Convolutional Nets. arXiv 2014, arXiv:1405.3531. Available online: https://arxiv.org/abs/1405.3531 (accessed on 5 November 2014).

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2014, arXiv:1409.1556. Available online: https://arxiv.org/abs/1409.1556 (accessed on 10 April 2015).

- Lin, T.Y.; Dollar, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 936–944.

- Ren, S.; He, K.; Girshick, R.; Zhang, X.; Sun, J. Object Detection Networks on Convolutional Feature Maps. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1476–1481.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Razavian, A.S.; Azizpour, H.; Sullivan, J.; Carlsson, S. CNN Features off-the-shelf: An Astounding Baseline for Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 512–519.

- Tang, P.; Wang, X.; Shi, B.; Bai, X.; Liu, W.; Tu, Z. Deep FisherNet for Image Classification. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 2244–2250.

- Arandjelovic, R.; Gronat, P.; Torii, A.; Pajdla, T.; Sivic, J. NetVLAD: CNN Architecture for Weakly Supervised Place Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 1437–1451.

- Oquab, M.; Bottou, L.; Laptev, I.; Sivic, J. Learning and Transferring Mid-Level Image Representations using Convolutional Neural Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 1717–1724.

- Li, Y.; Liu, L.; Shen, C.; van den Hengel, A. Mining Mid-level Visual Patterns with Deep CNN Activations. Int. J. Comput. Vis. 2017, 121, 344–364.

- Yoo, D.; Park, S.; Lee, J.-Y.; Kweon, I.S. Multi-scale pyramid pooling for deep convolutional representation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Boston, MA, USA, 7–12 June 2015; pp. 71–80.

- Zheng, L.; Zhao, Y.; Wang, S.; Wang, J.; Tian, Q. Good Practice in CNN Feature Transfer. arXiv 2016, arXiv:1604.00133. Available online: https://arxiv.org/abs/1604.00133 (accessed on 1 April 2016).

- Azizpour, H.; Razavian, A.S.; Sullivan, J.; Maki, A.; Carlsson, S. From generic to specific deep representations for visual recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 36–45.

- Liu, L.; Shen, C.; Wang, L.; van den Hengel, A.; Wang, C. Encoding High Dimensional Local Features by Sparse Coding Based Fisher Vectors. In Advances in Neural Information Processing Systems; NIPS: Montreal, QC, Canada, 2014; Volume 27, pp. 1143–1151.

- Zeiler, M.D.; Fergus, R. Visualizing and Understanding Convolutional Networks. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; Volume 8689, pp. 818–833.

- Agrawal, P.; Girshick, R.; Malik, J. Analyzing the Performance of Multilayer Neural Networks for Object Recognition. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; Volume 8695, pp. 329–344.

- Zhang, L.; Gao, Y.; Xia, Y.; Dai, Q.; Li, X. A Fine-Grained Image Categorization System by Cellet-Encoded Spatial Pyramid Modeling. IEEE Trans. Ind. Electron. 2015, 62, 564–571.

- Celikkale, B.; Erdem, A.; Erdem, E. Visual Attention-driven Spatial Pooling for Image Memorability. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 976–983.

- Zhang, L.; Hong, R.; Gao, Y.; Ji, R.; Dai, Q.; Li, X. Image Categorization by Learning a Propagated Graphlet Path. IEEE Trans. Neural Netw. Learn. Syst. 2016, 27, 674–685.

- Perronnin, F.; Sanchez, J.; Mensink, T. Improving the Fisher Kernel for Large-Scale Image Classification. In Proceedings of the European Conference on Computer Vision, Crete, Greece, 5–11 September 2010; Volume 6314, pp. 143–156.

- Tuzel, O.; Porikli, F.; Meer, P. Region covariance: A fast descriptor for detection and classification. In Proceedings of the European Conference on Computer Vision, Graz, Austria, 7–13 May 2006; Volume 3952, pp. 589–600.

- Xu, L.; Ji, Z.; Dempere-Marco, L.; Wang, F.; Hu, X. Gestalt-grouping based on path analysis for saliency detection. Signal Process. Image Commun. 2019, 78, 9–20.

- Tu, W.C.; He, S.; Yang, Q.; Chen, S.Y. Real-Time Salient Object Detection with a Minimum Spanning Tree. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2334–2342.

- Achanta, R.; Shaji, A.; Smith, K.; Lucchi, A.; Fua, P.; Suesstrunk, S. SLIC Superpixels Compared to State-of-the-Art Superpixel Methods. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 2274–2281.

- Dong, Z.; Jia, S.; Zhang, C.; Pei, M.; Wu, Y. Deep Manifold Learning of Symmetric Positive Definite Matrices with Application to Face Recognition. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017; pp. 4009–4015.

- Arsigny, V.; Fillard, P.; Pennec, X.; Ayache, N. Geometric means in a novel vector space structure on symmetric positive-definite matrices. SIAM J. Matrix Anal. Appl. 2007, 29, 328–347.

- Vedaldi, A.; Fulkerson, B. VLFeat: An open and portable library of computer vision algorithms. In Proceedings of the 18th ACM International Conference on Multimedia, Firenze, Italy, 21–25 October 2010; pp. 1469–1472.

- Vedaldi, A.; Lenc, K. MatConvNet: Convolutional Neural Networks for MATLAB. In Proceedings of the 23rd ACM International Conference on Multimedia; ACM: New York, NY, USA, 2015; pp. 689–692.

- Chen, M.; Dhingra, K.; Wu, W.; Yang, L.; Sukthankar, R.; Yang, J. PFID: Pittsburgh fast-food image dataset. In Proceedings of the 16th IEEE International Conference on Image Processing, Piscataway, NJ, USA, 7–10 November 2009; pp. 289–292.

- Bossard, L.; Guillaumin, M.; Van Gool, L. Food-101 – Mining discriminative components with random forests. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 446–461.

- Gao, B.-B.; Wei, X.-S.; Wu, J.; Lin, W. Deep spatial pyramid: The devil is once again in the details. arXiv 2015, arXiv:1504.05277. Available online: https://arxiv.org/abs/1504.05277 (accessed on 23 April 2015).

| Method | PASCAL VOC 2007 | Caltech 101 | Caltech 256 | 15-Scene |
|---|---|---|---|---|
| ScSPM [3] | 51.9 | 73.2 | 37.5 | 80.3 |
| LLC [4] | 58.7 | 75.4 | 46.3 | 82.0 |
| FV [22] | 61.8 | 77.8 | 52.1 | 85.3 |
| VLAD [5] | 55.3 | 77.6 | 48.9 | 83.2 |
| SC + WSOAP | 63.1 | 80.1 | 47.3 | 85.7 |
| SC + WSOMP | 64.9 | 81.9 | 51.8 | 86.1 |
| LLC + WSOAP | 69.8 | 82.7 | 58.2 | 87.4 |
| LLC + WSOMP | 71.3 | 83.1 | 60.3 | 87.9 |
| FV + WSOAP | 72.4 | 86.1 | 66.1 | 89.6 |
| FV + WSOMP | 73.5 | 87.5 | 69.9 | 91.0 |
| VLAD + WSOAP | 69.6 | 84.2 | 61.6 | 86.7 |
| VLAD + WSOMP | 72.1 | 85.6 | 63.2 | 88.2 |

| Method | PASCAL VOC 2007 | Caltech 101 | Caltech 256 | 15-Scene |
|---|---|---|---|---|
| ScSPM [3] | 51.9 | 73.2 | 37.5 | 80.3 |
| LLC [4] | 58.7 | 75.4 | 46.3 | 82.0 |
| FV [22] | 61.8 | 77.8 | 52.1 | 85.3 |
| VLAD [5] | 55.3 | 77.6 | 48.9 | 83.2 |
| SC + SSNet(max) | 66.5 | 83.3 | 53.1 | 87.2 |
| LLC + SSNet(max) | 73.7 | 85.0 | 61.9 | 88.7 |
| FV + SSNet(max) | 76.1 | 89.7 | 72.5 | 90.6 |
| VLAD + SSNet(max) | 74.2 | 87.3 | 65.0 | 89.4 |

| Category | Method | PASCAL VOC 2007 | Caltech 101 | Caltech 256 | 15-Scene |
|---|---|---|---|---|---|
| Baseline | FC-CNN [36] | 84.3 | 89.7 | 81.3 | 90.2 |
| | CL-FV | 85.1 | 86.1 | 79.5 | 90.5 |
| State-of-the-art methods | MOP [8] | 85.5 | 91.8 | 85.4 | 92.7 |
| | MPP [41] | 88.0 | 93.7 | 87.3 | 94.1 |
| | DSP [32] | 88.6 | 94.7 | 84.2 | 91.1 |
| | MS-DSP [61] | 89.3 | 95.1 | 85.5 | 91.8 |
| Ours | FV + SSNet(max) | 89.6 | 95.3 | 87.6 | 95.7 |

| Features | Method | PFID61 | Food-101 |
|---|---|---|---|
| Dense SIFT | FV [22] | 40.2 | 38.9 |
| | FV (using MASK) | 42.9 | NA |
| | FV + WSOMP | 55.8 | 51.6 |
| | FV + SSNet | 57.1 | 52.4 |
| Off-the-shelf CNN features | FC-CNN [36] | 48.2 | 54.1 |
| | MOP [8] | 61.7 | 59.7 |
| | FV + SSNet | 65.3 | 60.6 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Ji, Z.; Wang, F.; Gao, X.; Xu, L.; Hu, X. SSNet: Learning Mid-Level Image Representation Using Salient Superpixel Network. Appl. Sci. 2020, 10, 140. https://doi.org/10.3390/app10010140

Ji Z, Wang F, Gao X, Xu L, Hu X. SSNet: Learning Mid-Level Image Representation Using Salient Superpixel Network. Applied Sciences. 2020; 10(1):140. https://doi.org/10.3390/app10010140

Chicago/Turabian Style: Ji, Zhihang, Fan Wang, Xiang Gao, Lijuan Xu, and Xiaopeng Hu. 2020. "SSNet: Learning Mid-Level Image Representation Using Salient Superpixel Network" Applied Sciences 10, no. 1: 140. https://doi.org/10.3390/app10010140

APA Style: Ji, Z., Wang, F., Gao, X., Xu, L., & Hu, X. (2020). SSNet: Learning Mid-Level Image Representation Using Salient Superpixel Network. Applied Sciences, 10(1), 140. https://doi.org/10.3390/app10010140