From Brochures to Bytes: Destination Branding through Social, Mobile, and AI—A Systematic Narrative Review with Meta-Analysis

Abstract

1. Introduction

1.1. From Brochures to Bytes: Rationale and Gap Analysis

1.2. Research Questions

2. Conceptual and Historical Framework

2.1. Defining ‘Digital Evolution’ in Destination Branding

2.2. Phase Delineation Rationale and Digital Evolution of Destination Branding

2.3. Core Constructs Across Eras

2.3.1. Imagery (Visual Representation)

2.3.2. Narratives: Brand Story and Messaging

2.3.3. Engagement Mechanisms

2.3.4. Metrics and Performance Indicators

2.3.5. Critical Synthesis

2.4. Integrating Communication, Technology-Adoption, and Brand-Equity Theories

2.5. Algorithmic Governance, Bias, and Data Ethics in Digital Destination Branding

- (1) Transparency and explainability. Perceived fairness and trust in AI assistants and personalised itineraries increase when audiences are told why a recommendation is shown and how data are used (Wanner et al., 2022; I. P. Tussyadiah, 2020). DMOs should adopt ‘explain-why’ cues and consent dashboards aligned with GDPR, treating transparency as a reputational asset rather than a legal minimum.

- (2) Representational fairness and inclusivity. Algorithmic curation tends to privilege already iconic, photogenic sites and dominant narratives, risking spatial and cultural bias (Yallop & Seraphin, 2020). Governance should include periodic audits of training data and outputs (e.g., hashtag/search bias checks, resident representation), with corrective actions (counter-seeding content, inclusive creator portfolios, multilingual equity goals).

- (3) Authenticity, manipulation, and synthetic media. AI-generated visuals, virtual influencers, and deepfake videos complicate the authenticity cues central to destination image (Sivathanu et al., 2024; Hernández-Méndez et al., 2024; Yu & Meng, 2025). DMO content governance should therefore incorporate disclosure norms (‘AI-generated’ labels), parity rules (synthetic content never outweighs lived narratives), and provenance tools.
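The periodic representation audit in point (2) can be made concrete with a simple exposure-share check. The sketch below is a minimal illustration under stated assumptions, not part of the review's protocol: it assumes each curated post is tagged with one area label, that the DMO sets benchmark shares per area (for example, from its inventory of sites or resident population), and it uses a hypothetical tolerance of 0.8. The function name `representation_audit` is ours.

```python
from collections import Counter


def representation_audit(post_areas, benchmark_shares, min_ratio=0.8):
    """Return areas whose share of curated posts falls below min_ratio x benchmark.

    post_areas: one area label per curated post (e.g., a quarterly export)
    benchmark_shares: hypothetical target share per area (values summing to 1)
    min_ratio: hypothetical tolerance; 0.8 flags areas below 80% of their benchmark
    """
    counts = Counter(post_areas)
    total = sum(counts.values())
    flagged = {}
    for area, target in benchmark_shares.items():
        observed = counts.get(area, 0) / total if total else 0.0
        if target > 0 and observed / target < min_ratio:
            flagged[area] = round(observed, 3)  # observed share, for the audit log
    return flagged


# Illustrative data only: 100 curated posts dominated by an iconic old town
posts = ["old_town"] * 70 + ["harbour"] * 20 + ["rural_villages"] * 10
benchmark = {"old_town": 0.4, "harbour": 0.3, "rural_villages": 0.3}
print(representation_audit(posts, benchmark))
# {'harbour': 0.2, 'rural_villages': 0.1}
```

Areas returned by the check would be candidates for the corrective actions named above, such as counter-seeding content or commissioning local creators.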

3. Methodology

3.1. Two-Tiered Design

3.2. Scope and Inclusion Criteria

3.2.1. Temporal Boundaries and Phase Mapping

3.2.2. Definition of ‘Destination’

3.2.3. Branding Outcomes

3.2.4. Eligibility Filter

3.2.5. Critical Reflection

3.2.6. Construct Harmonisation and Measurement Equivalence

- Define canonical constructs using destination-adapted CBBE and engagement literature (Boo et al., 2009; Kladou & Kehagias, 2014; Rather, 2020).

- Catalogue raw measures reported by each study (e.g., aided recall; follower growth; 5-point favourability; repeat-visit intention; comment/repost probability; share counts).

- Apply inclusion rules:

  - Awareness: aided/unaided recall/recognition; excluded: impressions, reach.

  - Image: multi-item cognitive/affective associations; excluded: single-item sentiment unless validated.

  - Attitudes: global favourability/warmth; excluded: satisfaction unless explicitly framed as brand attitude.

  - Loyalty: revisit/recommendation/advocacy intention; excluded: arrivals unless directly linked to brand equity.

  - Engagement intentions: intention to follow, share, or co-create; counts (likes/views) used only when authors explicitly theorised them as behavioural engagement and they were not co-pooled with equity outcomes.

- Standardise to Hedges’ g or Fisher’s z and orient signs so larger values uniformly denote stronger branding outcomes. Where multiple indicators existed per construct, we averaged within-study before pooling (Cheung, 2015), preserving independence.

- Priority of validated scales. Where both survey scales and platform metrics were available for the same construct, validated multi-item scales anchor the construct. Platform/process metrics are analysed as moderators or ancillary descriptors, not substitutes for equity outcomes.

- Composites within studies. When a study reported several indicators of the same construct (e.g., three awareness items), we z-standardised and averaged them to a single within-study score before computing effect sizes.

- Engagement taxonomy. We coded engagement at three levels but pooled only levels 2 and 3:

  - L1 Ephemeral exposure: impressions, views, likes; excluded from pooling.

  - L2 Relational interaction: comments, meaningful shares/saves, follows.

  - L3 Co-creation: production of UGC/reviews; participation in DMO contests; stated intent to produce content. These map to our engagement intentions outcome. L2 and L3 contribute to quantitative pooling; L1 informs narrative context and moderator coding only.

- Directionality and sign. All effects were oriented so positive values reflect improved branding outcomes.

- Cross-phase comparability. For outcomes lacking a pre-digital analogue (e.g., L2/L3 engagement), we treat them as digital-era constructs and discuss cross-era comparability narratively rather than statistically.
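The sign-orientation and within-study averaging rules above can be sketched at the effect-size level. This is one reading of the averaging step, assuming each indicator already has an effect size on a common metric (for example, Fisher's z) and a sampling variance; the composite-score route described under "Composites within studies" works on raw scores instead. The correlation `rho` between indicators is rarely reported, so its default of 0.5 is a placeholder assumption. The variance formula for a mean of correlated estimates follows Borenstein et al. (2009); the function name is hypothetical.

```python
import math


def combine_within_study(effects, variances, directions, rho=0.5):
    """Collapse several same-construct effects from one study into one effect.

    effects:    per-indicator effect sizes on a common metric (e.g., Fisher's z)
    variances:  sampling variances of those effects
    directions: +1 if a larger raw effect means a stronger branding outcome, -1 if not
    rho:        assumed correlation between the oriented indicators (placeholder)
    Returns (mean_effect, variance_of_mean).
    """
    m = len(effects)
    if m == 0 or not (m == len(variances) == len(directions)):
        raise ValueError("inputs must be non-empty and of equal length")
    # Orient signs so positive values always denote stronger branding outcomes
    oriented = [d * e for e, d in zip(effects, directions)]
    mean_effect = sum(oriented) / m
    # Variance of a mean of m correlated estimates:
    # (1/m)^2 * (sum of V_i + sum over i != j of rho * sqrt(V_i * V_j))
    total = sum(variances)
    for i in range(m):
        for j in range(m):
            if i != j:
                total += rho * math.sqrt(variances[i] * variances[j])
    return mean_effect, total / m ** 2


# Two awareness indicators from one hypothetical study; the second is reverse-scored
print(combine_within_study([0.30, -0.20], [0.004, 0.004], [1, -1], rho=0.5))
```

Here the reverse-scored indicator is flipped, giving a mean effect of 0.25 with a variance of about 0.003. Setting `rho=0` would treat the indicators as independent and overstate precision, while `rho=1` yields no precision gain from averaging, so a sensitivity check across plausible values is advisable.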

3.2.7. Engagement vs. Equity: Scope Decisions

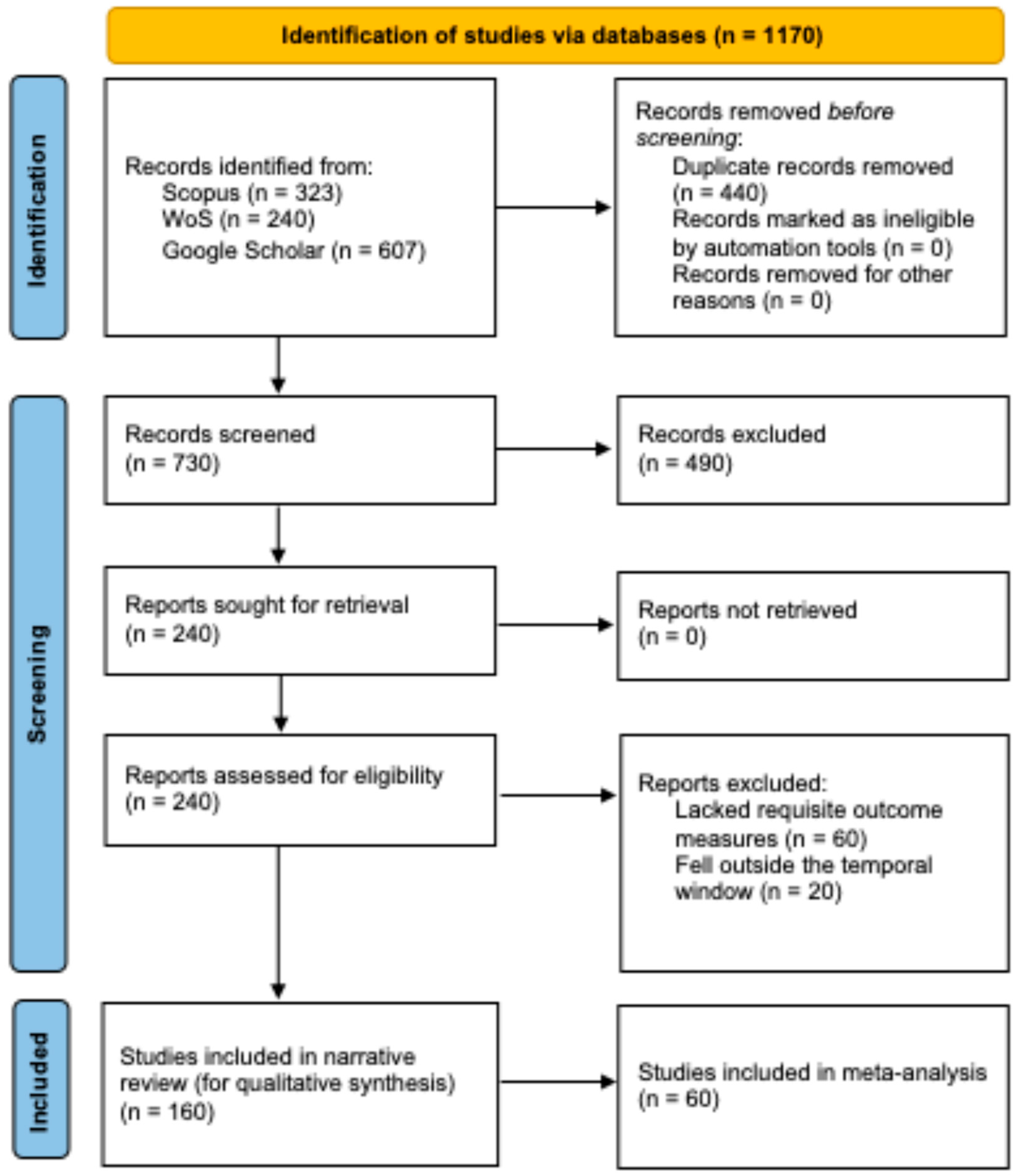

3.3. Search Strategy and Screening

3.4. Data Extraction Protocols

3.5. Risk-of-Bias and Quality Assessment

3.6. Coding and Thematic Analysis

3.7. Meta-Analysis Criteria

4. Narrative Review: Evolution of Strategies and Evidence

4.1. Pre-Digital Era: Print Collateral, Mass-Media Buys, Push Narratives

4.2. Phase-by-Phase Thematic Analysis

4.2.1. Web 1.0: Informational Websites and Virtual Brochures

4.2.2. Web 2.0: Participatory Culture, UGC, and Social Proof

4.2.3. Mobile: Always-On Destination Storytelling and Location-Based Engagement

4.2.4. AI, XR, and Predictive Personalisation (Chatbots, Recommender Systems)

4.3. Cross-Phase Comparative Insights: Message Control, Co-Creation, Speed, Reach, and Data

4.4. Emergent Research Themes and Under-Explored Areas

5. Meta-Analysis: Quantifying Digital-Era Branding Impacts

5.1. Rationale for Focusing on Digital-Era Studies

5.1.1. Addressing Scarcity of Comparable Pre-Digital Effect-Size Data

5.1.2. Justifying Split Between Quantitative Meta-Analysis (Digital) and Qualitative Comparison (Pre-Digital and Digital)

5.2. Effect-Size Computation and Standardisation

5.2.1. Primary Outcomes: Brand Awareness, Image, Attitudes, Loyalty, Engagement Intentions

5.2.2. Statistical Approach (Hedges’ g/r to z, Random-Effects Models)

5.3. Moderator Analyses

5.3.1. Platform Type (Facebook, Instagram, TikTok, X)

5.3.2. Content Strategy (UGC vs. DMO-Generated)

5.3.3. Influencer Tier, Interactivity Level, Destination Type

5.4. Publication Bias and Sensitivity Tests

5.5. Meta-Analytic Findings—Summary of Effect Magnitudes

6. Integrated Discussion

7. Research Questions Guide the Significance of Study Findings

7.1. Theoretical Significance and Implications

7.2. Managerial Significance and Implications

8. Limitations, Risks and Future-Proofing

9. Conclusions and Suggestions for Further Research

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| AI | artificial intelligence |

| AR | augmented reality |

| CASP | Critical Appraisal Skills Programme |

| CBBE | consumer-based brand equity |

| CSAT | customer satisfaction score |

| DMO | destination-marketing organisation |

| eWOM | electronic word of mouth |

| GDPR | General Data Protection Regulation |

| GPS | Global Positioning System |

| HTML | Hypertext Markup Language |

| IoT | Internet of Things |

| IT | information technology |

| KPIs | key performance indicators |

| MMAT | Mixed Methods Appraisal Tool |

| OS | organisation studies |

| PA | public administration |

| PRISMA | Preferred Reporting Items for Systematic Reviews and Meta-Analyses |

| Q&A | questions and answers |

| QR | quick response |

| ROI | return on investment |

| RQ | research question |

| SoCoMo | social, contextual, mobile |

| SoLoMo | social–local–mobile |

| UGC | user-generated content |

| UTAUT | unified theory of acceptance and use of technology |

| VFR | visiting friends and relatives |

| VR | virtual reality |

| WCAG | Web Content Accessibility Guidelines |

| WOM | word of mouth |

| XR | extended reality |

Appendix A

AI-Era Governance Checklist (Practitioner Aide-Mémoire)

- Consent and transparency: clear why-this-recommendation notices; public privacy page for chatbots/recommenders.

- Representation audit: quarterly checks of who/what appears; add corrective content for under-represented communities/areas.

- Synthetic-media policy: label AI-generated imagery; cap its share; maintain provenance records.

- Accessibility and inclusion: WCAG compliance; multilingual assets; low-bandwidth alternatives.

- Resident voice: integrate resident sentiment into dashboards; co-create with local creators.

- Risk and recovery: red-team prompts for chatbots; escalation paths; log and review errors.

- Measurement: track audit pass rate, explain-why usage and complaint resolution time alongside CBBE KPIs.
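Two of the checklist items, the synthetic-media cap and the audit pass rate, lend themselves to simple tracking. The sketch assumes a content log in which each item carries an AI-generated flag and a disclosure flag (hypothetical fields), and it reads the parity rule from Section 2.5 as "synthetic items must not outnumber non-synthetic items"; both are our assumptions rather than prescribed metrics.

```python
from dataclasses import dataclass


@dataclass
class ContentItem:
    item_id: str
    ai_generated: bool  # hypothetical flag: synthetic imagery or video
    labelled: bool      # hypothetical flag: carries a visible 'AI-generated' label


def synthetic_media_check(items):
    """Check the parity rule (synthetic <= non-synthetic) and disclosure labels."""
    synthetic = [i for i in items if i.ai_generated]
    non_synthetic = len(items) - len(synthetic)
    return {
        "synthetic_share": len(synthetic) / len(items) if items else 0.0,
        "parity_ok": len(synthetic) <= non_synthetic,
        "unlabelled": [i.item_id for i in synthetic if not i.labelled],
    }


def audit_pass_rate(results):
    """Share of audit checks passed; results is a list of booleans."""
    return sum(results) / len(results) if results else 0.0


log = [
    ContentItem("hero-video", ai_generated=True, labelled=True),
    ContentItem("sunset-render", ai_generated=True, labelled=False),
    ContentItem("resident-story-1", ai_generated=False, labelled=False),
    ContentItem("resident-story-2", ai_generated=False, labelled=False),
    ContentItem("market-photo", ai_generated=False, labelled=False),
]
print(synthetic_media_check(log))
# {'synthetic_share': 0.4, 'parity_ok': True, 'unlabelled': ['sunset-render']}
print(audit_pass_rate([True, True, False, True]))
# 0.75
```

Unlabelled synthetic items would feed the "log and review errors" step, and both figures can sit alongside CBBE KPIs on the same dashboard.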

Appendix B

Appendix B.1. Definitions (Canonical Outcomes)

- Awareness: recognition/recall of destination name/brand elements.

- Image: cognitive/affective associations (multi-item); themes of nature/culture/amenities/people.

- Attitudes: global valuation (favourability/warmth).

- Loyalty: revisit intent, recommend intent, advocacy.

- Engagement intentions: intention to follow/share/review, UGC participation; where explicitly theorised, persistent platform behaviours proxied by counts are coded here.

Appendix B.2. Cross-Era Operational Examples and Mapping (Extract)

| Outcome | Pre-Internet | Web 1.0 | Web 2.0 | Mobile-First | AI/XR-Infused | Mapping Rule |

|---|---|---|---|---|---|---|

| Awareness | Survey recall of national slogan; brochure recall | Aided website recall; familiarity index | Familiarity after DMO page exposure; brand listing tasks | Brand familiarity after app exposure | Recall after chatbot/XR trial | Map to awareness if survey-based; exclude impressions |

| Image | 7-item place image scale | 10-item website-induced image | UGC exposure → image scale | AR trail → perceived innovativeness + image | VR preview → affective image | Multi-item only; single-item sentiment phrases excluded |

| Attitudes | Global favourability (1–7) | Same as left | Attitude toward destination brand | Same as left | Same as left | Satisfaction not coded here unless framed as brand attitude |

| Loyalty | Intention to revisit/recommend | Same as left | Advocacy/WOM intent; subscribe intention (if tied to brand) | Revisit intent after app experience | WOM/revisit intent after chatbot/XR | Behaviours without brand framing excluded |

| Engagement intentions | Intention to follow/share; join mailing list | Subscribe intention | Share/UGC intention; live-stream participation intent | QR/AR participation intent | Intent to co-create with AI assistant | Raw counts (likes/views) only if theorised as behaviour; never co-pooled with equity outcomes |
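The mapping rules in the table can be expressed as a small decision function for coders. This is a hypothetical sketch: the `Measure` fields, the construct labels and the requirement that attitude measures be survey-based are our simplifications of the rules in Section 3.2.6 and the table above, not the authors' coding software.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Measure:
    construct: str                        # label proposed by the coder, e.g. "image"
    kind: str                             # "survey", "count" (platform metric) or "behaviour"
    n_items: int = 1
    validated: bool = False               # single-item measure reported as validated
    brand_framed: bool = False            # explicitly framed as a brand outcome
    theorised_as_behaviour: bool = False  # counts theorised as behavioural engagement


def map_measure(m: Measure) -> Optional[str]:
    """Return the canonical outcome for a measure, or None if it is excluded."""
    if m.construct == "awareness":
        # Survey-based recall/recognition only; impressions and reach are excluded
        return "awareness" if m.kind == "survey" else None
    if m.construct == "image":
        # Multi-item scales, or a single item only if validated
        ok = m.kind == "survey" and (m.n_items > 1 or m.validated)
        return "image" if ok else None
    if m.construct == "attitudes":
        return "attitudes" if m.kind == "survey" else None
    if m.construct == "satisfaction":
        # Coded as an attitude only when framed as brand attitude
        return "attitudes" if m.brand_framed else None
    if m.construct == "loyalty":
        # Stated intentions map directly; observed behaviours need brand framing
        return "loyalty" if m.kind == "survey" or m.brand_framed else None
    if m.construct == "engagement":
        if m.kind == "survey":
            return "engagement_intentions"
        if m.kind == "count" and m.theorised_as_behaviour:
            return "engagement_intentions"  # pooled separately from equity outcomes
        return None
    return None


print(map_measure(Measure("awareness", "count")))         # None (impressions)
print(map_measure(Measure("image", "survey", n_items=7)))  # image
```

Keeping the rules in one function makes double-coding disagreements easier to trace, since each exclusion has a single, documented branch.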

Appendix B.3. Handling Multiple Indicators Within a Study

Appendix B.4. Measurement Quality Checks

Appendix B.5. Links to Replication Assets

Appendix C

| Database | Search String |

|---|---|

| Scopus | (‘destination brand*’ OR ‘place branding’ OR ‘tourism marketing’) AND (‘internet’ OR ‘social media’ OR ‘Web 2.0’ OR ‘smart tourism’ OR ‘Web 3.0’ OR ‘mobile marketing’ OR ‘AI’ OR ‘artificial intelligence’ OR ‘user-generated content’ OR ‘influencer marketing’) AND PUBYEAR > 1989 AND PUBYEAR < 2026 AND (LIMIT-TO (LANGUAGE, ‘English’)) |

| Web of Science | TS = (‘destination brand*’ OR ‘place branding’ OR ‘tourism marketing’) AND TS = (‘internet’ OR ‘social media’ OR ‘Web 2.0’ OR ‘smart tourism’ OR ‘Web 3.0’ OR ‘mobile marketing’ OR ‘AI’ OR ‘artificial intelligence’ OR ‘user-generated content’ OR ‘influencer marketing’) AND PY = (1990–2025) AND LA = (English) |

| Google Scholar | (‘destination brand’ OR ‘place branding’ OR ‘tourism marketing’) AND (‘internet’ OR ‘social media’ OR ‘Web 2.0’ OR ‘smart tourism’ OR ‘Web 3.0’ OR ‘mobile marketing’ OR ‘AI’ OR ‘artificial intelligence’ OR ‘user-generated content’ OR ‘influencer marketing’) (first 200 results manually inspected) |
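Because the three strings share the same two concept blocks, generating them from one term list helps keep database queries consistent. The sketch below reproduces the Scopus and Web of Science structures shown in the table; it emits double-quoted phrases, which both databases use for phrase searching, and the helper names are hypothetical.

```python
CONCEPT_A = ["destination brand*", "place branding", "tourism marketing"]
CONCEPT_B = [
    "internet", "social media", "Web 2.0", "smart tourism", "Web 3.0",
    "mobile marketing", "AI", "artificial intelligence",
    "user-generated content", "influencer marketing",
]


def or_block(terms):
    """Join phrase terms into one parenthesised OR block using double quotes."""
    return "(" + " OR ".join(f'"{t}"' for t in terms) + ")"


def scopus_query(a, b, first_year=1990, last_year=2025):
    # Exclusive PUBYEAR bounds reproduce the 1990-2025 window in the table
    return (f"{or_block(a)} AND {or_block(b)} "
            f"AND PUBYEAR > {first_year - 1} AND PUBYEAR < {last_year + 1} "
            f'AND (LIMIT-TO (LANGUAGE, "English"))')


def wos_query(a, b, first_year=1990, last_year=2025):
    return (f"TS = {or_block(a)} AND TS = {or_block(b)} "
            f"AND PY = ({first_year}-{last_year}) AND LA = (English)")


print(scopus_query(CONCEPT_A, CONCEPT_B))
print(wos_query(CONCEPT_A, CONCEPT_B))
```

Editing a term once in `CONCEPT_A` or `CONCEPT_B` then propagates to every database string, which simplifies reporting the exact searches for PRISMA item 7.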

Appendix D

| # | PRISMA 2020 Item and Brief Description | Status/Where Addressed in Manuscript | Notes |

|---|---|---|---|

| 1 | Title—Identify the report as a systematic review | Title page: ‘… A Systematic Narrative Review with Meta-Analysis’ | — |

| 2 | Abstract—Structured summary of background, methods, results, discussion | Structured abstract (p. 1) | Follows PRISMA abstract headings |

| 3 | Rationale—Describe rationale for the review | Section 1.1 Rationale and Gap Analysis | — |

| 4 | Objectives—State specific objectives/questions | Section 1.2 Research Questions (RQ1–RQ4) | Objectives explicitly enumerated |

| 5 | Eligibility criteria—Specify inclusion/exclusion criteria | Section 3.2 Scope and Inclusion Criteria (Section 3.2.1, Section 3.2.2, Section 3.2.3, Section 3.2.4 and Section 3.2.5) | Binary four-criterion filter detailed |

| 6 | Information sources—List all sources searched and last search date | Section 3.3 Search Strategy and Screening (first paragraph) | Scopus, Web of Science, Google Scholar; search from 1990 to May 2025 |

| 7 | Search strategy—Present full search strategies for at least one database | Section 3.3, 2nd–3rd paragraphs (Boolean strings, truncation, limits) | Representative query strings shown |

| 8 | Selection process—Describe screening, duplicate removal and reviewers | Section 3.3, 4th–6th paragraphs; Figure 1 PRISMA flow diagram | Duplicate removal in EndNote; dual independent screeners |

| 9 | Data-collection process—Methods for extracting data from reports | Section 3.4 Data Extraction Protocols | Piloted spreadsheet; dual verification |

| 10 | Data items—List and define all variables sought | Section 3.4 (3rd–4th paragraphs) | Bibliographic, design, outcomes (awareness, image, etc.) |

| 11 | Risk-of-bias assessment—Methods for each study | Section 3.5 Risk-of-Bias and Quality Assessment | MMAT 2018 core; CASP/Cochrane supplements |

| 12 | Effect measures—Specify effect measures used | Section 3.7 Meta-Analysis Criteria (conversion to Hedges’ g/Fisher’s z) | Effect-size algorithms and software cited |

| 13 | Synthesis methods—Describe criteria, models, handling of heterogeneity | Section 3.1 (Two-Tiered Design), Section 3.7 (random effects, I², meta-regression), Section 3.6 (qualitative), Section 5.3 (moderators) | Quantitative and qualitative integration explained |

| 14 | Reporting-bias assessment—Methods to assess risk of bias due to missing results | Section 5.4 Publication Bias and Sensitivity Tests | Funnel plots, Egger, trim and fill, fail-safe N |

| 15 | Certainty (confidence) assessment—Methods to assess certainty of evidence | Partially in Section 3.5 (quality strata) and Section 5.4 (sensitivity) | GRADE not applied; certainty discussed narratively |

| 16 | Study selection (Results)—Numbers of records screened/included | Figure 1 (PRISMA flow); Section 3.3, last paragraph | 1170 → 160 qualitative → 60 meta-analysed |

| 17 | Study characteristics—Cite each included study and characteristics | Section 3.4 (coding template description); Appendix E and Appendix F | Characteristics summarised; full refs in References |

| 18 | Risk of bias in studies (Results) | Section 3.5 (quality distribution and Table 2) | High/medium/low counts; common issues discussed |

| 19 | Results of individual studies | Appendix E and Appendix F (effect statistics); Section 5 tables | Each study’s r or d listed |

| 20 | Results of syntheses—Summary effects, heterogeneity, sub-groups | Section 5.5 (Table 11 pooled effects); Section 5.3 and Section 5.4 (moderators, bias) | Moderator Table 7, Table 8 and Table 9; I² and Q reported |

| 21 | Reporting biases (Results)—Outcomes of bias assessments | Section 5.4 (Table 10 diagnostics) | No significant small-study effects |

| 22 | Certainty of evidence (Results) | Discussed in Section 6 Integrated Discussion (first paragraph) | Overall certainty inferred from quality and sensitivity; not formally graded |

| 23 | Discussion—Interpretation, limitations, implications | Section 6, Section 7, Section 8 and Section 9 (Discussion, Limitations, Conclusion) | Limits (breadth, data gaps), future research mapped |

| 24 | Registration and protocol—Provide registration, access to protocol | Not preregistered; stated in Funding/IRB box | Protocol not preregistered (item unmet) |

| 25 | Support—Describe sources of financial/non-financial support | Funding statement (‘no external funding’) | — |

| 26 | Competing interests—Declare competing interests | Conflicts of interest (‘none declared’) | — |

| 27 | Availability of data, code and other materials | Data availability statement (‘data sharing applicable’) | Data extraction log archived externally |
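Item 14 lists Egger's test among the reporting-bias diagnostics. A minimal sketch of the classic Egger regression follows, using only the standard library: it regresses each study's standardised effect on its precision and tests whether the intercept differs from zero. The two-sided p-value here uses a normal approximation (reasonable for around 60 studies), whereas the exact test refers the statistic to a t distribution with k − 2 degrees of freedom. The function name is hypothetical, and this is not necessarily the software the review used.

```python
import math


def egger_test(effects, variances):
    """Egger's regression test for funnel-plot asymmetry (small-study effects).

    Regresses the standard normal deviate (effect / SE) on precision (1 / SE);
    an intercept far from zero suggests asymmetry.
    Returns (intercept, se_intercept, t, p_normal_approx).
    """
    k = len(effects)
    if k < 3 or k != len(variances):
        raise ValueError("need at least three studies with matching variances")
    ses = [math.sqrt(v) for v in variances]
    x = [1.0 / s for s in ses]                 # precision
    y = [e / s for e, s in zip(effects, ses)]  # standard normal deviate
    x_bar, y_bar = sum(x) / k, sum(y) / k
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("study precisions must vary")
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    # Residual variance and the ordinary least-squares SE of the intercept
    sse = sum((yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y))
    s2 = sse / (k - 2)
    se_intercept = math.sqrt(s2 * (1.0 / k + x_bar ** 2 / sxx))
    t = intercept / se_intercept
    # Two-sided p from a normal approximation to the t statistic
    p_approx = math.erfc(abs(t) / math.sqrt(2))
    return intercept, se_intercept, t, p_approx


# Fisher's z and variances of Studies 1-5 in Appendix E (illustration only)
print(egger_test([0.337, 0.618, 0.209, 0.608, 0.381],
                 [0.0013, 0.0025, 0.0013, 0.0020, 0.0009]))
```

In practice all rows of Appendix E would be passed in; five studies are far too few for a meaningful asymmetry test.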

Appendix E

| Study | Combined N | Pearson’s r | Fisher’s z | Variance z |

|---|---|---|---|---|

| Study 1 | 750 | 0.324 | 0.337 | 0.0013 |

| Study 2 | 407 | 0.550 | 0.618 | 0.0025 |

| Study 3 | 775 | 0.206 | 0.209 | 0.0013 |

| Study 4 | 495 | 0.543 | 0.608 | 0.0020 |

| Study 5 | 1062 | 0.364 | 0.381 | 0.0009 |

| Study 6 | 904 | 0.372 | 0.390 | 0.0011 |

| Study 7 | 665 | 0.299 | 0.308 | 0.0015 |

| Study 8 | 321 | 0.405 | 0.429 | 0.0031 |

| Study 9 | 729 | 0.616 | 0.718 | 0.0014 |

| Study 10 | 334 | 0.239 | 0.244 | 0.0030 |

| Study 11 | 1215 | 0.535 | 0.598 | 0.0008 |

| Study 12 | 602 | 0.447 | 0.482 | 0.0017 |

| Study 13 | 487 | 0.422 | 0.451 | 0.0021 |

| Study 14 | 989 | 0.498 | 0.547 | 0.0010 |

| Study 15 | 119 | 0.185 | 0.188 | 0.0087 |

| Study 16 | 278 | 0.196 | 0.199 | 0.0037 |

| Study 17 | 436 | 0.189 | 0.192 | 0.0023 |

| Study 18 | 1048 | 0.576 | 0.657 | 0.0010 |

| Study 19 | 892 | 0.604 | 0.698 | 0.0011 |

| Study 20 | 532 | 0.498 | 0.547 | 0.0019 |

| Study 21 | 666 | 0.180 | 0.182 | 0.0015 |

| Study 22 | 1356 | 0.341 | 0.355 | 0.0007 |

| Study 23 | 248 | 0.418 | 0.445 | 0.0041 |

| Study 24 | 127 | 0.274 | 0.281 | 0.0082 |

| Study 25 | 775 | 0.636 | 0.748 | 0.0013 |

| Study 26 | 612 | 0.584 | 0.669 | 0.0017 |

| Study 27 | 979 | 0.177 | 0.179 | 0.0010 |

| Study 28 | 487 | 0.582 | 0.664 | 0.0021 |

| Study 29 | 355 | 0.154 | 0.155 | 0.0029 |

| Study 30 | 1485 | 0.317 | 0.329 | 0.0007 |

| Study 31 | 843 | 0.621 | 0.726 | 0.0012 |

| Study 32 | 654 | 0.401 | 0.425 | 0.0016 |

| Study 33 | 983 | 0.390 | 0.411 | 0.0010 |

| Study 34 | 507 | 0.288 | 0.296 | 0.0020 |

| Study 35 | 212 | 0.489 | 0.534 | 0.0049 |

| Study 36 | 1105 | 0.174 | 0.176 | 0.0009 |

| Study 37 | 598 | 0.610 | 0.711 | 0.0017 |

| Study 38 | 332 | 0.525 | 0.582 | 0.0031 |

| Study 39 | 764 | 0.486 | 0.532 | 0.0013 |

| Study 40 | 238 | 0.448 | 0.482 | 0.0044 |

| Study 41 | 682 | 0.615 | 0.718 | 0.0015 |

| Study 42 | 1201 | 0.569 | 0.647 | 0.0009 |

| Study 43 | 455 | 0.182 | 0.184 | 0.0023 |

| Study 44 | 538 | 0.153 | 0.154 | 0.0019 |

| Study 45 | 915 | 0.409 | 0.434 | 0.0011 |

| Study 46 | 341 | 0.096 | 0.096 | 0.0030 |

| Study 47 | 218 | 0.087 | 0.087 | 0.0048 |

| Study 48 | 466 | 0.044 | 0.044 | 0.0022 |

| Study 49 | 391 | 0.029 | 0.029 | 0.0026 |

| Study 50 | 543 | −0.023 | −0.023 | 0.0019 |

| Study 51 | 788 | −0.034 | −0.034 | 0.0013 |

| Study 52 | 604 | −0.057 | −0.057 | 0.0017 |

| Study 53 | 129 | −0.081 | −0.081 | 0.0083 |

| Study 54 | 221 | −0.097 | −0.097 | 0.0047 |

| Study 55 | 1002 | 0.142 | 0.143 | 0.0010 |

| Study 56 | 927 | 0.125 | 0.126 | 0.0011 |

| Study 57 | 347 | 0.066 | 0.066 | 0.0029 |

| Study 58 | 412 | 0.112 | 0.113 | 0.0025 |

| Study 59 | 1038 | 0.138 | 0.139 | 0.0010 |

| Study 60 | 606 | 0.149 | 0.150 | 0.0017 |
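The conversions behind this table, and a random-effects pooling of the resulting z values, can be sketched as follows. The variance of Fisher's z is taken as the standard 1/(N − 3). The pooling uses the DerSimonian–Laird estimator of between-study variance τ², which is one common choice; the estimator actually used in Section 3.7 is not stated in this extract, so treat that as an assumption. Function names are hypothetical.

```python
import math


def fisher_z(r):
    """Fisher's r-to-z transformation."""
    return math.atanh(r)


def z_variance(n):
    """Sampling variance of Fisher's z for a correlation based on n cases."""
    return 1.0 / (n - 3)


def dersimonian_laird(effects, variances):
    """Random-effects pooling with the DerSimonian-Laird tau^2 estimator."""
    k = len(effects)
    w = [1.0 / v for v in variances]
    sw = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sw
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))
    df = k - 1
    c = sw - sum(wi ** 2 for wi in w) / sw
    tau2 = max(0.0, (q - df) / c) if c > 0 else 0.0
    w_re = [1.0 / (v + tau2) for v in variances]
    pooled = sum(wi * yi for wi, yi in zip(w_re, effects)) / sum(w_re)
    se = math.sqrt(1.0 / sum(w_re))
    i2 = max(0.0, (q - df) / q) if q > 0 else 0.0
    return {
        "z": pooled,
        "ci_z": (pooled - 1.96 * se, pooled + 1.96 * se),
        "r": math.tanh(pooled),  # back-transform pooled z to r
        "tau2": tau2,
        "Q": q,
        "I2": i2,
    }


# Studies 1-5 of Appendix E as (combined N, Pearson's r)
rows = [(750, 0.324), (407, 0.550), (775, 0.206), (495, 0.543), (1062, 0.364)]
z = [fisher_z(r) for _, r in rows]
v = [z_variance(n) for n, _ in rows]
print(dersimonian_laird(z, v))
```

The pooled z is back-transformed to r with `tanh` for reporting, and I² is computed as (Q − df)/Q, truncated at zero.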

Appendix F

| Study | N Before | Mean Before | SD Before | N After | Mean After | SD After | Cohen’s d | Variance d |

|---|---|---|---|---|---|---|---|---|

| 1 | 223 | 2.83 | 1.13 | 216 | 3.31 | 1.18 | +0.42 | 0.0093 |

| 2 | 82 | 3.22 | 0.76 | 90 | 3.68 | 0.81 | +0.58 | 0.0243 |

| 3 | 66 | 3.00 | 0.91 | 73 | 3.31 | 0.95 | +0.33 | 0.0293 |

| 4 | 61 | 3.50 | 0.88 | 56 | 3.79 | 1.03 | +0.30 | 0.0347 |

| 5 | 146 | 2.89 | 1.10 | 139 | 3.62 | 1.09 | +0.67 | 0.0148 |

| 6 | 197 | 3.77 | 0.96 | 190 | 4.36 | 1.01 | +0.60 | 0.0108 |

| 7 | 218 | 3.38 | 0.85 | 219 | 3.78 | 0.82 | +0.48 | 0.0094 |

| 8 | 119 | 3.18 | 1.05 | 112 | 3.04 | 0.91 | −0.14 | 0.0174 |

| 9 | 150 | 3.47 | 0.85 | 146 | 3.80 | 0.84 | +0.39 | 0.0138 |

| 10 | 122 | 3.26 | 0.99 | 117 | 3.48 | 0.94 | +0.23 | 0.0169 |

| 11 | 201 | 3.12 | 0.88 | 208 | 3.64 | 0.86 | +0.58 | 0.0097 |

| 12 | 74 | 3.05 | 1.02 | 78 | 3.21 | 0.98 | +0.16 | 0.0272 |

| 13 | 97 | 3.40 | 0.90 | 92 | 3.67 | 0.96 | +0.29 | 0.0209 |

| 14 | 289 | 3.20 | 0.98 | 297 | 3.71 | 1.00 | +0.46 | 0.0069 |

| 15 | 212 | 3.09 | 1.02 | 207 | 3.47 | 1.01 | +0.37 | 0.0095 |

| 16 | 88 | 3.55 | 0.89 | 91 | 3.23 | 0.95 | −0.35 | 0.0230 |

| 17 | 132 | 2.95 | 1.11 | 126 | 3.48 | 1.14 | +0.48 | 0.0157 |

| 18 | 177 | 3.61 | 0.87 | 182 | 4.20 | 0.92 | +0.65 | 0.0111 |

| 19 | 119 | 3.11 | 0.92 | 124 | 3.59 | 0.90 | +0.53 | 0.0160 |

| 20 | 163 | 3.34 | 0.83 | 158 | 3.74 | 0.86 | +0.48 | 0.0124 |

| 21 | 96 | 3.02 | 1.05 | 92 | 2.90 | 1.01 | −0.11 | 0.0217 |

| 22 | 185 | 3.48 | 0.88 | 191 | 3.99 | 0.93 | +0.54 | 0.0107 |

| 23 | 79 | 3.26 | 0.87 | 74 | 3.42 | 0.89 | +0.19 | 0.0258 |

| 24 | 143 | 3.18 | 1.07 | 148 | 3.70 | 1.10 | +0.47 | 0.0139 |

| 25 | 134 | 3.42 | 0.95 | 129 | 3.11 | 0.90 | −0.33 | 0.0154 |

| 26 | 203 | 3.25 | 0.86 | 196 | 3.69 | 0.88 | +0.51 | 0.0099 |

| 27 | 117 | 2.97 | 1.02 | 113 | 3.36 | 1.07 | +0.38 | 0.0174 |

| 28 | 167 | 3.51 | 0.93 | 174 | 4.08 | 0.95 | +0.59 | 0.0118 |

| 29 | 75 | 3.08 | 0.90 | 70 | 3.54 | 1.00 | +0.48 | 0.0270 |

| 30 | 211 | 3.30 | 0.91 | 203 | 3.72 | 0.90 | +0.47 | 0.0096 |

| 31 | 142 | 3.07 | 1.08 | 137 | 3.61 | 1.05 | +0.51 | 0.0141 |

| 32 | 168 | 3.59 | 0.85 | 163 | 3.92 | 0.90 | +0.39 | 0.0118 |

| 33 | 69 | 3.14 | 0.99 | 66 | 3.06 | 0.95 | −0.08 | 0.0309 |

| 34 | 190 | 3.47 | 0.87 | 184 | 3.89 | 0.91 | +0.47 | 0.0104 |

| 35 | 155 | 3.22 | 1.04 | 158 | 3.60 | 1.06 | +0.36 | 0.0127 |

| 36 | 88 | 3.33 | 0.94 | 93 | 3.98 | 1.00 | +0.68 | 0.0220 |

| 37 | 216 | 3.11 | 0.86 | 218 | 3.50 | 0.88 | +0.46 | 0.0090 |

| 38 | 169 | 3.60 | 0.90 | 173 | 3.92 | 0.92 | +0.35 | 0.0118 |

| 39 | 118 | 3.05 | 1.03 | 111 | 3.49 | 1.08 | +0.42 | 0.0176 |

| 40 | 93 | 3.50 | 0.88 | 90 | 4.19 | 0.95 | +0.75 | 0.0222 |

| 41 | 124 | 3.12 | 0.96 | 128 | 3.55 | 0.97 | +0.44 | 0.0159 |

| 42 | 140 | 3.41 | 0.89 | 137 | 3.68 | 0.87 | +0.31 | 0.0129 |

| 43 | 179 | 3.16 | 1.07 | 174 | 3.55 | 1.02 | +0.37 | 0.0113 |

| 44 | 203 | 3.56 | 0.90 | 210 | 4.08 | 0.94 | +0.55 | 0.0099 |

| 45 | 77 | 3.22 | 0.93 | 81 | 3.65 | 0.98 | +0.45 | 0.0260 |

| 46 | 161 | 3.10 | 1.09 | 165 | 3.59 | 1.10 | +0.44 | 0.0126 |

| 47 | 132 | 3.33 | 0.91 | 129 | 3.70 | 0.95 | +0.41 | 0.0148 |

| 48 | 256 | 3.46 | 0.92 | 250 | 3.79 | 0.90 | +0.36 | 0.0079 |

| 49 | 103 | 3.05 | 1.01 | 99 | 3.60 | 1.09 | +0.52 | 0.0201 |

| 50 | 147 | 3.28 | 0.90 | 150 | 3.87 | 0.97 | +0.63 | 0.0134 |

| 51 | 89 | 3.12 | 0.96 | 86 | 3.41 | 0.92 | +0.31 | 0.0224 |

| 52 | 174 | 3.54 | 0.87 | 171 | 3.83 | 0.88 | +0.33 | 0.0114 |

| 53 | 119 | 2.95 | 1.06 | 123 | 3.27 | 1.04 | +0.31 | 0.0167 |

| 54 | 208 | 3.47 | 0.85 | 203 | 4.05 | 0.93 | +0.66 | 0.0098 |

| 55 | 131 | 3.22 | 0.97 | 133 | 3.62 | 0.99 | +0.41 | 0.0149 |

| 56 | 92 | 3.30 | 0.88 | 94 | 3.15 | 0.90 | −0.17 | 0.0226 |

| 57 | 178 | 3.11 | 1.08 | 182 | 3.51 | 1.06 | +0.37 | 0.0113 |

| 58 | 145 | 3.68 | 0.83 | 142 | 4.10 | 0.86 | +0.50 | 0.0132 |

| 59 | 80 | 3.08 | 0.95 | 76 | 3.45 | 1.00 | +0.39 | 0.0261 |

| 60 | 235 | 3.44 | 0.90 | 228 | 3.86 | 0.92 | +0.47 | 0.0088 |
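The Cohen's d and variance columns can be reproduced from the group statistics, assuming the before and after samples are independent (the differing Ns suggest this; a paired design would need a different variance formula). The sketch also applies the small-sample correction J to obtain Hedges' g and converts d to r so that these studies can share the Fisher's z metric of Appendix E. Function names are hypothetical; the formulas follow Borenstein et al. (2009).

```python
import math


def cohens_d(n1, m1, sd1, n2, m2, sd2):
    """Standardised mean difference (after minus before) using the pooled SD."""
    pooled_sd = math.sqrt(((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2))
    return (m2 - m1) / pooled_sd


def var_d(d, n1, n2):
    """Large-sample variance of d for two independent groups."""
    return (n1 + n2) / (n1 * n2) + d ** 2 / (2 * (n1 + n2))


def hedges_g(d, v, n1, n2):
    """Apply the small-sample correction J to d and its variance."""
    j = 1 - 3 / (4 * (n1 + n2) - 9)  # equals 1 - 3 / (4 * df - 1) with df = n1 + n2 - 2
    return j * d, j ** 2 * v


def d_to_r(d, n1, n2):
    """Convert d to r so these results can be placed on the Fisher's z metric."""
    a = (n1 + n2) ** 2 / (n1 * n2)
    return d / math.sqrt(d ** 2 + a)


# Study 1 of Appendix F
d = cohens_d(223, 2.83, 1.13, 216, 3.31, 1.18)
v = var_d(d, 223, 216)
print(round(d, 2), round(v, 4))  # 0.42 0.0093
print(hedges_g(d, v, 223, 216), d_to_r(d, 223, 216))
```

For the sample sizes in this table J is above 0.99, so g and d differ only in the third decimal place.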

References

- Aaker, D. A. (1991). Managing brand equity: Capitalizing on the value of a brand name. Free Press.

- Abderrahim, C., & Mustapha, T. (2018). Building destination loyalty using tourist satisfaction and destination image: A holistic conceptual framework. Journal of Tourism, Heritage & Services Marketing, 4(2), 37–43.

- Aboalganam, K. M., AlFraihat, S. F., & Tarabieh, S. (2025). The impact of user-generated content on tourist visit intentions: The mediating role of destination imagery. Administrative Sciences, 15(4), 117.

- Almeyda-Ibáñez, M., & George, B. P. (2017). The evolution of destination branding: A review of branding literature in tourism. Journal of Tourism, Heritage & Services Marketing, 3(1), 9–17.

- Alonso-Almeida, M. d. M., Borrajo-Millán, F., & Yi, L. (2019). Are social media data pushing overtourism? The case of Barcelona and Chinese tourists. Sustainability, 11(12), 3356.

- Alves, H. M., Sousa, B., Carvalho, A., Santos, V., Lopes Dias, A., & Valeri, M. (2022). Encouraging brand attachment on consumer behaviour: Pet-friendly tourism segment. Journal of Tourism, Heritage & Services Marketing, 8(2), 16–24.

- Alzboun, N., Khawaldah, H., Harb, A., & Aburumman, A. (2025). Tourism destination image of Aqaba in the digital age: A user-generated content analysis of cognitive, affective, and conative elements. International Journal of Innovative Research and Scientific Studies, 8(2), 3763–3774.

- Aman, E. E., Papp-Váry, Á. F., Kangai, D., & Odunga, S. O. (2024). Building a sustainable future: Challenges, opportunities, and innovative strategies for destination branding in tourism. Administrative Sciences, 14(12), 312.

- Amanatidis, D., Mylona, I., Mamalis, S., & Kamenidou, I. (2020). Social media for cultural communication: A critical investigation of museums’ Instagram practices. Journal of Tourism, Heritage & Services Marketing, 6(2), 38–44.

- Anaya-Sánchez, R., Rejón-Guardia, F., & Molinillo, S. (2024). Impact of virtual reality experiences on destination image and visit intentions: The moderating effects of immersion, destination familiarity and sickness. International Journal of Contemporary Hospitality Management, 36(11), 3607–3627.

- Argyris, Y. A., Muqaddam, A., & Miller, S. (2021). The effects of the visual presentation of an influencer’s extroversion on perceived credibility and purchase intentions—Moderated by personality matching with the audience. Journal of Retailing and Consumer Services, 59, 102347.

- Asthana, S. (2022). Twenty-five years of SMEs in tourism and hospitality research: A bibliometric analysis. Journal of Tourism, Heritage & Services Marketing, 8(2), 35–47.

- Ayeh, J. K., Au, N., & Law, R. (2013). Do we believe in TripAdvisor? Examining credibility perceptions and online travellers’ attitudes toward user-generated content. Journal of Travel Research, 52(4), 437–452.

- Baloglu, S., & McCleary, K. W. (1999). A model of destination image formation. Annals of Tourism Research, 26(4), 868–897.

- Barari, M., Eisend, M., & Jain, S. P. (2025). A meta-analysis of the effectiveness of social media influencers: Mechanisms and moderation. Journal of the Academy of Marketing Science. Advance online publication.

- Barreda, A. A., Bilgihan, A., Nusair, K., & Okumus, F. (2015). Generating brand awareness in online social networks. Computers in Human Behavior, 50, 600–609.

- Barreda, A. A., Nusair, K., Wang, Y., Okumus, F., & Bilgihan, A. (2020). The impact of social media activities on brand image and emotional attachment: A case in the travel context. Journal of Hospitality and Tourism Technology, 11(1), 109–135.

- Băltescu, C. A., & Untaru, E.-N. (2025). Exploring the characteristics and extent of travel influencers’ impact on generation Z tourist decisions. Sustainability, 17(1), 66.

- Bălţescu, C. A. (2019). Do we still use tourism brochures in taking the decision to purchase tourism products? Annals of the ‘Constantin Brâncuşi’ University of Târgu-Jiu, Economy Series, 6, 110–115.

- Best, P., Manktelow, R., & Taylor, B. (2014). Online communication, social media and adolescent wellbeing: A systematic narrative review. Children and Youth Services Review, 41, 27–36.

- Bigné, E., Oltra, E., & Andreu, L. (2019). Harnessing stakeholder input on Twitter: A case study of short breaks in Spanish tourist cities. Tourism Management, 71, 490–503.

- Boo, S., Busser, J., & Baloglu, S. (2009). A model of customer-based brand equity and its application to multiple destinations. Tourism Management, 30(2), 219–231.

- Borenstein, M., Hedges, L. V., Higgins, J. P. T., & Rothstein, H. R. (2009). Introduction to meta-analysis. Wiley.

- Borenstein, M., Hedges, L. V., Higgins, J. P. T., & Rothstein, H. R. (2010). A basic introduction to fixed-effect and random-effects models for meta-analysis. Research Synthesis Methods, 1, 97–111.

- Bramer, W. M., Giustini, D., de Jonge, G. B., Holland, L., & Bekhuis, T. (2016). De-duplication of database search results for systematic reviews in EndNote. Journal of the Medical Library Association, 104(3), 240–243.

- Braun, V., & Clarke, V. (2006). Using thematic analysis in psychology. Qualitative Research in Psychology, 3(2), 77–101.

- Brodie, R. J., Hollebeek, L. D., Juric, B., & Ilić, A. (2011). Customer engagement: Conceptual domain, fundamental propositions, and implications for research. Journal of Service Research, 14(3), 252–271.

- Buhalis, D. (2020). Technology in tourism—From information communication technologies to eTourism and smart tourism towards ambient intelligence tourism: A perspective article. Tourism Review, 75(1), 267–272.

- Buhalis, D., & Foerste, M. (2015). SoCoMo marketing for travel and tourism: Empowering co-creation of value. Journal of Destination Marketing & Management, 4(3), 151–161.

- Buhalis, D., & Law, R. (2008). Progress in information technology and tourism management: 20 years on and 10 years after the Internet—The state of eTourism research. Tourism Management, 29(4), 609–623.

- Buhalis, D., & Sinarta, Y. (2019). Real-time co-creation and ‘nowness’ service: Lessons from tourism and hospitality. Journal of Travel & Tourism Marketing, 36(5), 563–582.

- Bulchand-Gidumal, J., Secin, E. W., O’Connor, P., & Buhalis, D. (2023). Artificial intelligence’s impact on hospitality and tourism marketing: Exploring key themes and addressing challenges. Current Issues in Tourism, 27(14), 2345–2362.

- Campbell, J. L., Quincy, C., Osserman, J., & Pedersen, O. K. (2013). Coding in-depth semistructured interviews: Problems of unitization and intercoder reliability and agreement. Sociological Methods & Research, 42(3), 294–320.

- Card, N. A. (2012). Applied meta-analysis for social science research. Guilford Press.

- Chatzigeorgiou, C., & Christou, E. (2020). Adoption of social media as distribution channels in tourism marketing: A qualitative analysis of consumers’ experiences. Journal of Tourism, Heritage & Services Marketing, 6(1), 25–32.

- Chekalina, T., Fuchs, M., & Lexhagen, M. (2016). Customer-based destination brand equity modeling: The role of destination resources, value for money, and value in use. Journal of Travel Research, 57(1), 31–51.

- Chen, C., & Kim, S. (2025). The role of social media in shaping brand equity for historical tourism destinations. Sustainability, 17(10), 4407.

- Cheung, M. W.-L. (2015). Meta-analysis: A structural equation modeling approach. John Wiley & Sons, Ltd.

- Christou, E., Fotiadis, A., & Giannopoulos, A. (2025). Generative AI as a tourism actor: Reconceptualising experience co-creation, destination governance and responsible innovation in the synthetic experience economy. Journal of Tourism, Heritage & Services Marketing, 11(2), 16–41.

- Confetto, M. G., Conte, F., Palazzo, M., & Siano, A. (2023). Digital destination branding: A framework to define and assess European DMOs’ practices. Journal of Destination Marketing & Management, 30, 100804.

- Cooper, H. (2010). Research synthesis and meta-analysis: A step-by-step approach (4th ed.). Sage Publications.

- Corbin, J., & Strauss, A. (2015). Basics of qualitative research: Techniques and procedures for developing grounded theory (4th ed.). Sage.

- Coudounaris, D. N., Björk, P., Trifonova Marinova, S., Jafarguliyev, F., Kvasova, O., Sthapit, E., Varblane, U., & Talias, M. A. (2025). ‘Big-5’ personality traits and revisit intentions: The mediating effect of memorable tourism experiences. Journal of Tourism, Heritage & Services Marketing, 11(1), 46–60.

- Csapó, J., & Kusumaningrum, S. D. (2025). Uncovering trends in destination branding and destination brand equity research: Results of a topic modelling approach. Journal of Tourism, Heritage & Services Marketing, 11(1), 34–45.

- Dedeoğlu, B. B., van Niekerk, M., Küçükergin, K. G., De Martino, M., & Okumuş, F. (2020). Effect of social media sharing on destination brand awareness and destination quality. Journal of Vacation Marketing, 26(1), 33–56.

- de la Hoz-Correa, A., Muñoz-Leiva, F., & Bakucz, M. (2018). Past themes and future trends in medical tourism research: A co-word analysis. Tourism Management, 65, 200–211.

- De Veirman, M., Cauberghe, V., & Hudders, L. (2017). Marketing through Instagram influencers: The impact of number of followers and product divergence on brand attitude. International Journal of Advertising, 36(5), 798–828.

- Doan Do, T. T. M., Silva, J. A. M., Del Chiappa, G., & Pereira, L. N. (2024). The moderating role of sense of power and psychological risk on the effect of eWOM and purchase intentions for Airbnb. Journal of Tourism, Heritage & Services Marketing, 10(2), 3–14. [Google Scholar] [CrossRef]

- Doolin, B., Burgess, L., & Cooper, J. (2002). Evaluating the use of the web for tourism marketing: A case study from New Zealand. Tourism Management, 23(5), 557–561. [Google Scholar] [CrossRef]

- Duval, S., & Tweedie, R. (2000). Trim and fill: A simple funnel-plot–based method of testing and adjusting for publication bias in meta-analysis. Biometrics, 56(2), 455–463. [Google Scholar] [CrossRef]

- Dwivedi, Y. K., Rana, N. P., Jeyaraj, A., Clement, M., & Williams, M. D. (2019). Re-examining the unified theory of acceptance and use of technology (UTAUT): Towards a revised theoretical model. Information Systems Frontiers, 21(3), 719–734. [Google Scholar] [CrossRef]

- Echtner, C. M., & Ritchie, J. R. B. (1993). The measurement of destination image: An empirical assessment. Journal of Travel Research, 31(4), 3–13. [Google Scholar] [CrossRef]

- Egger, M., Smith, G. D., Schneider, M., & Minder, C. (1997). Bias in meta-analysis detected by a simple, graphical test. BMJ, 315(7109), 629–634. [Google Scholar] [CrossRef]

- Femenia-Serra, F., & Gretzel, U. (2020). Influencer marketing for tourism destinations: Lessons from a mature destination. In J. Neidhardt, & W. Wörndl (Eds.), Information and communication technologies in tourism 2020. Springer. [Google Scholar] [CrossRef]

- Flavián, C., Ibáñez-Sánchez, S., & Orús, C. (2019). The impact of virtual, augmented and mixed reality technologies on the customer experience. Journal of Business Research, 100, 547–560.

- Fletcher, A. J., & Marchildon, G. P. (2014). Using the Delphi method for qualitative, participatory action research in health leadership. International Journal of Qualitative Methods, 13(1), 1–18.

- Florido-Benítez, L., & del Alcázar Martínez, B. (2024). How artificial intelligence (AI) is powering new tourism marketing and the future agenda for smart tourist destinations. Electronics, 13(21), 4151.

- Fragidis, G., & Kotzaivazoglou, I. (2022). Goal modelling for strategic dependency analysis in destination management. Journal of Tourism, Heritage & Services Marketing, 8(2), 3–15.

- Gartner, W. C. (1993). Image formation process. Journal of Travel & Tourism Marketing, 2(2–3), 191–216.

- Gertner, D. (2011). A (tentative) meta-analysis of the place marketing and place branding literature. Journal of Brand Management, 19(2), 112–131.

- Giannopoulos, A., Skourtis, G., Kalliga, A., Dontas-Chrysis, D. M., & Paschalidis, D. (2020). Co-creating high-value hospitality services in the tourism ecosystem: Towards a paradigm shift? Journal of Tourism, Heritage & Services Marketing, 6(2), 3–11.

- Glass, G. (2000). Meta-analysis at 25. Available online: https://ed2worlds.blogspot.com/2022/07/meta-analysis-at-25-personal-history.html (accessed on 15 May 2025).

- Gotschall, T. (2021). EndNote 20 desktop version. Journal of the Medical Library Association, 109(3), 520–522.

- Gössling, S., & Higham, J. (2020). The low-carbon imperative: Destination management under urgent climate change. Journal of Travel Research, 60(6), 1167–1179.

- Gretzel, U., Fesenmaier, D. R., Formica, S., & O’Leary, J. T. (2006). Searching for the future: Challenges faced by destination marketing organizations. Journal of Travel Research, 45, 116–126.

- Gretzel, U. (2022). The smart DMO: A new step in the digital transformation of destination management organisations. European Journal of Tourism Research, 30, 3002.

- Gretzel, U., Sigala, M., Xiang, Z., & Koo, C. (2015). Smart tourism: Foundations and developments. Electronic Markets, 25(3), 179–188.

- Gusenbauer, M., & Haddaway, N. R. (2020). Which academic search systems are suitable for systematic reviews or meta-analyses? Evaluating retrieval qualities of Google Scholar, PubMed, and 26 other resources. Research Synthesis Methods, 11(2), 181–217.

- Guttentag, D. A. (2010). Virtual reality: Applications and implications for tourism. Tourism Management, 31(5), 637–651.

- Haddaway, N. R., Collins, A. M., Coughlin, D., & Kirk, S. (2015). The role of Google Scholar in evidence reviews and its applicability to grey literature searching. PLoS ONE, 10(9), e0138237.

- Hanna, S., & Rowley, J. (2015). Towards a model of the Place Brand Web. Tourism Management, 48, 100–112.

- Hanna, S., Rowley, J., & Keegan, B. (2021). Place and destination branding: A review and conceptual mapping of the domain. European Management Review, 18(2), 105–117.

- Harrigan, P., Evers, U., Miles, M., & Daly, T. (2018). Customer engagement and the relationship between involvement, engagement, self-brand connection and brand usage intent. Journal of Business Research, 88, 388–396.

- Hedges, L. V., & Olkin, I. (2014). Statistical methods for meta-analysis. Academic Press.

- Hedges, L. V., & Vevea, J. L. (1998). Fixed- and random-effects models in meta-analysis. Psychological Methods, 3(4), 486–504.

- Hernández-Méndez, J., Baute-Díaz, N., & Gutiérrez-Taño, D. (2024). The effectiveness of virtual versus human influencer marketing for tourism destinations. Journal of Vacation Marketing.

- Higgins, J. P. T., Altman, D. G., Gøtzsche, P. C., Jüni, P., Moher, D., Oxman, A. D., Savović, J., Schulz, K. F., Weeks, L., & Sterne, J. A. (2011). The Cochrane Collaboration’s tool for assessing risk of bias in randomised trials. BMJ, 343, d5928.

- Higgins, J. P. T., Thompson, S. G., Deeks, J. J., & Altman, D. G. (2003). Measuring inconsistency in meta-analyses. BMJ, 327(7414), 557–560.

- Ho, C. I., & Lee, Y. L. (2007). The development of an e-travel service quality scale. Tourism Management, 28(6), 1434–1449.

- Hong, Q. N., Fàbregues, S., Bartlett, G., Boardman, F., Cargo, M., Dagenais, P., Gagnon, M.-P., Griffiths, F., Nicolau, B., O’Cathain, A., Rousseau, M.-C., Vedel, I., & Pluye, P. (2019). The Mixed Methods Appraisal Tool (MMAT) version 2018 for information professionals and researchers. Education for Information, 34(4), 285–291.

- Hopewell, S., Clarke, M., & Mallett, S. (2005). Grey literature and systematic reviews. In H. Rothstein, A. Sutton, & M. Borenstein (Eds.), Publication bias in meta-analysis: Prevention, assessment and adjustments (pp. 49–72). Wiley.

- Huang, G. I., Karl, M., Wong, I. A., & Law, R. (2023). Tourism destination research from 2000 to 2020: A systematic narrative review in conjunction with bibliographic mapping analysis. Tourism Management, 95, 104686.

- Huang, Z. J., Lin, M. S., & Chen, J. (2024). Tourism experiences co-created on social media. Tourism Management, 105, 104940.

- Hudson, S., & Thal, K. (2013). The impact of social media on the consumer decision process. Journal of Travel & Tourism Marketing, 30(1–2), 156–160.

- Huerta-Álvarez, R., Cambra-Fierro, J., & Fuentes-Blasco, M. (2020). The interplay between social media communication, brand equity and brand engagement in tourist destinations: An analysis in an emerging economy. Journal of Destination Marketing & Management, 16, 100413.

- Hunter, J. E., & Schmidt, F. L. (2004). Methods of meta-analysis. SAGE Publications, Inc.

- Judijanto, L., Suharto, B., & Susilo, A. (2024). Bibliometric trends in social media and destination marketing: Shaping perceptions in the tourism industry. West Science Interdisciplinary Studies, 2(12), 2353–2367.

- Kannan, P. K., & Li, H. (2017). Digital marketing: A framework, review, and research agenda. International Journal of Research in Marketing, 34(1), 22–45.

- Kavaratzis, M., & Hatch, M. J. (2013). The dynamics of place brands: An identity-based approach to place branding theory. Marketing Theory, 13(1), 69–86.

- Keller, K. L. (1993). Conceptualizing, measuring, and managing customer-based brand equity. Journal of Marketing, 57(1), 1–22.

- Kietzmann, J., Paschen, J., & Treen, E. R. (2018). Artificial intelligence in advertising: How marketers can leverage AI along the consumer journey. Journal of Advertising Research, 58(3), 263–267.

- Kim, J. J., & Fesenmaier, D. R. (2015). Sharing tourism experiences: The posttrip experience. Journal of Travel Research, 56(1), 28–40.

- Kim, M. J., Lee, C.-K., & Jung, T. H. (2020). Exploring consumer behavior in virtual reality tourism using an extended stimulus–organism–response model. Journal of Travel Research, 59(1), 69–89.

- Kladou, S., & Kehagias, J. (2014). Assessing destination brand equity: An integrated approach. Journal of Destination Marketing & Management, 3(1), 2–10.

- Koo, C., Kwon, J., Chung, N., & Kim, J. (2022). Metaverse tourism: Conceptual framework and research propositions. Current Issues in Tourism, 26(20), 3268–3274.

- Krabokoukis, T. (2025). Bridging neuromarketing and data analytics in tourism: An adaptive digital marketing framework for hotels and destinations. Tourism and Hospitality, 6(1), 12.

- Krakover, S., & Corsale, A. (2021). Sieving tourism destinations: Decision-making processes and destination choice implications. Journal of Tourism, Heritage & Services Marketing, 7(1), 33–43.

- Lam, J. M. S., Kozak, M., & Ariffin, A. A. (2024). Would you like to travel after the COVID-19 pandemic? A novel examination of the causal correlations within the attitudinal theory of planned behaviour. Journal of Tourism, Heritage & Services Marketing, 10(2), 58–68.

- Lasswell, H. D. (1948). The structure and function of communication in society. In L. Bryson (Ed.), The communication of ideas (pp. 37–51). Harper & Row.

- Law, R., Qi, S., & Buhalis, D. (2010). Progress in tourism management: A review of website evaluation in tourism research. Tourism Management, 31(3), 297–313.

- Le, N. T. C., & Khuong, M. N. (2023). Investigating brand image and brand trust in airline service: Evidence of Korean Air. Journal of Tourism, Heritage & Services Marketing, 9(2), 55–65.

- Lee, J. L.-M., Lau, C. Y.-L., & Wong, C. W.-G. (2023). Reexamining brand loyalty and brand awareness with social media marketing: A collectivist country perspective. Journal of Tourism, Heritage & Services Marketing, 9(2), 3–10.

- Leech, N. L., & Onwuegbuzie, A. J. (2011). Beyond constant comparison qualitative data analysis: Using NVivo. School Psychology Quarterly, 26(1), 70–84.

- Leung, D., Law, R., van Hoof, H., & Buhalis, D. (2013). Social media in tourism and hospitality: A literature review. Journal of Travel & Tourism Marketing, 30(1–2), 3–22.

- Leung, X. Y. (2019). Do destination Facebook pages increase fan’s visit intention? A longitudinal study. Journal of Hospitality and Tourism Technology, 10(2), 205–218.

- Leung, X. Y. (2024). Bridging the digital divide in hospitality and tourism: Digital inclusion for disadvantaged. Journal of Global Hospitality and Tourism, 3(2), 181–184.

- Li, S. C. H., Robinson, P., & Oriade, A. (2017). Destination marketing: The use of technology since the millennium. Journal of Destination Marketing & Management, 6(2), 95–102.

- Liberati, A., Altman, D. G., Tetzlaff, J., Mulrow, C., Gøtzsche, P. C., Ioannidis, J. P. A., Clarke, M., Devereaux, P. J., Kleijnen, J., & Moher, D. (2009). The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate health care interventions: Explanation and elaboration. PLoS Medicine, 6(7), e1000100.

- Lin, M. S., Liang, Y., Xue, J. X., Pan, B., & Schroeder, A. (2021). Destination image through social media analytics and the survey method. International Journal of Contemporary Hospitality Management, 33(6), 2219–2238.

- Lipsey, M. W., & Wilson, D. B. (2001). Practical meta-analysis. SAGE Publications, Inc.

- Litvin, S. W., Goldsmith, R. E., & Pan, B. (2008). Electronic word-of-mouth in hospitality and tourism management. Tourism Management, 29(3), 458–468.

- Liu, X., & Zhang, L. (2024). Impacts of different interactive elements on consumers’ purchase intention in live streaming e-commerce. PLoS ONE, 19(12), e0315731.

- Long, H. A., French, D. P., & Brooks, J. M. (2020). Optimising the value of the critical appraisal skills programme (CASP) tool for quality appraisal in qualitative evidence synthesis. Research Methods in Medicine & Health Sciences, 1(1), 31–42.

- Lu, L., Cai, R., & Gursoy, D. (2019). Developing and validating a service-robot integration willingness scale. International Journal of Hospitality Management, 80, 36–51.

- Lu, W., & Stepchenkova, S. (2014). User-generated content as a research mode in tourism and hospitality applications: Topics, methods, and software. Journal of Hospitality Marketing & Management, 24(2), 119–154.

- Luong, T.-B. (2024). The impact of uses and motivation gratifications on tourist behavioral intention: The mediating role of destination image and tourists’ attitudes. Journal of Tourism, Heritage & Services Marketing, 10(1), 3–13.

- Machado, M., Dias, Á., Patuleia, M., & Pereira, L. (2025). A model of marketing-driven innovation in lifestyle tourism businesses. Journal of Tourism, Heritage & Services Marketing, 11(1), 21–33.

- Mak, A. H. N. (2017). Online destination image: Comparing national tourism organisation’s and tourists’ perspectives. Tourism Management, 60, 280–297.

- Mandagi, D. W., Indrajit, I., & Wulyatiningsih, T. (2024). Navigating digital horizons: A systematic review of social media’s role in destination branding. Journal of Enterprise and Development (JED), 6(2), 373–389.

- Marco-Gardoqui, M., García-Feijoo, M., & Eizaguirre, A. (2023). Changes in marketing strategies at Spanish hotel chains under the framework of sustainability. Journal of Tourism, Heritage & Services Marketing, 10(1), 28–38.

- Mariani, M. (2020). Web 2.0 and destination marketing: Current trends and future directions. Sustainability, 12(9), 3771.

- Mariani, M., & Baggio, R. (2020). The relevance of mixed methods for network analysis in tourism and hospitality research. International Journal of Contemporary Hospitality Management, 32(4), 1643–1673.

- Mariani, M., Buhalis, D., Longhi, C., & Vitouladiti, O. (2014). Managing change in tourism destinations: Key issues and current trends. Journal of Destination Marketing & Management, 4(4), 269–272.

- Mariani, M. M., Di Felice, M., & Mura, M. (2016). Facebook as a destination marketing tool: Evidence from Italian regional DMOs. Journal of Travel & Tourism Marketing, 33(9), 1081–1093.

- Marine-Roig, E. (2019). Destination image analytics through traveller-generated content. Sustainability, 11(12), 3392.

- Marine-Roig, E., & Anton Clavé, S. (2016). Perceived image specialisation in multiscalar tourism destinations. Journal of Destination Marketing & Management, 5(3), 202–213.

- Martins, M., & Santos, A. (2024). Exploring the potential of Flickr user-generated content for tourism research: Insights from Portugal. European Journal of Tourism, Hospitality and Recreation, 14(2), 258–272.

- Martín-Martín, A., Thelwall, M., Orduna-Malea, E., & López-Cózar, E. D. (2021). Google Scholar, Microsoft Academic, Scopus, Dimensions, Web of Science, and OpenCitations’ COCI: A multidisciplinary comparison of coverage via citations. Scientometrics, 126(1), 871–906.

- McHugh, M. L. (2012). Interrater reliability: The kappa statistic. Biochemia Medica, 22(3), 276–282.

- Michael, N., Chunawala, M. A., & Fusté-Forné, F. (2025). Instagrammable destinations: The use of photographs in digital tourism marketing in the United Arab Emirates. Journal of Tourism, Heritage & Services Marketing, 11(1), 3–10.

- Milano, C., Novelli, M., & Cheer, J. M. (2019). Overtourism and tourismphobia: A journey through four decades of tourism development, planning and local concerns. Tourism Planning & Development, 16(4), 353–357.

- Minghetti, V., & Buhalis, D. (2009). Digital divide in tourism. Journal of Travel Research, 49(3), 267–281.

- Mirzaalian, F., & Halpenny, E. (2019). Social media analytics in hospitality and tourism: A systematic literature review and future trends. Journal of Hospitality and Tourism Technology, 10(4), 764–790.

- Misirlis, N., Lekakos, G., & Vlachopoulou, M. (2018). Associating Facebook measurable activities with personality traits: A fuzzy sets approach. Journal of Tourism, Heritage & Services Marketing, 4(2), 10–16.

- Mitev, A. Z., Irimiás, A. R., & Michalkó, G. (2024). Making parasocial identification tangible: Can film memorabilia strengthen travel intention? Journal of Tourism, Heritage & Services Marketing, 10(2), 24–32.

- Mohammadi, S., Darzian Azizi, A., & Hadian, N. (2021). Location-based services as marketing promotional tools to provide value-added in E-tourism. International Journal of Digital Content Management, 2(3), 189–215.

- Morgan, N. J., & Pritchard, A. (1998). Tourism promotion and power: Creating images, creating identities. John Wiley & Sons.

- Munar, A. M. (2011). Tourist-created content: Rethinking destination branding. International Journal of Culture, Tourism and Hospitality Research, 5(3), 291–305.

- Munar, A. M., & Jacobsen, J. K. (2014). Motivations for sharing tourism experiences through social media. Tourism Management, 43, 46–54.

- Nadalipour, Z., Hassan, A., Bhartiya, S., & Shah Hosseini, F. (2024). The role of influencers in destination marketing through Instagram social platform. In Technology and social transformations in hospitality, tourism and gastronomy: South Asia perspectives (pp. 20–38). CABI Publishing.

- Nechoud, L., Ghidouche, F., & Seraphin, H. (2021). The influence of eWOM credibility on visit intention: An integrative moderated mediation model. Journal of Tourism, Heritage & Services Marketing, 7(1), 54–63.

- Neuhofer, B., Buhalis, D., & Ladkin, A. (2015). Smart technologies for personalized experiences: A case study in the hospitality domain. Electronic Markets, 25, 243–254.

- O’Connor, C., & Joffe, H. (2020). Intercoder reliability in qualitative research: Debates and practical guidelines. International Journal of Qualitative Methods, 19, 1609406919899220.

- Orden-Mejía, M., Carvache-Franco, M., Huertas, A., Carvache-Franco, O., & Carvache-Franco, W. (2025). Analysing how AI-powered chatbots influence destination decisions. PLoS ONE, 20(3), e0319463.

- Pace, R., Pluye, P., Bartlett, G., Macaulay, A. C., Salsberg, J., Jagosh, J., & Seller, R. (2012). Testing the reliability and efficiency of the pilot Mixed Methods Appraisal Tool (MMAT). International Journal of Nursing Studies, 49(1), 47–53.

- Page, M. J., McKenzie, J. E., Bossuyt, P. M., Boutron, I., Hoffmann, T. C., Mulrow, C. D., Shamseer, L., Tetzlaff, J. M., Akl, E. A., Brennan, S. E., Chou, R., Glanville, J., Grimshaw, J. M., Hróbjartsson, A., Lalu, M. M., Li, T., Loder, E. W., Mayo-Wilson, E., McDonald, S., … Moher, D. (2021). The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ, 372, n71.

- Pencarelli, T. (2020). The digital revolution in the travel and tourism industry. Information Technology & Tourism, 22(3), 455–476.

- Peterson, R. A., & Brown, S. P. (2005). On the use of beta coefficients in meta-analysis. Journal of Applied Psychology, 90(1), 175–181.

- Phuthong, T., & Chotisarn, N. (2025). Place branding as a soft power tool: A systematic review, bibliometric analysis, and future research directions. International Review of Management and Marketing, 15(4), 123–142.

- Pike, S. (2002). Destination image analysis—A review of 142 papers from 1973 to 2000. Tourism Management, 23(5), 541–549.

- Pike, S., & Page, S. J. (2014). Destination marketing organizations and destination marketing: A narrative analysis of the literature. Tourism Management, 41, 202–227.

- Pirnar, I., Kurtural, S., & Tutuncuoglu, M. (2019). Festivals and destination marketing: An application from Izmir City. Journal of Tourism, Heritage & Services Marketing, 5(1), 9–14.

- Podsakoff, P. M., MacKenzie, S. B., & Podsakoff, N. P. (2012). Sources of method bias in social science research and recommendations on how to control it. Annual Review of Psychology, 63, 539–569.

- Pricope Vancia, A. P., Băltescu, C. A., Brătucu, G., Tecău, A. S., Chițu, I. B., & Duguleană, L. (2023). Examining the disruptive potential of Generation Z tourists on the travel industry in the digital age. Sustainability, 15(11), 8756.

- Qu, H., Kim, L. H., & Im, H. H. (2011). A model of destination branding: Integrating the concepts of the branding and destination image. Tourism Management, 32(3), 465–476.

- Rasul, T., Santini, F. O., Lim, W. M., Buhalis, D., & Ladeira, W. J. (2024). Tourist engagement: Toward an integrated framework using meta-analysis. Journal of Vacation Marketing, 31(4), 845–867.

- Rather, R. A. (2020). Customer experience and engagement in tourism destinations: The experiential marketing perspective. Journal of Travel & Tourism Marketing, 37(1), 15–32.

- Raudenbush, S. W. (2009). Analyzing effect sizes: Random-effects models. In H. Cooper, L. V. Hedges, & J. C. Valentine (Eds.), The handbook of research synthesis and meta-analysis (pp. 295–315). Russell Sage Foundation.

- Revilla Hernández, M., Santana Talavera, A., & Parra López, E. (2016). Effects of co-creation in a tourism destination brand image through Twitter. Journal of Tourism, Heritage & Services Marketing, 2(2), 3–10.

- Rogers, E. M. (2003). Diffusion of innovations (5th ed.). Free Press.

- Roque, V., & Raposo, R. (2015). Social media as a communication and marketing tool in tourism: An analysis of online activities from international key player DMO. Anatolia, 27(1), 58–70.

- Ruiz-Real, J. L., Uribe-Toril, J., & Gázquez-Abad, J. C. (2020). Destination branding: Opportunities and new challenges. Journal of Destination Marketing & Management, 17, 100453.

- Saldaña, J. (2015). The coding manual for qualitative researchers. Sage.

- Schaar, R. (2013). Destination branding: A snapshot. Journal of Undergraduate Research, 16, 1–10.

- Schmidt, L., Finnerty Mutlu, A. N., Elmore, R., Olorisade, B. K., Thomas, J., & Higgins, J. P. T. (2021). Data extraction methods for systematic review (semi)automation: Update of a living systematic review. F1000Research, 10, 401.

- Seraphin, H., & Yallop, A. (2023). The marriage à la mode: Hospitality industry’s connection to the dating services industry. Hospitality Insights, 7(1), 7–9.

- Séraphin, H., & Jarraud, N. (2022). Interactions between stakeholders in Lourdes: An ‘Alpha’ framework approach. Journal of Tourism, Heritage & Services Marketing, 8(1), 48–57.

- Sigala, M. (2015). Collaborative commerce in tourism: Implications for research and industry. Current Issues in Tourism, 20(4), 346–355.

- Sigala, M. (2020). Tourism and COVID-19: Impacts and implications for advancing and resetting industry and research. Journal of Business Research, 117, 312–321.

- Sihombing, A., Liu, L.-W., & Pahrudin, P. (2024). The impact of online marketing on tourists’ visit intention: Mediating roles of trust. Journal of Tourism, Heritage & Services Marketing, 10(2), 15–23.

- Silvanto, S., & Ryan, J. (2023). Rethinking destination branding frameworks for the age of digital nomads and telecommuters: An abstract. In B. Jochims, & J. Allen (Eds.), Optimistic marketing in challenging times: Serving ever-shifting customer needs. AMSAC 2022. Developments in marketing science: Proceedings of the academy of marketing science. Springer.

- Singh, R., & Sibi, P. S. (2023). E-loyalty formation of Generation Z: Personal characteristics and social influences. Journal of Tourism, Heritage & Services Marketing, 9(1), 3–14.

- Sivathanu, B., Pillai, R., Mahtta, M., & Gunasekaran, A. (2024). All that glitters is not gold: A study of tourists’ visit intention by watching deepfake destination videos. Journal of Tourism Futures, 10(2), 218–236.

- Sotiriadis, M. D. (2020). Tourism destination marketing: Academic knowledge. Encyclopedia, 1(1), 42–56.

- Sousa, A. E., Cardoso, P., & Dias, F. (2024). The use of artificial intelligence systems in tourism and hospitality: The tourists’ perspective. Administrative Sciences, 14(8), 165.

- Sousa, N., Alén, E., Losada, N., & Melo, M. (2024). The adoption of virtual reality technologies in the tourism sector: Influences and post-pandemic perspectives. Journal of Tourism, Heritage & Services Marketing, 10(2), 47–57.

- Sterne, J. A. C., Savović, J., Page, M. J., Elbers, R. G., Blencowe, N. S., Boutron, I., Cates, C. J., Cheng, H. Y., Corbett, M. S., Eldridge, S. M., Emberson, J. R., Hernán, M. A., Hopewell, S., Hróbjartsson, A., Junqueira, D. R., Jüni, P., Kirkham, J. J., Lasserson, T., Li, T., … Higgins, J. P. T. (2019). RoB 2: A revised tool for assessing risk of bias in randomised trials. BMJ, 366, l4898.

- Stojanovic, I., Andreu, L., & Curras-Pérez, R. (2022). Social media communication and destination brand equity. Journal of Hospitality and Tourism Technology, 13(4), 650–666.

- Tasci, A. D. A. (2018). Testing the cross-brand and cross-market validity of a consumer-based brand equity (CBBE) model for destination brands. Tourism Management, 65, 143–159.

- Thompson, S. G., & Higgins, J. P. T. (2002). How should meta-regression analyses be undertaken and interpreted? Statistics in Medicine, 21(11), 1559–1573.

- Tosyali, H., Tosyali, F., & Coban-Tosyali, E. (2023). Role of tourist-chatbot interaction on visit intention in tourism: The mediating role of destination image. Current Issues in Tourism, 28(4), 511–526.

- Tran, N. L., & Rudolf, W. (2022). Social media and destination branding in tourism: A systematic review of the literature. Sustainability, 14(20), 13528.

- Turnbull, D., Chugh, R., & Luck, J. (2023). Systematic-narrative hybrid literature review: A strategy for integrating a concise methodology into a manuscript. Social Sciences & Humanities Open, 7(1), 100381.

- Tussyadiah, I., & Miller, G. (2019). Nudged by a robot: Responses to agency and feedback. Annals of Tourism Research, 78, 102752.

- Tussyadiah, I. P. (2020). A review of research into automation in tourism: Launching the Annals of Tourism Research curated collection on artificial intelligence and robotics in tourism. Annals of Tourism Research, 81, 102883.

- Tussyadiah, I. P., Wang, D., Jung, T. H., & Tom Dieck, M. C. (2018). Virtual reality, presence, and attitude change: Empirical evidence from tourism. Tourism Management, 66, 140–154. [Google Scholar] [CrossRef]

- Veríssimo, J. M. C., Tiago, M. T. B., Tiago, F. G., & Jardim, J. S. (2017). Tourism destination brand dimensions: An exploratory approach. Tourism & Management Studies, 13(4), 1–8.

- Viechtbauer, W. (2010). Conducting meta-analyses in R with the metafor package. Journal of Statistical Software, 36(3), 1–48.

- Wanner, J., Herm, L.-V., Heinrich, K., & Janiesch, C. (2022). The effect of transparency and trust on intelligent system acceptance: Evidence from a user-based study. Electronic Markets, 32, 2079–2102.

- Wells, G. A., Shea, B., O’Connell, D., Peterson, J., Welch, V., Losos, M., & Tugwell, P. (2013). The Newcastle-Ottawa Scale (NOS) for assessing the quality of nonrandomised studies in meta-analyses. Available online: http://www.ohri.ca/programs/clinical_epidemiology/oxford.asp (accessed on 5 June 2025).

- Wengel, Y., Ma, L., Ma, Y., Apollo, M., Maciuk, K., & Ashton, A. S. (2022). The TikTok effect on destination development: Famous overnight, now what? Journal of Outdoor Recreation and Tourism, 37, 100458.

- Whiting, A., & Williams, D. (2013). Why people use social media: A uses and gratifications approach. Qualitative Market Research, 16(4), 362–369.

- Wüst, K., & Bremser, K. (2025). Artificial intelligence in tourism through chatbot support in the booking process—An experimental investigation. Tourism and Hospitality, 6(1), 36.

- Xiang, Z., Du, Q., Ma, Y., & Fan, W. (2017). Assessing reliability of social media data: Lessons from mining TripAdvisor hotel reviews. In R. Schegg, & B. Stangl (Eds.), Information and communication technologies in tourism 2017. Springer.

- Xiang, Z., & Fesenmaier, D. R. (2017). Analytics in tourism design. In Z. Xiang, & D. Fesenmaier (Eds.), Analytics in smart tourism design. Tourism on the verge. Springer.

- Xiang, Z., & Gretzel, U. (2010). Role of social media in online travel information search. Tourism Management, 31(2), 179–188.

- Xiang, Z., Magnini, V. P., & Fesenmaier, D. R. (2015). Information technology and consumer behavior in travel and tourism: Insights from travel planning using the Internet. Journal of Retailing and Consumer Services, 22, 244–249.

- Xu, F., Buhalis, D., & Weber, J. (2017). Serious games and the gamification of tourism. Tourism Management, 60, 244–256.

- Yagmur, Y., & Demirel, A. (2024). An exploratory study on determining motivations, constraints, and strategies for coping with constraints to participate in outdoor recreation activities: Generation Z. Journal of Tourism, Heritage & Services Marketing, 10(1), 14–27.

- Yağmur, Y., & Aksu, A. (2022). Investigation of destination image mediating effect on tourists’ risk assessment, behavioural intentions and satisfaction. Journal of Tourism, Heritage & Services Marketing, 8(1), 27–37.

- Yallop, A., & Seraphin, H. (2020). Big data and analytics in tourism and hospitality: Opportunities and risks. Journal of Tourism Futures, 6(3), 257–262.

- Yin, C. Z. Y., Jung, T., tom Dieck, M. C., & Lee, M. Y. (2021). Mobile augmented reality heritage applications: Meeting the needs of heritage tourists. Sustainability, 13(5), 2523.

- Yu, J., & Meng, T. (2025). Image generative AI in tourism: Trends, impacts, and future research directions. Journal of Hospitality & Tourism Research.

- Zafiropoulos, K., Vrana, V., & Antoniadis, K. (2015). Use of Twitter and Facebook by top European museums. Journal of Tourism, Heritage & Services Marketing, 1(1), 16–24.

- Zamawe, F. C. (2015). The implication of using NVivo software in qualitative data analysis: Evidence-based reflections. Malawi Medical Journal, 27(1), 13–15.

- Zeng, B., & Gerritsen, R. (2014). What do we know about social media in tourism? A review. Tourism Management Perspectives, 10, 27–36.

- Zenker, S., & Braun, E. (2017). Questioning a ‘one size fits all’ city brand: Developing a branded house strategy for place brand management. Journal of Place Management and Development, 10(3), 270–287.

- Zhou, Z. (1997). Destination marketing: Measuring the effectiveness of brochures. Journal of Travel & Tourism Marketing, 6(3–4), 143–158.

| Phase | Timeframe | Dominant Interfaces | Signature Practices | Typical KPIs |
|---|---|---|---|---|
| Pre-internet (brochures) | To mid-1990s | Print, trade fairs, TV/radio | Top-down slogans and imagery; centralised message control | Arrivals, brochure distribution, aided recall |
| Web 1.0 | ~1995–2004 | Static websites, email | ‘Online brochure’ sites; basic usability and FAQs | Page views, downloads, email queries |
| Web 2.0 (Social) | ~2004–2013 | Blogs, review sites, Facebook, YouTube, Flickr, Twitter/X | UGC co-creation; dialogue and community management; early influencer programmes | Follows, shares, sentiment, community growth |
| Mobile-first | ~2013–2020 | Smartphones, apps, GPS/AR, 4G | Context-aware prompts; live stories and vertical video; on-site service recovery | App retention, click-to-navigate, geo-engagement |
| AI/XR-infused | ~2020–present | Chatbots, recommenders, AR/VR/XR, 5G/IoT | Personalisation at scale; immersive previews; automation and governance | Chatbot CSAT, VR dwell time, personalised CTR, audit logs |

| Era | Objective Focus | Do More Of | Avoid | Phase-Appropriate KPIs |
|---|---|---|---|---|
| Pre-internet → Web 1.0 | Establish credibility; canonical narrative | Keep a content-rich, accessible site; multilingual basics | PDF ‘dumping’; outdated pages | Aided recall; task completion; accessibility checks |
| Web 2.0 | Build peer credibility and dialogue | Curate UGC; run two-way communities; micro-influencers | Broadcasting without replies; vanity-metric obsession | Sentiment with validation; community health; share of voice |
| Mobile-first | Context + immediacy | On-site service recovery; geo-nudges; stories/vertical video | One-size-fits-all pushes; ignoring bandwidth constraints | App retention; click-to-navigate; service recovery time |
| AI/XR | Personalisation + governance | Chatbots with disclosure; inclusive training data; XR previews with expectation management | Opaque targeting; overuse of synthetic imagery without labels | Chatbot CSAT; explain-why rate; VR dwell time; audit pass rate |

| Canonical Outcome | Definition Used in This Review | Examples of Accepted Measures (by Phase) | Examples of Excluded Measures | Standardisation to Meta-Variable |
|---|---|---|---|---|
| Awareness | Ability to recognise/recall the destination | Pre-internet/Web 1.0: aided/unaided recall; Web 2.0+: survey-based familiarity/visibility | Impressions/reach; search volume without survey validation | Means/SD → Hedges’ g; correlations → Fisher’s z |
| Image | Cognitive and affective associations | Multi-item image scales, semantic differentials; UGC exposure → perceived image | Single-item ‘sentiment’ without validation | As above; effect signs oriented so that positive values indicate a more favourable image |
| Attitudes | Global evaluative orientation | 5–7-point favourability/warmth scales; brand attitude index | Satisfaction, unless framed as attitude toward the destination brand | As above |
| Loyalty | Conative commitment (revisit, recommend, advocacy) | Intention to revisit/recommend; WOM intention | Arrivals/sales, unless causally linked to equity | As above |
| Engagement intentions | Willingness to follow, share, co-create | Follow/subscribe/share intention; UGC intention; platform counts when theorised as behaviour | Clicks/impressions without behavioural intent | As above (separate stratum) |
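
The last column of the table above names two standardisations. As a minimal sketch, assuming the usual pooled-SD form of the standardised mean difference and the common small-sample correction factor J = 1 − 3/(4·df − 1), the conversions can be written as below; the function names `hedges_g` and `fisher_z` are hypothetical illustrations, not the review's own code. The same factor J is what separates a bias-corrected Hedges’ g from an uncorrected Cohen’s d.

```python
import math


def hedges_g(m1, sd1, n1, m2, sd2, n2):
    """Bias-corrected standardised mean difference and its sampling variance.

    Assumes two independent groups (e.g. exposed vs. control) and the pooled-SD
    form of Cohen's d; an illustrative sketch, not the review's pipeline.
    """
    df = n1 + n2 - 2
    # Pooled standard deviation across the two groups
    sd_pooled = math.sqrt(((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df)
    d = (m1 - m2) / sd_pooled  # Cohen's d
    j = 1 - 3 / (4 * df - 1)  # small-sample correction factor J
    var_d = (n1 + n2) / (n1 * n2) + d ** 2 / (2 * (n1 + n2))
    return j * d, j ** 2 * var_d  # (Hedges' g, Var(g))


def fisher_z(r, n):
    """Fisher's variance-stabilising transform of a correlation r from n cases."""
    return math.atanh(r), 1 / (n - 3)  # (z, Var(z))
```

For example, `hedges_g(5.0, 1.0, 20, 4.0, 1.0, 20)` gives d = 1.0 and g = 1 − 3/151 ≈ 0.980, and `fisher_z(0.5, 53)` gives z ≈ 0.549 with variance 0.02; pooled z values are back-transformed to r with `math.tanh`.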

| Study Quality | Number of Studies | Mean Effect Size (Cohen’s d) | Standard Deviation (SD) |
|---|---|---|---|
| High | 68 | 0.57 | 0.12 |
| Medium | 72 | 0.53 | 0.14 |
| Low | 20 | 0.50 | 0.17 |

| Core Theme | Sub-Themes | What Changed | So What for Equity? |
|---|---|---|---|
| Engagement evolution | From one-way → dialogic → real-time | Visitors move from audience to co-creators |
| Phased tech adoption | Brochureware → social → mobile → AI/XR | Capabilities cumulate; laggards lose relevance |
| Changing tourist roles | UGC, micro-communities, influencers | Peer credibility eclipses official claims |
| Brand equity in the digital age | Awareness, image, attitudes, loyalty, engagement | Multi-modal measurement and feedback loops |

| Outcome Category | Egger’s Regression Coefficient (Intercept) | Standard Error | t-Value | p-Value |
|---|---|---|---|---|
| Brand Awareness | 0.35 | 0.42 | 0.83 | 0.41 |
| Brand Image/Attitude | 0.28 | 0.39 | 0.72 | 0.47 |
| Engagement Metrics | 0.20 | 0.30 | 0.67 | 0.51 |
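
Egger’s test regresses each study’s standardised effect (g divided by its standard error) on its precision (1/SE); an intercept far from zero signals funnel-plot asymmetry, and the intercept’s t statistic has k − 2 degrees of freedom. The sketch below implements that regression with ordinary least squares and a Simpson's-rule integral of the Student t density, so it needs only the standard library. Helper names are hypothetical, and because the table above does not report k, the usage note assumes the k values from the pooled-effects table.

```python
import math


def ols_intercept(x, y):
    """Fit y = a + b*x by ordinary least squares; return (a, se_a, t_a, df)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    b = sxy / sxx
    a = my - b * mx
    df = n - 2
    rss = sum((yi - a - b * xi) ** 2 for xi, yi in zip(x, y))
    # Standard error of the intercept under the usual OLS assumptions
    se_a = math.sqrt(rss / df * (1 / n + mx ** 2 / sxx))
    return a, se_a, a / se_a, df


def t_two_sided_p(t, df, steps=2000):
    """Two-sided p-value for Student's t, via Simpson's rule on the density."""
    c = math.exp(math.lgamma((df + 1) / 2) - math.lgamma(df / 2)) / math.sqrt(df * math.pi)

    def pdf(u):
        return c * (1 + u * u / df) ** (-(df + 1) / 2)

    h = abs(t) / steps  # steps must be even for Simpson's rule
    total = pdf(0.0) + pdf(abs(t))
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * pdf(i * h)
    return max(0.0, 1 - 2 * total * h / 3)


def egger_test(effects, ses):
    """Egger's regression: standardised effect (g/SE) on precision (1/SE)."""
    precision = [1 / s for s in ses]
    standardised = [g / s for g, s in zip(effects, ses)]
    a, se_a, t, df = ols_intercept(precision, standardised)
    return a, se_a, t, t_two_sided_p(t, df)
```

Re-deriving the Brand Awareness row: t = 0.35/0.42 ≈ 0.83, and `t_two_sided_p(0.35 / 0.42, 22)` (df = 24 − 2 under the assumption above) returns about 0.41, in line with the reported p-value.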

| Branding Outcome | Number of Studies (k) | Pooled Effect Size (Hedges’ g) | 95% CI | Cochran’s Q | p-Value (Q) | I² (%) |
|---|---|---|---|---|---|---|
| Brand Awareness | 24 | 0.52 | [0.31, 0.73] | 32.1 | <0.01 | 66.5 |
| Brand Image and Attitudes | 20 | 0.61 | [0.39, 0.83] | 28.6 | <0.01 | 68.5 |
| Engagement Metrics | 16 | 0.78 | [0.54, 1.02] | 21.9 | <0.01 | 58.0 |
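
The pooled estimates above come from a random-effects synthesis. The reference list cites the metafor package (Viechtbauer, 2010), whose `rma()` function defaults to REML estimation of τ²; this section does not state which estimator was used, so the sketch below substitutes the simpler DerSimonian–Laird moment estimator as an assumption, and the function name is hypothetical. It shows how inverse-variance weights, Cochran’s Q, τ², I² and a Wald 95% CI fit together.

```python
import math


def dersimonian_laird(effects, variances, z=1.959964):
    """Random-effects pooling with the DerSimonian-Laird tau^2 estimator."""
    k = len(effects)
    w = [1 / v for v in variances]  # fixed-effect (inverse-variance) weights
    sw = sum(w)
    fixed = sum(wi * gi for wi, gi in zip(w, effects)) / sw
    q = sum(wi * (gi - fixed) ** 2 for wi, gi in zip(w, effects))  # Cochran's Q
    df = k - 1
    c = sw - sum(wi ** 2 for wi in w) / sw
    tau2 = max(0.0, (q - df) / c) if c > 0 else 0.0  # between-study variance
    w_re = [1 / (v + tau2) for v in variances]  # random-effects weights
    sw_re = sum(w_re)
    pooled = sum(wi * gi for wi, gi in zip(w_re, effects)) / sw_re
    se = math.sqrt(1 / sw_re)
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    return {"g": pooled, "ci": (pooled - z * se, pooled + z * se),
            "Q": q, "df": df, "tau2": tau2, "I2": i2}
```

With two hypothetical, equally precise studies at g = 0.2 and 0.8 (variance 0.01 each), Q = 18 on 1 df, τ² = 0.17 and I² ≈ 94.4%; the pooled g stays at 0.5, but its standard error rises to 0.3 because τ² is added to each study's variance.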

| Aspect | Pre-Digital (Print Era) | Web 1.0 (Early Internet) | Web 2.0 (Social Media) | Mobile Era (Smartphone) | AI and XR Era (Current) |
|---|---|---|---|---|---|
| Message control vs. co-creation | DMO monopoly; no feedback. | Managerial dominance; static pages. | Shared narration via UGC. | Real-time visitor input blends with official voice. | Algorithmic personalisation; facilitative DMO role. |
| Communication model | One-way broadcast. | One-way online. | Dialogic, peer-to-peer. | Always-on multilateral exchange. | Immersive, AI-mediated interaction. |
| Speed of dissemination | Annual cycles. | Occasional updates. | Second-by-second virality. | Instant, location-triggered. | Continuous, predictive responsiveness. |
| Reach and audience | Market-bounded print audiences. | Global yet search-dependent. | Viral network diffusion. | Ubiquitous in-journey targeting. | Hyper-segmented worldwide access; virtual visitation. |
| Data and feedback depth | Sparse surveys. | Basic traffic metrics. | Engagement and sentiment analytics. | Contextual behavioural traces. | Integrated big data; real-time modelling. |

| Social-Media Platform (Primary) | k (Effect Sizes) | Pooled Hedges’ g | 95% CI | I² (%) | Q (df) | p Heterogeneity |
|---|---|---|---|---|---|---|
|  | 24 | 0.45 | 0.28–0.63 | 52 | 22.1 (11) | 0.024 |
|  | 16 | 0.57 | 0.35–0.79 | 48 | 13.4 (7) | 0.064 |
| TikTok/YouTube (high-visual) | 12 | 0.62 | 0.31–0.93 | 60 | 12.0 (5) | 0.034 |
| Twitter/X | 8 | 0.30 | 0.05–0.55 | 45 | 5.5 (3) | 0.139 |
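
In the subgroup tables, the I² and heterogeneity p-value columns follow from Q and its degrees of freedom: I² = max(0, (Q − df)/Q) × 100 and p = P(χ²_df ≥ Q). The sketch below recomputes both from a reported Q (df) pair using the closed-form chi-square survival function for integer degrees of freedom, so no statistics library is needed; the function names are hypothetical.

```python
import math


def chi2_sf(x, df):
    """Upper-tail probability P(X >= x) for a chi-square with integer df >= 1."""
    if df % 2 == 0:
        # Even df: exp(-x/2) * sum over i < df/2 of (x/2)^i / i!
        term, total = 1.0, 1.0
        for i in range(1, df // 2):
            term *= (x / 2) / i
            total += term
        return math.exp(-x / 2) * total
    # Odd df: 2*(1 - Phi(sqrt x)) plus a finite correction series
    s = math.sqrt(x)
    term, acc = s, 0.0
    for j in range(1, (df - 1) // 2 + 1):
        acc += term  # adds x^((2j-1)/2) / (1*3*...*(2j-1))
        term *= x / (2 * j + 1)
    return math.erfc(s / math.sqrt(2)) + 2 * math.exp(-x / 2) / math.sqrt(2 * math.pi) * acc


def i_squared(q, df):
    """I^2 (%) from Cochran's Q; truncated at zero when Q < df."""
    return max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
```

For the Twitter/X row, `chi2_sf(5.5, 3)` is about 0.139 and `i_squared(5.5, 3)` about 45.5, matching the reported 0.139 and 45%.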

| Region | k (Effect Sizes) | Pooled Hedges’ g | 95% CI | I² (%) | Q (df) | p Heterogeneity |
|---|---|---|---|---|---|---|
| Europe | 20 | 0.49 | 0.29–0.69 | 46 | 17.2 (9) | 0.045 |
| North America | 16 | 0.44 | 0.19–0.69 | 42 | 12.3 (7) | 0.091 |
| Asia–Pacific | 14 | 0.53 | 0.25–0.81 | 51 | 14.4 (6) | 0.026 |
| Other | 10 | 0.32 | 0.05–0.59 | 38 | 6.5 (4) | 0.165 |

| Design | k (Effect Sizes) | Pooled Hedges’ g | 95% CI | I² (%) |
|---|---|---|---|---|
| Experiments (field/lab) | 22 | 0.50 | 0.30–0.70 | 48 |
| Quasi-experiments (pre/post) | 18 | 0.46 | 0.23–0.69 | 41 |
| Cross-sectional surveys | 20 | 0.42 | 0.22–0.62 | 46 |

| Content Strategy (Primary) | k (Effect Sizes) | Pooled Hedges’ g | 95% CI | I² (%) | Q (df) | p Heterogeneity |
|---|---|---|---|---|---|---|
| User-generated content (UGC) | 30 | 0.58 | 0.40–0.76 | 55 | 28.4 (14) | 0.013 |
| DMO-generated content | 24 | 0.42 | 0.25–0.59 | 50 | 24.2 (11) | 0.018 |
| Integrated/mixed | 14 | 0.49 | 0.25–0.73 | 58 | 14.7 (6) | 0.023 |

| Moderator and Categories | k | Pooled Hedges’ g | 95% CI | I² (%) | Q (df) | p Heterogeneity |
|---|---|---|---|---|---|---|
| Influencer tier | | | | | | |
| Micro (<50 K followers) | 16 | 0.64 | 0.41–0.87 | 48 | 13.4 (7) | 0.063 |
| Mid (50 K–500 K) | 12 | 0.58 | 0.32–0.85 | 52 | 10.5 (5) | 0.060 |
| Macro/Mega (>500 K) | 10 | 0.36 | 0.08–0.64 | 44 | 7.1 (4) | 0.131 |
| Interactivity level | | | | | | |
| Low (broadcast/one-way) | 22 | 0.31 | 0.14–0.48 | 40 | 16.4 (10) | 0.088 |
| High (dialogic/co-creation) | 28 | 0.69 | 0.49–0.88 | 46 | 24.3 (13) | 0.028 |
| Destination type | | | | | | |
| Emerging/lesser known | 18 | 0.71 | 0.45–0.96 | 50 | 16.0 (8) | 0.041 |
| Well-known city/flagship | 14 | 0.38 | 0.12–0.64 | 47 | 11.3 (6) | 0.080 |
| Nation-branding campaigns | 10 | 0.29 | 0.04–0.55 | 43 | 7.0 (4) | 0.135 |
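
One way to ask whether two moderator levels differ, rather than reading overlapping CIs by eye, is a z-test on the difference between subgroup estimates, with each standard error back-calculated from its 95% CI as (upper − lower)/(2 × 1.96). The sketch below assumes the subgroup estimates are independent and the intervals are symmetric Wald intervals on the g scale. Neither assumption is confirmed in this section, and independence would not hold if one study contributed effect sizes to both levels. The function names are hypothetical.

```python
import math

Z95 = 1.959964  # standard-normal quantile for a two-sided 95% interval


def se_from_ci(lower, upper, z=Z95):
    """Back-calculate a standard error from a symmetric 95% confidence interval."""
    return (upper - lower) / (2 * z)


def compare_subgroups(g1, ci1, g2, ci2):
    """Wald z-test for g2 - g1, assuming independent subgroup estimates."""
    se_diff = math.sqrt(se_from_ci(*ci1) ** 2 + se_from_ci(*ci2) ** 2)
    z = (g2 - g1) / se_diff
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal p-value
    return g2 - g1, z, p
```

Applied to the interactivity rows, `compare_subgroups(0.31, (0.14, 0.48), 0.69, (0.49, 0.88))` gives a difference of 0.38 with z ≈ 2.88 (two-sided p ≈ 0.004) under these assumptions. For moderators with more than two levels, the analogous test is a between-subgroup Q statistic on m − 1 degrees of freedom.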