1. Introduction

Since 2022, the introduction of artificial intelligence (AI) technologies in the field of education has marked a significant turning point in teaching and learning methods. Among these technologies, ChatGPT, developed by OpenAI, stands out for its ability to generate contextually relevant text across many domains, thus offering new pedagogical perspectives (Moussavou 2023; Lo 2023; Rahman and Watanobe 2023; Mogavi et al. 2023). The aim of this research is to examine the factors influencing the perceptions, acceptance and use of ChatGPT, and its influence on the teaching practices of higher education practitioners. Using the Technology Acceptance Model (TAM) as a theoretical framework (Davis 1986), this study aims to propose a TAM specific to ChatGPT, as advocated by Venkatesh (2022), considering the specificities of the application domain, namely education and university teaching. This will make it possible to understand the contexts in which the adoption of ChatGPT could modify teachers’ pedagogical approach, in terms of course design, pedagogical experience, assessment methods and interaction with students (Klyshbekova and Abbott 2024; Karthikeyan 2023; Montenegro-Rueda et al. 2023).

While some teachers praise the effectiveness and usefulness of ChatGPT, others remain highly sceptical and fear that such a tool will be misused. Based on the TAM, the aim of this research is to empirically examine teachers’ perceptions and practices. By addressing this issue, this study contributes to the existing literature on educational technologies and the integration of AI into teaching, by providing an in-depth and empirical analysis of the impact of ChatGPT on pedagogical dynamics.

In the current academic landscape, the integration of ChatGPT into education is attracting increased research interest. However, an in-depth exploration of the literature reveals a lack of empirical studies on the use and actual impact of ChatGPT. To date, few field studies have been identified. Most of the published work takes a theoretical approach or focuses on the analysis of existing literature, offering conceptual perspectives on the potential applications and theoretical implications of ChatGPT without drawing on concrete empirical data.

This gap raises pertinent questions about the actual effectiveness of ChatGPT in practical contexts, particularly in teaching. The aim of this work is to fill in some of the gaps in the literature by means of an exploratory study of teachers who use or do not use AI in their daily work. This approach will not only enable existing theoretical hypotheses to be validated but will also identify ways of optimising the use of ChatGPT in various practical applications.

To achieve this objective, a qualitative approach was favoured (Bardin 2013). Semi-structured interviews were conducted with a targeted sample of university teachers who had or had not integrated ChatGPT into their teaching methods. The aim of this first stage was to gather detailed data on their perceptions, their experiences and the concrete changes in their teaching practices following the use of ChatGPT. Following the analysis of the interviews, this research proposes a version of the TAM revisited and adapted to the adoption of ChatGPT in education.

First, we present an updated literature review on the use of ChatGPT in education, as well as on models of acceptance of the technology. The methodological choices are then presented. Finally, the main results of this exploratory study are highlighted, allowing us to propose a specific and adapted model.

2. Theoretical Framework

Our research is based on two main concepts: ChatGPT and technology acceptance models. The first part will discuss the evolution of ChatGPT, its impact on education and its opportunities and challenges. The second part will look at the models of the acceptance of the technology, highlighting how they have evolved over the years and the results obtained.

2.1. Evolution of ChatGPT: Impacts on Higher Education Sector

Humanity’s quest to simulate human intelligence is not new. For many years, scientists have sought to create systems capable of thinking, understanding and interacting like a human being. Around 1950, early work by Turing and others explored the possibility of thinking machines by attempting to model complex cognitive processes: the first neural network computer was built, and Turing proposed the Turing Test, used to evaluate AIs. These advances laid the foundations of artificial intelligence, although the term ‘Artificial Intelligence’ was not officially used until 1956.

In 1989, the Frenchman Yann LeCun developed the first neural network capable of recognising handwritten digits, an invention that was to lead to the development of deep learning. In 1997, IBM’s Deep Blue system defeated the reigning world chess champion in a match, a first for a machine. Over the following decades, AI continued to evolve, each time pushing the limits of what machines could achieve.

The launch of ChatGPT by OpenAI, in November 2022, represents a significant step forward in this evolution, illustrating both a great capacity to accumulate and process information and an ability to generate language that closely resembles that of human beings. ChatGPT, derived from the GPT (Generative Pre-trained Transformer) family of models, is the fruit of several years of research into natural language processing (NLP). It is an ‘encyclopaedic model that integrates a large number of references to the real world’ (Langlais 2023). Its operating mechanism is based on a large corpus of data, on which it is trained and which enables it to respond to user queries in a superficially coherent and nuanced way (Langlais 2023). One notable feature of the underlying models is that they can be fine-tuned, that is, specialised for specific tasks or domains by re-training them on targeted datasets.

This ability to process large amounts of data and produce text in a consistent and contextual way has led to and facilitated its rapid adoption in several fields. The integration of AI in small- and medium-sized enterprises is marking a profound transformation of their environment and their working conditions (Berger-Douce et al. 2023). Indeed, this tool has been used in the healthcare sector to support research and clinical decision-making (Garg et al. 2023), in improving medical documentation (Baker et al. 2024), in business to improve employee productivity and customer service (Zhu et al. 2023), in entrepreneurship education (Dabbous and Boustani 2023) and in education in general to foster student engagement and improve learning (Lo 2023; Montenegro-Rueda et al. 2023). Thus, according to Kwan (2023), ChatGPT is an assistance tool for teachers, enabling more effective lesson preparation (Rahman and Watanobe 2023; Lo 2023) and offering personalised support to students (Montenegro-Rueda et al. 2023). Thanks to ChatGPT, we can indeed witness a transformation in teaching and learning methods, including personalised assistance, the creation of educational content, learner engagement, automated formative assessment and the diversification of pedagogical approaches. The table below summarises the main opportunities and threats of using ChatGPT in education (Table 1).

In the higher education context, although ChatGPT has several advantages and provides a good opportunity (Adeshola and Adepoju 2023) to improve the educational experience (Grassini 2023), the integration of ChatGPT is double-edged. Although this technology has real potential for both teachers and students, it is not without risk and its integration is not as straightforward as one might think. Admittedly, in theory, this AI can be used by teachers as an assistant, a course creation tool, an assessment support tool (Klyshbekova and Abbott 2024), a tool for personalising learning (Mhlanga 2023; Grassini 2023; Karthikeyan 2023) and a tool for managing administrative tasks (Memarian and Doleck 2023). All of this makes it possible to enhance the teacher’s experience and place greater emphasis on interaction and exchange with students (Lo 2023; Montenegro-Rueda et al. 2023). However, the problems of data security (Al-Mughairi and Bhaskar 2024), plagiarism (Lo 2023; Grassini 2023; Rahman and Watanobe 2023; Yu 2024), intellectual property (Mhlanga 2023), addiction (Al-Mughairi and Bhaskar 2024) and algorithmic bias (Memarian and Doleck 2023) hamper its rapid implementation. Furthermore, the empirical effectiveness and veracity of such promises have yet to be demonstrated, and their real impact on the depersonalisation of teaching and on students’ cognitive abilities has yet to be proven (Rahman and Watanobe 2023). Hence, it is important to put into place a framework that optimises the benefits of ChatGPT while reducing the ethical, psychological, legal and technical risks.

2.2. Theories of Technology Acceptance: Evolution and Specificities

Under the name TAM, there are in fact several models of technology acceptance, which have been enriched as studies have been carried out and results obtained, yielding ‘enriched’ or ‘extended’ versions of the basic model. The first TAM (Davis 1986, 1989) focuses on perceived usefulness and perceived ease of use, which determine behavioural intention. It is a simplified, even minimalist, model which has the advantage of being easy to understand and usable in many contexts. TAM 2 (Venkatesh and Davis 2000) proposes an enriched version of the model by highlighting seven antecedents of perceived usefulness. TAM 3 (Venkatesh and Bala 2008) proposes the addition of six antecedents of perceived ease of use. In parallel with the TAM models, the UTAUT model, known as the unified model (Venkatesh et al. 2003), allows several approaches to be considered to explain intention to use. It focuses on four moderating factors and four determining factors that provide a better understanding of intention to use. The UTAUT2 model (Venkatesh et al. 2012) is more consumer-oriented, and includes habit, price value and hedonic motivation as additional antecedents. The table below summarises the different models (Table 2).

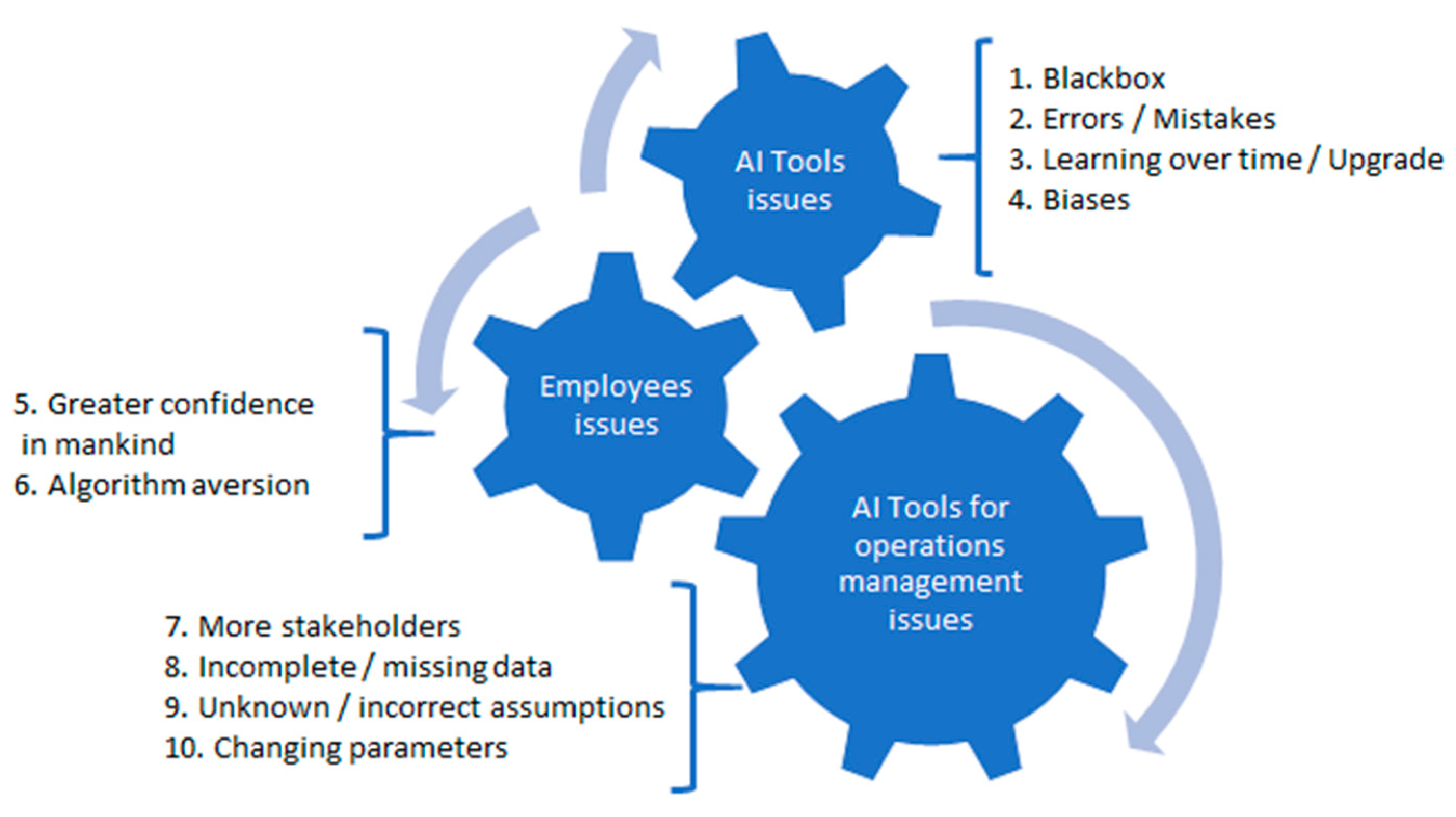

This study is focused on the latest UTAUT model proposed by Venkatesh (2022), which focuses on the context of artificial intelligence. Indeed, the specificity of AI leads to significant modifications of the model that need to be considered. The author proposes an adapted research programme to study the adoption of AI by employees. Ten points of vigilance, grouped into three categories, are highlighted as follows: the intrinsic characteristics of artificial intelligence tools, the characteristics of the employees who will use them and the characteristics of the organisation in which the AI will be used. The figure below illustrates these different elements (Figure 1).

Given these specific features, the proposed model incorporates four new moderating factors: individual characteristics linked to the personality of employees (acceptance or even search for risk, tolerance of uncertainty or desire to learn), characteristics linked to artificial intelligence technology (quality, errors and transparency), characteristics linked to the organisation in which the tools will be used (climate conducive to innovation, number of people involved and incomplete or missing information), and characteristics linked to training (regular training sessions, initiation and gamification). These four moderating factors can influence the classic determining factors of the model, namely performance expectancy, effort expectancy, social influence and facilitating conditions, which themselves help to explain the intention to use AI.
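To make the structure of this model easier to picture, the short Python sketch below lists the constructs named in the paragraph above and enumerates the influence paths between them. It is only an illustration: the names `MODERATORS`, `DETERMINANTS`, `OUTCOME` and `build_paths` are hypothetical, and wiring every moderating category to every determining factor is an assumption made for simplicity, not a claim about the paths actually specified by Venkatesh (2022).

```python
# Illustrative sketch only. Construct names come from the text above; the
# fully connected moderator -> determinant wiring is an assumption.

MODERATORS = {
    "individual characteristics": [
        "risk acceptance", "tolerance of uncertainty", "desire to learn",
    ],
    "technology characteristics": [
        "quality", "errors", "transparency",
    ],
    "organisational characteristics": [
        "climate conducive to innovation",
        "number of people involved",
        "incomplete or missing information",
    ],
    "training characteristics": [
        "regular training sessions", "initiation", "gamification",
    ],
}

DETERMINANTS = [
    "performance expectancy",
    "effort expectancy",
    "social influence",
    "facilitating conditions",
]

OUTCOME = "intention to use AI"


def build_paths():
    """Return (source, target) pairs for the hypothesised influences."""
    # Each moderating category may influence each determining factor (assumed).
    paths = [(m, d) for m in MODERATORS for d in DETERMINANTS]
    # Each determining factor helps to explain the intention to use AI.
    paths += [(d, OUTCOME) for d in DETERMINANTS]
    return paths


if __name__ == "__main__":
    for source, target in build_paths():
        print(f"{source} -> {target}")
```

Read this way, the model has two layers: contextual characteristics shape the four classic determinants, which in turn explain intention to use. In an empirical study, only the paths supported by the data would be retained.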

Menon and Shilpa (2023) tested the model on ChatGPT users (students and employees) and found results in line with previous literature: performance expectancy, effort expectancy, social influence, facilitating conditions and privacy concerns were the predominant factors influencing the usage of ChatGPT. What about those who have not yet accepted this technology, such as teachers, who have complete control over their teaching?

In the context of our study, we question these different models of technology acceptance. The organisation is represented by the higher education institutions considered, the employees are the teachers interviewed and the artificial intelligence tool studied is mainly ChatGPT. To do this, we used a qualitative methodology, presented in the following section.

3. Methodology: A Qualitative Study

In order to gain a better understanding of the factors influencing the adoption of ChatGPT in the context of higher education, and more specifically by teachers, a qualitative approach was adopted. The authors conducted semi-structured face-to-face interviews. This method provides rich and nuanced data on the participants’ experiences, perceptions and attitudes.

The sample consisted of teachers working at various levels of university education and in various academic fields, in public and private institutions across continental France. Participants were chosen progressively using purposive sampling, with the goal of increasing profile variety while maintaining qualitative representativeness (Paillé and Mucchielli 2021). Respondents varied in gender, age, seniority, area of competence and interest in technology.

The individual semi-structured interviews lasted an average of one hour (the length of the interviews varied between 30 min and 90 min) and were conducted face-to-face by two interviewers between March 2024 and April 2024. After an initial introductory phase, during which the interviewers introduced themselves and explained the purpose of the study, the interviewees were asked to introduce themselves and describe their relationship with the technology. Seven main themes were then addressed (the interview guide is presented in Appendix A):

The experience of using ChatGPT;

Its perceived usefulness;

Its perceived ease of use;

Its perceived interest;

The users’ attitude to generative AI;

Their intentions to use;

Subjective opinions and standards.

The interviewers used the classic techniques of follow-up prompting and reformulation. Interviewees were encouraged to express themselves freely about their experience with ChatGPT and generative AI.

All of the qualitative data (transcribed verbatim) was subjected to content analysis (Bardin 2013; Paillé and Mucchielli 2021) using an abductive approach, an analytical approach that is both deductive, based on the enriched TAM framework, and inductive, aimed at bringing out new relevant themes from the field.

To comply with an ethical approach to data collection and use (Kozinets 2019), we explained the research objectives to the interviewees, specifying that the results would be used in a scientific and/or educational context. Oral consent was systematically obtained. Anonymity and confidentiality were guaranteed both during data collection and data processing and analysis.

An in-depth examination of the verbatim transcripts by the researchers enabled us to identify the main trends and factors explaining the adoption of ChatGPT. Our empirical results confirm the relevance of the enriched TAM theoretical framework, while highlighting certain specificities linked to the singular nature of this conversational AI technology.

4. Results

The ten people interviewed had a wide range of characteristics. They ranged in age from 25 to 69. Six men and four women made up our sample. We were able to interview teachers specialising in various fields such as economics, management, finance, communication, marketing, mathematics and chemistry (Table 3).

This diversity of profiles supports the qualitative representativeness of the results. Some of the people interviewed use ChatGPT regularly and see it as ‘a time-saving assistant for everyday tasks or tasks that we’re not used to doing’ (I10). The uses may be professional, but also private. It quickly becomes apparent that this tool easily penetrates the different spheres of a person’s life: ‘So, on the other hand, the uses are extremely varied, ranging from preparing my future maths homework to finding the recipe for I don’t know the last time a fish I don’t know any more, in short finding recipes, preparing a road trip to Scotland or writing a paragraph to present a project, etc.’ (I7). On the other hand, other people had not yet used ChatGPT, or had used it very little, and some had only tried it: ‘Apart from the test I’ve just told you about, I’ve never tried it’ (I8); they had no plans to use it for the time being: ‘In any case, the attempt I made was a trial’ (I6).

The content analysis reveals that teachers’ perception of the usefulness of ChatGPT is very mixed. While the productivity gains (the creation of resources, time saving, optimisation of research, reformulation and editorial benefits, etc.) are recognised, which is in line with the findings on the perceived usefulness of other educational technologies (Mugo et al. 2017), the profound pedagogical impact of ChatGPT on learning is divisive. Some see it as a tool that allows ‘more flexibility for students depending on the subject, of course for students so that they can acquire more autonomy [...] so that students are more involved in their own learning’ (I6); others remain highly critical of these effects on learning: ‘What I think about students is that they no longer think. They don’t reason any more. They think they know everything because they’ve got ChatGPT. I’m afraid of the day when ChatGPT will take over from humans..., and I think that with young people now, it’s OK, it’s taken over’ (I1). Another aspect directly concerns the teacher, who wants to ‘remain in control of my lesson. I’m not interested in being the spokesperson or microphone for a computerised lesson’ (I6). Teachers can thus feel that their role or status has been taken away from them. This profound questioning of the teaching profession goes hand in hand with a questioning of its credibility: ‘they [the students] have the opportunity to check everything you say and the drift of course, we’re not going to believe the teacher’ (I8). Between the leverage of personalisation and autonomy on the one hand, and the risk of disengaging students and calling into question the status of the teacher on the other, this crucial dimension of perceived usefulness remains contested between advocates and opponents.

Despite an intuitive conversational interface, our results show that the optimal pedagogical integration of ChatGPT is perceived as complex by a majority of teachers, who may feel overwhelmed by the amount of information available: ‘I have the impression that you can’t get to grips with the subject because you haven’t mastered it’ (I6); ‘so there’s too much information, which perhaps requires time to reprocess or to be able to stand back’ (I8). ChatGPT responses depend on the relevance of the prompts, which many teachers do not master: ‘In fact, when the answer doesn’t suit me, it’s because the question was badly put. When it doesn’t suit me, it’s because the prompt was badly written and so I have to revise my prompt’ (I7); ‘the tool is fairly intuitive. However, to specify the results obtained effectively, resources on prompting may be useful’ (I5).

The people interviewed are aware of the importance of training: ‘If I had training, it would be easier to use it to master it’ (I8). They even went so far as to call for an appropriate training program: ‘I’d like us to have support in getting to grips with it, whether for teaching or for research techniques, in a gradual way’ (I9). Without this training, teachers run the risk of abandoning ChatGPT and giving up using it: ‘sometimes I’d give him a command and he’d give me the wrong answer, so I thought, «I don’t have to give him the right information so that he can be reactive afterwards, so that made me angry»’ (I9). While ChatGPT may be perceived as magical by novices, experts keep more distance from its use and from the reliability of the results obtained: ‘We know that what ChatGPT gives us is an approximation of what we’re looking for, and it always needs to be checked, so it’s absolutely unreliable’ (I10); ‘as the data isn’t necessarily reliable, since it’s everything that’s on the Web, we’re not necessarily sure of the result, so we need to be sufficiently trained and sufficiently wary about using this tool’ (I7). Training is therefore essential for the proper use of generative AI, and it must be progressive and ongoing. Indeed, after the ‘Wow’ effect of discovering the tool, there is always a ‘Down’ phase when the person realises that the tool can be made to say anything and can produce completely false results. Training must help them get past this second stage to reach the ‘Stabilisation’ phase, during which users become aware of the tool’s limitations: ‘For me, that’s the point of training. It’s to ensure that when people come out of a training course, they understand the limits, the benefits and the uses they’re going to be able to make of it’ (I7).

This highlights the limitations of the perceived ease of use construct as initially conceptualised in the TAM. A more explicit consideration of the systemic dimension and the profound adaptation of practices seems necessary. The use of ChatGPT in certain areas, coding for example, seems easier and more relevant. To reassure teachers, effective mastery of ChatGPT and its prompts is seen as a major prerequisite for its successful adoption. ‘So in fact, I discovered that it was a tool that could be interesting for giving you the first bits of structuring, I find that in terms of structuring, you give a subject, very quickly, it is able to propose a good structure.... I took part in two videoconference training sessions with some guys, who talked about using ChatGPT and how to challenge it with prompts. I found it very interesting to discover that it’s difficult to get out of Google where you type queries, but I realised as I used it that the question of exchange, challenge and prompts is important’ (I4).

The issue of the controllability and transparency of ChatGPT emerges as a central concern in all of the teachers’ comments, and some even consider it to be ‘a plundering or copying software. Moreover, there’s a whole problem with copyright, which poses a real problem at that level’ (I6). The problem of data and its sources is ‘an element that, in any case, will necessarily be considered. Depending on everyone’s interests, particularly those of individual countries. I think that this notion of intellectual property rights will be settled when the two powers that are going to be at the forefront of AI have made their move, namely the Chinese and then the Americans, and it is on the basis of this tug of war that a code of property rights will emerge, and as I see it, we’ll have two of them!’ (I3). This new construct that emerges from the data analysis is particularly illuminating in understanding the reservations and reticence observed. The ‘black box’ (Kleinpeter 2020) represented by the opaque internal workings of AI, the lack of traceability of the results generated and the unpredictability of possible biases or ethical abuses are sources of many questions and concerns. As a result, major efforts on algorithmic transparency (explanations of reasoning), data training (what happens to the information entered), data sources and auditability and user control seem essential to reassure the teaching profession. ‘I don’t use ChatGPT, because apparently there are risks in terms of personal data... I don’t know who can do what with this data. You’ve always got someone behind it who manipulates as they please and does what they want with the data to sell it, to exploit it … so even for pedagogy, I don’t really need it.’ (I2).

5. Discussion

Since its initial conception, TAM has been the subject of numerous theoretical extensions aimed at strengthening its explanatory power, particularly in specific contexts such as teaching (Scherer et al. 2019). However, the adoption of a technology as innovative and disruptive as ChatGPT raises singular issues that require some adjustments to the initial model.

Indeed, beyond its simple practical utility, ChatGPT raises ethical concerns and questions among teachers, such as plagiarism, intellectual property, the risks of dehumanization, the suppression of creativity and the reinforcement of bias and discrimination. In the AIA2M model, this ethical dimension was considered as a complementary variable influencing the general attitude towards the tool. Moreover, the very nature of ChatGPT, as a conversational AI, raises specific issues of controllability and data transparency as perceived by users. Understanding the internal functioning of ChatGPT and its training, the possibility of parameterizing and auditing its results, and its ability to explain its reasoning in an interpretable way would seem to be key factors in its effective adoption by teachers.

What emerges from this study is a TAM model that has been enriched and adjusted to the specific features of ChatGPT. This revisited model should enable a finer, contextualized analysis of the psychological, technical and ethical factors shaping teachers’ perceptions, attitudes and intentions towards this disruptive innovation (Figure 2).

The model proposed in this study represents a significant evolution of the classic Technology Acceptance Model (TAM), specifically adapted to the challenges posed by the adoption of ChatGPT in the educational context. The major contribution of this new model lies in the integration of new variables that reflect the specificities of generative artificial intelligence technologies in general and ChatGPT in particular. The introduction of the “Perceived controllability and transparency” construct at the heart of the model captures teachers’ concerns about mastering and understanding the tool. In addition, the inclusion of “ethical concerns” as a moderating variable of general attitude demonstrates the importance of ethical, moral and deontological considerations in the adoption process. Furthermore, the distinction between “perceived educational gains” and “perceived pedagogical risks” within perceived usefulness provides a better understanding of teachers’ motivations and disincentives.

It is therefore important to define a very clear ethical framework for the use of this tool and to put in place a well-defined institutional policy. This framework can be implemented through a charter drawn up jointly by teachers, students and AI experts. This charter should define the fundamental principles (academic integrity, student interests, the primacy of human subjectivity, non-discrimination and data transparency) and the conditions for AI use (prohibited during assessments, limited by level and mandatory training for students). A monitoring committee must be set up to ensure that the charter is implemented and respected.

It is also important to encourage experimentation with ChatGPT through training courses and subscriptions offered to teaching teams to promote its integration and capitalize on the gains identified in the model. These training courses can be customized to suit individual needs and profiles. Similarly, awareness campaigns are needed to limit the risks associated with generative AI, including data security, the verification of the information generated and the risk of addiction. And let us not forget that AI cannot replace the sensitivity of a teacher, nor can it feel emotions when managing students.

The introduction of AI in general and ChatGPT in particular will not mean the disappearance of the teacher. Nevertheless, it will lead to a transformation of this profession. The role of the teacher must no longer be solely that of transmission and assessment. It must evolve towards co-construction with the student, using the tools made available. Given the multiplicity of data sources, teachers’ objectives must shift from teaching students to search for information to teaching them to sort existing information, distinguish the true from the false and develop a sense of discernment.

Our findings have important implications for the enterprise sector in higher education, particularly with regard to performance promotion. Indeed, generative AI tools, such as ChatGPT, can facilitate distance learning solutions while stimulating student engagement and personalized learning experiences (Mallah Boustani and Merhej Sayegh 2021). ChatGPT is identified as “an assistant” by some of our interviewees, who anticipate an AI tutoring role for students. AI could, for example, help them identify which concepts need to be explored further, which options need to be chosen, which exercises they still need to work on. AI could become a veritable personalized guidance counsellor. This ability to manage large quantities of information and automate routine processes can streamline administrative tasks (such as resource management), reducing the need for human and financial resources.

Furthermore, these solutions also support the ongoing professional development of instructors/teachers and stakeholders, as AI can provide real-time information on market trends and emerging corporate strategies, enabling educators to cultivate a better-prepared workforce for the future.

From an educational policy perspective, business schools using generative AI can lead the way in supporting digital transformation and sustainable practices in higher education. By integrating AI-based tools into their courses, they help students acquire skills that are essential in today’s working world, such as digital literacy, critical thinking and ethical decision-making. This is consistent with the broader aims of sustainable education, as it prepares students to tackle important global issues such as resource management, ethical business practices and environmental conservation.

It is important to emphasize here the impact that institutional leaders can have in the field. If school directors show a strong willingness and involvement in the use and adoption of these tools, then training sessions will be offered, and teachers will be accompanied and encouraged to use these tools. This corresponds to the traditional model of primary adoption (the organization decides to deploy a technology), followed by the decision of actual use by employees, which is secondary adoption (Gallivan 2001). However, Bidan et al. (2020) have proposed an inverted model, focusing on latent or dormant technologies. As the technology is available, the employee can seize it, accept it and use it without the organization even being aware of it. These are informal practices, born of the personal experience of the individual and/or those around them. Only then is the technology partly proposed by the organization, which is presented with a sort of fait accompli. The acceptance and adoption of generative AI seems to borrow from both models: indeed, the interviews enabled us to observe both the traditional and the inverted model, depending on the nature of the establishments concerned (private or public business schools), or the link with technology and the pedagogical innovation of the people interviewed.

In the long run, implementing AI in educational and business contexts can encourage a more responsible use of technology, instil a sense of accountability, and promote transparent decision-making processes, ultimately fostering a sustainability culture that extends beyond academic institutions and into the corporate world.

6. Conclusions

The main contribution of our study lies in questioning the traditional Technology Acceptance Model (TAM) and proposing a revisited TAM adapted to AI. This model takes into account ethics, data controllability, control of the tool and its settings, knowledge of how personal data is used, and intellectual property, elements often neglected in existing models. This enriched approach aims not only to address teachers’ concerns about data security and privacy (Grassini 2023; Rahman and Watanobe 2023), but also to strengthen transparency and user trust, key aspects for a sustainable and responsible adoption of AI in education (Memarian and Doleck 2023). By introducing this new version of the TAM, we aim, based on field results, to establish a theoretical framework to better understand the factors driving teachers’ acceptance of ChatGPT. This includes the identification of potential benefits, such as time savings and the automation of repetitive tasks, but also of the risks associated with plagiarism and the weakening of students’ cognitive abilities. This model could thus serve as a basis for future research exploring in greater detail the implications of AI in education, particularly in diverse and multicultural contexts.

Furthermore, the contribution of this article lies in its empirical approach, which responds to a gap identified in the literature. Most studies on ChatGPT in education are based on theoretical analysis or commentary, with little empirical data (Pradana et al. 2023; Memarian and Doleck 2023). By collecting qualitative data from teachers, this research enriches the understanding of the real dynamics of ChatGPT adoption, considering the reality of practices and expectations specific to the educational context (Abdaljaleel et al. 2024).

This work has enabled us to study teachers’ perceptions of ChatGPT. Some see the tool as an opportunity for pedagogical enrichment, others as a risk to the quality of learning and the cognitive capacity of students. While ChatGPT is appreciated for its speed and ability to synthesize, its lack of critical reflection and the risks of cheating and algorithmic bias are causes for concern. This study thus clarified the role of ethics and mastery of the tool in the acceptance of ChatGPT, highlighting the importance of appropriate, ongoing training and supervision. This ethical and pedagogical aspect is frequently highlighted in the literature as a condition for the beneficial integration of AI into educational practices, but remains underexplored. For a successful integration of ChatGPT, it becomes essential to formulate and formalize educational policies that take these dimensions of control and safety into consideration, thus offering a balance between technological innovation and the protection of fundamental pedagogical interests.

Despite its contribution to understanding the integration of ChatGPT into teachers’ pedagogical practices, this research has certain limitations that deserve to be highlighted in order to contextualize the results and guide future research.

First of all, this model adds external variables that are absent from the basic model. It is important to test it empirically to validate the relationships between the different variables and to identify the most influential factors. A large-scale questionnaire is needed to validate the model. Secondly, the exploratory work was carried out in a limited geographical area and reflects only local perceptions and experiences. It would be worthwhile to extend the research to other geographical contexts (Boustani and Chammaa 2023). International comparative studies would enable us to assess other variables, such as cultural specificities, which may influence the adoption of ChatGPT. From a methodological point of view, this research was conducted on the basis of semi-structured interviews. Other methodologies, such as observation and longitudinal studies, could provide a better understanding of the conditions under which ChatGPT is used. Finally, this study does not take into account the diversity and specificity of the different academic domains. It would be interesting to conduct targeted research on specific disciplines and to compare the impact of ChatGPT adoption in the hard sciences with that in the humanities and social sciences, for example. Exploring these additional dimensions will enable us to better grasp the potential and challenges of using ChatGPT in particular, and generative AI in general, in the field of education.

In terms of perspectives, this article paves the way for future research to explore how students perceive the integration of ChatGPT into their educational pathways, examining in particular the influence of this technology on their autonomous learning (memorization, creativity, assimilation and comprehension) and their capacity for critical analysis. Indeed, the majority of respondents believe that ChatGPT could weaken critical thinking and the ability to reflect and solve problems autonomously, particularly when students become too dependent on AI-generated suggestions. These aspects call for more detailed exploration, particularly of the impact of the tool on students’ academic performance and results. Studying ChatGPT’s impact on students’ motivation and engagement, as well as their ability to learn self-discipline and digital ethics, could also enrich the understanding of the use of this technology in an educational context (Pradana et al. 2023).

In conclusion, this exploratory study marks the starting point for a structured research project articulated in several phases, each aimed at deepening our understanding of this pedagogical revolution. The first phase will consist of a large-scale qualitative study of a wide range of teachers (permanent or part-time teachers, with or without research activity). This survey will enable us not only to refine the conceptual model, but also to question the uses of ChatGPT, whether in professional or personal dimensions. It will also provide an opportunity to draw up typical teacher-user profiles, the outlines of which are already emerging from this exploratory study. The second phase will focus on students’ perceptions and practices through a quantitative study. The aim of this phase will be to shed light, from a dual perspective, on the dynamics of appropriating generative AI by comparing the views of teachers and students.

We are convinced that this ambitious study program will pave the way for a more holistic and in-depth understanding of the pedagogical mutations underway