Static vs. Immersive: A Neuromarketing Exploratory Study of Augmented Reality on Packaging Labels

Abstract

1. Introduction

1.1. The Application of Augmented Reality in Marketing and Packaging

1.2. Static vs. Immersive

1.3. AR Effects on Consumer Dimensions

1.4. Research Gap

1.5. Hypotheses Development

2. Methods

2.1. Sample

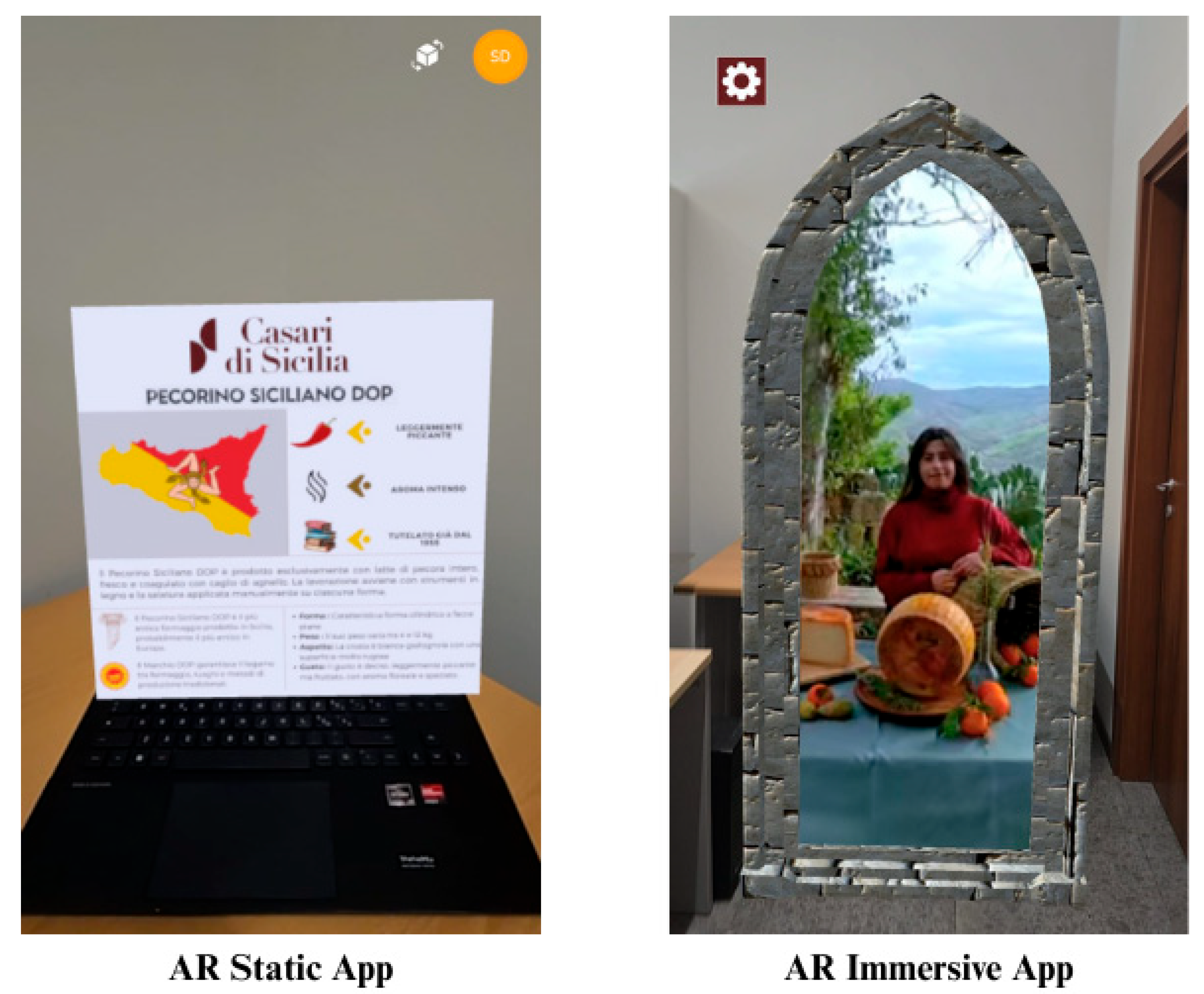

2.2. Materials

- Static AR: Content consisted of static pop-up images and text displayed above the label, giving details on the product. All information about the cheese was presented in written form and delivered passively, without any interaction with the augmented elements.

- Immersive AR: Content consisted of a virtual portal that appeared within the room. Users could walk through this portal to access a 360-degree video set inside a dairy farm. In the video, a dairy producer explained the product’s details. Participants could interact with the app by tapping predefined questions within the content; each tap triggered the video segment in which the cheesemaker answered the selected question.

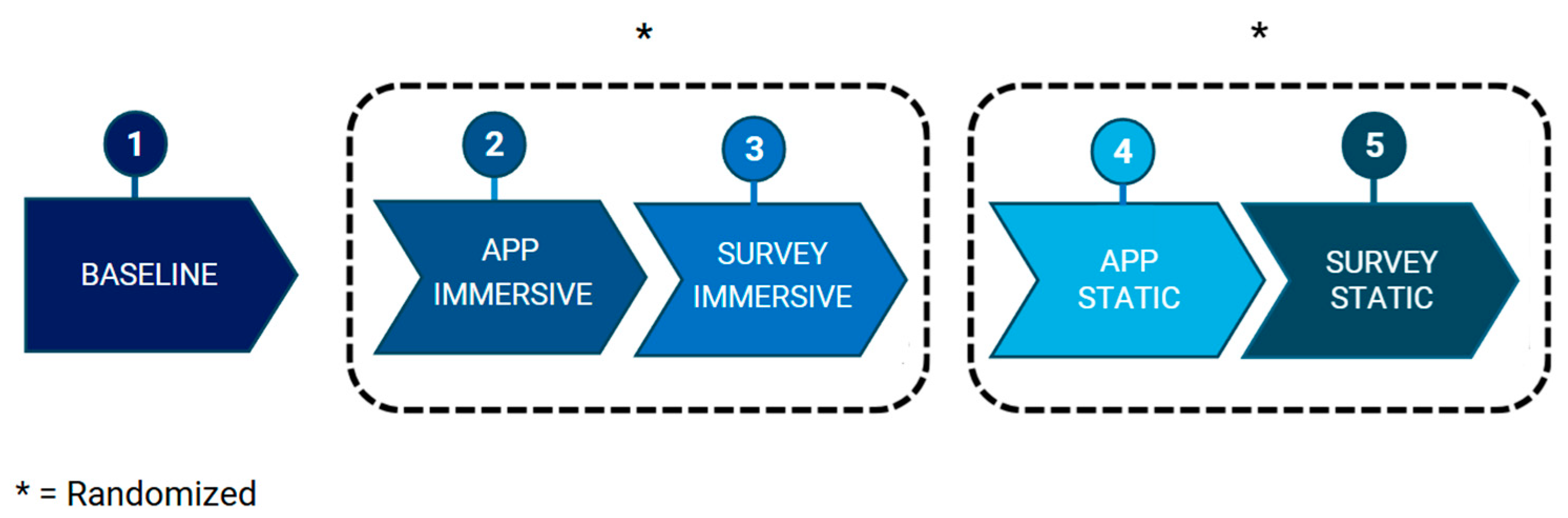

2.3. Experimental Design

2.4. Instrumentation

2.5. Neurophysiological Measures

2.5.1. Emotional Index (EI)

2.5.2. Beta/Alpha Theta Ratio (BATR)

2.6. Self-Report Measures

2.7. Protocol

- Task 1: The participant pointed the smartphone camera at the packaging label and interacted with the AR application for up to 5 min, for as long as they considered appropriate.

- Task 2: After the AR interaction, one of the two researchers accompanied the participant in completing a questionnaire on the AR interaction. The questionnaire lasted an average of 7 min.

2.8. Data Processing

2.9. Statistical Analysis

3. Results

3.1. Neurophysiological Results

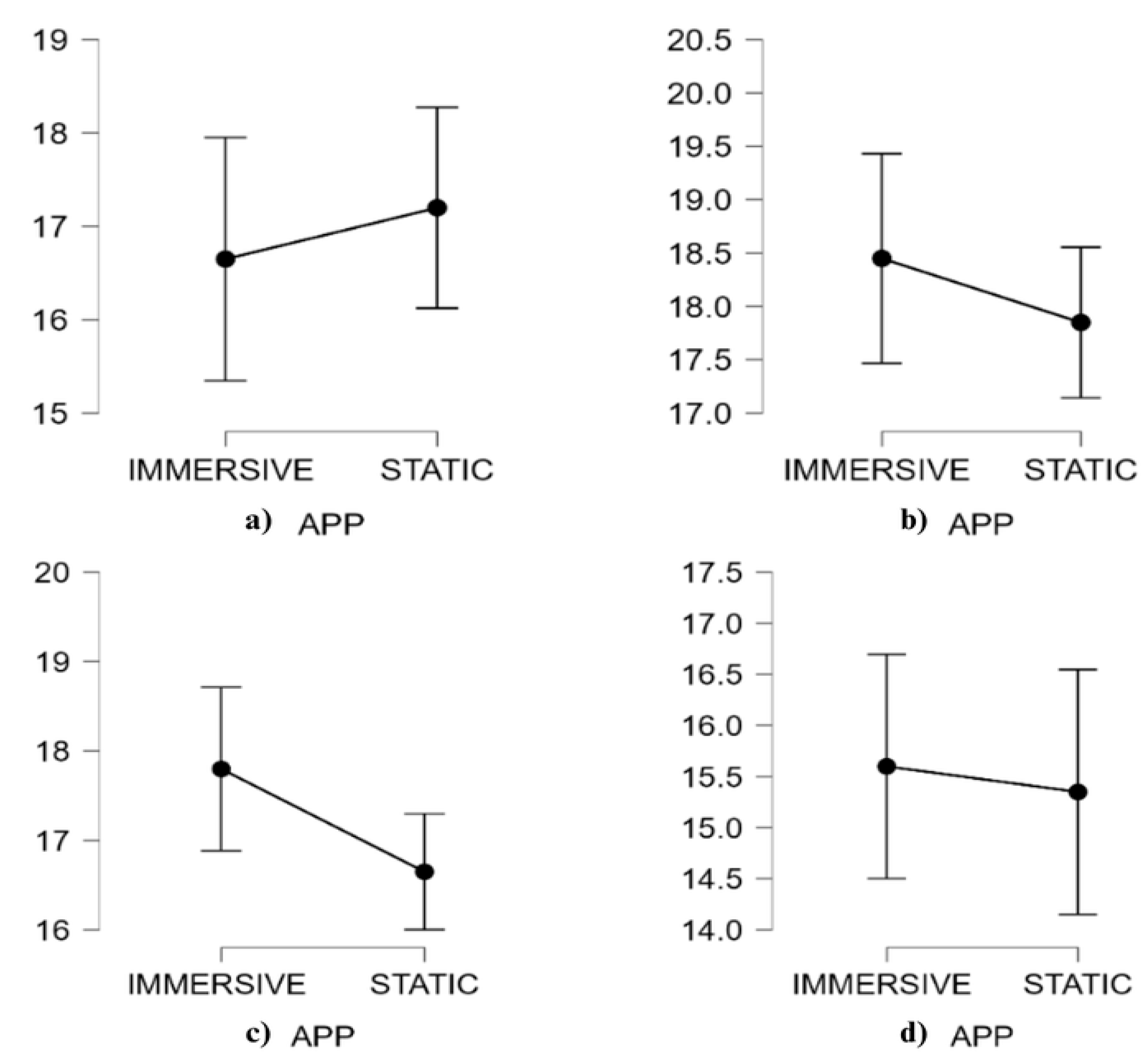

3.2. ARI Results

3.3. Self-Report Results

3.4. Correlation Results

4. Discussion

4.1. Immersive AR Engages Emotionally and Cognitively

4.2. Managerial Implications

4.3. Limitations and Future Directions

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AR | Augmented Reality |
| S | Static (AR) |
| I | Immersive (AR) |
| EI | Emotional Index |
| BATR | Beta/Alpha Theta Ratio |
| ARI | Augmented Reality Immersion questionnaire |
| PI | Perceived Informativeness |
| PBA | Perceived Brand Authenticity |
| PPA | Perceived Product Authenticity |
| ITB | Intention to Buy |

References

- Abao, R. P., Malabanan, C. V., & Galido, A. P. (2018). Design and development of FoodGo: A mobile application using situated analytics to augment product information. Procedia Computer Science, 135, 186–193.

- Abrash, M. (2021, December 11–16). Creating the future: Augmented reality, the next human-machine interface. 2021 IEEE International Electron Devices Meeting (IEDM) (pp. 1–11), San Francisco, CA, USA.

- Adhani, N. I., & Rambli, D. R. A. (2012, November 15). A survey of mobile augmented reality applications. 1st International Conference on Future Trends in Computing and Communication Technologies (pp. 89–96), Lahore, Pakistan.

- Alvino, L., Pavone, L., Abhishta, A., & Robben, H. (2020). Picking your brains: Where and how neuroscience tools can enhance marketing research. Frontiers in Neuroscience, 14, 577666.

- Antonioli, M., Blake, C., & Sparks, K. (2014). Augmented Reality Applications in Education. The Journal of Technology Studies, 40(2), 96–107.

- Archana, T., & Stephen, R. K. (2025). Foundations of augmented reality technology. In Virtual and augmented reality applications in the automobile industry (pp. 205–230). IGI Global Scientific Publishing.

- Barsom, E. Z., Graafland, M., & Schijven, M. P. (2016). Systematic review on the effectiveness of augmented reality applications in medical training. Surgical Endoscopy, 30, 4174–4183.

- Bilucaglia, M., Laureanti, R., Zito, M., Circi, R., Fici, A., Rivetti, F., Valesi, R., Wahl, S., & Russo, V. (2019, July 23–27). Looking through blue glasses: Bioelectrical measures to assess the awakening after a calm situation. 2019 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC) (pp. 526–529), Berlin, Germany.

- Bilucaglia, M., Laureanti, R., Zito, M., Circi, R., Fici, A., Russo, V., & Mainardi, L. T. (2021, November 1–5). It’s a question of methods: Computational factors influencing the frontal asymmetry in measuring the emotional valence. 2021 43rd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC) (pp. 575–578), Virtual, Mexico.

- Bokil, H., Andrews, P., Kulkarni, J. E., Mehta, S., & Mitra, P. P. (2010). Chronux: A platform for analyzing neural signals. Journal of Neuroscience Methods, 192(1), 146–151.

- Borghini, G., Aricò, P., Di Flumeri, G., Sciaraffa, N., & Babiloni, F. (2019). Correlation and similarity between cerebral and non-cerebral electrical activity for user’s states assessment. Sensors, 19(3), 704.

- Bosshard, S., & Walla, P. (2023). Sonic influence on initially neutral brands: Using EEG to unveil the secrets of audio evaluative conditioning. Brain Sciences, 13(10), 1393.

- Brislin, R. W. (1970). Back-translation for cross-cultural research. Journal of Cross-Cultural Psychology, 1(3), 185–216.

- Cao, J., Lam, K.-Y., Lee, L.-H., Liu, X., Hui, P., & Su, X. (2023). Mobile augmented reality: User interfaces, frameworks, and intelligence. ACM Computing Surveys, 55(9), 189.

- Chang, C. Y., Hsu, S. H., Pion-Tonachini, L., & Jung, T. P. (2018, July 18–21). Evaluation of artifact subspace reconstruction for automatic EEG artifact removal. 2018 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC) (pp. 1242–1245), Honolulu, HI, USA.

- Chatzopoulos, D., Bermejo, C., Huang, Z., & Hui, P. (2017). Mobile augmented reality survey: From where we are to where we go. IEEE Access, 5, 6917–6950.

- Clayson, P. E., Carbine, K. A., Shuford, J. L., McDonald, J. B., & Larson, M. J. (2025). A registered report of preregistration practices in studies of electroencephalogram (EEG) and event-related potentials (ERPs): A first look at accessibility, adherence, transparency, and selection bias. Cortex, 185, 253–269.

- Cohen, J. (2013). Statistical power analysis for the behavioral sciences. Routledge.

- Dall’Olio, L., Curti, N., Remondini, D., Safi Harb, Y., Asselbergs, F. W., Castellani, G., & Uh, H. W. (2020). Prediction of vascular aging based on smartphone acquired PPG signals. Scientific Reports, 10(1), 19756.

- Dawson, M. E., Schell, A. M., & Filion, D. L. (2007). The electrodermal system. In Handbook of psychophysiology (Vol. 2, pp. 200–223). Cambridge University Press.

- de Amorim, I. P., Guerreiro, J., Eloy, S., & Loureiro, S. M. C. (2022). How augmented reality media richness influences consumer behaviour. International Journal of Consumer Studies, 46(6), 2351–2366.

- Delorme, A., & Makeig, S. (2004). EEGLAB: An open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. Journal of Neuroscience Methods, 134(1), 9–21.

- Diaz, C., Hincapié, M., & Moreno, G. (2015). How the type of content in educative augmented reality application affects the learning experience. Procedia Computer Science, 75, 205–212.

- Dong, L., Li, F., Liu, Q., Wen, X., Lai, Y., Xu, P., & Yao, D. (2017). MATLAB toolboxes for reference electrode standardization technique (REST) of scalp EEG. Frontiers in Neuroscience, 11, 601.

- Fan, X., Jiang, X., & Deng, N. (2022). Immersive technology: A meta-analysis of augmented/virtual reality applications and their impact on tourism experience. Tourism Management, 91, 104534.

- Farida, I., & Clark, I. (2024). Using multimedia tools to enhance cognitive engagement: A comparative study in secondary education. Scientechno: Journal of Science and Technology, 3(3), 318–327.

- Farshid, M., Paschen, J., Eriksson, T., & Kietzmann, J. (2018). Go boldly!: Explore augmented reality (AR), virtual reality (VR), and mixed reality (MR) for business. Business Horizons, 61(5), 657–663.

- Faul, F., Erdfelder, E., Buchner, A., & Lang, A. G. (2009). Statistical power analyses using G*Power 3.1: Tests for correlation and regression analyses. Behavior Research Methods, 41(4), 1149–1160.

- Fici, A., Bilucaglia, M., Casiraghi, C., Rossi, C., Chiarelli, S., Columbano, M., Micheletto, V., Zito, M., & Russo, V. (2024). From E-commerce to the metaverse: A neuroscientific analysis of digital consumer behavior. Behavioral Sciences, 14(7), 596.

- Freeman, F. G., Mikulka, P. J., Prinzel, L. J., & Scerbo, M. W. (1999). Evaluation of an adaptive automation system using three EEG indices with a visual tracking task. Biological Psychology, 50(1), 61–76.

- Garczarek-Bąk, U., Szymkowiak, A., Gaczek, P., & Disterheft, A. (2021). A comparative analysis of neuromarketing methods for brand purchasing predictions among young adults. Journal of Brand Management, 28(2), 171–185.

- Georgiou, Y., & Kyza, E. A. (2017). The development and validation of the ARI questionnaire: An instrument for measuring immersion in location-based augmented reality settings. International Journal of Human-Computer Studies, 98, 24–37.

- Greco, A., Valenza, G., Lanata, A., Scilingo, E. P., & Citi, L. (2015). cvxEDA: A convex optimization approach to electrodermal activity processing. IEEE Transactions on Biomedical Engineering, 63(4), 797–804.

- Hagtvedt, H., & Chandukala, S. R. (2023). Immersive retailing: The in-store experience. Journal of Retailing, 99(4), 505–517.

- Holdack, E., Lurie-Stoyanov, K., & Fromme, H. F. (2022). The role of perceived enjoyment and perceived informativeness in assessing the acceptance of AR wearables. Journal of Retailing and Consumer Services, 65, 102259.

- Hu, S., Lai, Y., Valdes-Sosa, P. A., Bringas-Vega, M. L., & Yao, D. (2018). How do reference montage and electrodes setup affect the measured scalp EEG potentials? Journal of Neural Engineering, 15(2), 026013.

- Hubert, M., & Kenning, P. (2008). A current overview of consumer neuroscience. Journal of Consumer Behaviour: An International Research Review, 7(4–5), 272–292.

- Hultén, B. (2011). Sensory marketing: The multi-sensory brand-experience concept. European Business Review, 23(3), 256–273.

- Hyvärinen, A., & Oja, E. (2000). Independent component analysis: Algorithms and applications. Neural Networks, 13(4–5), 411–430.

- Irshad, S., & Awang, D. R. B. (2016, August 15–17). User perception on mobile augmented reality as a marketing tool. 2016 3rd International Conference on Computer and Information Sciences (ICCOINS) (pp. 109–113), Kuala Lumpur, Malaysia.

- Javornik, A. (2016). Augmented reality: Research agenda for studying the impact of its media characteristics on consumer behaviour. Journal of Retailing and Consumer Services, 30, 252–261.

- Javornik, A., Kostopoulou, E., Rogers, Y., Fatah gen Schieck, A., Koutsolampros, P., Maria Moutinho, A., & Julier, S. (2019). An experimental study on the role of augmented reality content type in an outdoor site exploration. Behaviour & Information Technology, 38(1), 9–27.

- Juan, M. C., Charco, J. L., García-García, I., & Mollá, R. (2019). An augmented reality app to learn to interpret the nutritional information on labels of real packaged foods. Frontiers in Computer Science, 1, 1.

- Kim, J., Ko, E., Lee, H., Shim, W., Kang, W., & Kim, J. (2019). A study on the application of packaging and augmented reality as a marketing tool. Korean Journal of Packaging Science & Technology, 25(2), 37–45.

- Kleckner, I. R., Jones, R. M., Wilder-Smith, O., Wormwood, J. B., Akcakaya, M., Quigley, K. S., Lord, C., & Goodwin, M. S. (2017). Simple, transparent, and flexible automated quality assessment procedures for ambulatory electrodermal activity data. IEEE Transactions on Biomedical Engineering, 65(7), 1460–1467.

- Klimesch, W. (1997). EEG-alpha rhythms and memory processes. International Journal of Psychophysiology, 26(1–3), 319–340.

- Krugliak, A., & Clarke, A. (2022). Towards real-world neuroscience using mobile EEG and augmented reality. Scientific Reports, 12(1), 2291.

- Laureanti, R., Bilucaglia, M., Zito, M., Circi, R., Fici, A., Rivetti, F., Valesi, R., Wahl, S., Mainardi, L. T., & Russo, V. (2021, November 1–5). Yellow (lens) better: Bioelectrical and biometrical measures to assess arousing and focusing effects. 2021 43rd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC) (pp. 6163–6166), Virtual, Mexico.

- Liao, L. X., Corsi, A. M., Chrysochou, P., & Lockshin, L. (2015). Emotional responses towards food packaging: A joint application of self-report and physiological measures of emotion. Food Quality and Preference, 42, 48–55.

- Love, J., Selker, R., Marsman, M., Jamil, T., Dropmann, D., Verhagen, J., Ly, A., Gronau, Q. F., Šmíra, M., Epskamp, S., Matzke, D., Wild, A., Knight, P., Rouder, J. N., Morey, R. D., & Wagenmakers, E.-J. (2019). JASP: Graphical statistical software for common statistical designs. Journal of Statistical Software, 88, 1–17.

- Mayer, R. E. (2005). Cognitive theory of multimedia learning. In The Cambridge handbook of multimedia learning (Vol. 41, pp. 31–48). Cambridge University Press.

- McDonald, R. P. (1999). Test theory: A unified treatment. Lawrence Erlbaum.

- Micheletto, V., Accardi, S., Fici, A., Piccoli, F., Rossi, C., Bilucaglia, M., Russo, V., & Zito, M. (2025). Enjoy it! Cosmetic try-on apps and augmented reality, the impact of enjoyment, informativeness and ease of use. Frontiers in Virtual Reality, 6, 1515937.

- Missaglia, A. L., Oppo, A., Mauri, M., Ghiringhelli, B., Ciceri, A., & Russo, V. (2017). The impact of emotions on recall: An empirical study on social ads. Journal of Consumer Behaviour, 16(5), 424–433.

- Modica, E., Cartocci, G., Rossi, D., Levy, A. C. M., Cherubino, P., Maglione, A. G., Di Flumeri, G., Mancini, M., Montanari, M., Perrotta, D., Di Feo, P., Vozzi, A., Ronca, V., Aricò, P., & Babiloni, F. (2018). Neurophysiological responses to different product experiences. Computational Intelligence and Neuroscience, 2018, 9616301.

- Nakamura, J., & Csikszentmihalyi, M. (2009). Flow theory and research. In The Oxford handbook of positive psychology. Oxford University Press.

- Nardelli, M., Valenza, G., Greco, A., Lanata, A., & Scilingo, E. P. (2015). Recognizing emotions induced by affective sounds through heart rate variability. IEEE Transactions on Affective Computing, 6(4), 385–394.

- Nhan, V. K., Dung, H. T., & Vu, N. T. (2022). A conceptual model for studying the immersive mobile augmented reality application-enhanced experience. Heliyon, 8(8), e10141.

- Nunnally, J. C. (1978). Psychometric theory. McGraw-Hill.

- Park, J., Lee, J. M., Xiong, V. Y., Septianto, F., & Seo, Y. (2021). David and goliath: When and why micro-influencers are more persuasive than mega-influencers. Journal of Advertising, 50(5), 584–602.

- Penco, L., Serravalle, F., Profumo, G., & Viassone, M. (2021). Mobile augmented reality as an internationalization tool in the “Made in Italy” food and beverage industry. Journal of Management and Governance, 25, 1179–1209.

- Petit, O., Velasco, C., & Spence, C. (2018). Multisensory consumer-packaging interaction (CPI): The role of new technologies. In Multisensory packaging: Designing new product experiences (pp. 349–374). Springer International Publishing.

- Petty, R. E., & Cacioppo, J. T. (1986). The elaboration likelihood model of persuasion. In Advances in experimental social psychology (Vol. 19, pp. 123–205). Academic Press.

- Pion-Tonachini, L., Kreutz-Delgado, K., & Makeig, S. (2019). ICLabel: An automated electroencephalographic independent component classifier, dataset, and website. NeuroImage, 198, 181–197.

- Pope, A. T., Bogart, E. H., & Bartolome, D. S. (1995). Biocybernetic system evaluates indices of operator engagement in automated task. Biological Psychology, 40(1–2), 187–195.

- Pozharliev, R., De Angelis, M., & Rossi, D. (2022). The effect of augmented reality versus traditional advertising: A comparison between neurophysiological and self-reported measures. Marketing Letters, 33(1), 113–128.

- Qin, H., Peak, D. A., & Prybutok, V. (2021). A virtual market in your pocket: How does mobile augmented reality (MAR) influence consumer decision making? Journal of Retailing and Consumer Services, 58, 102337.

- Raman, R., Mandal, S., Gunasekaran, A., Papadopoulos, T., & Nedungadi, P. (2025). Transforming business management practices through metaverse technologies: A Machine Learning approach. International Journal of Information Management Data Insights, 5(1), 100335.

- Rauschnabel, P. A., Felix, R., & Hinsch, C. (2019). Augmented reality marketing: How mobile AR-apps can improve brands through inspiration. Journal of Retailing and Consumer Services, 49, 43–53.

- Rosado-Pinto, F., & Loureiro, S. M. C. (2024). Authenticity: Shedding light on the branding context. EuroMed Journal of Business, 19(3), 544–570.

- Russo, V., Bilucaglia, M., Casiraghi, C., Chiarelli, S., Columbano, M., Fici, A., Rivetti, F., Rossi, C., Valesi, R., & Zito, M. (2023). Neuroselling: Applying neuroscience to selling for a new business perspective. An analysis on teleshopping advertising. Frontiers in Psychology, 14, 1238879.

- Russo, V., Bilucaglia, M., Circi, R., Bellati, M., Valesi, R., Laureanti, R., Licitra, G., & Zito, M. (2022a). The role of the emotional sequence in the communication of the territorial cheeses: A neuromarketing approach. Foods, 11(15), 2349.

- Russo, V., Bilucaglia, M., & Zito, M. (2022b). From virtual reality to augmented reality: A neuromarketing perspective. Frontiers in Psychology, 13, 965499.

- Russo, V., Zito, M., Bilucaglia, M., Circi, R., Bellati, M., Marin, L. E. M., Catania, E., & Licitra, G. (2021). Dairy products with certification marks: The role of territoriality and safety perception on intention to buy. Foods, 10, 2352.

- Scholkmann, F., Boss, J., & Wolf, M. (2012). An efficient algorithm for automatic peak detection in noisy periodic and quasi-periodic signals. Algorithms, 5(4), 588–603.

- Scholz, J., & Duffy, K. (2018). We ARe at home: How augmented reality reshapes mobile marketing and consumer-brand relationships. Journal of Retailing and Consumer Services, 44, 11–23.

- Scholz, J., & Smith, A. N. (2016). Augmented reality: Designing immersive experiences that maximize consumer engagement. Business Horizons, 59(2), 149–161.

- Silayoi, P., & Speece, M. (2007). The importance of packaging attributes: A conjoint analysis approach. European Journal of Marketing, 41(11/12), 1495–1517.

- Sonnenberg, C., Cudmore, B. A., & Swain, S. D. (2025). An approach for dynamic testing of augmented reality in retail contexts. International Journal of Technology Marketing, 19(2), 243–268.

- Souza, R. H. C. E., & Naves, E. L. M. (2021). Attention detection in virtual environments using EEG signals: A scoping review. Frontiers in Physiology, 12, 727840.

- Specht, M., Ternier, S., & Greller, W. (2011). Mobile augmented reality for learning: A case study. Journal of the Research Center for Educational Technology, 7(1), 117–127.

- Statista. (2024). Statista Report. Available online: https://www.statista.com/statistics/1098630/global-mobile-augmented-reality-ar-users/ (accessed on 19 July 2025).

- Stein, B. E., & Stanford, T. R. (2008). Multisensory integration: Current issues from the perspective of the single neuron. Nature Reviews Neuroscience, 9(4), 255–266.

- Sung, E. C. (2021). The effects of augmented reality mobile app advertising: Viral marketing via shared social experience. Journal of Business Research, 122, 75–87.

- Sung, E. C., Han, D. I. D., Choi, Y. K., Gillespie, B., Couperus, A., & Koppert, M. (2023). Augmented digital human vs. human agents in storytelling marketing: Exploratory electroencephalography and experimental studies. Psychology & Marketing, 40(11), 2428–2446.

- Sungkur, R. K., Panchoo, A., & Bhoyroo, N. K. (2016). Augmented reality, the future of contextual mobile learning. Interactive Technology and Smart Education, 13(2), 123–146.

- Szucs, D., & Ioannidis, J. P. (2017). Empirical assessment of published effect sizes and power in the recent cognitive neuroscience and psychology literature. PLoS Biology, 15, e2000797.

- Tabaeeian, R. A., Hossieni, F. A., Fatehi, M., & Forghani Tehrani, A. (2024). Investigating the effect of augmented reality packaging on behavioral intentions in traditional Iranian nougat GAZ packaging. British Food Journal, 126(6), 2438–2453.

- Tan, Y. C., Chandukala, S. R., & Reddy, S. K. (2022). Augmented reality in retail and its impact on sales. Journal of Marketing, 86(1), 48–66.

- Tseng, C. H., & Wei, L. F. (2020). The efficiency of mobile media richness across different stages of online consumer behavior. International Journal of Information Management, 50, 353–364.

- Vecchiato, G., Cherubino, P., Maglione, A. G., Ezquierro, M. T. H., Marinozzi, F., Bini, F., Trettel, A., & Babiloni, F. (2014). How to measure cerebral correlates of emotions in marketing relevant tasks. Cognitive Computation, 6, 856–871.

- Voicu, M. C., Sîrghi, N., & Toth, D. M. M. (2023). Consumers’ experience and satisfaction using augmented reality apps in E-shopping: New empirical evidence. Applied Sciences, 13(17), 9596.

- Wimmer, M., Pepicelli, A., Volmer, B., ElSayed, N., Cunningham, A., Thomas, B. H., Müller-Putz, G. R., & Veas, E. E. (2025). Counting on AR: EEG responses to incongruent information with real-world context. Computers in Biology and Medicine, 185, 109483.

- World Medical Association. (2013). World Medical Association Declaration of Helsinki: Ethical principles for medical research involving human subjects. JAMA, 310(20), 2191–2194.

- Wu, J., Zhang, D., Liu, T., Yang, H. H., Wang, Y., Yao, H., & Zhao, S. (2022). Usability evaluation of augmented reality: A neuro-information-systems study. Journal of Visualized Experiments, 189, e64667.

- Xu, X., & Sui, L. (2021). EEG cortical activities and networks altered by watching 2D/3D virtual reality videos. Journal of Psychophysiology, 36(1), 4–12.

- Yim, M. Y. C., Chu, S. C., & Sauer, P. L. (2017). Is augmented reality technology an effective tool for e-commerce? An interactivity and vividness perspective. Journal of Interactive Marketing, 39(1), 89–103.

| Demographic Characteristics | | | |
|---|---|---|---|
| Variables | Category | Static AR | Immersive AR |
| Gender | Male | 5 | 5 |
| | Female | 5 | 5 |
| Age | Mean | 45.80 | 41.90 |
| | SD | 9.73 | 8.44 |

| Constructs | Item * | References |
|---|---|---|
| Engagement | I liked the activity because it was novel | Adopted and revised from Augmented Reality Immersion questionnaire (Georgiou & Kyza, 2017) |
| | I liked the type of the activity | |
| | I wanted to spend the time to complete the activity successfully | |
| | I wanted to spend time to participate in the activity | |
| | It was easy for me to use the AR application | |
| | I found the AR application confusing | |
| | The AR application was unnecessarily complex | |
| | I did not have difficulties in controlling the AR application | |
| Engrossment | I was curious about how the activity would progress | |
| | I was often excited since I felt I was part of the activity | |
| | I often felt suspense in the activity | |
| | If interrupted, I looked forward to returning to the activity | |
| | Everyday thoughts and concerns faded out during the activity | |
| | I was more focused on the activity rather on any external distraction | |
| Total Immersion | The activity felt so authentic that it made me think that the virtual objects existed for real | |
| | I felt that what I was experiencing was something real, instead of a fictional activity | |
| | I was so involved in the activity that in some cases I wanted to interact with the virtual objects directly | |
| | I so was involved that I felt that my actions could affect the activity | |
| | I did not have any irrelevant thoughts or external distractions during the activity | |
| | The activity became the unique and only thought occupying my mind | |
| | I lost track of time, as if everything just stopped, and the only thing that I could think about was the activity | |
| Perceived Informativeness | The AR app provides complete information about the cheese | Adopted and revised from (Holdack et al., 2022) |
| | The AR app provides information that helps me in my buying decision | |
| | The AR app provides information to compare products. | |
| Perceived Brand Authenticity | The brand of the app is genuine | Adopted and revised from (Park et al., 2021) |
| | The brand of the app is authentic | |
| | The brand of the app is real | |
| Perceived Product Authenticity | The product of the app is genuine | |
| | The product of the app is authentic | |
| | The product of the app is real | |
| Intention to Buy | I would like to try this product | Adopted and revised from (Russo et al., 2021) |
| | I would buy this product if I happened to see it | |
| | I would actively seek out this product in a store in order to purchase it | |

| Constructs | No. of items | M | SD | Cronbach’s α | McDonald’s ω |
|---|---|---|---|---|---|
| Engagement | 8 | 36.98 | 7.08 | 0.68 | 0.76 |
| Engrossment | 6 | 28.15 | 9.01 | 0.93 | 0.94 |
| Total Immersion | 7 | 30.68 | 9.71 | 0.86 | 0.86 |
| Perceived Informativeness | 3 | 16.93 | 2.90 | 0.60 | 0.61 |
| Perceived Brand Authenticity | 3 | 18.15 | 2.99 | 0.91 | 0.93 |
| Perceived Product Authenticity | 3 | 17.23 | 3.25 | 0.84 | 0.85 |
| Intention to Buy | 3 | 15.48 | 4.07 | 0.90 | 0.91 |
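
As a minimal sketch of how a Cronbach’s α value like those in the table above can be obtained from item-level responses, the function below applies the standard formula α = k/(k − 1) · (1 − Σ item variances / variance of the summed score). The `ratings` data are hypothetical and are not taken from the study; the function name `cronbach_alpha` is likewise illustrative, and the study itself cites JASP for its statistics.

```python
from statistics import variance


def cronbach_alpha(item_scores):
    """Cronbach's alpha for a list of respondents, each a list of k item ratings."""
    k = len(item_scores[0])
    # Sample variance of each item across respondents
    item_vars = [variance([row[i] for row in item_scores]) for i in range(k)]
    # Sample variance of the summed scale score
    total_var = variance([sum(row) for row in item_scores])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


# Hypothetical ratings from five respondents on a three-item scale
# (illustrative only, not the study's data)
ratings = [
    [6, 6, 5],
    [5, 5, 5],
    [7, 6, 6],
    [4, 5, 3],
    [6, 7, 6],
]

print(f"alpha = {cronbach_alpha(ratings):.2f}")
```

Values near 1 indicate that the items vary together; a scale whose items are only loosely related yields a lower α.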

| Dependent Variables | Static (p-value of Shapiro-Wilk) | Immersive (p-value of Shapiro-Wilk) |
|---|---|---|
| Engagement | 0.540 | 0.180 |
| Engrossment | 0.158 | 0.248 |
| Total Immersion | 0.326 | 0.849 |
| Perceived Informativeness | 0.167 | 0.088 |
| Perceived Brand Authenticity | 0.002 | 0.001 |
| Perceived Product Authenticity | 0.280 | 0.002 |
| Intention to Buy | 0.001 | 0.004 |
| Emotional Index | 0.270 | 0.006 |
| BATR | 0.431 | 0.306 |

| AR Content | BATR M | BATR SD | BATR 95% CI Min | BATR 95% CI Max | EI M | EI SD | EI 95% CI Min | EI 95% CI Max |
|---|---|---|---|---|---|---|---|---|
| Static | −0.88 | 0.75 | −1.23 | −0.53 | 0.30 | 0.37 | 0.13 | 0.47 |
| Immersive | −0.55 | 0.88 | −0.97 | −0.14 | 0.50 | 0.36 | 0.33 | 0.67 |
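
The 95% CI bounds in the BATR and EI table above are consistent with a t-based interval, M ± t · SD/√n. The sketch below reproduces two of them under one assumption that is not stated in this table: n = 20 per condition, inferred from df = 18 (that is, n − 2) in the correlation matrices. The critical value 2.093 is the standard two-tailed 95% t value for df = 19; the function name `ci95` is illustrative.

```python
from math import sqrt

# Two-tailed 95% critical t for df = 19 (standard table value).
# Assumes n = 20 per condition, inferred from df = 18 in the correlation matrices.
T_CRIT_DF19 = 2.093


def ci95(mean, sd, n=20, t_crit=T_CRIT_DF19):
    """Return the (lower, upper) bounds of a t-based 95% confidence interval."""
    margin = t_crit * sd / sqrt(n)
    return mean - margin, mean + margin


# Summary statistics taken from the Static row of the table
for label, m, sd in [("BATR, Static", -0.88, 0.75), ("EI, Static", 0.30, 0.37)]:
    lower, upper = ci95(m, sd)
    print(f"{label}: [{lower:.2f}, {upper:.2f}]")
```

Because the inputs are already rounded to two decimals, a recomputed bound can differ from the tabulated one in the last digit for some rows.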

| Constructs | Static M | Static SD | Static 95% CI Min | Static 95% CI Max | Immersive M | Immersive SD | Immersive 95% CI Min | Immersive 95% CI Max |
|---|---|---|---|---|---|---|---|---|
| Engagement | 35.55 | 6.68 | 32.43 | 38.68 | 38.40 | 7.36 | 34.96 | 41.84 |
| Engrossment | 26.55 | 9.20 | 22.24 | 30.86 | 29.75 | 8.76 | 25.65 | 33.85 |
| Total Immersion | 28.35 | 9.46 | 23.93 | 32.78 | 33.00 | 9.64 | 28.49 | 37.5 |

| Constructs | Static M | Static SD | Static 95% CI Min | Static 95% CI Max | Immersive M | Immersive SD | Immersive 95% CI Min | Immersive 95% CI Max |
|---|---|---|---|---|---|---|---|---|
| Perceived Informativeness | 17.20 | 2.82 | 15.88 | 18.52 | 16.65 | 3.01 | 15.24 | 18.06 |
| Perceived Brand Authenticity | 17.85 | 2.83 | 16.52 | 19.18 | 18.45 | 3.19 | 16.96 | 19.94 |
| Perceived Product Authenticity | 16.65 | 3.13 | 15.18 | 18.12 | 17.80 | 3.35 | 16.23 | 19.37 |
| Intention to Buy | 15.35 | 4.30 | 13.34 | 17.36 | 15.60 | 3.94 | 13.76 | 17.44 |

| Correlation Matrix: Static Condition | | | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|
| | | Engagement | Engrossment | Total Immersion | PI | PBA | PPA | ITB | EI | BATR |
| Engagement | Pearson’s r | — | | | | | | | | |
| | df | — | | | | | | | | |
| | p-value | — | | | | | | | | |
| Engrossment | Pearson’s r | 0.854 *** | — | | | | | | | |
| | df | 18 | — | | | | | | | |
| | p-value | <0.001 | — | | | | | | | |
| Total Immersion | Pearson’s r | 0.685 *** | 0.716 *** | — | | | | | | |
| | df | 18 | 18 | — | | | | | | |
| | p-value | <0.001 | <0.001 | — | | | | | | |
| PI | Pearson’s r | 0.642 ** | 0.462 * | 0.441 | — | | | | | |
| | df | 18 | 18 | 18 | — | | | | | |
| | p-value | 0.002 | 0.040 | 0.051 | — | | | | | |
| PBA | Pearson’s r | 0.430 | 0.389 | 0.153 | 0.722 *** | — | | | | |
| | df | 18 | 18 | 18 | 18 | — | | | | |
| | p-value | 0.058 | 0.090 | 0.519 | <0.001 | — | | | | |
| PPA | Pearson’s r | 0.369 | 0.520 * | 0.292 | 0.634 ** | 0.776 *** | — | | | |
| | df | 18 | 18 | 18 | 18 | 18 | — | | | |
| | p-value | 0.109 | 0.019 | 0.211 | 0.003 | <0.001 | — | | | |
| ITB | Pearson’s r | 0.547 * | 0.735 *** | 0.539 * | 0.567 ** | 0.588 ** | 0.803 *** | — | | |
| | df | 18 | 18 | 18 | 18 | 18 | 18 | — | | |
| | p-value | 0.013 | <0.001 | 0.014 | 0.009 | 0.006 | <0.001 | — | | |
| EI | Pearson’s r | 0.015 | 0.002 | 0.030 | 0.293 | 0.140 | 0.180 | −0.078 | — | |
| | df | 18 | 18 | 18 | 18 | 18 | 18 | 18 | — | |
| | p-value | 0.949 | 0.992 | 0.899 | 0.211 | 0.557 | 0.449 | 0.745 | — | |
| BATR | Pearson’s r | 0.212 | 0.356 | −0.175 | 0.113 | 0.421 | 0.391 | 0.382 | 0.151 | — |
| | df | 18 | 18 | 18 | 18 | 18 | 18 | 18 | 18 | — |
| | p-value | 0.370 | 0.123 | 0.461 | 0.637 | 0.064 | 0.089 | 0.097 | 0.524 | — |
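
The link between each Pearson’s r, its df, and its p-value in the matrix above can be illustrated with the usual conversion t = r · √(df / (1 − r²)). The sketch below is only a worked check under stated assumptions: it uses the standard two-tailed 5% critical t for df = 18 (2.101) rather than computing exact p-values, and the helper names `r_to_t` and `critical_r` are illustrative.

```python
from math import sqrt

# Two-tailed 5% critical t for df = 18 (standard table value)
T_CRIT_DF18 = 2.101


def r_to_t(r, df):
    """Convert a Pearson correlation to its t statistic with df = n - 2."""
    return r * sqrt(df / (1 - r * r))


def critical_r(df, t_crit):
    """Smallest |r| that reaches significance for the given df and critical t."""
    return t_crit / sqrt(t_crit ** 2 + df)


# Two neighbouring values from the PI row of the static-condition matrix
for label, r in [("PI vs. Engrossment", 0.462), ("PI vs. Total Immersion", 0.441)]:
    t = r_to_t(r, 18)
    print(f"{label}: r = {r}, t = {t:.2f}, significant at 0.05: {abs(t) > T_CRIT_DF18}")

print(f"critical |r| for df = 18: {critical_r(18, T_CRIT_DF18):.3f}")
```

With df = 18 the threshold falls at roughly |r| = 0.44, which is why r = 0.462 is starred (p = 0.040) while r = 0.441 is not (p = 0.051).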

Correlation Matrix: Immersive Condition

| | | Engagement | Engrossment | Total Immersion | PI | PBA | PPA | ITB | EI | BATR |
|---|---|---|---|---|---|---|---|---|---|---|
| Engagement | Pearson’s r | — | | | | | | | | |
| | df | — | | | | | | | | |
| | p-value | — | | | | | | | | |
| Engrossment | Pearson’s r | 0.732 *** | — | | | | | | | |
| | df | 18 | — | | | | | | | |
| | p-value | <0.001 | — | | | | | | | |
| Total Immersion | Pearson’s r | 0.588 ** | 0.782 *** | — | | | | | | |
| | df | 18 | 18 | — | | | | | | |
| | p-value | 0.006 | <0.001 | — | | | | | | |
| PI | Pearson’s r | 0.351 | 0.312 | 0.475 * | — | | | | | |
| | df | 18 | 18 | 18 | — | | | | | |
| | p-value | 0.129 | 0.181 | 0.034 | — | | | | | |
| PBA | Pearson’s r | 0.537 * | 0.276 | 0.021 | 0.258 | — | | | | |
| | df | 18 | 18 | 18 | 18 | — | | | | |
| | p-value | 0.015 | 0.239 | 0.931 | 0.271 | — | | | | |
| PPA | Pearson’s r | 0.552 * | 0.280 | 0.080 | 0.269 | 0.916 *** | — | | | |
| | df | 18 | 18 | 18 | 18 | 18 | — | | | |
| | p-value | 0.012 | 0.232 | 0.738 | 0.251 | <0.001 | — | | | |
| ITB | Pearson’s r | 0.707 *** | 0.595 ** | 0.359 | 0.271 | 0.648 ** | 0.748 *** | — | | |
| | df | 18 | 18 | 18 | 18 | 18 | 18 | — | | |
| | p-value | <0.001 | 0.006 | 0.120 | 0.247 | 0.002 | <0.001 | — | | |
| EI | Pearson’s r | −0.227 | −0.063 | −0.020 | −0.120 | −0.097 | 0.010 | −0.198 | — | |
| | df | 18 | 18 | 18 | 18 | 18 | 18 | 18 | — | |
| | p-value | 0.337 | 0.792 | 0.932 | 0.614 | 0.685 | 0.966 | 0.404 | — | |
| BATR | Pearson’s r | 0.316 | 0.274 | 0.115 | 0.023 | 0.178 | 0.180 | 0.076 | 0.369 | — |
| | df | 18 | 18 | 18 | 18 | 18 | 18 | 18 | 18 | — |
| | p-value | 0.174 | 0.242 | 0.630 | 0.924 | 0.452 | 0.448 | 0.749 | 0.109 | — |
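
The p-values in the two correlation matrices follow from each Pearson's r and its degrees of freedom (df = n − 2 = 18). The sketch below illustrates that relationship using only the Python standard library. It is not the authors' analysis code: the function name `pearson_p_value`, the choice of Simpson's rule, and the bivariate-normality assumption behind the null density are all illustrative. Because the tabulated r values are rounded to three decimals, recomputed p-values can differ from the reported ones in the last digit.

```python
import math


def pearson_p_value(r: float, df: int, steps: int = 2000) -> float:
    """Two-sided p-value for Pearson's r under H0 (true correlation is zero).

    Uses the exact null density of r for bivariate-normal data,
    f(x) = (1 - x**2) ** (df / 2 - 1) / B(1/2, df/2), with df = n - 2,
    and integrates it over [|r|, 1] with composite Simpson's rule.
    This is equivalent to the usual t-test on t = r * sqrt(df / (1 - r**2)).
    """
    if df < 2:
        raise ValueError("this sketch needs df >= 2 so the density stays bounded")
    if steps % 2:
        steps += 1  # Simpson's rule needs an even number of intervals
    a = min(abs(r), 1.0)
    # Beta function B(1/2, df/2) expressed through gamma functions.
    beta = math.gamma(0.5) * math.gamma(df / 2) / math.gamma((df + 1) / 2)
    h = (1.0 - a) / steps

    def density(x: float) -> float:
        return max(0.0, 1.0 - x * x) ** (df / 2 - 1) / beta

    total = density(a) + density(1.0)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * density(a + i * h)
    # Upper-tail area, doubled for a two-sided test.
    return 2.0 * total * h / 3.0


# Pairs of (r, reported p) copied from the static-condition matrix.
for r, reported in [(0.462, "0.040"), (0.441, "0.051"), (-0.175, "0.461"), (0.854, "<0.001")]:
    print(f"r = {r:+.3f}, df = 18: p = {pearson_p_value(r, 18):.4f} (reported {reported})")
```

With df fixed at 18, the p-value depends only on the magnitude of r, which is why the same r would be flagged identically in both conditions.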

| Research Question (RQ) | Hypotheses | Associated Metrics | Type | Answer |
|---|---|---|---|---|
| RQ1: From a neurophysiological perspective, does the immersive AR on the packaging label of a product engage consumers differently compared to the static AR? | H1a: Immersive AR on packaging labels generates more emotional engagement compared to static AR. | EI | Neurophysiological | YES |
| | H1b: Immersive AR on packaging labels generates more cognitive engagement compared to static AR. | BATR | Neurophysiological | NO |
| RQ2: From a declarative perspective, does the immersive AR on the packaging label of a product change how the product is perceived compared to static AR? | H2a: Immersive AR on packaging labels generates more Perceived Informativeness (PI) compared to static AR. | PI | Declarative | NO |
| | H2b: Immersive AR on packaging labels generates more Perceived Brand Authenticity (PBA) compared to static AR. | PBA | Declarative | NO |
| | H2c: Immersive AR on packaging labels generates more Perceived Product Authenticity (PPA) compared to static AR. | PPA | Declarative | NO |
| | H2d: Immersive AR on packaging labels generates more Intention to Buy (ITB) compared to static AR. | ITB | Declarative | NO |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Accardi, S.; Campo, C.; Bilucaglia, M.; Zito, M.; Caccamo, M.; Russo, V. Static vs. Immersive: A Neuromarketing Exploratory Study of Augmented Reality on Packaging Labels. Behav. Sci. 2025, 15, 1241. https://doi.org/10.3390/bs15091241
