You Understand, So I Understand: How a “Community of Knowledge” Shapes Trust and Credibility in Expert Testimony Evidence

Abstract

1. Community of Knowledge

2. Source Credibility and the CK Effect

3. Scientific Information in the Courtroom

4. Current Studies

4.1. Study One

4.1.1. Study One Method

Study One Participants

Study One Design and Procedure

Study One Measures

4.1.2. Study One Results

Study One Expert Understanding

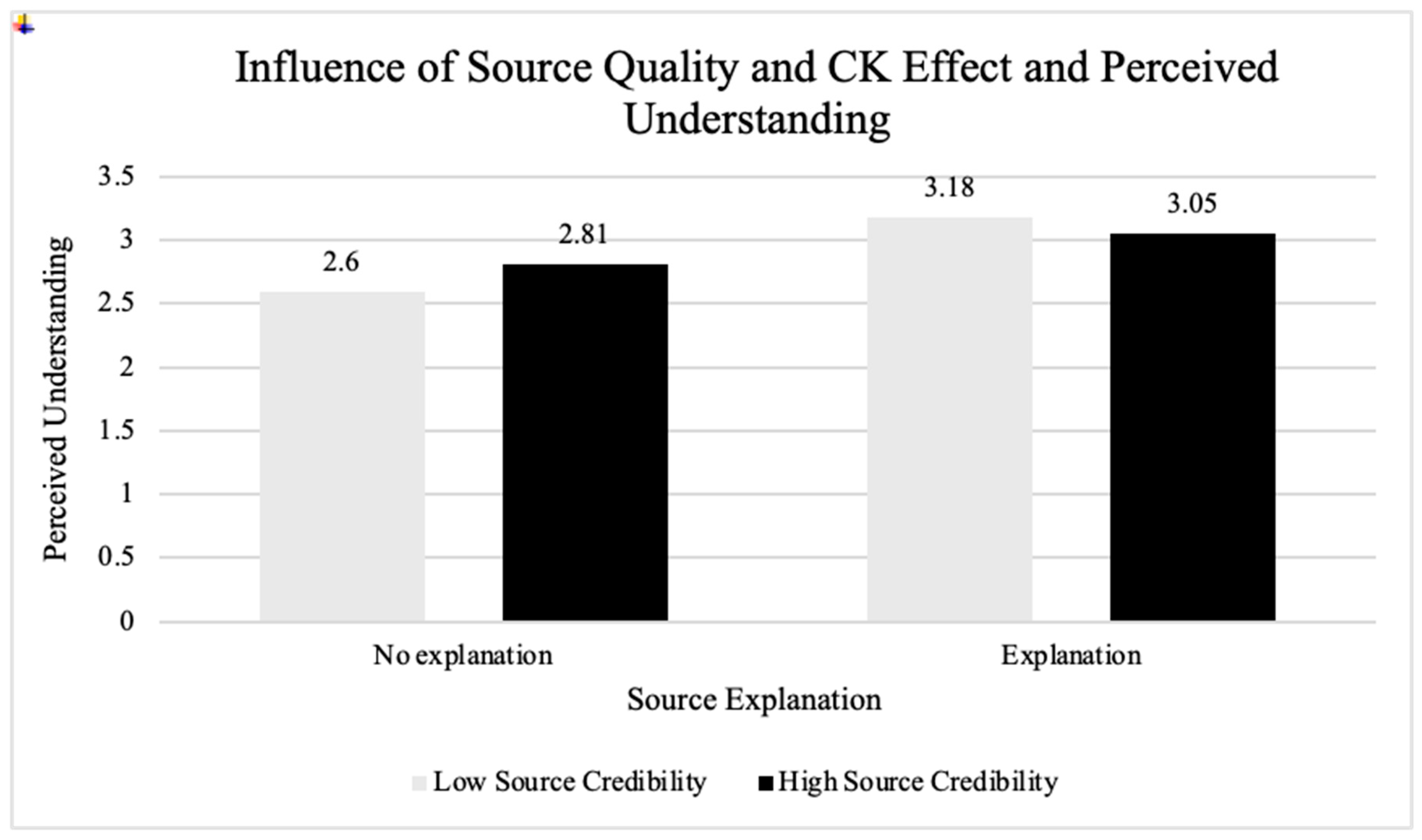

Study One Source Quality

Study One Expert Understanding vs. Source Quality

4.1.3. Study One Discussion

4.2. Study Two

4.2.1. Study Two Method

Study Two Participants

Study Two Design and Procedure

Study Two Witness Credibility Scale

Study Two Perceived Homophily Measure

Study Two Demographic Questionnaire

Study Two Researcher-Derived Questions

4.2.2. Study Two Results

Study Two Expert Understanding

Study Two Expert Credentials

Study Two Weight of Expert Testimony and Scientific Information

Study Two Mediating Role of Perceived Social Distance

Study Two Mediating Role of Perceived Credibility

Study Two Perceptions of Expert Opinion

4.2.3. Study Two Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Demographic | N | % |
|---|---|---|
| Sex | | |
| Male | 161 | 55.3 |
| Female | 130 | 44.7 |
| Political Views | | |
| Very Conservative | 17 | 5.8 |
| Somewhat Conservative | 35 | 12.0 |
| Moderate, Leaning Conservative | 18 | 6.2 |
| Moderate | 65 | 22.3 |
| Moderate, Leaning Liberal | 37 | 12.7 |
| Somewhat Liberal | 60 | 20.6 |
| Very Liberal | 59 | 20.3 |
| Race/Ethnicity | | |
| African American/Black | 24 | 8.2 |
| Middle Eastern/North African | 1 | <1 |
| Asian/Pacific Islander | 28 | 9.6 |
| White/Caucasian | 208 | 71.5 |
| Hispanic/Latino/Central/South American | 21 | 7.2 |
| Other | 9 | 3.1 |
| Education | | |
| High school graduate | 49 | 16.8 |
| Some college | 80 | 27.5 |
| Associate’s degree | 32 | 11.0 |
| Bachelor’s degree | 106 | 36.4 |
| Master’s degree | 20 | 6.9 |
| Doctoral degree | 4 | 1.4 |

| DV | M | SD |
|---|---|---|
| Rock Scenario | | |
| Perceived understanding | 1.62 | 1.01 |
| Perceived ability to explain | 1.62 | 1.07 |
| Believability | 2.30 | 1.17 |
| Trustworthiness | 2.61 | 1.20 |
| Received explanation | 0.49 | 0.50 |
| Stalactites Scenario | | |
| Perceived understanding | 1.57 | 0.96 |
| Perceived ability to explain | 1.48 | 0.92 |
| Believability | 2.83 | 1.06 |
| Trustworthiness | 3.01 | 1.02 |
| Received explanation | 0.50 | 0.50 |

| Demographic | N | % |
|---|---|---|
| Gender | | |
| Male | 162 | 40.9 |
| Female | 222 | 56.1 |
| Non-binary | 8 | 2.0 |
| Gender Fluid | 1 | <1 |
| Transgender Nonbinary | 1 | <1 |
| Prefer not to respond | 2 | <1 |
| Race | | |
| African American/Black | 67 | 16.9 |
| American Arab/Middle Eastern/North African | 2 | <1 |
| Asian/Asian American | 12 | 3.0 |
| Native American/Alaskan Native | 4 | 1.0 |
| White | 277 | 69.9 |
| Multiracial | 22 | 5.6 |
| Other | 6 | 1.5 |
| Prefer not to respond | 6 | 1.5 |
| Political affiliation | | |
| Democratic | 177 | 44.7 |
| Republican | 101 | 25.5 |
| Independent | 106 | 26.8 |
| Prefer not to respond | 12 | 3.0 |
| Ethnicity | | |
| Hispanic/Latine/Spanish | 43 | 10.9 |
| Not applicable | 352 | 88.9 |
| Prefer not to respond | 1 | <1 |
| Education | | |
| Less than high school diploma | 1 | <1 |
| High school degree/equivalent | 44 | 11.1 |
| Some college, no degree | 82 | 20.7 |
| Technical certificate or training | 8 | 2.0 |
| Associate’s degree | 39 | 9.8 |
| Bachelor’s degree | 144 | 36.4 |
| Master’s degree | 58 | 14.6 |
| Professional degree | 4 | 1.0 |
| Doctorate | 14 | 3.5 |
| Other | 1 | <1 |
| Prefer not to respond | 1 | <1 |

| Scale | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1. Understanding | - | - | - | - | - | - | - | - | - | - | - | - | - | - |
| 2. Ability to Explain | 0.86 *** | - | - | - | - | - | - | - | - | - | - | - | - | - |
| 3. Trust | 0.68 *** | 0.71 *** | - | - | - | - | - | - | - | - | - | - | - | - |
| 4. Believability | 0.56 *** | 0.56 *** | 0.72 *** | - | - | - | - | - | - | - | - | - | - | - |
| 5. Others’ Understanding | 0.68 *** | 0.67 *** | 0.67 *** | 0.58 *** | - | - | - | - | - | - | - | - | - | - |
| 6. Explanation | 0.56 *** | 0.55 *** | 0.49 *** | 0.39 *** | 0.45 *** | - | - | - | - | - | - | - | - | - |
| 7. WCS Likability | 0.12 * | 0.11 * | 0.14 ** | 0.14 ** | 0.15 ** | 0.12 * | - | - | - | - | - | - | - | - |
| 8. WCS Trust | 0.32 *** | 0.30 *** | 0.40 *** | 0.34 *** | 0.35 *** | 0.31 *** | 0.67 *** | - | - | - | - | - | - | - |
| 9. WCS Knowledge | 0.31 *** | 0.27 *** | 0.37 *** | 0.30 *** | 0.32 *** | 0.28 *** | 0.64 *** | 0.90 *** | - | - | - | - | - | - |
| 10. PHM Attitude | 0.29 *** | 0.29 *** | 0.36 *** | 0.25 *** | 0.30 *** | 0.28 *** | 0.29 *** | 0.14 ** | 0.46 *** | - | - | - | - | - |
| 11. PHM Background | 0.18 *** | 0.20 *** | 0.23 *** | 0.14 ** | 0.19 *** | 0.14 ** | 0.03 | 0.14 ** | 0.13 * | 0.39 *** | - | - | - | - |
| 12. Testimony Weight | 0.47 *** | 0.43 *** | 0.58 *** | 0.45 *** | 0.45 *** | 0.40 *** | 0.31 *** | 0.64 *** | 0.60 *** | 0.39 *** | 0.19 *** | - | - | - |
| 13. Science Weight | 0.52 *** | 0.52 *** | 0.70 *** | 0.59 *** | 0.54 *** | 0.47 *** | 0.22 *** | 0.54 *** | 0.48 *** | 0.43 *** | 0.21 *** | 0.77 *** | - | - |
| 14. Experience Weight | 0.30 *** | 0.28 *** | 0.39 *** | 0.32 *** | 0.31 *** | 0.26 *** | 0.35 *** | 0.58 *** | 0.59 *** | 0.32 *** | 0.10 | 0.68 *** | 0.56 *** | - |
| M | 1.82 | 1.72 | 1.84 | 2.02 | 2.07 | 2.18 | 29.94 | 27.50 | 30.18 | 14.49 | 13.26 | 40.66 | 32.48 | 50.54 |
| SD | 1.01 | 0.97 | 1.06 | 1.15 | 1.05 | 0.96 | 8.51 | 11.23 | 11.10 | 4.24 | 4.38 | 27.28 | 29.45 | 26.87 |

| DV | Path | b | SE | p | 95% CI Lower | 95% CI Upper |
|---|---|---|---|---|---|---|
| Perceived Understanding (PU) | Direct Effect | 0.228 | 0.051 | <0.001 * | 0.125 | 0.324 |
| | EU → PHM A → PU | 0.044 | 0.015 | 0.003 * | 0.019 | 0.078 |
| | EU → PHM B → PU | 0.001 | 0.006 | 0.927 | −0.011 | 0.014 |
| | Total Indirect Effect | 0.045 | 0.017 | 0.008 * | 0.013 | 0.080 |
| | Total Effect | 0.273 | 0.049 | <0.001 * | 0.173 | 0.365 |
| Trust | Direct Effect | 0.178 | 0.048 | <0.001 * | 0.081 | 0.272 |
| | EU → PHM A → Trust | 0.062 | 0.017 | <0.001 * | 0.032 | 0.100 |
| | EU → PHM B → Trust | 0.001 | 0.007 | 0.925 | −0.013 | 0.015 |
| | Total Indirect Effect | 0.063 | 0.020 | 0.002 * | 0.026 | 0.104 |
| | Total Effect | 0.241 | 0.048 | <0.001 * | 0.147 | 0.337 |
| Believability (Bel.) | Direct Effect | 0.116 | 0.049 | 0.019 * | 0.018 | 0.212 |
| | EU → PHM A → Bel. | 0.044 | 0.016 | 0.006 * | 0.018 | 0.082 |
| | EU → PHM B → Bel. | 0.001 | 0.004 | 0.939 | −0.007 | 0.011 |
| | Total Indirect Effect | 0.045 | 0.017 | 0.009 * | 0.015 | 0.082 |
| | Total Effect | 0.160 | 0.049 | 0.001 * | 0.064 | 0.256 |
| Ability to Explain (Ab. to Exp.) | Direct Effect | 0.149 | 0.051 | 0.003 * | 0.048 | 0.246 |
| | EU → PHM A → Ab. to Exp. | 0.048 | 0.016 | 0.002 * | 0.022 | 0.085 |
| | EU → PHM B → Ab. to Exp. | 0.001 | 0.007 | 0.926 | −0.012 | 0.015 |
| | Total Indirect Effect | 0.049 | 0.018 | 0.008 * | 0.015 | 0.087 |
| | Total Effect | 0.198 | 0.049 | <0.001 * | 0.099 | 0.291 |

| DV | Path | b | SE | p | 95% CI Lower | 95% CI Upper |
|---|---|---|---|---|---|---|
| Perceived Understanding (PU) | Direct Effect | 0.170 | 0.052 | 0.001 * | 0.070 | 0.273 |
| | EU → WCS L → PU | −0.017 | 0.011 | 0.113 | −0.048 | −0.003 |
| | EU → WCS T → PU | 0.082 | 0.037 | 0.029 * | 0.008 | 0.156 |
| | EU → WCS K → PU | 0.034 | 0.031 | 0.282 | −0.022 | 0.100 |
| | Total Indirect Effect | 0.098 | 0.019 | <0.001 * | 0.066 | 0.140 |
| | Total Effect | 0.268 | 0.049 | <0.001 * | 0.172 | 0.361 |
| Trust | Direct Effect | 0.095 | 0.047 | 0.044 * | 0.006 | 0.193 |
| | EU → WCS L → Trust | −0.024 | 0.013 | 0.054 | −0.055 | −0.005 |
| | EU → WCS T → Trust | 0.137 | 0.037 | <0.001 * | 0.065 | 0.210 |
| | EU → WCS K → Trust | 0.021 | 0.029 | 0.484 | −0.030 | 0.086 |
| | Total Indirect Effect | 0.133 | 0.023 | <0.001 * | 0.093 | 0.182 |
| | Total Effect | 0.228 | 0.047 | <0.001 * | 0.140 | 0.323 |
| Believability (Bel.) | Direct Effect | 0.039 | 0.049 | 0.430 | −0.057 | 0.136 |
| | EU → WCS L → Bel. | −0.017 | 0.010 | 0.099 | −0.046 | −0.003 |
| | EU → WCS T → Bel. | 0.131 | 0.040 | 0.001 | 0.055 | 0.211 |
| | EU → WCS K → Bel. | 0.002 | 0.030 | 0.960 | −0.054 | 0.066 |
| | Total Indirect Effect | 0.115 | 0.023 | <0.001 * | 0.075 | 0.166 |
| | Total Effect | 0.154 | 0.047 | 0.001 * | 0.062 | 0.246 |
| Ability to Explain (Ab. to Exp.) | Direct Effect | 0.094 | 0.051 | 0.066 | −0.005 | 0.196 |
| | EU → WCS L → Ab. to Exp. | −0.017 | 0.011 | 0.109 | −0.048 | −0.003 |
| | EU → WCS T → Ab. to Exp. | 0.101 | 0.036 | 0.005 * | 0.033 | 0.176 |
| | EU → WCS K → Ab. to Exp. | 0.014 | 0.031 | 0.646 | −0.044 | 0.079 |
| | Total Indirect Effect | 0.098 | 0.018 | <0.001 * | 0.067 | 0.138 |
| | Total Effect | 0.192 | 0.049 | <0.001 * | 0.099 | 0.288 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Jones, A.C.T.; Haga, M.R. You Understand, So I Understand: How a “Community of Knowledge” Shapes Trust and Credibility in Expert Testimony Evidence. Behav. Sci. 2025, 15, 1071. https://doi.org/10.3390/bs15081071
