Sorry, Am I Intruding? Comparing Performance and Intrusion Rates for Pretested and Posttested Information

Abstract

1. Introduction

1.1. Pretesting and Posttesting

1.2. True/False Testing

1.3. Pretesting and Posttesting with True/False Tests

1.4. The Present Experiments

2. Experiment 1

2.1. Methods

2.1.1. Participants and Design

2.1.2. Materials

2.1.3. Procedure

2.2. Results

2.2.1. Pretest and Posttest Performance

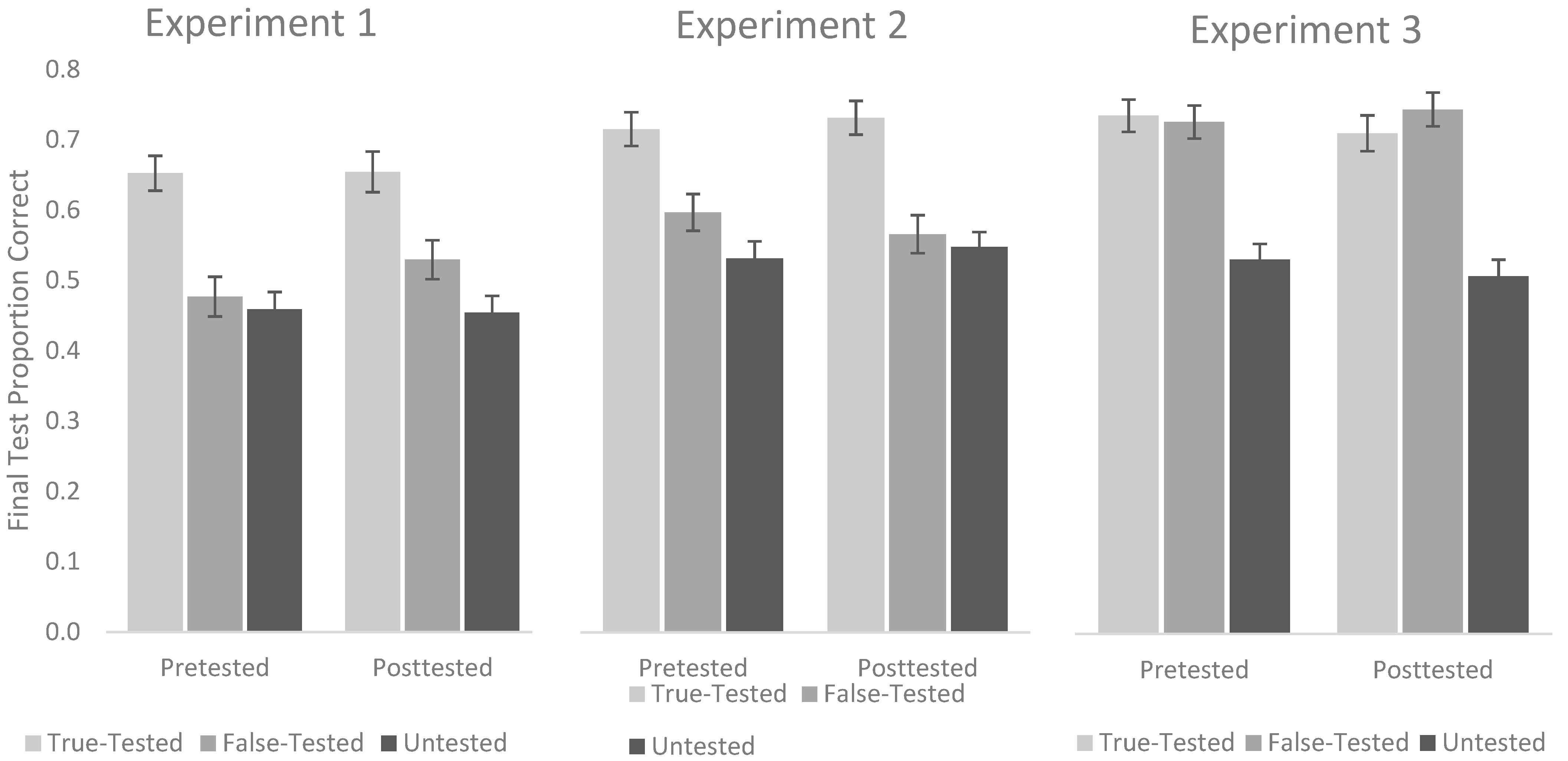

2.2.2. Final Test Performance

2.2.3. Final Test Intrusions

3. Experiment 2

3.1. Methods

3.1.1. Participants and Design

3.1.2. Materials and Procedure

3.2. Results

3.2.1. Pretest and Posttest Performance

3.2.2. Final Test Performance

3.2.3. Final Test Intrusions

4. Experiment 3

4.1. Methods

4.1.1. Participants and Design

4.1.2. Materials and Procedure

4.2. Results

4.2.1. Pretest and Posttest Performance

4.2.2. Final Test Performance

4.2.3. Final Test Intrusions

5. General Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

Passage: Joan of Arc

| No. | True Item | False Item | Cued Recall Question | Substantive Feedback (Exp 3 Only) |
| --- | --- | --- | --- | --- |
| 1 | Before Joan of Arc convinced the embattled crown prince Charles of Valois to allow her to lead an army, she had no military experience. | Before Joan of Arc convinced the embattled crown prince Charles of Valois to allow her to lead an army, she had 5 years of military experience. | Before Joan of Arc convinced the embattled crown prince Charles of Valois to allow her to lead an army, how much military experience did she have? ________________. | She had no military experience. |
| 2 | In 1920, Joan of Arc was officially canonized as a saint. | In 1920, Joan of Arc was officially recognized on a list of famous female warriors. | In 1920, Joan of Arc was officially ________________. | She was canonized. |
| 3 | Joan of Arc was born around the year 1412. | Joan of Arc was born around the year 1300. | Around what year was Joan of Arc born? __________________________. | She was born around 1412. |
| 4 | Joan of Arc’s father worked as a farmer. | Joan of Arc’s father worked as a tailor. | Her father worked as a ___________________________. | He worked as a farmer. |
| 5 | Joan’s mother instilled in her a deep love for the Catholic Church. | Joan’s mother instilled in her a deep love for books and scholarly pursuits. | Joan’s mother instilled in her a deep love for ___________________. | She instilled a love for the church. |
| 6 | In 1420, when the French crown prince was disinherited, King Henry V was made ruler of England and France. | In 1420, when the French crown prince was disinherited, King Edward was made ruler of England and France. | In 1420, when the French crown prince was disinherited, ________________________ was made ruler of England and France. | King Henry V was made ruler. |
| 7 | Joan heard voices, which she believed were coming from God. | Joan heard voices, which she believed were coming from her deceased uncle. | Where did Joan believe the voices she heard were coming from? | She believed they were coming from God. |
| 8 | Joan went to court after her father tried to arrange a marriage for her. | Joan went to court after her father began to physically abuse her. | Joan went to court after her father ____________________. | He tried to arrange a marriage for her. |
| 9 | As part of her mission, Joan took a vow of chastity. | As part of her mission, Joan took a vow of silence. | As part of her mission, Joan took a vow of __________________________. | She took a vow of chastity. |
| 10 | Joan dressed in men’s clothes to make the 11-day journey across enemy territory to Chinon, the site of the crown prince’s palace. | Joan dressed in a black dress to make the 11-day journey across enemy territory to Chinon, site of the crown prince’s palace. | Joan dressed in __________________ to make the 11-day journey across enemy territory to Chinon, site of the crown prince’s palace. | She dressed in men’s clothes. |
| 11 | Joan promised Charles she would see him crowned king at Reims, the traditional site of French royal investiture. | Joan promised Charles she would see him crowned king at Versailles, the traditional site of French royal investiture. | Joan promised Charles she would see him crowned king at ______________________, the traditional site of French royal investiture. | Charles would be crowned at Reims. |
| 12 | After Joan’s early victory, she and her followers escorted Charles across enemy territory, enabling him to be coronated in July 1429. | After Joan’s early victory, she and her followers escorted Charles across enemy territory, enabling him to be freed from enemy capture in July 1429. | After Joan’s early victory, she and her followers escorted Charles across enemy territory, enabling him to be ____________________ in July 1429. | Charles was coronated at that time. |
| 13 | In the spring of 1430, the king ordered Joan to confront an assault on Compiègne carried out by the Anglo-Burgundians. | In the spring of 1430, the king ordered Joan to confront an assault on Compiègne carried out by the Austrians. | In the spring of 1430, the king ordered Joan to confront an assault on Compiègne carried out by the ______________________. | The assault was carried out by the Anglo-Burgundians. |
| 14 | When Joan was captured, she was forced to answer some 70 charges against her, including witchcraft, heresy, and dressing like a man. | When Joan was captured, she was forced to answer some 15 charges against her, including witchcraft, heresy, and dressing like a man. | When Joan was captured, she was forced to answer some _______ (#) charges against her, including witchcraft, heresy, and dressing like a man. | She had to answer 70 charges. |
| 15 | After a year in captivity and under threat of death, Joan relented and signed a confession saying she had never received divine guidance. | After a year in captivity and under threat of death, Joan relented and signed a confession saying she had captured and killed soldiers. | After a year in captivity and under threat of death, Joan relented and signed a confession saying ___________________________. | She signed a confession saying that she did not receive divine guidance. |
| 16 | Joan of Arc was 19 years old when she was burned at the stake. | Joan of Arc was 25 years old when she was burned at the stake. | How old was Joan when she was burned at the stake? ____________________ | She was nineteen. |

Passage: Machu Picchu

| No. | True Item | False Item | Cued Recall Question | Substantive Feedback (Exp 3 Only) |
| --- | --- | --- | --- | --- |
| 1 | Machu Picchu is tucked away in the rocky countryside northwest of Cuzco, Peru. | Machu Picchu is tucked away in the rocky countryside northwest of Nazca, Peru. | Machu Picchu is tucked away in the rocky countryside northwest of ________________, Peru. | It is near Cuzco, Peru. |
| 2 | In the 16th century, the civilization that created Machu Picchu was nearly wiped out by Spanish invaders. | In the 16th century, the civilization that created Machu Picchu was nearly wiped out by famine. | In the 16th century, the civilization that created Machu Picchu was nearly wiped out by ____________________. | It was nearly wiped out by Spanish invaders. |
| 3 | Hiram Bingham is credited with discovering Machu Picchu in 1911. | Howard Carter is credited with discovering Machu Picchu in 1911. | ____________________ is credited with discovering Machu Picchu in 1911. | Hiram Bingham is credited with this discovery. |
| 4 | The site stretches over an impressive 5-mile distance, featuring more than 3000 stone steps. | The site stretches over an impressive 5-mile distance, featuring more than 3000 buildings. | The site stretches over an impressive 5-mile distance, featuring more than 3000 __________________________. | It features more than 3000 stone steps. |
| 5 | It was abandoned an estimated 100 years after its construction. | It was abandoned an estimated 1000 years after its construction. | It was abandoned an estimated _____________________ years after its construction. | It was abandoned after 100 years. |
| 6 | One theory of why Machu Picchu was deserted was that there was a smallpox epidemic. | One theory of why Machu Picchu was deserted was that there was a malaria epidemic. | One theory of why Machu Picchu was deserted was that there was a/n _________________________ epidemic. | The theory states that there was a smallpox epidemic. |
| 7 | When the archaeologist credited with finding Machu Picchu arrived, he was actually trying to find Vilcabamba, the last Inca stronghold. | When the archaeologist credited with finding Machu Picchu arrived, he was actually trying to find Saqsaywaman, the first capital of Peru. | When the archaeologist credited with finding Machu Picchu arrived, he was actually trying to find ________________________________. | He was trying to find Vilcabamba, the last Inca stronghold. |
| 8 | The name Machu Picchu means Old Peak in the native Quechua language. | The name Machu Picchu means Green Tree in the native Quechua language. | The name Machu Picchu means ________________ in the native Quechua language. | The name means Old Peak. |
| 9 | The person who helped the archaeologist find Machu Picchu was 11 years old. | The person who helped the archaeologist find Machu Picchu was 80 years old. | How old was the person who helped the archaeologist find the site? | He was 11. |
| 10 | When the excavated artifacts were brought to Yale University, a custody dispute was ignited. | When the excavated artifacts were brought to Columbia University, a custody dispute was ignited. | When the excavated artifacts were brought to _____________________ University, a custody dispute was ignited. | The artifacts were brought to Yale. |
| 11 | The artifacts of Machu Picchu were finally returned to Peru under Barack Obama. | The artifacts of Machu Picchu were finally returned to Peru under Bill Clinton. | Under which president were the artifacts finally returned to Peru? | They were returned under Barack Obama. |
| 12 | In the midst of a tropical mountain forest on the eastern slopes of the Andes mountains, Machu Picchu blends seamlessly into its natural setting. | In the midst of a tropical mountain forest on the eastern slopes of the Cordillera mountains, Machu Picchu blends seamlessly into its natural setting. | In the midst of a tropical mountain forest on the eastern slopes of the ___________________________, Machu Picchu blends seamlessly into its natural setting. | Machu Picchu is along the slopes of the Andes Mountains. |
| 13 | Its central buildings are prime examples of a masonry technique mastered by the Incas in which stones fit together without mortar. | Its central buildings are prime examples of a masonry technique mastered by the Incas in which stones were cut in interesting shapes. | Its central buildings are prime examples of a masonry technique mastered by the Incas in which stones _______________________________. | The stones were cut to fit without mortar. |
| 14 | The Intihuatana stone is a sculpted granite rock that is believed to have functioned as a solar clock. | The Intihuatana stone is a sculpted granite rock that is believed to have functioned as an idol for worshipping a god. | The Intihuatana stone is a sculpted granite rock that is believed to have functioned as a ________________________. | It is believed to have functioned as a solar clock or calendar. |
| 15 | In 2007, Machu Picchu was designated as one of the new 7 wonders of the world. | In 2007, Machu Picchu was designated as one of the new sacred lands of Peru. | In 2007, Machu Picchu was designated as one of the new _______________________. | It was designated as one of the new 7 world wonders. |
| 16 | Machu Picchu is the most famous ruin in South America, welcoming hundreds of thousands of people per year. | Machu Picchu is the most famous ruin in South America, welcoming around ten thousand people per year. | Machu Picchu is the most famous ruin in South America, welcoming ____________________ (#) people per year. | The site welcomes hundreds of thousands of people per year. |


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

James, K.K.; Storm, B.C. Sorry, Am I Intruding? Comparing Performance and Intrusion Rates for Pretested and Posttested Information. Behav. Sci. 2025, 15, 1060. https://doi.org/10.3390/bs15081060
