Trust the Machine or Trust Yourself: How AI Usage Reshapes Employee Self-Efficacy and Willingness to Take Risks

Abstract

1. Introduction

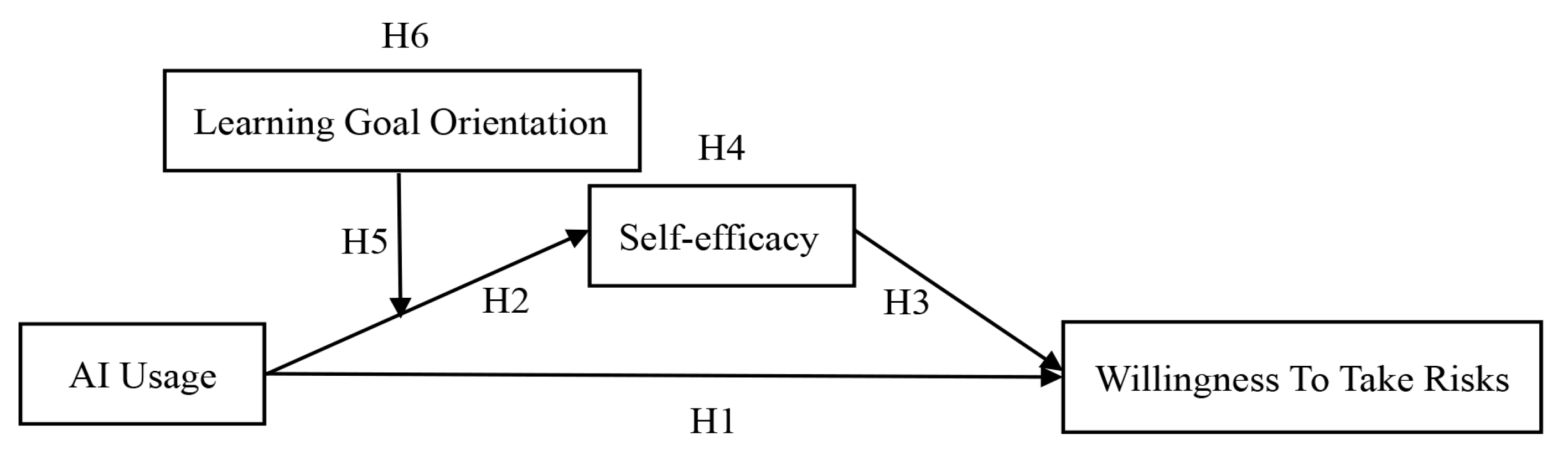

2. Theoretical Foundation and Research Hypotheses

2.1. AI Usage and Willingness to Take Risks

2.2. The Mediating Role of Self-Efficacy

2.3. The Moderating Effect of Learning Goal Orientation

2.4. The Moderated Mediation Effect

3. Method

3.1. Procedure and Participants

3.2. Measures

4. Results

4.1. Statistical Analysis

4.2. Common Method Bias Test

4.3. Confirmatory Factor Analysis

4.4. Descriptive Statistics and Correlation Analysis

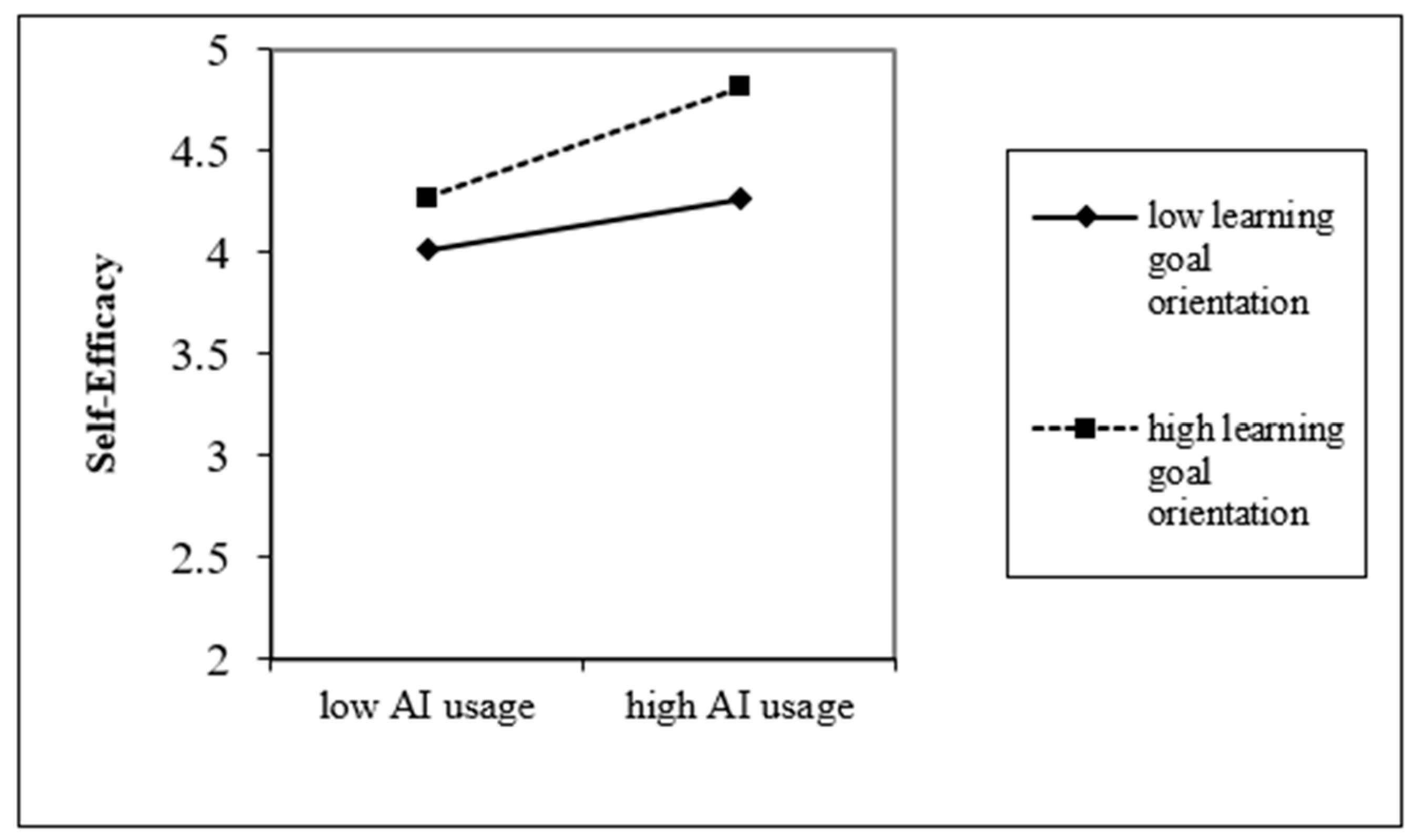

4.5. Hypothesis Testing

5. Discussion

5.1. Conclusions

5.2. Theoretical Contributions

5.3. Management Insights

6. Limitations

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. Survey Questionnaire

- Dear Sir/Madam:

- Hello!

- Q1. Please select your gender [Single Choice]

- Male

- Female

- Q2. Please select your age group [Single Choice]

- 0–20 years old

- 21–30 years old

- 31–40 years old

- 41–50 years old

- Over 51 years old

- Q3. Please select your highest education level [Single Choice]

- Associate degree or below

- Bachelor’s degree

- Master’s degree

- Doctoral degree

- Q4. Please select your years of work experience [Single Choice]

- Less than 5 years

- 6–10 years

- 11–15 years

- 16–20 years

- More than 21 years

- Q5. Your current position level is: [Single Choice]

- General employee (no management responsibilities)

- Frontline manager (supervisor/team leader level)

- Middle manager (department manager level)

- Senior manager (director level and above)

- Q6. Your industry type is: [Single Choice]

- Information Technology/Internet/Software

- Finance/Banking/Insurance

- Manufacturing/Industry

- Healthcare/Pharmaceutical/Health

- Real Estate/Construction

- Professional Services (Consulting/Legal/Accounting, etc.)

- Other

- Q7. The following questions are about your specific use of artificial intelligence (AI) in actual work. Artificial intelligence here includes but is not limited to: ChatGPT, intelligent assistants, intelligent analysis tools, automation systems, etc. Please make selections based on your actual usage.

- Scale: 1 = Strongly Disagree, 2 = Disagree, 3 = Neutral, 4 = Agree, 5 = Strongly Agree

- I used artificial intelligence to carry out most of my job functions.

- I spent most of the time working with artificial intelligence.

- I worked with artificial intelligence in making major work decisions.

- Q8. The following questions are about your attitude toward learning and development at work. Please make selections based on your true thoughts and feelings.

- Scale: 1 = Strongly Disagree, 2 = Disagree, 3 = Neutral, 4 = Agree, 5 = Strongly Agree

- I am willing to seek out challenging work assignments that I can learn a lot from.

- I often look for opportunities to develop new skills and knowledge.

- I enjoy challenging and difficult tasks at work where I’ll learn new skills.

- For me, development of my work ability is important enough to take risks.

- I prefer to work in situations that require a high level of ability and talent.

- Q9. The following items describe an individual’s attitudes and abilities when facing various situations. Please evaluate based on your true feelings.

- Scale: 1 = Strongly Disagree, 2 = Disagree, 3 = Uncertain, 4 = Agree, 5 = Strongly Agree

- I can always manage to solve difficult problems if I try hard enough.

- If someone opposes me, I can find the means and ways to get what I want.

- I am certain that I can accomplish my goals.

- I am confident that I could deal efficiently with unexpected events.

- Thanks to my resourcefulness, I can handle unforeseen situations.

- I can solve most problems if I invest the necessary effort.

- I can remain calm when facing difficulties because I can rely on my coping abilities.

- When I am confronted with a problem, I can find several solutions.

- If I am in trouble, I can think of a good solution.

- I can handle whatever comes my way.

- Q10. The following items describe your attitude toward risk and innovation at work. Please evaluate based on your true thoughts.

- Scale: 1 = Strongly Disagree, 2 = Disagree, 3 = Uncertain, 4 = Agree, 5 = Strongly Agree

- When I think of a good way to improve the way I accomplish my work, I will risk potential failure to try it out.

- I will take a risk and try something new if I have an idea that might improve my work, regardless of how I might be evaluated.

- I will take informed risks at work in order to get the best results, even though my efforts might fail.

- I am willing to go out on a limb at work and risk failure when I have a good idea that could help me become more successful.

- I don’t think twice about taking calculated risks in my job if I think they will make me more productive, regardless of whether or not my efforts will be successful.

- Even if failure is a possibility, I will take informed risks on the job if I think they will help me reach my goals.

- When I think of a way to increase the quality of my work, I will take a risk and pursue the idea even though it might not pan out.

- In an effort to improve my performance, I am willing to take calculated risks with my work, even if they may not prove successful.
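
The four Likert blocks above (Q7 to Q10) contain 3, 5, 10, and 8 items. A minimal sketch of how such responses could be turned into one score per construct follows. It assumes simple item averaging and assumes that Q7 to Q10 map onto the abbreviations AIU, LGO, SE, and WTR used in the tables; neither assumption is confirmed by the surviving text, and the respondent shown is invented for illustration.

```python
from statistics import mean

# Item counts per scale, counted from the questionnaire (Q7, Q8, Q9, Q10).
# The mapping of questions to AIU/LGO/SE/WTR is an assumption.
SCALE_ITEMS = {"AIU": 3, "LGO": 5, "SE": 10, "WTR": 8}


def score_scale(responses, n_items):
    """Return the mean of 1-5 Likert responses for one scale."""
    if len(responses) != n_items:
        raise ValueError(f"expected {n_items} items, got {len(responses)}")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("responses must be integers from 1 to 5")
    return mean(responses)


# Hypothetical respondent (not study data).
respondent = {
    "AIU": [4, 3, 4],
    "LGO": [5, 4, 4, 4, 5],
    "SE": [4, 4, 5, 4, 4, 4, 4, 5, 4, 4],
    "WTR": [4, 4, 4, 5, 4, 4, 4, 4],
}

scores = {
    name: score_scale(items, SCALE_ITEMS[name])
    for name, items in respondent.items()
}

for name, value in scores.items():
    print(f"{name}: {value:.3f}")
```

Averaging keeps every construct on the original 1 to 5 metric, which makes scale means comparable even though the scales differ in length.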

References

- Ahmad, S. F., Han, H., Alam, M. M., Rehmat, M. K., Irshad, M., Arraño-Muñoz, M., & Ariza-Montes, A. (2023). Impact of artificial intelligence on human loss in decision making, laziness and safety in education. Humanities and Social Sciences Communications, 10(1), 1–14.

- Albashrawi, M. (2025). Generative AI for decision-making: A multidisciplinary perspective. Journal of Innovation & Knowledge, 10(4), 100751.

- Bag, S., Gupta, S., Kumar, A., & Sivarajah, U. (2021). An integrated artificial intelligence framework for knowledge creation and B2B marketing rational decision making for improving firm performance. Industrial Marketing Management, 92, 178–189.

- Bandura, A. (1986). Social foundations of thought and action: A social cognitive theory. Prentice-Hall, Inc.

- Bandura, A. (1997). Self-efficacy: The exercise of control. Freeman.

- Bandura, A. (2001). Social cognitive theory: An agentic perspective. Annual Review of Psychology, 52, 1–26.

- BarNir, A., Watson, W. E., & Hutchins, H. M. (2011). Mediation and moderated mediation in the relationship among role models, self-efficacy, entrepreneurial career intention, and gender. Journal of Applied Social Psychology, 41(2), 270–297.

- Brynjolfsson, E., & McAfee, A. (2014). The second machine age: Work, progress, and prosperity in a time of brilliant technologies. W. W. Norton & Company.

- Caputo, A., Nguyen, V. H. A., & Delladio, S. (2025). Risk-taking, knowledge, and mindset: Unpacking the antecedents of entrepreneurial intention. International Entrepreneurship and Management Journal, 21(1), 1–29.

- Chen, A., Yang, T., Ma, J., & Lu, Y. (2023). Employees’ learning behavior in the context of AI collaboration: A perspective on the job demand-control model. Industrial Management & Data Systems, 123(8), 2169–2193.

- Chen, W., Kang, C., Yang, Y., & Wan, Y. (2022). The potential substitution risk of artificial intelligence and the development of employee occupational capabilities: Based on the perspective of employee insecurity. China Human Resource Development, 39(1), 84–97.

- Choudhary, V., Marchetti, A., Shrestha, Y. R., & Puranam, P. (2023). Human-AI ensembles: When can they work? Journal of Management, 51(2), 536–569.

- Dewett, T. (2006). Exploring the role of risk in employee creativity. The Journal of Creative Behavior, 40(1), 27–45.

- Ding, C., Wang, S., & Zhao, S. (2023). The impact of learning goal orientation on employee innovation: Psychological and behavioral mechanisms based on meta-analysis. Science and Technology Progress and Policy, 40(2), 151–160.

- Dweck, C. S. (1986). Motivational processes affecting learning. American Psychologist, 41(10), 1040–1048.

- Hu, B., Mao, Y., & Kim, K. J. (2023). How social anxiety leads to problematic use of conversational AI: The roles of loneliness, rumination, and mind perception. Computers in Human Behavior, 145, 107760.

- Jeong, J., & Jeong, I. (2025). Driving creativity in the AI-enhanced workplace: Roles of self-efficacy and transformational leadership. Current Psychology, 44(9), 8001–8014.

- Jia, N., Luo, X., Fang, Z., & Liao, C. (2024). When and how artificial intelligence augments employee creativity. Academy of Management Journal, 67(1), 5–32.

- Kim, M., & Beehr, T. A. (2023). Employees’ entrepreneurial behavior within their organizations: Empowering leadership and employees’ resources help. International Journal of Entrepreneurial Behavior & Research, 29(4), 986–1006.

- Krueger, N., Jr., & Dickson, P. R. (1994). How believing in ourselves increases risk taking: Perceived self-efficacy and opportunity recognition. Decision Sciences, 25(3), 385–400.

- Liang, W., Yao, J., Chen, A., Lv, Q., Zanin, M., Liu, J., Wong, S., Li, Y., Lu, J., Liang, H., Chen, G., Guo, H., Guo, J., Zhou, R., Ou, L., Zhou, N., Chen, H., Yang, F., Han, X., … He, J. (2020). Early triage of critically ill COVID-19 patients using deep learning. Nature Communications, 11(1), 3543.

- Liu, D., Xu, X., & Yang, Z. (2025). Multiple social roles increase risk-taking in consumer decisions. Journal of Business Research, 196, 115424.

- Liu, L., He, Y., & Hu, M. (2024). Active learning or deliberate avoidance? The impact of AI algorithm monitoring on employee innovative performance. Science of Science and Management of S&T, 45(10), 181–198.

- Liu, Y., Li, Y., Song, K., & Chu, F. (2024). The two faces of Artificial Intelligence (AI): Analyzing how AI usage shapes employee behaviors in the hospitality industry. International Journal of Hospitality Management, 122, 103875.

- Lucas, M. M., Samnallathampi, M. G., Rohit, George, H. J., & Parayitam, S. (2025). Risk taking and need for achievement as mediators in the relationship between self-efficacy and entrepreneurial intention. International Entrepreneurship and Management Journal, 21(1), 69.

- Maran, T. K., Liegl, S., Davila, A., Moder, S., Kraus, S., & Mahto, R. V. (2022). Who fits into the digital workplace? Mapping digital self-efficacy and agility onto psychological traits. Technological Forecasting and Social Change, 175, 121352.

- Mariani, M. M., Machado, I., Magrelli, V., & Dwivedi, Y. K. (2023). Artificial intelligence in innovation research: A systematic review, conceptual framework, and future research directions. Technovation, 122, 102623.

- Markiewicz, Ł., & Weber, E. U. (2013). DOSPERT’s gambling risk-taking propensity scale predicts excessive stock trading. Journal of Behavioral Finance, 14(1), 65–78.

- McKinsey & Company. (2024). The state of AI in early 2024: Gen AI adoption spikes and starts to generate value. McKinsey Global Survey on AI. Available online: https://www.mckinsey.com/capabilities/quantumblack/our-insights/the-state-of-ai-2024 (accessed on 1 March 2025).

- Payne, S. C., Youngcourt, S. S., & Beaubien, J. M. (2007). A meta-analytic examination of the goal orientation nomological net. Journal of Applied Psychology, 92(1), 128–150.

- Podsakoff, P. M., MacKenzie, S. B., Lee, J.-Y., & Podsakoff, N. P. (2003). Common method biases in behavioral research: A critical review of the literature and recommended remedies. Journal of Applied Psychology, 88(5), 879–903.

- Qian, C., & Kee, D. M. H. (2023). Exploring the path to enhance employee creativity in Chinese MSMEs: The influence of individual and team learning orientation, transformational leadership, and creative self-efficacy. Information, 14(8), 449.

- Qian, J., Chen, J., & Zhao, S. (2025). “Remaining Vigilant” while “Enjoying Prosperity”: How artificial intelligence usage impacts employees’ innovative behavior and proactive skill development. Behavioral Sciences, 15(4), 465.

- Raisch, S., & Krakowski, S. (2021). Artificial intelligence and management: The automation-augmentation paradox. Academy of Management Review, 46(1), 192–210.

- Said, N., Potinteu, A. E., Brich, I., Buder, J., Schumm, H., & Huff, M. (2023). An artificial intelligence perspective: How knowledge and confidence shape risk and benefit perception. Computers in Human Behavior, 149, 107855.

- Scholz, U., Doña, B. G., Sud, S., & Schwarzer, R. (2002). Is general self-efficacy a universal construct? Psychometric findings from 25 countries. European Journal of Psychological Assessment, 18(3), 242–251.

- Shrestha, Y. R., Ben-Menahem, S. M., & von Krogh, G. (2019). Organizational decision-making structures in the age of artificial intelligence. California Management Review, 61(4), 66–83.

- Tang, P. M., Koopman, J., McClean, S. T., Zhang, J. H., Li, C. H., De Cremer, D., Lu, Y., & Ng, C. T. S. (2022). When conscientious employees meet intelligent machines: An integrative approach inspired by complementarity theory and role theory. Academy of Management Journal, 65(3), 1019–1054.

- Tang, P. M., Koopman, J., Yam, K. C., De Cremer, D., Zhang, J. H., & Reynders, P. (2023). The self-regulatory consequences of dependence on intelligent machines at work: Evidence from field and experimental studies. Human Resource Management, 62(5), 721–744.

- VandeWalle, D. (1997). Development and validation of a work domain goal orientation instrument. Educational and Psychological Measurement, 57(6), 995–1015.

- VandeWalle, D., Cron, W. L., & Slocum, J. W., Jr. (2001). The role of goal orientation following performance feedback. Journal of Applied Psychology, 86(4), 629–640.

- Wang, S., Sun, Z., Wang, H., Yang, D., & Zhang, H. (2025). Enhancing student acceptance of artificial intelligence-driven hybrid learning in business education: Interaction between self-efficacy, playfulness, emotional engagement, and university support. The International Journal of Management Education, 23(2), 101184.

- Xu, X. (2024). Large language models empowering the financial industry in the digital age: Opportunities, challenges, and solutions. Jinan Journal (Philosophy & Social Sciences), 46(8), 108–122.

- Yang, C., Tang, D., & Mei, J. (2021). The relationship between abusive management and employees’ proactive innovation behavior: Based on the perspectives of motivation and ability beliefs. Science & Technology Progress and Policy, 38(3), 143–150.

- Yin, M., Jiang, S., & Niu, X. (2024). Can AI really help? The double-edged sword effect of AI assistant on employees’ innovation behavior. Computers in Human Behavior, 150, 107987.

- Zhan, X. J., Wan, Y., Li, Z. C., Zhang, M. F., & Wang, Z. (2025). Research on the influence mechanism of self-leadership on proactive service behavior of gig workers from the perspective of social cognitive theory. Chinese Journal of Management, 22(1), 65–73.

- Zhang, H., Gao, Z., & Li, H. (2023). Gain or loss: The “double-edged sword” effect of artificial intelligence technology application on employee innovative behavior. Science and Technology Progress and Policy, 40(18), 1–11.

- Zhang, Q., Liao, G., Ran, X., & Wang, F. (2025). The impact of AI usage on innovation behavior at work: The moderating role of openness and job complexity. Behavioral Sciences, 15(4), 491.

- Zhang, S., Zhao, X., Zhou, T., & Kim, J. H. (2024). Do you have AI dependency? The roles of academic self-efficacy, academic stress, and performance expectations on problematic AI usage behavior. International Journal of Educational Technology in Higher Education, 21(1), 34.

- Zhang, Z., & He, W. (2024). Research on human and artificial intelligence and its significance for organizational management. Foreign Economics & Management, 46(10), 3–17.

- Zheng, S., Guo, Z., Liao, C., Li, S., Zhan, X., & Feng, X. (2025). Booster or stumbling block? Unpacking the ‘double-edged’ influence of artificial intelligence usage on employee innovative performance. Current Psychology, 44, 7800–7817.

- Zhu, X., Wang, S., & He, Q. (2021). The impact of job skill requirements on employee work engagement in the context of artificial intelligence embedding. Foreign Economics & Management, 43(11), 15–25.

| Variable | Conception | Hypotheses and Empirical Support | Theoretical Support |
|---|---|---|---|
| AI usage | Employees engaging with various forms of AI to perform relevant tasks, including analysis, computation, and decision-making (Tang et al., 2022). | H1 (Xu, 2024; Said et al., 2023; Albashrawi, 2025; Mariani et al., 2023) | Based on social cognitive theory, AI usage as an external environmental change reduces employees’ cognitive burden and increases their confidence in handling work and tasks. In turn, this enhanced self-efficacy improves willingness to take risks. Learning goal orientation, as an individual characteristic, moderates the extent to which AI usage influences self-efficacy and subsequently affects the degree of willingness to take risks. Thus, SCT provides a theoretical framework for linking the relationships among AI usage, self-efficacy, learning goal orientation, and willingness to take risks. |
| | | H2 (Zheng et al., 2025; Jeong & Jeong, 2025; Y. Liu et al., 2024) | |
| Willingness to take risks | The tendency to accept certain job-related risks for the sake of achieving positive outcomes at work (Dewett, 2006). | H3 (Lucas et al., 2025; D. Liu et al., 2025; Kim & Beehr, 2023) | |
| | | H4 (Q. Zhang et al., 2025; Yin et al., 2024; Liang et al., 2020) | |
| Self-efficacy | An individual’s belief in their ability to succeed in specific tasks (Bandura, 1997). | H5 (Wang et al., 2025; L. Liu et al., 2024; H. Zhang et al., 2023) | |
| Learning goal orientation | An individual’s drive to enhance their abilities by acquiring new knowledge and skills (Dweck, 1986). | H6 (J. Qian et al., 2025; Ding et al., 2023; C. Qian & Kee, 2023) | |

| Model | χ² | df | χ²/df | IFI | CFI | TLI | RMSEA |
|---|---|---|---|---|---|---|---|
| 1. Four-factor model (AIU, LGO, SE, WTR) | 678.723 | 287 | 2.365 | 0.908 | 0.894 | 0.907 | 0.056 |
| 2. Three-factor model (AIU + LGO, SE, WTR) | 1127.894 | 296 | 3.81 | 0.803 | 0.783 | 0.802 | 0.080 |
| 3. Three-factor model (AIU + SE, LGO, WTR) | 1160.794 | 296 | 3.922 | 0.796 | 0.794 | 0.774 | 0.081 |
| 4. Three-factor model (AIU + WTR, LGO, SE) | 1196.331 | 296 | 4.04 | 0.787 | 0.786 | 0.765 | 0.083 |
| 5. Two-factor model (AIU + WTR, LGO + SE) | 1377.565 | 298 | 4.623 | 0.745 | 0.743 | 0.72 | 0.091 |
| 6. Single-factor model (AIU + SE + LGO + WTR) | 1464.489 | 299 | 4.898 | 0.724 | 0.723 | 0.698 | 0.094 |
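
The model comparison above rests on two simple quantities: the normed chi-square (χ²/df) of each model and the chi-square difference between the four-factor model and each more constrained alternative. The sketch below recomputes both from the tabulated χ² and df values. It is a plausibility check under the assumption that the alternative models are nested in the four-factor model; it is not the authors' analysis script, and the short labels are illustrative.

```python
# (label, chi-square, degrees of freedom) copied from the model-comparison table.
MODELS = [
    ("four-factor", 678.723, 287),
    ("three-factor (AIU + LGO)", 1127.894, 296),
    ("three-factor (AIU + SE)", 1160.794, 296),
    ("three-factor (AIU + WTR)", 1196.331, 296),
    ("two-factor", 1377.565, 298),
    ("single-factor", 1464.489, 299),
]


def normed_chi_square(chi2, df):
    """Chi-square divided by its degrees of freedom."""
    return chi2 / df


baseline_label, baseline_chi2, baseline_df = MODELS[0]

# Chi-square and df differences of each alternative against the baseline.
differences = [
    (label, chi2 - baseline_chi2, df - baseline_df)
    for label, chi2, df in MODELS[1:]
]

for label, chi2, df in MODELS:
    print(f"{label}: chi2/df = {normed_chi_square(chi2, df):.3f}")

for label, delta_chi2, delta_df in differences:
    print(f"{label} vs {baseline_label}: "
          f"delta chi2 = {delta_chi2:.3f}, delta df = {delta_df}")
```

Every alternative model has a larger χ² for only a handful of additional degrees of freedom, which is the pattern expected when the four constructs are empirically distinct.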

| Variable | M | SD | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1. Gender | 1.713 | 0.453 | | | | | | | | | | |
| 2. Age | 2.658 | 0.669 | 0.079 | | | | | | | | | |
| 3. Edu | 2.206 | 0.576 | 0.027 | 0.124 ** | | | | | | | | |
| 4. Work | 1.993 | 0.977 | 0.042 | 0.805 ** | −0.038 | | | | | | | |
| 5. Position | 2.029 | 0.958 | 0.082 | 0.486 ** | 0.240 ** | 0.444 ** | | | | | | |
| 6. Section | 3.380 | 2.168 | 0.005 | 0.054 | −0.155 ** | 0.156 ** | −0.024 | | | | | |
| 7. AIU | 3.628 | 0.910 | −0.013 | 0.021 | 0.121 * | −0.059 | 0.072 | −0.318 ** | (0.845) | | | |
| 8. LGO | 4.259 | 0.519 | −0.028 | 0.044 | 0.07 | 0.008 | 0.185 ** | −0.196 ** | 0.579 ** | (0.810) | | |
| 9. SE | 4.217 | 0.376 | −0.101 * | 0.055 | 0.197 ** | −0.005 | 0.174 ** | −0.231 ** | 0.560 ** | 0.572 ** | (0.804) | |
| 10. WTR | 4.221 | 0.435 | −0.085 | −0.042 | 0.145 ** | −0.063 | 0.101 * | −0.258 ** | 0.531 ** | 0.553 ** | 0.641 ** | (0.817) |

| Category | Variable | Model 1 (SE) | Model 2 (SE) | Model 3 (SE) | Model 4 (SE) | Model 5 (WTR) | Model 6 (WTR) | Model 7 (WTR) |
|---|---|---|---|---|---|---|---|---|
| Control Variable | Gender | −0.12 * | −0.106 ** | −0.095 ** | −0.083 * | −0.089 | −0.079 * | −0.028 |
| | Age | 0.027 | −0.023 | −0.004 | −0.004 * | −0.098 | −0.145 * | −0.133 * |
| | Edu | 0.125 * | 0.102 * | 0.114 ** | 0.102 ** | 0.093 | 0.071 | 0.021 |
| | Work | −0.06 | 0.003 | 0 | −0.013 | 0.004 | 0.06 | 0.058 |
| | Position | 0.16 ** | 0.129 ** | 0.066 | 0.056 | 0.126 * | 0.096 * | 0.034 |
| | Section | −0.2 *** | −0.044 | −0.039 | −0.023 | −0.235 | −0.089 | −0.067 |
| Independent Variable | AIU | | 0.524 *** | 0.323 *** | 0.3 *** | | 0.493 *** | 0.238 *** |
| Moderating Variable | LGO | | | 0.355 *** | 0.557 *** | | | |
| Interaction Term | AIU × LGO | | | | 0.251 *** | | | |
| Mediating Variable | SE | | | | | | | 0.487 *** |
| R² | | 0.112 | 0.356 | 0.436 | 0.464 | 0.098 | 0.313 | 0.466 |
| ΔR² | | 0.112 | 0.243 | 0.324 | 0.351 | 0.098 | 0.215 | 0.368 |
| F | | 9.173 *** | 34.199 *** | 41.834 *** | 41.512 *** | 7.889 *** | 28.302 *** | 47.243 *** |
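
The R² and ΔR² rows of the regression table can be cross-checked against each other. The sketch below tests one reading of ΔR²: that each value is the gain over the controls-only model for the same outcome (Model 1 for self-efficacy, Model 5 for willingness to take risks). That reading is an assumption inferred from the numbers, not something the surviving text states, and the tolerance of 0.0015 is chosen only to absorb three-decimal rounding.

```python
# R-squared and delta R-squared per model, copied from the regression table.
R2 = {1: 0.112, 2: 0.356, 3: 0.436, 4: 0.464, 5: 0.098, 6: 0.313, 7: 0.466}
DELTA_R2 = {1: 0.112, 2: 0.243, 3: 0.324, 4: 0.351, 5: 0.098, 6: 0.215, 7: 0.368}

# Assumed baseline: the controls-only model with the same dependent variable.
BASELINE = {2: 1, 3: 1, 4: 1, 6: 5, 7: 5}

# Allowance for values that were rounded to three decimals before publication.
TOLERANCE = 0.0015


def gain_over_baseline(model):
    """R-squared of a model minus R-squared of its controls-only baseline."""
    return R2[model] - R2[BASELINE[model]]


consistent = {
    model: abs(gain_over_baseline(model) - DELTA_R2[model]) <= TOLERANCE
    for model in BASELINE
}

for model, ok in consistent.items():
    print(f"Model {model}: gain = {gain_over_baseline(model):.3f}, "
          f"reported = {DELTA_R2[model]:.3f}, consistent = {ok}")
```

Under this reading all five reported values agree with the recomputed gains to within rounding, whereas a step-by-step reading (for example Model 3 minus Model 2, a gain of 0.080) would not reproduce the reported 0.324.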

Path: AI Usage → Self-Efficacy → Willingness to Take Risks

| Effect | Effect Value | Standard Error | 95% CI Lower | 95% CI Upper |
|---|---|---|---|---|
| Total Effect | 0.236 | 0.020 | 0.196 | 0.276 |
| Direct Effect | 0.114 | 0.021 | 0.073 | 0.155 |
| Indirect Effect | 0.122 | 0.027 | 0.071 | 0.176 |
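
In a simple mediation model the total effect equals the direct effect plus the indirect effect, so the three estimates above can be checked against one another. The sketch below does that and also derives the share of the total effect carried by self-efficacy. That share is computed here from the tabulated values; it is not a figure reported in the surviving text.

```python
# Effect estimates copied from the mediation table.
TOTAL_EFFECT = 0.236
DIRECT_EFFECT = 0.114
INDIRECT_EFFECT = 0.122

# Total effect implied by the decomposition: direct + indirect.
recomposed_total = DIRECT_EFFECT + INDIRECT_EFFECT

# Share of the total effect that runs through the mediator (derived, not reported).
proportion_mediated = INDIRECT_EFFECT / TOTAL_EFFECT

print(f"direct + indirect = {recomposed_total:.3f}")
print(f"proportion mediated = {proportion_mediated:.1%}")
```

Because the direct effect remains above zero alongside the indirect effect, the pattern corresponds to what is usually called partial mediation.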

| Independent Variable | Dependent Variable | Moderating Variable Grouping | Mediating Effect Estimate | Standard Error | 95% CI Lower | 95% CI Upper |
|---|---|---|---|---|---|---|
| AI Usage | Willingness to Take Risks | eff1 (M − 1 SD) | 0.028 | 0.024 | −0.014 | 0.082 |
| | | eff2 (M) | 0.084 | 0.019 | 0.036 | 0.110 |
| | | eff3 (M + 1 SD) | 0.112 | 0.025 | 0.067 | 0.163 |
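
A conditional indirect effect is conventionally treated as distinguishable from zero when its 95% confidence interval does not contain zero. The sketch below applies that rule to the three levels of the moderator in the table above. The decision rule is the common convention, assumed here rather than quoted from the paper, and the dictionary keys are illustrative labels.

```python
# (estimate, CI lower, CI upper) per moderator level, copied from the table.
CONDITIONAL_EFFECTS = {
    "M - 1 SD": (0.028, -0.014, 0.082),
    "M": (0.084, 0.036, 0.110),
    "M + 1 SD": (0.112, 0.067, 0.163),
}


def excludes_zero(lower, upper):
    """True when the interval lies entirely above or entirely below zero."""
    return lower > 0 or upper < 0


significant = {
    level: excludes_zero(lower, upper)
    for level, (_, lower, upper) in CONDITIONAL_EFFECTS.items()
}

for level, (estimate, lower, upper) in CONDITIONAL_EFFECTS.items():
    print(f"{level}: {estimate:.3f} [{lower:.3f}, {upper:.3f}] "
          f"excludes zero = {significant[level]}")
```

The interval contains zero only at the low level of the moderator, and the estimates rise from 0.028 to 0.112 as the moderator increases, which is the pattern a moderated mediation effect would produce.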

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Han, Z.; Song, G.; Zhang, Y.; Li, B. Trust the Machine or Trust Yourself: How AI Usage Reshapes Employee Self-Efficacy and Willingness to Take Risks. Behav. Sci. 2025, 15, 1046. https://doi.org/10.3390/bs15081046
