Navigating by Design: Effects of Individual Differences and Navigation Modality on Spatial Memory Acquisition

Abstract

1. Introduction

1.1. Types of Spatial Knowledge

1.2. Sense of Direction and Individual Differences

1.3. Gender, Strategy Use, and Spatial Ability

1.4. Effects of Navigation Modalities on Spatial Learning

1.5. Present Study

2. Materials and Methods

2.1. Participants

2.2. Design

2.3. Materials

2.3.1. Pre-Screening Scale

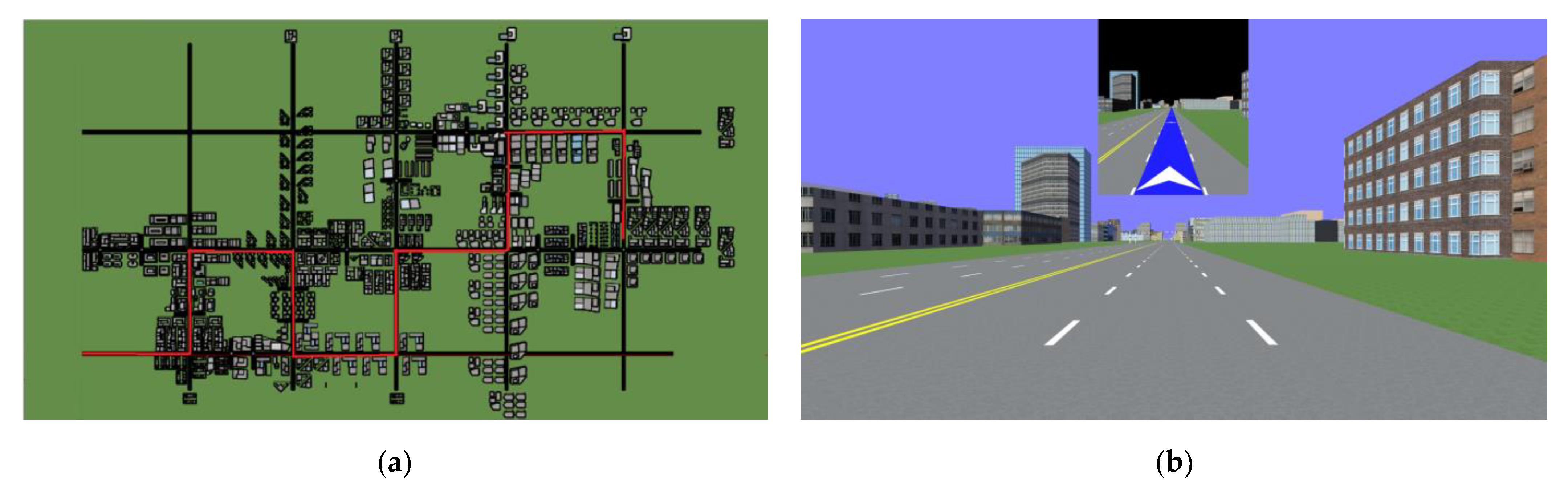

2.3.2. Learning Phase

2.3.3. Test Phase

2.4. Procedure

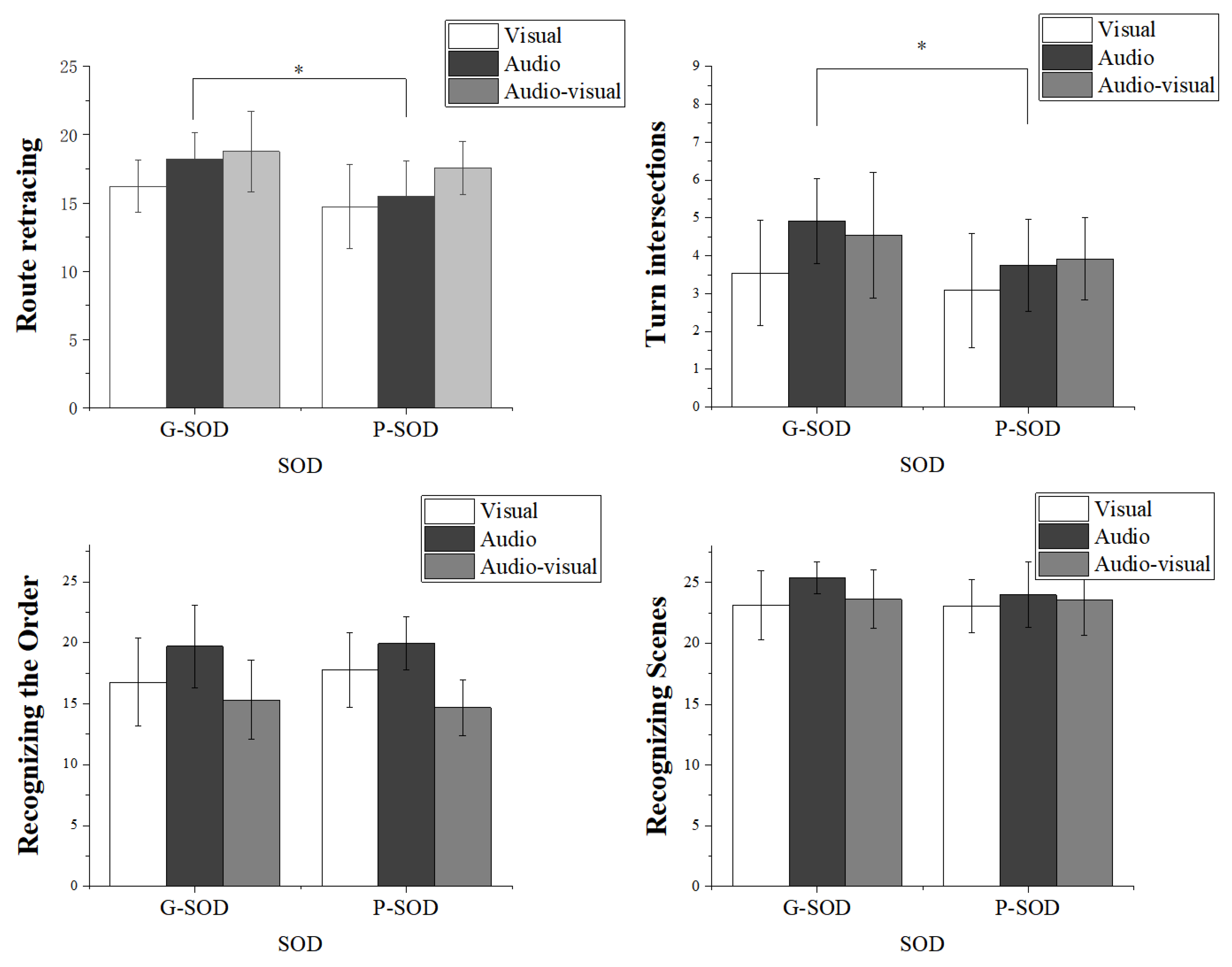

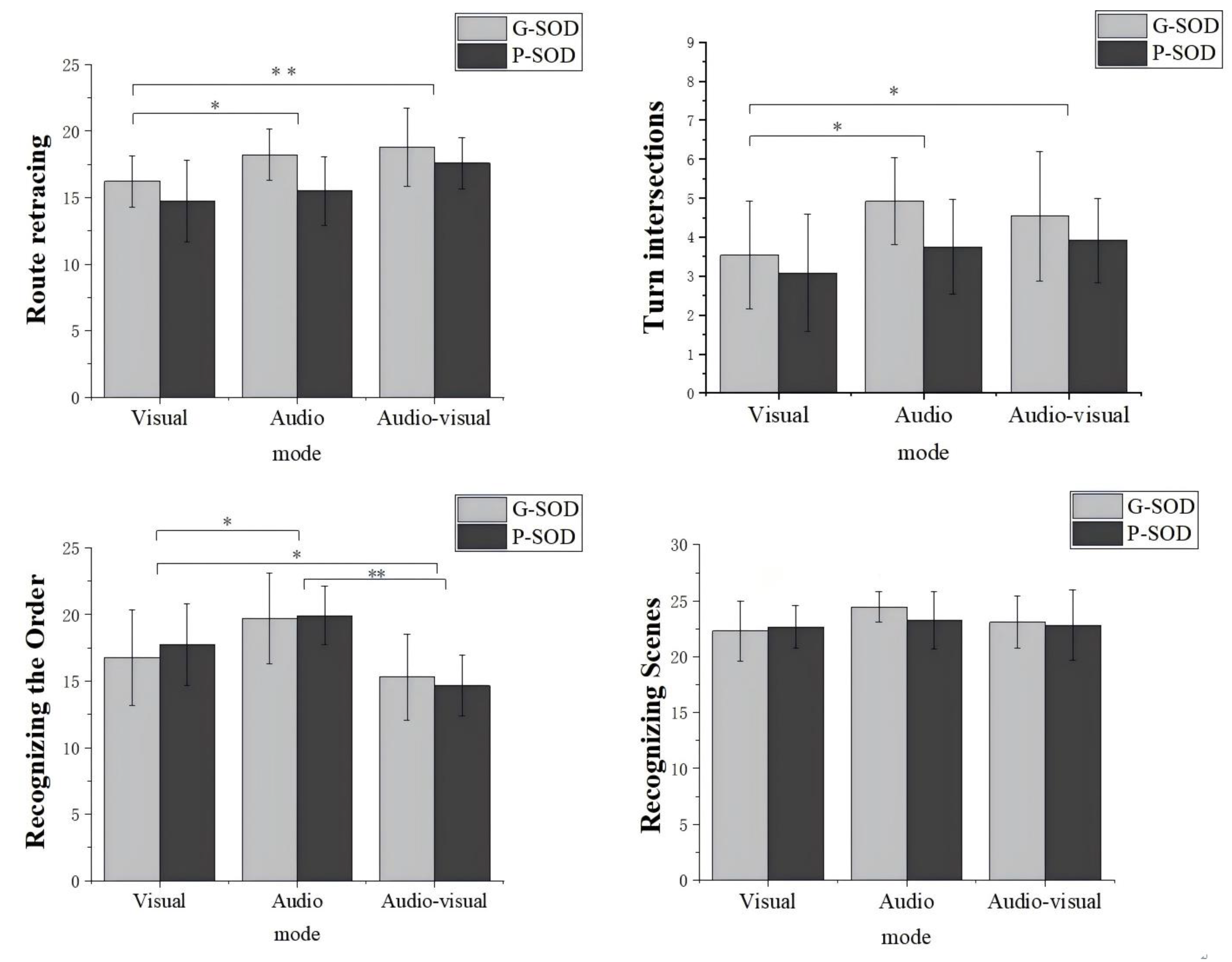

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Boone, A. P., Gong, X., & Hegarty, M. (2018). Sex differences in navigation strategy and efficiency. Memory & Cognition, 46(6), 909–922.

- Burte, H., & Montello, D. R. (2017). How sense-of-direction and learning intentionality relate to spatial knowledge acquisition in the environment. Cognitive Research: Principles and Implications, 2(1), 18.

- Chrastil, E. R., & Warren, W. H. (2012). Active and passive spatial learning in human navigation: Acquisition of survey knowledge. Journal of Experimental Psychology: Learning, Memory, and Cognition, 39(5), 1520–1537.

- Coluccia, E., & Louse, G. (2004). Gender differences in spatial orientation: A review. Journal of Environmental Psychology, 24(3), 329–340.

- Duif, I., Wegman, J., de Graaf, K., Smeets, P. A. M., & Aarts, E. (2020). Distraction decreases rIFG-putamen connectivity during goal-directed effort for food rewards. Scientific Reports, 10(1), 19072.

- Ernst, M. O., & Bülthoff, H. H. (2004). Merging the senses into a robust percept. Trends in Cognitive Sciences, 8(4), 162–169.

- Faul, F., Erdfelder, E., Lang, A. G., & Buchner, A. (2007). G*Power 3: A flexible statistical power analysis program for the social, behavioral, and biomedical sciences. Behavior Research Methods, 39(2), 175–191.

- Fenech, E., Drews, F., & Bakdash, J. (2010, September 27). The effects of acoustic turn-by-turn navigation on wayfinding. Human Factors and Ergonomics Society Annual Meeting, San Francisco, CA, USA.

- Fernandez-Baizan, C., Arias, J. L., & Mendez, M. (2019). Spatial memory in young adults: Gender differences in egocentric and allocentric performance. Behavioural Brain Research, 359, 694–700.

- Garden, S., Cornoldi, C., & Logie, R. (2002). Visuo-spatial working memory in navigation. Applied Cognitive Psychology, 16(1), 35–50.

- Gardony, A. L., Brunyé, T. T., Mahoney, C. R., & Taylor, H. A. (2013). How navigational aids impair spatial memory: Evidence for divided attention. Spatial Cognition & Computation, 13(4), 319–350.

- Gardony, A. L., Brunyé, T. T., & Taylor, H. A. (2015). Navigational aids and spatial memory impairment: The role of divided attention. Spatial Cognition & Computation, 15(4), 246–284.

- Golledge, R. G., Jacobson, R. D., Kitchin, R., & Blades, M. (2000). Cognitive maps, spatial abilities, and human wayfinding. Geographical Review of Japan, Series B, 73(2), 93–104.

- Hegarty, M., Richardson, A. E., Montello, D. R., Lovelace, K., & Subbiah, I. (2002). Development of a self-report measure of environmental spatial ability. Intelligence, 30(5), 425–447.

- Hejtmánek, L., Oravcová, I., Motýl, J., Horáček, J., & Fajnerová, I. (2018). Spatial knowledge impairment after GPS guided navigation: Eye-tracking study in a virtual town. International Journal of Human-Computer Studies, 116(4), 15–24.

- Ishikawa, T., Fujiwara, H., Imai, O., & Okabe, A. (2008). Wayfinding with a GPS-based mobile navigation system: A comparison with maps and direct experience. Journal of Environmental Psychology, 28(1), 74–82.

- Ishikawa, T., & Montello, D. R. (2006). Spatial knowledge acquisition from direct experience in the environment: Individual differences in the development of metric knowledge and the integration of separately learned places. Cognitive Psychology, 52(2), 93–129.

- Jones, J. A., Swan, J. E., Singh, G., Kolstad, E., & Ellis, S. R. (2008, August 9–10). The effects of virtual reality, augmented reality, and motion parallax on egocentric depth perception. 5th Symposium on Applied Perception in Graphics and Visualization, Los Angeles, CA, USA.

- Kim, K., & Bock, O. (2021). Acquisition of landmark, route, and survey knowledge in a wayfinding task: In stages or in parallel? Psychological Research, 85, 2098–2106.

- Kozlowski, L. T., & Bryant, K. J. (1977). Sense of direction, spatial orientation, and cognitive maps. Journal of Experimental Psychology: Human Perception and Performance, 3(4), 590–598.

- Kuipers, B. (1978). Modeling spatial knowledge. Cognitive Science, 2(2), 129–153.

- Lawton, C. A. (1994). Gender differences in way-finding strategies: Relationship to spatial ability and spatial anxiety. Sex Roles, 30, 765–779.

- Lei, X., & Mou, W. (2022). Developing global spatial memories by one-shot across-boundary navigation. Journal of Experimental Psychology: Learning, Memory, and Cognition, 48(6), 798–812.

- Liu, X., Mou, W., & McNamara, T. P. (2012). Selection of spatial reference directions prior to seeing objects. Spatial Cognition & Computation, 12(1), 53–69.

- Meilinger, T., Knauff, M., & Bülthoff, H. H. (2008). Working memory in wayfinding—A dual task experiment in a virtual city. Cognitive Science, 32(4), 755–770.

- Moffat, S. D. (2009). Aging and spatial navigation: What do we know and where do we go? Neuropsychology Review, 19(4), 478–489.

- Montello, D. R., Hegarty, M., Richardson, A. E., & Waller, D. (2004). Spatial memory of real environments, virtual environments, and maps. In G. L. Allen (Ed.), Human spatial memory: Remembering where (pp. 251–285). Lawrence Erlbaum Associates Publishers.

- Munion, A. K., Stefanucci, J. K., Rovira, E., Squire, P., & Hendricks, M. (2019). Gender differences in spatial navigation: Characterizing wayfinding behaviors. Psychonomic Bulletin & Review, 26(6), 1933–1940.

- Münzer, S., Zimmer, H. D., Schwalm, M., Baus, J., & Aslan, I. (2006). Computer-assisted navigation and the acquisition of route and survey knowledge. Journal of Environmental Psychology, 26(4), 300–308.

- Padilla, L. M., Creem-Regehr, S. H., Stefanucci, J. K., & Cashdan, E. A. (2017). Sex differences in virtual navigation influenced by scale and navigation experience. Psychonomic Bulletin & Review, 24(2), 582–590.

- Pazzaglia, F., & De Beni, R. (2001). Strategies of processing spatial information in survey and landmark-centred individuals. European Journal of Cognitive Psychology, 13(4), 493–508.

- Peer, M., Brunec, I. K., Newcombe, N. S., & Epstein, R. A. (2021). Structuring knowledge with cognitive maps and cognitive graphs. Trends in Cognitive Sciences, 25(1), 37–54.

- Presson, C. C., & Montello, D. R. (1988). Points of reference in spatial cognition: Stalking the elusive landmark. British Journal of Developmental Psychology, 6(4), 378–381.

- Riecke, B. E., Cunningham, D. W., & Bülthoff, H. H. (2007). Spatial updating in virtual reality: The sufficiency of visual information. Psychological Research, 71(3), 298–313.

- Rochais, C., Henry, S., & Hausberger, M. (2017). Spontaneous attention-capture by auditory distractors as predictor of distractibility: A study of domestic horses (Equus caballus). Scientific Reports, 7(1), 15283.

- Roskos-Ewoldsen, B., McNamara, T. P., Shelton, A. L., & Carr, W. (1998). Mental representations of large and small spatial layouts are orientation dependent. Journal of Experimental Psychology: Learning, Memory, and Cognition, 24(1), 215–226.

- Seminati, L., Hadnett-Hunter, J., Joiner, R., & Petrini, K. (2022). Multisensory GPS impact on spatial representation in an immersive virtual reality driving game. Scientific Reports, 12(1), 7401.

- Sholl, M. J. (1988). The relation between sense of direction and mental geographic updating. Intelligence, 12(3), 299–314.

- Siegel, A. W., & White, S. H. (1975). The development of spatial representations of large-scale environments. Advances in Child Development and Behavior, 10, 9–55.

- Spiers, H. J., & Maguire, E. A. (2008). The dynamic nature of cognition during wayfinding. Journal of Environmental Psychology, 28(3), 232–249.

- Uttal, D. H., Meadow, N. G., Tipton, E., Hand, L. L., Alden, A. R., Warren, C., & Newcombe, N. S. (2013). The malleability of spatial skills: A meta-analysis of training studies. Psychological Bulletin, 139(2), 352–402.

- von Stülpnagel, R., & Steffens, M. C. (2013). Active route learning in virtual environments: Disentangling movement control from intention, instruction specificity, and navigation control. Psychological Research, 77(5), 555–574.

- Weisberg, S. M., Schinazi, V. R., Newcombe, N. S., Shipley, T. F., & Epstein, R. A. (2014). Variations in cognitive maps: Understanding individual differences in navigation. Journal of Experimental Psychology: Learning, Memory, and Cognition, 40(3), 669–682.

- Wen, W., Ishikawa, T., & Sato, T. (2011). Working memory in spatial knowledge acquisition: Differences in encoding processes and sense of direction. Applied Cognitive Psychology, 25(4), 654–662.

- Wen, W., Ishikawa, T., & Sato, T. (2013). Individual differences in the encoding processes of egocentric and allocentric survey knowledge. Cognitive Science, 37(1), 176–192.

- Wiener, J. M., Büchner, S. J., & Hölscher, C. (2009). Taxonomy of human wayfinding tasks: A knowledge-based approach. Spatial Cognition & Computation, 9(2), 152–165.

- Wolbers, T., & Hegarty, M. (2010). What determines our navigational abilities? Trends in Cognitive Sciences, 14(3), 138–146.

- Yamamoto, N., & DeGirolamo, G. J. (2012). Differential effects of aging on spatial learning through exploratory navigation and map reading. Frontiers in Aging Neuroscience, 4, 14.

| Measure | Male M (n = 33) | Male SD | Female M (n = 42) | Female SD | t | p |
|---|---|---|---|---|---|---|
| Route retracing | 17.36 | 2.40 | 16.5 | 3.01 | 1.345 | 0.183 |
| Turn intersection | 4.00 | 1.20 | 3.95 | 1.62 | 0.141 | 0.888 |
| Recognizing Scenes | 24.15 | 2.06 | 23.55 | 2.76 | 1.209 | 0.298 |
| Recognizing the Order | 17.30 | 3.57 | 17.38 | 3.58 | −0.094 | 0.926 |
| Route retracing RT (ms) | 546,213.33 | 50,100.94 | 541,563.71 | 46,479.63 | 0.416 | 0.782 |
| Recognizing Scenes RT (ms) | 2029.30 | 494.60 | 1948.56 | 363.35 | 0.815 | 0.069 |
| Recognizing the Order RT (ms) | 3485.72 | 856.83 | 3162.76 | 821.16 | 1.659 | 0.683 |
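The t column compares male and female participants on each measure. The sketch below recomputes t from the reported means, SDs, and group sizes, assuming a pooled-variance (Student's) independent-samples t-test; the test variant is not stated in this excerpt, and the helper name `pooled_t` is hypothetical.

```python
import math


def pooled_t(m1, sd1, n1, m2, sd2, n2):
    """Student's independent-samples t from summary statistics.

    Assumes equal population variances (pooled SD). Returns (t, df).
    """
    df = n1 + n2 - 2
    # Pooled variance: the two sample variances weighted by their degrees of freedom
    sp2 = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    # Standard error of the difference between the two means
    se = math.sqrt(sp2 * (1 / n1 + 1 / n2))
    return (m1 - m2) / se, df


# Route retracing, male vs. female, values copied from the table
t, df = pooled_t(17.36, 2.40, 33, 16.5, 3.01, 42)
print(f"t({df}) = {t:.3f}")
```

For Route retracing this prints `t(73) = 1.340`, close to the reported 1.345; a gap of that size is within what rounding of the reported means and SDs can produce. If SciPy is available, a two-sided p-value follows from `2 * scipy.stats.t.sf(abs(t), df)`.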

| Task | Measure | G-SOD (n = 39), Visual (n = 13) | G-SOD (n = 39), Audio (n = 13) | G-SOD (n = 39), Audio–Visual (n = 13) | P-SOD (n = 36), Visual (n = 12) | P-SOD (n = 36), Audio (n = 12) | P-SOD (n = 36), Audio–Visual (n = 12) |
|---|---|---|---|---|---|---|---|
| Route retracing | Total CN (25) | 16.23 (1.92) | 18.23 (1.92) | 18.77 (2.95) | 14.75 (3.08) | 15.50 (2.58) | 17.58 (1.93) |
| Turn intersection | CN (9) | 3.54 (1.39) | 4.92 (1.12) | 4.54 (1.66) | 3.08 (1.51) | 3.75 (1.22) | 3.92 (1.08) |
| Recognizing Scenes | CN (28) | 23.15 (2.83) | 25.38 (1.33) | 23.62 (2.40) | 23.08 (2.19) | 24.00 (2.66) | 23.58 (2.91) |
| Recognizing the Order | CN (28) | 16.77 (3.61) | 19.69 (3.40) | 15.31 (3.25) | 17.75 (3.05) | 19.92 (2.19) | 14.67 (2.27) |
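The table crosses sense-of-direction group (G-SOD vs. P-SOD) with navigation modality. A modality's overall mean across both groups is the n-weighted average of its two cell means, which equals the mean of all participants in that modality. The sketch below applies this to the Route retracing row; the dictionary layout and the helper name `marginal_mean` are hypothetical, the modality is written with an ASCII hyphen ("Audio-Visual"), and the SDs are left out because they do not enter a weighted mean.

```python
# Route retracing cell means copied from the table: (group, modality) -> (mean, n)
ROUTE_RETRACING = {
    ("G-SOD", "Visual"): (16.23, 13),
    ("G-SOD", "Audio"): (18.23, 13),
    ("G-SOD", "Audio-Visual"): (18.77, 13),
    ("P-SOD", "Visual"): (14.75, 12),
    ("P-SOD", "Audio"): (15.50, 12),
    ("P-SOD", "Audio-Visual"): (17.58, 12),
}


def marginal_mean(cells, modality):
    """n-weighted mean of one modality across both sense-of-direction groups.

    Returns (mean, total_n) for the requested modality.
    """
    pairs = [(m, n) for (_, mod), (m, n) in cells.items() if mod == modality]
    total_n = sum(n for _, n in pairs)
    # Weight each cell mean by its sample size, then divide by the pooled n
    return sum(m * n for m, n in pairs) / total_n, total_n


for modality in ("Visual", "Audio", "Audio-Visual"):
    mean, n = marginal_mean(ROUTE_RETRACING, modality)
    print(f"{modality}: M = {mean:.2f} (n = {n})")
```

This prints Visual: M = 15.52, Audio: M = 16.92, and Audio-Visual: M = 18.20, each with n = 25. Weighting by n rather than averaging the two cell means matters here only slightly, because the G-SOD and P-SOD cells differ by a single participant.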

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Liu, X.; Zhang, Y.; Sun, B. Navigating by Design: Effects of Individual Differences and Navigation Modality on Spatial Memory Acquisition. Behav. Sci. 2025, 15, 959. https://doi.org/10.3390/bs15070959

Liu X, Zhang Y, Sun B. Navigating by Design: Effects of Individual Differences and Navigation Modality on Spatial Memory Acquisition. Behavioral Sciences. 2025; 15(7):959. https://doi.org/10.3390/bs15070959

Chicago/Turabian Style: Liu, Xianyun, Yanan Zhang, and Baihu Sun. 2025. "Navigating by Design: Effects of Individual Differences and Navigation Modality on Spatial Memory Acquisition" Behavioral Sciences 15, no. 7: 959. https://doi.org/10.3390/bs15070959

APA Style: Liu, X., Zhang, Y., & Sun, B. (2025). Navigating by Design: Effects of Individual Differences and Navigation Modality on Spatial Memory Acquisition. Behavioral Sciences, 15(7), 959. https://doi.org/10.3390/bs15070959