Social Metamemory Judgments in the Legal Context: Examining Judgments About the Memory of Others

Abstract

1. Introduction

1.1. Social Metamemory Judgments in the Identification Context

1.2. The Utilization of Instructions

1.3. Assessor Characteristics and Beliefs

1.4. The Current Study

2. Materials and Methods

2.1. Design

2.2. Participants

2.3. Materials and Procedure

2.4. Individual Difference Measures

3. Results

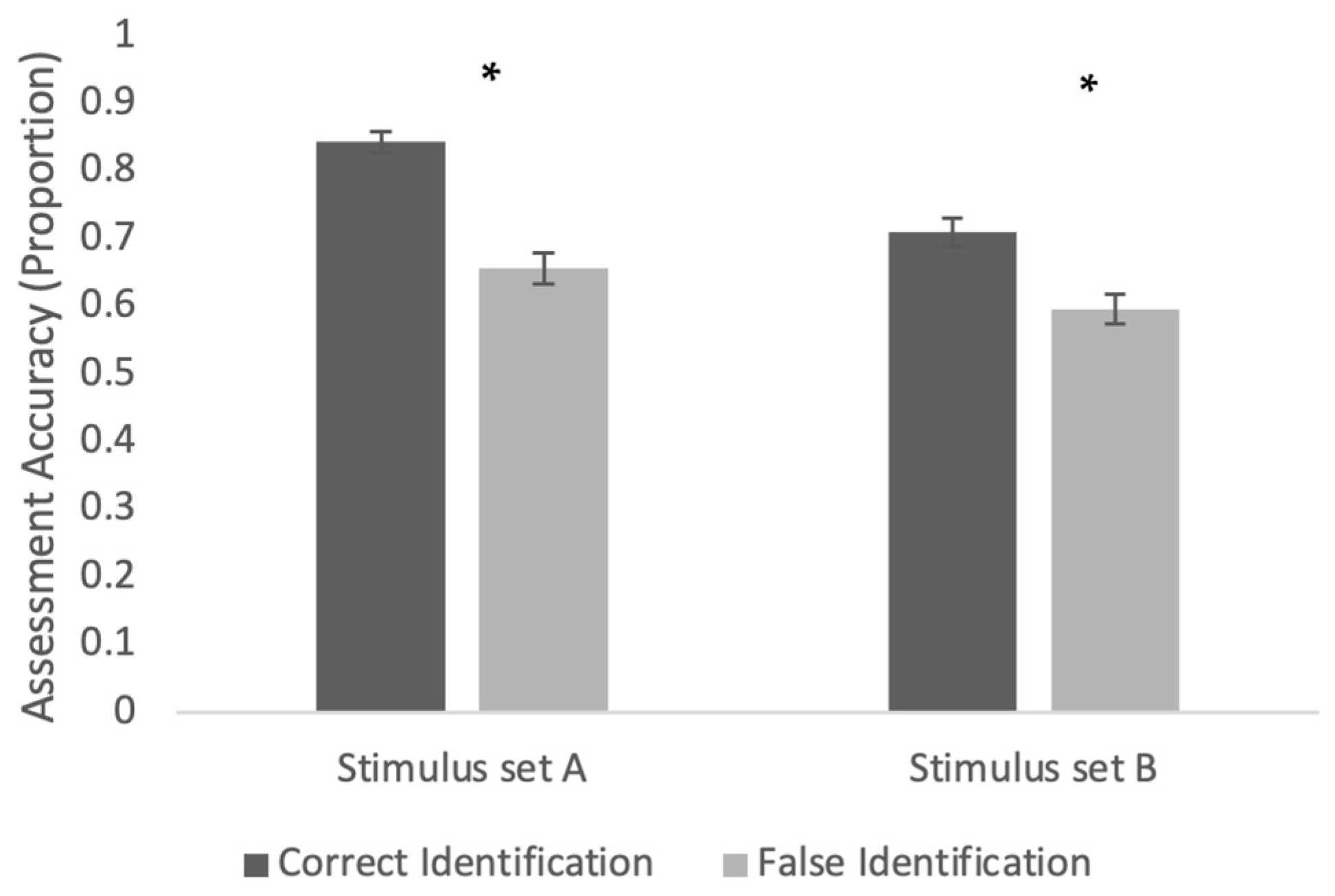

3.1. Overall Accuracy (And the Impact of Instructions on Overall Accuracy)

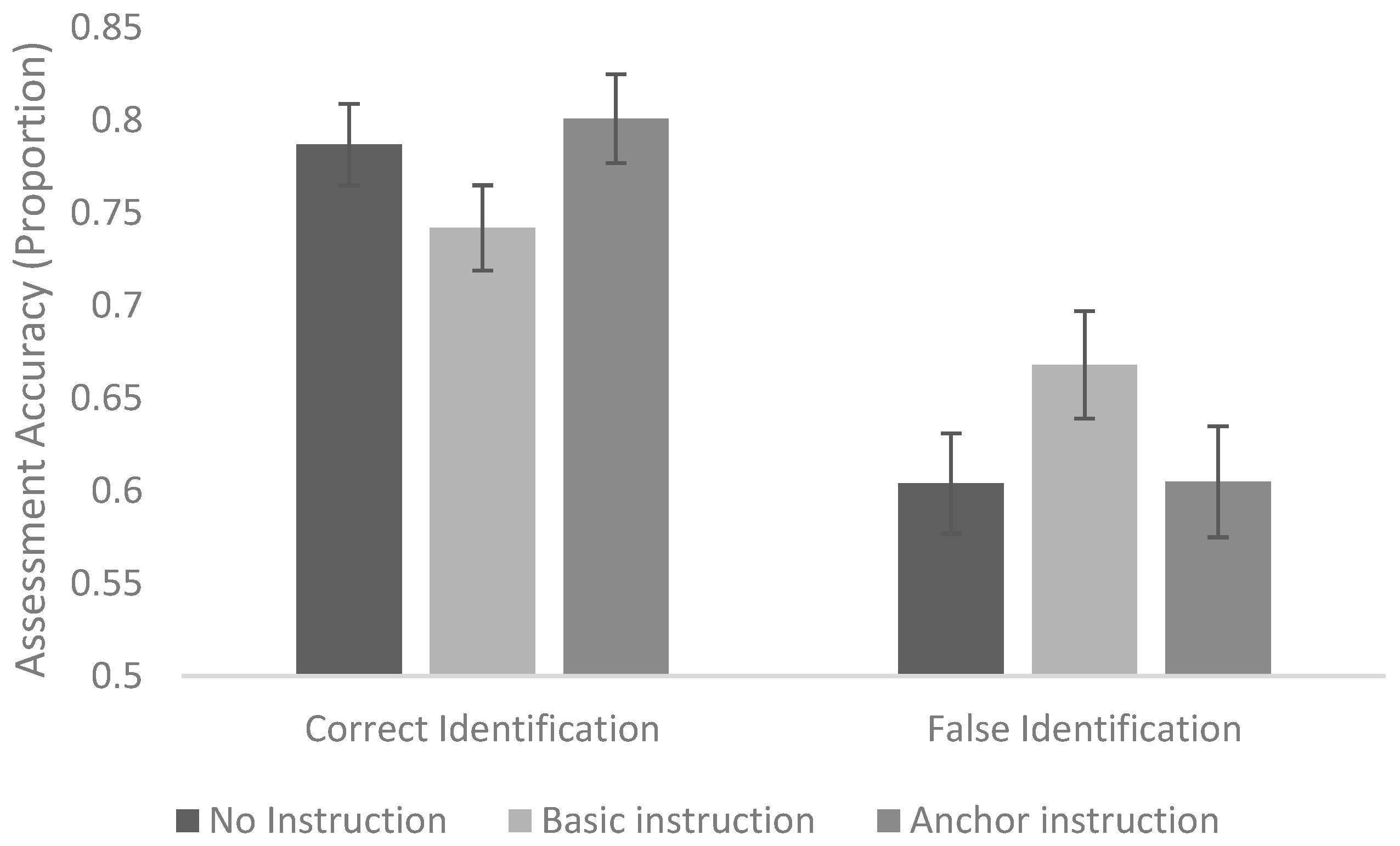

3.2. Witness Confidence and the Relationship Between Instructions and Witness Confidence

3.3. Associations Between Assessor Characteristics and Beliefs and Accuracy

3.3.1. Beliefs About Memory

3.3.2. Gist vs. Verbatim Processing (Autistic Traits)

3.3.3. Beliefs About the Criminal Justice System

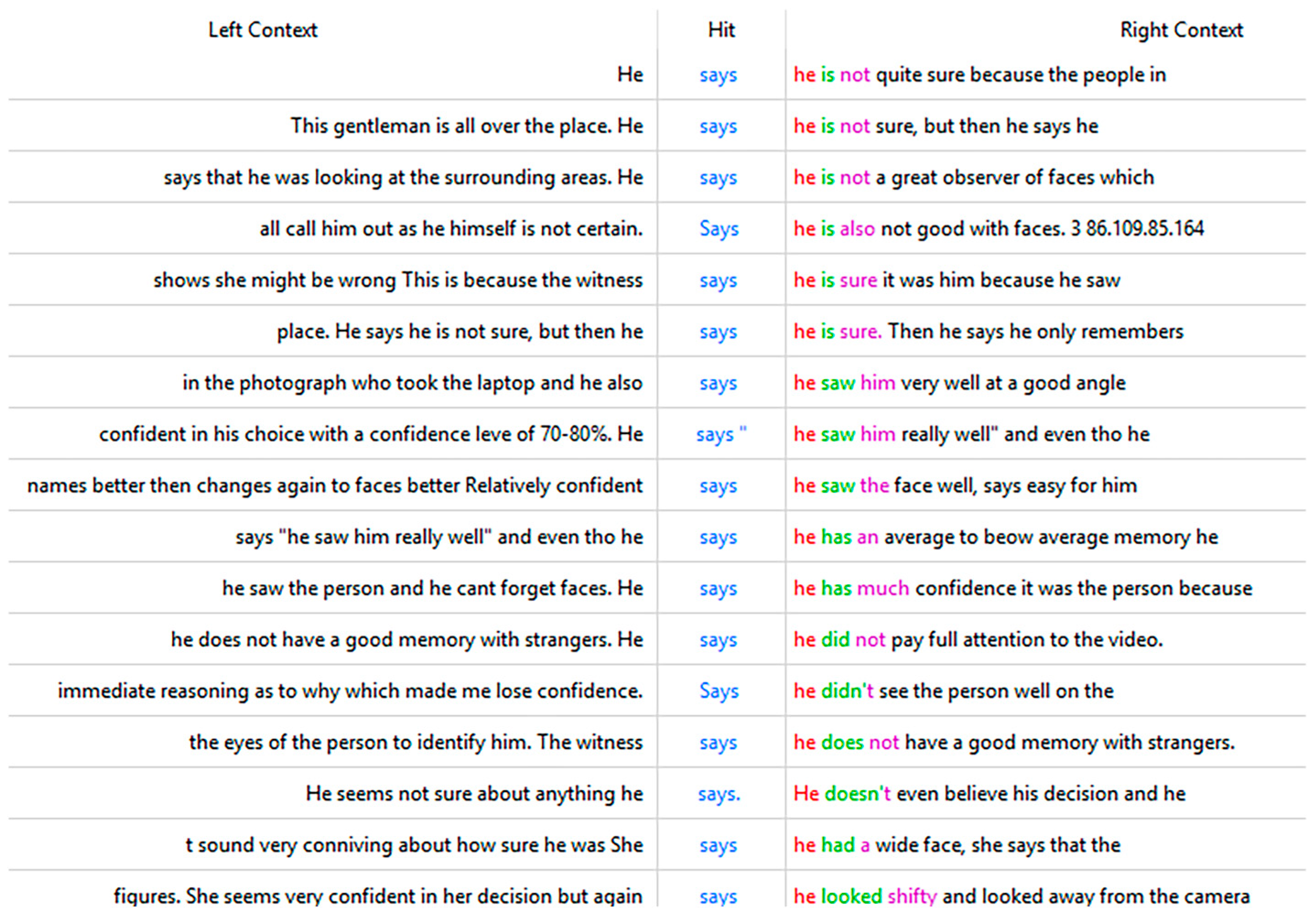

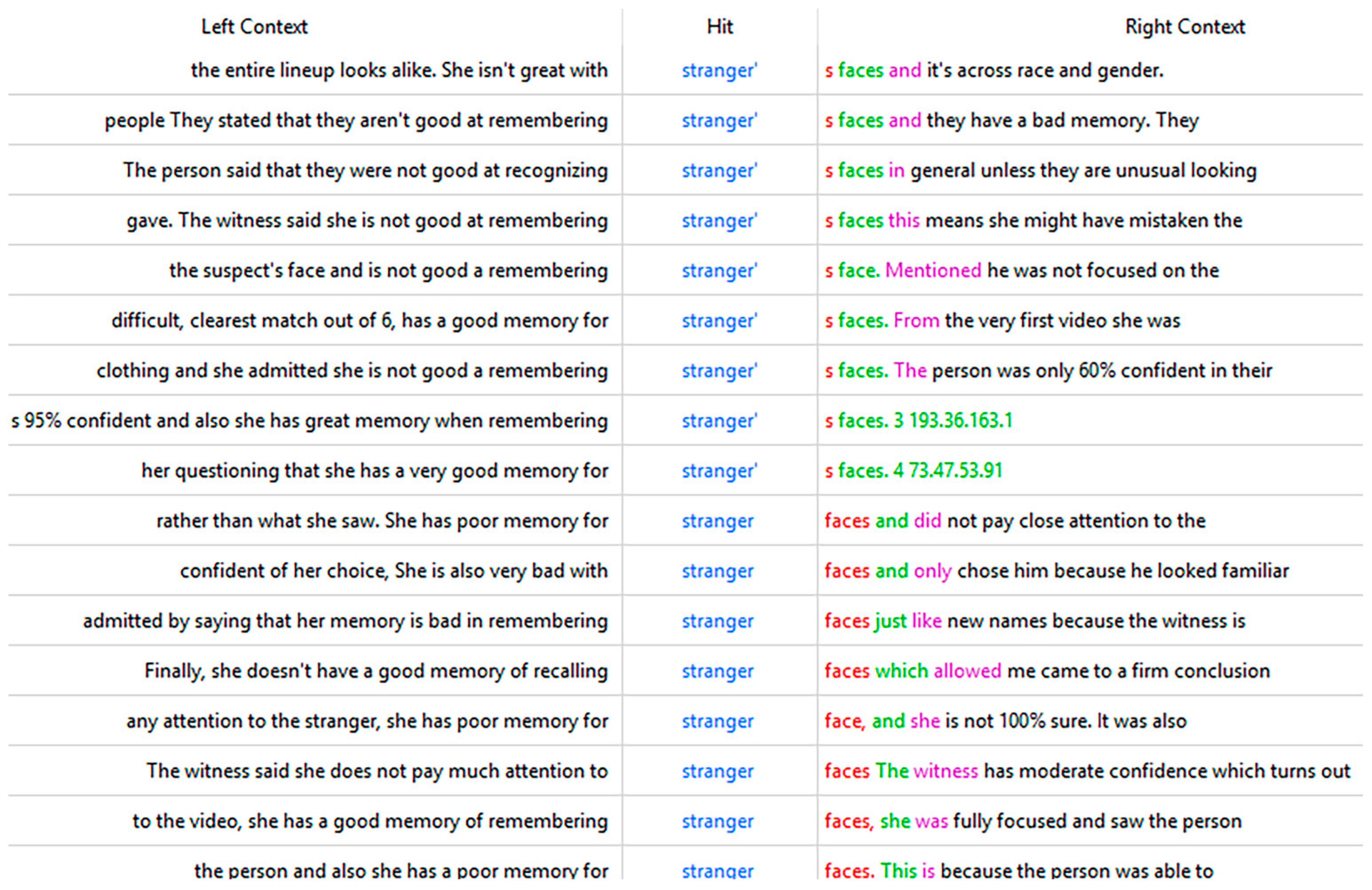

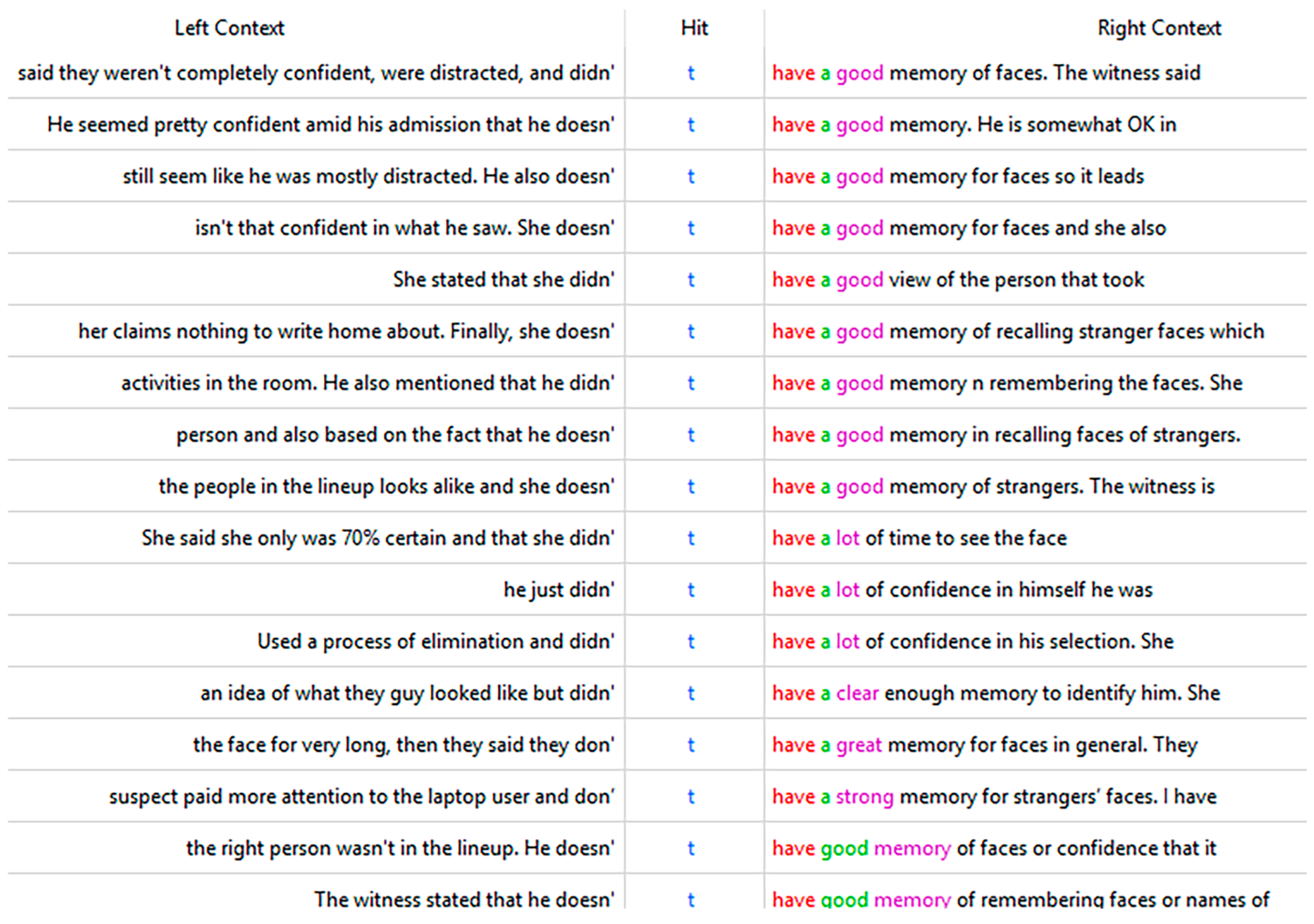

3.3.4. Reasoning Underlying Assessments

“The witness gave a detailed and clear explanation for their identification, mentioning specific features like the distinct red jacket and the way the person moved. These specific details suggest that the witness paid close attention to the suspect’s characteristics, which supports the likelihood of accuracy.”

“…he was able to grab the shape of his head and a view detail even though he is not 100 percent confident, but he was able to identify number 5 to be the culprit. that tells a lot”

“I think he seems somewhat uncertain but he does say he has a generally good memory for faces of people he meets. I think it’s quite possible he picked the right one but there is also a possibility that he was wrong, His hesitation made me wonder about how sure he really was.”

“I believe the witness made the correct identification because she appeared confident in her decision. She showed no signs of hesitation at all. Given the clarity and the speed of her choice, along with the absence of any cues or influence, I feel confident that their identification was accurate.”

“He seemed consistent, but admitted to missing key features and being unable to say with confidence which was the person who stole the laptop. Admitted to not being good with faces as well! When he said he was 60% confident in addition to not being good with faces, I had my mind made up to not fully rely on his testimony! He was honest, which is vital!”

“Inconsistent markers in the first few sets of answers. He claimed he seen a direct shot of the person who stole the laptop, then after that had said he looked similar to the picture he chose from. That choice should have been more direct, instead of seeming more like a choice or opinion. Regardless of the circumstance! It should have been more direct! Based on the disorganized thought process/speaking (mixing up words & numbers slightly) I would also say this is a little bit of a red flag, since we are seeking honesty here. I personally find these two things inconsistent when determining if someone is correct or incorrect in what they say.”

4. Discussion

4.1. How Effective Are Lay Assessors at Distinguishing Between Accurate and Inaccurate Identification Evidence?

4.2. Can Instructions Utilizing Anchors Informed by Psycho-Legal Research Improve the Way That Assessors Utilize Confidence as a Cue in Assessing Identification Evidence?

4.3. Which Characteristics and Beliefs of Assessors Facilitate More Accurate Assessments of Identification Evidence?

4.4. Limitations, Future Directions, and Policy Implications

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Rank | Type | Freq_Tar | Range_Tar | Keyness (Likelihood) |

|---|---|---|---|---|

| 1 | witness | 1140 | 306 | 6301.6 |

| 2 | person | 927 | 332 | 4192.761 |

| 3 | confident | 721 | 343 | 3893.981 |

| 4 | she | 1831 | 367 | 3476.409 |

| 5 | identification | 433 | 164 | 2280.037 |

| 6 | memory | 462 | 244 | 2135.866 |

| 7 | sure | 526 | 293 | 2005.005 |

| 8 | faces | 399 | 224 | 1989.449 |

| 9 | laptop | 339 | 190 | 1848.386 |

| 10 | he | 1647 | 366 | 1792.416 |

| 11 | face | 508 | 254 | 1648.942 |

| 12 | video | 298 | 183 | 1430.945 |

| 13 | confidence | 294 | 142 | 1398.197 |

| 14 | saw | 398 | 226 | 1355.317 |

| 15 | suspect | 267 | 121 | 1292.155 |

| 16 | very | 466 | 266 | 1218.169 |

| 17 | good | 480 | 271 | 1157.195 |

| 18 | not | 964 | 386 | 1123.588 |

| 19 | attention | 297 | 192 | 1052.662 |

| 20 | was | 1359 | 405 | 926.82 |

| 21 | lineup | 146 | 80 | 819.119 |

| 22 | facial | 145 | 104 | 791.056 |

| 23 | correct | 161 | 101 | 706.731 |

| 24 | remembering | 118 | 84 | 637.672 |

| 25 | took | 260 | 155 | 617.297 |

| 26 | also | 388 | 213 | 612.347 |

| 27 | features | 145 | 104 | 581.984 |

| 28 | the | 5086 | 473 | 555.762 |

| 29 | unsure | 97 | 87 | 507.574 |

| 30 | identify | 131 | 94 | 504.008 |

| 31 | strangers | 106 | 76 | 491.567 |

| 32 | paying | 113 | 97 | 459.511 |

| 33 | seemed | 180 | 121 | 442.097 |

| 34 | mentioned | 98 | 63 | 430.707 |

| 35 | choice | 141 | 91 | 425.531 |

| 36 | identified | 114 | 85 | 421.374 |

| 37 | details | 115 | 81 | 413.689 |

| 38 | stated | 92 | 62 | 402.145 |

| 39 | decision | 129 | 93 | 400.775 |

| 40 | clearly | 124 | 97 | 398.493 |

| 41 | clear | 147 | 105 | 371.77 |

| 42 | pretty | 120 | 97 | 369.089 |

| 43 | because | 278 | 162 | 367.048 |

| 44 | camera | 95 | 78 | 332.956 |

| 45 | wasn | 147 | 94 | 312.302 |

| 46 | made | 225 | 140 | 308.554 |

| 47 | is | 960 | 342 | 307.28 |

| 48 | paid | 100 | 78 | 307.129 |

| 49 | admitted | 65 | 45 | 298.451 |

| 49 | seems | 123 | 86 | 296.628 |

| 51 | thief | 59 | 33 | 295.572 |

| 52 | assessment | 90 | 59 | 289.209 |

| 53 | description | 73 | 55 | 285.835 |

| 54 | accuracy | 60 | 34 | 274.993 |

| 55 | t | 497 | 201 | 271.48 |

| 56 | difficult | 98 | 75 | 265.305 |

| 56 | they | 514 | 139 | 257.582 |

| 58 | did | 215 | 147 | 250.928 |

| 59 | stole | 52 | 40 | 245.505 |

| 60 | able | 106 | 79 | 238.902 |

| 61 | identifying | 56 | 40 | 227.187 |

| 62 | based | 123 | 81 | 225.163 |

| 63 | incorrect | 46 | 33 | 224.049 |

| 64 | said | 382 | 199 | 222.622 |

| 65 | testimony | 53 | 26 | 216.667 |

| 66 | guessing | 43 | 35 | 215.853 |

| 67 | defendant | 42 | 29 | 214.881 |

| 68 | that | 1176 | 349 | 213.46 |

| 69 | guy | 83 | 47 | 213.079 |

| 70 | number | 131 | 87 | 210.386 |

| 71 | certain | 98 | 76 | 197.113 |

| 72 | seem | 90 | 73 | 197.02 |

| 73 | believe | 102 | 68 | 194.899 |

| 74 | answers | 52 | 47 | 182.579 |

| 75 | accurate | 50 | 38 | 179.893 |

| 76 | culprit | 30 | 18 | 172.564 |

| 77 | uncertainty | 44 | 27 | 169.584 |

| 78 | really | 110 | 82 | 163.531 |

| 79 | remember | 78 | 64 | 162.83 |

| 80 | explanation | 43 | 29 | 160.804 |

| 81 | hesitant | 28 | 24 | 152.476 |

| 82 | look | 113 | 92 | 147.377 |

| 83 | shape | 54 | 46 | 146.891 |

| 84 | detail | 49 | 44 | 145.586 |

| 85 | picked | 54 | 45 | 141.077 |

| 86 | about | 298 | 194 | 140.435 |

| 87 | saying | 68 | 59 | 139.892 |

| 88 | specific | 68 | 43 | 139.25 |

| 89 | doubt | 55 | 42 | 138.642 |

| 90 | somewhat | 47 | 37 | 138.24 |

| 91 | angle | 33 | 32 | 136.553 |

| 92 | didn | 135 | 89 | 134.857 |

| 93 | close | 81 | 67 | 134.459 |

| 94 | hesitation | 28 | 21 | 130.067 |

| 95 | who | 310 | 191 | 128.851 |

| 96 | recall | 41 | 33 | 128.206 |

| 97 | think | 125 | 93 | 125.346 |

| 98 | her | 495 | 233 | 124.539 |

| 99 | elimination | 26 | 23 | 122.742 |

| 100 | acne | 22 | 18 | 118.434 |

| AC (39,086 Tokens, 309 Files) | | | | CBC (21,718 Tokens, 178 Files) | | | |

|---|---|---|---|---|---|---|---|

| Type | Freq_Tar | Range_Tar | Keyness (Likelihood) | Type | Freq_Tar | Range_Tar | Keyness (Likelihood) |

| t | 367 | 147 | 264.814 | explanation | 27 | 16 | 137.973 |

| difficult | 77 | 59 | 242.784 | consistent | 30 | 16 | 112.259 |

| guy | 61 | 32 | 177.129 | matched | 24 | 14 | 111.666 |

| really | 77 | 60 | 128.419 | shape | 29 | 25 | 106.333 |

| angle | 26 | 25 | 122.812 | specific | 34 | 20 | 94.055 |

| didn | 100 | 66 | 122.368 | somewhat | 23 | 18 | 87.02 |

| picked | 40 | 33 | 118.579 | recollection | 12 | 9 | 85.84 |

| recall | 30 | 23 | 105.271 | attentive | 12 | 8 | 77.964 |

| acne | 17 | 14 | 104.47 | videos | 13 | 10 | 77.142 |

| look | 74 | 61 | 101.962 | individual | 31 | 19 | 76.301 |

| her | 334 | 159 | 99.937 | hesitation | 12 | 8 | 70.188 |

| perpetrator | 16 | 7 | 97.992 | likely | 34 | 18 | 68.179 |

| stranger | 25 | 19 | 96.162 | appeared | 24 | 14 | 66.558 |

| elimination | 18 | 16 | 95.006 | who | 121 | 71 | 64.216 |

| think | 84 | 65 | 91.988 | gave | 33 | 24 | 62.499 |

| close | 52 | 44 | 89.728 | their | 131 | 34 | 59.38 |

| suspects | 18 | 15 | 82.969 | level | 31 | 17 | 57.677 |

| picture | 36 | 32 | 81.66 | average | 24 | 19 | 57.04 |

| lot | 50 | 43 | 80.641 | witnesses | 11 | 10 | 56.469 |

| judgement | 14 | 13 | 80.426 | match | 15 | 13 | 55.145 |
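The "Keyness (Likelihood)" columns above report a log-likelihood statistic comparing how often a word type occurs in a target corpus against a reference corpus. As a rough illustration only, the sketch below implements the common two-term log-likelihood form. The exact variant and the reference corpus behind the tabled values are not stated in this section, so the reference counts used here are hypothetical and the result is not expected to reproduce any tabled value. The function name `log_likelihood_keyness` is likewise an assumption made for this example.

```python
import math


def log_likelihood_keyness(freq_target, size_target, freq_ref, size_ref):
    """Two-term log-likelihood keyness for a single word type.

    freq_* are raw counts of the word; size_* are total token counts.
    """
    total_freq = freq_target + freq_ref
    total_size = size_target + size_ref
    # Expected counts if the word were equally frequent in both corpora.
    expected_target = size_target * total_freq / total_size
    expected_ref = size_ref * total_freq / total_size
    ll = 0.0
    for observed, expected in ((freq_target, expected_target),
                               (freq_ref, expected_ref)):
        if observed > 0:  # a zero count contributes nothing to the sum
            ll += observed * math.log(observed / expected)
    return 2.0 * ll


# Target counts taken from the AC columns above ("difficult": 77 of 39,086 tokens).
# The reference counts are HYPOTHETICAL placeholders, not values from the study.
keyness = log_likelihood_keyness(
    freq_target=77, size_target=39_086,
    freq_ref=150, size_ref=1_000_000,
)
print(f"keyness for 'difficult' (hypothetical reference): {keyness:.3f}")
```

A word with the same relative frequency in both corpora scores zero, and the score grows as the two relative frequencies diverge, which is why high-frequency function words can still rank low on keyness.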

References

- Alter, A. L., & Oppenheimer, D. M. (2009). Uniting the tribes of fluency to form a metacognitive nation. Personality and Social Psychology Review, 13(3), 219–235.

- Anthony, L. (2024). AntConc (Version 4.3.1) [Computer software]. Waseda University. Available online: https://www.laurenceanthony.net/software (accessed on 20 June 2025).

- Baker, P. (2010). Corpus methods in linguistics. In L. Litosseliti (Ed.), Research methods in linguistics (pp. 93–113). Continuum International Publishing Group.

- Baron-Cohen, S., Wheelwright, S., Skinner, R., Martin, J., & Clubley, E. (2001). The autism-spectrum quotient (AQ): Evidence from Asperger syndrome/high-functioning autism, males and females, scientists and mathematicians. Journal of Autism and Developmental Disorders, 31, 5–17.

- Bell, B. E., & Loftus, E. F. (1988). Degree of detail of eyewitness testimony and mock juror judgments. Journal of Applied Social Psychology, 18(14), 1171–1192.

- Benton, T. R., Ross, D. F., Bradshaw, E., Thomas, W. N., & Bradshaw, G. S. (2006). Eyewitness memory is still not common sense: Comparing jurors, judges and law enforcement to eyewitness experts. Applied Cognitive Psychology, 20(1), 115–129.

- Bernstein, D. M., & Loftus, E. F. (2009). How to tell if a particular memory is true or false. Perspectives on Psychological Science, 4(4), 370–374.

- Bogliacino, F., Grimalda, G., Ortoleva, P., & Ring, P. (2017). Exposure to and recall of violence reduce short-term memory and cognitive control. Proceedings of the National Academy of Sciences of the United States of America, 114(32), 8505–8510.

- Bruer, K., & Pozzulo, J. D. (2014). Influence of eyewitness age and recall error on mock juror decision-making. Legal and Criminological Psychology, 19(2), 332–348.

- Canadian Registry of Wrongful Convictions. (n.d.). Available online: https://www.wrongfulconvictions.ca/ (accessed on 20 June 2025).

- Cutler, B. L., Penrod, S. D., & Dexter, H. R. (1990). Juror sensitivity to eyewitness identification evidence. Law and Human Behavior, 14(2), 185–191.

- DePaulo, B. M., Lindsay, J. J., Malone, B. E., Muhlenbruck, L., Charlton, K., & Cooper, H. (2003). Cues to deception. Psychological Bulletin, 129(1), 74–118.

- Devine, D. J. (2012). Jury decision making: The state of the science. NYU Press.

- Dillon, M. K., Jones, A. M., Bergold, A. N., Hui, C. Y., & Penrod, S. D. (2017). Henderson instructions: Do they enhance evidence evaluation? Journal of Forensic Psychology Research and Practice, 17(1), 1–24.

- European Registry of Exonerations. (n.d.). Available online: https://www.registryofexonerations.eu/ (accessed on 20 June 2025).

- Evidence Based Justice Lab Miscarriages of Justice Registry. (n.d.). Available online: https://evidencebasedjustice.exeter.ac.uk/miscarriages-of-justice-registry/ (accessed on 20 June 2025).

- Fisher, R. P., Vrij, A., & Leins, D. A. (2012). Does testimonial inconsistency indicate memory inaccuracy and deception? Beliefs, empirical research, and theory. In Applied issues in investigative interviewing, eyewitness memory, and credibility assessment (pp. 173–189). Springer.

- Frank, D. J., & Kuhlmann, B. G. (2017). More than just beliefs: Experience and beliefs jointly contribute to volume effects on metacognitive judgments. Journal of Experimental Psychology: Learning, Memory, and Cognition, 43(5), 680–693.

- Fraser, B. M., Mackovichova, S., Thompson, L. E., Pozzulo, J. D., Hanna, H. R., & Furat, H. (2022). The influence of inconsistency in eyewitness reports, eyewitness age and crime type on mock juror decision-making. Journal of Police and Criminal Psychology, 37(2), 351–364.

- Frith, U., & Happé, F. (1994). Autism: Beyond “theory of mind”. Cognition, 50(1–3), 115–132.

- Hans, V. P., Reed, K., Reyna, V. F., Garavito, D., & Helm, R. K. (2022). Guiding jurors’ damage award decisions: Experimental investigations of approaches based on theory and practice. Psychology, Public Policy, and Law, 28(2), 188–212.

- Helm, R. K. (2021a). Evaluating witness testimony: Juror knowledge, false memory, and the utility of evidence-based directions. The International Journal of Evidence & Proof, 25(4), 264–285.

- Helm, R. K. (2021b). The anatomy of ‘factual error’ miscarriages of justice in England and Wales: A fifty-year review. Criminal Law Review, 5, 351–373.

- Helm, R. K. (2022). Wrongful conviction in England and Wales: An assessment of successful appeals and key contributors. The Wrongful Conviction Law Review, 3(3), 196–217.

- Helm, R. K. (2024). How juries work: And how they could work better. Oxford University Press.

- Helm, R. K., & Growns, B. (2023). Predicting and projecting memory: Error and bias in metacognitive judgements underlying testimony evaluation. Legal and Criminological Psychology, 28(1), 15–33.

- Helm, R. K., & Spearing, E. R. (2025). The impact of race on assessor ability to differentiate accurate and inaccurate witness identifications: Areas of vulnerability, bias, and discriminatory outcomes. Psychology, Public Policy, and Law.

- Howe, M. L., Knott, L. M., & Conway, M. A. (2017). Memory and miscarriages of justice. Psychology Press.

- Hudson, C. A., Vrij, A., Akehurst, L., Hope, L., & Satchell, L. P. (2020). Veracity is in the eye of the beholder: A lens model examination of consistency and deception. Applied Cognitive Psychology, 34(5), 996–1004.

- John Jenkins v Her Majesty’s Advocate. (2011). HCJAC 86.

- Jones, A. M., Bergold, A. N., Dillon, M. K., & Penrod, S. D. (2017). Comparing the effectiveness of Henderson instructions and expert testimony: Which safeguard improves jurors’ evaluations of eyewitness evidence? Journal of Experimental Criminology, 13, 29–52.

- Jones, A. M., Bergold, A. N., & Penrod, S. (2020). Improving juror sensitivity to specific eyewitness factors: Judicial instructions fail the test. Psychiatry, Psychology and Law, 27(3), 366–385.

- Jones, A. M., & Penrod, S. (2018). Improving the effectiveness of the Henderson instruction safeguard against unreliable eyewitness identification. Psychology, Crime & Law, 24(2), 177–193.

- Kassin, S. M., Tubb, V. A., Hosch, H. M., & Memon, A. (2001). On the “general acceptance” of eyewitness testimony research: A new survey of the experts. American Psychologist, 56(5), 405–416.

- Lavis, T., & Brewer, N. (2017). Effects of a proven error on evaluations of witness testimony. Law and Human Behavior, 41(3), 314–323.

- Lecci, L., & Myers, B. (2008). Individual differences in attitudes relevant to juror decision making: Development and validation of the pretrial juror attitude questionnaire (PJAQ). Journal of Applied Social Psychology, 38(8), 2010–2038.

- Lindsay, D. S., Nilsen, E., & Read, J. D. (2000). Witnessing-condition heterogeneity and witnesses’ versus investigators’ confidence in the accuracy of witnesses’ identification decisions. Law and Human Behavior, 24, 685–697.

- Lindsay, R. C. L., Lim, R., Marando, L., & Cully, D. (1986). Mock-juror evaluations of eyewitness testimony: A test of metamemory hypotheses. Journal of Applied Social Psychology, 16(5), 447–459.

- Loftus, E. F., & Greenspan, R. L. (2017). If I’m certain, is it true? Accuracy and confidence in eyewitness memory. Psychological Science in the Public Interest, 18(1), 1–2.

- Mann, S., Vrij, A., & Bull, R. (2004). Detecting true lies: Police officers’ ability to detect suspects’ lies. Journal of Applied Psychology, 89(1), 137–149.

- Martire, K. A., & Kemp, R. I. (2009). The impact of eyewitness expert evidence and judicial instruction on juror ability to evaluate eyewitness testimony. Law and Human Behavior, 33, 225–236.

- McKimmie, B. M., Masser, B. M., & Bongiorno, R. (2014). Looking shifty but telling the truth: The effect of witness demeanour on mock jurors’ perceptions. Psychiatry, Psychology and Law, 21(2), 297–310.

- National Registry of Exonerations. (n.d.). Available online: https://exonerationregistry.org/ (accessed on 20 June 2025).

- New Jersey v Henderson. (2011). 27 A.3d 872.

- O’Neill, M. C., & Pozzulo, J. D. (2012). Jurors’ judgments across multiple identifications and descriptions and descriptor inconsistencies. American Journal of Forensic Psychology, 30(2), 39–66.

- Potts, A., & Baker, P. (2012). Does semantic tagging identify cultural change in British and American English? International Journal of Corpus Linguistics, 17(3), 295–324.

- Pozzulo, J. D., Crescini, C., & Panton, T. (2008). Does methodology matter in eyewitness identification research?: The effect of live versus video exposure on eyewitness identification accuracy. International Journal of Law and Psychiatry, 31(5), 430–437.

- Ramirez, G., Zemba, D., & Geiselman, R. E. (1996). Judges’ cautionary instructions on eyewitness testimony. American Journal of Forensic Psychology, 14(1), 31–66.

- Reyna, V. F. (2012). A new intuitionism: Meaning, memory, and development in fuzzy-trace theory. Judgment and Decision Making, 7(3), 332–359.

- Reyna, V. F., & Brainerd, C. J. (2011). Dual processes in decision making and developmental neuroscience: A fuzzy-trace model. Developmental Review, 31(2–3), 180–206.

- Rubínová, E., Fitzgerald, R. J., Juncu, S., Ribbers, E., Hope, L., & Sauer, J. D. (2021). Live presentation for eyewitness identification is not superior to photo or video presentation. Journal of Applied Research in Memory and Cognition, 10(1), 167–176.

- R v Turnbull. (1977). Q.B. 224.

- Saraiva, R. B., van Boeijen, I. M., Hope, L., Horselenberg, R., Sauerland, M., & van Koppen, P. J. (2019). Development and validation of the Eyewitness Metamemory Scale. Applied Cognitive Psychology, 33(5), 964–973.

- Semmler, C., Brewer, N., & Douglass, A. B. (2012). Jurors believe eyewitnesses. In B. L. Cutler (Ed.), Conviction of the innocent: Lessons from psychological research (pp. 185–209). American Psychological Association.

- Simons, D. J., & Chabris, C. F. (2011). What people believe about how memory works: A representative survey of the US population. PLoS ONE, 6(8), e22757.

- Simons, D. J., & Chabris, C. F. (2012). Common (mis)beliefs about memory: A replication and comparison of telephone and Mechanical Turk survey methods. PLoS ONE, 7(12), e51876.

- Slane, C. R., & Dodson, C. S. (2022). Eyewitness confidence and mock juror decisions of guilt: A meta-analytic review. Law and Human Behavior, 46(1), 45–66.

- United States v Telfaire. (1972). 469 F.2d 552.

- Vrij, A., Hartwig, M., & Granhag, P. A. (2019). Reading lies: Nonverbal communication and deception. Annual Review of Psychology, 70(1), 295–317.

- Weber, N., & Brewer, N. (2008). Eyewitness recall: Regulation of grain size and the role of confidence. Journal of Experimental Psychology: Applied, 14(1), 50–60.

- Wells, G. L., & Bradfield, A. L. (1998). “Good, you identified the suspect”: Feedback to eyewitnesses distorts their reports of the witnessing experience. Journal of Applied Psychology, 83(3), 360–376.

- Wells, G. L., Kovera, M. B., Douglass, A. B., Brewer, N., Meissner, C. A., & Wixted, J. T. (2020). Policy and procedure recommendations for the collection and preservation of eyewitness identification evidence. Law and Human Behavior, 44(1), 3–36.

- Wixted, J. T., & Wells, G. L. (2017). The relationship between eyewitness confidence and identification accuracy: A new synthesis. Psychological Science in the Public Interest, 18(1), 10–65.

| | All Identifications | | Stimuli Identifications | |

|---|---|---|---|---|

| | Set A | Set B | Set A | Set B |

| Confidence in Correct Identifications (SD) | 85.81 (15.87) | 76.11 (22.59) | 84.00 (10.75) | 77.00 (25.84) |

| Confidence in Incorrect Identifications (SD) | 64.81 (18.05) | 56.88 (25.75) | 58.00 (20.44) | 53.00 (22.14) |

| Statement | % Participants (n = 487) | % of Experts (Kassin et al., 2001; n = 64) | Endorsement of Statement Significantly Associated with Higher Accuracy in Identification Assessments |

|---|---|---|---|

| Very high levels of stress impair the accuracy of eyewitness testimony. | 81.5 | 60 | No |

| The presence of a weapon impairs an eyewitness’s ability to accurately identify the perpetrator’s face. | 62.2 | 87 | No |

| The use of a one-person show-up instead of a full lineup increases the risk of misidentification. | 59.1 | 74 | No |

| The more members of a lineup resemble the suspect, the higher is the likelihood that identification of the suspect is accurate. | 42.7 | 70 | No |

| Police instructions can affect an eyewitness’s willingness to make an identification. | 80.3 | 98 | No |

| The less time an eyewitness has to observe an event, the less well he or she will remember it. | 65.1 | 81 | No |

| The rate of memory loss for an event is greatest right after the event and then levels off over time. | 50.7 | 83 | No |

| An eyewitness’s confidence is not a good predictor of his or her identification accuracy. | 50.7 | 87 | No |

| Eyewitness testimony about an event often reflects not only what they actually saw but also information they obtained later on. | 75.8 | 94 | No |

| Judgements of color made under monochromatic light (e.g., an orange street light) are highly unreliable. | 51.7 | 63 | No |

| An eyewitness’s testimony about an event can be affected by how the questions put to that witness are worded. | 81.7 | 98 | No |

| Eyewitnesses sometimes identify as a culprit someone they have seen in another situation or context. | 71.3 | 81 | Yes (M_true = 2.86, SD = 0.93; M_other = 2.66, SD = 0.95; f(486) = 4.763, p = 0.030) |

| Police officers and other trained observers are no more accurate as eyewitnesses than is the average person. | 49.9 | 39 | No |

| Hypnosis increases the accuracy of an eyewitness’s reported memory. | 41.3 | 45 | No |

| Hypnosis increases suggestibility to leading and misleading questions. | 54.6 | 91 | No |

| An eyewitness’s perception and memory for an event may be affected by his or her attitudes and expectations. | 79.5 | 92 | No |

| Eyewitnesses have more difficulty remembering violent than non-violent events. | 47.6 | 36 | No |

| Eyewitnesses are more accurate when identifying members of their own race than members of other races. | 52.8 | 90 | No |

| An eyewitness’s confidence can be influenced by factors that are unrelated to identification accuracy. | 79.5 | 87 | Yes (M_true = 2.87, SD = 0.96; M_other = 2.54, SD = 0.92; f(486) = 10.033, p = 0.002) |

| Alcoholic intoxication impairs an eyewitness’s later ability to recall persons and events. | 83 | 90 | No |

| Exposure to mug shots of a suspect increases the likelihood that the witness will later choose that suspect in a lineup. | 69.4 | 95 | No |

| Traumatic experiences can be repressed for many years and then recovered. | 82.3 | 22 | No |

| Memories people recover from their own childhood are often false or distorted in some way. | 51.7 | 68 | No |

| It is possible to reliably differentiate between true and false memories. | 55.6 | 32 | No |

| Young children are less accurate as witnesses than are adults. | 43.9 | 70 | No |

| Young children are more vulnerable than adults to interviewer suggestion, peer pressures and other social influences. | 76.6 | 94 | Yes (M_true = 2.86, SD = 0.96; M_other = 2.62, SD = 0.87; f(486) = 5.517, p = 0.019) |

| The more members of a lineup resemble a witness’s description of the culprit, the more accurate an identification of the suspect is likely to be. | 49.3 | 71 | No |

| Witnesses are more likely to misidentify someone by making a relative judgement when presented with a simultaneous (as opposed to sequential) lineup. A simultaneous lineup is one where all members in the lineup are presented to the witness at the same time, and the witness is asked whether the culprit/suspect is in the lineup. In a sequential lineup, the witness is presented each member in the lineup one at a time and the witness is asked whether that person is the culprit/suspect. | 55.9 | 81 | No |

| Elderly eyewitnesses are less accurate than are younger adults. | 52.2 | 50 | No |

| The more quickly a witness makes an identification upon seeing the lineup, the more accurate he or she is likely to be. | 66.5 | 40 | No |

| AC (39,086 Tokens, 309 Files) | | | CBC (21,718 Tokens, 178 Files) | | |

|---|---|---|---|---|---|

| Type | Freq | Range | Type | Freq | Range |

| wasn | 112 | 74 | based | 62 | 38 |

| only | 103 | 68 | details | 58 | 41 |

| paying | 89 | 76 | identified | 54 | 41 |

| says | 87 | 41 | able | 43 | 32 |

| difficult | 77 | 59 | assessment | 42 | 28 |

| really | 77 | 60 | even | 42 | 31 |

| more | 76 | 54 | no | 40 | 31 |

| paid | 71 | 55 | believe | 39 | 29 |

| strangers | 71 | 56 | seem | 38 | 31 |

| camera | 68 | 58 | how | 36 | 26 |

| looked | 68 | 52 | much | 36 | 32 |

| Rank | Collocate | FreqR | Range | Likelihood |

|---|---|---|---|---|

| 1 | he | 33 | 19 | 25.481 |

| 2 | she | 28 | 19 | 10.686 |

| 3 | that | 24 | 16 | 18.996 |

| 4 | is | 23 | 17 | 22.793 |

| 4 | the | 23 | 14 | 5.289 |

| 6 | not | 21 | 19 | 17.564 |

| 7 | it | 12 | 11 | 11.367 |

| 8 | was | 11 | 11 | 0.125 |

| 9 | a | 10 | 9 | 0.792 |

| 10 | saw | 9 | 6 | 8.002 |

| 10 | difficult | 9 | 9 | 26.37 |

| 10 | to | 9 | 8 | 0.004 |

| 13 | sure | 8 | 7 | 3.378 |

| 14 | good | 6 | 6 | 1.141 |

| 14 | confident | 6 | 5 | 0.056 |

| 16 | memory | 5 | 5 | 0.67 |

| 16 | face | 5 | 3 | 0.366 |

| 16 | very | 5 | 4 | 0.461 |

| 16 | has | 5 | 5 | 3.536 |

| 16 | well | 5 | 4 | 7.475 |

| Rank | Collocate | Freq | Range | Likelihood |

|---|---|---|---|---|

| 1 | seem | 24 | 22 | 68.846 |

| 2 | sure | 52 | 45 | 50.566 |

| 3 | paying | 25 | 23 | 49.183 |

| 4 | have | 32 | 29 | 45.038 |

| 5 | attention | 37 | 33 | 43.325 |

| 6 | See | 17 | 17 | 34.646 |

| 7 | Any | 12 | 11 | 33.502 |

| 8 | At | 33 | 28 | 28.64 |

| 9 | witness | 8 | 8 | 27.694 |

| 10 | good | 40 | 36 | 26.537 |

| 11 | remember | 14 | 12 | 26.463 |

| 12 | much | 14 | 14 | 24.706 |

| 13 | Not | 8 | 8 | 24.183 |

| 14 | Was | 15 | 15 | 23.095 |

| 15 | give | 9 | 8 | 22.275 |

| 16 | The | 97 | 68 | 22.11 |

| 17 | sound | 7 | 6 | 20.173 |

| 18 | Pay | 10 | 10 | 18.953 |

| 19 | confident | 46 | 39 | 18.527 |

| 20 | great | 9 | 7 | 17.193 |

| 21 | think | 14 | 14 | 15.73 |

| 22 | fully | 6 | 6 | 15.555 |

| 23 | Get | 7 | 5 | 15.248 |

| 24 | really | 13 | 12 | 14.85 |

| Collocate | FreqLR | FreqL | FreqR | Range | Likelihood |

|---|---|---|---|---|---|

| the | 58 | 22 | 36 | 30 | 1.042 |

| and | 19 | 10 | 9 | 14 | 1.053 |

| witness | 14 | 9 | 5 | 12 | 0.464 |

| of | 14 | 4 | 10 | 13 | 0.176 |

| they | 13 | 9 | 4 | 9 | 9.88 |

| specific | 13 | 13 | 0 | 9 | 45.79 |

| to | 12 | 8 | 4 | 11 | 0.009 |

| about | 12 | 1 | 11 | 10 | 16.27 |

| that | 12 | 2 | 10 | 11 | 0.162 |

| person | 11 | 2 | 9 | 10 | 0.174 |

| suspect | 10 | 2 | 8 | 6 | 12.4 |

| she | 9 | 2 | 7 | 6 | 2.478 |

| gave | 7 | 7 | 0 | 5 | 16.997 |

| he | 7 | 3 | 4 | 4 | 5.292 |

| s | 7 | 0 | 7 | 5 | 2.929 |

| on | 6 | 4 | 2 | 6 | 0.684 |

| their | 6 | 5 | 1 | 6 | 1.503 |

| as | 6 | 3 | 3 | 5 | 2.976 |

| face | 6 | 3 | 3 | 6 | 0.459 |

| facial | 6 | 4 | 2 | 6 | 7.765 |

| with | 6 | 1 | 5 | 6 | 1.427 |

| i | 6 | 0 | 6 | 5 | 0.001 |

| was | 5 | 3 | 2 | 5 | 5.992 |

| a | 5 | 3 | 2 | 4 | 2.965 |

| not | 5 | 4 | 1 | 5 | 1.514 |

| provided | 5 | 4 | 1 | 4 | 12.742 |

| matched | 5 | 1 | 4 | 4 | 11.968 |

| confident | 5 | 3 | 2 | 4 | 0.242 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Helm, R.K.; Chen, Y. Social Metamemory Judgments in the Legal Context: Examining Judgments About the Memory of Others. Behav. Sci. 2025, 15, 878. https://doi.org/10.3390/bs15070878
