A Reliability Generalization Meta-Analysis of the Antisocial Process Screening Device

Abstract

1. Introduction

1.1. The Antisocial Process Screening Device (APSD)

1.2. Internal Consistency Reliability of the APSD Scores

1.3. The Current Study

2. Method

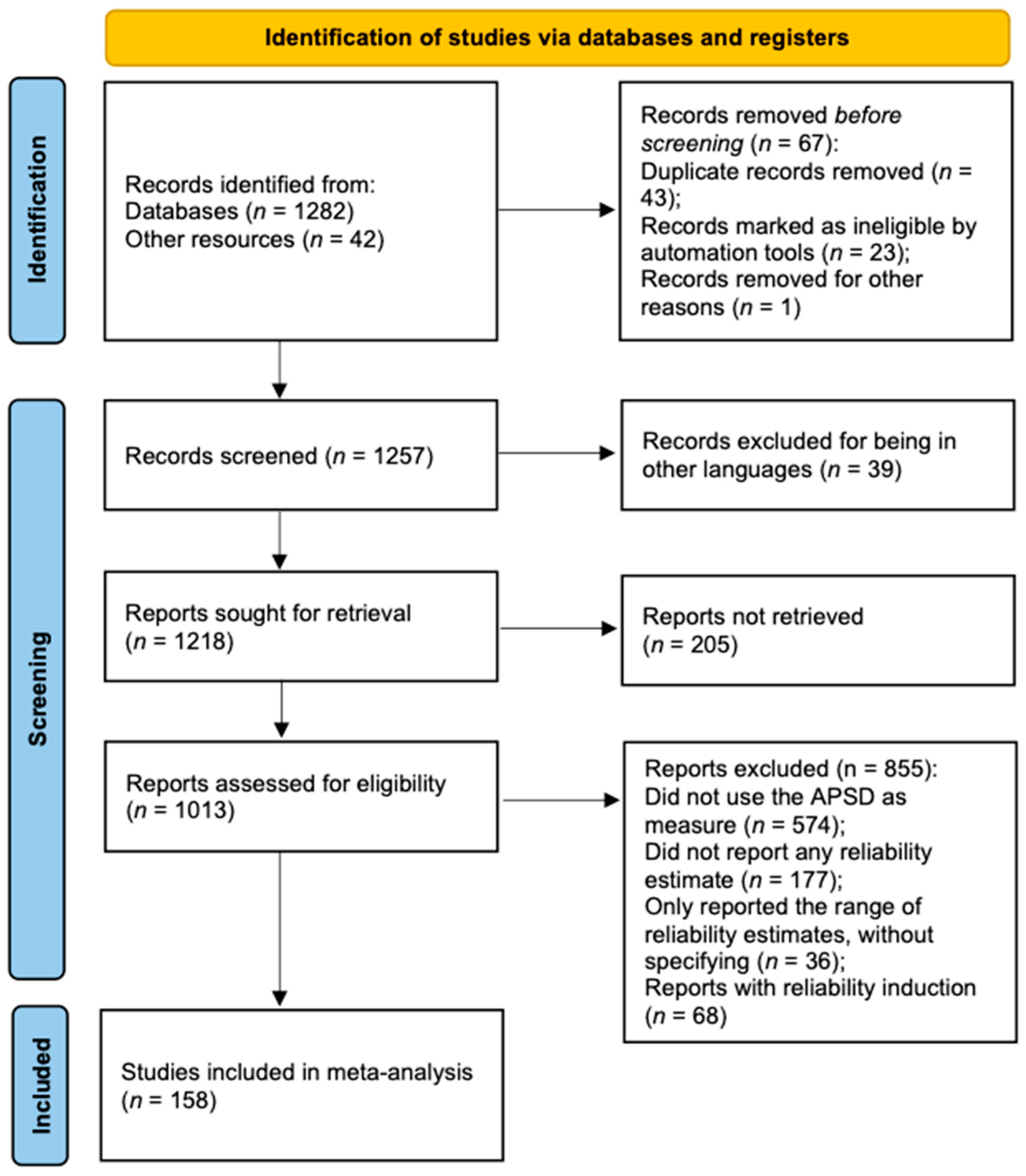

2.1. Literature Search and Selection

2.2. Data Extraction and Coding

2.3. Analytical Strategies

3. Results

3.1. Description of the Data

3.2. Mean Reliability and Heterogeneity

3.3. Moderator Analysis

3.4. Explanatory Models

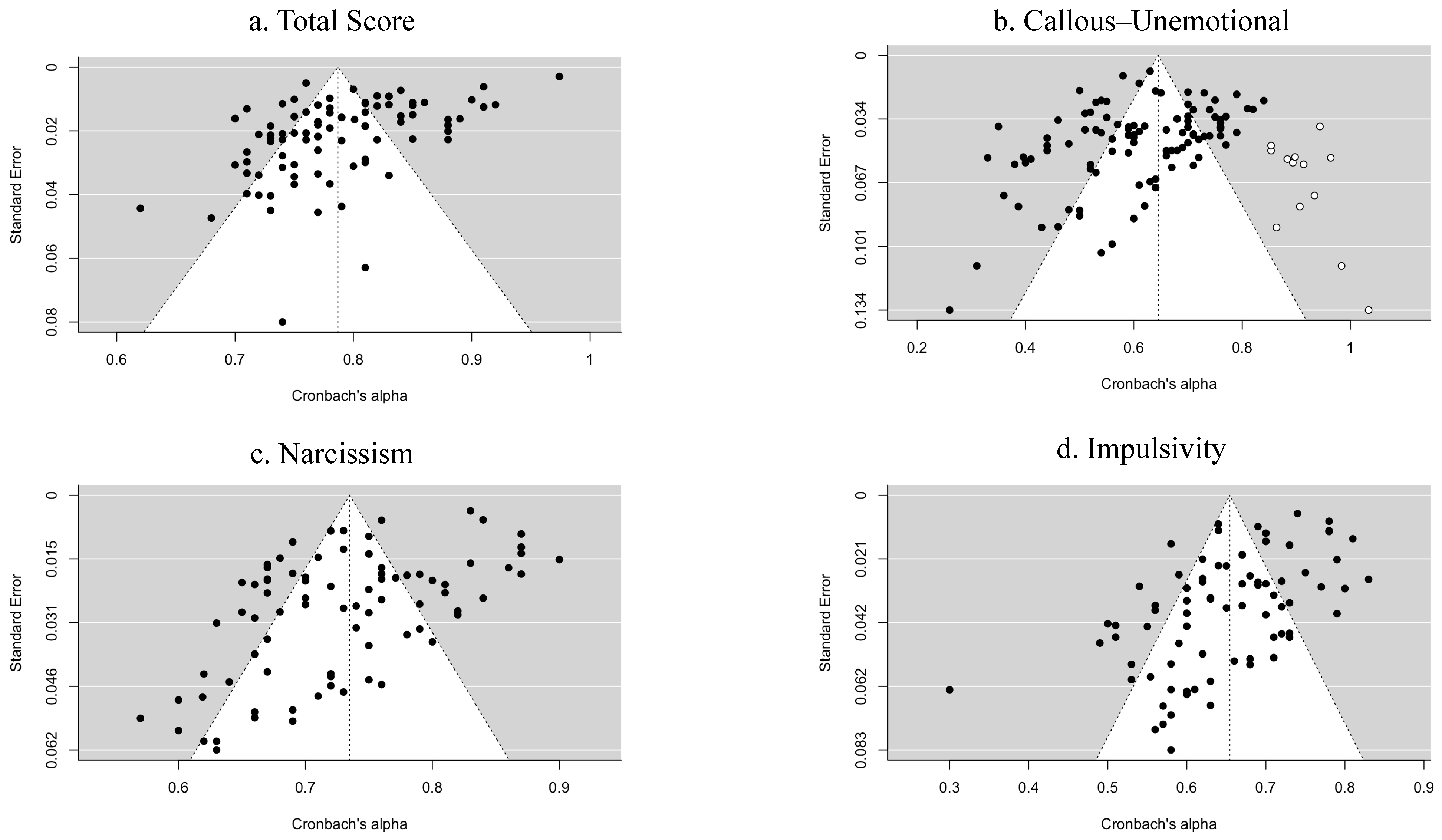

3.5. Publication Bias

4. Discussion

4.1. Moderators of the Coefficient Alphas

4.2. Publication Bias

4.3. Recommendations, Limitations, and Future Directions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

1. Emails were sent to the corresponding authors of articles that had missing values. Because some authors did not reply, this yielded a dataset of 88 studies that included missing values.


| | k | α+ | 95% CI | Tau² | Q | I² (%) | H² |
|---|---|---|---|---|---|---|---|
| Total Score | 97 | 0.79 | [0.77, 0.80] | 0.003 | 4106.680 *** | 94.65 | 18.68 |
| Total Score a | 96 | 0.78 | [0.77, 0.80] | 0.003 | 1423.179 *** | 92.72 | 13.74 |
| Callous–Unemotional | 104 | 0.62 | [0.59, 0.64] | 0.012 | 1007.952 *** | 92.22 | 12.86 |
| Narcissism | 82 | 0.73 | [0.72, 0.75] | 0.004 | 1379.95 *** | 94.16 | 17.12 |
| Impulsivity | 78 | 0.65 | [0.63, 0.67] | 0.006 | 848.965 *** | 92.52 | 13.37 |
| Impulsivity b | 77 | 0.66 | [0.64, 0.68] | 0.005 | 808.883 *** | 91.52 | 11.79 |
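
As a quick consistency check on the heterogeneity statistics above, the following sketch recomputes I² from the reported H² values. It assumes the standard identity I² = 100 × (H² − 1) / H². The source does not state how the two indices were obtained, so both the identity and the helper name `i2_from_h2` are assumptions made for illustration only.

```python
# Reported (H², I² in percent) pairs, copied from the heterogeneity table above.
reported = {
    "Total Score": (18.68, 94.65),
    "Total Score a": (13.74, 92.72),
    "Callous-Unemotional": (12.86, 92.22),
    "Narcissism": (17.12, 94.16),
    "Impulsivity": (13.37, 92.52),
    "Impulsivity b": (11.79, 91.52),
}


def i2_from_h2(h2: float) -> float:
    """Return I² (percent) implied by H², assuming I² = 100 * (H² - 1) / H²."""
    return 100.0 * (h2 - 1.0) / h2


for label, (h2, i2) in reported.items():
    implied = i2_from_h2(h2)
    # Print the implied and reported values side by side for comparison.
    print(f"{label:22s} H2={h2:6.2f}  implied I2={implied:6.2f}  reported I2={i2:6.2f}")
```

Under this assumption, the implied values should agree with the reported I² column up to rounding of the two-decimal H² entries.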

| | Total Scale | | | Callous–Unemotional | | | Narcissism | | | Impulsivity | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | k | α+ | 95% CI | k | α+ | 95% CI | k | α+ | 95% CI | k | α+ | 95% CI |
| Administration Format | | | | | | | | | | | | |
| APSD-PR | 15 | 0.81 | [0.78, 0.84] | 31 | 0.62 | [0.58, 0.65] | 20 | 0.75 | [0.73, 0.78] | 21 | 0.69 | [0.66, 0.72] |
| APSD-SR | 70 | 0.77 | [0.76, 0.78] | 33 | 0.51 | [0.48, 0.55] | 44 | 0.70 | [0.69, 0.71] | 42 | 0.62 | [0.60, 0.64] |
| APSD-TR | 5 | 0.90 | [0.88, 0.92] | 15 | 0.73 | [0.69, 0.77] | 9 | 0.85 | [0.83, 0.87] | 7 | 0.77 | [0.74, 0.81] |
| APSD-OR | 7 | 0.85 | [0.79, 0.90] | 25 | 0.68 | [0.65, 0.71] | 9 | 0.76 | [0.70, 0.81] | 8 | 0.65 | [0.56, 0.74] |
| Country | | | | | | | | | | | | |
| United States | 43 | 0.79 | [0.77, 0.81] | 58 | 0 | [0.59, 0.65] | 44 | 0.74 | [0.72, 0.76] | 44 | 0.65 | [0.63, 0.68] |
| Other | 54 | 0.78 | [0.77, 0.80] | 46 | 0.61 | [0.57, 0.65] | 38 | 0.73 | [0.71, 0.75] | 34 | 0.66 | [0.62, 0.69] |
| Scale Language | | | | | | | | | | | | |
| English | 50 | 0.79 | [0.77, 0.81] | 74 | 0.62 | [0.59, 0.65] | 58 | 0.74 | [0.72, 0.76] | 58 | 0.65 | [0.63, 0.68] |
| Non-English | 37 | 0.79 | [0.77, 0.80] | 21 | 0.59 | [0.54, 0.65] | 14 | 0.73 | [0.69, 0.76] | 12 | 0.66 | [0.61, 0.70] |
| Sample Type | | | | | | | | | | | | |
| Clinical Sample | 6 | 0.82 | [0.77, 0.87] | 20 | 0.64 | [0.58, 0.70] | 10 | 0.77 | [0.73, 0.82] | 12 | 0.67 | [0.63, 0.72] |
| Community Sample | 45 | 0.78 | [0.77, 0.80] | 52 | 0.61 | [0.57, 0.64] | 42 | 0.73 | [0.70, 0.75] | 40 | 0.66 | [0.63, 0.69] |
| Detained Sample | 32 | 0.78 | [0.75, 0.80] | 15 | 0.56 | [0.49, 0.63] | 21 | 0.72 | [0.69, 0.75] | 20 | 0.64 | [0.61, 0.67] |
| Mixed Sample | 13 | 0.79 | [0.77, 0.82] | 17 | 0.67 | [0.63, 0.71] | 6 | 0.76 | [0.71, 0.80] | 5 | 0.62 | [0.52, 0.71] |

| | Total Score | | | Callous–Unemotional | | | Narcissism | | | Impulsivity | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | R² | QB | p | R² | QB | p | R² | QB | p | R² | QB | p |
| Administration Format | 0.439 | 60.412 | <0.001 | 0.474 | 71.297 | <0.001 | 0.567 | 76.635 | <0.001 | 0.445 | 40.292 | <0.001 |
| Country | 0.005 | 0.327 | 0.567 | 0.001 | 0.287 | 0.592 | 0.006 | 0.352 | 0.553 | 0.001 | 0.020 | 0.888 |
| Language | 0.000 | 0.001 | 0.979 | 0.01 | 1.080 | 0.299 | 0.009 | 0.275 | 0.600 | 0.000 | 0.005 | 0.946 |
| Sample Type | 0.026 | 2.483 | 0.478 | 0.071 | 8.090 | 0.044 | 0.059 | 5.161 | 0.160 | 0.032 | 2.047 | 0.563 |
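
To illustrate how the p values in the moderator table relate to QB, the sketch below treats QB as a chi-square statistic with degrees of freedom equal to the number of moderator categories minus one. That choice of reference distribution is an assumption, as are the category counts (two countries, four sample types), which are read off the subgroup table rather than stated in the source. The helper `chi2_sf` is hypothetical and only covers df = 1 and df = 3, for which closed forms exist.

```python
import math


def chi2_sf(x: float, df: int) -> float:
    """Upper-tail chi-square probability for df = 1 or df = 3 (closed forms)."""
    if df == 1:
        return math.erfc(math.sqrt(x / 2.0))
    if df == 3:
        tail = math.erfc(math.sqrt(x / 2.0))
        return tail + math.sqrt(2.0 * x / math.pi) * math.exp(-x / 2.0)
    raise ValueError("this sketch only implements df = 1 and df = 3")


# (moderator, subscale, QB, assumed df, reported p), copied from the table above.
rows = [
    ("Country", "Total Score", 0.327, 1, 0.567),
    ("Country", "Callous-Unemotional", 0.287, 1, 0.592),
    ("Sample Type", "Total Score", 2.483, 3, 0.478),
    ("Sample Type", "Callous-Unemotional", 8.090, 3, 0.044),
    ("Sample Type", "Narcissism", 5.161, 3, 0.160),
]

for moderator, subscale, qb, df, p_reported in rows:
    p_implied = chi2_sf(qb, df)
    # Compare the p value implied by the assumed chi-square reference with the table.
    print(f"{moderator:12s} {subscale:20s} QB={qb:6.3f} df={df} "
          f"implied p={p_implied:.3f} reported p={p_reported:.3f}")
```

For the rows listed, the implied values should match the reported p values to three decimals, which is consistent with (but does not prove) the assumed degrees of freedom.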

| | k | b | t | p | R² | QE |
|---|---|---|---|---|---|---|
| Total Score | | | | | | |
| Age Mean | 87 | −0.005 | −2.614 | 0.009 | 0.085 | 1023.132 *** |
| Age SD | 86 | 0.005 | 0.797 | 0.425 | 0.008 | 1155.398 *** |
| Scores Mean | 47 | −0.003 | −1.692 | 0.091 | 0.065 | 567.180 *** |
| Scores SD | 46 | 0.023 | 3.024 | 0.002 | 0.207 | 448.175 *** |
| Male % of sample | 91 | 0.000 | −0.049 | 0.961 | 0.000 | 3011.995 *** |
| Sample Size | 97 | 0.000 | −1.282 | 0.200 | 0.025 | 3462.726 *** |
| Callous–Unemotional | | | | | | |
| Age Mean | 95 | −0.009 | −2.756 | 0.006 | 0.084 | 740.735 *** |
| Age SD | 94 | −0.003 | −0.194 | 0.846 | 0.002 | 751.111 *** |
| Scores Mean | 64 | 0.005 | 0.588 | 0.556 | 0.002 | 572.123 *** |
| Scores SD | 61 | 0.040 | 1.899 | 0.058 | 0.048 | 550.730 *** |
| Male % of sample | 98 | 0.001 | 1.563 | 0.118 | 0.021 | 891.213 *** |
| Sample Size | 104 | 0.000 | −1.440 | 0.150 | 0.030 | 963.491 *** |
| Narcissism | | | | | | |
| Age Mean | 73 | −0.009 | −4.336 | 0.000 | 0.237 | 672.882 *** |
| Age SD | 73 | 0.015 | 1.632 | 0.103 | 0.034 | 1172.357 *** |
| Scores Mean | 52 | −0.006 | −0.942 | 0.346 | 0.024 | 643.070 *** |
| Scores SD | 52 | 0.040 | 2.968 | 0.003 | 0.159 | 609.404 *** |
| Male % of sample | 77 | 0.000 | −0.139 | 0.889 | 0.000 | 1269.825 *** |
| Sample Size | 82 | 0.000 | 0.677 | 0.498 | 0.003 | 1258.529 *** |
| Impulsivity | | | | | | |
| Age Mean | 70 | −0.013 | −4.303 | 0.000 | 0.287 | 438.220 *** |
| Age SD | 70 | 0.024 | 2.013 | 0.044 | 0.057 | 733.898 *** |
| Scores Mean | 51 | −0.011 | −1.575 | 0.115 | 0.062 | 478.451 *** |
| Scores SD | 49 | 0.060 | 4.068 | 0.000 | 0.325 | 254.423 *** |
| Male % of sample | 73 | 0.000 | 0.295 | 0.768 | 0.002 | 757.510 *** |
| Sample Size | 78 | 0.000 | 1.078 | 0.281 | 0.015 | 834.698 *** |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, M.-C.; Deng, J.; Zhang, X.; Liang, J.; Shou, Y. A Reliability Generalization Meta-Analysis of the Antisocial Process Screening Device. Behav. Sci. 2025, 15, 860. https://doi.org/10.3390/bs15070860
