From Gaze to Interaction: Links Between Visual Attention, Facial Expression Identification, and Behavior of Children Diagnosed with ASD or Typically Developing Children with an Assistance Dog

Abstract

1. Introduction

1.1. Humans’ Visual Attention and Its Importance in Social Interactions

1.2. Social Visual Attention in Autistic Spectrum Disorder (ASD)

1.3. ASD Children’s Visual Attention During Interspecific Interactions

2. General Materials and Methods

2.1. Ethics

2.2. Participants

2.3. Study Context

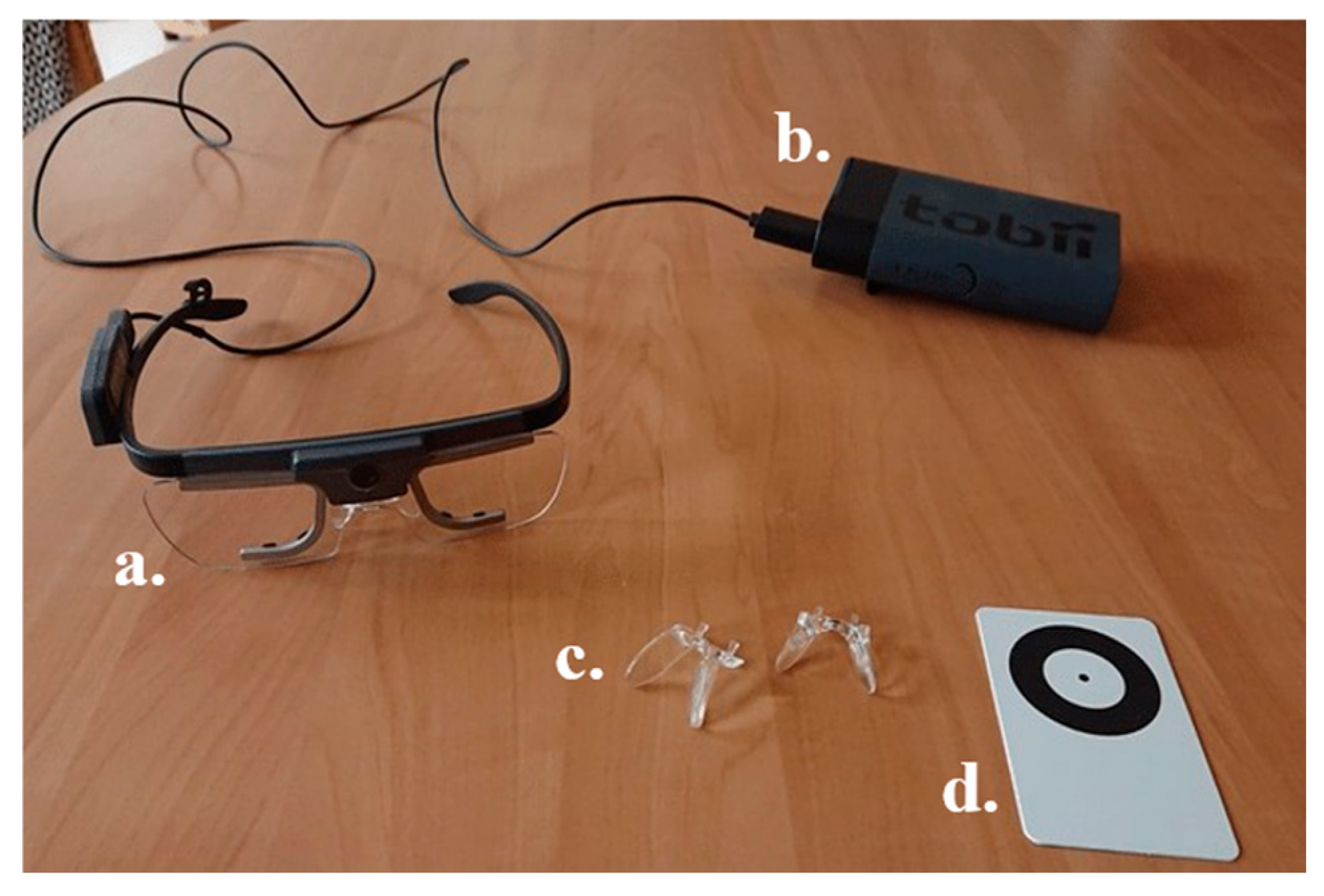

2.4. Methodology

2.5. Statistical Analyses

3. Study 1: Characterization of the Visual Exploration Patterns of ASD Children and TD Children

3.1. Methods

3.1.1. Participants

3.1.2. Procedure

3.1.3. Data Collection and Statistical Analyses

3.2. Results

3.2.1. Visual Exploration of ASD Children

3.2.2. Visual Exploration of TD Children

3.3. Comparisons Between ASD Children and TD Children

4. Study 2: Spontaneous Interactions Between Children and Assistance Dogs

4.1. Participants

4.2. Procedure

4.3. Data Collection and Statistical Analyses

4.4. Results

General Description for ASD Children and TD Children

5. Study 3: Facial Expression Identification Skills

5.1. Participants

5.2. Procedure

5.3. Visual Stimuli

5.4. Data Collection and Statistical Analyses

5.5. Results

5.5.1. Both Groups of Children

5.5.2. ASD Children

5.5.3. TD Children

6. Interactions Between the Results of Studies 1 and 2, and of Studies 1 and 3

6.1. Correlations Between Visual Attention and Spontaneous Interaction

6.2. Correlations Between Visual Attention and Facial Expression Identification Ability

7. Discussion

7.1. Convergences in Interaction with Assistance Dog

7.2. Convergences in the Identification Performance of Human and Canine Facial Expressions

7.3. Similarities and Differences in Face Exploration (Experimenter vs. Assistance Dog)

7.4. Do Facial Exploration Patterns Modulate Facial Expression Identification Performance?

7.5. Do Facial Exploration Patterns Modulate Spontaneous Interaction with an Assistance Dog?

7.6. Limits and Further Research

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| ASD | Autism Spectrum Disorder |
| TD | Typical Development |

Appendix A

Appendix B

| Variables | AOI | ASD Mean ± SD (%) | TD Mean ± SD (%) |
|---|---|---|---|
| Assistance dog presentation | Other | 17.32 ± 23.00 | 20.22 ± 9.12 |
| | Dog’s head | 58.32 ± 21.75 | 52.07 ± 18.13 |
| | Muzzle | 14.92 ± 10.89 | 15.43 ± 8.34 |
| | Dog’s eyes | 23.86 ± 22.44 | 25.33 ± 20.45 |
| | Experimenter’s head | 24.36 ± 24.81 | 27.71 ± 18.22 |
| | Dog’s ears | 2.75 ± 5.77 | 2.55 ± 3.06 |
| | Nose-mouth | 8.33 ± 11.01 | 12.35 ± 12.19 |
| | Experimenter’s eyes | 9.84 ± 10.89 | 8.24 ± 7.63 |
| Questions about assistance dog | Other | 18.01 ± 16.09 | 18.01 ± 13.29 |
| | Dog’s head | 70.80 ± 17.75 | 72.28 ± 13.24 |
| | Muzzle | 20.71 ± 17.50 | 15.90 ± 10.38 |
| | Dog’s eyes | 29.49 ± 28.94 | 22.07 ± 11.83 |
| | Experimenter’s head | 11.19 ± 8.62 | 9.71 ± 7.77 |
| | Dog’s ears | 2.71 ± 1.85 | 9.16 ± 8.04 |
| | Nose-mouth | 3.62 ± 5.92 | 3.72 ± 5.04 |
| | Experimenter’s eyes | 3.88 ± 5.32 | 3.17 ± 3.09 |
| Gaze fixation on the experimenter | Other | 14.00 ± 17.78 | 0.22 ± 0.69 |
| | Experimenter’s head | 86.00 ± 17.78 | 99.78 ± 0.69 |
| | Nose-mouth | 24.85 ± 30.65 | 34.62 ± 40.42 |
| | Experimenter’s eyes | 42.99 ± 39.60 | 47.77 ± 39.31 |
| Gaze fixation on the assistance dog | Other | 20.05 ± 24.81 | 2.28 ± 7.11 |
| | Dog’s head | 79.95 ± 24.81 | 97.72 ± 7.11 |
| | Muzzle | 12.14 ± 19.40 | 14.53 ± 25.85 |
| | Dog’s eyes | 50.43 ± 43.14 | 60.80 ± 28.28 |
| | Dog’s ears | 3.27 ± 3.91 | 4.77 ± 13.72 |

¹ A measurement time shorter than the total recording duration (20 s) was due to temporary loss of the eye-tracking signal caused by participants’ eye movements (e.g., looking too high or too low relative to the glasses’ sensors, resulting in data loss). This presentation phase generated more attentional shifts between the experimenter and the assistance dog, causing more signal interference for some individuals.

² The term “emotion” was chosen over “facial expression” because children in the age range included in this study, especially ASD children, understand it more readily.

References

- Altmann, J. (1974). Observational study of behavior: Sampling methods. Behaviour, 49(3–4), 227–266.

- American Psychiatric Association. (2013). Diagnostic and statistical manual of mental disorders (DSM-5®). American Psychiatric Pub.

- Amici, F., Waterman, J., Kellermann, C. M., Karimullah, K., & Bräuer, J. (2019). The ability to recognize dog emotions depends on the cultural milieu in which we grow up. Scientific Reports, 9(1), 16414.

- Black, M. H., Chen, N. T. M., Iyer, K. K., Lipp, O. V., Bölte, S., Falkmer, M., Tan, T., & Girdler, S. (2017). Mechanisms of facial emotion recognition in autism spectrum disorders: Insights from eye tracking and electroencephalography. Neuroscience & Biobehavioral Reviews, 80, 488–515.

- Bloom, T., & Friedman, H. (2013). Classifying dogs’ (Canis familiaris) facial expressions from photographs. Behavioural Processes, 96, 1–10.

- Borgi, M., Cogliati-Dezza, I., Brelsford, V., Meints, K., & Cirulli, F. (2014). Baby schema in human and animal faces induces cuteness perception and gaze allocation in children. Frontiers in Psychology, 5, 411.

- Bölte, S., & Poustka, F. (2003). The recognition of facial affect in autistic and schizophrenic subjects and their first-degree relatives. Psychological Medicine, 33(5), 907–915.

- Celani, G. (2002). Human beings, animals and inanimate objects: What do people with autism like? Autism, 6(1), 93–102.

- Chokron, S., Pieron, M., & Zalla, T. (2014). Troubles du spectre de l’autisme et troubles de la fonction visuelle: Revue critique, implications théoriques et cliniques. L’Information Psychiatrique, 90(10), 819–826.

- Correia-Caeiro, C., Lawrence, A., Abdelrahman, A., Guo, K., & Mills, D. (2022). How do children view and categorise human and dog facial expressions? Developmental Science, 26(3), e13332.

- Courchesne, E., Townsend, J., Akshoomoff, N. A., Saitoh, O., Yeung-Courchesne, R., Lincoln, A. J., James, H. E., Haas, R. H., Schreibman, L., & Lau, L. (1994). Impairment in shifting attention in autistic and cerebellar patients. Behavioral Neuroscience, 108(5), 848–865.

- Davis, T. N., Scalzo, R., Butler, E., Stauffer, M., Farah, Y. N., Perez, S., Mainor, K., Clark, C., Miller, S., Kobylecky, A., & Coviello, L. (2015). Animal assisted interventions for children with autism spectrum disorder: A systematic review. Education and Training in Autism and Developmental Disabilities, 50(3), 316–329.

- Dollion, N., & Grandgeorge, M. (2022). The pet in the daily life of typical and atypical developing children and their families. La Revue Internationale de l’Éducation Familiale, 50(1), 157–184.

- Dollion, N., Herbin, A., Champagne, N., Plusquellec, P., & Grandgeorge, M. (2022). Characterization of children with autism spectrum disorder’s interactions with a service dog during their first encounter. Anthrozoös, 35(6), 867–889.

- Dollion, N., Toutain, M., François, N., Champagne, N., Plusquellec, P., & Grandgeorge, M. (2021). Visual exploration and observation of real-life interactions between ASD children and service dogs. Journal of Autism and Developmental Disorders, 51(11), 3785–3805.

- Duarte-Gan, C., Martos-Montes, R., & Garcia-Linares, M. (2023). Visual processing of the faces of humans and dogs by children with autism spectrum disorder: An eye-tracking study. Anthrozoös, 36(4), 605–623.

- Dunn, W. (2010). Profil sensoriel: Manuel. ECPA, les Éditions du Centre de psychologie appliquée.

- Dyck, M. J., Ferguson, K., & Shochet, I. M. (2001). Do autism spectrum disorders differ from each other and from non-spectrum disorders on emotion recognition tests? European Child & Adolescent Psychiatry, 10(2), 105–116.

- Emery, N. J. (2000). The eyes have it: The neuroethology, function and evolution of social gaze. Neuroscience & Biobehavioral Reviews, 24(6), 581–604.

- Erbacher, J. C. E., & Bördlein, C. (2025). Dog-assisted interventions for children and adolescents with autism spectrum disorders. Research on Social Work Practice, 10497315241307636.

- Eretová, P., Chaloupková, H., Hefferová, M., & Jozífková, E. (2020). Can children of different ages recognize dog communication signals in different situations? International Journal of Environmental Research and Public Health, 17(2), 506.

- Filiâtre, J. C., Millot, J. L., & Montagner, H. (1986). New data on communication behaviour between the young child and his pet dog. Behavioural Processes, 12(1), 33–44.

- Gerhardstein, P., & Rovee-Collier, C. (2002). The development of visual search in infants and very young children. Journal of Experimental Child Psychology, 81(2), 194–215.

- Gillet, P., & Barthélémy, C. (2011). Développement de l’attention chez le petit enfant: Implications pour les troubles autistiques. Développements, 9(3), 17–25.

- Girard, J. M., Shandar, G., Liu, Z., Cohn, J. F., Yin, L., & Morency, L.-P. (2019, September 3–6). Reconsidering the Duchenne smile: Indicator of positive emotion or artifact of smile intensity? 2019 8th International Conference on Affective Computing and Intelligent Interaction (ACII) (pp. 594–599), Cambridge, UK.

- Grandgeorge, M., Bourreau, Y., Alavi, Z., Lemonnier, E., Tordjman, S., Deleau, M., & Hausberger, M. (2015). Interest towards human, animal and object in children with autism spectrum disorders: An ethological approach at home. European Child & Adolescent Psychiatry, 24(1), 83–93.

- Grandgeorge, M., Degrez, C., Alavi, Z., & Lemonnier, E. (2016). Face processing of animal and human static stimuli by children with autism spectrum disorder: A pilot study. Human-Animal Interaction Bulletin, 4(1), 39–53.

- Grandgeorge, M., Deleau, M., Lemonnier, E., Tordjman, S., & Hausberger, M. (2012a). Children with autism encounter an unfamiliar pet: Application of the Strange Animal Situation test. Interaction Studies, 13(2), 165–188.

- Grandgeorge, M., Gautier, Y., Bourreau, Y., Mossu, H., & Hausberger, M. (2020). Visual attention patterns differ in dog vs. cat interactions with children with typical development or autism spectrum disorders. Frontiers in Psychology, 11, 2047.

- Grandgeorge, M., Tordjman, S., Lazartigues, A., Lemonnier, E., Deleau, M., & Hausberger, M. (2012b). Does pet arrival trigger prosocial behaviors in individuals with autism? PLoS ONE, 7(8), e41739.

- Harms, M. B., Martin, A., & Wallace, G. L. (2010). Facial emotion recognition in autism spectrum disorders: A review of behavioral and neuroimaging studies. Neuropsychology Review, 20(3), 290–322.

- Hawkins, R. D., Hatin, B. D. M., & Revesz, E. O. (2021). Accuracy of canine vs. human emotion identification: Impact of dog ownership and belief in animal mind. Human-Animal Interaction Bulletin, 12(2), 1–18.

- Hudry, K., & Slaughter, V. (2009). Agent familiarity and emotional context influence the everyday empathic responding of young children with autism. Research in Autism Spectrum Disorders, 3(1), 74–85.

- Ingersoll, B. (2008). The effect of context on imitation skills in children with autism. Research in Autism Spectrum Disorders, 2(2), 332–340.

- Johnstone, S. J., Dimoska, A., Smith, J. L., Barry, R. J., Pleffer, C. B., Chiswick, D., & Clarke, A. R. (2007). The development of stop-signal and Go/Nogo response inhibition in children aged 7–12 years: Performance and event-related potential indices. International Journal of Psychophysiology, 63(1), 25–38.

- Jones, W., & Klin, A. (2013). Attention to eyes is present but in decline in 2–6-month-old infants later diagnosed with autism. Nature, 504(7480), 427–431.

- Kaminski, J., Waller, B. M., Diogo, R., Hartstone-Rose, A., & Burrows, A. M. (2019). Evolution of facial muscle anatomy in dogs. Proceedings of the National Academy of Sciences, 116(29), 14677–14681.

- Klin, A., Jones, W., Schultz, R., Volkmar, F., & Cohen, D. (2002). Visual fixation patterns during viewing of naturalistic social situations as predictors of social competence in individuals with autism. Archives of General Psychiatry, 59(9), 809–816.

- Kruck, J., Baduel, S., & Rogé, B. (2013). Questionnaire de communication sociale pour le dépistage des troubles du spectre autistique. Adaptation française. Hogrefe. Available online: https://hal.science/hal-04138726 (accessed on 20 January 2025).

- Lakestani, N. N., Donaldson, M. L., & Waran, N. (2014). Interpretation of dog behavior by children and young adults. Anthrozoös, 27(1), 65–80.

- Langton, S. R. H., Law, A. S., Burton, A. M., & Schweinberger, S. R. (2008). Attention capture by faces. Cognition, 107(1), 330–342.

- Loomes, R., Hull, L., & Mandy, W. P. L. (2017). What is the male-to-female ratio in autism spectrum disorder? A systematic review and meta-analysis. Journal of the American Academy of Child & Adolescent Psychiatry, 56(6), 466–474.

- McNicholas, J., & Collis, G. M. (2000). Dogs as catalysts for social interactions: Robustness of the effect. British Journal of Psychology, 91(1), 61–70.

- Mertens, C., & Turner, D. C. (1988). Experimental analysis of human-cat interactions during first encounters. Anthrozoös, 2(2), 83–97.

- Millot, J.-L., & Filiâtre, J.-C. (1986). The behavioural sequences in the communication system between the child and his pet dog. Applied Animal Behaviour Science, 16(4), 383–390.

- Montagner, H. (2002). L’enfant et l’animal: Les émotions qui libèrent l’intelligence. Odile Jacob. Available online: https://books.google.com/books?hl=fr&lr=&id=WQM2AAAAQBAJ&oi=fnd&pg=PT6&dq=L%27Enfant+et+l%27Animal+:+Les+%C3%A9motions+qui+lib%C3%A8rent+l%27intelligence+&ots=8NcGI0R-_0&sig=NraT_fAAxXtOADwGlyHUGDXt4x4 (accessed on 20 January 2025).

- Morrison, R. L., & Bellack, A. S. (1981). The role of social perception in social skill. Behavior Therapy, 12(1), 69–79.

- Muszkat, M., de Mello, C., Munoz, P., Lucci, T., David, V., Siqueira, J., & Otta, E. (2015). Face scanning in autism spectrum disorder and attention deficit/hyperactivity disorder: Human versus dog face scanning. Frontiers in Psychiatry, 6, 150.

- Nakano, T., Tanaka, K., Endo, Y., Yamane, Y., Yamamoto, T., Nakano, Y., Ohta, H., Kato, N., & Kitazawa, S. (2010). Atypical gaze patterns in children and adults with autism spectrum disorders dissociated from developmental changes in gaze behaviour. Proceedings of the Royal Society B: Biological Sciences, 277(1696), 2935–2943.

- Nelson, C. A. (2001). The development and neural bases of face recognition. Infant and Child Development, 10(1–2), 3–18.

- Norris, M., & Lecavalier, L. (2010). Screening accuracy of level 2 autism spectrum disorder rating scales: A review of selected instruments. Autism, 14(4), 263–284.

- O’Haire, M., McKenzie, S., Beck, A., & Slaughter, V. (2013). Social behaviors increase in children with autism in the presence of animals compared to toys. PLoS ONE, 8(2).

- Pascalis, O., de Haan, M., & Nelson, C. A. (2002). Is face processing species-specific during the first year of life? Science, 296(5571), 1321–1323.

- Posner, M. I. (1980). Orienting of attention. Quarterly Journal of Experimental Psychology, 32(1), 3–25.

- Posner, M. I., & Rothbart, M. K. (2007). Research on attention networks as a model for the integration of psychological science. Annual Review of Psychology, 58, 1–23.

- Prothmann, A., Ettrich, C., & Prothmann, S. (2009). Preference for, and responsiveness to, people, dogs and objects in children with autism. Anthrozoös, 22(2), 161–171.

- Riby, D. M., & Hancock, P. J. B. (2008). Viewing it differently: Social scene perception in Williams syndrome and autism. Neuropsychologia, 46(11), 2855–2860.

- Rutter, M., Bailey, A., & Lord, C. (2003). SCQ. The social communication questionnaire (p. 5). Western Psychological Services.

- Siniscalchi, M., D’Ingeo, S., Minunno, M., & Quaranta, A. (2018). Communication in dogs. Animals, 8(8), 131.

- Spezio, M. L., Adolphs, R., Hurley, R. S. E., & Piven, J. (2007). Analysis of face gaze in autism using “Bubbles”. Neuropsychologia, 45(1), 144–151.

- Thorsson, M., Galazka, M. A., Åsberg Johnels, J., & Hadjikhani, N. (2024). Influence of autistic traits and communication role on eye contact behavior during face-to-face interaction. Scientific Reports, 14(1), 8162.

- Toutain, M., Dollion, N., Henry, L., & Grandgeorge, M. (2024). How do children and adolescents with ASD look at animals? A scoping review. Children, 11(2), 211.

- Toutain, M., Dollion, N., Henry, L., & Grandgeorge, M. (2025). Does a dog at school help identify human and animal facial expressions? A preliminary longitudinal study. European Journal of Investigation in Health, Psychology and Education, 15(2), 13.

- Vacas, J., Antolí, A., Sánchez-Raya, A., Pérez-Dueñas, C., & Cuadrado, F. (2021). Visual preference for social vs. non-social images in young children with autism spectrum disorders. An eye tracking study. PLoS ONE, 16(6), e0252795.

- Valiyamattam, G. J., Katti, H., Chaganti, V. K., O’Haire, M. E., & Sachdeva, V. (2020). Do animals engage greater social attention in autism? An eye tracking analysis. Frontiers in Psychology, 11, 727.

- Valtakari, N. V., Hooge, I. T. C., Viktorsson, C., Nyström, P., Falck-Ytter, T., & Hessels, R. S. (2021). Eye tracking in human interaction: Possibilities and limitations. Behavior Research Methods, 53(4), 1592–1608.

- Witwer, A. N., & Lecavalier, L. (2007). Autism screening tools: An evaluation of the social communication questionnaire and the developmental behaviour checklist–Autism screening algorithm. Journal of Intellectual & Developmental Disability, 32(3), 179–187.

| Participant No. | Group | Sex | Age (years) | SCQ Score | Dunn Score | Presence of an Animal at Home * | Visual Particularities | Studies in Which the Participant Took Part |
|---|---|---|---|---|---|---|---|---|
| 1 | ASD | M | 11 | 29 | 127 | YES | NO | 1, 2, 3 |
| 2 | ASD | M | 11 | 21 | 114 | YES | NA | 1, 2, 3 |
| 3 | ASD | M | 13 | 23 | 111 | YES | NO | 1, 2, 3 |
| 4 | ASD | M | 9 | 19 | 123 | YES | NA | 1, 2, 3 |
| 5 | ASD | M | 8 | 28 | 120 | YES | NO | 2, 3 |
| 6 | ASD | M | 16 | 22 | NA | YES | Myopia | 1, 2, 3 |
| 7 | ASD | M | 6 | 31 | 89 | YES | Hyperopia | 1, 2, 3 |
| 8 | TD | M | 8 | 0 | 180 | YES | NO | 1, 2, 3 |
| 9 | TD | F | 11 | 1 | 179 | YES | Myopia | 1, 2, 3 |
| 10 | TD | F | 13 | 4 | 190 | YES | NO | 1, 2, 3 |
| 11 | TD | F | 6 | 8 | 117 | YES | Strabismus | 2, 3 |
| 12 | TD | F | 11 | 0 | 178 | YES | NA | 1, 2, 3 |
| 13 | TD | M | 9 | 3 | 156 | NO | NO | 1, 2, 3 |
| 14 | TD | F | 9 | 1 | 162 | YES | NA | 1, 2, 3 |
| 15 | TD | F | 8 | 1 | 155 | YES | Hyperopia | 2, 3 |
| 16 | TD | F | 12 | 1 | 162 | YES | NO | 1, 2, 3 |
| 17 | TD | F | 9 | 1 | 158 | YES | NO | 1, 2, 3 |
| 18 | TD | F | 7 | 5 | 169 | YES | NO | 1, 2, 3 |
| 19 | TD | F | 11 | 0 | 182 | YES | NO | 1, 2, 3 |
| 20 | TD | F | 7 | 0 | 141 | YES | NA | 1, 2, 3 |

| AOI | Experimenter | Assistance Dog |
|---|---|---|
| Head | Circle around the head with a maximum tolerance margin of half a hand | Circle around the head with a maximum tolerance margin of a quarter of a paw |
| Eyes | Oval including both eyes when the head is facing forward, limited to the base of the nostrils | Oval including both eyes when the head is facing forward; only one eye when the head is in profile and the second eye is no longer visible |
| Nose-mouth / Muzzle | Nose-mouth: circle including the base of the nostrils, the mouth, and the base of the chin | Muzzle: circle including the entire muzzle |
| Ears | Not defined, owing to technical difficulty, their small size, and limited visibility | Custom shape including the ear up to its lower insertion |
| Other | The entire scene except the Head, Eyes, and Nose-mouth AOIs | The entire scene except the Head, Eyes, Muzzle, and Ears AOIs |
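The AOI definitions above combine circles (heads) and ovals (eyes), with smaller AOIs nested inside the head AOIs. As a minimal sketch, assuming gaze samples have already been mapped to scene-camera pixel coordinates and every AOI is approximated by an ellipse (the custom ear shape is not modelled), the percentage of gaze samples falling in each AOI could be computed as follows. The names `Ellipse` and `aoi_percentages` and all coordinates are hypothetical; this is not the authors' analysis pipeline, and samples lost to signal dropout (recorded as `None`) are simply excluded from the denominator.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Ellipse:
    """Hypothetical elliptical AOI in scene-camera pixels (a circle when rx == ry)."""
    name: str
    cx: float
    cy: float
    rx: float
    ry: float

    def contains(self, x: float, y: float) -> bool:
        # Point-in-ellipse test: ((x - cx) / rx)^2 + ((y - cy) / ry)^2 <= 1
        return ((x - self.cx) / self.rx) ** 2 + ((y - self.cy) / self.ry) ** 2 <= 1.0


def aoi_percentages(
    samples: Sequence[Optional[tuple[float, float]]],
    aois: Sequence[Ellipse],
) -> dict[str, float]:
    """Percentage of valid gaze samples falling in each AOI, plus 'Other'.

    Samples recorded as None (eye-tracking signal loss) are excluded from the
    denominator. AOIs are tested independently, so a sample on a nested AOI
    (e.g. eyes inside the head) counts toward both. 'Other' collects samples
    that fall in none of the AOIs.
    """
    valid = [s for s in samples if s is not None]
    if not valid:
        return {}
    counts = {aoi.name: 0 for aoi in aois}
    other = 0
    for x, y in valid:
        hit = False
        for aoi in aois:
            if aoi.contains(x, y):
                counts[aoi.name] += 1
                hit = True
        if not hit:
            other += 1
    result = {name: 100.0 * n / len(valid) for name, n in counts.items()}
    result["Other"] = 100.0 * other / len(valid)
    return result


if __name__ == "__main__":
    # Hypothetical coordinates for illustration only (not study data).
    head = Ellipse("Head", cx=100, cy=100, rx=50, ry=50)  # circle
    eyes = Ellipse("Eyes", cx=100, cy=90, rx=30, ry=10)   # oval nested in the head
    samples = [(100, 90), (100, 130), (300, 300), None]   # None = signal loss
    print(aoi_percentages(samples, [head, eyes]))
```

With these values, three samples are valid, giving Head ≈ 66.7%, Eyes ≈ 33.3%, and Other ≈ 33.3%. Because the eye oval lies inside the head circle, Head and Other together make up 100% while Eyes is a subset of Head; the gaze-percentage table by AOI shows the same structure, with the head AOIs and Other summing to 100% in each phase.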

| Behavioural Category | Definition | Subtypes and Behaviours |
|---|---|---|
| Distance between child and assistance dog | Distance between the assistance dog and the part of the child’s body closest to it. | Direct contact, <1 arm, 1 to 2 arms, >2 arms, further away. |
| Distance between child and parent | Distance between the parent and the part of the child’s body closest to the parent. | Direct contact, <1 arm, 1 to 2 arms, >2 arms, further away. |
| Play behaviours | The child spontaneously engages with the assistance dog in a playful activity. | Types of games: toy veterinary kit, rope, intelligence toy, chase, other (e.g., holding on to the lead, lying down and stroking the dog while laughing, throwing another object at the assistance dog). |
| Gaze orientation of child | Overall orientation of the child’s head and gaze. | Targets: assistance dog, parent, assistance dog-related object, self-centred, environment, undetermined. |
| Distance modulation with the assistance dog | The child moves towards or away from the dog, shifting both feet. | Types: approaching the assistance dog, backing away from the assistance dog. |
| Child’s contact with assistance dog | Part of the child’s body is in physical contact with the assistance dog’s body. | Type of contact: active (voluntary, involves movement) or passive (involuntary, does not involve movement). |
| Care behaviour by child | The child cares for the assistance dog or meets its needs. | The child brushes the assistance dog or gives it water or a piece of kibble. |
| Vocalisation of child directed to assistance dog | The child vocalises towards the assistance dog. | Type of verbalisation: encourages interaction, neutral, stops interaction. |
| Vocalisation of child directed to parent | The child vocalises towards the parent. | Nature of comments: about the assistance dog, other. |
| Other types of vocalisation | The child produces non-verbal vocal cues when interacting with the assistance dog. | Types: onomatopoeia (sounds, cries, noises to call the dog), laughter, smiles. |
| Joint attention | The child shares a moment of visual attention with the dog after following its gaze. | NA |
| Self-centred behaviour | The child’s behaviour or action is self-centred. | NA |

| Distance category | Assistance dog: ASD Mean ± SD (%) | Assistance dog: TD Mean ± SD (%) | Parent: ASD Mean ± SD (%) | Parent: TD Mean ± SD (%) |
|---|---|---|---|---|
| Direct contact | 32.78 ± 21.00 | 34.46 ± 23.56 | 0.45 ± 0.89 | 0.25 ± 0.51 |
| <1 arm | 37.93 ± 8.71 | 44.80 ± 16.93 | 4.55 ± 9.43 | 0.68 ± 1.37 |
| 1 to 2 arms | 11.88 ± 10.12 | 12.15 ± 7.51 | 2.28 ± 2.40 | 9.43 ± 11.61 |
| >2 arms | 17.17 ± 14.07 | 8.59 ± 5.48 | 92.72 ± 11.25 | 89.64 ± 11.92 |
| Statistics | χ² = 19.8; p < 0.001 | χ² = 42.9; p < 0.001 | χ² = 22.8; p < 0.001 | χ² = 43.8; p < 0.001 |
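Percentages of this kind are commonly obtained as time budgets from continuous focal sampling (Altmann, 1974): each mutually exclusive state of a behavioural category is coded with start and end times, and its total duration is expressed as a share of the observation time. The sketch below assumes exactly that (one child, one category, a known session duration) and then summarizes one state across children as mean ± sample SD. The function names `time_budget` and `summarize`, the interval format, and the example durations are hypothetical; the section does not describe the authors' exact computation.

```python
from collections import defaultdict
from statistics import mean, stdev


def time_budget(intervals, session_duration):
    """Percentage of session time spent in each state of ONE behavioural category.

    intervals: iterable of (start_s, end_s, state) tuples whose states are
    mutually exclusive (e.g. child-dog distance classes). Time not covered by
    any interval is reported as 'Uncoded'.
    """
    if session_duration <= 0:
        raise ValueError("session_duration must be positive")
    totals = defaultdict(float)
    for start, end, state in intervals:
        if end < start:
            raise ValueError(f"interval ends before it starts: {(start, end, state)}")
        totals[state] += end - start
    coded = sum(totals.values())
    # Cheap sanity check: catches gross overlaps, not every overlapping pair.
    if coded > session_duration + 1e-9:
        raise ValueError("coded time exceeds the session duration")
    budget = {state: 100.0 * t / session_duration for state, t in totals.items()}
    budget["Uncoded"] = 100.0 * (session_duration - coded) / session_duration
    return budget


def summarize(budgets, state):
    """Mean and sample SD of one state's percentage across children (needs >= 2)."""
    values = [b.get(state, 0.0) for b in budgets]
    return mean(values), stdev(values)


if __name__ == "__main__":
    # Hypothetical 120 s session coded for child-dog distance (not study data).
    coded = [
        (0, 30, "Direct contact"),
        (30, 75, "<1 arm"),
        (75, 90, ">2 arms"),
        (100, 120, "Direct contact"),
    ]
    for state, pct in time_budget(coded, session_duration=120).items():
        print(f"{state}: {pct:.1f}%")
```

For this hypothetical session the budget is Direct contact 41.7%, <1 arm 37.5%, >2 arms 12.5%, and Uncoded 8.3%. Whether the reported SDs use the sample (n - 1) or population formula is not stated; `statistics.stdev` is the sample version.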

| Play type | ASD Mean ± SD (%) | TD Mean ± SD (%) |
|---|---|---|
| Other play | 5.41 ± 8.22 | 7.77 ± 11.38 |
| Toy veterinary kit | 12.83 ± 14.69 | 4.95 ± 5.91 |
| Rope play | 19.94 ± 22.04 | 13.21 ± 9.98 |
| Running with the dog | 2.44 ± 6.46 | 0.62 ± 1.53 |
| Intelligence toy | 4.22 ± 5.75 | 5.98 ± 4.57 |
| Not playing | 55.15 ± 24.99 | 67.46 ± 14.49 |
| Statistics | χ² = 17.7; df = 5; p = 0.003 | χ² = 37; df = 5; p < 0.001 |

| Gaze target | ASD Mean ± SD (%) | TD Mean ± SD (%) |
|---|---|---|
| Assistance dog | 51.13 ± 16.94 | 66.59 ± 10.15 |
| Assistance dog’s objects | 22.30 ± 10.18 | 16.42 ± 8.25 |
| Environment | 13.29 ± 8.59 | 8.99 ± 4.21 |
| Parent | 6.20 ± 6.74 | 5.20 ± 2.65 |
| Self-centered | 1.99 ± 1.84 | 0.85 ± 0.60 |
| Indeterminate | 5.09 ± 5.40 | 1.95 ± 2.44 |
| Statistics | χ² = 26; df = 5; p = 0.003 | χ² = 54.7; df = 5; p < 0.001 |

| Contact with the assistance dog | ASD | TD |
|---|---|---|
| | Mean ± SD duration (s) | Mean ± SD duration (s) |
| Active contact (duration) | 57.95 ± 49.12 | 137.98 ± 116.61 |
| Passive contact (duration) | 109.21 ± 82.60 | 52.78 ± 41.34 |
| Statistics | W = 5; p = 0.30 | W = 82; p = 0.008 |
| | Mean ± SD (%) | Mean ± SD (%) |
| Active contact (occurrences) | 11.15 ± | 16.04 ± 6.44 |
| Passive contact (occurrences) | 15.97 ± | 9.24 ± 4.76 |
| Statistics | W = 7; p = 0.3 | W = 85; p = 0.007 |
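The within-group comparisons of active and passive contact are reported as Wilcoxon statistics (W). As a minimal sketch of a paired signed-rank test, assuming one active and one passive value per child, the code below drops zero differences, ranks the absolute differences (ties share their mean rank), sums the ranks of positive and negative differences, and derives an exact two-sided p-value by enumerating all 2^n sign assignments of the observed ranks. The function names and example values are hypothetical. Packages report different conventions for W (for example the sum of positive ranks, or the smaller of the two sums), and this section does not state which convention underlies the reported values, so the sketch is not expected to reproduce the table.

```python
from itertools import product


def average_ranks(values):
    """1-based ranks of values; tied values share the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # sorted positions i..j hold ranks i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def wilcoxon_signed_rank(x, y):
    """Paired Wilcoxon signed-rank test of x against y.

    Zero differences are discarded (Wilcoxon's original convention).
    Returns (w_plus, w_minus, exact two-sided p). The p-value enumerates all
    2**n sign assignments, so keep n small (roughly n <= 20).
    """
    if len(x) != len(y):
        raise ValueError("x and y must be paired (same length)")
    diffs = [a - b for a, b in zip(x, y) if a != b]
    n = len(diffs)
    if n == 0:
        raise ValueError("all differences are zero")
    ranks = average_ranks([abs(d) for d in diffs])
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    centre = sum(ranks) / 2  # expected W+ under H0
    observed_dev = abs(w_plus - centre)
    extreme = 0
    for signs in product((0, 1), repeat=n):
        w = sum(r for r, s in zip(ranks, signs) if s)
        if abs(w - centre) >= observed_dev - 1e-9:
            extreme += 1
    return w_plus, w_minus, extreme / 2 ** n


if __name__ == "__main__":
    # Hypothetical paired values for five children (not study data).
    active = [10, 12, 15, 20, 25]
    passive = [8, 13, 10, 14, 18]
    print(wilcoxon_signed_rank(active, passive))
```

For these five hypothetical pairs the sketch gives W+ = 14, W- = 1, and an exact two-sided p = 0.125; with only five pairs, no two-sided result can fall below p = 0.0625, which illustrates why small groups rarely reach significance.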

| Vocalisation | ASD Mean ± SD (%) | TD Mean ± SD (%) |
|---|---|---|
| Encourage interaction with the assistance dog | 14.89 ± 9.94 | 21.98 ± 11.22 |
| Stop interaction with the assistance dog | 1.37 ± 3.20 | 0.07 ± 0.26 |
| Neutral verbalization towards the assistance dog | 18.78 ± 17.14 | 16.82 ± 7.51 |
| Statistics (Friedman test) | χ² = 9.55; p = 0.008 | χ² = 19.86; p < 0.001 |
| Talks about the assistance dog with the parent | 7.21 ± 10.69 | 9.40 ± 5.71 |
| Talks about something other than the assistance dog with the parent | 6.36 ± 7.72 | 5.28 ± 3.74 |
| Statistics (Wilcoxon test) | W = 11; p = 0.690 | W = 68; p = 0.025 |
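The three kinds of vocalisation directed to the assistance dog are compared within each group with a Friedman test. A minimal sketch, assuming one row per child holding k repeated measures, ranks the values within each row (ties averaged), sums the ranks per condition, and computes Q = 12 / (n k (k + 1)) × Σ R_j² - 3 n (k + 1), without the tie correction that statistical packages usually apply. The p-value uses the chi-square approximation with k - 1 degrees of freedom, implemented here only for even df, where the upper tail has a closed form; with k = 3 (df = 2) it reduces to exp(-Q/2). As an arithmetic check, exp(-9.55/2) ≈ 0.0084, consistent with the p = 0.008 reported for the ASD group. The function names and example data are hypothetical.

```python
import math


def within_row_ranks(row):
    """1-based ranks within one participant's row; ties share the mean rank."""
    order = sorted(range(len(row)), key=lambda i: row[i])
    ranks = [0.0] * len(row)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and row[order[j + 1]] == row[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def friedman_statistic(data):
    """Friedman chi-square statistic Q (no tie correction) and per-condition rank sums.

    data: one row per participant, each with k repeated measures.
    """
    if not data:
        raise ValueError("need at least one row")
    n, k = len(data), len(data[0])
    if k < 2 or any(len(row) != k for row in data):
        raise ValueError("rows must all have the same length k >= 2")
    rank_sums = [0.0] * k
    for row in data:
        for j, r in enumerate(within_row_ranks(row)):
            rank_sums[j] += r
    q = 12.0 / (n * k * (k + 1)) * sum(r * r for r in rank_sums) - 3.0 * n * (k + 1)
    return q, rank_sums


def chi2_sf_even_df(x, df):
    """Upper-tail chi-square probability for even df only.

    Closed form: exp(-x/2) * sum_{i=0}^{df/2 - 1} (x/2)**i / i!
    """
    if df <= 0 or df % 2:
        raise ValueError("this sketch only handles positive even df")
    half = x / 2.0
    term = total = 1.0
    for i in range(1, df // 2):
        term *= half / i
        total += term
    return math.exp(-half) * total


if __name__ == "__main__":
    # Hypothetical % of time for (encourage, stop, neutral) in four children.
    data = [[10, 1, 20], [15, 0, 12], [22, 2, 18], [9, 0, 30]]
    q, sums = friedman_statistic(data)
    df = len(data[0]) - 1
    print(f"Q = {q:.2f}, rank sums = {sums}, p = {chi2_sf_even_df(q, df):.4f}")
```

For these four hypothetical children the rank sums are 10, 4, and 10, giving Q = 6.00 and p ≈ 0.050. With groups this small, the chi-square approximation is rough; exact Friedman tables or permutation methods are often preferred.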

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Toutain, M.; Paris, S.; Lefranc, S.; Henry, L.; Grandgeorge, M. From Gaze to Interaction: Links Between Visual Attention, Facial Expression Identification, and Behavior of Children Diagnosed with ASD or Typically Developing Children with an Assistance Dog. Behav. Sci. 2025, 15, 674. https://doi.org/10.3390/bs15050674
