Attention Dynamics in Spatial–Temporal Contexts

Abstract

1. Introduction

2. Experiment 1

2.1. Method

2.1.1. Participants

2.1.2. Apparatus and Stimuli

2.1.3. Design and Procedure

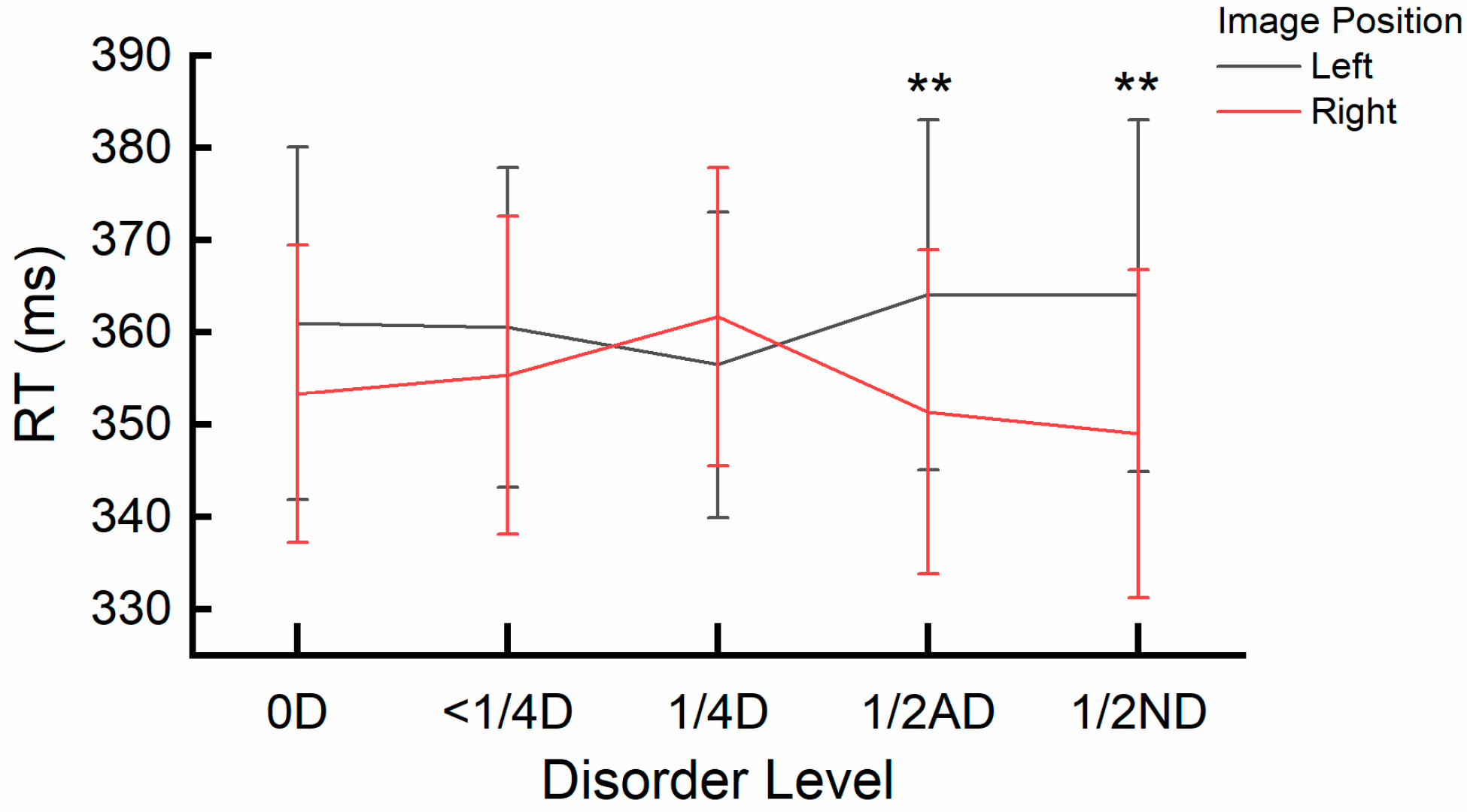

2.2. Results

2.3. Discussion

3. Experiment 2

3.1. Method

3.1.1. Participants

3.1.2. Apparatus and Stimuli

3.1.3. Design and Procedure

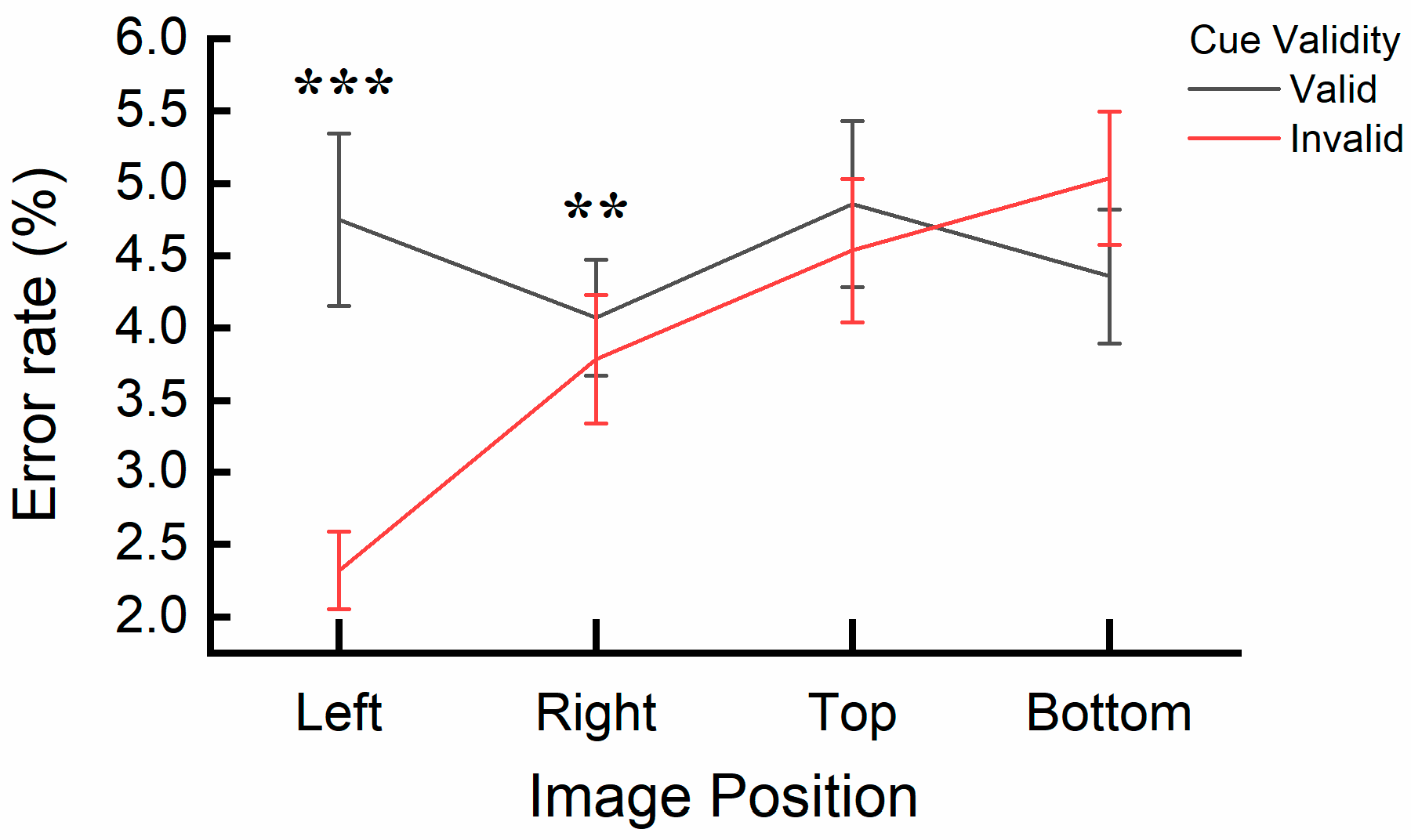

3.2. Results

3.3. Discussion

4. Experiment 3

4.1. Method

4.1.1. Participants

4.1.2. Apparatus, Design, Stimuli and Procedure

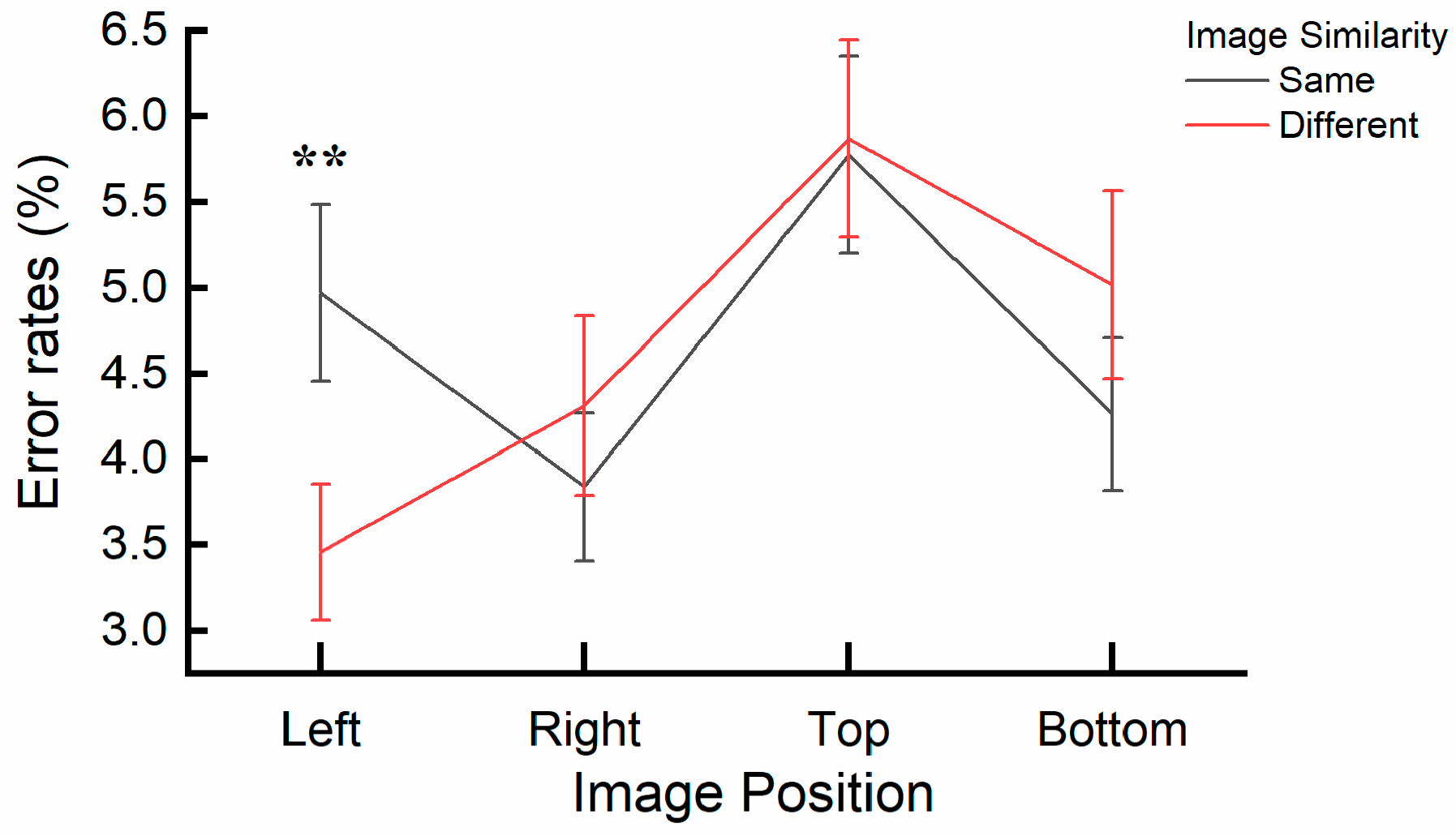

4.2. Results

4.3. Discussion

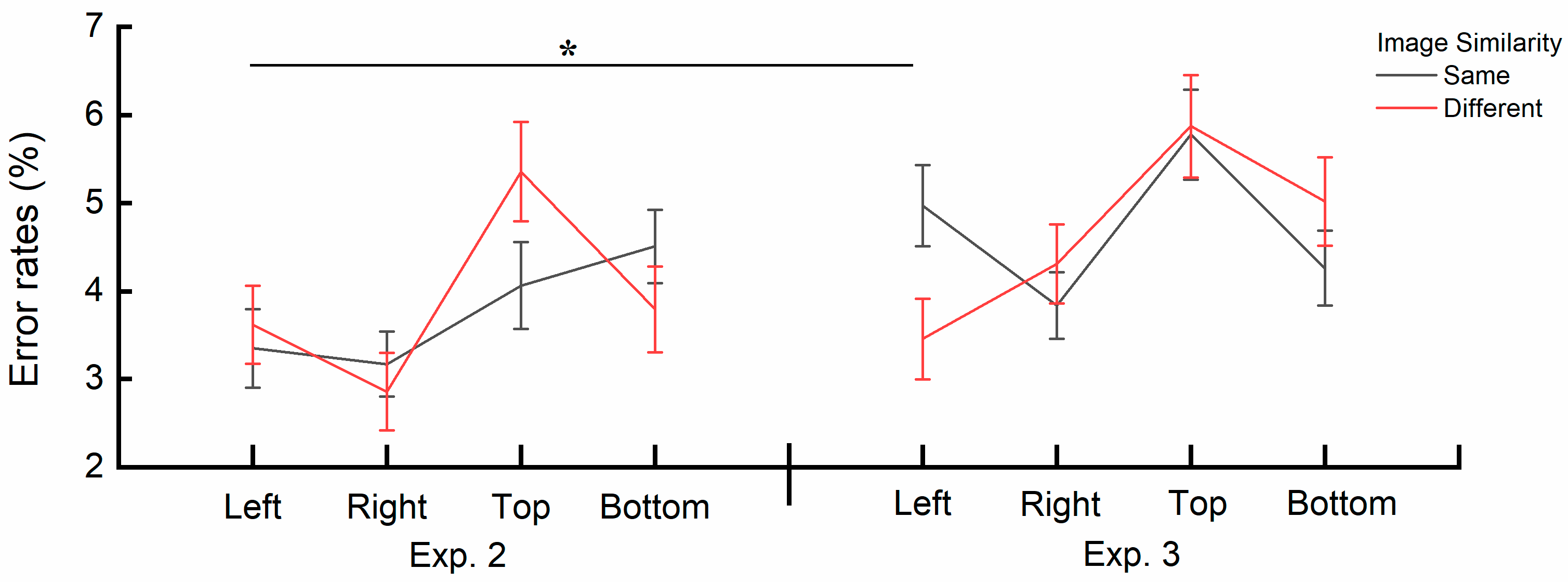

4.4. Combined Results of Experiment 2 and Experiment 3

4.5. Discussion

5. General Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, Y.; Zhang, X.; Van den Bussche, E. Attention Dynamics in Spatial–Temporal Contexts. Behav. Sci. 2025, 15, 599. https://doi.org/10.3390/bs15050599