Biased and Biasing: The Hidden Bias Cascade and Bias Snowball Effects

Abstract

1. The Hidden Bias Cascade and Bias Snowball Effects

Explicit and Intentional Bias

- It is widespread. Explicit intentional bias exists, but it is far less common than cognitive bias. The former is exhibited only by some ‘bad apples’ who are deliberately biased; the latter is a ubiquitous phenomenon that affects everyone because of the top-down nature of human cognition and other aspects of cognitive architecture (Nickerson, 1998).

- It is harder to detect. Explicit intentional bias is much easier to detect than implicit cognitive bias. Because cognitive bias is implicit, it is less apparent, and it is often outside the conscious awareness of the very person exhibiting it. Indeed, detecting implicit cognitive bias requires indirect measures (Greenwald & Banaji, 1995).

- Minimizing and countering cognitive bias is not easy or straightforward. The ‘bad apples’ who exhibit explicit intentional bias are relatively easy to deal with. However, cognitive bias poses a bigger challenge (e.g., Hetey & Eberhardt, 2018).

2. What Is Cognitive Bias?

2.1. Common Misconceptions and Fallacies About Cognitive Bias

2.2. Cognitive Biases in the Justice and Legal Systems and Their Sources

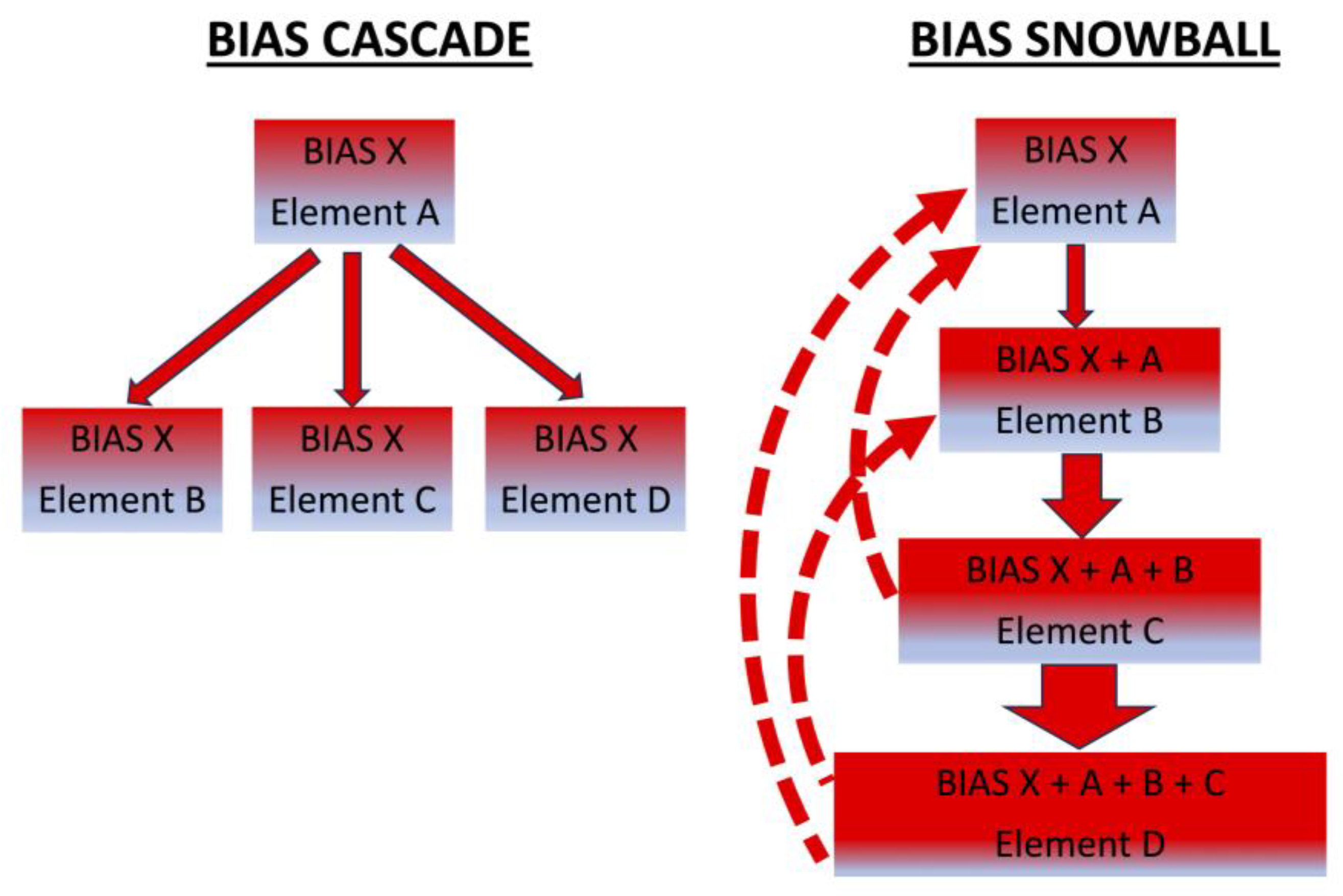

3. Bias Cascade and Bias Snowball Effects

4. Public Policy to Minimize Bias in the Justice and Legal Systems

4.1. The Challenge

- The bias blind spot (Neal & Brodsky, 2016; Pronin et al., 2002, 2004) and the implicit nature of cognitive bias make it hard for people to acknowledge its existence, let alone to be transparent about it (which is the next best thing: if you cannot remove the source of the bias and its possible impact, at least be transparent about it).

- Not only is the bias implicit but, specifically in the justice and legal systems, errors are not apparent. In other domains, errors are visible: aircraft crash, patients die, or stocks lose value. In the justice and legal systems, by contrast, the ground truth is not known, and we have no idea how many innocent people are wrongfully convicted. When a wrongful conviction does occur, it is not as apparent as errors in other domains.

- The adversarial legal system makes it hard, almost impossible, to uncover and acknowledge biases. Those who might acknowledge a bias have a (justifiable) fear that the acknowledgment will be used against them in court. Furthermore, to avoid exposing existing biases in court, the prosecution offers attractive plea bargains (or even drops all charges) when it realizes that the defense is going to publicly reveal the bias against its client, especially when the bias is widespread and systemic across the justice and legal systems. The fear of having bias exposed (along with errors and other issues) also makes forensic science crime laboratories reluctant to conduct research, validation studies, and proper quality assurance measurements, and they sometimes halt such work midway when the data reveal biases and other problems.

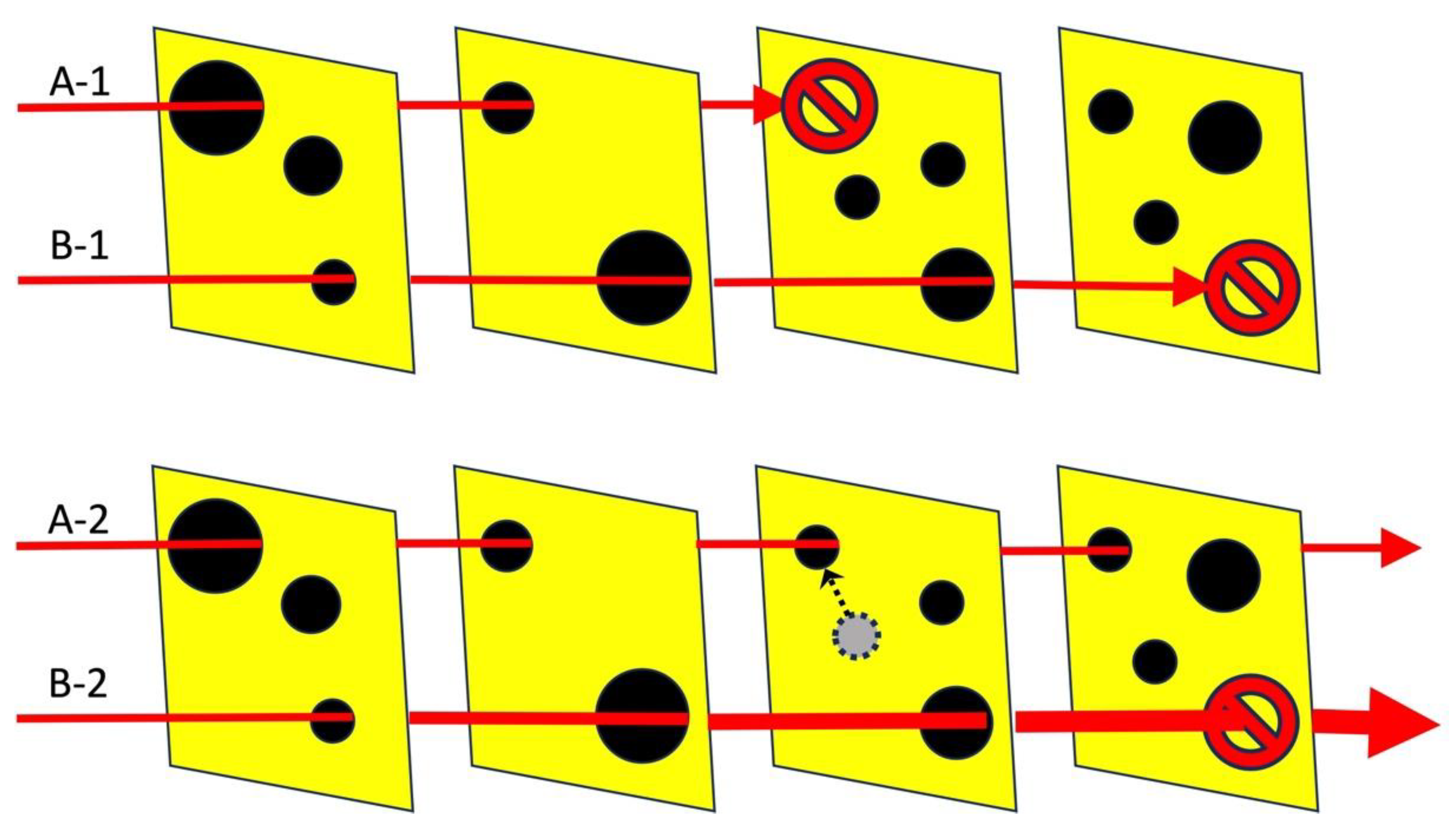

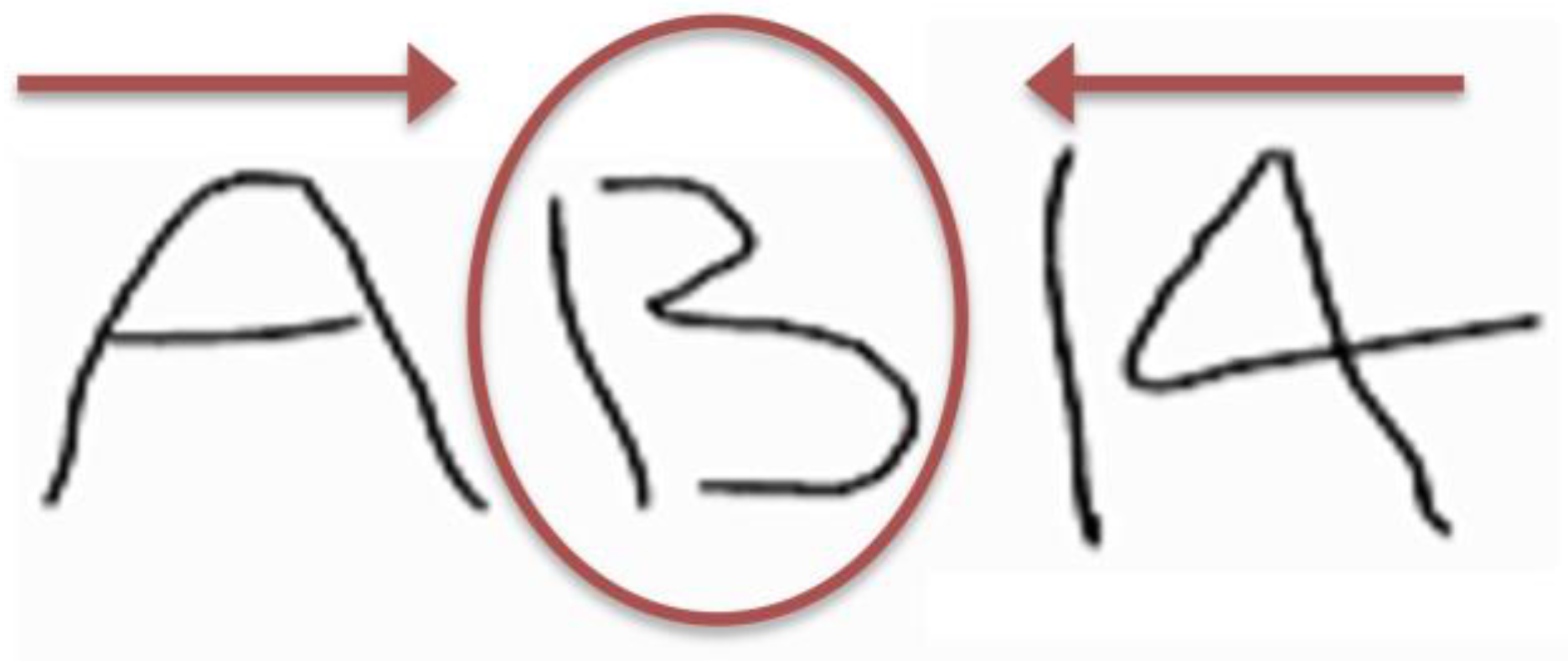

4.2. Blinding to Irrelevant Information

4.3. Compartmentalization

4.4. Bias by Relevant Information and Linear Sequential Unmasking

4.5. Generating a Hypothesis and Using Multiple Hypotheses

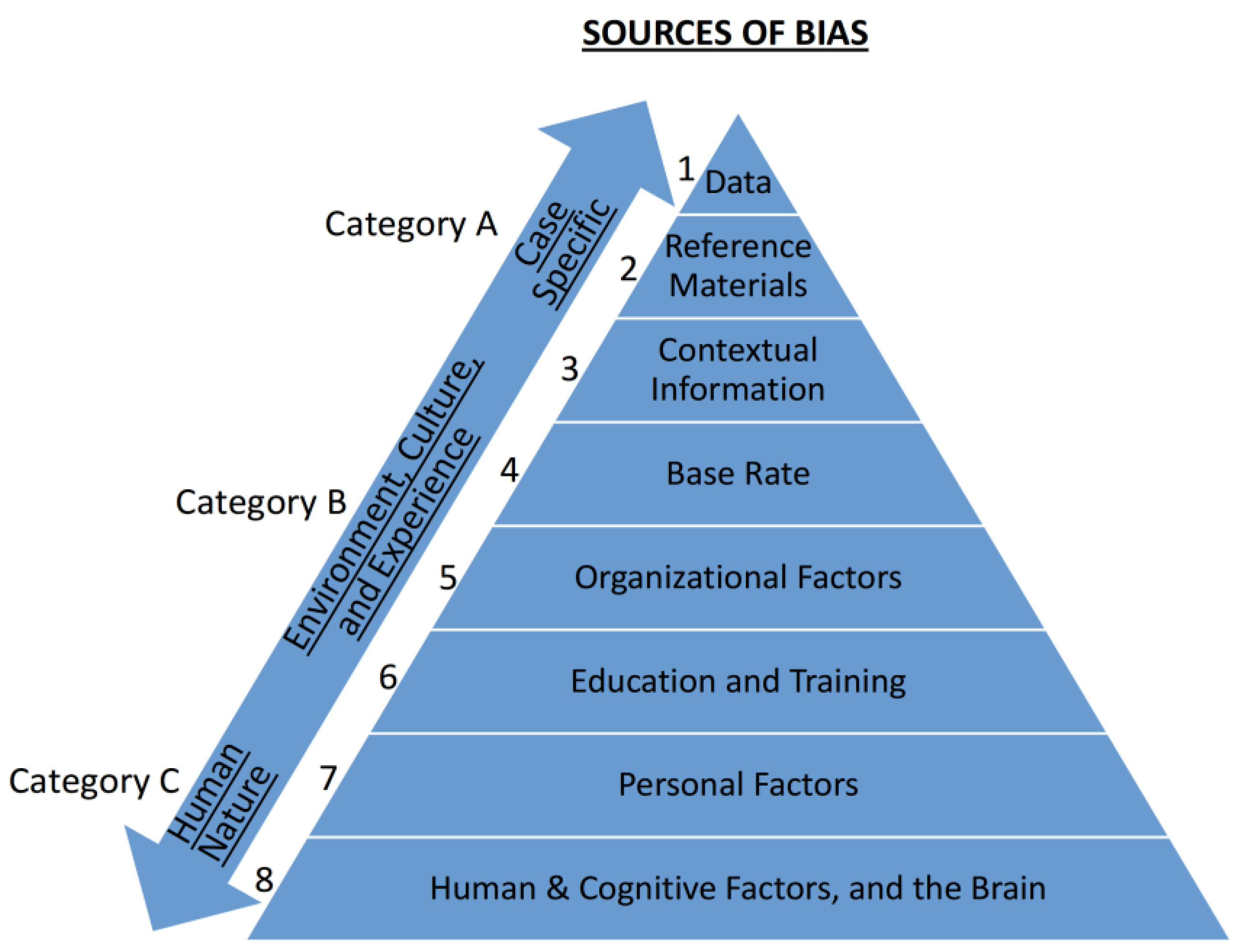

- Proper training and education (see Level 6 in the sources of bias, Figure 1) so that the different elements in the justice and legal systems know and understand cognitive bias and acknowledge its existence and potential harm (as discussed above, this acknowledgment is a prerequisite to minimizing bias and is especially hard to achieve in the justice and legal systems).

- Procedures and best practices that blind irrelevant information and manage the flow of information to avoid bias in the first place.

- If bias does occur, use compartmentalization and other measures to keep it from contaminating further decisions and elements in the justice and legal systems. For example, make sure that each element is organizationally separate and independent (see Level 5 in the sources of bias). This is often not the case today: many forensic laboratories are part of the police, and some are even part of the DA’s Office.

- Mandating full transparency, so that each and every communication between the different elements is documented. This, by itself, will make people reluctant to give irrelevant contextual information or attempt to bias others. And, if such things do occur, at least they will be transparent.
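As a minimal sketch of how the blinding and transparency measures above might fit together in a case-management workflow, the following Python example passes only task-relevant fields to an examiner and logs what was disclosed and what was withheld. The field names, the `TASK_RELEVANT_FIELDS` set, and the `blind_request` and `CommunicationLog` helpers are hypothetical illustrations, not part of any procedure described here; deciding which information is task-relevant remains a judgment for each discipline.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical policy: which fields count as task-relevant is an assumption
# made for illustration and would be set separately for each discipline.
TASK_RELEVANT_FIELDS = {"case_id", "evidence_item", "requested_analysis"}


@dataclass
class CommunicationLog:
    """Append-only record of what was passed between elements and what was withheld."""
    entries: list = field(default_factory=list)

    def record(self, sender, recipient, disclosed, withheld):
        self.entries.append({
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "sender": sender,
            "recipient": recipient,
            "disclosed": sorted(disclosed),  # field names only
            "withheld": sorted(withheld),    # field names only, never values
        })


def blind_request(request, sender, recipient, log):
    """Return only the task-relevant fields and document the exchange."""
    disclosed = {k: v for k, v in request.items() if k in TASK_RELEVANT_FIELDS}
    withheld = set(request) - TASK_RELEVANT_FIELDS
    log.record(sender, recipient, disclosed.keys(), withheld)
    return disclosed


log = CommunicationLog()
submission = {
    "case_id": "C-001",
    "evidence_item": "latent print 3",
    "requested_analysis": "comparison",
    "suspect_confessed": True,      # irrelevant contextual information
    "detective_opinion": "guilty",  # irrelevant contextual information
}
examiner_view = blind_request(submission, "investigator", "examiner", log)
```

Logging the names of withheld fields, rather than their values, keeps the record transparent without re-exposing the recipient to the biasing information.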

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Public Significance Statement

Conflicts of Interest

| 1 | This definition was originally created to address bias in forensic science; here, it is applied across the justice and legal systems. |

References

- Almada, H. R., Passalacqua, N. V., Congram, D., & Pilloud, M. (2021). As forensic scientists and as people, we must not confuse objectivity with neutrality. Journal of Forensic Sciences, 66, 2067–2068.

- Ask, K., & Granhag, P. A. (2005). Motivational sources of confirmation bias in criminal investigations: The need for cognitive closure. Journal of Investigative Psychology and Offender Profiling, 2, 43–63.

- Balcetis, E., & Dunning, D. (2006). See what you want to see: Motivational influences on visual perception. Journal of Personality and Social Psychology, 91, 612–625.

- Balcetis, E., & Dunning, D. (2010). Wishful seeing: More desired objects are seen as close. Psychological Science, 21, 147–152.

- Baldwin, J. (1993). Police interview techniques: Establishing truth or proof? British Journal of Criminology, 33, 325–352.

- Beilock, S. L., Carr, T. H., MacMahon, C., & Starkes, J. L. (2002). When paying attention becomes counterproductive: Impact of divided versus skill-focused attention on novice and experienced performance of sensorimotor skills. Journal of Experimental Psychology: Applied, 8, 6–16.

- Benjamin, R. (Ed.). (2019a). Captivating technology: Race, carceral technoscience, and liberatory imagination in everyday life. Duke University Press.

- Benjamin, R. (2019b). Race after technology: Abolitionist tools for the New Jim Code. Polity Publishing.

- Berryessa, C., Dror, I. E., & McCormack, B. (2023). Prosecuting from the bench? Examining sources of pro-prosecution bias in judges. Legal and Criminological Psychology, 28, 1–14.

- Berthet, V. (2022). The impact of cognitive biases on professionals’ decision-making: A review of four occupational areas. Frontiers in Psychology, 12, 802439.

- Biggs, A. T., Kramer, M. R., & Mitroff, S. R. (2018). Using cognitive psychology research to inform professional visual search operations. Journal of Applied Research in Memory and Cognition, 7, 189–198.

- Borum, R., Otto, R., & Golding, S. (1993). Improved clinical judgment and decision making in forensic evaluation. Journal of Psychiatry & Law, 21, 35–76.

- Brook, C., Lynøe, N., Eriksson, A., & Balding, D. (2021). Retraction of a peer reviewed article suggests ongoing problems with Australian forensic science. Forensic Science International: Synergy, 3, 100208.

- Buolamwini, J., & Gebru, T. (2018). Gender shades: Intersectional accuracy disparities in commercial gender classification. Proceedings of Machine Learning Research, 81, 77–91.

- Burke, J. L., Healy, J., & Yang, Y. (2024). Reducing biases in the criminal legal system: A perspective from expected utility. Law and Human Behavior, 48(5–6), 356–367.

- Carlson, K. A., & Russo, J. E. (2001). Biased interpretation of evidence by mock jurors. Journal of Experimental Psychology: Applied, 7, 91–103.

- Cuellar, M., Mauro, J., & Luby, A. (2022). A probabilistic formalisation of contextual bias: From forensic analysis to systemic bias in the criminal justice system. Journal of the Royal Statistical Society Series A: Statistics in Society, 185, S620–S643.

- Curley, L. J., Munro, J., & Dror, I. E. (2022a). Cognitive and human factors in legal layperson decision making: Sources of bias in juror decision making. Medicine, Science and the Law, 3, 206–215.

- Curley, L. J., Murray, J., MacLean, R., Munro, J., Lages, M., Frumkin, L. A., Laybourn, P., & Brown, D. (2022b). Verdict spotting: Investigating the effects of juror bias, evidence anchors and verdict system in jurors. Psychiatry, Psychology and Law, 29, 323–344.

- Danziger, S., Levav, J., & Avnaim-Pesso, L. (2011). Extraneous factors in judicial decisions. Proceedings of the National Academy of Sciences (PNAS), 108, 6889–6892.

- Darley, J. M., & Gross, P. H. (1983). A hypothesis-confirming bias in labeling effects. Journal of Personality and Social Psychology, 44, 20–33.

- Daumeyer, N. M., Onyeador, I. N., Brown, X., & Richeson, J. A. (2019). Consequences of attributing discrimination to implicit vs. explicit bias. Journal of Experimental Social Psychology, 84, 103812.

- Davidson, M., Nakhaeizadeh, S., & Rando, C. (2023). Cognitive bias and the order of examination in forensic anthropological non-metric methods: A pilot study. Australian Journal of Forensic Science, 5, 255–271.

- Davis, A. J. (2007). Arbitrary justice: The power of the American prosecutor. Oxford University Press.

- Delattre, E. J. (2011). Character and cops: Ethics in policing (6th ed.). AEI Press.

- Deutsch, J. A., & Deutsch, D. (1963). Attention: Some theoretical considerations. Psychological Review, 70, 80–90.

- Dror, I. E. (2011). The paradox of human expertise: Why experts get it wrong. In N. Kapur (Ed.), The paradoxical brain (pp. 177–188). Cambridge University Press.

- Dror, I. E. (2018). Biases in forensic experts. Science, 360, 243.

- Dror, I. E. (2020). Cognitive and human factors in expert decision making: Six fallacies and the eight sources of bias. Analytical Chemistry, 92, 7998–8004.

- Dror, I. E., Charlton, D., & Peron, A. E. (2006). Contextual information renders experts vulnerable to make erroneous identifications. Forensic Science International, 156(1), 74–78.

- Dror, I. E., & Hampikian, G. (2011). Subjectivity and bias in forensic DNA mixture interpretation. Science & Justice, 51, 204–208.

- Dror, I. E., & Kukucka, J. (2021). Linear sequential unmasking–expanded (LSU-E): A general approach for improving decision making as well as minimizing bias. Forensic Science International: Synergy, 3, 100161.

- Dror, I. E., Melinek, J., Arden, J. L., Kukucka, J., Hawkins, S., Carter, J., & Atherton, D. (2021). Cognitive bias in forensic pathology decisions. Journal of Forensic Sciences, 66, 1751–1757.

- Dror, I. E., Thompson, W. C., Meissner, C. A., Kornfield, I., Krane, D., Saks, M., & Risinger, M. (2015). Context management toolbox: A linear sequential unmasking (LSU) approach for minimizing cognitive bias in forensic decision making. Journal of Forensic Sciences, 60, 1111–1112.

- Dror, I. E., Wolf, D. A., Phillips, G., Gao, S., Yang, Y., & Drake, S. A. (2022). Contextual information in medicolegal death investigation decision-making: Manner of death determination for cases of a single gunshot wound. Forensic Science International: Synergy, 5, 100285.

- Dror, L. (2022). Is there an epistemic advantage to being oppressed? Noûs, 57, 618–640.

- Eeden, C. A. J., de Poot, C. J., & van Koppen, P. J. (2019). The forensic confirmation bias: A comparison between experts and novices. Journal of Forensic Sciences, 64, 120–126.

- Ensminger, J. J., Minhinnick, S., Thomas, J. L., & Dror, I. E. (2020). The use and abuse of dogs in the witness box. Suffolk Journal of Trial and Appellate Advocacy, 24(1), 1–65.

- Fiske, S. T. (2004). Intent and ordinary bias: Unintended thought and social motivation create casual prejudice. Social Justice Research, 17, 117–127.

- Frank, J. (1930). Law and the modern mind. Brentano’s.

- Gardner, B. O., Kelley, S., Murrie, D. C., & Blaisdell, K. N. (2019). Do evidence submission forms expose latent print examiners to task-irrelevant information? Forensic Science International, 297, 236–242.

- Gilbert, D. T. (1993). The assent of man: Mental representation and the control of belief. In D. M. Wegner, & J. W. Pennebaker (Eds.), Handbook of mental control (pp. 57–87). Prentice-Hall.

- Godwin, H. J., Menneer, T., Cave, K. R., Thaibsyah, M., & Donnelly, N. (2015). The effects of increasing target prevalence on information processing during visual search. Psychonomic Bulletin & Review, 22, 469–475.

- Goldenson, J., & Gutheil, T. (2023). Forensic mental health evaluators’ unprocessed emotions as an often-overlooked form of bias. The Journal of the American Academy of Psychiatry and the Law, 51, 551–555.

- Greenwald, A. G., & Banaji, M. R. (1995). Implicit social cognition: Attitudes, self-esteem, and stereotypes. Psychological Review, 102, 4–27.

- Grometstein, R. (2007). Prosecutorial misconduct and noble cause corruption. Criminal Law Bulletin, 43, 63–75.

- Growns, B., & Kukucka, J. (2021). The prevalence effect in fingerprint identification: Match and non-match base-rates impact misses and false alarms. Applied Cognitive Psychology, 35, 751–760.

- Guthrie, C., Rachlinski, J. J., & Wistrich, A. J. (2001). Inside the judicial mind. Cornell Law Review, 86, 777–830.

- Haber, E. (2021). Racial recognition. Cardozo Law Review, 43, 71–134.

- Harris, A. P., & Sen, M. (2019). Bias and judging. Annual Review of Political Science, 22, 241–259.

- Hartley, S., Winburn, A. P., & Dror, I. E. (2022). Metric forensic anthropology decisions: Reliability and biasability of sectioning-point-based sex estimates. Journal of Forensic Sciences, 67, 68–79.

- Hasel, L. E., & Kassin, S. M. (2009). On the presumption of evidentiary independence: Can confessions corrupt eyewitness identifications? Psychological Science, 20, 122–126.

- Hetey, R. C., & Eberhardt, J. L. (2018). The numbers don’t speak for themselves: Racial disparities and the persistence of inequality in the criminal justice system. Current Directions in Psychological Science, 27, 183–187.

- Huang, C. Y., & Bull, R. (2021). Applying Hierarchy of Expert Performance (HEP) to investigative interview evaluation: Strengths, challenges and future directions. Psychiatry, Psychology and Law, 28, 255–273.

- Inbau, F. E., Reid, J. E., Buckley, J. P., & Jayne, B. C. (2001). Criminal interrogation and confessions (4th ed.). Aspen.

- Jeanguenat, A. M., Budowle, B., & Dror, I. E. (2017). Strengthening forensic DNA decision making through a better understanding of the influence of cognitive bias. Science and Justice, 57(6), 415–420.

- Jenkins, B. D., Le Grand, A. M., Neuschatz, J. S., Golding, J. M., Wetmore, S. A., & Price, J. L. (2023). Testing the forensic confirmation bias: How jailhouse informants violate evidentiary independence. Journal of Police and Criminal Psychology, 38, 93–104.

- Kahneman, D., Sibony, O., & Sunstein, C. R. (2021). Noise: A flaw in human judgment. William Collins.

- Kassin, S. M. (2022). Duped: Why innocent people confess. Prometheus.

- Kassin, S. M., Dror, I. E., & Kukucka, J. (2013). The forensic confirmation bias: Problems, perspectives, and proposed solutions. Journal of Applied Research in Memory and Cognition, 2(1), 42–52.

- Kassin, S. M., Goldstein, C. C., & Savitsky, K. (2003). Behavioral confirmation in the interrogation room: On the dangers of presuming guilt. Law and Human Behavior, 27, 187–203.

- Klayman, J., & Ha, Y. W. (1987). Confirmation, disconfirmation, and information in hypothesis testing. Psychological Review, 94, 211–228.

- Klockars, C. B. (1980). The Dirty Harry problem. The Annals of the American Academy of Political and Social Science, 452, 33–47.

- Koppl, R. (2020). Do court-assessed fees induce laboratory contingency bias in crime laboratories? Journal of Forensic Sciences, 6, 1793–1794.

- Kukucka, J., & Dror, I. E. (2023). Human factors in forensic science: Psychological causes of bias and error. In D. DeMatteo, & K. Scherr (Eds.), The Oxford handbook of psychology and law. Chapter 36, 621–C36P212. Oxford University Press.

- Kunda, Z. (1990). The case for motivated reasoning. Psychological Bulletin, 108, 480–498.

- Kunkler, K. S., & Roy, T. (2023). Reducing the impact of cognitive bias in decision making: Practical actions for forensic science practitioners. Forensic Science International: Synergy, 7, 100341.

- Lange, F., Heilbron, M., & Kok, P. (2018). How do expectations shape perception? Trends in Cognitive Sciences, 22, 764–779.

- Lawson, R. G. (1967). Order of presentation as a factor in jury persuasion. Kentucky Law Journal, 56, 523–555.

- Lecci, L., & Myers, B. (2008). Individual differences in attitudes relevant to juror decision making: Development and validation of the pretrial juror attitude questionnaire (PJAQ). Journal of Applied Social Psychology, 38, 2010–2038.

- Lecci, L., & Myers, B. (2009). Predicting guilt judgements and verdict change using a measure of pretrial bias in a videotaped mock trial with deliberating jurors. Psychology, Crime & Law, 15, 619–634.

- Lidén, M., Thiblin, I., & Dror, I. E. (2023). The role of alternative hypotheses in reducing bias in forensic medical experts’ decision making. Science and Justice, 63(5), 581–587.

- Lund, F. H. (1925). The psychology of belief: IV. The law of primacy in persuasion. Journal of Abnormal and Social Psychology, 20, 183–191.

- Lynch, M., Kidd, T., & Shaw, E. (2022). The subtle effects of implicit bias instructions. Law & Policy, 44, 98–124.

- Lynøe, N., Elinder, G., Hallberg, B., Rosén, M., Sundgren, P., & Eriksson, A. (2017). Insufficient evidence for ‘shaken baby syndrome’—A systematic review. Acta Paediatrica, 106, 1021–1027.

- Maeder, E., Yamamoto, S., & Saliba, P. (2015). The influence of defendant race and victim physical attractiveness on juror decision-making in a sexual assault trial. Psychology, Crime & Law, 21, 62–79.

- Maude, J. (2014). Differential diagnosis: The key to reducing diagnosis error, measuring diagnosis and a mechanism to reduce healthcare costs. Diagnosis, 1, 107–109.

- Melnikoff, D. E., & Strohminger, N. (2020). The automatic influence of advocacy on lawyers and novices. Nature Human Behaviour, 4, 1258–1264.

- Moore, D. A., Cain, D. M., Loewenstein, G., & Bazerman, M. H. (2005). Conflicts of interest: Challenges and solutions in business, law, medicine, and public policy. Cambridge University Press.

- Moore, D. A., Tanlu, L., & Bazerman, M. H. (2010). Conflict of interest and the intrusion of bias. Judgment and Decision Making, 5, 37–53.

- Murrie, D. C., Boccaccini, M. T., Guarnera, L. A., & Rufino, K. A. (2013). Are forensic experts biased by the side that retained them? Psychological Science, 24, 1889–1897.

- Myles-Worsley, M., Johnston, W. A., & Simons, M. A. (1988). The influence of expertise on X-ray image processing. Journal of Experimental Psychology: Learning, Memory, and Cognition, 14, 553–557.

- National Academy of Sciences. (2009). Strengthening forensic science in the United States: A path forward. National Academies Press. Available online: https://www.ojp.gov/pdffiles1/nij/grants/228091.pdf (accessed on 30 January 2024).

- National Commission on Forensic Science. (2015). Ensuring that forensic analysis is based upon task-relevant information. Available online: https://www.justice.gov/ncfs/file/818196/download (accessed on 30 January 2024).

- Neal, T. M. S., & Brodsky, S. L. (2016). Forensic psychologists’ perceptions of bias and potential correction strategies in forensic mental health evaluations. Psychology, Public Policy, and Law, 22, 58–76.

- Neuilly, M. (2022). Sources of bias in death determination: A research note articulating the need to include systemic sources of biases along with cognitive ones as impacting mortality data. Journal of Forensic Sciences, 67, 2032–2039.

- Nickerson, R. S. (1998). Confirmation bias: A ubiquitous phenomenon in many guises. Review of General Psychology, 2, 175–220.

- Nisbett, R. E., & Wilson, T. D. (1977). Telling more than we can know: Verbal reports on mental processes. Psychological Review, 84, 231–259.

- Noble, S. U. (2018). Algorithms of oppression. New York University Press.

- O’Brien, B. (2009). Prime suspect: An examination of factors that aggravate and counteract confirmation bias in criminal investigations. Psychology, Public Policy, and Law, 15, 315–334.

- OIG. (2011). A review of the FBI’s progress in responding to the recommendations in the Office of the Inspector General report on the fingerprint misidentification in the Brandon Mayfield case. Available online: https://www.oversight.gov/sites/default/files/oig-reports/s1105.pdf (accessed on 30 January 2024).

- Peat, M. A. (2021). JFS editor-in-chief preface. Journal of Forensic Sciences, 66, 2539–2540.

- Pezdek, K., & Lerer, T. (2023). The new reality: Non-eyewitness identifications in a surveillance world. Current Directions in Psychological Science, 32, 439–445.

- President’s Council of Advisors on Science and Technology. (2016). Forensic science in criminal courts: Ensuring scientific validity of feature-comparison methods. Available online: https://obamawhitehouse.archives.gov/sites/default/files/microsites/ostp/PCAST/pcast_forensic_science_report_final.pdf (accessed on 30 January 2024).

- Pronin, E., Gilovich, T., & Ross, L. (2004). Objectivity in the eye of the beholder: Divergent perceptions of bias in self versus others. Psychological Review, 111, 781–799.

- Pronin, E., Lin, D. Y., & Ross, L. (2002). The bias blind spot: Perceptions of bias in self versus others. Personality and Social Psychology Bulletin, 28, 369–381.

- Quigley-McBride, A., Dror, I. E., Roy, T., Garrett, B. L., & Kukucka, J. (2022). A practical tool for information management in forensic decisions: Using Linear Sequential Unmasking-Expanded (LSU-E) in casework. Forensic Science International: Synergy, 4, 100216.

- Reason, J. (1990). Human error. Cambridge University Press. ISBN 978-0-521-30669-0.

- Sah, S., Robertson, C. T., & Baughman, S. B. (2015). Blinding prosecutors to defendants’ race: A policy proposal to reduce unconscious bias in the criminal justice system. Behavioral Science & Policy, 1, 83–91.

- Shapiro, N., & Keel, T. (2023). Naturalizing unnatural death in Los Angeles County jails. Medical Anthropology Quarterly, 38(1), 6–23.

- Simon, D. (2012). In doubt: The psychology of the criminal justice process. Harvard University Press.

- Simon, D., Ahn, M., Stenstrom, D. M., & Read, S. J. (2020). The adversarial mindset. Psychology, Public Policy, and Law, 26, 353–377.

- Smith, A. M., & Wells, G. L. (2023). Telling us less than what they know: Expert inconclusive reports conceal exculpatory evidence in forensic cartridge-case comparisons. Journal of Applied Research in Memory and Cognition, 13(1), 147–152.

- Thompson, W. C. (2023). Shifting decision thresholds can undermine the probative value and legal utility of forensic pattern-matching evidence. Proceedings of the National Academy of Sciences (PNAS), 120, e2301844120.

- Treisman, A. (1961). Contextual cues in selective listening. Quarterly Journal of Experimental Psychology, 12, 242–248.

- Vidmar, N. (2011). The psychology of trial judging. Current Directions in Psychological Science, 20, 58–62.

- Voigt, R., Camp, N. P., Prabhakaran, V., & Eberhardt, J. L. (2017). Language from police body camera footage shows racial disparities in officer respect. Proceedings of the National Academy of Sciences (PNAS), 114, 6521–6526.

- Vomfell, L., & Stewart, N. (2012). Officer bias, over-patrolling and ethnic disparities in stop and search. Nature Human Behaviour, 5, 566–575.

- Wagenaar, W. A., van Koppen, P. J., & Crombag, H. F. M. (1993). Anchored narratives: The psychology of criminal evidence. St. Martin’s Press.

- Wegner, D. M. (1994). Ironic processes of mental control. Psychological Review, 101, 34–52.

- Whitehead, F. A., Williams, M. R., & Sigman, M. E. (2022). Decision theory and linear sequential unmasking in forensic fire debris analysis: A proposed workflow. Forensic Chemistry, 29, 100426.

- Wienroth, M., & McCartney, C. (2023). “Noble cause casuistry” in forensic genetics. WIREs Forensic Science, 6(1), e1502.

- Wilson, T. D., & Brekke, N. (1994). Mental contamination and mental correction: Unwanted influences on judgments and evaluations. Psychological Bulletin, 116, 117–142.

- Winburn, A. P., & Clemmons, C. (2021). Objectivity is a myth that harms the practice and diversity of forensic science. Forensic Science International: Synergy, 3, 100196.

- Wood, B. P. (1999). Visual expertise. Radiology, 211, 1–3.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Dror, I.E. Biased and Biasing: The Hidden Bias Cascade and Bias Snowball Effects. Behav. Sci. 2025, 15, 490. https://doi.org/10.3390/bs15040490
