Human-Centred Perspectives on Artificial Intelligence in the Care of Older Adults: A Q Methodology Study of Caregivers’ Perceptions

Abstract

1. Introduction

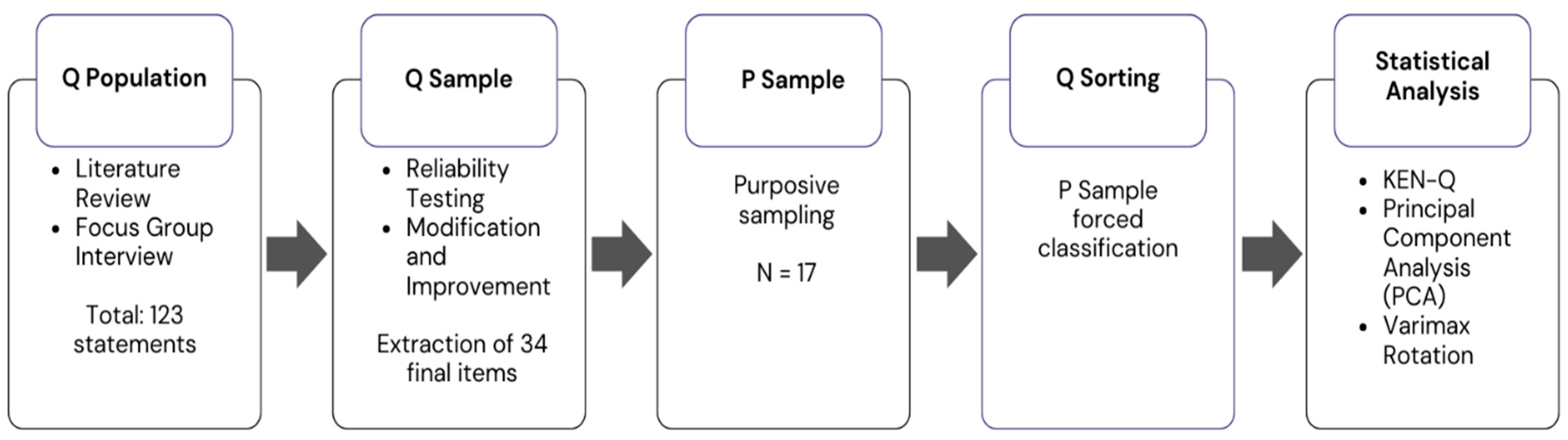

2. Study Method

2.1. AI Virtual Human

2.2. Research Procedure

2.2.1. Organisation of Q Population (Q Concourse)

2.2.2. Selection of the Q Sample

2.2.3. Composition of the P Sample

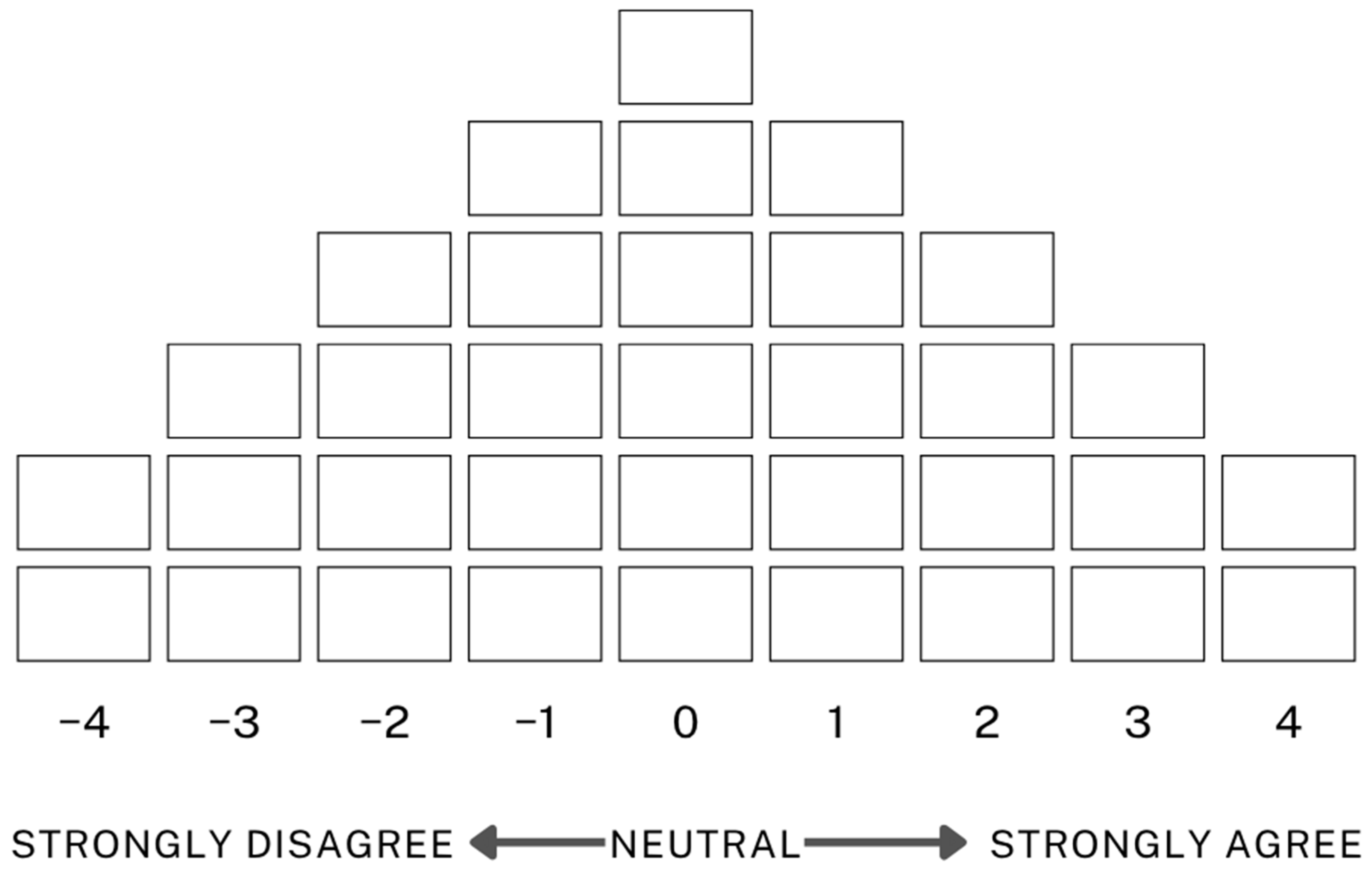

2.2.4. Q Sorting

2.2.5. Data Analysis

3. Study Results

3.1. Q-Factor Analysis Results

3.2. Perception Type Characteristics

3.2.1. Type 1: Active Acceptors

3.2.2. Type 2: Improvement Seekers

It’s frustrating how long it takes for the device to recognise speech, and overall, I don’t think it helps much in daily caregiving. It takes time to activate and process commands properly, and the device lacks sufficient connectivity with medical staff to be useful in responding to changes in a patient’s condition.

3.2.3. Type 3: Emotional Support Seekers

The AI responses were often empathetic and closely matched how I felt, which made me feel understood and emotionally uplifted. I was able to share personal or deeply held thoughts, which brought a sense of relief. While talking to the AI helped shift my mood during moments of sadness, I also felt the need to approach it carefully so as not to become emotionally dependent on it. Still, it helped me recover from temporary emotional lows.

When I talked to the AI about difficult experiences, it responded empathetically and answered my questions in detail, which built trust. But at the same time, I still felt it was ‘just a machine’, so the emotional connection was limited and didn’t fully relieve my stress.

4. Discussion

5. Limitations and Future Research Directions

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Participant Label (Number) | Age (Gender) | Position | Career Choice Motivation | Challenges | Average Patient Age | Key Patient Relations |
|---|---|---|---|---|---|---|
| A (P16) | 48 (F) | Nurse | Job stability | Physical strain/Emotional difficulty | 80~89 | Trust/Emotional support/Communication |
| B (P2) | 68 (F) | Caregiver | Desire to help others | Emotional difficulty | 60~69 | Communication |
| C (P1) | 56 (F) | Nurse | Desire to help others | Emotional difficulty | 80~89 | Trust |
| D (P5) | 55 (M) | Social Worker | Social recognition/Acquisition of professional skills | Relationship with patients’ families | 70~79 | Trust |
| E (P8) | 51 (F) | Caregiver | Desire to help others | Emotional difficulty | 80~89 | Emotional support |
| F (-) | 40 (F) | Nurse | Desire to help others/Job stability | Emotional difficulty | 80~89 | Communication |

| Type | P Sample No. | Factor Weight | Age (Gender) | Position | Career Choice Motivation | Challenges | Average Patient Age | Key Patient Relations |
|---|---|---|---|---|---|---|---|---|
| Type 1 (N = 9) | P11 | 0.7901 | 46 (F) | Caregiver | Meaningful experience | Physical strain | 60~89 | Trust |
|  | P17 | 0.7695 | 50 (F) | Nursing Assistant | Job stability | Communication issues with patients | 70~79 | Consistent care |
|  | P15 | 0.7141 | 45 (F) | Nurse | Acquisition of professional skills | Communication issues with patients | 60~89 | Emotional support |
|  | P16 | 0.6999 | 48 (F) | Nurse | Job stability | Physical strain/Emotional difficulty | 80~89 | Trust/Emotional support/Communication |
|  | P2 | 0.6934 | 68 (F) | Caregiver | Desire to help others | Emotional difficulty | 60~69 | Communication |
|  | P13 | 0.5557 | 42 (F) | Caregiver | Meaningful experience | Communication issues with patients | 80~89 | Trust |
|  | P6 | 0.5037 | 43 (F) | Nurse | Job stability/Desire to help others | Emotional difficulty | 80~89 | Communication |
|  | P4 | 0.3834 | 61 (F) | Caregiver | Desire to help others | Communication issues with patients | 80~89 | Trust |
|  | P10 | −0.3381 | 51 (F) | Caregiver | Desire to help others | Physical strain | 80~89 | Trust |
| Type 2 (N = 4) | P7 | 0.7963 | 43 (F) | Caregiver | Job stability | Time management | 70~79 | Communication |
|  | P1 | 0.7 | 56 (F) | Nurse | Desire to help others | Emotional difficulty | 80~89 | Trust |
|  | P8 | −0.635 | 51 (F) | Caregiver | Desire to help others | Emotional difficulty | 80~89 | Emotional support |
|  | P5 | 0.5693 | 55 (M) | Social Worker | Social recognition/Acquisition of professional skills | Relationship with patients’ families | 70~79 | Trust |
| Type 3 (N = 4) | P3 | 0.7286 | 52 (F) | Caregiver | Job stability | Physical strain/Communication issues with patients | 70~79 | Trust/Communication |
|  | P14 | 0.6337 | 58 (F) | Caregiver | Meaningful experience/Job stability | Communication issues with patients | 80~89 | Trust |
|  | P9 | 0.5368 | 50 (F) | Nursing Assistant | Job stability | Emotional difficulty | 70~79 | Trust |
|  | P12 | 0.3333 | 63 (F) | Caregiver | Meaningful experience/Social recognition | Physical strain | 80~89 | Trust |

| Content | Type 1 | Type 2 | Type 3 |
|---|---|---|---|
| Eigenvalues | 4.01352 | 2.122581 | 1.904245 |
| Explained Variance (%) | 24 | 12 | 11 |
| Cumulative Explained Variance (%) | 24 | 36 | 47 |
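The explained-variance percentages in the table above can be checked from the eigenvalues. The sketch below assumes the usual Q-methodology convention that each of the 17 Q sorts (P1 to P17) contributes one unit of variance to the person-by-person correlation matrix, so a factor's share is its eigenvalue divided by 17. The variable names are illustrative and are not taken from the study's analysis software.

```python
# Eigenvalues as reported in the table above.
eigenvalues = [4.01352, 2.122581, 1.904245]

# Assumption: 17 Q sorts (P1 to P17), so the total variance of the
# person-by-person correlation matrix equals 17.
n_q_sorts = 17

# Share of total variance per factor, in percent.
explained = [100 * ev / n_q_sorts for ev in eigenvalues]

# Running total of the unrounded shares.
cumulative = []
running = 0.0
for share in explained:
    running += share
    cumulative.append(running)

# Round to whole percentages, as the table reports them.
rounded_explained = [round(x) for x in explained]
rounded_cumulative = [round(x) for x in cumulative]

print(rounded_explained)
print(rounded_cumulative)
```

Under this assumption the rounded values agree with the reported rows, which suggests the percentages were computed relative to all 17 Q sorts.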

| Type | 1 | 2 | 3 |
|---|---|---|---|
| 1 | 1 | −0.0831 | 0.0551 |
| 2 |  | 1 | 0.0564 |
| 3 |  |  | 1 |

| No. | Statement | Type 1 Z-Score | Type 1 Q-Sort Value | Type 2 Z-Score | Type 2 Q-Sort Value | Type 3 Z-Score | Type 3 Q-Sort Value |
|---|---|---|---|---|---|---|---|
| 1 | The AVH feels too heavy to use comfortably. | −1.18 | −3 | −0.11 | 0 * | −1.48 | −3 |
| 2 | The character in the AVH feels like I’m speaking to a real person. | −0.96 | −2 | −1.21 | −3 | −0.11 | 0 * |
| 3 | Interacting with the AVH feels like receiving counselling. | 0.01 | 0 * | −2.03 | −4 * | 1.57 | 4 * |
| 4 | The AVH is simple to operate. | 0.3 | 1 * | −0.37 | −1 | −0.82 | −2 |
| 5 | I don’t know who to turn to when problems occur while using the AVH. | −0.18 | −1 | −0.33 | −1 | 0.6 | 1 * |
| 6 | The AVH helps me respond effectively to changes in the patient’s condition. | 0.28 | 1 * | −1.89 | −4 | −2 | −4 |
| 7 | It is difficult to use the AVH for medical purposes. | 0.1 | 0 * | 0.69 | 1 | −0.84 | −2 * |
| 8 | I cannot trust the AVH system. | −1.57 | −4 | −0.28 | 0 * | −1.83 | −4 |
| 9 | Interaction with the AVH lifts my mood. | 1.11 | 2 * | −1 | −2 | −0.87 | −3 |
| 10 | I wouldn’t use the system without financial support. | −0.97 | −2 | 1.69 | 4 * | −0.79 | −2 |
| 11 | It’s hard to get the answers I want from the AVH. | −0.21 | −1 | 0.49 | 1 | 0.26 | 1 |
| 12 | Entertainment features help reduce stress when caring for patients. | 1.74 | 4 * | −0.52 | −1 | −0.46 | −1 |
| 13 | Sharing my struggles with the AVH helps relieve stress because it shows empathy. | 1.41 | 3 * | −1.04 | −3 | −1.59 | −3 |
| 14 | Functional issues make the AVH difficult to use. | −1.23 | −3 * | 0.24 | 1 | −0.1 | 0 |
| 15 | It takes too long for the AVH to recognise speech, which is inconvenient. | −1.05 | −2 * | 1.83 | 4 | 1.02 | 2 |
| 16 | I can say things to the AVH that I can’t easily say to others. | 1.44 | 3 * | −1.66 | −3 * | 2.28 | 4 * |
| 17 | The AVH asks too many questions, making it boring. | −1.25 | −3 * | −0.34 | −1 | −0.29 | −1 |
| 18 | It feels like the system lacks sufficient big data. | −0.93 | −1 * | 0.77 | 1 | 0.29 | 1 |
| 19 | The AVH should offer features for emergencies. | 0.43 | 2 | 0.94 | 2 | 1.13 | 3 |
| 20 | Voice recognition often fails with accents or dialects, which is frustrating. | −1.15 | −2 * | 0.56 | 1 | 1.14 | 3 |
| 21 | The device is helpful in suggesting responses to sudden changes in a patient’s condition. | −0.01 | 0 | −0.14 | 0 | 0.18 | 0 |
| 22 | It’s convenient to access the knowledge needed on the spot while caregiving. | 1.49 | 3 | −0.79 | −2 * | 1.02 | 2 |
| 23 | Existing AI-based real-time platforms are more effective than the AVH. | −0.07 | 0 | 0.12 | 0 | −0.25 | 0 |
| 24 | It would be helpful if the system could store and manage patient information. | 0.81 | 2 | 0.92 | 2 | −0.51 | −1 * |
| 25 | Sensor integration for real-time patient monitoring would be useful. | 1.98 | 4 | 1.63 | 3 | −0.04 | 0 * |
| 26 | Searching the internet directly is faster and easier. | −0.6 | −1 | −0.11 | 0 | 1.01 | 2 * |
| 27 | The mechanical nature of the device makes it feel unfamiliar and impersonal. | −1.85 | −4 * | −1.02 | −2 * | 0.86 | 2 * |
| 28 | The AVH should provide personalised care through individual patient data. | 1.04 | 2 | 0.98 | 3 | 0.61 | 1 |
| 29 | Caregivers can collaborate with the AVH to improve work efficiency. | 0.3 | 1 * | −0.75 | −2 | −0.28 | −1 |
| 30 | The AVH should be able to connect directly with medical staff in emergencies. | 0.24 | 0 | 0.23 | 0 | 0.55 | 1 |
| 31 | It would be better if the AVH could assist with medication management. | 0.33 | 1 * | 0.92 | 2 | −0.76 | −2 |
| 32 | The AVH should track eating habits and offer appropriate advice. | 0.32 | 1 | 0.86 | 2 | 0.21 | 0 * |
| 33 | The AVH must strictly protect patient anonymity. | 0.19 | 0 * | 1.01 | 3 | 1.04 | 3 |
| 34 | The AVH’s design should be senior-friendly. | −0.28 | −1 | −0.3 | −1 | −0.73 | −1 |
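The relationship between the Z-Score and Q-Sort Value columns can be illustrated with a short sketch. It assumes a forced distribution of 2, 3, 4, 5, 6, 5, 4, 3, 2 statements across the values −4 to +4; this distribution is inferred by counting the Type 1 column above and is not stated explicitly in this text. Ranking statements by z-score and filling the slots in order is one common way to derive a factor array, and the authors' software may have handled ties or rounding differently. The function name `assign_q_values` is hypothetical.

```python
# Type 1 z-scores copied from the table above (statement number -> z-score).
z_type1 = {
    1: -1.18, 2: -0.96, 3: 0.01, 4: 0.30, 5: -0.18, 6: 0.28, 7: 0.10,
    8: -1.57, 9: 1.11, 10: -0.97, 11: -0.21, 12: 1.74, 13: 1.41,
    14: -1.23, 15: -1.05, 16: 1.44, 17: -1.25, 18: -0.93, 19: 0.43,
    20: -1.15, 21: -0.01, 22: 1.49, 23: -0.07, 24: 0.81, 25: 1.98,
    26: -0.60, 27: -1.85, 28: 1.04, 29: 0.30, 30: 0.24, 31: 0.33,
    32: 0.32, 33: 0.19, 34: -0.28,
}

# Assumption: forced distribution inferred from the Type 1 column
# (Q-sort value -> number of statements allowed at that value).
slots = {-4: 2, -3: 3, -2: 4, -1: 5, 0: 6, 1: 5, 2: 4, 3: 3, 4: 2}


def assign_q_values(z_scores, slots):
    """Rank statements by z-score and fill the slots from lowest to highest."""
    ranked = sorted(z_scores, key=z_scores.get)  # lowest z-score first
    values = {}
    position = 0
    for value in sorted(slots):
        for statement in ranked[position:position + slots[value]]:
            values[statement] = value
        position += slots[value]
    return values


q_type1 = assign_q_values(z_type1, slots)

# Print a few statements for comparison with the Type 1 column above.
for statement in (25, 12, 3, 18, 27):
    print(statement, q_type1[statement])
```

Only Type 1 is shown here. The same function could be applied to the other columns, although statements with nearly equal reported z-scores may land in different slots than the published values because the table rounds z-scores to two decimals.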


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Shin, S.J.; Moon, K.Y.; Kim, J.Y.; Jeong, Y.-G.; Lee, S.Y. Human-Centred Perspectives on Artificial Intelligence in the Care of Older Adults: A Q Methodology Study of Caregivers’ Perceptions. Behav. Sci. 2025, 15, 1541. https://doi.org/10.3390/bs15111541
