The Digital Centaur as a Type of Technologically Augmented Human in the AI Era: Personal and Digital Predictors

Abstract

1. Introduction

1.1. Digital Centaurs

1.2. Human Intelligence and Mindfulness

1.3. Digital Predictors: User Activity, Digital Competence, and Attitudes Toward Technology

1.4. Relationship with Artificial Intelligence

2. Materials and Methods

2.1. Participants

2.2. Data Collection

2.3. Materials and Procedure

2.3.1. Socio-Demographic Questionnaire

2.3.2. Digital Centaur

2.3.3. Trait Mindfulness and Emotional Intelligence

2.3.4. Internet Use, Attitudes Toward Technology, and Digital Competence

2.3.5. AI Use and Attitudes Toward AI

2.4. Data Analysis

3. Results

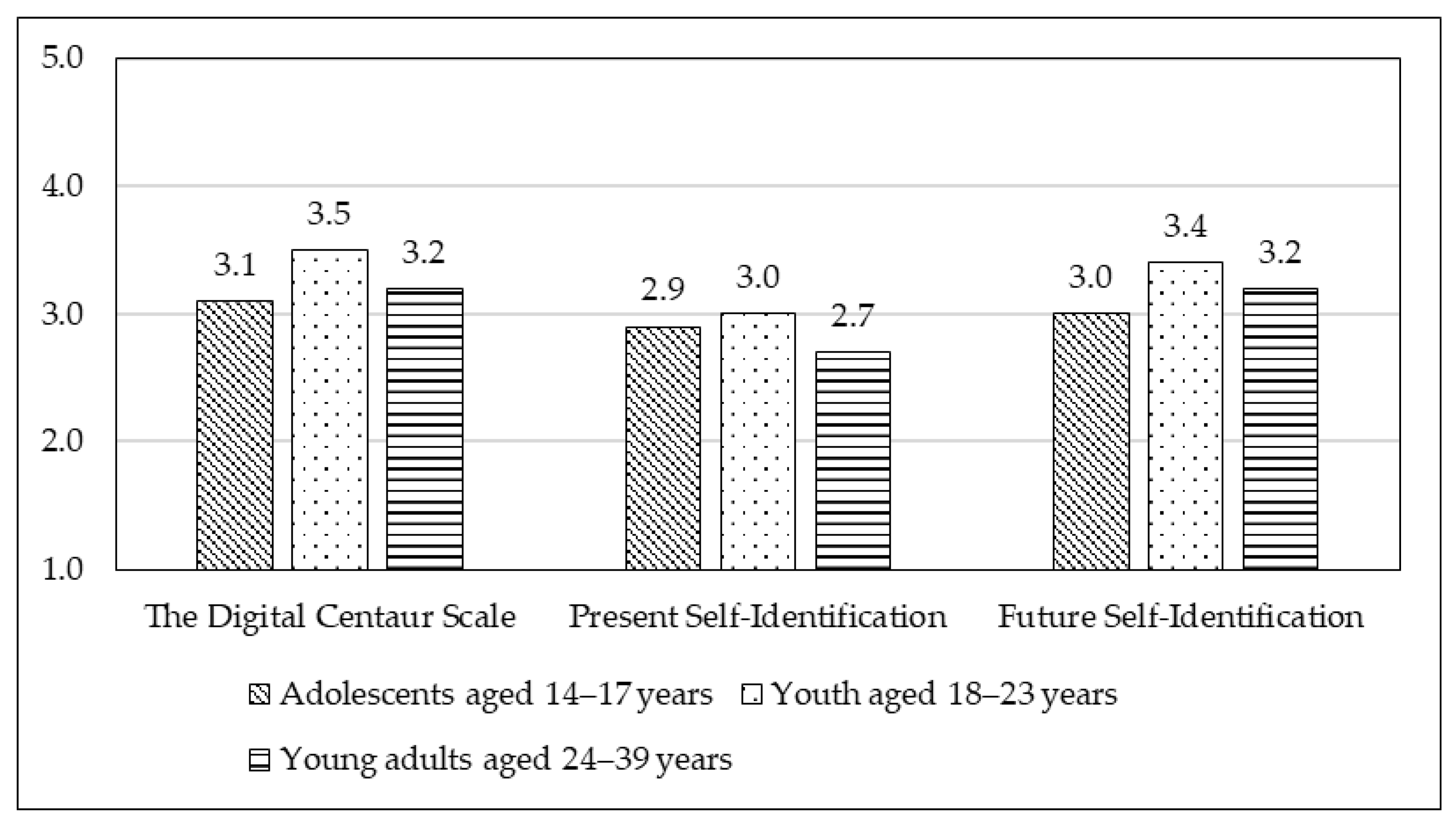

3.1. Digital Centaurs: Age, Gender and Financial Status

3.2. Predictors of Digital Centaur Strategy Preference

3.3. Attitudes of Digital Centaurs Toward AI

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Scale | The Digital Centaur Scale |
|---|---|
| Mindfulness | 0.225 ** |
| Interpersonal Emotional Intelligence | 0.167 ** |
| Intrapersonal Emotional Intelligence | 0.212 ** |
| Digital Competence | 0.259 ** |
| Technopessimism | 0.119 ** |
| Technophilia | 0.450 ** |
| Technorationalism | 0.387 ** |
| Technophobia | 0.030 |
| Daily Internet Usage | 0.134 ** |

| Model | Predictors | Standardized Coefficient β | SE Coefficient B | p-Value |
|---|---|---|---|---|
| Step 1 | Daily Internet Usage | 0.101 | 0.008 | 0.000 |
| | Interpersonal Emotional Intelligence | 0.054 | 0.009 | 0.028 |
| | Intrapersonal Emotional Intelligence | 0.128 | 0.010 | 0.000 |
| | Mindfulness | 0.169 | 0.020 | 0.000 |
| | Digital Competence | 0.202 | 0.001 | 0.000 |
| Step 2 | Daily Internet Usage | 0.054 | 0.007 | 0.009 |
| | Interpersonal Emotional Intelligence | 0.015 | 0.009 | 0.529 |
| | Intrapersonal Emotional Intelligence | 0.070 | 0.009 | 0.002 |
| | Mindfulness | 0.041 | 0.021 | 0.079 |
| | Digital Competence | 0.147 | 0.001 | 0.000 |
| | Technopessimism | −0.111 | 0.024 | 0.000 |
| | Technophilia | 0.386 | 0.038 | 0.000 |
| | Technorationalism | 0.047 | 0.037 | 0.239 |
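
The two-step layout of the table above can be illustrated with a minimal sketch of a hierarchical regression. The estimation procedure and software are not stated in this section, so the code assumes ordinary least squares on z-scored variables. All data are synthetic, and the variable names (`competence`, `mindfulness`, `technophilia`, `centaur`) and effect sizes are hypothetical stand-ins, not the study's data.

```python
import numpy as np

def standardized_betas(X, y):
    """Fit OLS on z-scored predictors and outcome; return (betas, R^2)."""
    Xz = (X - X.mean(axis=0)) / X.std(axis=0, ddof=1)
    yz = (y - y.mean()) / y.std(ddof=1)
    design = np.column_stack([np.ones(len(yz)), Xz])
    coef, *_ = np.linalg.lstsq(design, yz, rcond=None)
    resid = yz - design @ coef
    # yz has mean zero, so yz @ yz is the total sum of squares.
    r2 = 1.0 - (resid @ resid) / (yz @ yz)
    return coef[1:], r2

# Synthetic data (assumption): technophilia depends on mindfulness,
# and the outcome depends on competence and technophilia.
rng = np.random.default_rng(0)
n = 500
competence = rng.normal(size=n)
mindfulness = rng.normal(size=n)
technophilia = 0.5 * mindfulness + rng.normal(size=n)
centaur = 0.2 * competence + 0.4 * technophilia + rng.normal(size=n)

# Step 1: personal and digital predictors only.
step1 = np.column_stack([competence, mindfulness])
betas1, r2_step1 = standardized_betas(step1, centaur)

# Step 2: add the attitude block to the same model.
step2 = np.column_stack([competence, mindfulness, technophilia])
betas2, r2_step2 = standardized_betas(step2, centaur)

# Change in explained variance attributable to the added block.
delta_r2 = r2_step2 - r2_step1
print("Step 1 betas:", np.round(betas1, 3), "R^2:", round(r2_step1, 3))
print("Step 2 betas:", np.round(betas2, 3), "R^2:", round(r2_step2, 3))
print("Delta R^2:", round(delta_r2, 3))
```

Comparing a predictor's coefficient across the two steps shows how much of its association with the outcome is shared with the block added at Step 2.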

| Type | Effect | SE | β | 95% C.I. | p-Value |
|---|---|---|---|---|---|
| Indirect | Mindfulness ⇒ Technophilia ⇒ The Digital Centaur Scale | 0.006 | 0.033 | 0.018–0.044 | <0.001 |
| | Digital competence ⇒ Technophilia ⇒ The Digital Centaur Scale | 0.000 | 0.013 | 0.000–0.001 | 0.012 |
| | Technorationalism ⇒ Technophilia ⇒ The Digital Centaur Scale | 0.027 | 0.289 | 0.217–0.322 | <0.001 |
| Direct | Mindfulness ⇒ The Digital Centaur Scale | 0.021 | 0.028 | −0.022–0.073 | 0.225 |
| | Digital competence ⇒ The Digital Centaur Scale | 0.001 | 0.162 | 0.005–0.009 | <0.001 |
| | Technorationalism ⇒ The Digital Centaur Scale | 0.035 | 0.026 | −0.042–0.090 | 0.480 |
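
The split into indirect and direct effects in the table above can be sketched for a single mediator. How the reported intervals were computed is not stated in this section. The code below assumes a product-of-coefficients indirect effect with a percentile bootstrap interval, which is one common choice. The data are synthetic, and the names `x`, `m`, `y` and the helper functions are hypothetical.

```python
import numpy as np

def ols_slopes(X, y):
    """Return OLS slope coefficients (intercept fitted, then dropped)."""
    design = np.column_stack([np.ones(len(y)), X])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    return coef[1:]

def mediation_effects(x, m, y):
    """Return (indirect, direct) effects of x on y through mediator m."""
    a = ols_slopes(x.reshape(-1, 1), m)[0]              # path x -> m
    direct, b = ols_slopes(np.column_stack([x, m]), y)  # x -> y | m, m -> y | x
    return a * b, direct

def bootstrap_ci(x, m, y, n_boot=1000, seed=0):
    """Percentile bootstrap 95% interval for the indirect effect."""
    rng = np.random.default_rng(seed)
    n = len(x)
    draws = np.empty(n_boot)
    for i in range(n_boot):
        idx = rng.integers(0, n, size=n)  # resample cases with replacement
        draws[i] = mediation_effects(x[idx], m[idx], y[idx])[0]
    return np.percentile(draws, [2.5, 97.5])

# Synthetic data (assumption): x affects y only through m.
rng = np.random.default_rng(1)
n = 500
x = rng.normal(size=n)                 # e.g., a trait predictor
m = 0.5 * x + rng.normal(size=n)       # e.g., an attitude mediator
y = 0.4 * m + rng.normal(size=n)       # e.g., the outcome scale

indirect, direct = mediation_effects(x, m, y)
total = ols_slopes(x.reshape(-1, 1), y)[0]
ci_low, ci_high = bootstrap_ci(x, m, y)
print("indirect:", round(indirect, 3), "direct:", round(direct, 3))
print("95% CI for indirect:", round(ci_low, 3), round(ci_high, 3))
```

In this setup the total effect of `x` on `y` equals the sum of the direct and indirect effects. A pattern like the Mindfulness rows, with a significant indirect path and a non-significant direct path, corresponds to an interval for `indirect` that excludes zero while `direct` stays close to zero.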

| Scale | The Digital Centaur Scale |
|---|---|
| Completing academic tasks | 0.181 ** |
| Resolving work-related tasks | 0.178 ** |
| Entertainment, gaming and leisure | 0.094 ** |
| Obtaining information | 0.087 |
| Functioning as a personal assistant | 0.048 |
| Psychological support | −0.055 |
| Social interaction | −0.073 |
| Receiving relationship advice | 0.015 |
| Developing specific skills and abilities | 0.132 ** |

| Scale | The Digital Centaur Scale |
|---|---|
| Curiosity | 0.356 ** |
| Irritation | −0.047 |
| Pleasure | 0.221 ** |
| Anxiety | −0.099 ** |
| Gratitude | 0.188 ** |
| Envy | −0.093 ** |
| Trust | 0.178 ** |
| Sense of inferiority | −0.096 ** |

| Scale | The Digital Centaur Scale |
|---|---|
| A work colleague | 0.237 ** |
| A friend | 0.070 ** |
| A school or university teacher | 0.168 ** |
| A household assistant | 0.325 ** |
| A psychologist | 0.065 ** |
| A romantic partner | −0.034 |
| A physician | 0.156 ** |
| A judge | 0.112 ** |
| A nanny | 0.052 |
| A city mayor | 0.050 |
| A president | 0.006 |
| A police officer | 0.087 ** |

| Scale | The Digital Centaur Scale |
|---|---|
| AI will lead to the disappearance of professions and transform the labor market | 0.066 ** |
| AI will compete with humans as friends and romantic partners | −0.065 ** |
| AI will be used for the benefit of certain individuals, groups, or organizations | 0.119 ** |
| AI will be used to commit various crimes | 0.151 ** |
| Humans will make critical decisions based on AI and may be mistaken | 0.120 ** |
| AI will go out of human control and start governing people | 0.017 |
| AI will make humans lazy and prevent their development | 0.115 ** |
| AI will destroy humanity | −0.032 |
| People will begin to worship AI and turn it into a religion | −0.055 |
| AI will be used to enhance government control over citizens’ lives | 0.143 ** |
| AI will outperform me in everything, making me feel worthless | −0.074 ** |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Soldatova, G.U.; Chigarkova, S.V.; Ilyukhina, S.N. The Digital Centaur as a Type of Technologically Augmented Human in the AI Era: Personal and Digital Predictors. Behav. Sci. 2025, 15, 1487. https://doi.org/10.3390/bs15111487