Abstract

This study analyzes the perception and usage of ChatGPT based on the technology acceptance model (TAM). Conducting reticular analysis of coincidences (RAC) on a convenience survey among university students in the social sciences, this research delves into the perception and utilization of this artificial intelligence tool. The analysis considers variables such as gender, academic year, prior experience with ChatGPT, and the training provided by university faculty. The networks created with the statistical tool “CARING” highlight the role of perceived utility, credibility, and prior experience in shaping attitudes and behaviors toward this emerging technology. Previous experience, familiarity with video games, and programming knowledge were related to more favorable attitudes towards ChatGPT. Students who received specific training showed lower confidence in the tool. These findings underscore the importance of implementing training strategies that raise awareness among students about both the potential strengths and weaknesses of artificial intelligence in educational contexts.

1. Introduction

Artificial intelligence (AI) represents the generational technological leap of our time [1,2,3]. It is not a new phenomenon, since the first developments date back more than seven decades [4]. However, the last five years (2020–2024) can be regarded as the period of its definitive explosion. A significant part of these advances can be attributed to the commercial launch of the revolutionary tool Chat Generative Pre-trained Transformer, commonly known as ChatGPT, which has shown the world the enormous potential of AI, much of which is still being explored.

ChatGPT is a natural language processing (NLP) system developed by the company OpenAI. It relies on large language models (LLMs) that are pre-trained with deep neural networks on extensive volumes of data. This training enables ChatGPT to learn linguistic and contextual patterns, which is essential for understanding open-ended prompts and generating responses to them. Additionally, by emulating human cognitive processes, ChatGPT interacts with users, enabling dynamic conversations [5,6,7,8,9].

Since ChatGPT was launched in 2022, building on earlier beta versions, numerous studies have addressed its social implications at all levels. This research has produced many arguments in favor of its use in various fields, as well as numerous arguments against it. We are currently at a historical moment in which more research is needed to determine the social implications of using this tool in essential areas such as education. The controversy over its use is intense, and technological advances face many ethical conflicts that are difficult to resolve. This study is framed within this context and aims to shed light on the issue by analyzing the perception and usage of ChatGPT. It is based on the technology acceptance model (TAM) and employs reticular analysis of coincidences (RAC) among university students in the social sciences.

2. Literature Review

2.1. ChatGPT and the Technology Acceptance Model (TAM)

The technology acceptance model (TAM) is a theoretical model that aims to explain and predict user acceptance and the usage of technology [10,11]. It has become one of the most widely used models for studying the adoption of information technologies [12]. The TAM focuses on two main factors: (1) perceived usefulness, i.e., the degree to which a person believes that using a particular technology will enhance their job performance or bring benefits in specific tasks, and (2) perceived ease of use, i.e., the degree to which a person believes that using a particular technology will be free of effort. In the case of ChatGPT, ease of use includes the intuitiveness of the user interface, the simplicity of issuing commands, and the clarity of the responses provided by the tool. It can also encompass the initial learning curve and the ongoing ease of use across various applications.

Grounded in the psychological theories of reasoned action (TRA) and planned behavior (TPB), the TAM has become a key framework for understanding user behavior regarding technology [13]. Subsequent developments of the TAM have introduced additional factors that may influence technology acceptance. For instance, attitude toward using captures users’ overall opinions and feelings about the technology. Behavioral intention to use and actual use capture users’ plans to use the technology and their actual usage patterns. Social influence examines how the opinions of others or organizational culture affect technology acceptance. Facilitating conditions refer to the perceived availability of the resources and support needed to use the technology. By applying the TAM, researchers and developers can identify factors that promote or hinder the acceptance of ChatGPT. Elements such as training, support, and experience with similar technologies have recently received particular attention because they can, for example, boost users’ confidence and perceived usefulness, reduce resistance to adopting the technology, and lower its perceived complexity.

Extending the TAM with other theoretical perspectives that aim to explain and predict users’ intentions to use information technology gave rise to the unified theory of acceptance and use of technology (UTAUT) [14,15,16]. This approach identifies four key constructs influencing intention and usage behavior: performance expectancy, the degree to which an individual believes that using the technology will enhance their job performance; effort expectancy, the degree of ease associated with using the technology; social influence, the extent to which an individual perceives that important others believe they should use the new technology; and facilitating conditions, the perceived availability of the resources and support needed to use the technology. According to the UTAUT, performance expectancy, effort expectancy, and social influence each affect users’ behavioral intention to use the technology, whereas behavioral intention and facilitating conditions determine its actual use.

The relationship between the TAM and the UTAUT is evident in several aspects. Perceived usefulness in the TAM is akin to performance expectancy in the UTAUT, as both pertain to the belief that technology use will improve performance. Similarly, perceived ease of use in the TAM is equivalent to effort expectancy in the UTAUT, as both refer to the ease of technology use. While the TAM addresses social influence only indirectly, the UTAUT explicitly includes it as a core construct. The UTAUT also introduces facilitating conditions, which are not explicitly present in the TAM but are partly implied by the concept of ease of use. Both models focus on predicting the intention to use and the actual use of technology.

2.2. The Emergence of ChatGPT in Educational Settings

ChatGPT has diverse applications in education, ranging from generating, translating, and summarizing content to producing it in different formats, such as stories, essays, letters, and tweets [17]. It is also used for creative tasks, such as composing music and writing theatrical scripts and screenplays, among others. Moreover, ChatGPT excels at answering a wide range of questions, delivering customized responses in various tones, including formal, informal, motivational, commercial, and academic. This adaptability makes it a valuable asset for language acquisition, for improving writing proficiency, and for synthesizing information across diverse research domains [18,19,20,21,22,23,24]. However, it has also been suggested that using ChatGPT for writing makes it necessary to teach more advanced skills that draw on students’ critical, analytical, and argumentative abilities [25,26].

Beyond its academic applications, ChatGPT has the potential to enhance student motivation by aiding in task and class management, especially in online teaching and blended learning environments [23,27,28,29,30,31,32]. It also improves virtual and visual learning in health-related disciplines [33,34,35,36,37,38]. However, in some tasks related to medical education, ChatGPT does not always yield better learning outcomes than traditional methods [39,40].

The benefits of using it include help in finding pertinent resources and comprehensive feedback [41] covering aspects such as content, structure, grammar, and spelling [23,27,28,42,43]. Moreover, it fosters collaboration among students [27,44,45], enhances creativity, problem-solving, and critical thinking, and improves several metacognitive abilities [46,47,48,49]. It also increases accessibility and inclusion [20], extending its benefits to students with disabilities and to those who are not proficient in the language in which they study or work [21,45]. In summary, it functions as a customized tool that fosters autonomy and inclusivity, thereby enhancing the overall learning experience [50].

However, while the integration of ChatGPT in educational environments has been positively received [20,42,48,51,52,53,54], as reflected in the growing number of scientific publications [55], it also raises numerous concerns and reservations related to ethics, academic practice, and the training teachers need to master this tool [41,56,57,58,59,60,61,62]. Concerns have been raised regarding accuracy and the generation of incorrect responses, which can be attributed to the quality, diversity, and complexity of the training data, as well as to the input provided by users [7,57]. Further studies also highlight biases and unsuitable content arising from language models and algorithms, together with difficulties in grasping the nuances of human language [63,64].

Moreover, the more critical strand of the scholarly discussion on ChatGPT in education emphasizes issues such as plagiarism and ethical considerations [27,65,66,67,68], excessive dependence on technology [7,60,61,66], potential threats to critical thinking [7,45,60,69], data privacy and security [54,70,71], lack of human moderation in user interactions [72], and the digital divide [7,45].

2.3. Factors Influencing Technology Acceptance among University Students

The initial studies on the acceptance of ChatGPT soon applied the TAM [72,73,74,75,76,77,78,79,80], showing that perceived usefulness and ease of use positively affected attitude and intention to use ChatGPT, and that most students had a positive view of ChatGPT, finding it user-friendly and helpful for completing assignments. Since then, numerous factors have been found to influence university students’ acceptance of ChatGPT, including the aforementioned perceived ease of use and perceived usefulness, social influence (e.g., peer recommendations), organizational support, self-evaluation judgments, information quality, reliability, experience, performance expectancy, hedonic motivation, price value, habit, and facilitating conditions [81,82,83,84,85,86,87,88,89,90,91,92,93,94,95,96,97]. University students’ acceptance of ChatGPT may be influenced by experience, habit, and behavioral intention, with facilitating conditions and user behavior also playing significant roles in technology adoption [98]. External factors such as stress and anxiety can reduce ChatGPT acceptance by diminishing motivation and perceptions of its utility and use [99,100,101]. However, in some cases, stress-induced anxiety drives students to adopt this technology to meet deadlines, revealing a complex relationship between these psychological variables [101].

Research indicates that university students are increasingly using AI tools such as ChatGPT for academic assistance and perceive positive effects on their performance, particularly in understanding complex concepts, accessing relevant study materials [102], and completing tasks to a higher standard [60,97]. They particularly value that ChatGPT helps them increase self-efficacy while reducing mental effort, although students’ perceptions and interpretations may not always align with reality [97]. The benefits of using ChatGPT include facilitating adaptive learning, providing personalized feedback, supporting research, writing, and data analysis, and aiding in the development of innovative assessments [103].

Studies of moderating and mediating effects present a more complex picture. Regarding the intention to use ChatGPT, higher levels of personal innovativeness may lead to stronger perceptions of usefulness, ease of use, information quality, and reliability, whereas lower levels make perceived risk more salient [84]. Users’ acceptance of ChatGPT is also shaped by information quality, system quality, perceived learning value, and perceived satisfaction, which act as mediators [103,104]. Studies drawing on diffusion of innovation theory have found that compatibility, observability, and trialability influence students’ adoption of ChatGPT in higher education; these factors lead students to perceive the tool as innovative, compatible, user-friendly, and useful [105]. Additionally, perceived ease of use may not predict learners’ attitudes directly, but only through perceived usefulness, which acts as a full mediator. Students with positive attitudes toward the usefulness of ChatGPT show higher behavioral intention, which in turn strongly and positively predicts their actual use [75].

Regarding factors that could negatively affect the acceptance of ChatGPT among university students, research has found that only a minority perceive the tool as unsuitable for educational tasks. This group is concerned about its impact on, or poor performance in, research, data analysis skills, and creative writing [59,93,106]. However, academic misconduct and plagiarism are what worry students most, and their willingness to use the tool is higher when they perceive a low risk of its use being detected [102,106,107].

The studies mentioned above did not focus on the role of training in shaping students’ perceptions of ChatGPT within the technology acceptance model. Our main objective is therefore to fill this gap in the emerging literature on the educational use of ChatGPT. To this end, we adapted Yilmaz’s questionnaire [72], which is based on an extended TAM, to the Spanish context and added specific questions about prior training for undergraduate students in the faculties of social sciences and law.

We conducted a comparative analysis of attitudes and perceptions regarding the utility, credibility, social influence, privacy and security, ease of use, and intention to use ChatGPT based on the type of training received for classroom use. The main hypothesis of our study is that prior training will have a decisive influence on students’ attitudes towards ChatGPT, breaking down prejudices, fears, and taboos regarding its academic and personal use.

3. Materials and Methods

3.1. Design and Procedures

The study employed a cross-sectional, ex post facto design with a convenience sample of 216 Spanish students (72.3% female, 25.8% male, and 1.9% who preferred not to disclose this information), aged between 17 and 60 years: 17–19 (70%), 20–22 (22%), and 23–60 (7.7%). This last group includes four sociology students in their third and fourth years, aged 33, 34, 48, and 60. In terms of disciplines, criminology (University of Salamanca, USAL) had the most students, followed by sociology (USAL) and social education (University of Valladolid, UVA). This information is summarized in Table 1.

Table 1.

Student characteristics.

A survey evaluating the usage and perception of ChatGPT among social science students was conducted. It combined a previously validated questionnaire with additional questions on sociodemographic characteristics, artificial intelligence, and technology use. Data were collected in October and November 2023 during class hours. Consequently, the sample may be biased toward students who attend classes regularly (and achieve higher grades) compared to those who do not. According to the survey results, 70% obtained “notable” (very good) grades, 17% “outstanding”, 8.3% a pass, and only two students (0.9%) failed the previous university year. The data collection method did not allow students to be disaggregated by degree program.

In full compliance with the Research Ethics Committee Regulations of the University of Salamanca and the University of Valladolid, participation was voluntary and required informed written consent. This research was also conducted in accordance with the regulations of the Research Ethics Committee of the Autonomous University of Madrid, whose protocol (Article 1.2) states that questionnaires fall outside the scope of its specific assessments. The data were anonymized and securely stored for evaluation purposes. According to the Research Ethics Committee Regulations of the University of Salamanca and the University of Valladolid, students’ academic work produced in didactic activities as part of the curriculum may be used for research with their explicit written consent. This study follows a non-interventional approach that ensures participant anonymity, in accordance with Spanish Organic Law 3/2018 of 5 December on Data Protection and Guarantee of Digital Rights.

3.2. Instrument

We used the questionnaire on attitudes toward ChatGPT, originally developed in English by Yilmaz [72]. It consists of 21 items distributed across seven dimensions: (1) perceived utility, (2) attitudes towards the use of ChatGPT, (3) perceived credibility, (4) perceived social influence, (5) perceived privacy and security, (6) perceived ease of use, and (7) behavioral intention to use ChatGPT. Table 2 below describes the seven dimensions of the instrument in detail.

Table 2.

Dimensions of the questionnaire on attitudes toward ChatGPT by Yilmaz [72].

For the Spanish adaptation, the questionnaire underwent forward and back translation, followed by a final review by two experts. Given the questionnaire’s simplicity, the Spanish version did not require substantial linguistic adjustments for comprehensibility. Confirmatory factor analysis (CFA) indicated a favorable model fit and validity, the questionnaire showed good reliability (α = 0.855), and the results showed an overall positive perception of ChatGPT among the participants. Each item was measured on a Likert scale with the characteristics given in Table 3.
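The reliability coefficient reported above is, by the usual convention for Likert instruments, Cronbach’s α. To illustrate what it measures, the following minimal Python sketch computes α = k/(k − 1) · (1 − Σσ²ᵢ / σ²ₜ), where k is the number of items, σ²ᵢ the variance of item i, and σ²ₜ the variance of respondents’ total scores. The function name and toy data are hypothetical; this is not the authors’ analysis code, and it does not reproduce the CFA.

```python
from statistics import pvariance


def cronbach_alpha(items):
    """Cronbach's alpha for a set of items.

    items: list of k lists, each holding one item's scores for the same
    respondents in the same order (hypothetical input layout).
    """
    k = len(items)
    if k < 2:
        raise ValueError("alpha requires at least two items")
    # Total score of each respondent across all items.
    totals = [sum(scores) for scores in zip(*items)]
    # Population variances are used throughout; the n vs. n - 1 choice
    # cancels out in the ratio below.
    item_variance_sum = sum(pvariance(item) for item in items)
    return k / (k - 1) * (1 - item_variance_sum / pvariance(totals))


# Toy example: two items answered by four respondents.
print(cronbach_alpha([[1, 2, 3, 4], [2, 1, 4, 3]]))
```

Perfectly redundant items give α = 1, and α falls as the items vary independently of one another.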

Table 3.

Operationalization of ChatGPT perception and usage measurement.

The survey was administered through the University of Salamanca’s educational platform, “Studium”, and the Virtual Campus of the University of Valladolid. Participants completed the questionnaire during class hours under the instructor’s supervision. Responses submitted outside class hours were excluded to ensure control over the sample. The independent variables used to explore differences in subjective perceptions of ChatGPT were gender, type of training received, academic performance (measured by the average grade in the previous academic year), and, as indicators of digital skills, programming proficiency and video game usage.

The data analysis proceeded in two phases. In the descriptive phase, mean scores on each dimension of the instrument were compared across student characteristics. For the inferential analyses, students were categorized according to their subjective evaluation of ChatGPT.

Subsequently, reticular analysis of coincidences [108] was used to uncover patterns of co-occurrence. This method identifies and visualizes, in an intuitive way, patterns of coincidences or associations among multiple variables. In the resulting network, nodes (depicted as dots) represent variables or response categories, and edges (lines connecting nodes) represent statistically significant coincidences between them: two nodes are connected only if their co-occurrence is significant. Significance is determined by Haberman residuals (p < 0.05), so the edges shown are not attributable to chance. The size of each node denotes the response percentage or importance of that category, with larger nodes indicating higher response rates, while the thickness of each line indicates the strength of the coincidence as measured by the Haberman residual (thicker lines indicate stronger relationships, thinner lines weaker ones).
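To make the edge criterion concrete, the following minimal Python sketch computes the adjusted (Haberman) residual for the co-occurrence of two binary indicators and keeps a pair as an edge only when the residual exceeds a critical value. It assumes that each node is coded as a 0/1 indicator per respondent and that the cutoff is the normal-approximation value 1.96; both are illustrative assumptions, the function names are hypothetical, and this is not the code of the Caring tool.

```python
import math
from itertools import combinations


def haberman_residual(a, b):
    """Adjusted residual for the joint presence of two 0/1 indicators."""
    n = len(a)
    f_a, f_b = sum(a), sum(b)
    # Number of respondents for whom both indicators are present.
    f_ab = sum(1 for x, y in zip(a, b) if x and y)
    expected = f_a * f_b / n
    variance = expected * (1 - f_a / n) * (1 - f_b / n)
    if variance == 0:
        # Degenerate case: an indicator that is always or never present.
        return 0.0
    return (f_ab - expected) / math.sqrt(variance)


def coincidence_edges(indicators, critical=1.96):
    """Return (node, node, residual) for pairs above the assumed cutoff."""
    edges = []
    for (u, xu), (v, xv) in combinations(indicators.items(), 2):
        r = haberman_residual(xu, xv)
        if r > critical:
            edges.append((u, v, round(r, 2)))
    return edges


# Hypothetical indicators for six respondents.
data = {
    "A": [1, 1, 1, 0, 0, 0],
    "B": [1, 1, 1, 0, 0, 0],
    "C": [1, 0, 1, 0, 1, 0],
}
print(coincidence_edges(data))
```

Only positive residuals are kept here because the networks display coincidences (co-presence) rather than avoidance; the residual value is what the line thickness would encode.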

We used the Caring tool (Project NetCoin, https://caring.usal.es/) to create the five networks presented in this study. This tool allowed a detailed visualization of the statistically significant relationships identified in our analysis.

4. Results

The sample, predominantly female as is common in social science classrooms (Table 1), did not show a particular inclination toward technological topics. Notably, 13% reported not playing video games and 31% had only basic knowledge of them, while a mere 20.9% reported advanced proficiency in video game use. Regarding programming, the majority reported no knowledge at all (60%), and only 1.4% claimed advanced knowledge.

These technology-related characteristics of the sample partially align with the results on prior experience with ChatGPT. Approximately 16.2% were unaware of its existence, 31.9% had an account but had never used it, and 51.9% had used it at some point. By main use, 22% of respondents used it as support for assignments, 16.4% for information retrieval, 8% out of curiosity, and 5% for writing support. Regarding prior training in ChatGPT, 25.5% reported self-directed learning, 47.7% had not received any training, and 26.9% had received some form of training from university professors, either through specific classroom activities or through sessions aimed at a deeper understanding of the tool’s advantages and proper use.

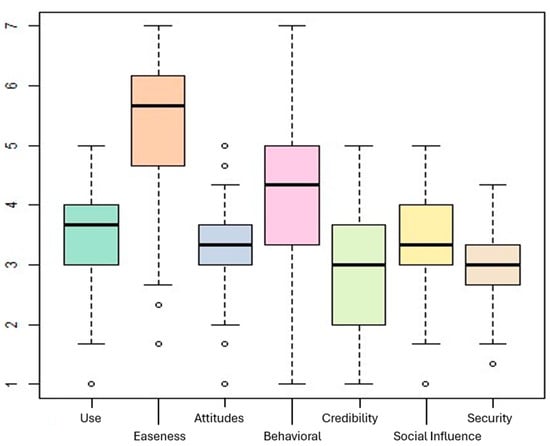

The dimensions of “perception and use” illustrated in Figure 1 indicate that students in social disciplines view ChatGPT as an easy-to-use tool (M = 5.4 on a scale from 1 to 7). The other dimensions show mean values around the “indifferent” category of the Likert scale used (see Table 4), with responses displaying minimal dispersion.

Figure 1.

Dimensions of perception and usage of ChatGPT.

Table 4.

Frequency table of the dimensions of the index.

When student characteristics were analyzed (Table 5), no significant differences were observed in ChatGPT perception based on gender, average grades, or prior familiarity with the tool. Two criteria, familiarity with video games and programming knowledge, were used to evaluate technological proficiency.

Table 5.

Mean values of perception and usage of ChatGPT according to student characteristics.

Students with average or advanced video game expertise tended to rate the tool more positively on dimensions such as perceived usage, utility, attitude, and intention to use. Those with advanced programming skills, in turn, gave higher scores on the social influence dimension, with a significantly higher mean than the other categories (M = 4.67, p < 0.01). Table 4 further illustrates that greater familiarity with ChatGPT corresponds to more favorable evaluations of the tool. Students with knowledge and prior usage of artificial intelligence tend to rate its use more positively (M = 3.68, p < 0.01), perceive greater utility (M = 5.72, p < 0.001), show a more positive attitude (M = 3.52, p < 0.001), and express a stronger intention to use it (M = 4.53, p < 0.001) than those who are unfamiliar with it or have not yet used it. Regarding the main use of ChatGPT, students using it for academic support or information retrieval gave higher ratings on all dimensions except social influence and security, compared with those who had not used it. Lastly, training appears to lower the credibility that students who received classroom training attribute to the tool, especially after intensive sessions. These students also report less social pressure to use the tool than those without training.

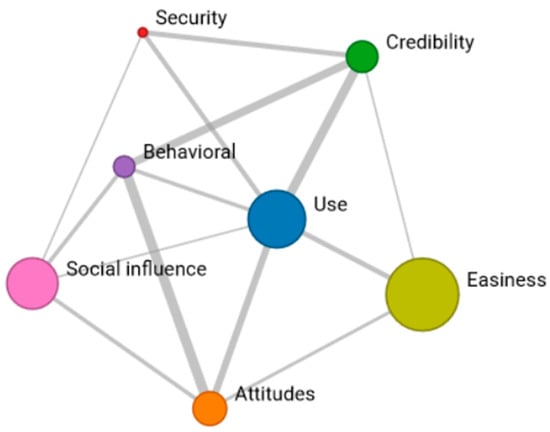

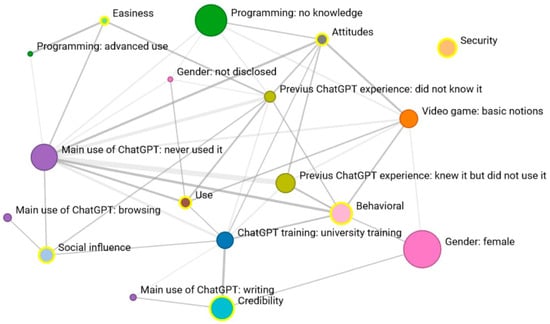

In the second part of the analysis, students’ extreme perceptions were isolated by first identifying the most positive evaluations of ChatGPT usage and then incorporating student characteristics into the analyses. The same procedure was applied to negative evaluations. The findings are depicted in reticular graphs for each dimension, showing only statistically significant associations (p < 0.05). As outlined above, node size indicates the response percentage, while line thickness reflects the strength of the coincidence as measured by the Haberman residual.
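As an illustration of how extreme evaluations can be turned into network nodes, the following minimal Python sketch averages each respondent’s items within a dimension and converts the score into binary “very positive” and “very negative” indicators, which could then enter a coincidence analysis alongside student characteristics. The cutoffs (≥ 6 and ≤ 2 on the 1 to 7 scale), the item identifiers, and the function names are hypothetical assumptions for illustration; the study’s actual categories are those defined in Table 3.

```python
from statistics import mean

# Hypothetical cutoffs on the 1-7 Likert scale (not taken from the study).
VERY_POSITIVE = 6.0
VERY_NEGATIVE = 2.0


def dimension_scores(item_responses, dimensions):
    """Average one respondent's item answers within each dimension.

    item_responses: dict mapping item id -> Likert answer (1-7).
    dimensions: dict mapping dimension name -> list of item ids.
    """
    return {
        dim: mean([item_responses[i] for i in items])
        for dim, items in dimensions.items()
    }


def extreme_flags(scores):
    """Convert dimension scores into 0/1 extreme-evaluation indicators."""
    flags = {}
    for dim, score in scores.items():
        flags[f"{dim}: very positive"] = int(score >= VERY_POSITIVE)
        flags[f"{dim}: very negative"] = int(score <= VERY_NEGATIVE)
    return flags


# One hypothetical respondent with three items per dimension.
dims = {"ease of use": ["e1", "e2", "e3"], "credibility": ["c1", "c2", "c3"]}
answers = {"e1": 7, "e2": 6, "e3": 6, "c1": 2, "c2": 1, "c3": 3}
print(extreme_flags(dimension_scores(answers, dims)))
```

Stacking these indicators across respondents yields one 0/1 column per node, which is the input format a coincidence analysis works with.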

Network 1 reveals positive connections among the seven dimensions of Yilmaz’s scale. In particular, “ease of use” stands out as a highly valued aspect of ChatGPT, strongly linked to high “credibility” and an overwhelmingly positive “attitude” towards the tool. The dimension most closely associated with the others is “perception of its usage”. Although “perceived security” is less strongly connected to the other dimensions, it still receives favorable ratings (Figure 2).

Figure 2.

Network 1: Reticular coincidences in the “very positive” response of the seven dimensions.

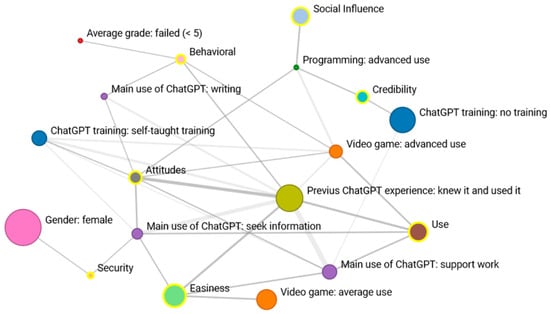

Network 2 shows that previous experience with ChatGPT is a crucial factor, strongly linked to four dimensions: “intention to use”, “perceived usefulness”, “attitude”, and “ease of use”. Students who have used it for academic support view ChatGPT as easy to use and beneficial. Meanwhile, those using it as a search tool exhibit a highly positive attitude toward its use, perceiving it as both user-friendly and secure. In the “security” dimension, female students coincide with very positive ratings of the tool’s security. Students using ChatGPT for writing support not only maintain a positive attitude towards the tool but also express a clear intention to continue using it in the future.

Regarding students’ technological proficiency (assessed through their familiarity with video games and programming), advanced video game skills are linked to programming proficiency. Students with advanced programming abilities also report social influence and attribute high “credibility” to the tool. Moreover, they coincide with students with advanced video game knowledge in showing a stronger “intention to use” and a more positive evaluation of its “usefulness” and of their “attitude” toward ChatGPT. Students with average video game use display positive associations only with the “easiness” dimension (Figure 3).

Figure 3.

Network 2: Reticular coincidences in the “Very positive” response of the 7 dimensions according to student characteristics.

Regarding training in ChatGPT, there is a significant association between the lack of training and high credibility, and between self-directed training and an extremely positive attitude toward the tool; these two nodes are in turn connected to each other. Lastly, two students who had failed the previous course showed a strong “intention to use” the tool, the only notable coincidence involving academic grades.

Having examined the coincidences among positive Likert scale responses, we conducted a similar analysis grouping all negative responses (Network 3). Here, a negative disposition toward the potential use of ChatGPT is associated with low “credibility” and an overall negative attitude toward its “use”. Students who do not find the tool easy to use coincide only with those who have a negative perception of its use, with no overlap with the other dimensions. The most critical ratings concern “credibility” and “intention to use” (as indicated by node size), while “easiness” receives fewer negative responses (Figure 4).

Figure 4.

Network 3: Reticular coincidences in the “very negative” response of the 7 dimensions.

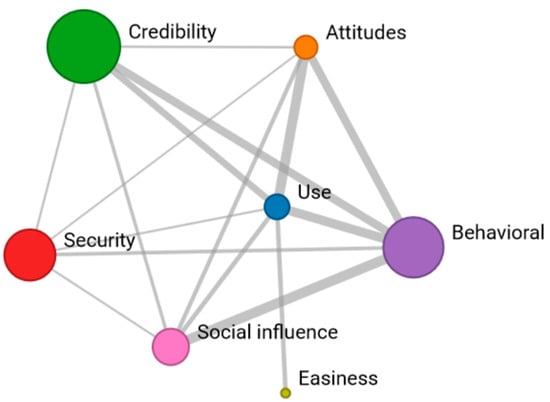

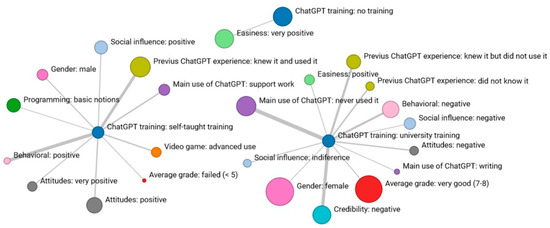

Analyzing the relevant characteristics of students alongside the negative evaluation of dimensions (Network 4), the initial observation is the lack of any significant coincidence related to the “security” dimension, making it the only one isolated in the network among all analyzed dimensions. As indicated in Table 4, there do not appear to be discernible patterns explaining variations in the perception of security among students who, overall, seem rather indifferent in this regard.

It is intriguing to observe a kind of mirror effect between the student characteristics that coincide with negative ratings of the “perception” and “use” of ChatGPT and those that coincide with the more positive ratings. The category with the most coincidences is that of students who have never used the tool: they perceive it as difficult, show a negative attitude towards its use, have no intention of using it in the future, and report no social influence encouraging its use. Among these students, those who were previously unaware of the tool stand out, and most of them rate the majority of dimensions negatively (attitude, usefulness, ease, intention, and influence). Those who were aware of the tool but had not yet used it also display negative attitudes and intentions of use. Students who have used ChatGPT for writing give it low credibility, while those who have never used it report not being socially influenced to use it (Figure 5).

Figure 5.

Network 4: Reticular coincidences in the “very negative” response of the 7 dimensions according to student characteristics.

In terms of technological competencies, students with basic knowledge of video games showed negative attitudes and intentions of use, as well as a poor perception of the tool’s utility. Students with advanced programming knowledge, however, coincided with those who did not find ChatGPT easy to use. Among women, those who rated the tool’s security positively coincided with those who assessed its credibility and intention of use negatively. Significant coincidences were also observed between students who did not declare their gender and a negative perception of utility. The training received at the university seems to generate rejection of ChatGPT, with significant coincidences in the dimensions of “credibility”, “intention of use”, “attitude”, “utility”, and “influence”. Grades do not appear to be associated with negative evaluations of the perception and use of ChatGPT.

Finally, we analyzed the training received during the current academic year (Network 5). Students who learned to use ChatGPT on their own show coincidences in terms of behavior, social influence, and attitudes. These students are in turn characterized by coincidences with use for school tasks, male gender, advanced technological competencies, and, in terms of grades, not having passed the last evaluation (Figure 6).

Figure 6.

Network 5: Significant coincidences related to the type of training in ChatGPT.

Training in ChatGPT received at the university does not appear to be significantly associated with its use. Many of the students who underwent training hold a negative perception of ChatGPT yet intend to use it, and this group coincides notably with women and high-performing students. Students who have not received any training in ChatGPT rate only “ease of use” positively.

5. Discussion

The survey results indicate a somewhat indifferent attitude towards ChatGPT among the surveyed social sciences students, in contrast with the strong interest observed among teachers and academics. This finding diverges somewhat from other studies in which students displayed more favorable perceptions of the tool [72,75,89,96,105,109], but it aligns with research reporting neutral or less favorable student opinions of the tool’s usefulness, both within and outside the technology acceptance model (TAM) framework [79,93,110,111].

The fact that the students come from social science backgrounds, where technology is notably less present, may have negatively affected their views and acceptance of this artificial intelligence tool. Unlike the pure or natural sciences, which often provide straightforward “correct” or “incorrect” answers, the social sciences involve a higher degree of subjectivity and place greater emphasis on critical analysis. This complexity could make students more skeptical or critical of how artificial intelligence tools, which may seem rigid or overly simplistic, fit into their nuanced academic work.

Regarding perceived usefulness, previous studies found that this dimension influences attitudes and intentions to use technology [11,14,16,75,77,101,112,113,114]. This study supports the hypothesized relationship: familiarity with video games and programming skills showed a significant association with a positive evaluation of ChatGPT. Participants with greater knowledge of and experience in these areas tended to appreciate the capabilities and potential of the AI tool more than those with limited exposure, suggesting that technical proficiency can influence perceptions and acceptance of new technologies.

According to Liu et al. [24], credibility is a critical factor influencing people’s trust in technology, a finding supported by the responses obtained in this study. Yilmaz et al. [72] emphasize the dimension of perceived social impact, stating that acceptance and technology adoption behaviors are socially influenced. In our study, however, this dimension does not appear to be a significant variable: most students rated it as “indifferent”, indicating a general lack of strong feelings or decisive opinions. This neutrality suggests either that other factors play a more critical role in shaping their perspectives or that social influence does not resonate strongly with their personal or academic experiences.

Research on the role of learning and prior experience in technology acceptance has shown that users with more experience of AI or chatbots may perceive ChatGPT as more useful and easier to use [45,101]. In this study, students who are well-versed in video games and programming appear to have developed a stronger sense of familiarity, comfort, and confidence when interacting with AI-based systems, which seems to translate into more positive attitudes and intentions to use ChatGPT. Yilmaz et al. [72] also found that students have favorable perceptions of ChatGPT in their educational experiences. In our study, however, confidence in the tool was lower among students who had received training, particularly when the training involved several different exercises in an intensive format.

Regarding gender, the meta-analysis by Cai et al. suggests that men have a more favorable attitude towards technology [115]. Similarly, Yilmaz et al. [72] found gender differences in the perceived ease of use of ChatGPT, with men finding it easier to use. The study by Raman et al. [106] shows that men favored compatibility, ease of use, and observability, while women highlighted ease of use, compatibility, relative advantage, and trialability. The authors suggest that men and women may have different expectations and experiences with technology: men focus more on functional aspects, while women are more attuned to operational and ethical implications, which could influence how ChatGPT is adopted and used in different learning environments. Their analysis of the role of gender revealed that men found ChatGPT easy to explore and comprehend for daily use, whereas women found that ChatGPT offered a greater advantage than other tools for day-to-day use and was easy to understand, explore, and use. Like men, however, women did not find ChatGPT easy to learn simply through observation. Overall, both genders found ChatGPT easy to use and understand, and the ability to explore the tool may be a key attribute influencing usage and adoption intentions. The study by Bouzar et al. [116] found no significant gender difference in ChatGPT acceptance, but it did find variations in usage and concerns: men reported longer usage times, while women reported higher usage frequency and greater apprehension about over-reliance on ChatGPT. Both genders found ChatGPT useful for educational purposes.

The perception of ChatGPT as “male”, identified in Wong’s study [117], may contribute to the observed gender differences in the acceptance of this technology. In our study, no gender differences were observed in the perception or use of ChatGPT, which is consistent with other TAM-based studies [116] and with research examining students’ perceptions from different perspectives [118]. This result also aligns with the literature review by Goswami and Dutta [119], who found that gender differences in technology use and acceptance emerge not universally but in specific contexts. However, our sample is predominantly female, which could have affected our results.

Research on gender differences in the acceptance of technology, both in general and in artificial intelligence, has yielded complex and sometimes contradictory results. On the one hand, it has been suggested that men might exhibit higher levels of comfort and more positive attitudes, potentially due to greater exposure to traditionally male-dominated fields such as engineering and computer science; this exposure could lead to greater familiarity and thus higher acceptance. On the other hand, a growing body of research indicates that there are no significant gender differences. This could be attributed to the increasing democratization of access to technology and education, which is narrowing the gap between genders in tech-related fields. Additionally, societal shifts towards greater gender equality in educational and professional opportunities may lead to similar levels of exposure and competency in technology, which could equalize perceptions and acceptance of AI across genders. Further research applying technology acceptance models to AI and ChatGPT, with specific attention to gender differences, is therefore needed to answer this question.

The contrasting views and mixed results reviewed in the literature suggest that university students’ attitudes towards ChatGPT and AI tools are complex and can vary significantly, which calls for further research into these different perspectives. While students find ChatGPT innovative, user-friendly, and compatible with their educational goals, ethical concerns regarding creativity, plagiarism, and academic integrity hinder its widespread acceptance. These barriers highlight the complex interplay between technological advancement, ethical considerations, and educational practice in the adoption of AI tools like ChatGPT among university students.

6. Conclusions

The main conclusions that emerge from this study can be summarized in the following key points:

- Students in social sciences exhibit notable indifference to ChatGPT, in contrast with the keen interest of the academic community. This may stem from the lower technological presence in social science disciplines and from the timing of the survey (October and November 2023), when students may have been less familiar with the tool.

- Perceived utility emerged as a key factor influencing attitudes and intentions toward technology use, supporting previous findings. Students with knowledge of video games and programming displayed significantly more positive attitudes towards ChatGPT.

- Credibility was confirmed as an influential factor in users’ confidence in this technology. The dimension of perceived social impact seemed less relevant for social sciences students, suggesting that the dynamics of technology acceptance and adoption vary across educational contexts.

- No significant gender disparities were observed in the perception or use of ChatGPT among social sciences students, supporting the idea that gender may not be a determining factor in all contexts, as suggested by previous studies.

- These conclusions provide valuable insights for designing training strategies that can enhance the acceptance of ChatGPT in social science education contexts, and above all, encourage responsible and appropriate use of this tool by emphasizing its strengths and weaknesses.

6.1. The Limitations of the Study

The main limitations of this study relate to the sample. The participating students come from the social sciences and may not be representative of the broader student population or of other academic disciplines, which limits the generalizability of the findings. Conducting the survey in October and November 2023 may have influenced the results because of students’ varying levels of exposure to and familiarity with AI tools at that time; as a relatively new tool, ChatGPT might not yet have fully permeated the student body, which could explain the indifference or unfamiliarity we found. Additionally, the lower technological presence in social science disciplines might have skewed the results, as students in these fields may interact less with ChatGPT than their peers in more technology-oriented disciplines. Furthermore, the study relies on self-reported data, which can be subject to biases such as social desirability or inaccurate self-assessment. Lastly, the predominance of women among participants raises questions about how gender might have affected the results, given the literature discussed above. Although it is not clear whether gender differences matter for technology acceptance and use, this factor cannot be excluded from consideration.

Future studies should consider a longitudinal approach to assess changes in attitudes and perceptions over time as students become more familiar with ChatGPT. Additionally, expanding the study to include students from diverse disciplines and geographic locations would provide a more comprehensive understanding of the factors influencing ChatGPT acceptance.

6.2. Implications of the Study

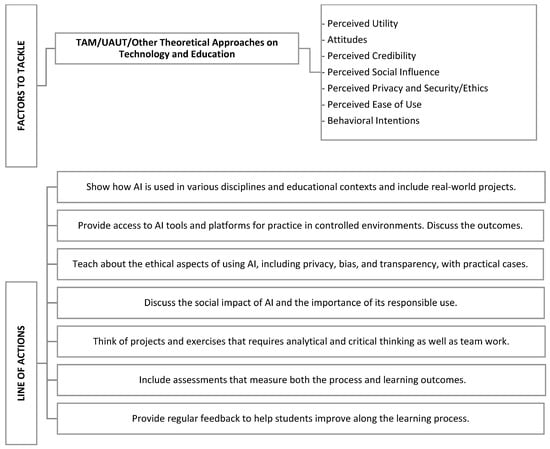

The findings presented in this work indicate a need for tailored educational strategies that highlight both the strengths and limitations of technology to ensure informed and critical use. Higher education institutions have the responsibility to lead these initiatives. Moreover, higher education teachers must be aware of how students are using tools like ChatGPT and similar AI technologies. Failing to do so could result in falling behind in technological advancements and misunderstanding students’ use of AI, which could impact evaluations and grading. To address the challenges posed by AI, it is essential to consider various strategies and initiatives. These should align with the factors influencing technology acceptance and use, as outlined in the following figure (Figure 7):

Figure 7.

Lines of action for effective programs.

The aim is not to advocate for or against ChatGPT, but to understand how students are reacting to the widespread adoption of this AI and what factors explain their attitudes. For instance, we found that perceived utility is a key factor leading students to use ChatGPT; by highlighting the utility of other academic alternatives or of tools that complement ChatGPT, we can offer students more choices. Credibility was another important factor, so it is essential to help students develop realistic expectations about AI. Again, the point is not to demonize or romanticize what ChatGPT can or cannot do, but to teach students the importance of verifying information and thinking analytically, regardless of the tool, resource, or instrument they use. To this end, teachers should also introduce ChatGPT into the classroom and critically discuss both its correct and its incorrect responses with their students.

Given the positive association we found between knowledge of video games and programming and favorable attitudes toward ChatGPT, introductory courses on these topics could improve students’ overall competency in using AI tools. ChatGPT has the potential to enhance accessibility by providing personalized learning experiences and supplementary support to students with or without special needs. However, potential hindrances must be addressed, such as dependence on technology and unequal access due to economic resources, i.e., a possible gap between students who can afford paid versions of AI tools and those who cannot. In short, the careful integration of ChatGPT into educational settings needs to address many factors to ensure that AI complements human interaction rather than replacing it or creating inequality. In this way, educators can leverage its benefits while mitigating its drawbacks, promoting an inclusive and fair learning environment for all students. For this reason, these issues need further exploration, including teachers’ perceptions and behaviors, which this study did not cover.

Author Contributions

Conceptualization, E.M.G.-A.; methodology, E.M.G.-A., A.C.L.-M. and R.G.-O.; validation, E.M.G.-A. and A.C.L.-M.; formal analysis, E.M.G.-A., A.C.L.-M. and R.S.-C.; investigation, E.M.G.-A.; resources, E.M.G.-A.; writing—original draft preparation, E.M.G.-A., A.C.L.-M., R.S.-C. and R.G.-O.; writing—review and editing, E.M.G.-A., A.C.L.-M., R.S.-C. and R.G.-O.; supervision, A.C.L.-M. and R.S.-C. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded under the following projects: (1) “Opinion Dynamics, Collective Action and Abrupt Social Change. The Role of Preference Falsification and Social Networks” (PID2019-107589GB-I00); (2) “Analytical networks for dissemination and applied research” (PDC2022-133355-I00).

Institutional Review Board Statement

In accordance with the VII Plan Propio de Investigación y Transferencia 2022–2025 (VII Plan of Stimulation and Support for Research and Transfer 2022–2025), the University of Salamanca is dedicated to fostering and advancing the dissemination of knowledge among its student body. This commitment, derived from the specific tenets of the Plan, actively encourages the involvement of student teachers in research. According to the Research Ethics Committee Regulations of the University of Salamanca and the University of Valladolid, students’ academic work produced in didactic activities as part of the curriculum may be used for research with their explicit written consent. This research was also conducted in accordance with the regulations of the Research Ethics Committee of the Autonomous University of Madrid, whose protocol (Article 1.2) states that questionnaires fall outside its scope of application with respect to specific assessments. This study follows a non-interventional approach that ensures participant anonymity, in accordance with Spanish Organic Law 3/2018, of 5 December, on Data Protection and Guarantee of Digital Rights. Consequently, this research falls outside the remit of the research ethics committees of the University of Salamanca, the University of Valladolid, and the Autonomous University of Madrid.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Martínez-Plumed, F.; Gómez, E.; Hernández-Orallo, J. Futures of Artificial Intelligence through Technology Readiness Levels. Telemat. Inform. 2021, 58, 101525. [Google Scholar] [CrossRef]

- Ooi, K.-B.; Tan, G.W.-H.; Al-Emran, M.; Al-Sharafi, M.A.; Capatina, A.; Chakraborty, A.; Dwivedi, Y.K.; Huang, T.-L.; Kar, A.K.; Lee, V.-H. The Potential of Generative Artificial Intelligence across Disciplines: Perspectives and Future Directions. J. Comput. Inf. Syst. 2023, 1–32. [Google Scholar] [CrossRef]

- Vannuccini, S.; Prytkova, E. Artificial Intelligence’s New Clothes? A System Technology Perspective. J. Inf. Technol. 2024, 39, 317–338. [Google Scholar] [CrossRef]

- Helm, J.M.; Swiergosz, A.M.; Haeberle, H.S.; Karnuta, J.M.; Schaffer, J.L.; Krebs, V.E.; Spitzer, A.I.; Ramkumar, P.N. Machine Learning and Artificial Intelligence: Definitions, Applications, and Future Directions. Curr. Rev. Musculoskelet. Med. 2020, 13, 69–76. [Google Scholar] [CrossRef] [PubMed]

- Gilson, A.; Safranek, C.W.; Huang, T.; Socrates, V.; Chi, L.; Taylor, R.A.; Chartash, D. How Does Chat GPT Perform on the United States Medical Licensing Examination? The Implications of Large Language Models for Medical Education and Knowledge Assessment. JMIR Med. Educ. 2023, 9, e45312. [Google Scholar] [CrossRef] [PubMed]

- Cheng, K.; He, Y.; Li, C.; Xie, R.; Lu, Y.; Gu, S.; Wu, H. Talk with Chat GPT about the Outbreak of Mpox in 2022: Reflections and Suggestions from AI Dimensions. Ann. Biomed. Eng. 2023, 51, 870–874. [Google Scholar] [CrossRef] [PubMed]

- Fuchs, K. Exploring the Opportunities and Challenges of NLP Models in Higher Education: Is Chat GPT a Blessing or a Curse? Front. Educ. 2023, 8, 1166682. [Google Scholar] [CrossRef]

- Kirmani, A.R. Artificial Intelligence-Enabled Science Poetry. ACS Energy Lett. 2022, 8, 574–576. [Google Scholar] [CrossRef]

- OpenAI. Available online: https://openai.com (accessed on 1 January 2024).

- Davis, F.D. User Acceptance of Information Systems: The Technology Acceptance Model (TAM); University of Michigan: Ann Arbor, MI, USA, 1987. [Google Scholar]

- Davis, F.D. Perceived Usefulness, Perceived Ease of Use, and User Acceptance of Information Technology. MIS Q. 1989, 13, 319–340. [Google Scholar] [CrossRef]

- Zin, K.S.L.T.; Kim, S.; Kim, H.S.; Feyissa, I.F. A Study on Technology Acceptance of Digital Healthcare among Older Korean Adults Using Extended Tam (Extended Technology Acceptance Model). Adm. Sci. 2023, 13, 42. [Google Scholar] [CrossRef]

- Marangunić, N.; Granić, A. Technology Acceptance Model: A Literature Review from 1986 to 2013. Univers. Access Inf. Soc. 2015, 14, 81–95. [Google Scholar] [CrossRef]

- Davis, F.D.; Venkatesh, V. A Critical Assessment of Potential Measurement Biases in the Technology Acceptance Model: Three Experiments. Int. J. Hum. Comput. Stud. 1996, 45, 19–45. [Google Scholar] [CrossRef]

- Venkatesh, V.; Morris, M.G.; Davis, G.B.; Davis, F.D. User Acceptance of Information Technology: Toward a Unified View. MIS Q. 2003, 27, 425–478. [Google Scholar] [CrossRef]

- Venkatesh, V.; Davis, F.D. A Model of the Antecedents of Perceived Ease of Use: Development and Test. Decis. Sci. 1996, 27, 451–481. [Google Scholar] [CrossRef]

- Shidiq, M. The Use of Artificial Intelligence-Based Chat-Gpt and Its Challenges for the World of Education; from the Viewpoint of the Development of Creative Writing Skills. In Proceedings of the International Conference on Education, Society and Humanity, Amsterdam, The Netherlands, 20–23 July 2023; Volume 1, pp. 353–357. [Google Scholar]

- Mahapatra, S. Impact of ChatGPT on ESL Students’ Academic Writing Skills: A Mixed Methods Intervention Study. Smart Learn. Environ. 2024, 11, 9. [Google Scholar] [CrossRef]

- Parker, L.; Carter, C.; Karakas, A.; Loper, A.J.; Sokkar, A. Graduate Instructors Navigating the AI Frontier: The Role of ChatGPT in Higher Education. Comput. Educ. Open 2024, 6, 100166. [Google Scholar] [CrossRef]

- Rejeb, A.; Rejeb, K.; Appolloni, A.; Treiblmaier, H.; Iranmanesh, M. Exploring the Impact of ChatGPT on Education: A Web Mining and Machine Learning Approach. Int. J. Manag. Educ. 2024, 22, 100932. [Google Scholar] [CrossRef]

- Tajik, E.; Tajik, F. A Comprehensive Examination of the Potential Application of Chat GPT in Higher Education Institutions. TechRxiv 2023. preprint. [Google Scholar] [CrossRef]

- Rahma, A.; Fithriani, R. The potential impact of using chat GPT on EFL Students’writing: EFL teachers’ perspective. Indonesian EFL Journal. 2024, 10, 11–20. [Google Scholar]

- Lelepary, H.L.; Rachmawati, R.; Zani, B.N.; Maharjan, K. GPT Chat: Opportunities and Challenges in the Learning Process of Arabic Language in Higher Education. JILTECH J. Int. Ling. Technol. 2023, 2, 10–22. [Google Scholar]

- Liu, X.; Zheng, Y.; Du, Z.; Ding, M.; Qian, Y.; Yang, Z.; Tang, J. Gpt Understands, Too. arXiv 2021, arXiv:2103.10385. [Google Scholar] [CrossRef]

- Su, Y.; Lin, Y.; Lai, C. Collaborating with ChatGPT in Argumentative Writing Classrooms. Assess. Writ. 2023, 57, 100752. [Google Scholar] [CrossRef]

- Bishop, L. A Computer Wrote This Paper: What Chatgpt Means for Education, Research, and Writing. SSRN 2023. [Google Scholar] [CrossRef]

- Cotton, D.R.E.; Cotton, P.A.; Shipway, J.R. Chatting and Cheating: Ensuring Academic Integrity in the Era of ChatGPT. Innov. Educ. Teach. Int. 2023, 61, 228–239. [Google Scholar] [CrossRef]

- Biswas, S. Role of Chat GPT in Education. J ENT Surg. Res. 2023, 1, 1–3. [Google Scholar]

- Lee, H.-Y.; Chen, P.-H.; Wang, W.-S.; Huang, Y.-M.; Wu, T.-T. Empowering ChatGPT with Guidance Mechanism in Blended Learning: Effect of Self-Regulated Learning, Higher-Order Thinking Skills, and Knowledge Construction. Int. J. Educ. Technol. High. Educ. 2024, 21, 16. [Google Scholar] [CrossRef]

- Elbanna, S.; Armstrong, L. Exploring the Integration of ChatGPT in Education: Adapting for the Future. Manag. Sustain. Arab. Rev. 2024, 3, 16–29. [Google Scholar] [CrossRef]

- Dalgıç, A.; Yaşar, E.; Demir, M. ChatGPT and Learning Outcomes in Tourism Education: The Role of Digital Literacy and Individualized Learning. J. Hosp. Leis. Sport. Tour. Educ. 2024, 34, 100481. [Google Scholar] [CrossRef]

- Wu, T.-T.; Lee, H.-Y.; Li, P.-H.; Huang, C.-N.; Huang, Y.-M. Promoting Self-Regulation Progress and Knowledge Construction in Blended Learning via ChatGPT-Based Learning Aid. J. Educ. Comput. Res. 2024, 61, 3–31. [Google Scholar] [CrossRef]

- Sallam, M. ChatGPT Utility in Healthcare Education, Research, and Practice: Systematic Review on the Promising Perspectives and Valid Concerns. Healthcare 2023, 11, 887. [Google Scholar] [CrossRef]

- Wu, C.; Chen, L.; Han, M.; Li, Z.; Yang, N.; Yu, C. Application of ChatGPT-Based Blended Medical Teaching in Clinical Education of Hepatobiliary Surgery. Med. Teach. 2024; online ahead of print. [Google Scholar]

- Topaz, M.; Peltonen, L.-M.; Michalowski, M.; Stiglic, G.; Ronquillo, C.; Pruinelli, L.; Song, J.; O’Connor, S.; Miyagawa, S.; Fukahori, H. The ChatGPT Effect: Nursing Education and Generative Artificial Intelligence. J. Nurs. Educ. 2024, 1–4. [Google Scholar] [CrossRef] [PubMed]

- Ali, K.; Barhom, N.; Tamimi, F.; Duggal, M. ChatGPT—A Double-edged Sword for Healthcare Education? Implications for Assessments of Dental Students. Eur. J. Dent. Educ. 2024, 28, 206–211. [Google Scholar] [CrossRef]

- Boscardin, C.K.; Gin, B.; Golde, P.B.; Hauer, K.E. ChatGPT and Generative Artificial Intelligence for Medical Education: Potential Impact and Opportunity. Acad. Med. 2024, 99, 22–27. [Google Scholar] [CrossRef]

- Sahu, P.K.; Benjamin, L.A.; Singh Aswal, G.; Williams-Persad, A. ChatGPT in Research and Health Professions Education: Challenges, Opportunities, and Future Directions. Postgrad. Med. J. 2024, 100, 50–55. [Google Scholar] [CrossRef] [PubMed]

- Hwang, J.Y. Potential Effects of ChatGPT as a Learning Tool through Students’ Experiences. Med. Teach. 2024, 46, 291. [Google Scholar] [CrossRef]

- Gosak, L.; Pruinelli, L.; Topaz, M.; Štiglic, G. The ChatGPT Effect and Transforming Nursing Education with Generative AI: Discussion Paper. Nurse Educ. Pract. 2024, 75, 103888. [Google Scholar] [CrossRef]

- AlAli, R.; Wardat, Y. How ChatGPT Will Shape the Teaching Learning Landscape in Future. J. Educ. Soc. Res. 2024, 14, 336–345. [Google Scholar] [CrossRef]

- Oguz, F.E.; Ekersular, M.N.; Sunnetci, K.M.; Alkan, A. Can Chat GPT Be Utilized in Scientific and Undergraduate Studies? Ann. Biomed. Eng. 2023, 52, 1128–1130. [Google Scholar] [CrossRef]

- Mhlanga, D. The Value of Open AI and Chat GPT for the Current Learning Environments and the Potential Future Uses. SSRN Electron. J. 2023. [Google Scholar] [CrossRef]

- Lewis, A. Multimodal Large Language Models for Inclusive Collaboration Learning Tasks. In Proceedings of the 2022 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies: Student Research Workshop, Washington, DC, USA, 10–15 July 2022; pp. 202–210. [Google Scholar]

- Kanwal, A.; Hassan, S.K.; Iqbal, I. An Investigation into How University-Level Teachers Perceive Chat-GPT Impact Upon Student Learning. Gomal Univ. J. Res. 2023, 39, 250–265. [Google Scholar] [CrossRef]

- Jing, Y.; Wang, H.; Chen, X.; Wang, C. What Factors Will Affect the Effectiveness of Using ChatGPT to Solve Programming Problems? A Quasi-Experimental Study. Humanit. Soc. Sci. Commun. 2024, 11, 319. [Google Scholar] [CrossRef]

- Xu, X.; Wang, X.; Zhang, Y.; Zheng, R. Applying ChatGPT to Tackle the Side Effects of Personal Learning Environments from Learner and Learning Perspective: An Interview of Experts in Higher Education. PLoS ONE 2024, 19, e0295646. [Google Scholar] [CrossRef] [PubMed]

- Alneyadi, S.; Wardat, Y. Integrating ChatGPT in Grade 12 Quantum Theory Education: An Exploratory Study at Emirate School (UAE). Int. J. Inf. Educ. Technol. 2024, 14, 389. [Google Scholar]

- Chang, C.-Y.; Yang, C.-L.; Jen, H.-J.; Ogata, H.; Hwang, G.-H. Facilitating Nursing and Health Education by Incorporating ChatGPT into Learning Designs. Educ. Technol. Soc. 2024, 27, 215–230. [Google Scholar]

- Firat, M. How Chat GPT Can Transform Autodidactic Experiences and Open Education. OSF Prepr. 2023; preprint. [Google Scholar] [CrossRef]

- Montenegro-Rueda, M.; Fernández-Cerero, J.; Fernández-Batanero, J.M.; López-Meneses, E. Impact of the Implementation of ChatGPT in Education: A Systematic Review. Computers 2023, 12, 153. [Google Scholar] [CrossRef]

- Markel, J.M.; Opferman, S.G.; Landay, J.A.; Piech, C. GPTeach: Interactive TA Training with GPT Based Students. In Proceedings of the L@S ’23: Proceedings of the Tenth ACM Conference on Learning @ Scale, Copenhagen, Denmark, 20–22 July 2023. [Google Scholar]

- Wang, M. Chat GPT: A Case Study. PDGIA J. High. Educ. 2023. Available online: https://journal.pdgia.ca/index.php/education/article/view/10 (accessed on 4 July 2024).

- Lund, B.D.; Wang, T. Chatting about ChatGPT: How May AI and GPT Impact Academia and Libraries? Libr. Hi Tech. News 2023, 40, 26–29. [Google Scholar] [CrossRef]

- Polat, H.; Topuz, A.; Yıldız, M.; Taşlıbeyaz, E.; Kurşun, E. A Bibliometric Analysis of Research on ChatGPT in Education. Int. J. Technol. Educ. (IJTE) 2024, 7, 59–85. [Google Scholar] [CrossRef]

- Graefen, B.; Fazal, N. Chat Bots to Virtual Tutors: An Overview of Chat GPT’s Role in the Future of Education. Arch. Pharm. Pract. 2024, 15, 43–52. [Google Scholar] [CrossRef]

- Lo, C.K. What Is the Impact of ChatGPT on Education? A Rapid Review of the Literature. Educ. Sci. 2023, 13, 410. [Google Scholar] [CrossRef]

- Luo, W.; He, H.; Liu, J.; Berson, I.R.; Berson, M.J.; Zhou, Y.; Li, H. Aladdin’s Genie or Pandora’s Box for Early Childhood Education? Experts Chat on the Roles, Challenges, and Developments of ChatGPT. Early Educ. Dev. 2024, 35, 96–113. [Google Scholar] [CrossRef]

- Niloy, A.C.; Akter, S.; Sultana, N.; Sultana, J.; Rahman, S.I.U. Is Chatgpt a Menace for Creative Writing Ability? An Experiment. J. Comput. Assist. Learn. 2024, 40, 919–930. [Google Scholar] [CrossRef]

- Yu, H. Reflection on Whether Chat GPT Should Be Banned by Academia from the Perspective of Education and Teaching. Front. Psychol. 2023, 14, 1181712. [Google Scholar] [CrossRef] [PubMed]

- Sharma, S.; Yadav, R. Chat GPT–A Technological Remedy or Challenge for Education System. Glob. J. Enterp. Inf. Syst. 2022, 14, 46–51. [Google Scholar]

- Maboloc, C.R. Chat GPT: The Need for an Ethical Framework to Regulate Its Use in Education. J. Public Health 2023, 46, e125. [Google Scholar] [CrossRef] [PubMed]

- Wilkenfeld, J.N.; Yan, B.; Huang, J.; Luo, G.; Algas, K. “AI Love You”: Linguistic Convergence in Human-Chatbot Relationship Development. Acad. Manag. Proc. 2022, 2022, 17063. [Google Scholar] [CrossRef]

- Chaves, A.P.; Gerosa, M.A. The Impact of Chatbot Linguistic Register on User Perceptions: A Replication Study. In Proceedings of the International Workshop on Chatbot Research and Design, Virtual, 23–24 November 2021; Springer: Berlin/Heidelberg, Germany, 2021; pp. 143–159. [Google Scholar]

- Imran, M.; Almusharraf, N. Analyzing the Role of ChatGPT as a Writing Assistant at Higher Education Level: A Systematic Review of the Literature. Contemp. Educ. Technol. 2023, 15, ep464. [Google Scholar] [CrossRef] [PubMed]

- Huallpa, J.J. Exploring the Ethical Considerations of Using Chat GPT in University Education. Period. Eng. Nat. Sci. 2023, 11, 105–115. [Google Scholar]

- Stepanenko, O.; Stupak, O. The Use of GPT Chat among Students in Ukrainian Universities. Sci. J. Pol. Univ. 2023, 58, 202–207. [Google Scholar] [CrossRef]

- Mhlanga, D. Open AI in Education, the Responsible and Ethical Use of ChatGPT towards Lifelong Learning. In FinTech and Artificial Intelligence for Sustainable Development; Palgrave Macmillan: Cham, Switzerland, 2023. [Google Scholar]

- Baskara, F.X.R. The Promises and Pitfalls of Using Chat Gpt for Self-Determined Learning in Higher Education: An Argumentative Review. Pros. Semin. Nas. Fak. Tarb. Dan Ilmu Kegur. IAIM Sinjai 2023, 2, 95–101. [Google Scholar] [CrossRef]

- Borji, A. A Categorical Archive of Chatgpt Failures. arXiv 2023, arXiv:2302.03494. [Google Scholar]

- Mijwil, M.; Aljanabi, M.; Ali, A.H. Chat GPT: Exploring the Role of Cybersecurity in the Protection of Medical Information. Mesopotamian J. Cybersecur. 2023, 2023, 18–21. [Google Scholar] [CrossRef]

- Yilmaz, H.; Maxutov, S.; Baitekov, A.; Balta, N. Student Attitudes towards Chat GPT: A Technology Acceptance Model Survey. Int. Educ. Rev. 2023, 1, 57–83. [Google Scholar] [CrossRef]

- Alshurideh, M.; Jdaitawi, A.; Sukkari, L.; Al-Gasaymeh, A.; Alzoubi, H.; Damra, Y.; Yasin, S.; Kurdi, B.; Alshurideh, H. Factors Affecting ChatGPT Use in Education Employing TAM: A Jordanian Universities’ Perspective. Int. J. Data Netw. Sci. 2024, 8, 1599–1606. [Google Scholar] [CrossRef]

- Dahri, N.A.; Yahaya, N.; Al-Rahmi, W.M.; Aldraiweesh, A.; Alturki, U.; Almutairy, S.; Shutaleva, A.; Soomro, R.B. Extended TAM Based Acceptance of AI-Powered ChatGPT for Supporting Metacognitive Self-Regulated Learning in Education: A Mixed-Methods Study. Heliyon 2024, 10, e29317. [Google Scholar] [CrossRef] [PubMed]

- Liu, G.; Ma, C. Measuring EFL Learners’ Use of ChatGPT in Informal Digital Learning of English Based on the Technology Acceptance Model. Innov. Lang. Learn. Teach. 2024, 18, 125–138.

- Tan, P.J.B. A Study on the Use of Chatgpt in English Writing Classes for Taiwanese College Students Using Tam Technology Acceptance Model. SSRN 2024.

- Salloum, S.A.; Aljanada, R.A.; Alfaisal, A.M.; Al Saidat, M.R.; Alfaisal, R. Exploring the Acceptance of ChatGPT for Translation: An Extended TAM Model Approach. In Artificial Intelligence in Education: The Power and Dangers of ChatGPT in the Classroom; Springer: Berlin/Heidelberg, Germany, 2024; pp. 527–542.

- Dehghani, H.; Mashhadi, A. Exploring Iranian English as a Foreign Language Teachers’ Acceptance of ChatGPT in English Language Teaching: Extending the Technology Acceptance Model. Educ. Inf. Technol. 2024, 1–22.

- Vo, T.K.A.; Nguyen, H. Generative Artificial Intelligence and ChatGPT in Language Learning: EFL Students’ Perceptions of Technology Acceptance. J. Univ. Teach. Learn. Pract. 2024, 21.

- Castillo, A.G.R.; Rivera, H.V.H.; Teves, R.M.V.; Lopez, H.R.P.; Reyes, G.Y.; Rodriguez, M.A.M.; Berrios, H.Q.; Arocutipa, J.P.F.; Silva, G.J.S.; Arias-Gonzáles, J.L. Effect of Chat GPT on the Digitized Learning Process of University Students. J. Namib. Stud. Hist. Politics Cult. 2023, 33, 1–15.

- Raiche, A.-P.; Dauphinais, L.; Duval, M.; De Luca, G.; Rivest-Hénault, D.; Vaughan, T.; Proulx, C.; Guay, J.-P. Factors Influencing Acceptance and Trust of Chatbots in Juvenile Offenders’ Risk Assessment Training. Front. Psychol. 2023, 14, 1184016.

- Jowarder, M.I. The Influence of ChatGPT on Social Science Students: Insights Drawn from Undergraduate Students in the United States. Indones. J. Innov. Appl. Sci. (IJIAS) 2023, 3, 194–200.

- Romero Rodríguez, J.M.; Ramírez-Montoya, M.S.; Buenestado Fernández, M.; Lara Lara, F. Use of ChatGPT at University as a Tool for Complex Thinking: Students’ Perceived Usefulness. J. New Approaches Educ. Res. 2023, 12, 323–339.

- Kim, H.J.; Oh, S. Analysis of the Intention to Use ChatGPT in College Students’ Assignment Performance: Focusing on the Moderating Effects of Personal Innovativeness. Korean Soc. Cult. Converg. 2023, 45, 203–214.

- Acosta-Enriquez, B.G.; Arbulú Ballesteros, M.A.; Huamaní Jordan, O.; López Roca, C.; Saavedra Tirado, K. Analysis of College Students’ Attitudes toward the Use of ChatGPT in Their Academic Activities: Effect of Intent to Use, Verification of Information and Responsible Use. BMC Psychol. 2024, 12, 255.

- Van Wyk, M.M. Is ChatGPT an Opportunity or a Threat? Preventive Strategies Employed by Academics Related to a GenAI-Based LLM at a Faculty of Education. J. Appl. Learn. Teach. 2024, 7.

- Sallam, M.; Salim, N.; Barakat, M.; Al-Mahzoum, K.; Al-Tammemi, A.B.; Malaeb, D.; Hallit, R.; Hallit, S. Validation of a Technology Acceptance Model-Based Scale TAME-ChatGPT on Health Students Attitudes and Usage of ChatGPT in Jordan. JMIR Prepr. 2023; preprint.

- Xiao, P.; Chen, Y.; Bao, W. Waiting, Banning, and Embracing: An Empirical Analysis of Adapting Policies for Generative AI in Higher Education. arXiv 2023, arXiv:2305.18617.

- Raman, R.; Mandal, S.; Das, P.; Kaur, T.; Sanjanasri, J.P.; Nedungadi, P. University Students as Early Adopters of ChatGPT: Innovation Diffusion Study. Res. Sq. 2023; preprint.

- Strzelecki, A. To Use or Not to Use ChatGPT in Higher Education? A Study of Students’ Acceptance and Use of Technology. Interact. Learn. Environ. 2023, 1–14.

- Terblanche, N.; Molyn, J.; Williams, K.; Maritz, J. Performance Matters: Students’ Perceptions of Artificial Intelligence Coach Adoption Factors. Coach. Int. J. Theory Res. Pract. 2023, 16, 100–114.

- Imran, A.A.; Lashari, A.A. Exploring the World of Artificial Intelligence: The Perception of the University Students about ChatGPT for Academic Purpose. Glob. Soc. Sci. Rev. 2023, 8, 375–384.

- Sánchez, O.V.G. Uso y Percepción de ChatGPT En La Educación Superior. Rev. Investig. Tecnol. Inf. 2023, 11, 98–107.

- Bonsu, E.M.; Baffour-Koduah, D. From the Consumers’ Side: Determining Students’ Perception and Intention to Use ChatGPT in Ghanaian Higher Education. J. Educ. Soc. Multicult. 2023, 4, 1–29.

- Sevnarayan, K. Exploring the Dynamics of ChatGPT: Students and Lecturers’ Perspectives at an Open Distance e-Learning University. J. Pedagog. Res. 2024, 8, 212–226.

- Elkhodr, M.; Gide, E.; Wu, R.; Darwish, O. ICT Students’ Perceptions towards ChatGPT: An Experimental Reflective Lab Analysis. STEM Educ. 2023, 3, 70–88.

- Urban, M.; Děchtěrenko, F.; Lukavský, J.; Hrabalová, V.; Svacha, F.; Brom, C.; Urban, K. ChatGPT Improves Creative Problem-Solving Performance in University Students: An Experimental Study. Comput. Educ. 2024, 215, 105031.

- Lo, C.K.; Hew, K.F.; Jong, M.S.Y. The Influence of ChatGPT on Student Engagement: A Systematic Review and Future Research Agenda. Comput. Educ. 2024, 105100.

- Ali, J.K.M.; Shamsan, M.A.A.; Hezam, T.A.; Mohammed, A.A.Q. Impact of ChatGPT on Learning Motivation: Teachers and Students’ Voices. J. Engl. Stud. Arab. Felix 2023, 2, 41–49.

- George, A.S.; George, A.S.H. A Review of ChatGPT AI’s Impact on Several Business Sectors. Partn. Univers. Int. Innov. J. 2023, 1, 9–23.

- Saif, N.; Khan, S.U.; Shaheen, I.; ALotaibi, F.A.; Alnfiai, M.M.; Arif, M. Chat-GPT; Validating Technology Acceptance Model (TAM) in Education Sector via Ubiquitous Learning Mechanism. Comput. Hum. Behav. 2024, 154, 108097.

- Playfoot, D.; Quigley, M.; Thomas, A.G. Hey ChatGPT, Give Me a Title for a Paper about Degree Apathy and Student Use of AI for Assignment Writing. Internet High. Educ. 2024, 62, 100950.

- Rasul, T.; Nair, S.; Kalendra, D.; Robin, M.; de Oliveira Santini, F.; Ladeira, W.J.; Sun, M.; Day, I.; Rather, R.A.; Heathcote, L. The Role of ChatGPT in Higher Education: Benefits, Challenges, and Future Research Directions. J. Appl. Learn. Teach. 2023, 6, 41–56.

- Salloum, S.; Alfaisal, R.; Al-Maroof, R.; Shishakly, R.; Almaiah, D.; Al-Ali, R. A Comparative Analysis between ChatGPT & Google as Learning Platforms: The Role of Mediators in the Acceptance of Learning Platform. Int. J. Data Netw. Sci. 2024, 8, 2151–2162.

- Raman, R.; Mandal, S.; Das, P.; Kaur, T.; Sanjanasri, J.P.; Nedungadi, P. Exploring University Students’ Adoption of ChatGPT Using the Diffusion of Innovation Theory and Sentiment Analysis with Gender Dimension. Hum. Behav. Emerg. Technol. 2024, 2024, 3085910.

- Ibrahim, H.; Liu, F.; Asim, R.; Battu, B.; Benabderrahmane, S.; Alhafni, B.; Adnan, W.; Alhanai, T.; AlShebli, B.; Baghdadi, R. Perception, Performance, and Detectability of Conversational Artificial Intelligence across 32 University Courses. Sci. Rep. 2023, 13, 12187.

- Jiang, Y.; Li, X.; Luo, H.; Yin, S.; Kaynak, O. Quo Vadis Artificial Intelligence? Discov. Artif. Intell. 2022, 2, 4.

- Escobar, M.; Martinez-Uribe, L. Network Coincidence Analysis: The Netcoin R Package. J. Stat. Softw. 2020, 93, 1–32.

- Strzelecki, A. Students’ Acceptance of ChatGPT in Higher Education: An Extended Unified Theory of Acceptance and Use of Technology. Innov. High. Educ. 2024, 49, 223–245.

- Bulduk, A. But Why? A Study into Why Upper Secondary School Students Use ChatGPT: Understanding Students’ Reasoning through Jean Baudrillard’s Theory. 2023. Available online: https://www.diva-portal.org/smash/get/diva2:1773320/FULLTEXT01.pdf (accessed on 4 July 2024).

- Šedlbauer, J.; Činčera, J.; Slavík, M.; Hartlová, A. Students’ Reflections on Their Experience with ChatGPT. J. Comput. Assist. Learn. 2024.

- Granić, A.; Marangunić, N. Technology Acceptance Model in Educational Context: A Systematic Literature Review. Br. J. Educ. Technol. 2019, 50, 2572–2593.

- Teerawongsathorn, J. Understanding the Influence Factors on the Acceptance and Use of ChatGPT in Bangkok: A Study Based on the Technology Acceptance Model. Ph.D. Dissertation, Mahidol University, Nakorn Pathom, Thailand, 2023.

- Shaengchart, Y. A Conceptual Review of TAM and ChatGPT Usage Intentions among Higher Education Students. Adv. Knowl. Exec. 2023, 2, 1–7.

- Cai, Z.; Fan, X.; Du, J. Gender and Attitudes toward Technology Use: A Meta-Analysis. Comput. Educ. 2017, 105, 1–13.

- Bouzar, A.; El Idrissi, K.; Ghourdou, T. Gender Differences in Perceptions and Usage of Chatgpt. Int. J. Humanit. Educ. Res. 2024, 6, 571–582.

- Wong, J.; Kim, J. ChatGPT Is More Likely to Be Perceived as Male than Female. arXiv 2023, arXiv:2305.12564.

- Alkhaaldi, S.M.I.; Kassab, C.H.; Dimassi, Z.; Alsoud, L.O.; Al Fahim, M.; Al Hageh, C.; Ibrahim, H. Medical Student Experiences and Perceptions of ChatGPT and Artificial Intelligence: Cross-Sectional Study. JMIR Med. Educ. 2023, 9, e51302.

- Goswami, A.; Dutta, S. Gender Differences in Technology Usage—A Literature Review. Open J. Bus. Manag. 2015, 4, 51–59.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).